
Hello community,

here is the log from the commit of package python-requests for openSUSE:Factory

checked in at 2022-01-07 12:44:41

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Comparing /work/SRC/openSUSE:Factory/python-requests (Old)

and /work/SRC/openSUSE:Factory/.python-requests.new.1896 (New)

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Package is "python-requests"

Fri Jan 7 12:44:41 2022 rev:72 rq:944115 version:2.27.1

Changes:

--------

--- /work/SRC/openSUSE:Factory/python-requests/python-requests.changes 2021-11-21 23:51:41.346348295 +0100

+++ /work/SRC/openSUSE:Factory/.python-requests.new.1896/python-requests.changes 2022-01-07 12:45:10.923795495 +0100

@@ -1,0 +2,41 @@

+Wed Jan 5 17:09:11 UTC 2022 - Michael Ströder <mich...@stroeder.com>

+

+- update to 2.27.1

+ * Fixed parsing issue that resulted in the auth component being

+ dropped from proxy URLs. (#6028)

+

+-------------------------------------------------------------------

+Tue Jan 4 16:20:04 UTC 2022 - Dirk Müller <dmuel...@suse.com>

+

+- update to 2.27.0:

+* Officially added support for Python 3.10. (#5928)

+

+* Added a `requests.exceptions.JSONDecodeError` to unify JSON exceptions between

+ Python 2 and 3. This gets raised in the `response.json()` method, and is

+ backwards compatible as it inherits from previously thrown exceptions.

+ Can be caught from `requests.exceptions.RequestException` as well. (#5856)

+

+* Improved error text for misnamed `InvalidSchema` and `MissingSchema`

+ exceptions. This is a temporary fix until exceptions can be renamed

+ (Schema->Scheme). (#6017)

+* Improved proxy parsing for proxy URLs missing a scheme. This will address

+ recent changes to `urlparse` in Python 3.9+. (#5917)

+* Fixed defect in `extract_zipped_paths` which could result in an infinite loop

+ for some paths. (#5851)

+* Fixed handling for `AttributeError` when calculating length of files obtained

+ by `Tarfile.extractfile()`. (#5239)

+* Fixed urllib3 exception leak, wrapping `urllib3.exceptions.InvalidHeader` with

+ `requests.exceptions.InvalidHeader`. (#5914)

+* Fixed bug where two Host headers were sent for chunked requests. (#5391)

+* Fixed regression in Requests 2.26.0 where `Proxy-Authorization` was

+ incorrectly stripped from all requests sent with `Session.send`. (#5924)

+* Fixed performance regression in 2.26.0 for hosts with a large number of

+ proxies available in the environment. (#5924)

+* Fixed idna exception leak, wrapping `UnicodeError` with

+ `requests.exceptions.InvalidURL` for URLs with a leading dot (.) in the

+ domain. (#5414)

+* Requests support for Python 2.7 and 3.6 will be ending in 2022. While we

+ don't have exact dates, Requests 2.27.x is likely to be the last release

+ series providing support.

+

+-------------------------------------------------------------------

Old:

----

requests-2.26.0.tar.gz

New:

----

requests-2.27.1.tar.gz

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Other differences:

------------------

++++++ python-requests.spec ++++++

--- /var/tmp/diff_new_pack.2DdMB1/_old 2022-01-07 12:45:11.991796236 +0100

+++ /var/tmp/diff_new_pack.2DdMB1/_new 2022-01-07 12:45:11.999796242 +0100

@@ -1,7 +1,7 @@

#

# spec file

#

-# Copyright (c) 2021 SUSE LLC

+# Copyright (c) 2022 SUSE LLC

#

# All modifications and additions to the file contributed by third parties

# remain the property of their copyright owners, unless otherwise agreed

@@ -26,12 +26,12 @@

%endif

%{?!python_module:%define python_module() python-%{**} python3-%{**}}

Name: python-requests%{psuffix}

-Version: 2.26.0

+Version: 2.27.1

Release: 0

Summary: Python HTTP Library

License: Apache-2.0

Group: Development/Languages/Python

-URL: http://python-requests.org/

+URL: https://docs.python-requests.org/

Source: https://files.pythonhosted.org/packages/source/r/requests/requests-%{version}.tar.gz

# PATCH-FIX-SUSE: do not hardcode versions in setup.py/requirements

Patch0: requests-no-hardcoded-version.patch

++++++ requests-2.26.0.tar.gz -> requests-2.27.1.tar.gz ++++++

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/HISTORY.md

new/requests-2.27.1/HISTORY.md

--- old/requests-2.26.0/HISTORY.md 2021-07-13 16:52:57.000000000 +0200

+++ new/requests-2.27.1/HISTORY.md 2022-01-05 16:36:01.000000000 +0100

@@ -4,7 +4,63 @@

dev

---

-- \[Short description of non-trivial change.\]

+- \[Short description of non-trivial change.\]

+

+2.27.1 (2022-01-05)

+-------------------

+

+**Bugfixes**

+

+- Fixed parsing issue that resulted in the `auth` component being

+ dropped from proxy URLs. (#6028)

+

+2.27.0 (2022-01-03)

+-------------------

+

+**Improvements**

+

+- Officially added support for Python 3.10. (#5928)

+

+- Added a `requests.exceptions.JSONDecodeError` to unify JSON exceptions between

+ Python 2 and 3. This gets raised in the `response.json()` method, and is

+ backwards compatible as it inherits from previously thrown exceptions.

+ Can be caught from `requests.exceptions.RequestException` as well. (#5856)

+

+- Improved error text for misnamed `InvalidSchema` and `MissingSchema`

+ exceptions. This is a temporary fix until exceptions can be renamed

+ (Schema->Scheme). (#6017)

+

+- Improved proxy parsing for proxy URLs missing a scheme. This will address

+ recent changes to `urlparse` in Python 3.9+. (#5917)

+

+**Bugfixes**

+

+- Fixed defect in `extract_zipped_paths` which could result in an infinite loop

+ for some paths. (#5851)

+

+- Fixed handling for `AttributeError` when calculating length of files obtained

+ by `Tarfile.extractfile()`. (#5239)

+

+- Fixed urllib3 exception leak, wrapping `urllib3.exceptions.InvalidHeader` with

+ `requests.exceptions.InvalidHeader`. (#5914)

+

+- Fixed bug where two Host headers were sent for chunked requests. (#5391)

+

+- Fixed regression in Requests 2.26.0 where `Proxy-Authorization` was

+ incorrectly stripped from all requests sent with `Session.send`. (#5924)

+

+- Fixed performance regression in 2.26.0 for hosts with a large number of

+ proxies available in the environment. (#5924)

+

+- Fixed idna exception leak, wrapping `UnicodeError` with

+ `requests.exceptions.InvalidURL` for URLs with a leading dot (.) in the

+ domain. (#5414)

+

+**Deprecations**

+

+- Requests support for Python 2.7 and 3.6 will be ending in 2022. While we

+ don't have exact dates, Requests 2.27.x is likely to be the last release

+ series providing support.

2.26.0 (2021-07-13)

-------------------

@@ -61,7 +117,7 @@

- Requests now supports chardet v4.x.

2.25.0 (2020-11-11)

-------------------

+-------------------

**Improvements**

@@ -108,7 +164,7 @@

**Dependencies**

- Pinning for `chardet` and `idna` now uses major version instead of minor.

- This hopefully reduces the need for releases everytime a dependency is updated.

+ This hopefully reduces the need for releases every time a dependency is updated.

2.22.0 (2019-05-15)

-------------------

@@ -463,7 +519,7 @@

- Fixed regression from 2.12.2 where non-string types were rejected in

the basic auth parameters. While support for this behaviour has been

- readded, the behaviour is deprecated and will be removed in the

+ re-added, the behaviour is deprecated and will be removed in the

future.

2.12.3 (2016-12-01)

@@ -1697,12 +1753,12 @@

- Automatic Authentication API Change

- Smarter Query URL Parameterization

- Allow file uploads and POST data together

--

+-

New Authentication Manager System

: - Simpler Basic HTTP System

- - Supports all build-in urllib2 Auths

+ - Supports all built-in urllib2 Auths

- Allows for custom Auth Handlers

0.2.4 (2011-02-19)

@@ -1716,7 +1772,7 @@

0.2.3 (2011-02-15)

------------------

--

+-

New HTTPHandling Methods

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/PKG-INFO new/requests-2.27.1/PKG-INFO

--- old/requests-2.26.0/PKG-INFO 2021-07-13 16:54:32.000000000 +0200

+++ new/requests-2.27.1/PKG-INFO 2022-01-05 16:40:31.000000000 +0100

@@ -1,6 +1,6 @@

Metadata-Version: 2.1

Name: requests

-Version: 2.26.0

+Version: 2.27.1

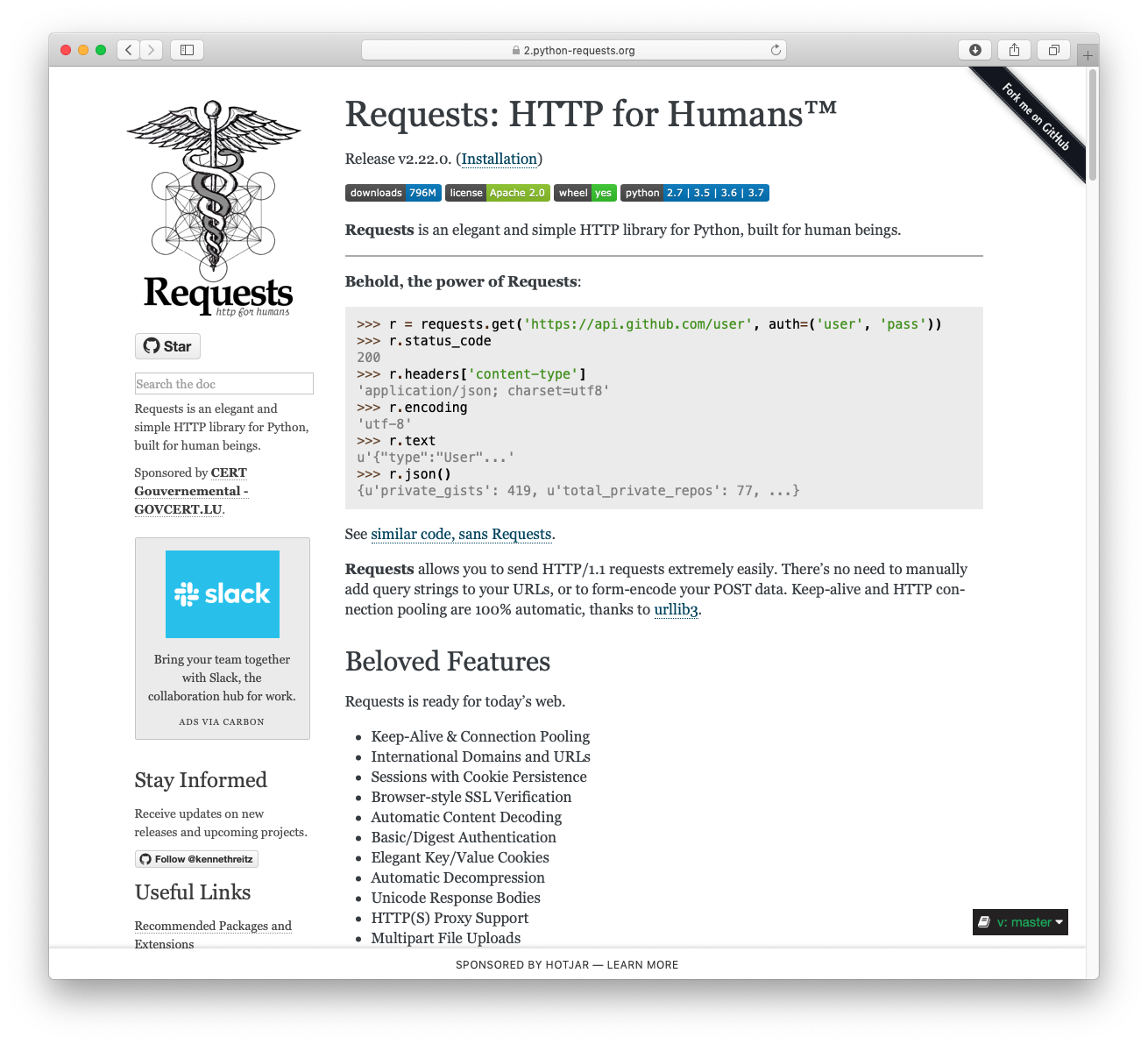

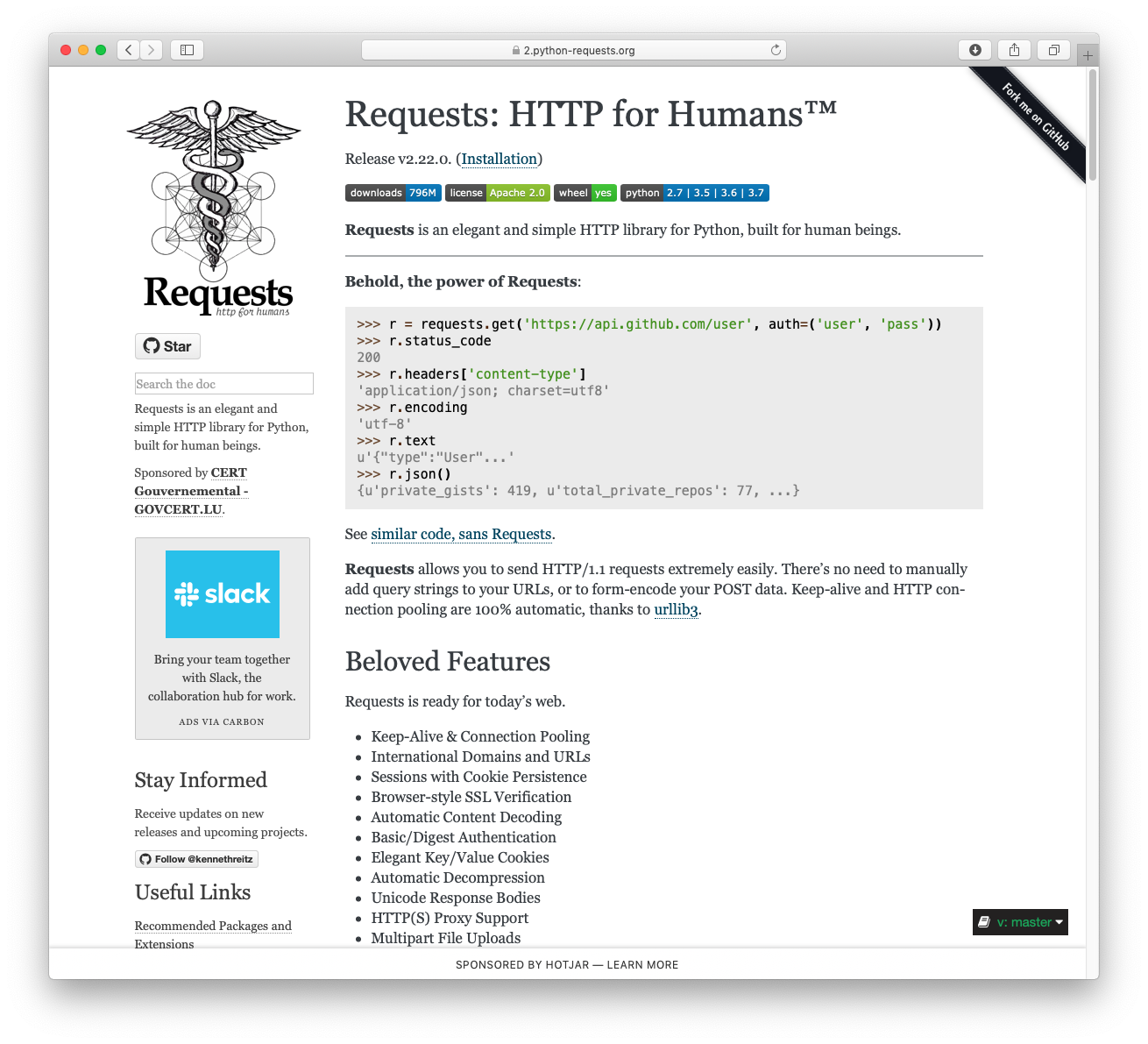

Summary: Python HTTP for Humans.

Home-page: https://requests.readthedocs.io

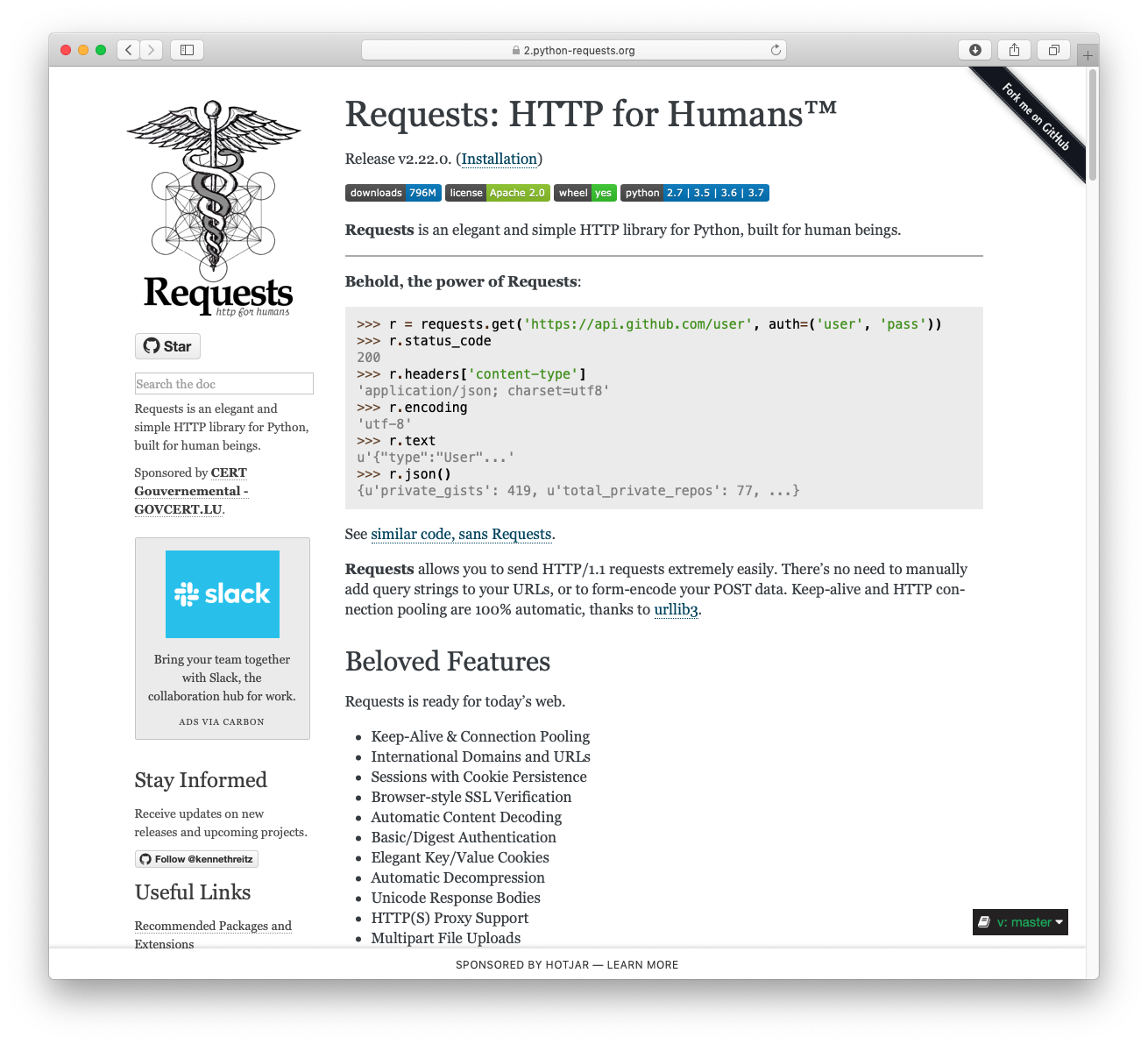

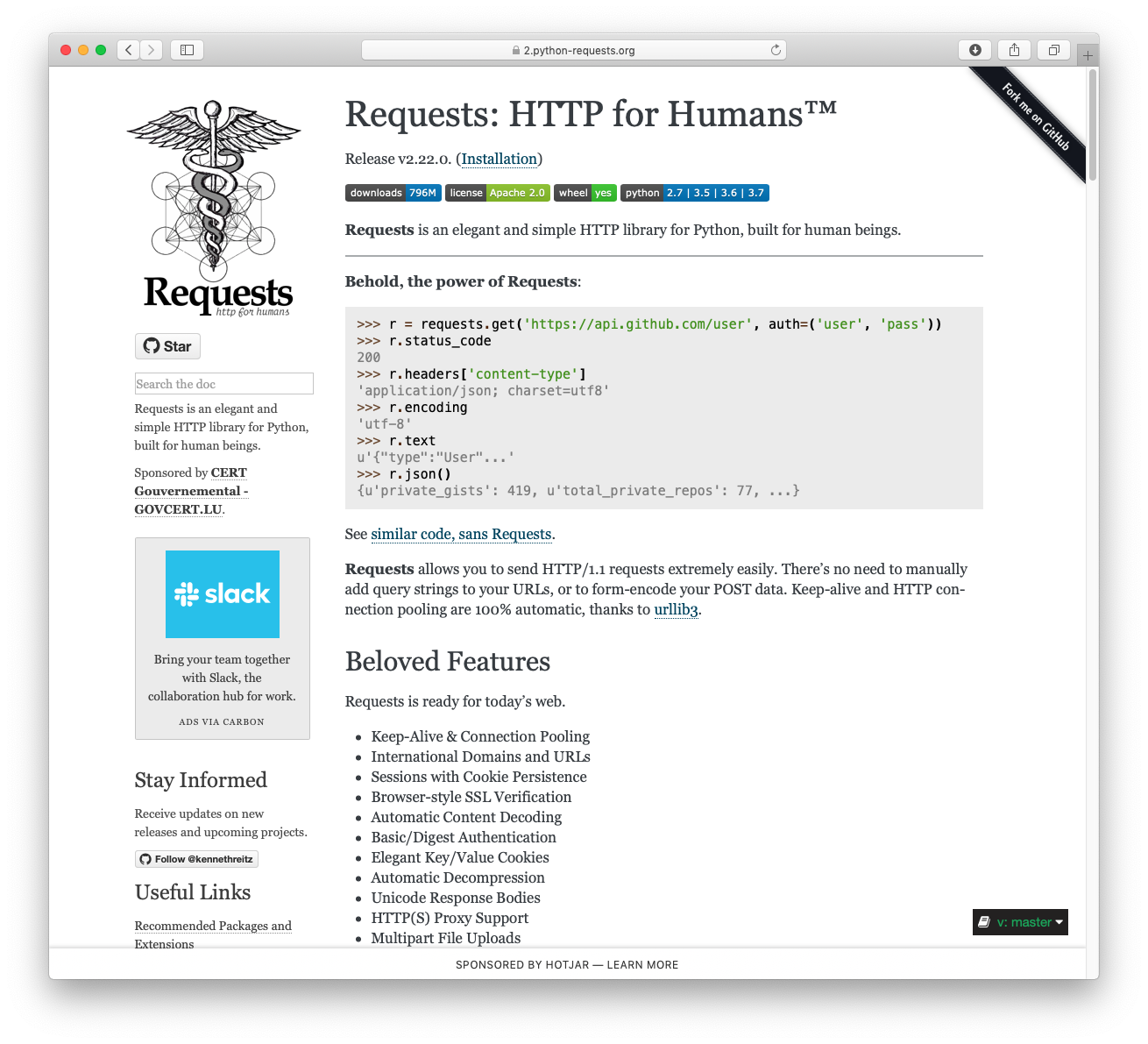

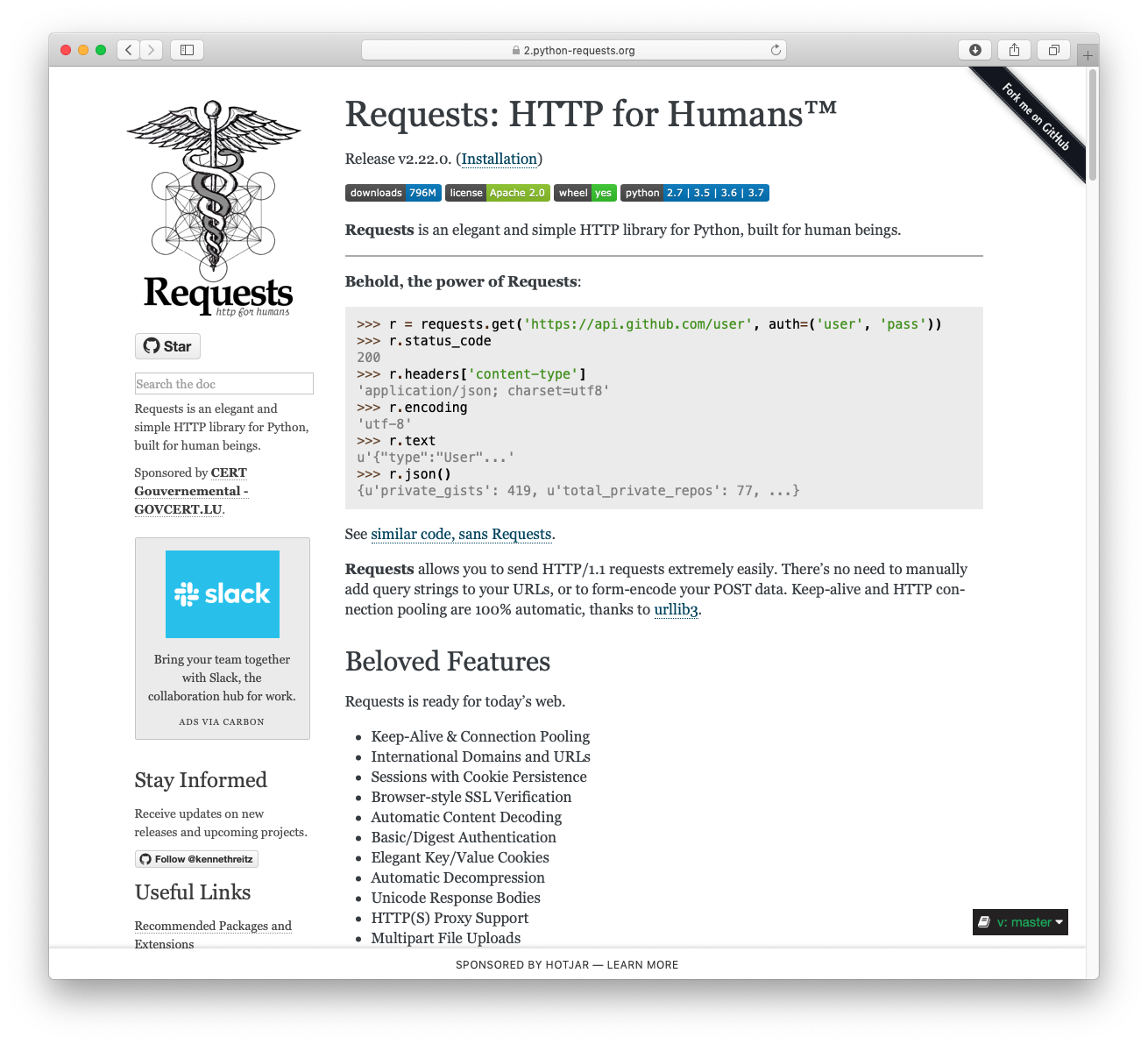

Author: Kenneth Reitz

@@ -14,7 +14,7 @@

```python

>>> import requests

- >>> r = requests.get('https://api.github.com/user', auth=('user',

'pass'))

+ >>> r = requests.get('https://httpbin.org/basic-auth/user/pass',

auth=('user', 'pass'))

>>> r.status_code

200

>>> r.headers['content-type']

@@ -22,16 +22,16 @@

>>> r.encoding

'utf-8'

>>> r.text

- '{"type":"User"...'

+ '{"authenticated": true, ...'

>>> r.json()

- {'disk_usage': 368627, 'private_gists': 484, ...}

+ {'authenticated': True, ...}

```

Requests allows you to send HTTP/1.1 requests extremely easily.

There's no need to manually add query strings to your URLs, or to form-encode

your `PUT` & `POST` data -- but nowadays, just use the `json` method!

- Requests is one of the most downloaded Python package today, pulling

in around `14M downloads / week` -- according to GitHub, Requests is currently

[depended

upon](https://github.com/psf/requests/network/dependents?package_id=UGFja2FnZS01NzA4OTExNg%3D%3D)

by `500,000+` repositories. You may certainly put your trust in this code.

+ Requests is one of the most downloaded Python packages today, pulling

in around `30M downloads / week` -- according to GitHub, Requests is currently

[depended

upon](https://github.com/psf/requests/network/dependents?package_id=UGFja2FnZS01NzA4OTExNg%3D%3D)

by `1,000,000+` repositories. You may certainly put your trust in this code.

-

[](https://pepy.tech/project/requests/month)

+

[](https://pepy.tech/project/requests)

[](https://pypi.org/project/requests)

[](https://github.com/psf/requests/graphs/contributors)

@@ -65,7 +65,7 @@

## API Reference and User Guide available on [Read the

Docs](https://requests.readthedocs.io)

- [](https://requests.readthedocs.io)

+ [](https://requests.readthedocs.io)

## Cloning the repository

@@ -85,13 +85,15 @@

---

- [](https://kennethreitz.org)

[](https://www.python.org/psf)

+ [](https://kennethreitz.org)

[](https://www.python.org/psf)

Platform: UNKNOWN

Classifier: Development Status :: 5 - Production/Stable

+Classifier: Environment :: Web Environment

Classifier: Intended Audience :: Developers

-Classifier: Natural Language :: English

Classifier: License :: OSI Approved :: Apache Software License

+Classifier: Natural Language :: English

+Classifier: Operating System :: OS Independent

Classifier: Programming Language :: Python

Classifier: Programming Language :: Python :: 2

Classifier: Programming Language :: Python :: 2.7

@@ -100,8 +102,11 @@

Classifier: Programming Language :: Python :: 3.7

Classifier: Programming Language :: Python :: 3.8

Classifier: Programming Language :: Python :: 3.9

+Classifier: Programming Language :: Python :: 3.10

Classifier: Programming Language :: Python :: Implementation :: CPython

Classifier: Programming Language :: Python :: Implementation :: PyPy

+Classifier: Topic :: Internet :: WWW/HTTP

+Classifier: Topic :: Software Development :: Libraries

Requires-Python: >=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*, !=3.5.*

Description-Content-Type: text/markdown

Provides-Extra: security

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/README.md

new/requests-2.27.1/README.md

--- old/requests-2.26.0/README.md 2021-07-13 16:52:57.000000000 +0200

+++ new/requests-2.27.1/README.md 2022-01-05 16:36:01.000000000 +0100

@@ -4,7 +4,7 @@

```python

>>> import requests

->>> r = requests.get('https://api.github.com/user', auth=('user', 'pass'))

+>>> r = requests.get('https://httpbin.org/basic-auth/user/pass', auth=('user',

'pass'))

>>> r.status_code

200

>>> r.headers['content-type']

@@ -12,16 +12,16 @@

>>> r.encoding

'utf-8'

>>> r.text

-'{"type":"User"...'

+'{"authenticated": true, ...'

>>> r.json()

-{'disk_usage': 368627, 'private_gists': 484, ...}

+{'authenticated': True, ...}

```

Requests allows you to send HTTP/1.1 requests extremely easily. There's no

need to manually add query strings to your URLs, or to form-encode your `PUT` &

`POST` data -- but nowadays, just use the `json` method!

-Requests is one of the most downloaded Python package today, pulling in around

`14M downloads / week` -- according to GitHub, Requests is currently [depended

upon](https://github.com/psf/requests/network/dependents?package_id=UGFja2FnZS01NzA4OTExNg%3D%3D)

by `500,000+` repositories. You may certainly put your trust in this code.

+Requests is one of the most downloaded Python packages today, pulling in

around `30M downloads / week` -- according to GitHub, Requests is currently

[depended

upon](https://github.com/psf/requests/network/dependents?package_id=UGFja2FnZS01NzA4OTExNg%3D%3D)

by `1,000,000+` repositories. You may certainly put your trust in this code.

-[](https://pepy.tech/project/requests/month)

+[](https://pepy.tech/project/requests)

[](https://pypi.org/project/requests)

[](https://github.com/psf/requests/graphs/contributors)

@@ -55,7 +55,7 @@

## API Reference and User Guide available on [Read the

Docs](https://requests.readthedocs.io)

-[](https://requests.readthedocs.io)

+[](https://requests.readthedocs.io)

## Cloning the repository

@@ -75,4 +75,4 @@

---

-[](https://kennethreitz.org)

[](https://www.python.org/psf)

+[](https://kennethreitz.org)

[](https://www.python.org/psf)

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/__init__.py

new/requests-2.27.1/requests/__init__.py

--- old/requests-2.26.0/requests/__init__.py 2021-07-13 00:29:50.000000000

+0200

+++ new/requests-2.27.1/requests/__init__.py 2022-01-05 16:36:01.000000000

+0100

@@ -139,7 +139,7 @@

from .exceptions import (

RequestException, Timeout, URLRequired,

TooManyRedirects, HTTPError, ConnectionError,

- FileModeWarning, ConnectTimeout, ReadTimeout

+ FileModeWarning, ConnectTimeout, ReadTimeout, JSONDecodeError

)

# Set default logging handler to avoid "No handler found" warnings.

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/__version__.py

new/requests-2.27.1/requests/__version__.py

--- old/requests-2.26.0/requests/__version__.py 2021-07-13 16:52:57.000000000

+0200

+++ new/requests-2.27.1/requests/__version__.py 2022-01-05 16:36:01.000000000

+0100

@@ -5,10 +5,10 @@

__title__ = 'requests'

__description__ = 'Python HTTP for Humans.'

__url__ = 'https://requests.readthedocs.io'

-__version__ = '2.26.0'

-__build__ = 0x022600

+__version__ = '2.27.1'

+__build__ = 0x022701

__author__ = 'Kenneth Reitz'

__author_email__ = 'm...@kennethreitz.org'

__license__ = 'Apache 2.0'

-__copyright__ = 'Copyright 2020 Kenneth Reitz'

+__copyright__ = 'Copyright 2022 Kenneth Reitz'

__cake__ = u'\u2728 \U0001f370 \u2728'

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/adapters.py

new/requests-2.27.1/requests/adapters.py

--- old/requests-2.26.0/requests/adapters.py 2021-07-09 19:35:09.000000000

+0200

+++ new/requests-2.27.1/requests/adapters.py 2022-01-05 16:36:01.000000000

+0100

@@ -19,6 +19,7 @@

from urllib3.exceptions import ClosedPoolError

from urllib3.exceptions import ConnectTimeoutError

from urllib3.exceptions import HTTPError as _HTTPError

+from urllib3.exceptions import InvalidHeader as _InvalidHeader

from urllib3.exceptions import MaxRetryError

from urllib3.exceptions import NewConnectionError

from urllib3.exceptions import ProxyError as _ProxyError

@@ -37,7 +38,7 @@

from .cookies import extract_cookies_to_jar

from .exceptions import (ConnectionError, ConnectTimeout, ReadTimeout,

SSLError,

ProxyError, RetryError, InvalidSchema,

InvalidProxyURL,

- InvalidURL)

+ InvalidURL, InvalidHeader)

from .auth import _basic_auth_str

try:

@@ -457,9 +458,11 @@

low_conn = conn._get_conn(timeout=DEFAULT_POOL_TIMEOUT)

try:

+ skip_host = 'Host' in request.headers

low_conn.putrequest(request.method,

url,

- skip_accept_encoding=True)

+ skip_accept_encoding=True,

+ skip_host=skip_host)

for header, value in request.headers.items():

low_conn.putheader(header, value)

@@ -527,6 +530,8 @@

raise SSLError(e, request=request)

elif isinstance(e, ReadTimeoutError):

raise ReadTimeout(e, request=request)

+ elif isinstance(e, _InvalidHeader):

+ raise InvalidHeader(e, request=request)

else:

raise

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/compat.py

new/requests-2.27.1/requests/compat.py

--- old/requests-2.26.0/requests/compat.py 2021-07-13 00:29:50.000000000

+0200

+++ new/requests-2.27.1/requests/compat.py 2022-01-05 16:36:01.000000000

+0100

@@ -28,8 +28,10 @@

#: Python 3.x?

is_py3 = (_ver[0] == 3)

+has_simplejson = False

try:

import simplejson as json

+ has_simplejson = True

except ImportError:

import json

@@ -49,13 +51,13 @@

# Keep OrderedDict for backwards compatibility.

from collections import Callable, Mapping, MutableMapping, OrderedDict

-

builtin_str = str

bytes = str

str = unicode

basestring = basestring

numeric_types = (int, long, float)

integer_types = (int, long)

+ JSONDecodeError = ValueError

elif is_py3:

from urllib.parse import urlparse, urlunparse, urljoin, urlsplit,

urlencode, quote, unquote, quote_plus, unquote_plus, urldefrag

@@ -66,6 +68,10 @@

# Keep OrderedDict for backwards compatibility.

from collections import OrderedDict

from collections.abc import Callable, Mapping, MutableMapping

+ if has_simplejson:

+ from simplejson import JSONDecodeError

+ else:

+ from json import JSONDecodeError

builtin_str = str

str = str

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/exceptions.py

new/requests-2.27.1/requests/exceptions.py

--- old/requests-2.26.0/requests/exceptions.py 2021-07-13 00:29:50.000000000

+0200

+++ new/requests-2.27.1/requests/exceptions.py 2022-01-05 16:36:01.000000000

+0100

@@ -8,6 +8,8 @@

"""

from urllib3.exceptions import HTTPError as BaseHTTPError

+from .compat import JSONDecodeError as CompatJSONDecodeError

+

class RequestException(IOError):

"""There was an ambiguous exception that occurred while handling your

@@ -29,6 +31,10 @@

"""A JSON error occurred."""

+class JSONDecodeError(InvalidJSONError, CompatJSONDecodeError):

+ """Couldn't decode the text into json"""

+

+

class HTTPError(RequestException):

"""An HTTP error occurred."""

@@ -74,11 +80,11 @@

class MissingSchema(RequestException, ValueError):

- """The URL schema (e.g. http or https) is missing."""

+ """The URL scheme (e.g. http or https) is missing."""

class InvalidSchema(RequestException, ValueError):

- """See defaults.py for valid schemas."""

+ """The URL scheme provided is either invalid or unsupported."""

class InvalidURL(RequestException, ValueError):

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/models.py

new/requests-2.27.1/requests/models.py

--- old/requests-2.26.0/requests/models.py 2021-07-13 00:29:50.000000000

+0200

+++ new/requests-2.27.1/requests/models.py 2022-01-05 16:36:01.000000000

+0100

@@ -29,7 +29,9 @@

from .cookies import cookiejar_from_dict, get_cookie_header, _copy_cookie_jar

from .exceptions import (

HTTPError, MissingSchema, InvalidURL, ChunkedEncodingError,

- ContentDecodingError, ConnectionError, StreamConsumedError,

InvalidJSONError)

+ ContentDecodingError, ConnectionError, StreamConsumedError,

+ InvalidJSONError)

+from .exceptions import JSONDecodeError as RequestsJSONDecodeError

from ._internal_utils import to_native_string, unicode_is_ascii

from .utils import (

guess_filename, get_auth_from_url, requote_uri,

@@ -38,7 +40,7 @@

from .compat import (

Callable, Mapping,

cookielib, urlunparse, urlsplit, urlencode, str, bytes,

- is_py2, chardet, builtin_str, basestring)

+ is_py2, chardet, builtin_str, basestring, JSONDecodeError)

from .compat import json as complexjson

from .status_codes import codes

@@ -384,7 +386,7 @@

raise InvalidURL(*e.args)

if not scheme:

- error = ("Invalid URL {0!r}: No schema supplied. Perhaps you meant http://{0}?")

+ error = ("Invalid URL {0!r}: No scheme supplied. Perhaps you meant http://{0}?")

error = error.format(to_native_string(url, 'utf8'))

raise MissingSchema(error)

@@ -401,7 +403,7 @@

host = self._get_idna_encoded_host(host)

except UnicodeError:

raise InvalidURL('URL has an invalid label.')

- elif host.startswith(u'*'):

+ elif host.startswith((u'*', u'.')):

raise InvalidURL('URL has an invalid label.')

# Carefully reconstruct the network location

@@ -468,9 +470,9 @@

content_type = 'application/json'

try:

- body = complexjson.dumps(json, allow_nan=False)

+ body = complexjson.dumps(json, allow_nan=False)

except ValueError as ve:

- raise InvalidJSONError(ve, request=self)

+ raise InvalidJSONError(ve, request=self)

if not isinstance(body, bytes):

body = body.encode('utf-8')

@@ -882,12 +884,8 @@

r"""Returns the json-encoded content of a response, if any.

:param \*\*kwargs: Optional arguments that ``json.loads`` takes.

- :raises simplejson.JSONDecodeError: If the response body does not

- contain valid json and simplejson is installed.

- :raises json.JSONDecodeError: If the response body does not contain

- valid json and simplejson is not installed on Python 3.

- :raises ValueError: If the response body does not contain valid

- json and simplejson is not installed on Python 2.

+ :raises requests.exceptions.JSONDecodeError: If the response body does not

+ contain valid json.

"""

if not self.encoding and self.content and len(self.content) > 3:

@@ -907,7 +905,16 @@

# and the server didn't bother to tell us what codec *was*

# used.

pass

- return complexjson.loads(self.text, **kwargs)

+

+ try:

+ return complexjson.loads(self.text, **kwargs)

+ except JSONDecodeError as e:

+ # Catch JSON-related errors and raise as requests.JSONDecodeError

+ # This aliases json.JSONDecodeError and simplejson.JSONDecodeError

+ if is_py2: # e is a ValueError

+ raise RequestsJSONDecodeError(e.message)

+ else:

+ raise RequestsJSONDecodeError(e.msg, e.doc, e.pos)

@property

def links(self):
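
A rough, standalone illustration of the exception-unification idea in the exceptions.py and models.py hunks above: the new error class inherits both from the library's own hierarchy and from the decoder error that callers were already catching, so existing `except ValueError` handlers keep working. This is only a sketch using the standard library. `LibraryError`, `LibraryJSONDecodeError` and `parse_body` are hypothetical names, and the base class is simplified (the real `RequestException` derives from `IOError`).

```python
import json


class LibraryError(Exception):
    """Hypothetical stand-in for a library-wide base exception."""


class LibraryJSONDecodeError(LibraryError, json.JSONDecodeError):
    """Hypothetical unified error: catchable as LibraryError,
    json.JSONDecodeError, or plain ValueError."""


def parse_body(text):
    """Decode JSON text, re-raising decoder errors as the unified type."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as e:
        # Carry over message, document and position so callers that
        # inspect these attributes see the same values as before.
        raise LibraryJSONDecodeError(e.msg, e.doc, e.pos)


if __name__ == "__main__":
    try:
        parse_body("not json")
    except ValueError as err:  # an older-style handler still matches
        print(type(err).__name__, isinstance(err, LibraryError))
```

Old handlers written against `ValueError` and new handlers written against the library base class both see the same exception object.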

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/sessions.py

new/requests-2.27.1/requests/sessions.py

--- old/requests-2.26.0/requests/sessions.py 2021-07-09 19:35:18.000000000

+0200

+++ new/requests-2.27.1/requests/sessions.py 2022-01-05 16:36:01.000000000

+0100

@@ -29,7 +29,7 @@

from .utils import (

requote_uri, get_environ_proxies, get_netrc_auth, should_bypass_proxies,

- get_auth_from_url, rewind_body

+ get_auth_from_url, rewind_body, resolve_proxies

)

from .status_codes import codes

@@ -269,7 +269,6 @@

if new_auth is not None:

prepared_request.prepare_auth(new_auth)

-

def rebuild_proxies(self, prepared_request, proxies):

"""This method re-evaluates the proxy configuration by considering the

environment variables. If we are redirected to a URL covered by

@@ -282,21 +281,9 @@

:rtype: dict

"""

- proxies = proxies if proxies is not None else {}

headers = prepared_request.headers

- url = prepared_request.url

- scheme = urlparse(url).scheme

- new_proxies = proxies.copy()

- no_proxy = proxies.get('no_proxy')

-

- bypass_proxy = should_bypass_proxies(url, no_proxy=no_proxy)

- if self.trust_env and not bypass_proxy:

- environ_proxies = get_environ_proxies(url, no_proxy=no_proxy)

-

- proxy = environ_proxies.get(scheme, environ_proxies.get('all'))

-

- if proxy:

- new_proxies.setdefault(scheme, proxy)

+ scheme = urlparse(prepared_request.url).scheme

+ new_proxies = resolve_proxies(prepared_request, proxies,

self.trust_env)

if 'Proxy-Authorization' in headers:

del headers['Proxy-Authorization']

@@ -633,7 +620,10 @@

kwargs.setdefault('stream', self.stream)

kwargs.setdefault('verify', self.verify)

kwargs.setdefault('cert', self.cert)

- kwargs.setdefault('proxies', self.rebuild_proxies(request,

self.proxies))

+ if 'proxies' not in kwargs:

+ kwargs['proxies'] = resolve_proxies(

+ request, self.proxies, self.trust_env

+ )

# It's possible that users might accidentally send a Request object.

# Guard against that specific failure case.
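
The sessions.py hunk above moves proxy selection into a shared helper, so that `Session.send` no longer goes through `rebuild_proxies` (which also strips `Proxy-Authorization`). A minimal sketch of the merging rule, assuming the environment proxies are passed in as a plain dict: explicitly configured proxies win, and an environment proxy only fills a missing scheme. `merge_proxies` is a hypothetical name, and NO_PROXY handling is deliberately left out.

```python
from urllib.parse import urlparse


def merge_proxies(url, proxies, environ_proxies, trust_env=True):
    """Return a new proxy mapping for `url` (simplified illustration)."""
    proxies = proxies if proxies is not None else {}
    scheme = urlparse(url).scheme
    new_proxies = proxies.copy()  # never mutate the caller's dict

    if trust_env:
        # Prefer a scheme-specific entry, fall back to the 'all' entry.
        proxy = environ_proxies.get(scheme, environ_proxies.get("all"))
        if proxy:
            # setdefault keeps an explicitly configured proxy untouched.
            new_proxies.setdefault(scheme, proxy)
    return new_proxies


if __name__ == "__main__":
    env = {"all": "http://env-proxy.example:8080"}
    print(merge_proxies("http://example.org/", None, env))
```

Because the helper only reads its inputs and returns a fresh dict, it can be called on every send without touching request headers.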

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests/utils.py

new/requests-2.27.1/requests/utils.py

--- old/requests-2.26.0/requests/utils.py 2021-07-09 19:35:18.000000000

+0200

+++ new/requests-2.27.1/requests/utils.py 2022-01-05 16:36:01.000000000

+0100

@@ -21,6 +21,7 @@

import zipfile

from collections import OrderedDict

from urllib3.util import make_headers

+from urllib3.util import parse_url

from .__version__ import __version__

from . import certs

@@ -124,7 +125,10 @@

elif hasattr(o, 'fileno'):

try:

fileno = o.fileno()

- except io.UnsupportedOperation:

+ except (io.UnsupportedOperation, AttributeError):

+ # AttributeError is a surprising exception, seeing as how we've just checked

+ # that `hasattr(o, 'fileno')`. It happens for objects obtained via

+ # `Tarfile.extractfile()`, per issue 5229.

pass

else:

total_length = os.fstat(fileno).st_size

@@ -154,7 +158,7 @@

current_position = total_length

else:

if hasattr(o, 'seek') and total_length is None:

- # StringIO and BytesIO have seek but no useable fileno

+ # StringIO and BytesIO have seek but no usable fileno

try:

# seek to end of file

o.seek(0, 2)

@@ -251,6 +255,10 @@

archive, member = os.path.split(path)

while archive and not os.path.exists(archive):

archive, prefix = os.path.split(archive)

+ if not prefix:

+ # If we don't check for an empty prefix after the split (in other words, archive remains unchanged after the split),

+ # we _can_ end up in an infinite loop on a rare corner case affecting a small number of users

+ break

member = '/'.join([prefix, member])

if not zipfile.is_zipfile(archive):

@@ -826,6 +834,33 @@

return proxy

+def resolve_proxies(request, proxies, trust_env=True):

+ """This method takes proxy information from a request and configuration

+ input to resolve a mapping of target proxies. This will consider settings

+ such a NO_PROXY to strip proxy configurations.

+

+ :param request: Request or PreparedRequest

+ :param proxies: A dictionary of schemes or schemes and hosts to proxy URLs

+ :param trust_env: Boolean declaring whether to trust environment configs

+

+ :rtype: dict

+ """

+ proxies = proxies if proxies is not None else {}

+ url = request.url

+ scheme = urlparse(url).scheme

+ no_proxy = proxies.get('no_proxy')

+ new_proxies = proxies.copy()

+

+ if trust_env and not should_bypass_proxies(url, no_proxy=no_proxy):

+ environ_proxies = get_environ_proxies(url, no_proxy=no_proxy)

+

+ proxy = environ_proxies.get(scheme, environ_proxies.get('all'))

+

+ if proxy:

+ new_proxies.setdefault(scheme, proxy)

+ return new_proxies

+

+

def default_user_agent(name="python-requests"):

"""

Return a string representing the default user agent.

@@ -928,15 +963,27 @@

:rtype: str

"""

- scheme, netloc, path, params, query, fragment = urlparse(url, new_scheme)

+ parsed = parse_url(url)

+ scheme, auth, host, port, path, query, fragment = parsed

- # urlparse is a finicky beast, and sometimes decides that there isn't a

- # netloc present. Assume that it's being over-cautious, and switch netloc

- # and path if urlparse decided there was no netloc.

+ # A defect in urlparse determines that there isn't a netloc present in some

+ # urls. We previously assumed parsing was overly cautious, and swapped the

+ # netloc and path. Due to a lack of tests on the original defect, this is

+ # maintained with parse_url for backwards compatibility.

+ netloc = parsed.netloc

if not netloc:

netloc, path = path, netloc

- return urlunparse((scheme, netloc, path, params, query, fragment))

+ if auth:

+ # parse_url doesn't provide the netloc with auth

+ # so we'll add it ourselves.

+ netloc = '@'.join([auth, netloc])

+ if scheme is None:

+ scheme = new_scheme

+ if path is None:

+ path = ''

+

+ return urlunparse((scheme, netloc, path, '', query, fragment))

def get_auth_from_url(url):
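
To make the `extract_zipped_paths` fix in the utils.py hunk above more concrete: a path-splitting loop can stall when `split()` returns its input unchanged with an empty tail, which happens at a drive root. The sketch below reproduces just that loop, assuming Windows-style paths via `ntpath` and an injected `exists` predicate so it behaves the same on any platform. `split_archive_member` is a hypothetical name, not part of Requests.

```python
import ntpath


def split_archive_member(path, exists, split=ntpath.split):
    """Walk up `path` until an existing ancestor is found (illustration)."""
    archive, member = split(path)
    while archive and not exists(archive):
        archive, prefix = split(archive)
        if not prefix:
            # split() made no progress (for example at a drive root such
            # as 'Z:\\'); without this guard the loop would never end.
            break
        member = "/".join([prefix, member])
    return archive, member


if __name__ == "__main__":
    # Pretend nothing exists, as for a path on a missing drive.
    print(split_archive_member("Z:\\missing\\file.txt", exists=lambda p: False))
```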

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn'

'--exclude=.svnignore' old/requests-2.26.0/requests.egg-info/PKG-INFO

new/requests-2.27.1/requests.egg-info/PKG-INFO

--- old/requests-2.26.0/requests.egg-info/PKG-INFO 2021-07-13

16:54:31.000000000 +0200

+++ new/requests-2.27.1/requests.egg-info/PKG-INFO 2022-01-05

16:40:31.000000000 +0100

@@ -1,6 +1,6 @@

Metadata-Version: 2.1

Name: requests

-Version: 2.26.0

+Version: 2.27.1

Summary: Python HTTP for Humans.

Home-page: https://requests.readthedocs.io

Author: Kenneth Reitz

@@ -14,7 +14,7 @@

```python

>>> import requests

- >>> r = requests.get('https://api.github.com/user', auth=('user',

'pass'))

+ >>> r = requests.get('https://httpbin.org/basic-auth/user/pass',

auth=('user', 'pass'))

>>> r.status_code

200

>>> r.headers['content-type']

@@ -22,16 +22,16 @@

>>> r.encoding

'utf-8'

>>> r.text

- '{"type":"User"...'

+ '{"authenticated": true, ...'

>>> r.json()

- {'disk_usage': 368627, 'private_gists': 484, ...}

+ {'authenticated': True, ...}

```

Requests allows you to send HTTP/1.1 requests extremely easily.

There's no need to manually add query strings to your URLs, or to form-encode

your `PUT` & `POST` data -- but nowadays, just use the `json` method!

- Requests is one of the most downloaded Python package today, pulling

in around `14M downloads / week` -- according to GitHub, Requests is currently

[depended

upon](https://github.com/psf/requests/network/dependents?package_id=UGFja2FnZS01NzA4OTExNg%3D%3D)

by `500,000+` repositories. You may certainly put your trust in this code.

+ Requests is one of the most downloaded Python packages today, pulling

in around `30M downloads / week` -- according to GitHub, Requests is currently

[depended

upon](https://github.com/psf/requests/network/dependents?package_id=UGFja2FnZS01NzA4OTExNg%3D%3D)

by `1,000,000+` repositories. You may certainly put your trust in this code.

-

[](https://pepy.tech/project/requests/month)

+

[](https://pepy.tech/project/requests)

[](https://pypi.org/project/requests)

[](https://github.com/psf/requests/graphs/contributors)

@@ -65,7 +65,7 @@

## API Reference and User Guide available on [Read the

Docs](https://requests.readthedocs.io)

- [](https://requests.readthedocs.io)

+ [](https://requests.readthedocs.io)

## Cloning the repository

@@ -85,13 +85,15 @@

---

- [](https://kennethreitz.org)

[](https://www.python.org/psf)

+ [](https://kennethreitz.org)

[](https://www.python.org/psf)

Platform: UNKNOWN

Classifier: Development Status :: 5 - Production/Stable

+Classifier: Environment :: Web Environment

Classifier: Intended Audience :: Developers

-Classifier: Natural Language :: English

Classifier: License :: OSI Approved :: Apache Software License

+Classifier: Natural Language :: English

+Classifier: Operating System :: OS Independent

Classifier: Programming Language :: Python

Classifier: Programming Language :: Python :: 2

Classifier: Programming Language :: Python :: 2.7

@@ -100,8 +102,11 @@

Classifier: Programming Language :: Python :: 3.7

Classifier: Programming Language :: Python :: 3.8

Classifier: Programming Language :: Python :: 3.9

+Classifier: Programming Language :: Python :: 3.10

Classifier: Programming Language :: Python :: Implementation :: CPython

Classifier: Programming Language :: Python :: Implementation :: PyPy

+Classifier: Topic :: Internet :: WWW/HTTP

+Classifier: Topic :: Software Development :: Libraries

Requires-Python: >=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*, !=3.5.*

Description-Content-Type: text/markdown

Provides-Extra: security

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/requirements-dev.txt new/requests-2.27.1/requirements-dev.txt

--- old/requests-2.26.0/requirements-dev.txt 2021-07-13 16:52:57.000000000 +0200

+++ new/requests-2.27.1/requirements-dev.txt 2022-01-05 16:36:01.000000000 +0100

@@ -1,4 +1,4 @@

-pytest>=2.8.0,<=3.10.1

+pytest>=2.8.0,<=6.2.5

pytest-cov

pytest-httpbin==1.0.0

pytest-mock==2.0.0

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/setup.py new/requests-2.27.1/setup.py

--- old/requests-2.26.0/setup.py 2021-07-13 16:52:57.000000000 +0200

+++ new/requests-2.27.1/setup.py 2022-01-05 16:36:01.000000000 +0100

@@ -84,9 +84,11 @@

zip_safe=False,

classifiers=[

'Development Status :: 5 - Production/Stable',

+ 'Environment :: Web Environment',

'Intended Audience :: Developers',

- 'Natural Language :: English',

'License :: OSI Approved :: Apache Software License',

+ 'Natural Language :: English',

+ 'Operating System :: OS Independent',

'Programming Language :: Python',

'Programming Language :: Python :: 2',

'Programming Language :: Python :: 2.7',

@@ -95,8 +97,11 @@

'Programming Language :: Python :: 3.7',

'Programming Language :: Python :: 3.8',

'Programming Language :: Python :: 3.9',

+ 'Programming Language :: Python :: 3.10',

'Programming Language :: Python :: Implementation :: CPython',

- 'Programming Language :: Python :: Implementation :: PyPy'

+ 'Programming Language :: Python :: Implementation :: PyPy',

+ 'Topic :: Internet :: WWW/HTTP',

+ 'Topic :: Software Development :: Libraries',

],

cmdclass={'test': PyTest},

tests_require=test_requirements,

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/tests/conftest.py new/requests-2.27.1/tests/conftest.py

--- old/requests-2.26.0/tests/conftest.py 2021-07-13 16:52:57.000000000 +0200

+++ new/requests-2.27.1/tests/conftest.py 2022-01-05 16:36:01.000000000 +0100

@@ -13,7 +13,6 @@

import pytest

from requests.compat import urljoin

-import trustme

def prepare_url(value):

@@ -38,6 +37,10 @@

@pytest.fixture

def nosan_server(tmp_path_factory):

+ # delay importing until the fixture in order to make it possible

+ # to deselect the test via command-line when trustme is not available

+ import trustme

+

tmpdir = tmp_path_factory.mktemp("certs")

ca = trustme.CA()

# only commonName, no subjectAltName

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/tests/test_lowlevel.py new/requests-2.27.1/tests/test_lowlevel.py

--- old/requests-2.26.0/tests/test_lowlevel.py 2021-07-09 19:35:14.000000000 +0200

+++ new/requests-2.27.1/tests/test_lowlevel.py 2022-01-05 16:36:01.000000000 +0100

@@ -9,6 +9,18 @@

from .utils import override_environ

+def echo_response_handler(sock):

+ """Simple handler that will take request and echo it back to requester."""

+ request_content = consume_socket_content(sock, timeout=0.5)

+

+ text_200 = (

+ b'HTTP/1.1 200 OK\r\n'

+ b'Content-Length: %d\r\n\r\n'

+ b'%s'

+ ) % (len(request_content), request_content)

+ sock.send(text_200)

+

+

def test_chunked_upload():

"""can safely send generators"""

close_server = threading.Event()

@@ -24,6 +36,90 @@

assert r.request.headers['Transfer-Encoding'] == 'chunked'

+def test_chunked_encoding_error():

+ """get a ChunkedEncodingError if the server returns a bad response"""

+

+ def incomplete_chunked_response_handler(sock):

+ request_content = consume_socket_content(sock, timeout=0.5)

+

+ # The server never ends the request and doesn't provide any valid chunks

+ sock.send(b"HTTP/1.1 200 OK\r\n" +

+ b"Transfer-Encoding: chunked\r\n")

+

+ return request_content

+

+ close_server = threading.Event()

+ server = Server(incomplete_chunked_response_handler)

+

+ with server as (host, port):

+ url = 'http://{}:{}/'.format(host, port)

+ with pytest.raises(requests.exceptions.ChunkedEncodingError):

+ r = requests.get(url)

+ close_server.set() # release server block

+

+

+def test_chunked_upload_uses_only_specified_host_header():

+ """Ensure we use only the specified Host header for chunked requests."""

+ close_server = threading.Event()

+ server = Server(echo_response_handler, wait_to_close_event=close_server)

+

+ data = iter([b'a', b'b', b'c'])

+ custom_host = 'sample-host'

+

+ with server as (host, port):

+ url = 'http://{}:{}/'.format(host, port)

+ r = requests.post(url, data=data, headers={'Host': custom_host}, stream=True)

+ close_server.set() # release server block

+

+ expected_header = b'Host: %s\r\n' % custom_host.encode('utf-8')

+ assert expected_header in r.content

+ assert r.content.count(b'Host: ') == 1

+

+

+def test_chunked_upload_doesnt_skip_host_header():

+ """Ensure we don't omit all Host headers with chunked requests."""

+ close_server = threading.Event()

+ server = Server(echo_response_handler, wait_to_close_event=close_server)

+

+ data = iter([b'a', b'b', b'c'])

+

+ with server as (host, port):

+ expected_host = '{}:{}'.format(host, port)

+ url = 'http://{}:{}/'.format(host, port)

+ r = requests.post(url, data=data, stream=True)

+ close_server.set() # release server block

+

+ expected_header = b'Host: %s\r\n' % expected_host.encode('utf-8')

+ assert expected_header in r.content

+ assert r.content.count(b'Host: ') == 1

+

+

+def test_conflicting_content_lengths():

+ """Ensure we correctly throw an InvalidHeader error if multiple

+ conflicting Content-Length headers are returned.

+ """

+

+ def multiple_content_length_response_handler(sock):

+ request_content = consume_socket_content(sock, timeout=0.5)

+

+ sock.send(b"HTTP/1.1 200 OK\r\n" +

+ b"Content-Type: text/plain\r\n" +

+ b"Content-Length: 16\r\n" +

+ b"Content-Length: 32\r\n\r\n" +

+ b"-- Bad Actor -- Original Content\r\n")

+

+ return request_content

+

+ close_server = threading.Event()

+ server = Server(multiple_content_length_response_handler)

+

+ with server as (host, port):

+ url = 'http://{}:{}/'.format(host, port)

+ with pytest.raises(requests.exceptions.InvalidHeader):

+ r = requests.get(url)

+ close_server.set()

+

+

def test_digestauth_401_count_reset_on_redirect():

"""Ensure we correctly reset num_401_calls after a successful digest auth,

followed by a 302 redirect to another digest auth prompt.

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/tests/test_requests.py new/requests-2.27.1/tests/test_requests.py

--- old/requests-2.26.0/tests/test_requests.py 2021-07-13 16:52:57.000000000 +0200

+++ new/requests-2.27.1/tests/test_requests.py 2022-01-05 16:36:01.000000000 +0100

@@ -81,6 +81,8 @@

(InvalidSchema, 'localhost.localdomain:3128/'),

(InvalidSchema, '10.122.1.1:3128/'),

(InvalidURL, 'http://'),

+ (InvalidURL, 'http://*example.com'),

+ (InvalidURL, 'http://.example.com'),

))

def test_invalid_url(self, exception, url):

with pytest.raises(exception):

@@ -590,6 +592,15 @@

session = requests.Session()

session.request(method='GET', url=httpbin())

+ def test_proxy_authorization_preserved_on_request(self, httpbin):

+ proxy_auth_value = "Bearer XXX"

+ session = requests.Session()

+ session.headers.update({"Proxy-Authorization": proxy_auth_value})

+ resp = session.request(method='GET', url=httpbin('get'))

+ sent_headers = resp.json().get('headers', {})

+

+ assert sent_headers.get("Proxy-Authorization") == proxy_auth_value

+

def test_basicauth_with_netrc(self, httpbin):

auth = ('user', 'pass')

wrong_auth = ('wronguser', 'wrongpass')

@@ -2570,4 +2581,9 @@

def test_post_json_nan(self, httpbin):

data = {"foo": float("nan")}

with pytest.raises(requests.exceptions.InvalidJSONError):

- r = requests.post(httpbin('post'), json=data)

\ No newline at end of file

+ r = requests.post(httpbin('post'), json=data)

+

+ def test_json_decode_compatibility(self, httpbin):

+ r = requests.get(httpbin('bytes/20'))

+ with pytest.raises(requests.exceptions.JSONDecodeError):

+ r.json()

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/tests/test_utils.py new/requests-2.27.1/tests/test_utils.py

--- old/requests-2.26.0/tests/test_utils.py 2020-12-11 05:36:00.000000000 +0100

+++ new/requests-2.27.1/tests/test_utils.py 2022-01-05 16:36:01.000000000 +0100

@@ -4,6 +4,7 @@

import copy

import filecmp

from io import BytesIO

+import tarfile

import zipfile

from collections import deque

@@ -86,6 +87,18 @@

assert super_len(fd) == 4

assert len(recwarn) == warnings_num

+ def test_tarfile_member(self, tmpdir):

+ file_obj = tmpdir.join('test.txt')

+ file_obj.write('Test')

+

+ tar_obj = str(tmpdir.join('test.tar'))

+ with tarfile.open(tar_obj, 'w') as tar:

+ tar.add(str(file_obj), arcname='test.txt')

+

+ with tarfile.open(tar_obj) as tar:

+ member = tar.extractfile('test.txt')

+ assert super_len(member) == 4

+

def test_super_len_with__len__(self):

foo = [1,2,3,4]

len_foo = super_len(foo)

@@ -285,6 +298,10 @@

assert os.path.exists(extracted_path)

assert filecmp.cmp(extracted_path, __file__)

+ def test_invalid_unc_path(self):

+ path = r"\\localhost\invalid\location"

+ assert extract_zipped_paths(path) == path

+

class TestContentEncodingDetection:

@@ -584,6 +601,15 @@

'value, expected', (

('example.com/path', 'http://example.com/path'),

('//example.com/path', 'http://example.com/path'),

+ ('example.com:80', 'http://example.com:80'),

+ (

+ 'http://user:p...@example.com/path?query',

+ 'http://user:p...@example.com/path?query'

+ ),

+ (

+ 'http://u...@example.com/path?query',

+ 'http://u...@example.com/path?query'

+ )

))

def test_prepend_scheme_if_needed(value, expected):

assert prepend_scheme_if_needed(value, 'http') == expected

diff -urN '--exclude=CVS' '--exclude=.cvsignore' '--exclude=.svn' '--exclude=.svnignore' old/requests-2.26.0/tests/testserver/server.py new/requests-2.27.1/tests/testserver/server.py

--- old/requests-2.26.0/tests/testserver/server.py 2020-12-11 05:36:00.000000000 +0100

+++ new/requests-2.27.1/tests/testserver/server.py 2022-01-05 16:36:01.000000000 +0100

@@ -78,7 +78,9 @@

def _create_socket_and_bind(self):

sock = socket.socket()

sock.bind((self.host, self.port))

- sock.listen(0)

+ # NB: when Python 2.7 is no longer supported, the argument

+ # can be removed to use a default backlog size

+ sock.listen(5)

return sock

def _close_server_sock_ignore_errors(self):