abhilash1in opened a new issue #11003:

URL: https://github.com/apache/airflow/issues/11003


**Apache Airflow version**: 1.10.12

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`): N/A

**Environment**:

- **Cloud provider or hardware configuration**: Local machine

- **OS** (e.g. from /etc/os-release): macOS Catalina

- **Kernel** (e.g. `uname -a`): `Darwin Abhilashs-MBP 19.6.0 Darwin Kernel Version 19.6.0: Thu Jun 18 20:49:00 PDT 2020; root:xnu-6153.141.1~1/RELEASE_X86_64 x86_6`

- **Install tools**:

- **Others**:

**What happened**:

Created 2 zipped DAGs as follows:

1. **sample-dag-test.zip**

Contents:

**sample-dag-test.zip/sample_dag/configs/config.py:**

```python
env = 'test'
servers = [
    f'server1-{env}',
    f'server2-{env}'
]
```

**sample-dag-test.zip/dag.py:**

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.dummy_operator import DummyOperator

from sample_dag.configs import config

with DAG('sample-dag-test',
         schedule_interval='0 12 * * *',
         start_date=datetime(2020, 9, 17), catchup=False) as dag:
    for server in config.servers:
        t_1 = DummyOperator(task_id=f'task1_{server}')
        t_2 = DummyOperator(task_id=f'task2_{server}')
        t_1 >> t_2
```

2. **sample-dag-prod.zip**

Contents:

**sample-dag-prod.zip/sample_dag/configs/config.py:**

```python
env = 'prod'
servers = [
    f'server1-{env}',
    f'server2-{env}'
]
```

**sample-dag-prod.zip/dag.py:**

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.dummy_operator import DummyOperator

from sample_dag.configs import config

with DAG('sample-dag-prod',
         schedule_interval='0 12 * * *',
         start_date=datetime(2020, 9, 17), catchup=False) as dag:
    for server in config.servers:
        t_1 = DummyOperator(task_id=f'task1_{server}')
        t_2 = DummyOperator(task_id=f'task2_{server}')
        t_1 >> t_2
```

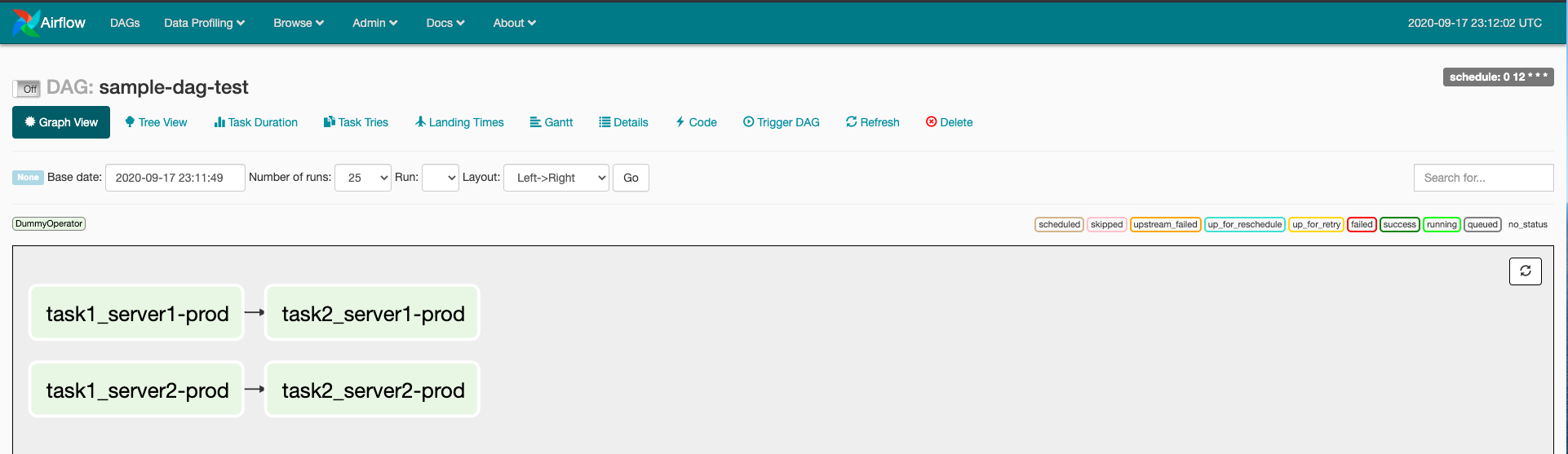

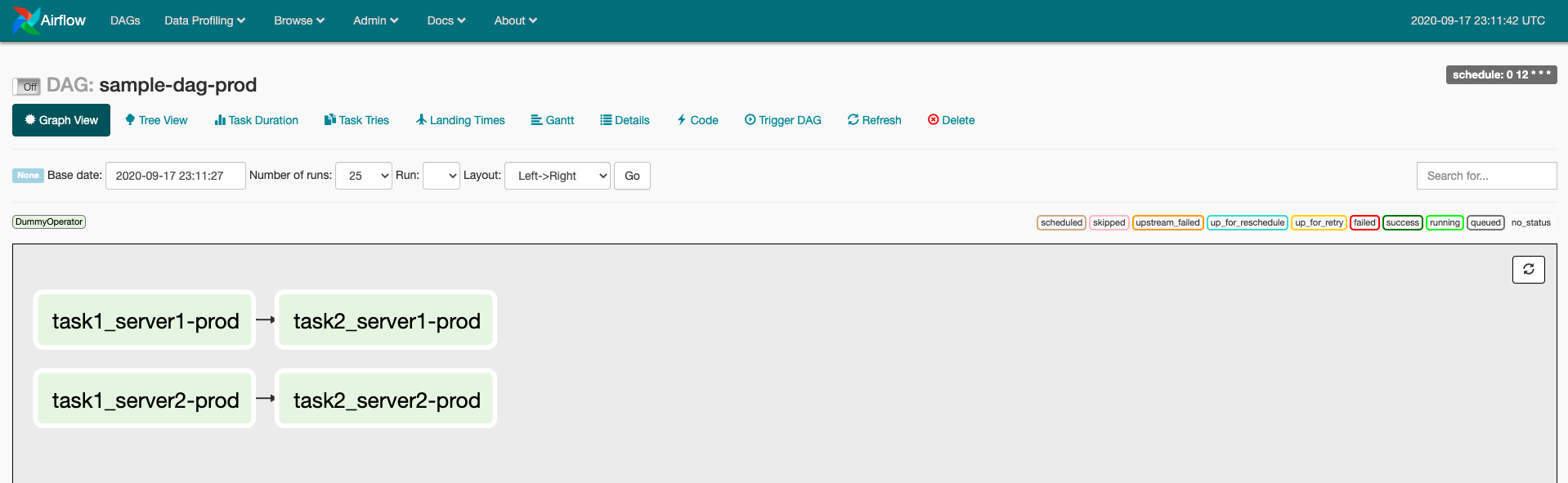

Airflow created 2 DAGs as follows:

`sample-dag-test` has `-prod` tasks, which is wrong! (`sample-dag-prod` has `-prod` tasks, which is correct.)

In a different installation of Airflow, `sample-dag-prod` had `-test` tasks, and `sample-dag-test` also had `-test` tasks.

**What you expected to happen**:

Airflow should create the `sample-dag-test` DAG with only `-test` tasks and the `sample-dag-prod` DAG with only `-prod` tasks.

**How to reproduce it**:


As described above.
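A hypothetical, Airflow-free sketch of one plausible mechanism, assuming both archives are imported in the same Python process: each zip ships a top-level package named `sample_dag`, and once `sample_dag.configs.config` has been imported from one archive, Python's module cache (`sys.modules`) hands back that same module for every later import of the name, no matter which archive is first on `sys.path`. The `build_zip` and `load_servers` helpers and the empty `__init__.py` files are assumptions for illustration; this is not Airflow's DAG-loading code.

```python
import importlib
import os
import sys
import tempfile
import zipfile

# Mirrors sample_dag/configs/config.py from the issue; {env} is filled in per archive.
CONFIG_TEMPLATE = """env = '{env}'
servers = [
    f'server1-{{env}}',
    f'server2-{{env}}'
]
"""


def build_zip(directory, env):
    """Write a hypothetical sample-dag-<env>.zip holding only the config package."""
    path = os.path.join(directory, f'sample-dag-{env}.zip')
    with zipfile.ZipFile(path, 'w') as zf:
        # Empty __init__.py files are an assumption; the issue does not list them.
        zf.writestr('sample_dag/__init__.py', '')
        zf.writestr('sample_dag/configs/__init__.py', '')
        zf.writestr('sample_dag/configs/config.py', CONFIG_TEMPLATE.format(env=env))
    return path


def load_servers(zip_path):
    """Import sample_dag.configs.config with zip_path first on sys.path."""
    sys.path.insert(0, zip_path)
    try:
        # If the module is already in sys.modules, import_module returns the
        # cached copy without looking inside zip_path at all.
        config = importlib.import_module('sample_dag.configs.config')
        return list(config.servers)
    finally:
        sys.path.remove(zip_path)


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as tmp:
        test_servers = load_servers(build_zip(tmp, 'test'))
        prod_servers = load_servers(build_zip(tmp, 'prod'))
    print('from sample-dag-test.zip:', test_servers)
    print('from sample-dag-prod.zip:', prod_servers)
```

Run as a script, both lines should print `['server1-test', 'server2-test']`: the second call never reads `sample-dag-prod.zip` because the module name is already cached. Swapping the two `load_servers` calls should give the `-prod` list for both instead, which would be consistent with the results differing between installations.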

**Anything else we need to know**:


----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: [email protected]