tanelk opened a new issue #12102: URL: https://github.com/apache/airflow/issues/12102

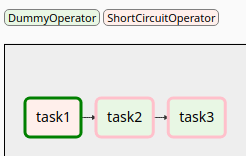

**Apache Airflow version**: 1.10.9

**Environment**:

- **OS** (e.g. from /etc/os-release): Debian GNU/Linux 10 (buster)
- **Kernel** (e.g. `uname -a`): 5.9.1-arch1-1
- **Install tools**: pip

**What happened**:

A task with `TriggerRule.ALL_DONE` gets skipped if any of its upstream tasks is skipped.

**What you expected to happen**:

The [docs](https://airflow.apache.org/docs/stable/concepts.html#trigger-rules) state that

> Skipped tasks will cascade through trigger rules all_success and all_failed but not all_done, one_failed, one_success, none_failed, none_failed_or_skipped, none_skipped and dummy.

So I would assume that a task with `TriggerRule.ALL_DONE` will never get skipped.
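To make the expectation concrete, here is a minimal sketch in plain Python of how I read the quoted sentence. It does not use or reproduce Airflow's scheduler code; `skip_cascades` and `SKIP_CASCADING_RULES` are hypothetical names introduced only for illustration, and the rule names are taken from the documentation quote.

```python
from typing import Iterable

# Rules through which, per the quoted docs, a skipped upstream cascades.
# Illustrative model only, not Airflow's implementation.
SKIP_CASCADING_RULES = frozenset({"all_success", "all_failed"})


def skip_cascades(trigger_rule: str, upstream_states: Iterable[str]) -> bool:
    """Return True if the quoted docs imply the task is skipped as well."""
    has_skipped_upstream = "skipped" in set(upstream_states)
    return trigger_rule in SKIP_CASCADING_RULES and has_skipped_upstream


# A task whose only upstream task was skipped:
default_rule_skipped = skip_cascades("all_success", ["skipped"])
all_done_skipped = skip_cascades("all_done", ["skipped"])

print(default_rule_skipped)  # skip cascades through all_success
print(all_done_skipped)      # docs imply all_done is not skipped
```

Under this reading, a task with `all_done` whose upstream was skipped should still run, which is not what I observe.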
**How to reproduce it**:

A minimal example:

```
from airflow import DAG
from airflow.operators.dummy_operator import DummyOperator
from airflow.operators.python_operator import ShortCircuitOperator
from airflow.utils.trigger_rule import TriggerRule
from datetime import datetime

dag_args = {
    'dag_id': 'test',
    'start_date': datetime(2020, 1, 1, 0, 0),
}

with DAG(**dag_args) as dag:
    # The callable returns False, so task1 short-circuits the DAG.
    task1 = ShortCircuitOperator(
        task_id='task1',
        python_callable=lambda: False
    )
    task2 = DummyOperator(task_id='task2')
    # Expected to run once its upstream is done, but it ends up skipped.
    task3 = DummyOperator(
        task_id='task3',
        trigger_rule=TriggerRule.ALL_DONE
    )

    task1 >> task2 >> task3
```

And the resulting DAG (see the image attached above).

I would assume that task3 still gets executed. Otherwise, the documentation should be updated and a trigger rule 'always' added, as was asked in #10275.

Thank you.

----------------------------------------------------------------
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
[email protected]