sha12br opened a new issue #13785: URL: https://github.com/apache/airflow/issues/13785

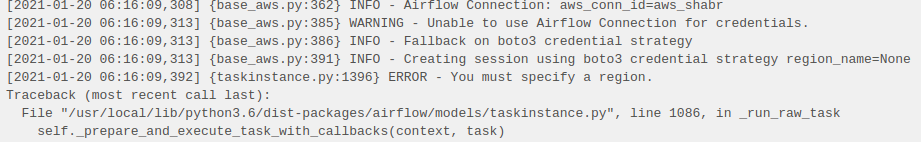

Hi Team,

I am trying to create a data pipeline in which an EMR cluster is provisioned, runs a Spark job, and terminates upon completion. The flow would look like this:

EmrCreateJobFlowOperator --> EmrAddStepsOperator --> EmrTerminateJobFlowOperator

The class "airflow.providers.amazon.aws.operators.emr_create_job_flow.EmrCreateJobFlowOperator" takes "region_name" as an argument, so it is easy to define the region in the DAG script. This step works well and a cluster is provisioned.

The next step, "EmrAddStepsOperator" (underlying class [airflow.providers.amazon.aws.operators.emr_add_steps.EmrAddStepsOperator]), does not have a region_name argument. At first it throws the error "ERROR - You must specify a region". Since there is no way to pass a region name to the class, I decided to create a new connection with conn_id aws_shabr and the connection URI aws://?region=eu-west-1 (so that it uses the default login and password, with the region specified). But on running it, the same error is still thrown: "ERROR - You must specify a region".

Link to the docs --> http://apache-airflow-docs.s3-website.eu-central-1.amazonaws.com/docs/apache-airflow-providers-amazon/latest/_api/airflow/providers/amazon/aws/operators/emr_add_steps/index.html

Is there any other way to pass region_name to the "EmrAddStepsOperator" step? If so, please help me out here.

Thanks in advance,
Shabr

----------------------------------------------------------------
This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: [email protected]
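
One thing that may be worth checking is which key the connection URI above actually carries. The sketch below uses only the Python standard library to split the query string of aws://?region=eu-west-1 into key/value pairs. The helper name extras_from_uri is hypothetical, and the alternative URI using region_name is an assumption: the AWS hook appears to look for a region_name key in the connection extras, but this should be verified against the installed provider version. It is an illustration of how the URI decomposes, not a confirmed fix.

```python
from urllib.parse import parse_qsl, urlsplit


def extras_from_uri(uri: str) -> dict:
    """Return the query-string parameters of a connection URI as a dict.

    Hypothetical helper for illustration only; it approximates how query
    parameters in a connection URI end up as connection "extras".
    """
    return dict(parse_qsl(urlsplit(uri).query, keep_blank_values=True))


# URI exactly as given in the issue: the key is "region".
uri_from_issue = "aws://?region=eu-west-1"

# Assumed alternative: the same URI with the key spelled "region_name".
uri_with_region_name = "aws://?region_name=eu-west-1"

extras_issue = extras_from_uri(uri_from_issue)
extras_alt = extras_from_uri(uri_with_region_name)

print(extras_issue)  # contains the key "region", not "region_name"
print(extras_alt)    # contains the key "region_name"
```

If the hook does read region_name from the extras, the first URI would leave the region unset, which would be consistent with the error reported above. The new connection also only takes effect if the operator is told to use it, so it may be worth confirming that aws_conn_id="aws_shabr" is passed to EmrAddStepsOperator.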