quoc-t-le opened a new issue #15396: URL: https://github.com/apache/airflow/issues/15396

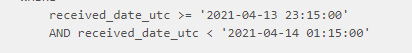

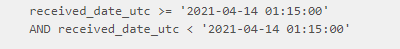

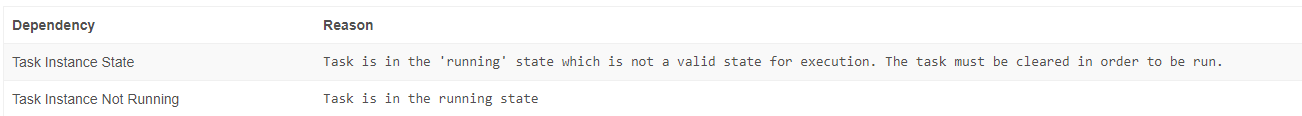

**Apache Airflow version**: 2.0.1

**Python**: 3.7

**Install tools**: docker

To check whether the SQLtoGCSOperator is bugged on Airflow 1.10.x when exporting columns that contain emoji in JSON format, I am trying to upgrade to Airflow 2. I installed Airflow 1.10.15, ran `airflow upgrade_check`, and made all the import updates. I have it running, but I am running into two issues:

1. I have a DAG that checks whether any events came in and short-circuits (ShortCircuit) if there are none; otherwise it runs the subdag. The subdag seems to be receiving an invalid `prev_execution_date` macro.

   Dag:

   SubDag:

   Both use the filter `received_date_utc >= prev_execution_date.to_datetime_string() and received_date_utc < next_execution_date.to_datetime_string()`, but you can see the difference there. It did not behave like that in the older version.

2. The SubDag step won't run unless I clear it from the DAG side, and I don't know why. Locally, I am running SequentialExecutor with sqlite, which ran fine with my previous version. After I cleared the subdag, it ran its first step, scheduled the next one, and then just stopped.

   Here are the subdag instance details:

**What you expected to happen**: The SubDag runs and has access to the correct `prev_execution_date`.

--
This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: [email protected]