SamWheating edited a comment on issue #15559: URL: https://github.com/apache/airflow/issues/15559#issuecomment-831450306

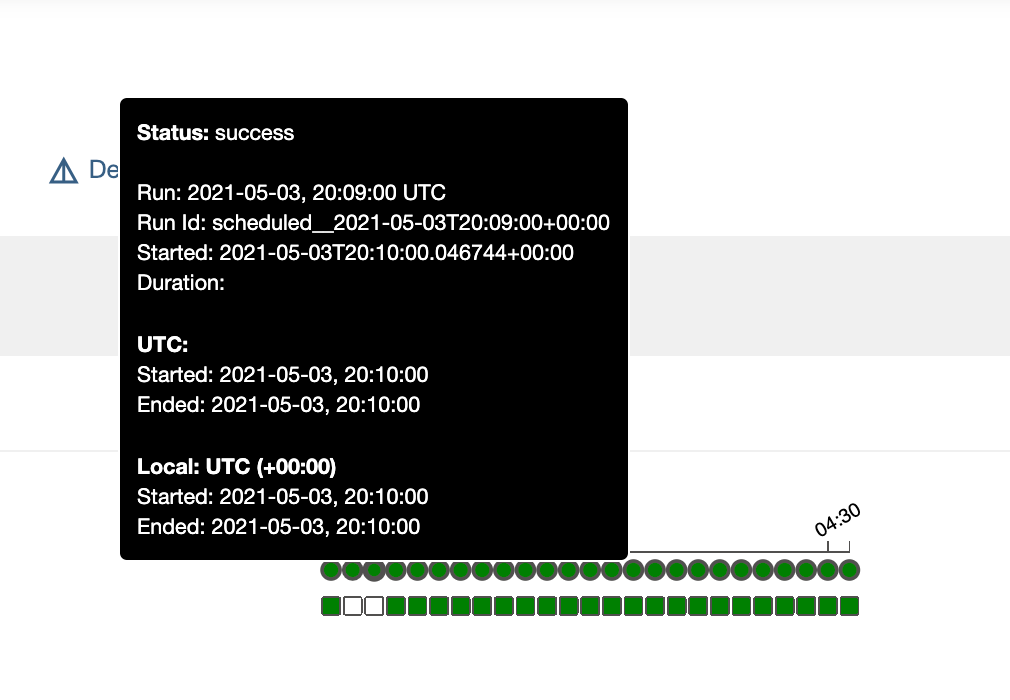

We've been experiencing the same issues (Airflow 2.0.2, 2x schedulers, MySQL 8). Furthermore, when a task has `depends_on_past=True` this will cause the DAG to completely lock as no future runs can be created. I'm currently trying to recreate this by running some high-frequency DAGs with and without multiple schedulers, I'll update here with my findings. ### Update: I was able to get this to happen by running 3 schedulers with a DAG running every 1 minute:  From the scheduler logs: `DEBUG - number of tis tasks for <DagRun airflow-utils.send-airflow-heartbeat_every_minute @ 2021-05-03 20:09:00+00:00: scheduled__2021-05-03T20:09:00+00:00, externally triggered: False>: 0 task(s)` So it looks like the scheduler was running [DagRun.update_state()](https://github.com/apache/airflow/blob/73187871703bce22783a42db3d3cec9045ee1de2/airflow/models/dagrun.py#L384) before any Task instances had been created, which would cause this DAG to be marked as successful. Do you think this could this be either: 1) A race condition in the scheduler code? 2) A result of improper scheduler locking? (We're using mySQL 8 with `use_row_level_locking=True`) ### Update 2: I did some more investigating today and found that while the DAGRun and TaskInstances are created by one scheduler, they are soon after marked as successful by a different scheduler. Here's some selected logs from one occurence of this issue to demonstrate this (I added debug log entries to the TaskInstance and DagRun constructors so that I could see when and where they were being created) **airflow-scheduler-1:** ``` 2021-05-04 20:12:00,123 dag.py: DEBUG - Created DagRun <DagRun airflow-utils.send-airflow-heartbeat_every_minute @ 2021-05-04 20:11:00+00:00: scheduled__2021-05-04T20:11:00+00:00, externally triggered: False> 2021-05-04 20:12:00,126 taskinstance.py: DEBUG - Created TaskInstance <TaskInstance: airflow-utils.send-airflow-heartbeat_every_minute.send_heartbeat 2021-05-04 20:11:00+00:00 [None]> 2021-05-04 20:12:00,134 scheduler_job.py: INFO - DAG airflow-utils.send-airflow-heartbeat_every_minute is at (or above) max_active_runs (1 of 1), not creating any more runs ``` **airflow-scheduler-2:** ``` 2021-05-04 20:12:00,156 dagrun.py: DEBUG - number of tis tasks for <DagRun airflow-utils.send-airflow-heartbeat_every_minute @ 2021-05-04 20:11:00+00:00: scheduled__2021-05-04T20:11:00+00:00, externally triggered: False>: 0 task(s) 2021-05-04 20:12:00,156 dagrun.py: INFO - Marking run <DagRun airflow-utils.send-airflow-heartbeat_every_minute @ 2021-05-04 20:11:00+00:00: scheduled__2021-05-04T20:11:00+00:00, externally triggered: False> successful ``` So within ~30ms of the DagRun being created, another scheduler instance marked it as complete based on having no tasks. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: [email protected]