c-thiel opened a new issue #15793: URL: https://github.com/apache/airflow/issues/15793

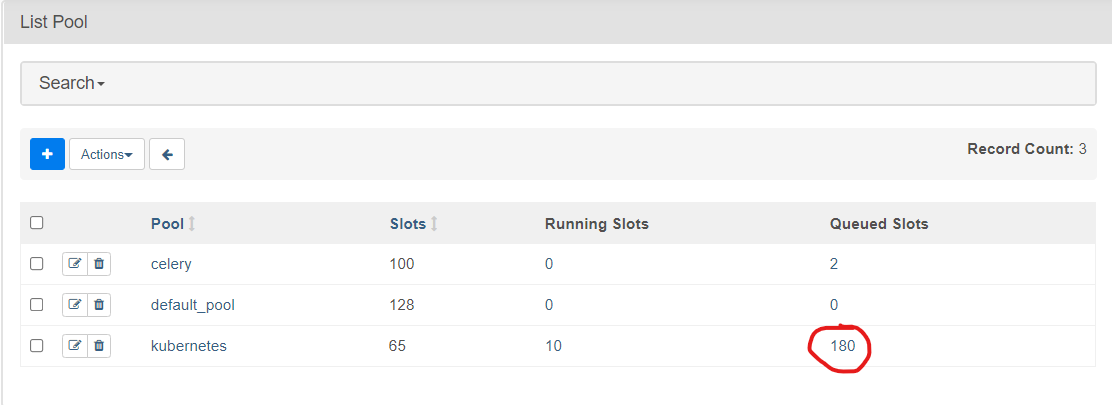

**Apache Airflow version**: 2.0.2

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`): 1.17.14

**Environment**:

- **Helm-Chart**: https://github.com/airflow-helm/charts/tree/main/charts/airflow
- **Executor**: CeleryExecutor on Kubernetes
- **Operator**: Mostly KubernetesPodOperator, most tasks take 5 or 10 pool slots
- **Parallelism**: Very high (1000)
- **AIRFLOW__SCHEDULER__PARSING_PROCESSES**: 1
- **AIRFLOW__CELERY__WORKER_PREFETCH_MULTIPLIER**: 1
- **scheduler pods**: 1

**What happened**:

The scheduler queues more slots than are available in the pool (see the image above).

As a result (I think), 80% of the tasks fail:

```
*** Reading remote log from s3://wyrm-logs/airflow/endurance-wyrm-dev/logs/DAG-ID/my_task/2021-05-11T07:12:49.813565+00:00/2.log.
[2021-05-12 07:31:29,247] {taskinstance.py:877} INFO - Dependencies all met for <TaskInstance: DAG-ID.my_task 2021-05-11T07:12:49.813565+00:00 [queued]>
[2021-05-12 07:31:29,261] {taskinstance.py:867} INFO - Dependencies not met for <TaskInstance: DAG-ID.my_task 2021-05-11T07:12:49.813565+00:00 [queued]>, dependency 'Pool Slots Available' FAILED: ('Not scheduling since there are %s open slots in pool %s and require %s pool slots', -70, 'kubernetes', 5)
[2021-05-12 07:31:29,261] {taskinstance.py:1053} WARNING - --------------------------------------------------------------------------------
[2021-05-12 07:31:29,261] {taskinstance.py:1054} WARNING - Rescheduling due to concurrency limits reached at task runtime. Attempt 2 of 3. State set to NONE.
[2021-05-12 07:31:29,261] {taskinstance.py:1061} WARNING - --------------------------------------------------------------------------------
[2021-05-12 07:31:29,287] {local_task_job.py:93} INFO - Task is not able to be run
[2021-05-12 07:31:34,003] {taskinstance.py:877} INFO - Dependencies all met for <TaskInstance: DAG-ID.my_task 2021-05-11T07:12:49.813565+00:00 [queued]>
[2021-05-12 07:31:34,017] {taskinstance.py:867} INFO - Dependencies not met for <TaskInstance: DAG-ID.my_task 2021-05-11T07:12:49.813565+00:00 [queued]>, dependency 'Pool Slots Available' FAILED: ('Not scheduling since there are %s open slots in pool %s and require %s pool slots', -115, 'kubernetes', 5)
[2021-05-12 07:31:34,017] {taskinstance.py:1053} WARNING - --------------------------------------------------------------------------------
[2021-05-12 07:31:34,017] {taskinstance.py:1054} WARNING - Rescheduling due to concurrency limits reached at task runtime. Attempt 2 of 3. State set to NONE.
[2021-05-12 07:31:34,017] {taskinstance.py:1061} WARNING - --------------------------------------------------------------------------------
[2021-05-12 07:31:34,027] {local_task_job.py:93} INFO - Task is not able to be run
```

The task is in the failed state afterwards. The number of `Rescheduling due to concurrency limits reached` messages varies from task to task.

**What you expected to happen**:

The task is rescheduled until pool slots are available, then it runs. It does not fail due to depleted pool slots.

**How to reproduce it**:

Increase parallelism to a very high number and create a pool with only a few slots.

--- I will try to add an example based on MiniKube later today ---

**What I think happened**

First, the scheduler is for some reason queuing more tasks than there are slots in the pool; I think it is simply ignoring the queued slots. The executors, being capable of depleting the pool instantly, are then just pulling too many tasks off the overlong queue.
The last tasks notice that the pool is depleted and mark themselves as failed. I am not too deep in the code, but I see two problems here: the scheduler is queuing more tasks than there are slots available, and the workers (probably) mark tasks as failed if the pool is depleted instead of rescheduling them.
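
To make the suspected accounting problem concrete, here is a toy model in plain Python. It is only a sketch of the hypothesis above and not Airflow's scheduler code: the `Pool` class, the `queue_tasks` function, and the `count_queued` flag are hypothetical names, and the pool size (10 slots) and task count (20 tasks of 5 slots each) are made-up numbers, not values from my cluster.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """Toy pool with a fixed number of slots (hypothetical, not Airflow's model)."""
    total_slots: int
    running_slots: int = 0
    queued_slots: int = 0

    def open_slots(self, count_queued: bool) -> int:
        # Slots still free; optionally ignore slots held by queued tasks.
        used = self.running_slots
        if count_queued:
            used += self.queued_slots
        return self.total_slots - used


def queue_tasks(pool: Pool, task_slots: list, count_queued: bool) -> int:
    """Queue each task the scheduler believes fits; return how many were queued."""
    queued = 0
    for slots in task_slots:
        if pool.open_slots(count_queued) >= slots:
            pool.queued_slots += slots
            queued += 1
    return queued


if __name__ == "__main__":
    tasks = [5] * 20  # 20 tasks, 5 pool slots each (assumed numbers)

    # Suspected behaviour: queued slots are ignored while queuing.
    ignoring = Pool(total_slots=10)
    print(queue_tasks(ignoring, tasks, count_queued=False))  # 20 tasks queued
    print(ignoring.open_slots(count_queued=True))            # -90 open slots

    # Expected behaviour: queued slots count against the pool.
    counting = Pool(total_slots=10)
    print(queue_tasks(counting, tasks, count_queued=True))   # 2 tasks queued
    print(counting.open_slots(count_queued=True))            # 0 open slots
```

If the scheduler ignores queued slots, every task looks like it fits and the real number of open slots goes negative, which would match the negative values in the `Pool Slots Available` messages. Whether this is what actually happens in Airflow 2.0.2 is still my assumption.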