wolfier opened a new issue #16737:

URL: https://github.com/apache/airflow/issues/16737

**Apache Airflow version**: 2.0.2

**Kubernetes version**:

```
Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3",
GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean",
BuildDate:"2020-10-14T12:50:19Z", GoVersion:"go1.15.2", Compiler:"gc",
Platform:"darwin/amd64"}
Server Version: version.Info{Major:"1", Minor:"18+",
GitVersion:"v1.18.17-gke.1901",
GitCommit:"b5bc948aea9982cd8b1e89df8d50e30ffabdd368", GitTreeState:"clean",
BuildDate:"2021-05-27T19:56:12Z", GoVersion:"go1.13.15b4", Compiler:"gc",
Platform:"linux/amd64"}
```

**Environment**: Kubernetes setup where the scheduler component runs in its own pod.

**What happened**:

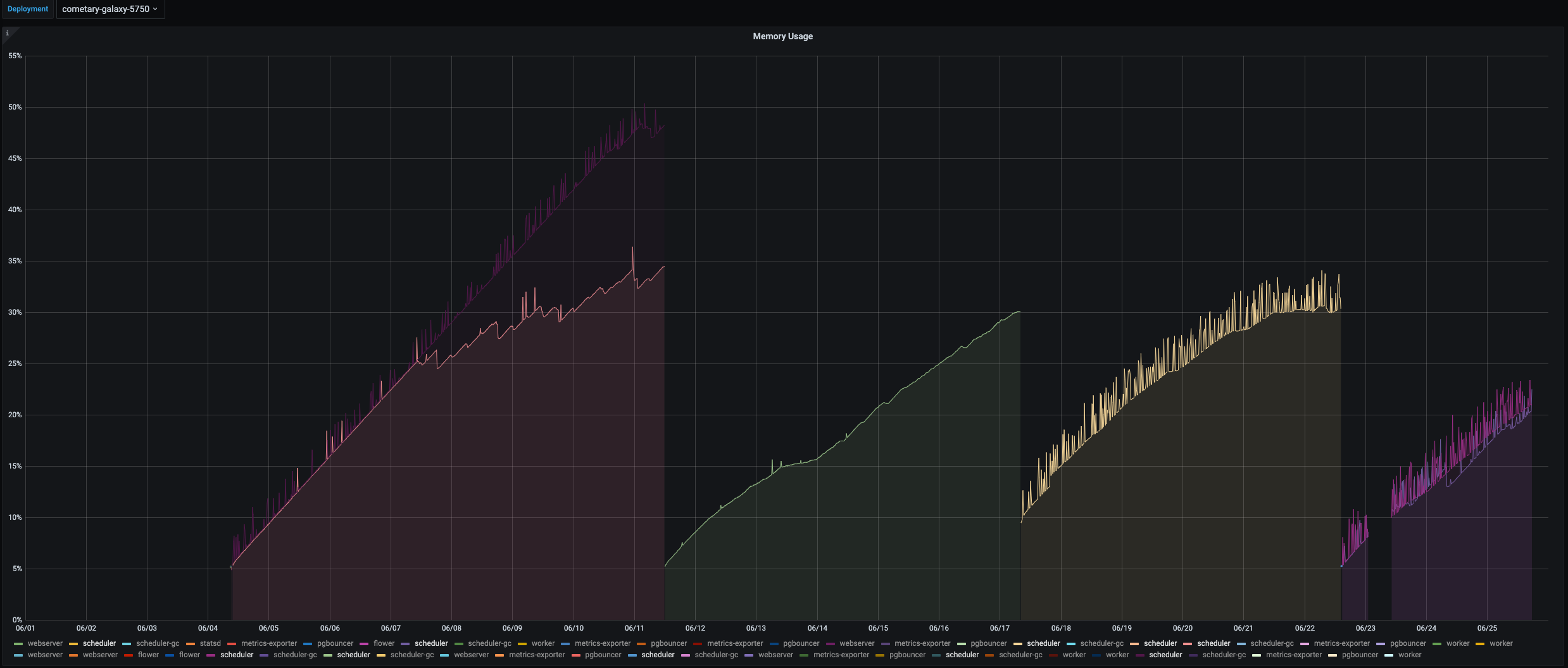

Scheduler memory usage crept up over time for no apparent reason, in a deployment with only two simple DAGs.

**What you expected to happen**:

I expect the scheduler's memory usage not to build up over time; it should remain relatively flat throughout the lifetime of the scheduler process.

**How to reproduce it**:

Start a scheduler process in a pod and watch the memory usage over time.

The scheduler was configured as follows:

- 1 CPU and 3.75 GB memory

- CeleryExecutor

- 2 parsing processes

- 2 simple DAGs
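One possible way to "watch the memory usage over time" from inside the pod is sketched below. It is only an illustration, not part of the original report: it assumes a Linux container where `/proc/<pid>/status` is readable, and the helper names (`parse_vmrss_kib`, `read_rss_kib`, `growth_kib_per_hour`, `watch`) as well as the sampling interval are hypothetical choices.

```python
import time


def parse_vmrss_kib(status_text):
    """Return the VmRSS value in KiB from /proc/<pid>/status text, or None."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            # The line looks like "VmRSS:     123456 kB".
            return int(line.split()[1])
    return None


def read_rss_kib(pid):
    """Read the resident set size of a process (Linux only)."""
    with open(f"/proc/{pid}/status") as fh:
        return parse_vmrss_kib(fh.read())


def growth_kib_per_hour(samples):
    """Least-squares slope of (seconds, KiB) samples, scaled to KiB per hour."""
    n = len(samples)
    if n < 2:
        return 0.0
    mean_t = sum(t for t, _ in samples) / n
    mean_r = sum(r for _, r in samples) / n
    num = sum((t - mean_t) * (r - mean_r) for t, r in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    return 0.0 if den == 0 else num / den * 3600


def watch(pid, interval_s=60, count=60):
    """Sample the RSS of `pid` every `interval_s` seconds, `count` times."""
    samples = []
    start = time.monotonic()
    for _ in range(count):
        rss = read_rss_kib(pid)
        if rss is None:
            break  # Kernel threads and zombies expose no VmRSS line.
        samples.append((time.monotonic() - start, rss))
        print(f"{samples[-1][0]:8.0f}s  {rss} KiB")
        time.sleep(interval_s)
    return samples
```

Calling `watch(pid)` with the scheduler's process ID and passing the result to `growth_kib_per_hour` gives a rough growth rate. A value that stays clearly positive over several hours would be consistent with the behaviour described above, although it does not by itself identify the cause.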
