dungdm93 opened a new issue #17202: URL: https://github.com/apache/airflow/issues/17202

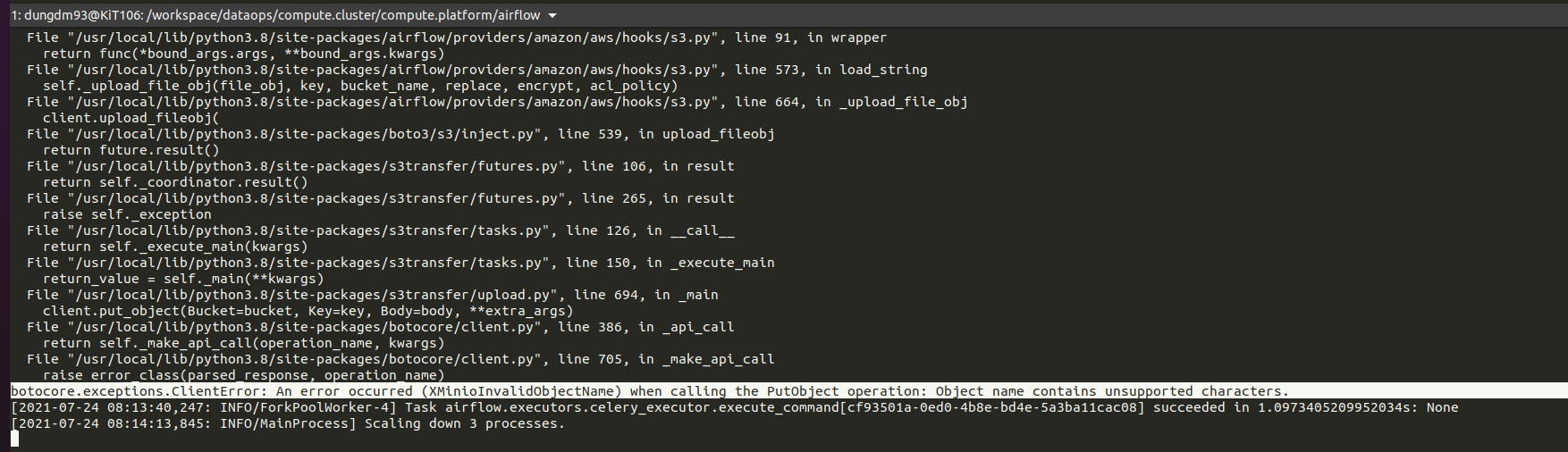

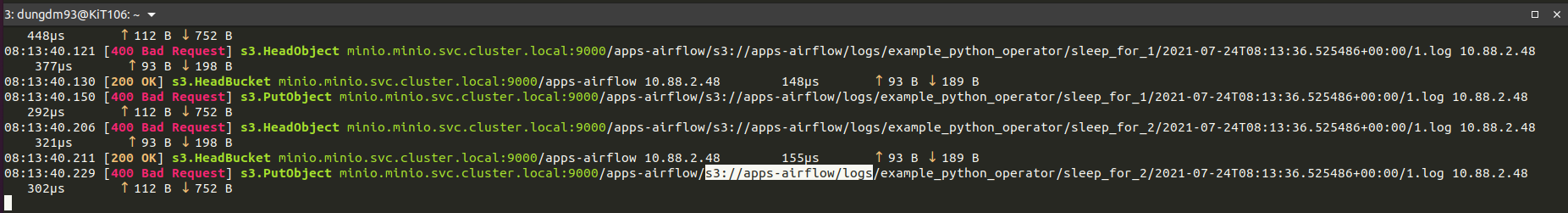

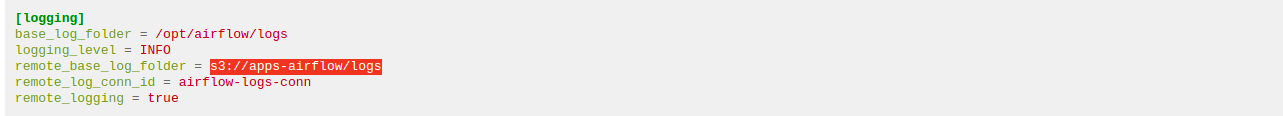

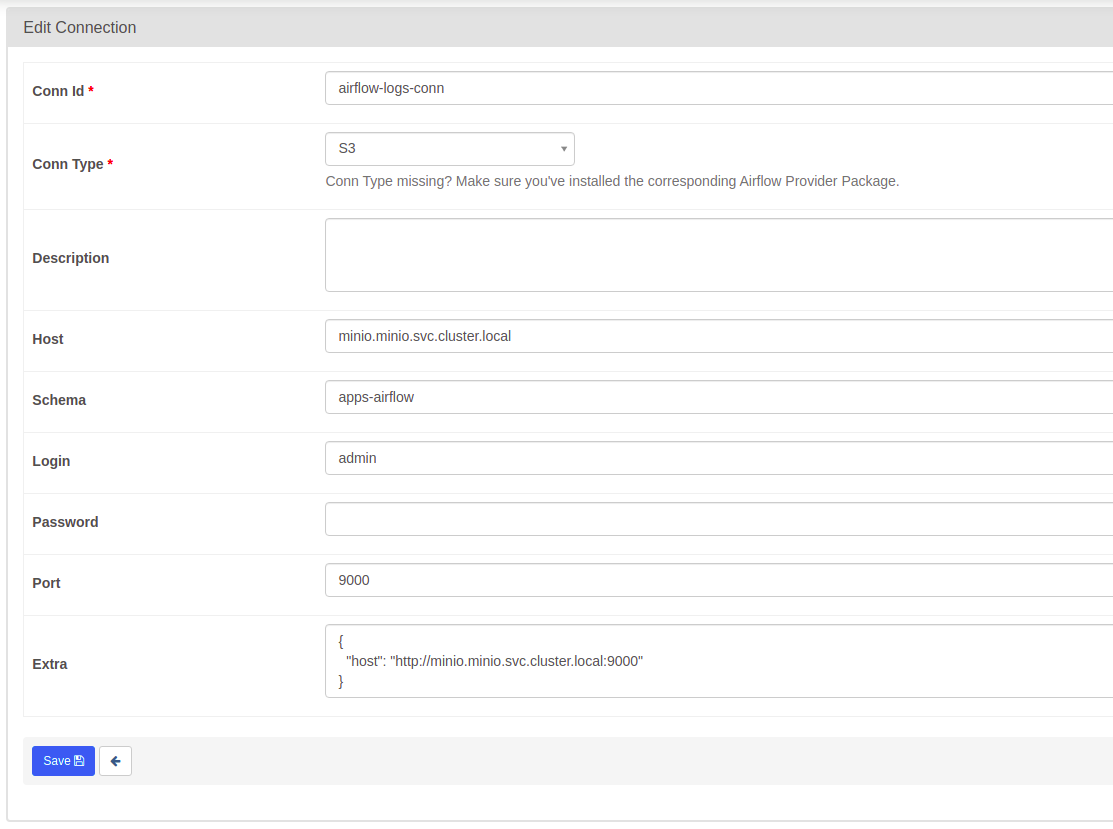

**Apache Airflow version**: `2.1.2+d25854dd413aa68ea70fb1ade7fe01425f456192`

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`): `v1.19.10-gke.1600`

**Environment**:

- **Cloud provider or hardware configuration**:
- **OS** (e.g. from /etc/os-release):
- **Kernel** (e.g. `uname -a`):
- **Install tools**:
- **Others**:

**What happened**:

My Airflow cluster sends remote task logs to MinIO (an S3-compatible object store), following [this guide](https://airflow.apache.org/docs/apache-airflow-providers-amazon/stable/logging/s3-task-handler.html). But when some of the example DAGs run, I get the following error:

After some investigation, I found that Airflow does not strip the `s3://<bucket>` part from `remote_base_log_folder` when uploading logs to S3 (output of `mc admin trace <target>`):

**What you expected to happen**:

`s3://<bucket>` MUST be stripped from `remote_base_log_folder`. Alternatively, it should be possible to configure `remote_base_log_folder` without the `s3://` prefix and bucket (i.e. only the base path).

**How to reproduce it**:

* Airflow config:
* Airflow logs connection:
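The following minimal Python sketch makes the expected behaviour concrete. It splits `remote_base_log_folder` into a bucket and a key prefix before building the object key, so the request targets the bucket and the key never carries the `s3://<bucket>` part. The helper names `split_s3_url` and `log_object_key`, the bucket `airflow-logs`, and the sample log path are hypothetical and are included only for illustration. The sample path is assumed to follow Airflow's default `dag_id/task_id/ts/try_number.log` layout. This is not Airflow's actual implementation.

```python
from typing import Tuple
from urllib.parse import urlsplit


def split_s3_url(url: str) -> Tuple[str, str]:
    """Split an s3://bucket/prefix URL into (bucket, prefix).

    Hypothetical helper: illustrates the truncation the issue expects
    to happen to remote_base_log_folder before any S3 request is made.
    """
    parts = urlsplit(url)
    if parts.scheme != "s3" or not parts.netloc:
        raise ValueError(f"not an s3://bucket/... URL: {url!r}")
    # The bucket is the "host" part; the prefix is the path without its leading slash.
    return parts.netloc, parts.path.lstrip("/")


def log_object_key(remote_base_log_folder: str, relative_log_path: str) -> Tuple[str, str]:
    """Return (bucket, key) for a task log stored under the remote base folder."""
    bucket, prefix = split_s3_url(remote_base_log_folder)
    # Join prefix and relative path with a single "/", skipping an empty prefix.
    pieces = (prefix.rstrip("/"), relative_log_path.lstrip("/"))
    key = "/".join(p for p in pieces if p)
    return bucket, key


if __name__ == "__main__":
    bucket, key = log_object_key(
        "s3://airflow-logs/prod",
        "example_dag/task_1/2021-07-24T00:00:00+00:00/1.log",
    )
    print(bucket)  # airflow-logs
    print(key)     # prod/example_dag/task_1/2021-07-24T00:00:00+00:00/1.log
```

With this split, an S3-compatible server such as MinIO would receive a request for bucket `airflow-logs` and key `prod/...`. It would not receive a key that still begins with `s3://airflow-logs/`.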