mgorsk1 opened a new issue #17238: URL: https://github.com/apache/airflow/issues/17238

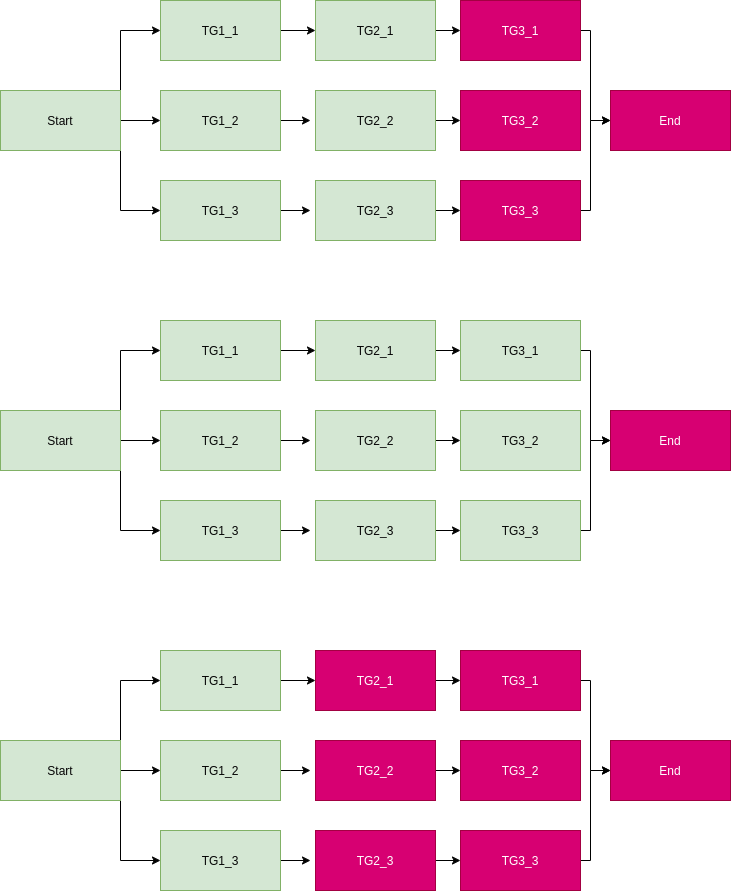

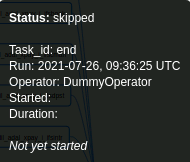

**Apache Airflow version**: 2.1.2

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`): v1.19.4

**Environment**:

- **Cloud provider or hardware configuration**: self-hosted k8s
- **OS** (e.g. from /etc/os-release):
- **Kernel** (e.g. `uname -a`):
- **Install tools**:
- **Others**: KubernetesExecutor

**What happened**:

We are running a DAG on k8s using DummyOperator, PythonOperator and KubernetesPodOperator. From time to time, random tasks in the DAG are marked as `skipped`, even though we do not raise AirflowSkipException in our code and all upstream tasks finished successfully.

From the UI it looks as if the task was not run at all (there are no task execution logs in the UI), but the task pod (worker) was in fact executed and finished. In the Airflow scheduler logs we can find the unique pod name for the `end` task, along with log lines showing that it completed successfully. Additionally, our k8s monitoring confirms that a pod with that name existed in the namespace at exactly the time the Airflow logs say it was spawned.

The problem occurs for every type of operator (not only for the DummyOperator used for the `end` task). It also causes the whole DAG run to end up in the `failed` state.

**What you expected to happen**:

Both the task instance and the DAG run are `success`.

**How to reproduce it**:

It occurs randomly and we are not sure how to reproduce it. It seems to occur more often when many DAGs/tasks are running in Airflow at once (although we limit task parallelism to 16 per DAG and 32 for the whole Airflow instance).

**Anything else we need to know**:

Interestingly, it almost always affects a whole group of tasks at once. The attached diagram shows the rendered DAG and three equally likely outcomes (green box: task = success; pink box: task = skipped). TG1, TG2 and TG3 identify the different operators used (either PythonOperator or KubernetesPodOperator).

**How often does this problem occur? Once? Every time etc?**

It occurs randomly: one day the same DAG runs fine, the next it suffers from this issue.

**Any relevant logs to include?**

The attached log shows that Airflow actually executed the worker pod for the task and that it succeeded, yet the task status in the UI says otherwise.