zockette edited a comment on issue #19409: URL: https://github.com/apache/airflow/issues/19409#issuecomment-962650499

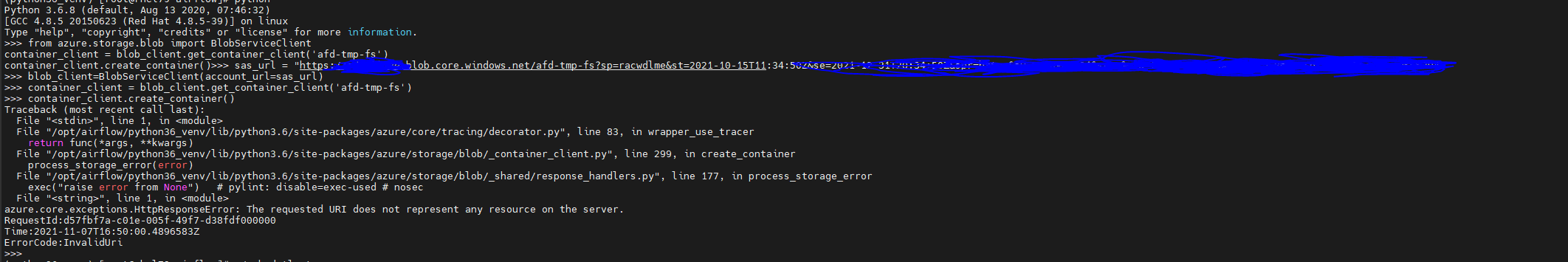

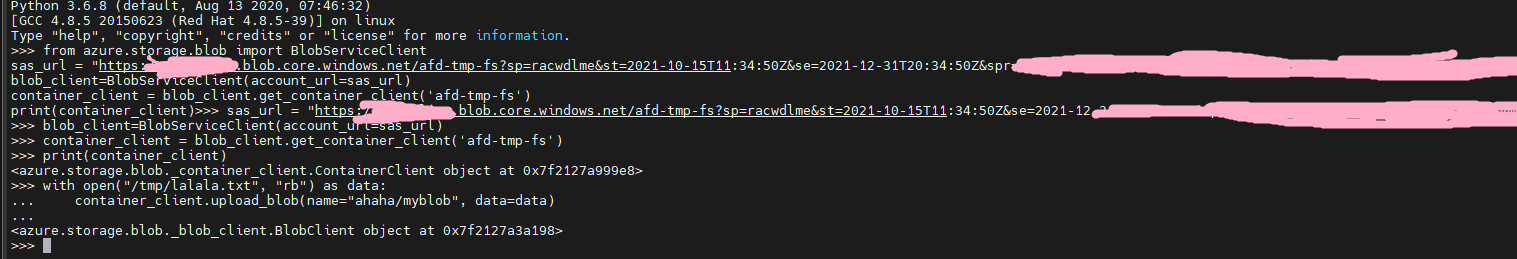

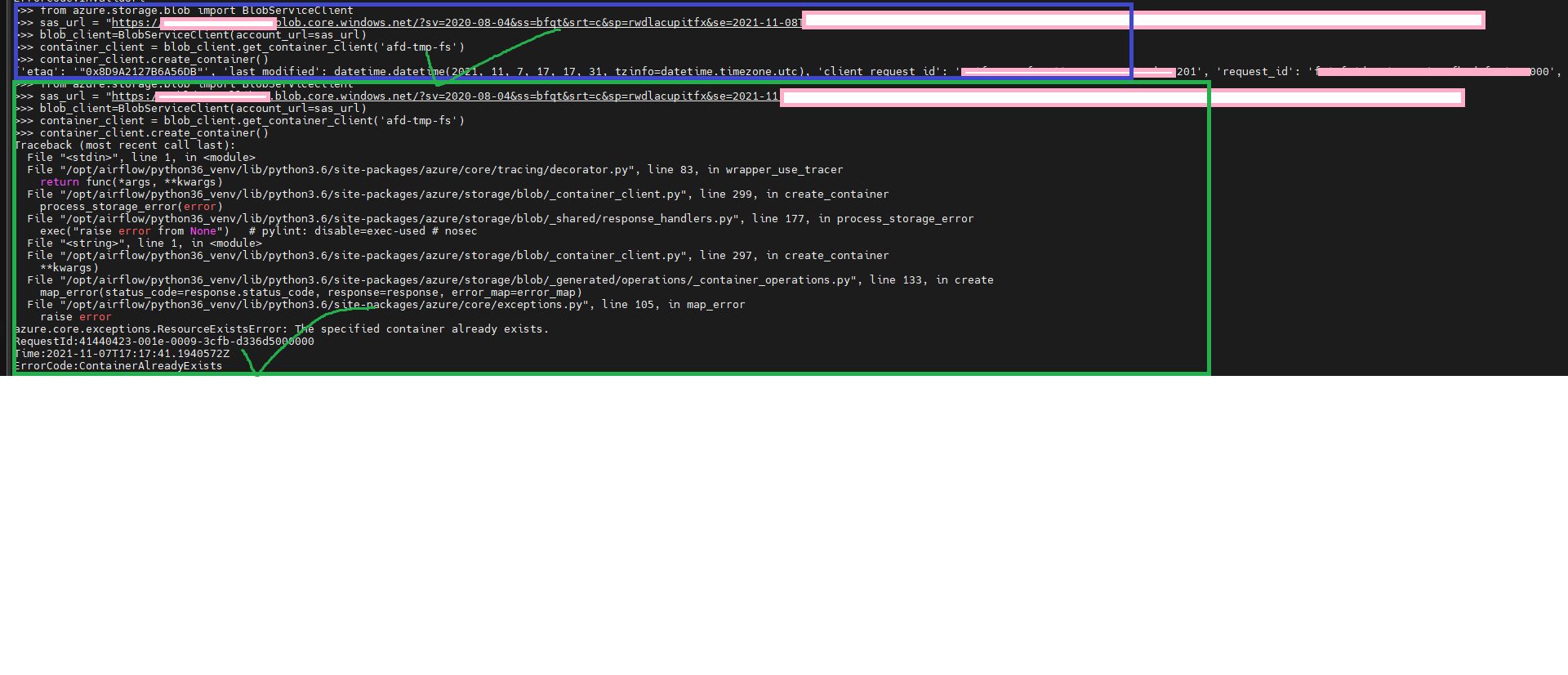

Well, I can say that the create_container error is the same via the CLI (image 1), while uploading works just fine (image 2).

Your SAS token has been generated at Storage level, not at Container level, yes? I'm pretty sure, because Storage level tokens start with `?sv` while Container level tokens start with `?sp`.

After further digging I can fairly confidently say that BlobServiceClient doesn't behave the same with a Container level SAS token: it only works "as intended" with a Storage level SAS token. It works fine with a Storage level token (image 3).

So now the question, I guess, is: should and will the microsoft-azure provider handle the error raised when running create_container with a Container level SAS token? Handling could be done in one of three ways:

- confirming the behaviour with the maintainers of the BlobServiceClient code and adding it to the error mappings;
- adding a flag at operator level to bypass the container existence check / container creation attempt;
- documenting that Container level SAS tokens are not supported (pls no D:).

Also, one extra bit of weirdness: using a Container level SAS token makes the "container" parameter of the operator act as a path inside the container. Not sure if that's intended :-)
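Checking the first query parameter may not be a reliable way to tell the two token kinds apart, since parameter order can vary. As a rough sketch only: per the Azure SAS documentation, an account (Storage level) SAS carries the `ss` and `srt` parameters, while a service SAS carries `sr` (`c` for a container, `b` for a blob). The helper name `classify_sas_token` and the sample tokens below are made up for illustration; they are not part of the provider or the Azure SDK.

```python
from urllib.parse import parse_qs


def classify_sas_token(token: str) -> str:
    """Guess the scope of a SAS token from its query parameters.

    Hypothetical helper for illustration; returns 'account',
    'container', 'blob', or 'unknown'.
    """
    params = parse_qs(token.lstrip("?"))

    # Account SAS: signed services (ss) plus signed resource types (srt).
    if "ss" in params and "srt" in params:
        return "account"

    # Service SAS: signed resource (sr), 'c' = container, 'b' = blob.
    resource = params.get("sr", [""])[0]
    if resource == "c":
        return "container"
    if resource == "b":
        return "blob"
    return "unknown"


# Fake tokens with placeholder signatures, only to exercise the helper.
storage_level = "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlac&se=2021-12-01T00:00:00Z&sig=FAKE"
container_level = "?sp=racwdl&se=2021-12-01T00:00:00Z&sv=2020-08-04&sr=c&sig=FAKE"

print(classify_sas_token(storage_level))
print(classify_sas_token(container_level))
```

Something along these lines could, in principle, let the operator skip the container creation attempt when the token is scoped to a container, but that is a design decision for the provider maintainers.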