Yuriowindiatmoko2401 opened a new issue, #23878: URL: https://github.com/apache/airflow/issues/23878

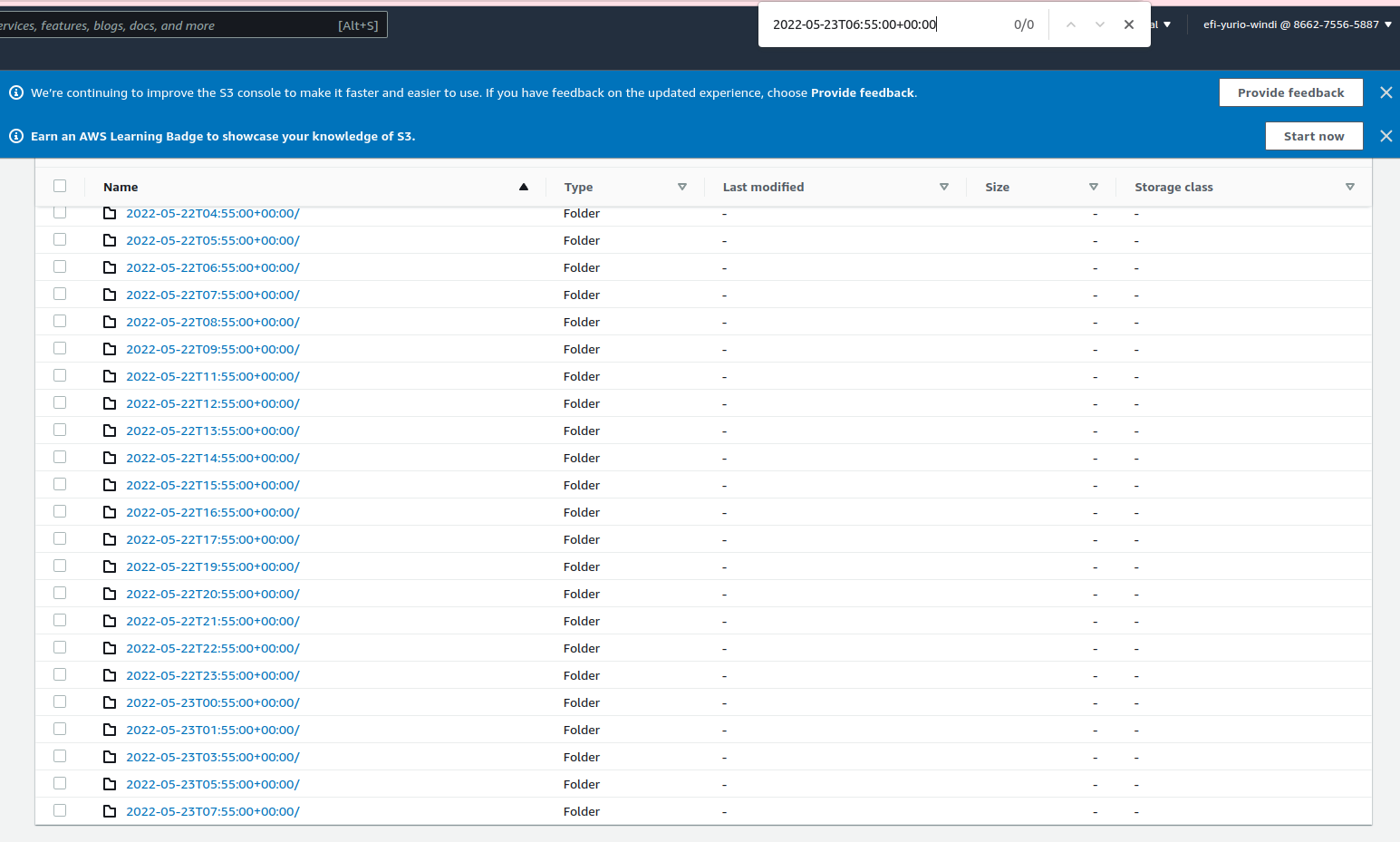

### Apache Airflow version

2.1.2

### What happened

Task logs from our Celery workers are sometimes not written to the S3 remote log bucket. We run two Celery workers on Airflow 2.1.2 as Docker containers inside a Nomad cluster. This is not a Docker Compose setup: each component runs in its own independent container. The containers connect to each other through a database connection, and RabbitMQ is the broker.

This is what the UI shows when opening the task log: the log is not found.

<img width="1420" alt="Screen Shot 2022-05-24 at 10 42 38" src="https://user-images.githubusercontent.com/33838663/169944422-880d6394-d0a8-457d-9830-2620b7254829.png">

I then looked for the log in the S3 bucket directly, and it was not there either. Next I searched inside the first worker container, and it was not found there:

<img width="1318" alt="Screen Shot 2022-05-24 at 10 49 34" src="https://user-images.githubusercontent.com/33838663/169945165-e104f9a2-e669-4c40-914d-5eaad73ef5cc.png">

In the second worker container, however, the log file is present, and it shows that the task succeeded without errors:

<img width="1200" alt="Screen Shot 2022-05-24 at 10 49 16" src="https://user-images.githubusercontent.com/33838663/169945175-58573cc5-efe0-47c8-98b3-36419038d330.png">

This is our `airflow info` output:

```bash
airflow@6a19f3dee333:/opt/airflow/logs/reverse_etl_sales_farm_activity/load_leads_ref$ airflow info

Apache Airflow
version                | 2.1.2
executor               | CeleryExecutor
task_logging_handler   | airflow.providers.amazon.aws.log.s3_task_handler.S3TaskHandler
sql_alchemy_conn       | postgresql+psycopg2://*******:*******@*******:6432/airflow-prod
dags_folder            | /opt/airflow/dags
plugins_folder         | /opt/airflow/plugins
base_log_folder        | /opt/airflow/logs
remote_base_log_folder | s3://efi-data/efi-airflow/logs/

System info
OS              | Linux
architecture    | x86_64
uname           | uname_result(system='Linux', node='6a19f3dee333', release='4.14.193-149.317.amzn2.x86_64', version='#1 SMP Thu Sep 3 19:04:44 UTC 2020', machine='x86_64', processor='')
locale          | ('en_US', 'UTF-8')
python_version  | 3.6.15 (default, Dec 3 2021, 03:28:14) [GCC 8.3.0]
python_location | /usr/local/bin/python

Tools info
git             | NOT AVAILABLE
ssh             | OpenSSH_7.9p1 Debian-10+deb10u2, OpenSSL 1.1.1d 10 Sep 2019
kubectl         | NOT AVAILABLE
gcloud          | NOT AVAILABLE
cloud_sql_proxy | NOT AVAILABLE
mysql           | mysql Ver 8.0.28 for Linux on x86_64 (MySQL Community Server - GPL)
sqlite3         | 3.27.2 2019-02-25 16:06:06 bd49a8271d650fa89e446b42e513b595a717b9212c91dd384aab871fc1d0alt1
psql            | psql (PostgreSQL) 11.14 (Debian 11.14-0+deb10u1)

Paths info
airflow_home    | /opt/airflow
system_path     | /home/airflow/.local/bin:/usr/local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
python_path     | /home/airflow/.local/bin:/usr/local/lib/python36.zip:/usr/local/lib/python3.6:/usr/local/lib/python3.6/lib-dynload:/home/airflow/.local/lib/python3.6/site-packages:/usr/local/lib/python3.6/site-packages:/opt/airflow/dags:/opt/airflow/config:/opt/airflow/plugins
airflow_on_path | True

Providers info
apache-airflow-providers-amazon          | 2.0.0
apache-airflow-providers-celery          | 2.0.0
apache-airflow-providers-cncf-kubernetes | 2.0.0
apache-airflow-providers-docker          | 2.0.0
apache-airflow-providers-elasticsearch   | 2.0.2
apache-airflow-providers-ftp             | 2.0.0
apache-airflow-providers-google          | 4.0.0
apache-airflow-providers-grpc            | 2.0.0
apache-airflow-providers-hashicorp       | 2.0.0
apache-airflow-providers-http            | 2.0.0
apache-airflow-providers-imap            | 2.0.0
apache-airflow-providers-microsoft-azure | 3.0.0
apache-airflow-providers-mongo           | 2.3.1
apache-airflow-providers-mysql           | 2.0.0
apache-airflow-providers-odbc            | 2.0.0
apache-airflow-providers-postgres        | 2.0.0
apache-airflow-providers-redis           | 2.0.0
apache-airflow-providers-sendgrid        | 2.0.0
apache-airflow-providers-sftp            | 2.0.0
apache-airflow-providers-slack           | 4.0.0
apache-airflow-providers-sqlite          | 2.0.0
apache-airflow-providers-ssh             | 2.0.0
```

### What you think should happen instead

All task logs should be written to the S3 remote log location.

### How to reproduce

_No response_

### Operating System

PRETTY_NAME="Debian GNU/Linux 10 (buster)"
NAME="Debian GNU/Linux"
VERSION_ID="10"
VERSION="10 (buster)"
VERSION_CODENAME=buster
ID=debian
HOME_URL="https://www.debian.org/"
SUPPORT_URL="https://www.debian.org/support"
BUG_REPORT_URL="https://bugs.debian.org/"

### Versions of Apache Airflow Providers

apache-airflow-providers-amazon==2.0.0
apache-airflow-providers-celery==2.0.0
apache-airflow-providers-cncf-kubernetes==2.0.0
apache-airflow-providers-docker==2.0.0
apache-airflow-providers-elasticsearch==2.0.2
apache-airflow-providers-ftp==2.0.0
apache-airflow-providers-google==4.0.0
apache-airflow-providers-grpc==2.0.0
apache-airflow-providers-hashicorp==2.0.0
apache-airflow-providers-http==2.0.0
apache-airflow-providers-imap==2.0.0
apache-airflow-providers-microsoft-azure==3.0.0
apache-airflow-providers-mongo==2.3.1
apache-airflow-providers-mysql==2.0.0
apache-airflow-providers-odbc==2.0.0
apache-airflow-providers-postgres==2.0.0
apache-airflow-providers-redis==2.0.0
apache-airflow-providers-sendgrid==2.0.0
apache-airflow-providers-sftp==2.0.0
apache-airflow-providers-slack==4.0.0
apache-airflow-providers-sqlite==2.0.0
apache-airflow-providers-ssh==2.0.0

### Deployment

Other Docker-based deployment

### Deployment details

Docker containers inside a Nomad cluster, with a separate container for each component: the workers, the scheduler, Flower, PostgreSQL, and RabbitMQ.

### Anything else

Sometimes a task's logs are not written to S3, and I don't know how to debug this.

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)
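One way to start debugging a missing log is to take one specific task try and check whether its file exists on each worker and in S3. The sketch below assumes the default Airflow 2.1 `[logging] log_filename_template` (`{{ ti.dag_id }}/{{ ti.task_id }}/{{ ts }}/{{ try_number }}.log`). It also assumes that, as I understand `S3TaskHandler`, the log is first written under `base_log_folder` on the worker that ran the task and is only uploaded to `remote_base_log_folder` when the handler is closed. All function names are hypothetical helpers, not Airflow APIs. `s3_log_exists` also assumes that `boto3` and AWS credentials are available. The timestamp in the example is made up.

```python
"""Hypothetical helper for locating one task try's log (a sketch, not part of Airflow).

Assumes Airflow 2.1's default [logging] log_filename_template:
    {{ ti.dag_id }}/{{ ti.task_id }}/{{ ts }}/{{ try_number }}.log
"""
import os
import posixpath
from typing import Tuple
from urllib.parse import urlparse


def relative_log_path(dag_id: str, task_id: str, ts: str, try_number: int) -> str:
    # Mirrors the assumed default template; adjust if log_filename_template was customized.
    return f"{dag_id}/{task_id}/{ts}/{try_number}.log"


def s3_location(remote_base: str, rel_path: str) -> Tuple[str, str]:
    # Join remote_base_log_folder and the relative path, then split into (bucket, key).
    url = urlparse(posixpath.join(remote_base, rel_path))
    if url.scheme != "s3":
        raise ValueError(f"expected an s3:// URL, got {remote_base!r}")
    return url.netloc, url.path.lstrip("/")


def local_log_exists(base_log_folder: str, rel_path: str) -> bool:
    # Run this inside each worker container: only the worker that executed the try has the file.
    return os.path.isfile(os.path.join(base_log_folder, rel_path))


def s3_log_exists(bucket: str, key: str) -> bool:
    # Needs boto3 and AWS credentials; imported lazily so the helpers above work without them.
    import boto3
    from botocore.exceptions import ClientError

    try:
        boto3.client("s3").head_object(Bucket=bucket, Key=key)
        return True
    except ClientError:
        # head_object raises ClientError for a missing key (404) or denied access (403).
        return False


if __name__ == "__main__":
    # Example values; the timestamp and try number are made up for illustration.
    rel = relative_log_path(
        "reverse_etl_sales_farm_activity", "load_leads_ref", "2022-05-24T00:00:00+00:00", 1
    )
    print("local:", local_log_exists("/opt/airflow/logs", rel))
    print("s3 location:", s3_location("s3://efi-data/efi-airflow/logs/", rel))
```

Suppose `local_log_exists` returns True on one worker while `s3_log_exists` returns False for the matching key. That would suggest the file was written but never uploaded from that worker, which points at the upload-on-close step rather than at the webserver's log lookup.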