potiuk commented on code in PR #23715:

URL: https://github.com/apache/airflow/pull/23715#discussion_r902282371

##########

dev/breeze/src/airflow_breeze/commands/testing_commands.py:

##########

@@ -220,6 +227,48 @@ def run_with_progress(

finally:

os.unlink(f.name)

return result

+

+def run_tests(

+ shell_params: ShellParams, dry_run: bool, verbose: bool,

extra_pytest_args: Tuple

+) -> Tuple[int, str]:

+ env_variables = get_env_variables_for_docker_commands(shell_params)

+ perform_environment_checks(verbose=verbose)

+ cmd = ['docker-compose', 'run', '--service-ports', '--rm', 'airflow']

+ cmd.extend(list(extra_pytest_args))

+ test_result = run_command(

+ cmd,

+ verbose=verbose,

+ dry_run=dry_run,

+ env=env_variables,

+ )

+ return (

+ test_result.returncode,

+ f"Running tests for Python {shell_params.python}",

+ )

+

+

+def run_tests_in_parallel(

+ shell_params_list: List[ShellParams],

+ python_version_list: List[str],

+ parallelism: int,

+ dry_run: bool,

+ verbose: bool,

+):

+ """Run tests in parallel"""

+ get_console().print(

+ f"\n[info]Running tests with parallelism = {parallelism} "

+ f"for the python versions: {python_version_list}[/]"

Review Comment:

One big comment here @Bowrna - we do not want to run tests in parallel for

different Python versions, but we want to run them for different test types. So

instead of passing list of python versions here we should pass the list of test

types that we want to run.

The thing is that we want to use the same Pythoin image downloaded once to

run all the tests on one machine. See any of the CI builds here:

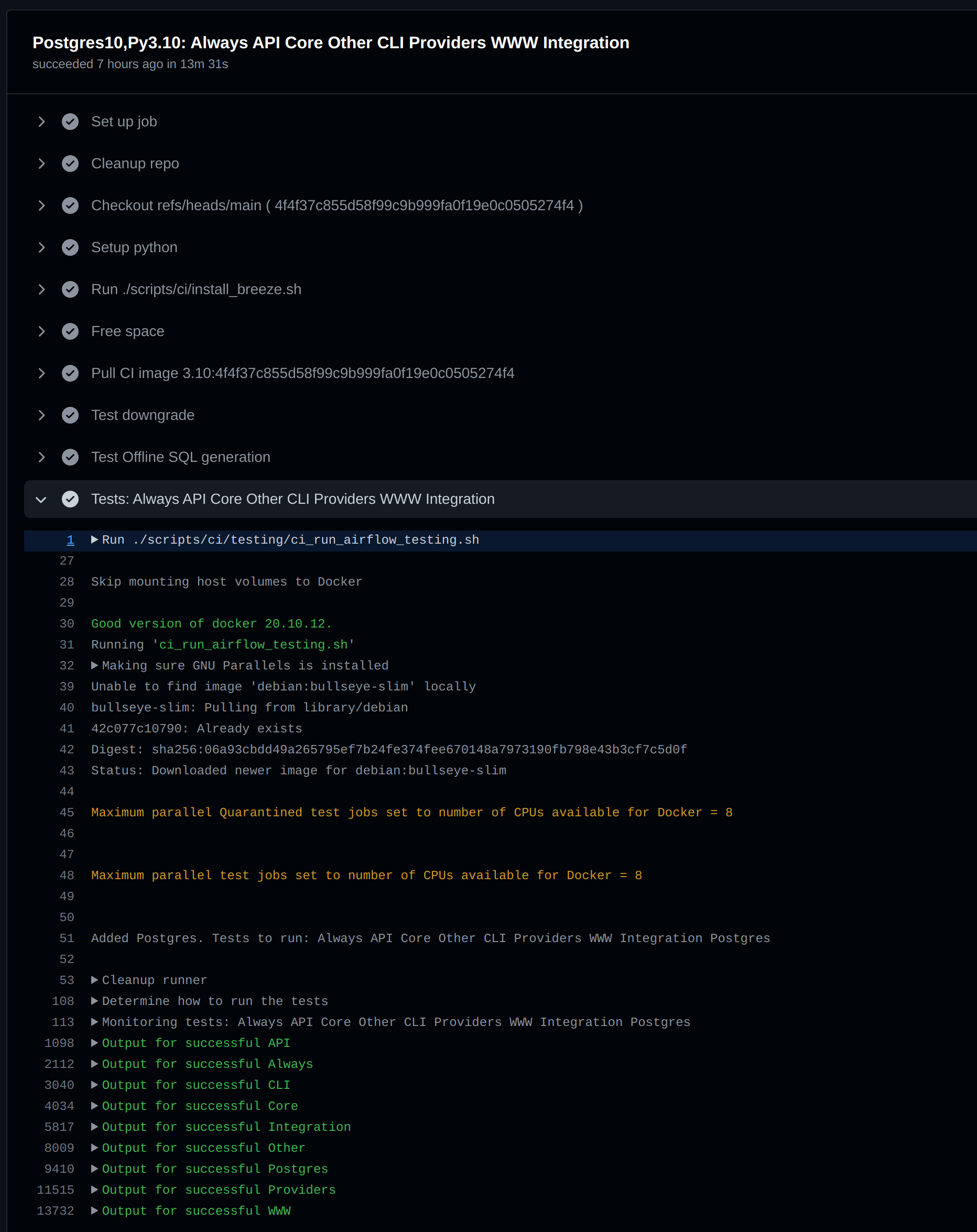

For example here:

https://github.com/apache/airflow/runs/6976269780?check_suite_focus=true#step:10:1

All those test types are run using Python 3.10 (and the same python image) -

and parallelism is done via running those test types in parallel:

"Always API Core Other CLI Providers WWW Integration Postgres":

Our goal is the same. You should be able to run - in parallell tests for

example with:

```

breeze tests --test-types "Always Core Other CLI Providers WWW Integration

Postgres" --run-in-parallell --parallelism 4

``

This should run all those test types in parallell (max 4 parallel task

should run at the same time). Under the hood it should run 4 docker-compose

commands to run tests in parallel (and when each finishes, it should start the

next one). This is why pool.apply_async does for us.

But we need to make sure those parallel runs CAN actualy run in parallel.

The problem are not the containers, the problem is database. Each of those

tests should run its own docker-compose under the hood - Docker-compose starts

database (and in case of integration tests also integrations). And we cannot

really run multiple "the same" docker-composes. But this can be easily done by

setting different project name in docker-compose command - this way you can

start N parallel docker-compose commands.

Iin the current script this is done here:

https://github.com/apache/airflow/blob/main/scripts/ci/testing/ci_run_single_airflow_test_in_docker.sh#L100

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: [email protected]

For queries about this service, please contact Infrastructure at:

[email protected]