hf200012 opened a new pull request, #11927:

URL: https://github.com/apache/doris/pull/11927

```

CREATE EXTERNAL RESOURCE "spark_resource"
PROPERTIES
(
    "type" = "spark",
    "spark.master" = "yarn",
    "spark.submit.deployMode" = "cluster",
    "spark.executor.memory" = "1g",
    "spark.yarn.queue" = "default",
    "spark.hadoop.yarn.resourcemanager.address" = "localhost:50056",
    "spark.hadoop.fs.defaultFS" = "hdfs://localhost:9000",
    "working_dir" = "hdfs://localhost:9000/tmp/doris",
    "broker" = "my_hdfs_broker"
);

CREATE TABLE test_partition_01 (
    id int,
    name varchar(200),
    age int
)
UNIQUE KEY(`id`)
DISTRIBUTED BY HASH(`id`) BUCKETS 1
PROPERTIES (
    "replication_allocation" = "tag.location.default: 1"
);

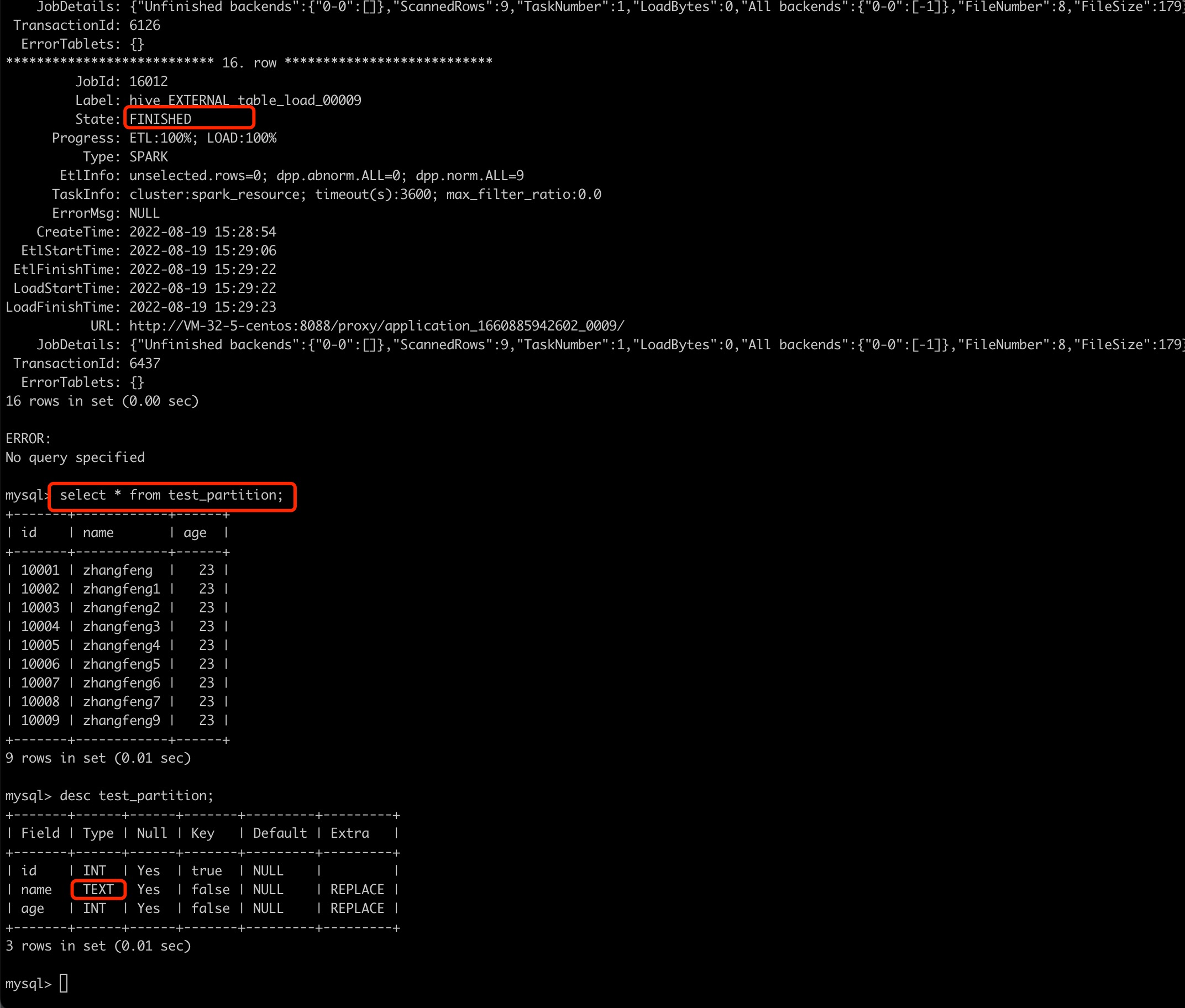

LOAD LABEL demo.hive_EXTERNAL_table_load_00009
(
    DATA INFILE("hdfs://VM-32-5-centos:9000/user/hive/warehouse/demo.db/test/*/*")
    INTO TABLE test_partition
    COLUMNS TERMINATED BY ","
    FORMAT AS "csv"
)
WITH RESOURCE 'spark_resource'
(
    "spark.executor.memory" = "1g",
    "spark.shuffle.compress" = "true"
)
PROPERTIES
(
    "timeout" = "3600"
);

```

# Proposed changes

Issue Number: close #11926

## Problem summary

Describe your changes.

## Checklist(Required)

1. Does it affect the original behavior:

- [ ] Yes

- [x] No

- [ ] I don't know

2. Have unit tests been added:

- [ ] Yes

- [x] No

- [ ] No Need

3. Has documentation been added or modified:

- [ ] Yes

- [x] No

- [ ] No Need

4. Does it need to update dependencies:

- [ ] Yes

- [x] No

5. Are there any changes that cannot be rolled back:

- [ ] Yes (If Yes, please explain WHY)

- [x] No

## Further comments

If this is a relatively large or complex change, kick off the discussion at [[email protected]](mailto:[email protected]) by explaining why you chose the solution you did and what alternatives you considered, etc...

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: [email protected]

For queries about this service, please contact Infrastructure at:

[email protected]
