DavidZ1 opened a new issue, #8060: URL: https://github.com/apache/hudi/issues/8060

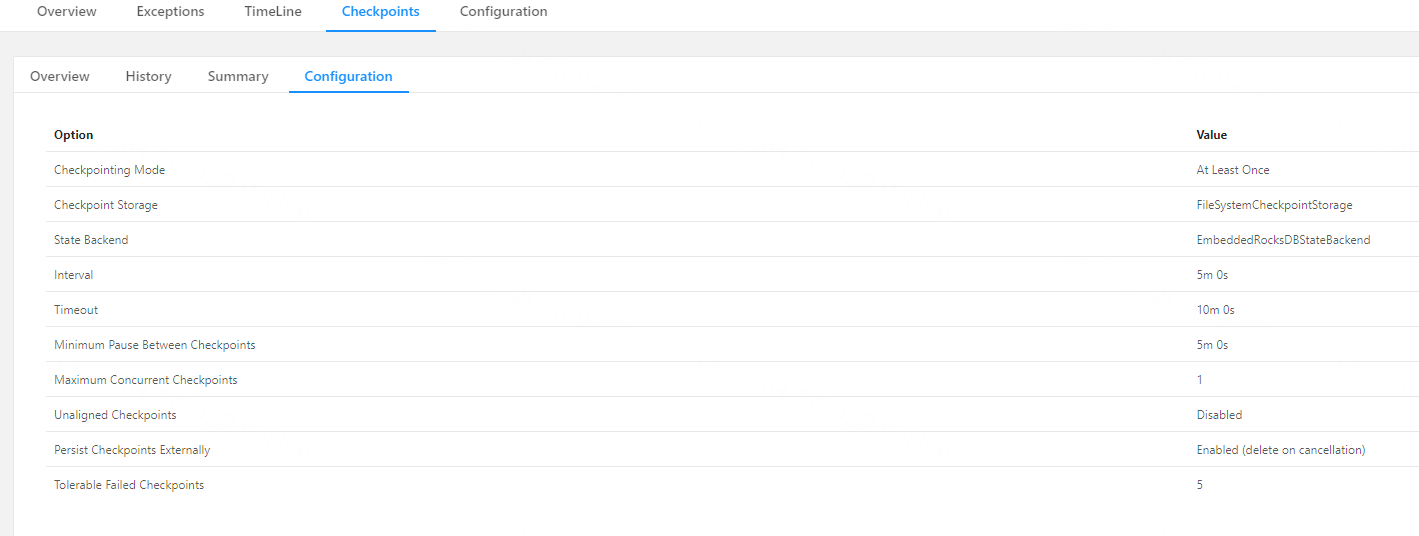

**_Tips before filing an issue_**

- Have you gone through our [FAQs](https://hudi.apache.org/learn/faq/)?
- Join the mailing list to engage in conversations and get faster support at [email protected].
- If you have triaged this as a bug, then file an [issue](https://issues.apache.org/jira/projects/HUDI/issues) directly.

**Describe the problem you faced**

We are running Hudi 0.13.0 and write to Hudi from a Flink job deployed as a jar. When we restart the Flink job, an exception about an unexpected instant is thrown and the job cannot return to normal.

**To Reproduce**

Steps to reproduce the behavior:

1. Create a Flink jar job and let it run normally for a few hours.
2. Restart the Flink job after it has been running normally for a while. The instant exception appears on restart.

**Expected behavior**

A clear and concise description of what you expected to happen.

**Environment Description**

* Hudi version : 0.13.0
* Spark version : 3.2.3
* Hive version : 3.2.1
* Hadoop version : 3.2
* Storage (HDFS/S3/GCS..) : HDFS
* Running on Docker? (yes/no) : Yes

**Additional context**

Flink DAG:

hudi config:

`
checkpoint.interval=300
checkpoint.timeout=600
compaction.max_memory=1024
payload.class.name=org.apache.hudi.common.model.OverwriteNonDefaultsWithLatestAvroPayload
compaction.delta_commits=20
compaction.trigger.strategy=num_or_time
compaction.delta_seconds=3600
clean.policy=KEEP_LATEST_COMMITS
clean.retain_commits=2
hoodie.bucket.index.num.buckets=40
archive.max_commits=50
archive.min_commits=40
compaction.async.enabled=false
table.type=MERGE_ON_READ
hoodie.datasource.write.hive_style_partitioning=true
index.type=BUCKET
write.operation=upsert
`

Add any other context about the problem here.
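For anyone triaging, the options listed above can be loaded into a map and checked for the ordering Hudi expects between cleaning and archiving (`clean.retain_commits` < `archive.min_commits` < `archive.max_commits`). This is only a minimal sketch using the Java standard library: the class name `HudiOptionsSketch` and its helper methods are hypothetical and are not part of Hudi or Flink. It does not reproduce or diagnose the failure; it only rules out one kind of misconfiguration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration only; not a Hudi or Flink class.
public class HudiOptionsSketch {

    // Options copied verbatim from the issue, as whitespace-separated key=value pairs.
    static final String REPORTED_OPTIONS =
        "checkpoint.interval=300 checkpoint.timeout=600 compaction.max_memory=1024 "
        + "payload.class.name=org.apache.hudi.common.model.OverwriteNonDefaultsWithLatestAvroPayload "
        + "compaction.delta_commits=20 compaction.trigger.strategy=num_or_time "
        + "compaction.delta_seconds=3600 clean.policy=KEEP_LATEST_COMMITS "
        + "clean.retain_commits=2 hoodie.bucket.index.num.buckets=40 "
        + "archive.max_commits=50 archive.min_commits=40 "
        + "compaction.async.enabled=false table.type=MERGE_ON_READ "
        + "hoodie.datasource.write.hive_style_partitioning=true "
        + "index.type=BUCKET write.operation=upsert";

    // Splits the raw text on whitespace and stores each key=value pair in insertion order.
    static Map<String, String> parse(String raw) {
        Map<String, String> options = new LinkedHashMap<>();
        for (String token : raw.trim().split("\\s+")) {
            int eq = token.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Not a key=value pair: " + token);
            }
            options.put(token.substring(0, eq), token.substring(eq + 1));
        }
        return options;
    }

    // Reads a required integer option, failing loudly if it is missing.
    static int intOption(Map<String, String> options, String key) {
        String value = options.get(key);
        if (value == null) {
            throw new IllegalArgumentException("Missing option: " + key);
        }
        return Integer.parseInt(value);
    }

    // True when clean.retain_commits < archive.min_commits < archive.max_commits.
    static boolean retentionOrderingHolds(Map<String, String> options) {
        int retain = intOption(options, "clean.retain_commits");
        int min = intOption(options, "archive.min_commits");
        int max = intOption(options, "archive.max_commits");
        return retain < min && min < max;
    }

    public static void main(String[] args) {
        Map<String, String> options = parse(REPORTED_OPTIONS);
        options.forEach((k, v) -> System.out.println(k + " = " + v));
        System.out.println("retention ordering holds: " + retentionOrderingHolds(options));
    }
}
```

With the values reported here (2, 40, 50) the ordering check passes, so the clean and archive settings are at least mutually consistent.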
**Stacktrace**

```Add the stacktrace of the error.```

```
2023-02-27 17:21:16
org.apache.flink.util.FlinkException: Global failure triggered by OperatorCoordinator for 'bucket_write: ods_icv_can_hudi_temp -> Sink: clean_commits' (operator 01779b9ce77677c962695f2adc9c1b67).
	at org.apache.flink.runtime.operators.coordination.OperatorCoordinatorHolder$LazyInitializedCoordinatorContext.failJob(OperatorCoordinatorHolder.java:553)
	at org.apache.hudi.sink.StreamWriteOperatorCoordinator.lambda$start$0(StreamWriteOperatorCoordinator.java:190)
	at org.apache.hudi.sink.utils.NonThrownExecutor.handleException(NonThrownExecutor.java:142)
	at org.apache.hudi.sink.utils.NonThrownExecutor.lambda$wrapAction$0(NonThrownExecutor.java:133)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:750)
Caused by: org.apache.hudi.exception.HoodieException: Executor executes action [handle write metadata event for instant ] error
	... 6 more
Caused by: java.lang.IllegalStateException: Receive an unexpected event for instant 20230227170723317 from task 37
	at org.apache.hudi.common.util.ValidationUtils.checkState(ValidationUtils.java:67)
	at org.apache.hudi.sink.StreamWriteOperatorCoordinator.handleWriteMetaEvent(StreamWriteOperatorCoordinator.java:447)
	at org.apache.hudi.sink.StreamWriteOperatorCoordinator.lambda$handleEventFromOperator$4(StreamWriteOperatorCoordinator.java:288)
	at org.apache.hudi.sink.utils.NonThrownExecutor.lambda$wrapAction$0(NonThrownExecutor.java:130)
	... 3 more
```

-- 
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: [email protected]

For queries about this service, please contact Infrastructure at: [email protected]