DavidZ1 opened a new issue, #8267: URL: https://github.com/apache/hudi/issues/8267

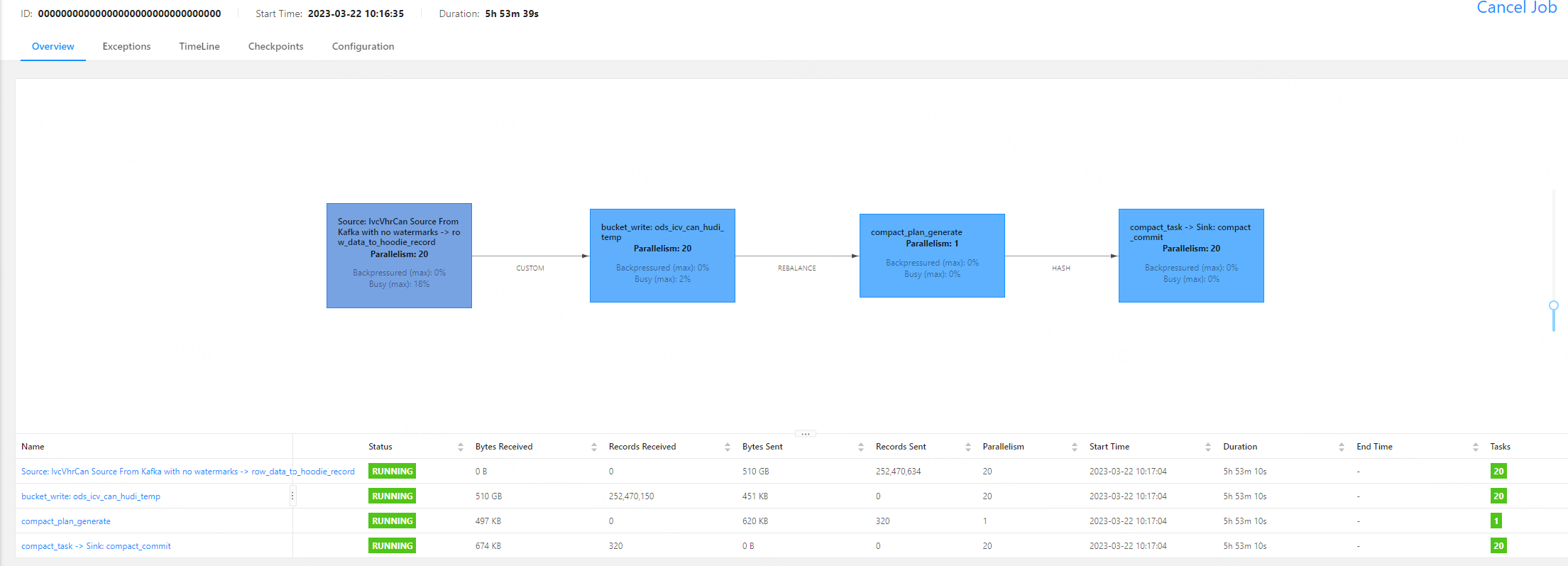

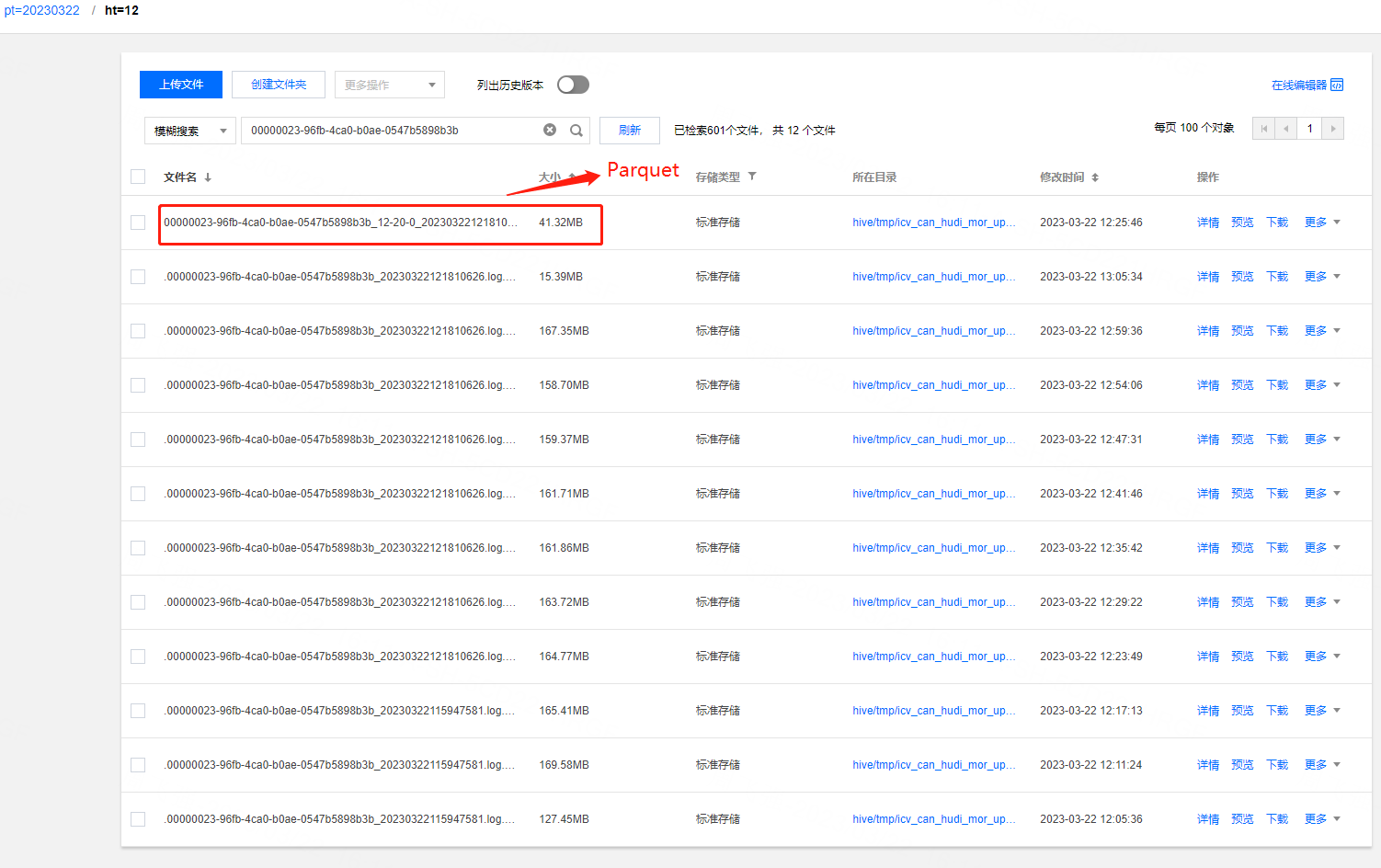

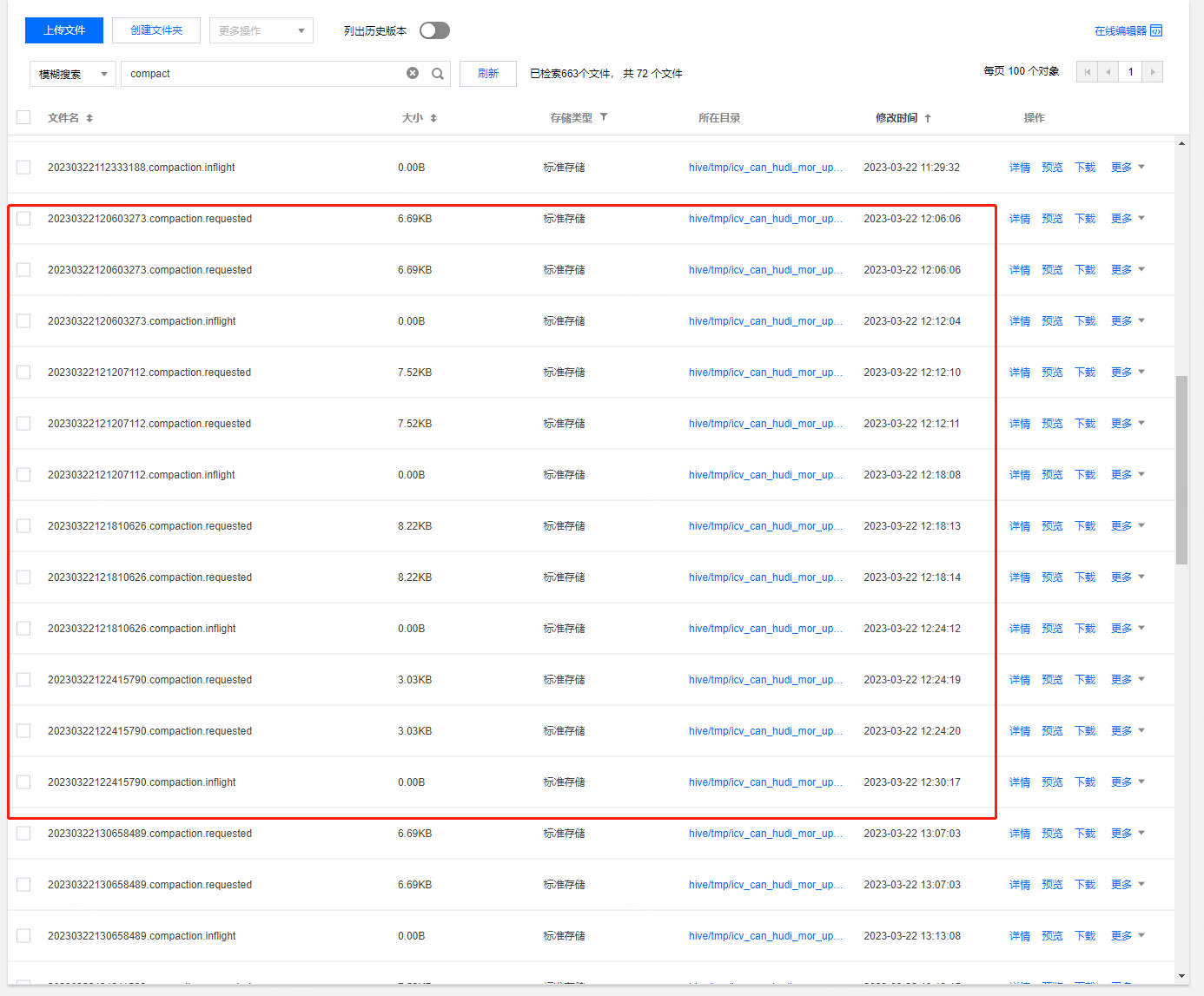

**Describe the problem you faced**

We have a Flink job that consumes Kafka messages and writes them into a Hudi MERGE_ON_READ (MOR) table. The table uses the bucket index, and the write operation is upsert. It has two levels of partitions: day and hour.

After the Flink job had been running for a while, we found that the log files in each hour partition were not being compacted into parquet files. We also checked the compaction request file and found that it did not contain all of the log files.

How can we fix this? Also, how can we tell whether the log files of a given partition have been compacted?

**Environment Description**

* Hudi version : 0.13.0
* Spark version : 3.2.1
* Hive version : 3.2.1
* Hadoop version : 3.2.1
* Storage (HDFS/S3/GCS..) : COSN
* Running on Docker? (yes/no) : yes

**Additional context**

1. Hudi config

```properties
checkpoint.interval=300
checkpoint.timeout=900
compaction.max_memory=1024
payload.class.name=org.apache.hudi.common.model.OverwriteNonDefaultsWithLatestAvroPayload
compaction.delta_commits=5
compaction.trigger.strategy=num_or_time
compaction.delta_seconds=3600
clean.policy=KEEP_LATEST_COMMITS
clean.retain_commits=1
hoodie.bucket.index.num.buckets=50
archive.max_commits=50
archive.min_commits=40
compaction.async.enabled=true
write.operation=upsert
table.type=MERGE_ON_READ
index.type=BUCKET
checkpoint.incremental.enable=true
```

2. hoodie.properties

```properties
hoodie.table.precombine.field=acquire_timestamp
hoodie.datasource.write.drop.partition.columns=false
hoodie.table.partition.fields=pt,ht
hoodie.table.type=MERGE_ON_READ
hoodie.archivelog.folder=archived
hoodie.table.cdc.enabled=false
hoodie.compaction.payload.class=org.apache.hudi.common.model.OverwriteNonDefaultsWithLatestAvroPayload
hoodie.table.version=5
hoodie.timeline.layout.version=1
hoodie.table.recordkey.fields=vin,acquire_timestamp
hoodie.datasource.write.partitionpath.urlencode=false
hoodie.table.name=ods_icv_can_hudi_temp
hoodie.table.keygenerator.class=org.apache.hudi.keygen.ComplexAvroKeyGenerator
hoodie.compaction.record.merger.strategy=eeb8d96f-b1e4-49fd-bbf8-28ac514178e5
hoodie.datasource.write.hive_style_partitioning=true
```

3. DAG (screenshot at the top of the issue)

4. Data file

For fileId `00000023-96fb-4ca0-b0ae-0547b5898b3b`, the parquet file is 40 MB, but its Avro log files total more than 1500 MB. Some of the Avro log files have therefore not been compacted into parquet.

We also found that the `*.compaction.requested` file under the `.hoodie` directory does not list all of the Avro log files.

**Stacktrace**

1. Clean exception

```
2023-03-22 14:32:37.627 [pool-18-thread-1] WARN org.apache.hudi.table.action.clean.CleanActionExecutor [] - Failed to perform previous clean operation, instant: [==>20230322143231759__clean__REQUESTED]
java.lang.NullPointerException: Expected a non-null value. Got null
	at org.apache.hudi.common.util.Option.<init>(Option.java:65) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.common.util.Option.of(Option.java:76) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.table.action.clean.CleanActionExecutor.runClean(CleanActionExecutor.java:230) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.table.action.clean.CleanActionExecutor.runPendingClean(CleanActionExecutor.java:187) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.table.action.clean.CleanActionExecutor.lambda$execute$8(CleanActionExecutor.java:256) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at java.util.ArrayList.forEach(ArrayList.java:1259) ~[?:1.8.0_332]
	at org.apache.hudi.table.action.clean.CleanActionExecutor.execute(CleanActionExecutor.java:250) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.table.HoodieFlinkCopyOnWriteTable.clean(HoodieFlinkCopyOnWriteTable.java:322) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.client.BaseHoodieTableServiceClient.clean(BaseHoodieTableServiceClient.java:554) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.client.BaseHoodieWriteClient.clean(BaseHoodieWriteClient.java:758) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.client.BaseHoodieWriteClient.clean(BaseHoodieWriteClient.java:730) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at org.apache.hudi.async.AsyncCleanerService.lambda$startService$0(AsyncCleanerService.java:55) ~[blob_p-7584645ba23f46692000bbfac6ef844cbd0e30ce-451b376bd445dd495f01c72e3dff67e5:?]
	at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604) [?:1.8.0_332]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_332]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_332]
	at java.lang.Thread.run(Thread.java:750) [?:1.8.0_332]
```

2. With a MOR table and the insert operation, we see compaction-related warnings like the one below. With a MOR table and upsert, we do not. The following warning is logged:

```
2023-03-21 14:32:36.033 [JettyServerThreadPool-334] WARN org.apache.hudi.timeline.service.RequestHandler [] - Bad request response due to client view behind server view. Last known instant from client was 20230321142047972 but server has the following timeline [[20230321125010487__deltacommit__COMPLETED], [20230321125525961__deltacommit__COMPLETED], [20230321130051999__deltacommit__COMPLETED], [20230321130617771__deltacommit__COMPLETED], [20230321131133084__deltacommit__COMPLETED], [20230321131650502__deltacommit__COMPLETED], [==>20230321132210140__compaction__INFLIGHT], [20230321132212886__deltacommit__COMPLETED], [==>20230321132729719__compaction__INFLIGHT], [20230321132731672__deltacommit__COMPLETED], [==>20230321133253906__compaction__INFLIGHT], [20230321133256109__deltacommit__COMPLETED], [==>20230321133820416__compaction__INFLIGHT], [20230321133822486__deltacommit__COMPLETED], [==>20230321134348164__compaction__INFLIGHT], [20230321134350553__deltacommit__COMPLETED], [20230321134912462__deltacommit__COMPLETED], [20230321135434761__deltacommit__COMPLETED], [20230321140440297__rollback__COMPLETED], [20230321140440947__rollback__COMPLETED], [20230321140443670__deltacommit__COMPLETED], [20230321140445923__rollback__COMPLETED], [20230321140450567__rollback__COMPLETED], [20230321140454064__rollback__COMPLETED], [20230321140456989__rollback__COMPLETED], [==>20230321140910025__compaction__REQUESTED], [20230321140913981__deltacommit__COMPLETED], [==>20230321141505445__compaction__REQUESTED], [20230321141508195__deltacommit__COMPLETED], [20230321142047972__deltacommit__COMPLETED], [20230321142644822__deltacommit__COMPLETED]]
```
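On the question of how to tell whether a partition's log files have been compacted, one option is to inspect a listing of the partition directory. The minimal sketch below makes two assumptions:

* **File naming.** It assumes Hudi 0.x naming, where a base file is `<fileId>_<writeToken>_<instantTime>.parquet` and a log file is `.<fileId>_<baseInstant>.log.<version>_<writeToken>`. Under that scheme, every log file records the base instant it was appended to.
* **Completed instants.** The caller must supply the set of completed instants, for example read from the completed `.commit` and `.deltacommit` files under `.hoodie`.

The sketch treats a log file as not yet compacted in two cases: its file group has no completed base file, or its base instant is not older than the latest completed base file's instant. The class, method, and sample file names are hypothetical. This is not a Hudi API, and it ignores details that Hudi's own file-system view handles.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical diagnostic helper; not part of Hudi.
final class CompactionStatusSketch {

    // Assumed base file name: <fileId>_<writeToken>_<instantTime>.parquet
    private static final Pattern BASE_FILE =
            Pattern.compile("^([^_]+)_[^_]+_(\\d+)\\.parquet$");
    // Assumed log file name: .<fileId>_<baseInstant>.log.<version>_<writeToken>
    private static final Pattern LOG_FILE =
            Pattern.compile("^\\.([^_]+)_(\\d+)\\.log\\.\\d+(_.*)?$");

    /** Returns the log files in one partition that no completed base file has absorbed yet. */
    static List<String> uncompactedLogs(List<String> fileNames, Set<String> completedInstants) {
        // Latest completed base-file instant per file group (fileId).
        Map<String, String> latestBase = new HashMap<>();
        for (String name : fileNames) {
            Matcher m = BASE_FILE.matcher(name);
            if (m.matches() && completedInstants.contains(m.group(2))) {
                // Instants are fixed-width digit strings here, so string order equals time order.
                latestBase.merge(m.group(1), m.group(2), (a, b) -> a.compareTo(b) >= 0 ? a : b);
            }
        }
        List<String> pending = new ArrayList<>();
        for (String name : fileNames) {
            Matcher m = LOG_FILE.matcher(name);
            if (!m.matches()) {
                continue; // not a log file
            }
            String base = latestBase.get(m.group(1));
            // No completed base file, or the log was appended on top of (or after) it: not compacted yet.
            if (base == null || m.group(2).compareTo(base) >= 0) {
                pending.add(name);
            }
        }
        return pending;
    }

    public static void main(String[] args) {
        String fid = "00000023-96fb-4ca0-b0ae-0547b5898b3b";
        // Hypothetical listing of one hour partition.
        List<String> files = Arrays.asList(
                fid + "_1-0-0_20230321132210140.parquet",
                "." + fid + "_20230321125010487.log.1_0-1-0", // older slice, folded into the parquet file
                "." + fid + "_20230321132210140.log.1_0-1-0", // appended after that compaction
                ".hoodie_partition_metadata");
        Set<String> completed = new HashSet<>(Arrays.asList("20230321132210140"));
        System.out.println(uncompactedLogs(files, completed));
    }
}
```

Running `main` reports only the log file whose base instant equals the parquet file's instant. The older log file counts as compacted, although it stays on storage until the cleaner removes its file slice.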
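The compaction settings in the Hudi config above are `compaction.trigger.strategy=num_or_time`, `compaction.delta_commits=5`, and `compaction.delta_seconds=3600`. Together they ask for a compaction plan once either 5 delta commits or 3600 seconds have accumulated since the last compaction.

The sketch below is a simplified model of that decision for reasoning about the config. It is not Hudi's implementation, and the class and method names are hypothetical. It also assumes `checkpoint.interval=300` is in seconds and that each checkpoint produces one delta commit. That matches the roughly 5-minute spacing of delta commits in the logged timeline.

```java
// Simplified, hypothetical model of the compaction.trigger.strategy options.
final class CompactionTriggerSketch {

    enum Strategy { NUM_COMMITS, TIME_ELAPSED, NUM_AND_TIME, NUM_OR_TIME }

    /**
     * @param deltaCommits   delta commits since the last compaction
     * @param elapsedSeconds seconds since the last compaction
     */
    static boolean shouldSchedule(Strategy strategy, int deltaCommits, long elapsedSeconds,
                                  int maxDeltaCommits, long maxDeltaSeconds) {
        boolean enoughCommits = deltaCommits >= maxDeltaCommits;
        boolean enoughTime = elapsedSeconds >= maxDeltaSeconds;
        switch (strategy) {
            case NUM_COMMITS:  return enoughCommits;
            case TIME_ELAPSED: return enoughTime;
            case NUM_AND_TIME: return enoughCommits && enoughTime;
            case NUM_OR_TIME:  return enoughCommits || enoughTime;
            default: throw new IllegalArgumentException("Unknown strategy: " + strategy);
        }
    }

    public static void main(String[] args) {
        long checkpointSeconds = 300; // assumption: checkpoint.interval is in seconds
        // Walk through successive checkpoints, assuming one delta commit per checkpoint.
        for (int commits = 1; commits <= 6; commits++) {
            long elapsed = commits * checkpointSeconds;
            boolean fire = shouldSchedule(Strategy.NUM_OR_TIME, commits, elapsed, 5, 3600);
            System.out.println(commits + " delta commits, " + elapsed + " s -> schedule=" + fire);
        }
    }
}
```

Under these assumptions, `main` first reports `schedule=true` at 5 delta commits (1500 s). The commit-count condition therefore fires well before the one-hour time limit.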
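The timeline in the "client view behind server view" warning contains several compaction instants that never reached COMPLETED. The sketch below pulls those instants out of a log line that uses the `[==>instant__action__STATE]` format shown in the warning. It is a hypothetical helper that works on the log text only, not a Hudi API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper that scans a logged timeline for unfinished compactions.
final class PendingCompactionSketch {

    // One instant as printed in the log, e.g. [==>20230321132210140__compaction__INFLIGHT]
    private static final Pattern INSTANT =
            Pattern.compile("\\[(?:==>)?(\\d+)__([a-z]+)__(REQUESTED|INFLIGHT|COMPLETED)\\]");

    /** Returns "instant STATE" for every compaction instant that is not COMPLETED. */
    static List<String> pendingCompactions(String loggedTimeline) {
        List<String> pending = new ArrayList<>();
        Matcher m = INSTANT.matcher(loggedTimeline);
        while (m.find()) {
            if ("compaction".equals(m.group(2)) && !"COMPLETED".equals(m.group(3))) {
                pending.add(m.group(1) + " " + m.group(3));
            }
        }
        return pending;
    }

    public static void main(String[] args) {
        // Shortened excerpt of the timeline from the warning.
        String sample = "[[20230321131650502__deltacommit__COMPLETED], "
                + "[==>20230321132210140__compaction__INFLIGHT], "
                + "[==>20230321140910025__compaction__REQUESTED], "
                + "[20230321142644822__deltacommit__COMPLETED]]";
        pendingCompactions(sample).forEach(System.out::println);
    }
}
```

On the shortened excerpt, `main` prints the two pending instants. Run on the full timeline from the warning, the same method lists five INFLIGHT and two REQUESTED compactions.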