This is an automated email from the ASF dual-hosted git repository.

jin pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/incubator-hugegraph-ai.git

The following commit(s) were added to refs/heads/main by this push:

new b027744 chore: add docker-compose deployment and improve container networking instructions (#280)

b027744 is described below

commit b027744850f75317be284dbc7c07128b3a7a7421

Author: Linyu <94553312+weijing...@users.noreply.github.com>

AuthorDate: Tue Jul 1 19:53:10 2025 +0800

chore: add docker-compose deployment and improve container networking instructions (#280)

This PR updates the hugegraph-llm/README.md and related Docker files to:

- Add a new recommended deployment method using docker-compose
  (docker/docker-compose-network.yml), enabling one-command startup of
  both HugeGraph Server and RAG containers in the same network.
- Clearly distinguish between docker-compose (multi-container,
  recommended) and manual single-container deployment, with step-by-step
  instructions for both.
- Provide explicit examples for .env mounting, network creation, and
  container inter-communication (using container name as hostname).

This update makes it much easier for users to deploy, manage, and
connect the RAG and HugeGraph containers, and helps avoid common
pitfalls with Docker networking and environment configuration.
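Here is an example of the container-name-as-hostname point. Inside the compose network, the RAG container reaches the server at `server:8080`. A process on the host reaches the same server through the published port at `127.0.0.1:8080`.

The sketch below is a hypothetical helper and is not part of hugegraph-llm. It assumes that the `HUGEGRAPH_HOST`/`HUGEGRAPH_PORT` variables set in `docker-compose-network.yml` are the values to use. The service itself may instead read its connection settings from the mounted `.env`. The helper probes `/versions`, which is the endpoint the compose healthcheck also curls.

```python
import os
import urllib.error
import urllib.request
from typing import Mapping, Optional


def hugegraph_base_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build the HugeGraph Server base URL from HUGEGRAPH_HOST / HUGEGRAPH_PORT.

    In the compose network the rag container gets HUGEGRAPH_HOST=server (the
    server's container_name). The 127.0.0.1:8080 fallback for host-side use
    is an assumption of this sketch.
    """
    env = os.environ if env is None else env
    host = env.get("HUGEGRAPH_HOST", "127.0.0.1")
    port = int(env.get("HUGEGRAPH_PORT", "8080"))
    return f"http://{host}:{port}"


def server_is_up(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if GET {base_url}/versions answers with a 2xx status."""
    try:
        with urllib.request.urlopen(f"{base_url}/versions", timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure (e.g. "server" outside the network),
        # HTTP 4xx/5xx (HTTPError is a URLError) or a timeout.
        return False
```

Inside the `rag` container, `hugegraph_base_url()` would yield `http://server:8080`. On the host, with the variables unset, it falls back to `http://127.0.0.1:8080`.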

---------

Co-authored-by: imbajin <j...@apache.org>

---

README.md                         | 194 +++++++++++++-----
docker/docker-compose-network.yml |  51 +++++
docker/env.template               |   7 +
hugegraph-llm/README.md           | 409 +++++++++++++++++++-------------------
4 files changed, 415 insertions(+), 246 deletions(-)

diff --git a/README.md b/README.md

index 638ddd4..268925c 100644

--- a/README.md

+++ b/README.md

@@ -3,56 +3,160 @@

[](https://www.apache.org/licenses/LICENSE-2.0.html)

[](https://deepwiki.com/apache/incubator-hugegraph-ai)

-`hugegraph-ai` aims to explore the integration of

[HugeGraph](https://github.com/apache/hugegraph) with artificial

-intelligence (AI) and provide comprehensive support for developers to leverage

HugeGraph's AI capabilities

-in their projects.

-

-

-## Modules

-

-- [hugegraph-llm](./hugegraph-llm): The `hugegraph-llm` will house the

implementation and research related to large language models.

-It will include runnable demos and can also be used as a third-party library,

reducing the cost of using graph systems

-and the complexity of building knowledge graphs. Graph systems can help large

models address challenges like timeliness

-and hallucination, while large models can help graph systems with cost-related

issues. Therefore, this module will

-explore more applications and integration solutions for graph systems and

large language models. (GraphRAG/Agent)

-- [hugegraph-ml](./hugegraph-ml): The `hugegraph-ml` will focus on integrating

HugeGraph with graph machine learning,

-graph neural networks, and graph embeddings libraries. It will build an

efficient and versatile intermediate layer

-to seamlessly connect with third-party graph-related ML frameworks.

-- [hugegraph-python-client](./hugegraph-python-client): The

`hugegraph-python-client` is a Python client for HugeGraph.

-It is used to define graph structures and perform CRUD operations on graph

data. Both the `hugegraph-llm` and

- `hugegraph-ml` modules will depend on this foundational library.

-

-## Learn More

-

-The [project

homepage](https://hugegraph.apache.org/docs/quickstart/hugegraph-ai/) contains

more information about

-hugegraph-ai.

-

-And here are links of other repositories:

-1. [hugegraph](https://github.com/apache/hugegraph) (graph's core component -

Graph server + PD + Store)

-2. [hugegraph-toolchain](https://github.com/apache/hugegraph-toolchain) (graph

tools

**[loader](https://github.com/apache/incubator-hugegraph-toolchain/tree/master/hugegraph-loader)/[dashboard](https://github.com/apache/incubator-hugegraph-toolchain/tree/master/hugegraph-hubble)/[tool](https://github.com/apache/incubator-hugegraph-toolchain/tree/master/hugegraph-tools)/[client](https://github.com/apache/incubator-hugegraph-toolchain/tree/master/hugegraph-client)**)

-3. [hugegraph-computer](https://github.com/apache/hugegraph-computer)

(integrated **graph computing** system)

-4. [hugegraph-website](https://github.com/apache/hugegraph-doc) (**doc &

website** code)

-

-

-## Contributing

-

-- Welcome to contribute to HugeGraph, please see

[Guidelines](https://hugegraph.apache.org/docs/contribution-guidelines/) for

more information.

-- Note: It's recommended to use [GitHub Desktop](https://desktop.github.com/)

to greatly simplify the PR and commit process.

-- Code format: Please run

[`./style/code_format_and_analysis.sh`](style/code_format_and_analysis.sh) to

format your code before submitting a PR. (Use `pylint` to check code style)

-- Thank you to all the people who already contributed to HugeGraph!

+`hugegraph-ai` integrates [HugeGraph](https://github.com/apache/hugegraph) with artificial intelligence capabilities, providing comprehensive support for developers to build AI-powered graph applications.

+

+## ✨ Key Features

+

+- **GraphRAG**: Build intelligent question-answering systems with graph-enhanced retrieval
+- **Knowledge Graph Construction**: Automated graph building from text using LLMs
+- **Graph ML**: Integration with 20+ graph learning algorithms (GCN, GAT, GraphSAGE, etc.)

+- **Python Client**: Easy-to-use Python interface for HugeGraph operations

+- **AI Agents**: Intelligent graph analysis and reasoning capabilities

+

+## 🚀 Quick Start

+

+> [!NOTE]

+> For a complete deployment guide and detailed examples, please refer to [hugegraph-llm/README.md](./hugegraph-llm/README.md)

+

+### Prerequisites

+- Python 3.9+ (hugegraph-llm requires 3.10+)

+- [uv](https://docs.astral.sh/uv/) (recommended package manager)

+- HugeGraph Server 1.3+ (1.5+ recommended)

+- Docker (optional, for containerized deployment)

+

+### Option 1: Docker Deployment (Recommended)

+

+```bash

+# Clone the repository

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai

+

+# Set up environment and start services

+cp docker/env.template docker/.env

+# Edit docker/.env to set your PROJECT_PATH

+cd docker

+docker-compose -f docker-compose-network.yml up -d

+

+# Access services:

+# - HugeGraph Server: http://localhost:8080

+# - RAG Service: http://localhost:8001

+```

+

+### Option 2: Source Installation

+

+```bash

+# 1. Start HugeGraph Server

+docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

+

+# 2. Clone and set up the project

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai/hugegraph-llm

+

+# 3. Install dependencies

+uv venv && source .venv/bin/activate

+uv pip install -e .

+

+# 4. Start the demo

+python -m hugegraph_llm.demo.rag_demo.app

+# Visit http://127.0.0.1:8001

+```

+

+### Basic Usage Examples

+

+#### GraphRAG - Question Answering

+```python

+from hugegraph_llm.operators.graph_rag_task import RAGPipeline

+

+# Initialize RAG pipeline

+graph_rag = RAGPipeline()

+

+# Ask questions about your graph

+result = (graph_rag
+    .extract_keywords(text="Tell me about Al Pacino.")
+    .keywords_to_vid()
+    .query_graphdb(max_deep=2, max_graph_items=30)
+    .synthesize_answer()
+    .run())

+```

+

+#### Knowledge Graph Construction

+```python

+from hugegraph_llm.models.llms.init_llm import LLMs

+from hugegraph_llm.operators.kg_construction_task import KgBuilder

+

+# Build KG from text

+TEXT = "Your text content here..."

+builder = KgBuilder(LLMs().get_chat_llm())

+

+(builder
+    .import_schema(from_hugegraph="hugegraph")
+    .chunk_split(TEXT)
+    .extract_info(extract_type="property_graph")
+    .commit_to_hugegraph()
+    .run())

+```

+

+#### Graph Machine Learning

+```python

+from pyhugegraph.client import PyHugeClient

+# Connect to HugeGraph and run ML algorithms

+# See hugegraph-ml documentation for detailed examples

+```

+

+## 📦 Modules

+

+### [hugegraph-llm](./hugegraph-llm) [](https://deepwiki.com/apache/incubator-hugegraph-ai)

+Large language model integration for graph applications:

+- **GraphRAG**: Retrieval-augmented generation with graph data

+- **Knowledge Graph Construction**: Build KGs from text automatically

+- **Natural Language Interface**: Query graphs using natural language

+- **AI Agents**: Intelligent graph analysis and reasoning

+

+### [hugegraph-ml](./hugegraph-ml)

+Graph machine learning with 20+ implemented algorithms:

+- **Node Classification**: GCN, GAT, GraphSAGE, APPNP, etc.

+- **Graph Classification**: DiffPool, P-GNN, etc.

+- **Graph Embedding**: DeepWalk, Node2Vec, GRACE, etc.

+- **Link Prediction**: SEAL, GATNE, etc.

+

+### [hugegraph-python-client](./hugegraph-python-client)

+Python client for HugeGraph operations:

+- **Schema Management**: Define vertex/edge labels and properties

+- **CRUD Operations**: Create, read, update, delete graph data

+- **Gremlin Queries**: Execute graph traversal queries

+- **REST API**: Complete HugeGraph REST API coverage

+

+## 📚 Learn More

+

+- [Project Homepage](https://hugegraph.apache.org/docs/quickstart/hugegraph-ai/)
+- [LLM Quick Start Guide](./hugegraph-llm/quick_start.md)
+- [DeepWiki AI Documentation](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+## 🔗 Related Projects

+

+- [hugegraph](https://github.com/apache/hugegraph) - Core graph database
+- [hugegraph-toolchain](https://github.com/apache/hugegraph-toolchain) - Development tools (Loader, Dashboard, etc.)
+- [hugegraph-computer](https://github.com/apache/hugegraph-computer) - Graph computing system

+

+## 🤝 Contributing

+

+We welcome contributions! Please see our [contribution guidelines](https://hugegraph.apache.org/docs/contribution-guidelines/) for details.

+

+**Development Setup:**

+- Use [GitHub Desktop](https://desktop.github.com/) for easier PR management

+- Run `./style/code_format_and_analysis.sh` before submitting PRs

+- Check existing issues before reporting bugs

[](https://github.com/apache/incubator-hugegraph-ai/graphs/contributors)

-

-## License

+## 📄 License

hugegraph-ai is licensed under [Apache 2.0 License](./LICENSE).

+## 📞 Contact Us

-## Contact Us

-

- - [GitHub Issues](https://github.com/apache/incubator-hugegraph-ai/issues): Feedback on usage issues and functional requirements (quick response)
- - Feedback Email: [d...@hugegraph.apache.org](mailto:d...@hugegraph.apache.org) ([subscriber](https://hugegraph.apache.org/docs/contribution-guidelines/subscribe/) only)
- - WeChat public account: Apache HugeGraph, welcome to scan this QR code to follow us.
+- **GitHub Issues**: [Report bugs or request features](https://github.com/apache/incubator-hugegraph-ai/issues) (fastest response)
+- **Email**: [d...@hugegraph.apache.org](mailto:d...@hugegraph.apache.org) ([subscription required](https://hugegraph.apache.org/docs/contribution-guidelines/subscribe/))
+- **WeChat**: Follow "Apache HugeGraph" official account
- <img src="https://raw.githubusercontent.com/apache/hugegraph-doc/master/assets/images/wechat.png" alt="QR png" width="350"/>
+<img src="https://raw.githubusercontent.com/apache/hugegraph-doc/master/assets/images/wechat.png" alt="Apache HugeGraph WeChat QR Code" width="200"/>
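The `RAGPipeline` and `KgBuilder` examples in this README rely on method chaining, and `run()` executes the chained steps. The following is a minimal, hypothetical sketch of that fluent style. `MiniPipeline` and `then` are invented names, not hugegraph-llm APIs.

The sketch assumes, rather than confirms, two things about the real classes:
- They queue operators and defer execution until `run()`.
- `print_result()` only inspects intermediate output without changing it.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Operator = Callable[[Context], Context]


class MiniPipeline:
    """Hypothetical fluent pipeline (not hugegraph-llm's implementation)."""

    def __init__(self) -> None:
        self._ops: List[Operator] = []

    def then(self, op: Operator) -> "MiniPipeline":
        """Queue an operator; returning self is what makes chaining possible."""
        self._ops.append(op)
        return self

    def print_result(self) -> "MiniPipeline":
        """Queue a step that prints the current context and passes it on unchanged."""
        def _print(ctx: Context) -> Context:
            print(ctx)
            return ctx
        return self.then(_print)

    def run(self, **initial: Any) -> Context:
        """Execute the queued operators in order; nothing runs before this call."""
        ctx: Context = dict(initial)
        for op in self._ops:
            ctx = op(ctx)
        return ctx


# Usage: two toy steps standing in for keyword extraction and a follow-up step.
result = (
    MiniPipeline()
    .then(lambda ctx: {**ctx, "keywords": ctx["text"].lower().split()})
    .print_result()
    .then(lambda ctx: {**ctx, "keyword_count": len(ctx["keywords"])})
    .run(text="Tell me about Al Pacino")
)
```

Deferring work until `run()` lets callers compose and reorder steps freely before anything touches the LLM or the graph.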

diff --git a/docker/docker-compose-network.yml b/docker/docker-compose-network.yml

new file mode 100644

index 0000000..04ab6e0

--- /dev/null

+++ b/docker/docker-compose-network.yml

@@ -0,0 +1,51 @@

+networks:
+  hugegraph-network:
+    driver: bridge
+
+services:
+  # HugeGraph Server
+  hugegraph-server:
+    image: hugegraph/hugegraph
+    container_name: server
+    restart: unless-stopped
+    ports:
+      - "8080:8080"
+    networks:
+      - hugegraph-network
+    environment:
+      - HUGEGRAPH_HOST=0.0.0.0
+      - HUGEGRAPH_PORT=8080
+    healthcheck:
+      test: ["CMD", "curl", "-f", "http://localhost:8080/versions"]
+      interval: 30s
+      timeout: 10s
+      retries: 3
+      start_period: 40s
+
+  # HugeGraph LLM RAG Service
+  hugegraph-rag:
+    image: hugegraph/rag
+    container_name: rag
+    restart: unless-stopped
+    ports:
+      - "8001:8001"
+    volumes:
+      # Mount .env file, please modify according to actual path
+      - ${PROJECT_PATH}/hugegraph-llm/.env:/home/work/hugegraph-llm/.env
+      # Optional: mount resources file
+      # - ${PROJECT_PATH}/hugegraph-llm/src/hugegraph_llm/resources:/home/work/hugegraph-llm/src/hugegraph_llm/resources
+    networks:
+      - hugegraph-network
+    environment:
+      # Set HugeGraph server address, use container name as hostname
+      - HUGEGRAPH_HOST=server
+      - HUGEGRAPH_PORT=8080
+    depends_on:
+      hugegraph-server:
+        condition: service_healthy
+    healthcheck:
+      test: ["CMD", "curl", "-f", "http://localhost:8001/"]
+      interval: 30s
+      timeout: 10s
+      retries: 3
+      start_period: 60s
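`depends_on` with `condition: service_healthy` only orders container startup inside Compose. A script on the host that calls the services right after `docker-compose ... up -d` returns still has to wait for them itself.

Below is a minimal polling sketch that uses only the Python standard library. It assumes the published ports and the healthcheck URLs from the file above. `wait_for_http` is a hypothetical helper, not something the project ships.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 120.0, interval: float = 2.0,
                  request_timeout: float = 5.0) -> bool:
    """Poll `url` until it answers with a 2xx status or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=request_timeout) as resp:
                if 200 <= resp.status < 300:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # not up yet: connection refused, HTTP error status, or timeout
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, call `wait_for_http("http://localhost:8080/versions")` and then `wait_for_http("http://localhost:8001/")` before sending requests. The 2-second interval and 120-second budget are arbitrary defaults. The budget was chosen to exceed the 40s and 60s `start_period` values in the compose file.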

diff --git a/docker/env.template b/docker/env.template

new file mode 100644

index 0000000..a1e839f

--- /dev/null

+++ b/docker/env.template

@@ -0,0 +1,7 @@

+# HugeGraph LLM Environment Configuration File
+# Please copy this file to .env and modify the configuration according to actual needs
+
+# ==================== Project Path Configuration ====================
+# Please set to your project absolute path (must be absolute path)
+# Example: /home/user/incubator-hugegraph-ai
+PROJECT_PATH=path_to_project
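`docker-compose-network.yml` bind-mounts `${PROJECT_PATH}/hugegraph-llm/.env`, so a wrong or relative `PROJECT_PATH` is an easy mistake to make.

Below is a hypothetical pre-flight check that is not shipped with the project. It reads `docker/.env` with a deliberately simple KEY=VALUE parser that, unlike Compose's own parser, handles no quoting or variable expansion. It then flags three problems:
- the placeholder value is still in place;
- the path is relative;
- `hugegraph-llm/.env` is missing.

```python
from pathlib import Path
from typing import Dict, List

PLACEHOLDER = "path_to_project"  # value shipped in docker/env.template


def read_env_file(path: Path) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_project_path(env: Dict[str, str]) -> List[str]:
    """Return a list of problems with PROJECT_PATH; an empty list means it looks usable."""
    raw = env.get("PROJECT_PATH", "")
    if not raw or raw == PLACEHOLDER:
        return ["PROJECT_PATH is unset or still the template placeholder"]
    project = Path(raw)
    problems: List[str] = []
    if not project.is_absolute():
        problems.append(f"PROJECT_PATH must be absolute, got {raw!r}")
    # The compose file bind-mounts this file into the rag container.
    llm_env = project / "hugegraph-llm" / ".env"
    if not llm_env.is_file():
        problems.append(f"missing {llm_env}")
    return problems
```

For example, run `check_project_path(read_env_file(Path("docker/.env")))` from the repository root. It returns an empty list when the setup looks consistent.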

diff --git a/hugegraph-llm/README.md b/hugegraph-llm/README.md

index 8c3e296..8907c31 100644

--- a/hugegraph-llm/README.md

+++ b/hugegraph-llm/README.md

@@ -1,220 +1,227 @@

-# hugegraph-llm [](https://deepwiki.com/apache/incubator-hugegraph-ai)

+# HugeGraph-LLM [](https://deepwiki.com/apache/incubator-hugegraph-ai)

-## 1. Summary

+> **Bridge the gap between Graph Databases and Large Language Models**

-The `hugegraph-llm` is a tool for the implementation and research related to

large language models.

-This project includes runnable demos, it can also be used as a third-party

library.

+## 🎯 Overview

-As we know, graph systems can help large models address challenges like

timeliness and hallucination,

-while large models can help graph systems with cost-related issues.

+HugeGraph-LLM is a comprehensive toolkit that combines the power of graph databases with large language models. It enables seamless integration between HugeGraph and LLMs for building intelligent applications.

-With this project, we aim to reduce the cost of using graph systems and

decrease the complexity of

-building knowledge graphs. This project will offer more applications and

integration solutions for

-graph systems and large language models.

-1. Construct knowledge graph by LLM + HugeGraph

-2. Use natural language to operate graph databases (Gremlin/Cypher)

-3. Knowledge graph supplements answer context (GraphRAG → Graph Agent)

+### Key Features

+- 🏗️ **Knowledge Graph Construction** - Build KGs automatically using LLMs + HugeGraph
+- 🗣️ **Natural Language Querying** - Operate graph databases using natural language (Gremlin/Cypher)
+- 🔍 **Graph-Enhanced RAG** - Leverage knowledge graphs to improve answer accuracy (GraphRAG & Graph Agent)

-> [!NOTE]

-> For the detailed documentation generated by AI, please visit our

[DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai) page.

+For detailed, AI-generated source code documentation, visit our [DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai) page (recommended).

+

+## 📋 Prerequisites

-## 2. Environment Requirements

> [!IMPORTANT]

-> - python 3.10+ (not tested in 3.12)

-> - hugegraph-server 1.3+ (better to use 1.5+)

-> - uv 0.7+

-

-## 3. Deployment Options

-

-You can choose one of the following two deployment methods:

-

-### 3.1 Docker Deployment

-

-**Docker Deployment**

- Deploy HugeGraph-AI using Docker for quick setup:

- - Ensure Docker is installed

- - We provide two container images to choose from:

- - **Image 1**:

[hugegraph/rag](https://hub.docker.com/r/hugegraph/rag/tags)

- For building and running RAG functionality for rapid deployment and

direct source code modification

- - **Image 2**:

[hugegraph/rag-bin](https://hub.docker.com/r/hugegraph/rag-bin/tags)

- A binary translation of C compiled with Nuitka, for better performance

and efficiency.

- - Pull one of the Docker images:

- ```bash

- docker pull hugegraph/rag:latest # Pull Image 1

- docker pull hugegraph/rag-bin:latest # Pull Image 2

- ```

- - Start one of the Docker containers:

- ```bash

- # Replace '/path/to/.env' with your actual .env file path

- docker run -itd --name rag -v /path/to/.env:/home/work/hugegraph-llm/.env

-p 8001:8001 hugegraph/rag

- # or

- docker run -itd --name rag-bin -v

/path/to/.env:/home/work/hugegraph-llm/.env -p 8001:8001 hugegraph/rag-bin

- ```

- - Access the interface at http://localhost:8001

-

-### 3.2 Build from Source

-

-1. Start the HugeGraph database, you can run it via

[Docker](https://hub.docker.com/r/hugegraph/hugegraph)/[Binary

Package](https://hugegraph.apache.org/docs/download/download/)

- There is a simple method by docker:

- ```bash

- docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

- ```

- You can refer to the detailed documents

[doc](/docs/quickstart/hugegraph/hugegraph-server/#31-use-docker-container-convenient-for-testdev)

for more guidance.

-

-2. Configure the uv environment by using the official installer to install uv.

See the [uv documentation](https://docs.astral.sh/uv/configuration/installer/)

for other installation methods

- ```bash

- # You could try pipx or pip to install uv when meet network issues, refer

the uv doc for more details

- curl -LsSf https://astral.sh/uv/install.sh | sh - # install the latest

version like 0.7.3+

- ```

-

-3. Clone this project

- ```bash

- git clone https://github.com/apache/incubator-hugegraph-ai.git

- ```

-4. Configure dependency environment

- ```bash

- cd incubator-hugegraph-ai/hugegraph-llm

- uv venv && source .venv/bin/activate

- uv pip install -e .

- ```

- If dependency download fails or too slow due to network issues, it is

recommended to modify `hugegraph-llm/pyproject.toml`.

-

-5. To start the Gradio interactive demo for **Graph RAG**, run the following

command, then open http://127.0.0.1:8001 in your browser.

- ```bash

- python -m hugegraph_llm.demo.rag_demo.app # same as "uv run xxx"

- ```

- The default host is `0.0.0.0` and the port is `8001`. You can change them

by passing command line arguments`--host` and `--port`.

- ```bash

- python -m hugegraph_llm.demo.rag_demo.app --host 127.0.0.1 --port 18001

- ```

-

-6. After running the web demo, the config file `.env` will be automatically

generated at the path `hugegraph-llm/.env`. Additionally, a prompt-related

configuration file `config_prompt.yaml` will also be generated at the path

`hugegraph-llm/src/hugegraph_llm/resources/demo/config_prompt.yaml`.

- You can modify the content on the web page, and it will be automatically

saved to the configuration file after the corresponding feature is triggered.

You can also modify the file directly without restarting the web application;

refresh the page to load your latest changes.

- (Optional)To regenerate the config file, you can use `config.generate`

with `-u` or `--update`.

- ```bash

- python -m hugegraph_llm.config.generate --update

- ```

- Note: `Litellm` support multi-LLM provider, refer

[litellm.ai](https://docs.litellm.ai/docs/providers) to config it

-7. (__Optional__) You could use

-

[hugegraph-hubble](/docs/quickstart/toolchain/hugegraph-hubble/#21-use-docker-convenient-for-testdev)

- to visit the graph data, could run it via

[Docker/Docker-Compose](https://hub.docker.com/r/hugegraph/hubble)

- for guidance. (Hubble is a graph-analysis dashboard that includes data

loading/schema management/graph traverser/display).

-8. (__Optional__) offline download NLTK stopwords

- ```bash

- python ./hugegraph_llm/operators/common_op/nltk_helper.py

- ```

+> - **Python**: 3.10+ (not tested on 3.12)

+> - **HugeGraph Server**: 1.3+ (recommended: 1.5+)

+> - **UV Package Manager**: 0.7+

+

+## 🚀 Quick Start

+

+Choose your preferred deployment method:

+

+### Option 1: Docker Compose (Recommended)

+

+The fastest way to get started with both HugeGraph Server and RAG Service:

+

+```bash

+# 1. Set up environment

+cp docker/env.template docker/.env

+# Edit docker/.env and set PROJECT_PATH to your actual project path

+

+# 2. Deploy services

+cd docker

+docker-compose -f docker-compose-network.yml up -d

+

+# 3. Verify deployment

+docker-compose -f docker-compose-network.yml ps

+

+# 4. Access services

+# HugeGraph Server: http://localhost:8080

+# RAG Service: http://localhost:8001

+```

+

+### Option 2: Individual Docker Containers

+

+For more control over individual components:

+

+#### Available Images

+- **`hugegraph/rag`** - Development image with source code access

+- **`hugegraph/rag-bin`** - Production-optimized binary (compiled with Nuitka)

+

+```bash

+# 1. Create network

+docker network create -d bridge hugegraph-net

+

+# 2. Start HugeGraph Server

+docker run -itd --name=server -p 8080:8080 --network hugegraph-net hugegraph/hugegraph

+

+# 3. Start RAG Service

+docker pull hugegraph/rag:latest

+docker run -itd --name rag \

+ -v /path/to/your/hugegraph-llm/.env:/home/work/hugegraph-llm/.env \

+ -p 8001:8001 --network hugegraph-net hugegraph/rag

+

+# 4. Monitor logs

+docker logs -f rag

+```

+

+### Option 3: Build from Source

+

+For development and customization:

+

+```bash

+# 1. Start HugeGraph Server

+docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

+

+# 2. Install UV package manager

+curl -LsSf https://astral.sh/uv/install.sh | sh

+

+# 3. Clone and setup project

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai/hugegraph-llm

+

+# 4. Create virtual environment and install dependencies

+uv venv && source .venv/bin/activate

+uv pip install -e .

+

+# 5. Launch RAG demo

+python -m hugegraph_llm.demo.rag_demo.app

+# Access at: http://127.0.0.1:8001

+

+# 6. (Optional) Custom host/port

+python -m hugegraph_llm.demo.rag_demo.app --host 127.0.0.1 --port 18001

+```

+

+#### Additional Setup (Optional)

+

+```bash

+# Download NLTK stopwords for better text processing

+python ./hugegraph_llm/operators/common_op/nltk_helper.py

+

+# Update configuration files

+python -m hugegraph_llm.config.generate --update

+```

+

> [!TIP]

-> You can also refer to our

[quick-start](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)

doc to understand how to use it & the basic query logic 🚧

+> Check our [Quick Start Guide](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md) for detailed usage examples and query logic explanations.

+

+## 💡 Usage Examples

+

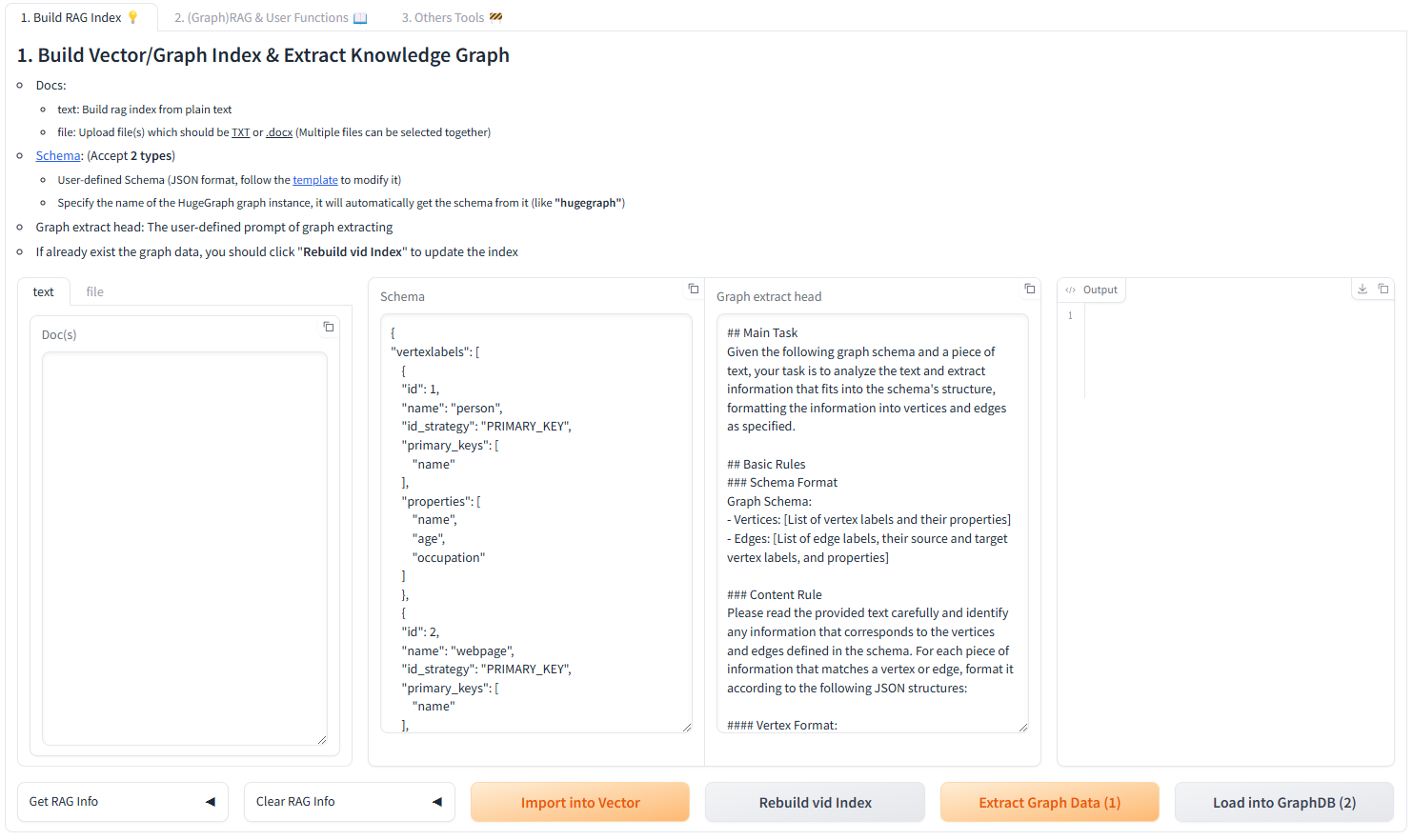

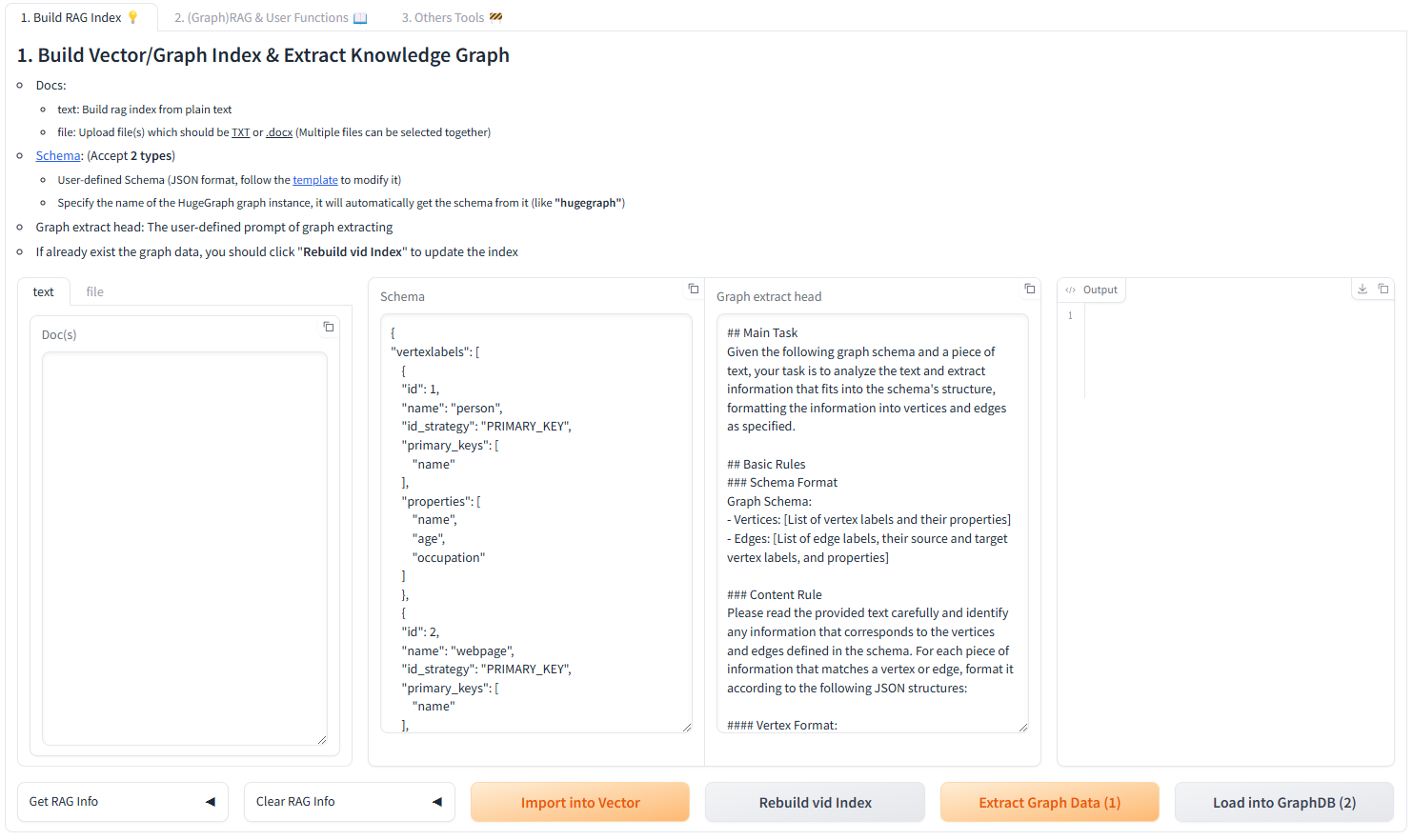

+### Knowledge Graph Construction

-## 4. Examples

+#### Interactive Web Interface

-### 4.1 Build a knowledge graph in HugeGraph through LLM

+Use the Gradio interface for visual knowledge graph building:

-#### 4.1.1 Build a knowledge graph through the gradio interactive interface

+**Input Options:**

+- **Text**: Direct text input for RAG index creation

+- **Files**: Upload TXT or DOCX files (multiple selection supported)

-**Parameter description:**

+**Schema Configuration:**

+- **Custom Schema**: JSON format following our [template](https://github.com/apache/incubator-hugegraph-ai/blob/aff3bbe25fa91c3414947a196131be812c20ef11/hugegraph-llm/src/hugegraph_llm/config/config_data.py#L125)

+- **HugeGraph Schema**: Use existing graph instance schema (e.g., "hugegraph")

-- Docs:

- - text: Build rag index from plain text

- - file: Upload file(s) which should be <u>TXT</u> or <u>.docx</u> (Multiple

files can be selected together)

-- [Schema](https://hugegraph.apache.org/docs/clients/restful-api/schema/):

(Except **2 types**)

- - User-defined Schema (JSON format, follow the

[template](https://github.com/apache/incubator-hugegraph-ai/blob/aff3bbe25fa91c3414947a196131be812c20ef11/hugegraph-llm/src/hugegraph_llm/config/config_data.py#L125)

- to modify it)

- - Specify the name of the HugeGraph graph instance, it will automatically

get the schema from it (like

- **"hugegraph"**)

-- Graph extract head: The user-defined prompt of graph extracting

-- If it already exists the graph data, you should click "**Rebuild vid

Index**" to update the index

+

-

+#### Programmatic Construction

-#### 4.1.2 Build a knowledge graph through code

+Build knowledge graphs with code using the `KgBuilder` class:

-The `KgBuilder` class is used to construct a knowledge graph. Here is a brief

usage guide:

+```python

+from hugegraph_llm.models.llms.init_llm import LLMs

+from hugegraph_llm.operators.kg_construction_task import KgBuilder

-1. **Initialization**: The `KgBuilder` class is initialized with an instance

of a language model.

-This can be obtained from the `LLMs` class.

- Initialize the LLMs instance, get the LLM, and then create a task instance

`KgBuilder` for graph construction. `KgBuilder` defines multiple operators, and

users can freely combine them according to their needs. (tip: `print_result()`

can print the result of each step in the console, without affecting the overall

execution logic)

+# Initialize and chain operations

+TEXT = "Your input text here..."

+builder = KgBuilder(LLMs().get_chat_llm())

- ```python

- from hugegraph_llm.models.llms.init_llm import LLMs

- from hugegraph_llm.operators.kg_construction_task import KgBuilder

+(
+    builder
+    .import_schema(from_hugegraph="talent_graph").print_result()
+    .chunk_split(TEXT).print_result()
+    .extract_info(extract_type="property_graph").print_result()
+    .commit_to_hugegraph()
+    .run()
+)

+```

+

+**Pipeline Workflow:**

+```mermaid

+graph LR

+ A[Import Schema] --> B[Chunk Split]

+ B --> C[Extract Info]

+ C --> D[Commit to HugeGraph]

+ D --> E[Execute Pipeline]

+

+ style A fill:#fff2cc

+ style B fill:#d5e8d4

+ style C fill:#dae8fc

+ style D fill:#f8cecc

+ style E fill:#e1d5e7

+```

+

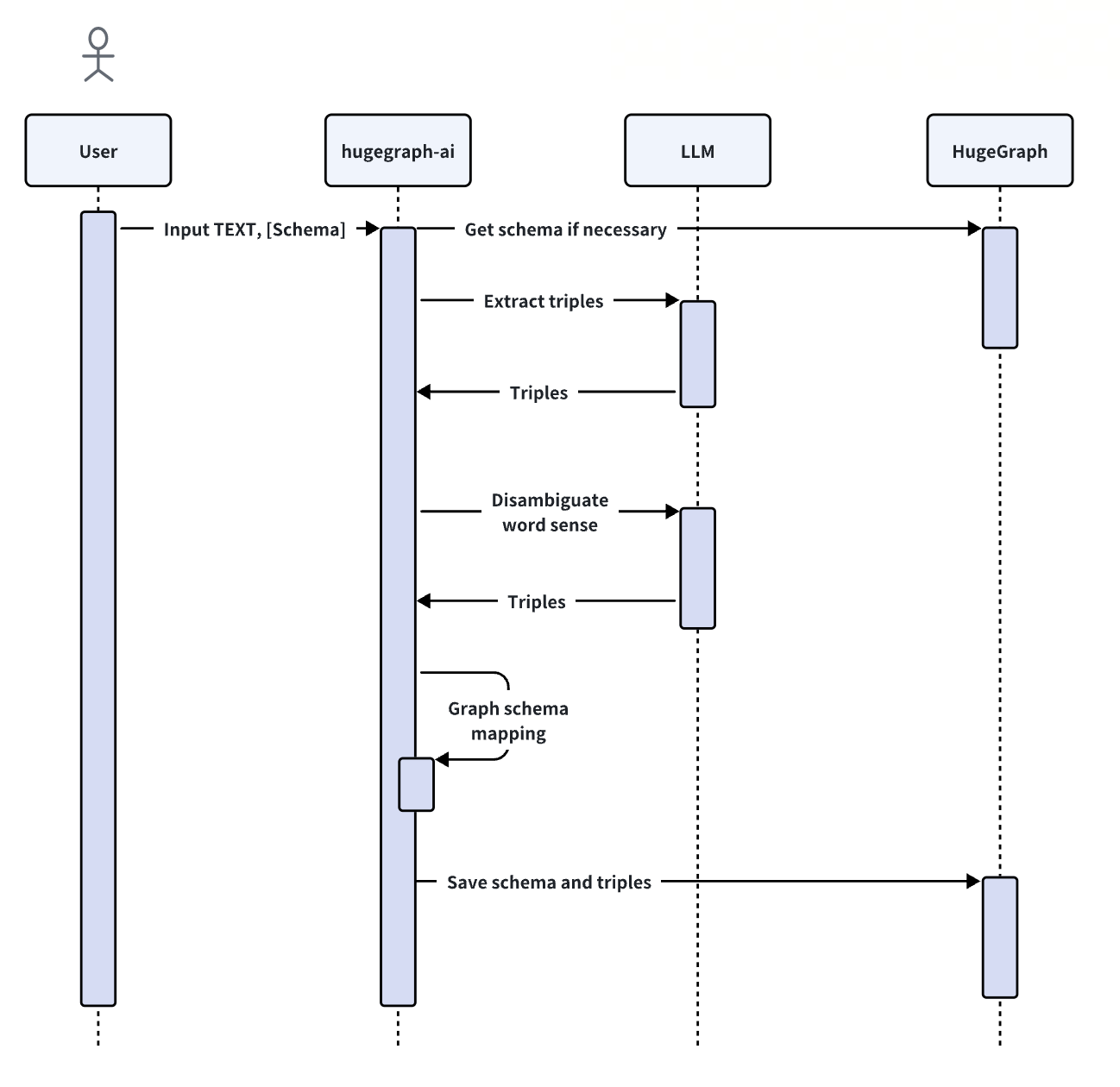

+### Graph-Enhanced RAG

+

+Leverage HugeGraph for retrieval-augmented generation:

+

+```python

+from hugegraph_llm.operators.graph_rag_task import RAGPipeline

+

+# Initialize RAG pipeline

+graph_rag = RAGPipeline()

+

+# Execute RAG workflow

+(
+    graph_rag
+    .extract_keywords(text="Tell me about Al Pacino.")
+    .keywords_to_vid()
+    .query_graphdb(max_deep=2, max_graph_items=30)
+    .merge_dedup_rerank()
+    .synthesize_answer(vector_only_answer=False, graph_only_answer=True)
+    .run(verbose=True)
+)

+```

+

+**RAG Pipeline Flow:**

+```mermaid

+graph TD

+ A[User Query] --> B[Extract Keywords]

+ B --> C[Match Graph Nodes]

+ C --> D[Retrieve Graph Context]

+ D --> E[Rerank Results]

+ E --> F[Generate Answer]

- TEXT = ""

- builder = KgBuilder(LLMs().get_chat_llm())

- (

- builder

- .import_schema(from_hugegraph="talent_graph").print_result()

- .chunk_split(TEXT).print_result()

- .extract_info(extract_type="property_graph").print_result()

- .commit_to_hugegraph()

- .run()

- )

- ```

-

-2. **Import Schema**: The `import_schema` method is used to import a schema

from a source. The source can be a HugeGraph instance, a user-defined schema,

or an extraction result. The method `print_result` can be chained to print the

result.

- ```python

- # Import schema from a HugeGraph instance

- builder.import_schema(from_hugegraph="xxx").print_result()

- # Import schema from an extraction result

- builder.import_schema(from_extraction="xxx").print_result()

- # Import schema from user-defined schema

- builder.import_schema(from_user_defined="xxx").print_result()

- ```

-3. **Chunk Split**: The `chunk_split` method is used to split the input text

into chunks. The text should be passed as a string argument to the method.

- ```python

- # Split the input text into documents

- builder.chunk_split(TEXT, split_type="document").print_result()

- # Split the input text into paragraphs

- builder.chunk_split(TEXT, split_type="paragraph").print_result()

- # Split the input text into sentences

- builder.chunk_split(TEXT, split_type="sentence").print_result()

- ```

-4. **Extract Info**: The `extract_info` method is used to extract info from a

text. The text should be passed as a string argument to the method.

- ```python

- TEXT = "Meet Sarah, a 30-year-old attorney, and her roommate, James, whom

she's shared a home with since 2010."

- # extract property graph from the input text

- builder.extract_info(extract_type="property_graph").print_result()

- # extract triples from the input text

- builder.extract_info(extract_type="property_graph").print_result()

- ```

-5. **Commit to HugeGraph**: The `commit_to_hugegraph` method is used to commit

the constructed knowledge graph to a HugeGraph instance.

- ```python

- builder.commit_to_hugegraph().print_result()

- ```

-6. **Run**: The `run` method is used to execute the chained operations.

- ```python

- builder.run()

- ```

- The methods of the `KgBuilder` class can be chained together to perform a

sequence of operations.

-

-### 4.2 Retrieval augmented generation (RAG) based on HugeGraph

-

-The `RAGPipeline` class is used to integrate HugeGraph with large language

models to provide retrieval-augmented generation capabilities.

-Here is a brief usage guide:

-

-1. **Extract Keyword**: Extract keywords and expand synonyms.

- ```python

- from hugegraph_llm.operators.graph_rag_task import RAGPipeline

- graph_rag = RAGPipeline()

- graph_rag.extract_keywords(text="Tell me about Al Pacino.").print_result()

- ```

-2. **Match Vid from Keywords**: Match the nodes with the keywords in the graph.

- ```python

- graph_rag.keywords_to_vid().print_result()

- ```

-3. **Query Graph for Rag**: Retrieve the corresponding keywords and their

multi-degree associated relationships from HugeGraph.

- ```python

- graph_rag.query_graphdb(max_deep=2, max_graph_items=30).print_result()

- ```

-4. **Rerank Searched Result**: Rerank the searched results based on the

similarity between the question and the results.

- ```python

- graph_rag.merge_dedup_rerank().print_result()

- ```

-5. **Synthesize Answer**: Summarize the results and organize the language to

answer the question.

- ```python

- graph_rag.synthesize_answer(vector_only_answer=False,

graph_only_answer=True).print_result()

- ```

-6. **Run**: The `run` method is used to execute the above operations.

- ```python

- graph_rag.run(verbose=True)

- ```

+ style A fill:#e3f2fd

+ style B fill:#f3e5f5

+ style C fill:#e8f5e8

+ style D fill:#fff3e0

+ style E fill:#fce4ec

+ style F fill:#e0f2f1

+```

+

+## 🔧 Configuration

+

+After running the demo, configuration files are automatically generated:

+

+- **Environment**: `hugegraph-llm/.env`

+- **Prompts**: `hugegraph-llm/src/hugegraph_llm/resources/demo/config_prompt.yaml`

+

+> [!NOTE]

+> Changes made in the web interface are saved to these files automatically once the corresponding feature is triggered. If you edit the files directly, there is no need to restart the app; refresh the page to load the updates.

+

+**LLM Provider Support**: This project uses [LiteLLM](https://docs.litellm.ai/docs/providers) for multi-provider LLM support.

+

+## 📚 Additional Resources

+

+- **Graph Visualization**: Use [HugeGraph Hubble](https://hub.docker.com/r/hugegraph/hubble) for data analysis and schema management

+- **API Documentation**: Explore our REST API endpoints for integration

+- **Community**: Join our discussions and contribute to the project

+

+---

+

+**License**: Apache License 2.0 | **Community**: [Apache HugeGraph](https://hugegraph.apache.org/)
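The RAG flow in the new hugegraph-llm README includes a rerank step (`merge_dedup_rerank()`). The previous README described this step as reranking results by the similarity between the question and each result.

The sketch below shows the merge, dedup, and rerank idea, using plain token-overlap (Jaccard) similarity as a stand-in. The actual operator may well use embeddings or a dedicated reranker model, so treat the scoring here as an assumption. The function names are hypothetical.

```python
import re
from typing import Iterable, List, Set


def _tokens(text: str) -> Set[str]:
    """Lower-cased word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Token-set Jaccard similarity in [0, 1]; 0.0 if either side is empty."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def merge_dedup_rerank(question: str, *sources: Iterable[str], top_k: int = 5) -> List[str]:
    """Merge result lists, drop exact duplicates (first occurrence wins), rank by similarity."""
    seen: Set[str] = set()
    merged: List[str] = []
    for source in sources:
        for item in source:
            if item not in seen:
                seen.add(item)
                merged.append(item)
    # sorted() is stable even with reverse=True, so ties keep their merge order.
    return sorted(merged, key=lambda item: jaccard(question, item), reverse=True)[:top_k]


question = "Tell me about Al Pacino"
graph_hits = ["Al Pacino starred in The Godfather", "The Godfather was directed by Coppola"]
vector_hits = ["Al Pacino starred in The Godfather", "Pacino was born in New York"]
print(merge_dedup_rerank(question, graph_hits, vector_hits, top_k=2))
# ['Al Pacino starred in The Godfather', 'Pacino was born in New York']
```

Deduplicating before ranking keeps a passage that both retrievers returned from occupying two of the `top_k` slots.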