This is an automated email from the ASF dual-hosted git repository.

jin pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-hugegraph-doc.git

The following commit(s) were added to refs/heads/master by this push:

new 3e1c6102 refactor(ai): sync the ai doc/structure (#409)

3e1c6102 is described below

commit 3e1c610281c91ea3a5c600b393088e9ffae34799

Author: Haibo Yang <haibo94...@gmail.com>

AuthorDate: Wed Jul 2 02:41:00 2025 -0700

refactor(ai): sync the ai doc/structure (#409)

* Refactor HugeGraph-AI quickstart docs and add LLM guide

---------

Co-authored-by: imbajin <j...@apache.org>

---

.gitignore | 2 +

config.toml | 4 +

content/cn/docs/quickstart/hugegraph-ai/_index.md | 379 +++++++++-----------

.../docs/quickstart/hugegraph-ai/hugegraph-llm.md | 235 +++++++++++++

.../cn/docs/quickstart/hugegraph-ai/quick_start.md | 2 +-

content/en/docs/quickstart/hugegraph-ai/_index.md | 383 +++++++++------------

.../docs/quickstart/hugegraph-ai/hugegraph-llm.md | 237 +++++++++++++

.../en/docs/quickstart/hugegraph-ai/quick_start.md | 2 +-

8 files changed, 800 insertions(+), 444 deletions(-)
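As a quick sanity check on the summary above, the per-file change counts should add up to the reported insertions plus deletions. The sketch below copies the counts and the (partly truncated) file names exactly as printed in the diffstat. The script itself is illustrative and not part of the commit.

```python
# Per-file change counts, copied in order from the diffstat in this email.
# File names are kept exactly as printed, including the "..." truncation.
diffstat = [
    (".gitignore", 2),
    ("config.toml", 4),
    ("content/cn/docs/quickstart/hugegraph-ai/_index.md", 379),
    (".../docs/quickstart/hugegraph-ai/hugegraph-llm.md", 235),
    (".../cn/docs/quickstart/hugegraph-ai/quick_start.md", 2),
    ("content/en/docs/quickstart/hugegraph-ai/_index.md", 383),
    (".../docs/quickstart/hugegraph-ai/hugegraph-llm.md", 237),
    (".../en/docs/quickstart/hugegraph-ai/quick_start.md", 2),
]

# Totals from the "8 files changed" summary line.
insertions, deletions = 800, 444

total_changed = sum(count for _, count in diffstat)
totals_match = total_changed == insertions + deletions
print(len(diffstat), total_changed, totals_match)
```

If the totals did not match, the most likely cause would be a file dropped from the summary by the mail archive rather than a problem in the commit itself.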

diff --git a/.gitignore b/.gitignore

index bf7cbe32..34a7eee3 100644

--- a/.gitignore

+++ b/.gitignore

@@ -15,3 +15,5 @@ nohup.out

/dataSources/

/dataSources.local.xml

.idea/

+

+.DS_Store

diff --git a/config.toml b/config.toml

index b583dc4a..b56a4f9f 100644

--- a/config.toml

+++ b/config.toml

@@ -21,6 +21,10 @@ enableGitInfo = true

# Comment out to enable taxonomies in Docsy

# disableKinds = ["taxonomy", "taxonomyTerm"]

+# TODO: upgrade docsy version to latest one (currently mermaid.js is not working)

+[params.mermaid]

+enable = true

+

###############################################################################

# Hugo - Top-level navigation (horizontal)

###############################################################################

diff --git a/content/cn/docs/quickstart/hugegraph-ai/_index.md b/content/cn/docs/quickstart/hugegraph-ai/_index.md

index c9a8eadd..ba2c9722 100644

--- a/content/cn/docs/quickstart/hugegraph-ai/_index.md

+++ b/content/cn/docs/quickstart/hugegraph-ai/_index.md

@@ -4,222 +4,163 @@ linkTitle: "HugeGraph-AI"

weight: 3

---

-> 请参阅 AI 仓库的

[README](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-llm#readme)

以获取最新的文档,官网会**定期**更新同步。

-

-> AI 总结项目文档:[](https://deepwiki.com/apache/incubator-hugegraph-ai)

-

-## 1. 摘要

-

-`hugegraph-llm` 是一个用于实现和研究大语言模型相关功能的工具。

-该项目包含可运行的演示(demo),也可以作为第三方库使用。

-

-众所周知,图系统可以帮助大模型解决时效性和幻觉等挑战,

-而大模型则可以帮助图系统解决成本相关的问题。

-

-通过这个项目,我们旨在降低图系统的使用成本,并减少构建知识图谱的复杂性。

-本项目将为图系统和大语言模型提供更多的应用和集成解决方案。

-1. 通过 LLM + HugeGraph 构建知识图谱

-2. 使用自然语言操作图数据库 (Gremlin/Cypher)

-3. 知识图谱补充答案上下文 (GraphRAG → Graph Agent)

-

-## 2. 环境要求

-> [!IMPORTANT]

-> - python 3.10+ (未在 3.12 中测试)

-> - hugegraph-server 1.3+ (建议使用 1.5+)

-> - uv 0.7+

-

-## 3. 准备工作

-

-### 3.1 Docker

-

-**Docker 部署**

- 您也可以使用 Docker 来部署 HugeGraph-AI:

- - 确保您已安装 Docker

- - 我们提供两个容器镜像:

- - **镜像 1**: [hugegraph/rag](https://hub.docker.com/r/hugegraph/rag/tags)

- 用于构建和运行 RAG 功能,适合快速部署和直接修改源码

- - **镜像 2**:

[hugegraph/rag-bin](https://hub.docker.com/r/hugegraph/rag-bin/tags)

- 使用 Nuitka 编译的 C 二进制转译版本,性能更好、更高效

- - 拉取 Docker 镜像:

- ```bash

- docker pull hugegraph/rag:latest # 拉取镜像1

- docker pull hugegraph/rag-bin:latest # 拉取镜像2

- ```

- - 启动 Docker 容器:

- ```bash

- docker run -it --name rag -v /path/to/.env:/home/work/hugegraph-llm/.env -p 8001:8001 hugegraph/rag bash

- docker run -it --name rag-bin -v /path/to/.env:/home/work/hugegraph-llm/.env -p 8001:8001 hugegraph/rag-bin bash

- ```

- - 启动 Graph RAG 演示:

- ```bash

- # 针对镜像 1

- python ./src/hugegraph_llm/demo/rag_demo/app.py # 或运行 python -m

hugegraph_llm.demo.rag_demo.app

-

- # 针对镜像 2

- ./app.dist/app.bin

- ```

- - 访问接口 http://localhost:8001

-

-### 3.2 从源码构建

-

-1. 启动 HugeGraph 数据库,您可以通过

[Docker](https://hub.docker.com/r/hugegraph/hugegraph)/[二进制包](https://hugegraph.apache.org/docs/download/download/)

运行它。

- 有一个使用 docker 的简单方法:

- ```bash

- docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

- ```

- 更多指引请参阅详细文档

[doc](/docs/quickstart/hugegraph/hugegraph-server/#31-use-docker-container-convenient-for-testdev)。

-

-2. 配置 uv 环境,使用官方安装器安装 uv,其他安装方法请参见 [uv

文档](https://docs.astral.sh/uv/configuration/installer/)。

- ```bash

- # 如果遇到网络问题,可以尝试使用 pipx 或 pip 安装 uv,详情请参阅 uv 文档

- curl -LsSf https://astral.sh/uv/install.sh | sh - # 安装最新版本,如 0.7.3+

- ```

-

-3. 克隆本项目

- ```bash

- git clone https://github.com/apache/incubator-hugegraph-ai.git

- ```

-4. 配置依赖环境

- ```bash

- cd incubator-hugegraph-ai/hugegraph-llm

- uv venv && source .venv/bin/activate

- uv pip install -e .

- ```

- 如果由于网络问题导致依赖下载失败或过慢,建议修改 `hugegraph-llm/pyproject.toml`。

-

-5. 启动 **Graph RAG** 的 Gradio 交互式演示,运行以下命令,然后在浏览器中打开 http://127.0.0.1:8001。

- ```bash

- python -m hugegraph_llm.demo.rag_demo.app # 等同于 "uv run xxx"

- ```

- 默认主机是 `0.0.0.0`,端口是 `8001`。您可以通过传递命令行参数 `--host` 和 `--port` 来更改它们。

- ```bash

- python -m hugegraph_llm.demo.rag_demo.app --host 127.0.0.1 --port 18001

- ```

-

-6. 运行 Web 演示后,将在路径 `hugegraph-llm/.env` 下自动生成配置文件 `.env`。此外,还将在路径

`hugegraph-llm/src/hugegraph_llm/resources/demo/config_prompt.yaml`

下生成一个与提示(prompt)相关的配置文件 `config_prompt.yaml`。

- 您可以在网页上修改内容,触发相应功能后,更改将自动保存到配置文件中。您也可以直接修改文件而无需重启 Web 应用;刷新页面即可加载您的最新更改。

- (可选) 要重新生成配置文件,您可以使用 `config.generate` 并加上 `-u` 或 `--update` 参数。

- ```bash

- python -m hugegraph_llm.config.generate --update

- ```

- 注意:`Litellm` 支持多个 LLM 提供商,请参阅

[litellm.ai](https://docs.litellm.ai/docs/providers) 进行配置。

-7. (__可选__) 您可以使用

-

[hugegraph-hubble](/docs/quickstart/toolchain/hugegraph-hubble/#21-use-docker-convenient-for-testdev)

- 来访问图数据,可以通过

[Docker/Docker-Compose](https://hub.docker.com/r/hugegraph/hubble)

- 运行它以获取指导。(Hubble 是一个图分析仪表盘,包括数据加载/Schema管理/图遍历/展示功能)。

-8. (__可选__) 离线下载 NLTK 停用词

- ```bash

- python ./hugegraph_llm/operators/common_op/nltk_helper.py

- ```

-> [!TIP]

->

您也可以参考我们的[快速入门](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)文档来了解如何使用它以及基本的查询逻辑

🚧

-

-## 4. 示例

-

-### 4.1 通过 LLM 在 HugeGraph 中构建知识图谱

-

-#### 4.1.1 通过 Gradio 交互界面构建知识图谱

-

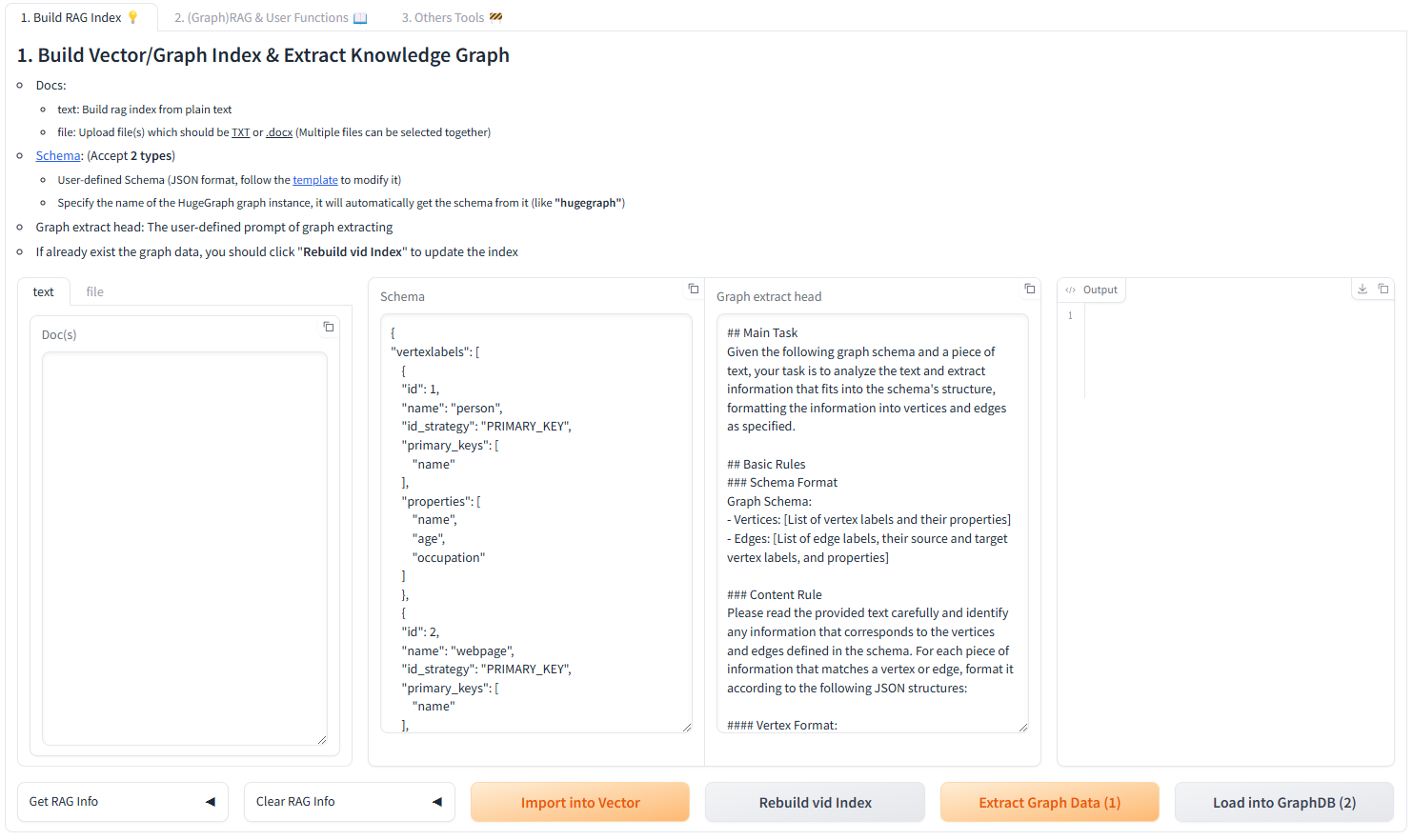

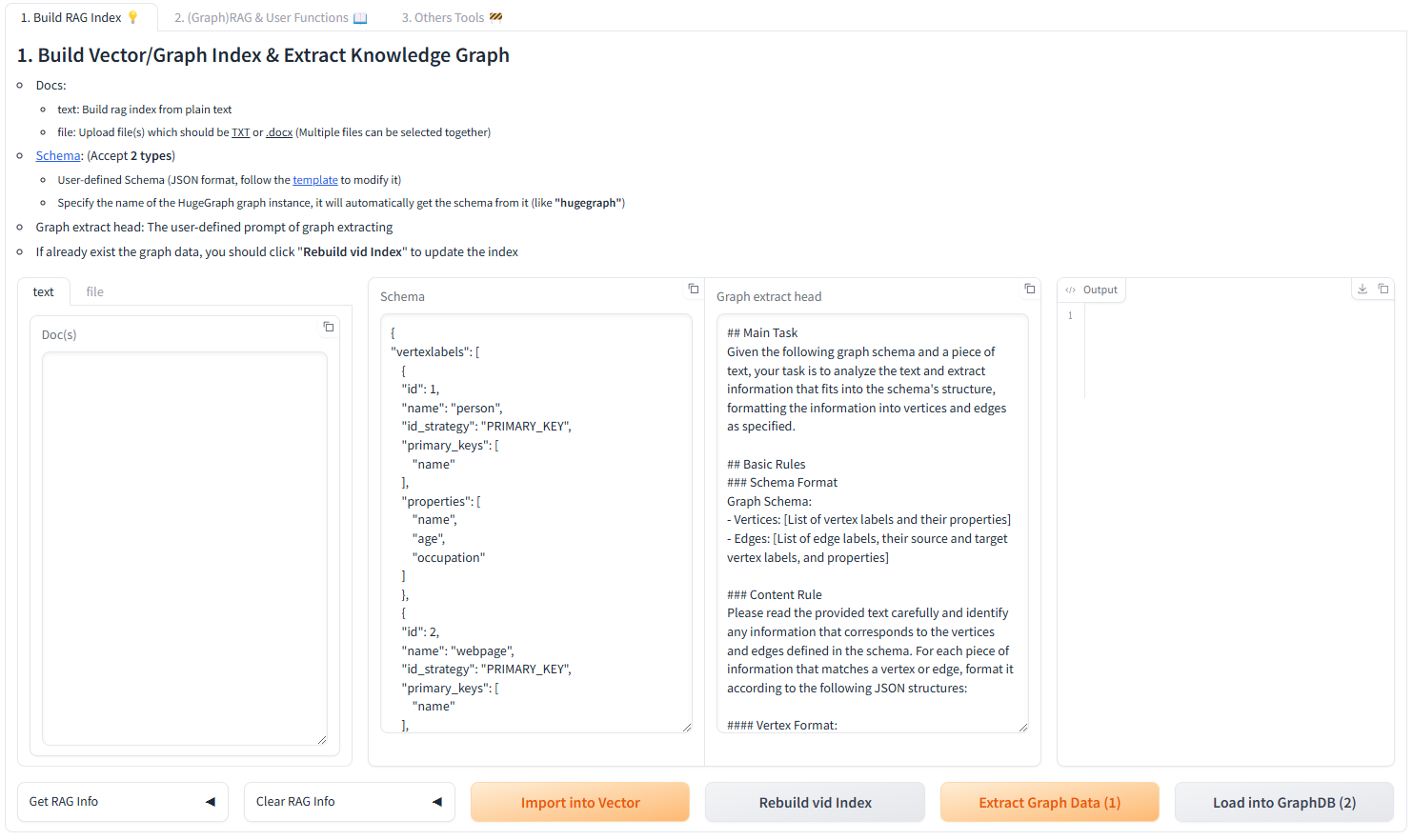

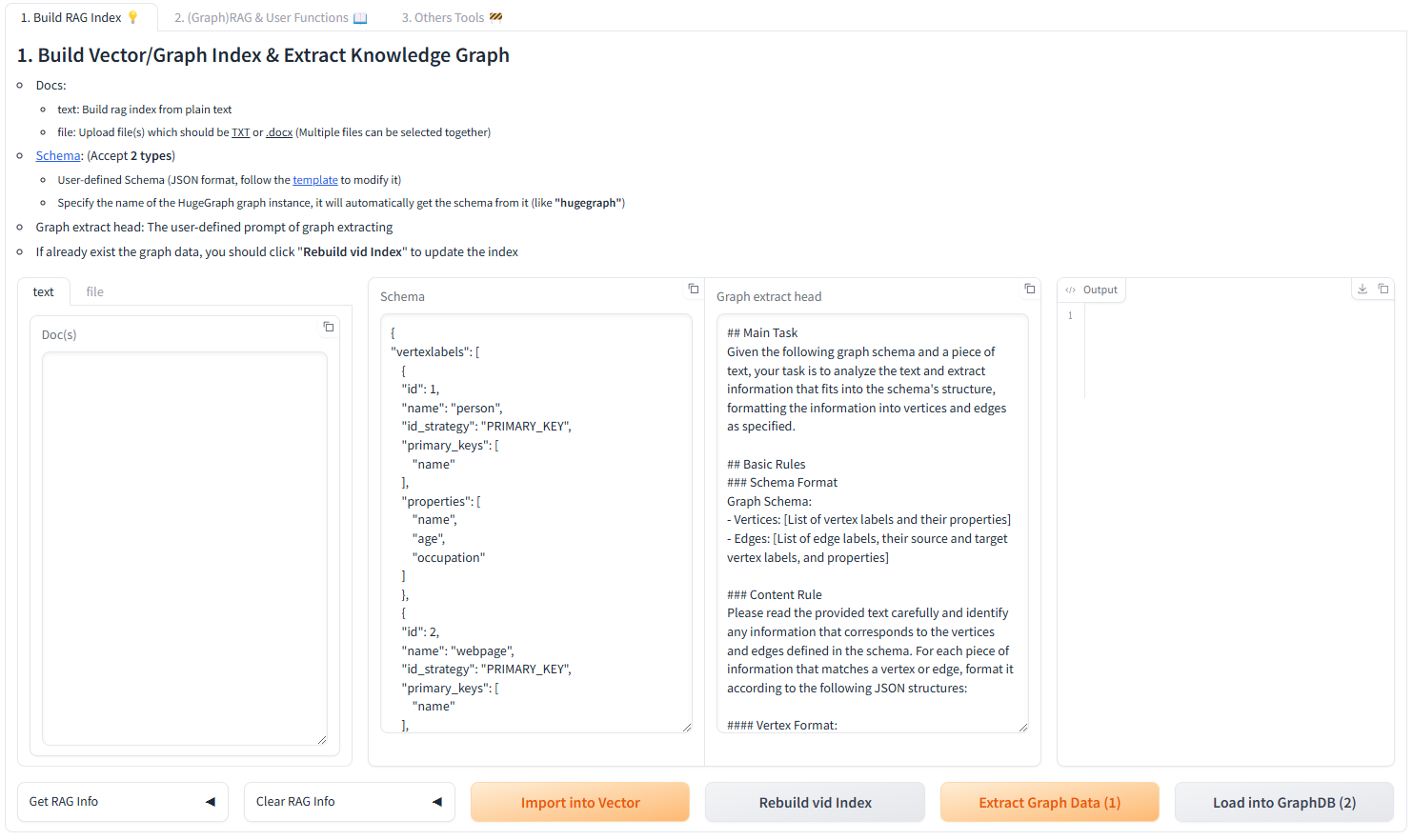

-**参数说明:**

-

-- Docs(文档):

- - text: 从纯文本构建 RAG 索引

- - file: 上传文件,文件应为 <u>.txt</u> 或 <u>.docx</u> (可同时选择多个文件)

-- [Schema](https://hugegraph.apache.org/docs/clients/restful-api/schema/)

(模式): (除**两种类型**外)

- - 用户自定义 Schema (JSON 格式,

遵循此[模板](https://github.com/apache/incubator-hugegraph-ai/blob/aff3bbe25fa91c3414947a196131be812c20ef11/hugegraph-llm/src/hugegraph_llm/config/config_data.py#L125)进行修改)

- - 指定 HugeGraph 图实例的名称,它将自动从中获取 Schema (例如 **"hugegraph"**)

-- Graph extract head (图谱抽取提示头): 用户自定义的图谱抽取提示

-- 如果已存在图数据,您应点击 "**Rebuild vid Index**" (重建顶点ID索引) 来更新索引

-

-

-

-#### 4.1.2 通过代码构建知识图谱

-

-`KgBuilder` 类用于构建知识图谱。以下是简要使用指南:

-

-1. **初始化**: `KgBuilder` 类使用一个语言模型的实例进行初始化。该实例可以从 `LLMs` 类中获取。

- 初始化 LLMs 实例,获取 LLM,然后为图谱构建创建一个任务实例 `KgBuilder`。`KgBuilder`

定义了多个算子,用户可以根据需要自由组合它们。(提示: `print_result()` 可以在控制台中打印每一步的结果,而不影响整体执行逻辑)

-

- ```python

- from hugegraph_llm.models.llms.init_llm import LLMs

- from hugegraph_llm.operators.kg_construction_task import KgBuilder

-

- TEXT = ""

- builder = KgBuilder(LLMs().get_chat_llm())

- (

- builder

- .import_schema(from_hugegraph="talent_graph").print_result()

- .chunk_split(TEXT).print_result()

- .extract_info(extract_type="property_graph").print_result()

- .commit_to_hugegraph()

- .run()

- )

- ```

-

-2. **导入 Schema**: `import_schema` 方法用于从一个来源导入 Schema。来源可以是一个 HugeGraph

实例、一个用户定义的 Schema 或一个抽取结果。可以链接 `print_result` 方法来打印结果。

- ```python

- # 从 HugeGraph 实例导入 Schema

- builder.import_schema(from_hugegraph="xxx").print_result()

- # 从抽取结果导入 Schema

- builder.import_schema(from_extraction="xxx").print_result()

- # 从用户定义的 Schema 导入

- builder.import_schema(from_user_defined="xxx").print_result()

- ```

-3. **分块切分**: `chunk_split` 方法用于将输入文本切分成块。文本应作为字符串参数传递给该方法。

- ```python

- # 将输入文本切分成文档

- builder.chunk_split(TEXT, split_type="document").print_result()

- # 将输入文本切分成段落

- builder.chunk_split(TEXT, split_type="paragraph").print_result()

- # 将输入文本切分成句子

- builder.chunk_split(TEXT, split_type="sentence").print_result()

- ```

-4. **提取信息**: `extract_info` 方法用于从文本中提取信息。文本应作为字符串参数传递给该方法。

- ```python

- TEXT = "Meet Sarah, a 30-year-old attorney, and her roommate, James, whom

she's shared a home with since 2010."

- # 从输入文本中提取属性图

- builder.extract_info(extract_type="property_graph").print_result()

- # 从输入文本中提取三元组

- builder.extract_info(extract_type="property_graph").print_result()

- ```

-5. **提交到 HugeGraph**: `commit_to_hugegraph` 方法用于将构建的知识图谱提交到一个 HugeGraph 实例。

- ```python

- builder.commit_to_hugegraph().print_result()

- ```

-6. **运行**: `run` 方法用于执行链式操作。

- ```python

- builder.run()

- ```

- `KgBuilder` 类的方法可以链接在一起以执行一系列操作。

-

-### 4.2 基于 HugeGraph 的检索增强生成 (RAG)

-

-`RAGPipeline` 类用于将 HugeGraph 与大语言模型集成,以提供检索增强生成的能力。

-以下是简要使用指南:

-

-1. **提取关键词**: 提取关键词并扩展同义词。

- ```python

- from hugegraph_llm.operators.graph_rag_task import RAGPipeline

- graph_rag = RAGPipeline()

- graph_rag.extract_keywords(text="告诉我关于 Al Pacino 的事情。").print_result()

- ```

-2. **从关键词匹配顶点ID**: 在图中用关键词匹配节点。

- ```python

- graph_rag.keywords_to_vid().print_result()

- ```

-3. **查询图以进行 RAG**: 从 HugeGraph 中检索相应的关键词及其多跳关联关系。

- ```python

- graph_rag.query_graphdb(max_deep=2, max_graph_items=30).print_result()

- ```

-4. **重排搜索结果**: 根据问题和结果之间的相似度对搜索结果进行重排序。

- ```python

- graph_rag.merge_dedup_rerank().print_result()

- ```

-5. **综合答案**: 总结结果并组织语言来回答问题。

- ```python

- graph_rag.synthesize_answer(vector_only_answer=False,

graph_only_answer=True).print_result()

- ```

-6. **运行**: `run` 方法用于执行上述操作。

- ```python

- graph_rag.run(verbose=True)

- ```

\ No newline at end of file

+[License](https://www.apache.org/licenses/LICENSE-2.0.html)

+[DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+`hugegraph-ai` 整合了 [HugeGraph](https://github.com/apache/hugegraph) 与人工智能功能,为开发者构建 AI 驱动的图应用提供全面支持。

+

+## ✨ 核心功能

+

+- **GraphRAG**:利用图增强检索构建智能问答系统

+- **知识图谱构建**:使用大语言模型从文本自动构建图谱

+- **图机器学习**:集成 20 多种图学习算法(GCN、GAT、GraphSAGE 等)

+- **Python 客户端**:易于使用的 HugeGraph Python 操作接口

+- **AI 智能体**:提供智能图分析与推理能力

+

+## 🚀 快速开始

+

+> [!NOTE]

+> 如需完整的部署指南和详细示例,请参阅 [hugegraph-llm/README.md](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/README.md)。

+

+### 环境要求

+- Python 3.9+(建议 hugegraph-llm 使用 3.10+)

+- [uv](https://docs.astral.sh/uv/)(推荐的包管理器)

+- HugeGraph Server 1.3+(建议 1.5+)

+- Docker(可选,用于容器化部署)

+

+### 方案一:Docker 部署(推荐)

+

+```bash

+# 克隆仓库

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai

+

+# 设置环境并启动服务

+cp docker/env.template docker/.env

+# 编辑 docker/.env 设置你的 PROJECT_PATH

+cd docker

+docker-compose -f docker-compose-network.yml up -d

+

+# 访问服务:

+# - HugeGraph Server: http://localhost:8080

+# - RAG 服务: http://localhost:8001

+```

+

+### 方案二:源码安装

+

+```bash

+# 1. 启动 HugeGraph Server

+docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

+

+# 2. 克隆并设置项目

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai/hugegraph-llm

+

+# 3. 安装依赖

+uv venv && source .venv/bin/activate

+uv pip install -e .

+

+# 4. 启动演示

+python -m hugegraph_llm.demo.rag_demo.app

+# 访问 http://127.0.0.1:8001

+```

+

+### 基本用法示例

+

+#### GraphRAG - 问答

+```python

+from hugegraph_llm.operators.graph_rag_task import RAGPipeline

+

+# 初始化 RAG 工作流

+graph_rag = RAGPipeline()

+

+# 对你的图进行提问

+result = (graph_rag

+ .extract_keywords(text="给我讲讲 Al Pacino 的故事。")

+ .keywords_to_vid()

+ .query_graphdb(max_deep=2, max_graph_items=30)

+ .synthesize_answer()

+ .run())

+```

+

+#### 知识图谱构建

+```python

+from hugegraph_llm.models.llms.init_llm import LLMs

+from hugegraph_llm.operators.kg_construction_task import KgBuilder

+

+# 从文本构建知识图谱

+TEXT = "你的文本内容..."

+builder = KgBuilder(LLMs().get_chat_llm())

+

+(builder

+ .import_schema(from_hugegraph="hugegraph")

+ .chunk_split(TEXT)

+ .extract_info(extract_type="property_graph")

+ .commit_to_hugegraph()

+ .run())

+```

+

+#### 图机器学习

+```python

+from pyhugegraph.client import PyHugeClient

+# 连接 HugeGraph 并运行机器学习算法

+# 详细示例请参阅 hugegraph-ml 文档

+```

+

+## 📦 模块

+

+### [hugegraph-llm](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-llm) [DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai)

+用于图应用的大语言模型集成:

+- **GraphRAG**:基于图数据的检索增强生成

+- **知识图谱构建**:从文本自动构建知识图谱

+- **自然语言接口**:使用自然语言查询图

+- **AI 智能体**:智能图分析与推理

+

+### [hugegraph-ml](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-ml)

+包含 20+ 算法的图机器学习:

+- **节点分类**:GCN、GAT、GraphSAGE、APPNP 等

+- **图分类**:DiffPool、P-GNN 等

+- **图嵌入**:DeepWalk、Node2Vec、GRACE 等

+- **链接预测**:SEAL、GATNE 等

+

+### [hugegraph-python-client](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-python-client)

+用于 HugeGraph 操作的 Python 客户端:

+- **Schema 管理**:定义顶点/边标签和属性

+- **CRUD 操作**:创建、读取、更新、删除图数据

+- **Gremlin 查询**:执行图遍历查询

+- **REST API**:完整的 HugeGraph REST API 覆盖

+

+## 📚 了解更多

+

+- [项目主页](https://hugegraph.apache.org/docs/quickstart/hugegraph-ai/)

+- [LLM 快速入门指南](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)

+- [DeepWiki AI 文档](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+## 🔗 相关项目

+

+- [hugegraph](https://github.com/apache/hugegraph) - 核心图数据库

+- [hugegraph-toolchain](https://github.com/apache/hugegraph-toolchain) - 开发工具(加载器、仪表盘等)

+- [hugegraph-computer](https://github.com/apache/hugegraph-computer) - 图计算系统

+

+## 🤝 贡献

+

+我们欢迎贡献!详情请参阅我们的[贡献指南](https://hugegraph.apache.org/docs/contribution-guidelines/)。

+

+**开发设置:**

+- 使用 [GitHub Desktop](https://desktop.github.com/) 更轻松地管理 PR

+- 提交 PR 前运行 `./style/code_format_and_analysis.sh`

+- 报告错误前检查现有问题

+

+[](https://github.com/apache/incubator-hugegraph-ai/graphs/contributors)

+

+## 📄 许可证

+

+hugegraph-ai 采用 [Apache 2.0 许可证](https://github.com/apache/incubator-hugegraph-ai/blob/main/LICENSE)。

+

+## 📞 联系我们

+

+- **GitHub Issues**:[报告错误或请求功能](https://github.com/apache/incubator-hugegraph-ai/issues)(响应最快)

+- **电子邮件**:[d...@hugegraph.apache.org](mailto:d...@hugegraph.apache.org)([需要订阅](https://hugegraph.apache.org/docs/contribution-guidelines/subscribe/))

+- **微信**:关注“Apache HugeGraph”官方公众号

+

+<img src="https://raw.githubusercontent.com/apache/hugegraph-doc/master/assets/images/wechat.png" alt="Apache HugeGraph WeChat QR Code" width="200"/>
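The `KgBuilder` and `RAGPipeline` examples in the diff above share one calling style: each method returns the builder so calls can be chained, `print_result()` can be slotted in after any step, and nothing executes until `run()`. The toy class below sketches that pattern under stated assumptions. `ToyPipeline`, `split_text`, and `count_chunks` are hypothetical names invented for illustration, and the real hugegraph-llm implementation may differ.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]


class ToyPipeline:
    """Hypothetical stand-in that mimics the chain-then-run calling style."""

    def __init__(self) -> None:
        self._steps: List[Callable[[Context], Context]] = []

    def _add(self, step: Callable[[Context], Context]) -> "ToyPipeline":
        self._steps.append(step)
        return self  # returning self is what makes chaining possible

    def split_text(self, text: str) -> "ToyPipeline":
        # Queue a step that splits the text on blank lines (a toy rule).
        def step(ctx: Context) -> Context:
            ctx["chunks"] = [c.strip() for c in text.split("\n\n") if c.strip()]
            return ctx

        return self._add(step)

    def count_chunks(self) -> "ToyPipeline":
        # Queue a step that records how many chunks the previous step produced.
        def step(ctx: Context) -> Context:
            ctx["chunk_count"] = len(ctx["chunks"])
            return ctx

        return self._add(step)

    def print_result(self) -> "ToyPipeline":
        # Queue a pass-through step: it prints the context and changes nothing.
        def step(ctx: Context) -> Context:
            print(ctx)
            return ctx

        return self._add(step)

    def run(self) -> Context:
        # Only here are the queued steps executed, in the order they were added.
        ctx: Context = {}
        for step in self._steps:
            ctx = step(ctx)
        return ctx


pipeline = (
    ToyPipeline()
    .split_text("first chunk\n\nsecond chunk")
    .print_result()
    .count_chunks()
)
result = pipeline.run()
```

Because the print step passes the context through untouched, removing it does not change `result`, which matches the docs' note that `print_result()` does not affect the overall execution logic.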

diff --git a/content/cn/docs/quickstart/hugegraph-ai/hugegraph-llm.md b/content/cn/docs/quickstart/hugegraph-ai/hugegraph-llm.md

new file mode 100644

index 00000000..b353a8fb

--- /dev/null

+++ b/content/cn/docs/quickstart/hugegraph-ai/hugegraph-llm.md

@@ -0,0 +1,235 @@

+---

+title: "HugeGraph-LLM"

+linkTitle: "HugeGraph-LLM"

+weight: 1

+---

+

+> 本文为中文翻译版本,内容基于英文版进行,我们欢迎您随时提出修改建议。我们推荐您阅读 [AI 仓库 README](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-llm#readme) 以获取最新信息,官网会定期同步更新。

+

+> **连接图数据库与大语言模型的桥梁**

+

+> AI 总结项目文档:[](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+## 🎯 概述

+

+HugeGraph-LLM 是一个功能强大的工具包,它融合了图数据库和大型语言模型的优势,实现了 HugeGraph 与 LLM 之间的无缝集成,助力开发者构建智能应用。

+

+### 核心功能

+- 🏗️ **知识图谱构建**:利用 LLM 和 HugeGraph 自动构建知识图谱。

+- 🗣️ **自然语言查询**:通过自然语言(Gremlin/Cypher)操作图数据库。

+- 🔍 **图增强 RAG**:借助知识图谱提升问答准确性(GraphRAG 和 Graph Agent)。

+

+更多源码文档,请访问我们的 [DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai) 页面(推荐)。

+

+## 📋 环境要求

+

+> [!IMPORTANT]

+> - **Python**:3.10+(未在 3.12 版本测试)

+> - **HugeGraph Server**:1.3+(推荐 1.5+)

+> - **UV 包管理器**:0.7+

+

+## 🚀 快速开始

+

+请选择您偏好的部署方式:

+

+### 方案一:Docker Compose(推荐)

+

+这是同时启动 HugeGraph Server 和 RAG 服务的最快方法:

+

+```bash

+# 1. 设置环境

+cp docker/env.template docker/.env

+# 编辑 docker/.env,将 PROJECT_PATH 设置为您的实际项目路径

+

+# 2. 部署服务

+cd docker

+docker-compose -f docker-compose-network.yml up -d

+

+# 3. 验证部署

+docker-compose -f docker-compose-network.yml ps

+

+# 4. 访问服务

+# HugeGraph Server: http://localhost:8080

+# RAG 服务: http://localhost:8001

+```

+

+### 方案二:独立 Docker 容器

+

+如果您希望对各组件进行更精细的控制:

+

+#### 可用镜像

+- **`hugegraph/rag`**:开发镜像,可访问源代码

+- **`hugegraph/rag-bin`**:生产优化的二进制文件(使用 Nuitka 编译)

+

+```bash

+# 1. 创建网络

+docker network create -d bridge hugegraph-net

+

+# 2. 启动 HugeGraph Server

+docker run -itd --name=server -p 8080:8080 --network hugegraph-net hugegraph/hugegraph

+

+# 3. 启动 RAG 服务

+docker pull hugegraph/rag:latest

+docker run -itd --name rag \

+ -v /path/to/your/hugegraph-llm/.env:/home/work/hugegraph-llm/.env \

+ -p 8001:8001 --network hugegraph-net hugegraph/rag

+

+# 4. 监控日志

+docker logs -f rag

+```

+

+### 方案三:从源码构建

+

+适用于开发和自定义场景:

+

+```bash

+# 1. 启动 HugeGraph Server

+docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

+

+# 2. 安装 UV 包管理器

+curl -LsSf https://astral.sh/uv/install.sh | sh

+

+# 3. 克隆并设置项目

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai/hugegraph-llm

+

+# 4. 创建虚拟环境并安装依赖

+uv venv && source .venv/bin/activate

+uv pip install -e .

+

+# 5. 启动 RAG 演示

+python -m hugegraph_llm.demo.rag_demo.app

+# 访问: http://127.0.0.1:8001

+

+# 6. (可选) 自定义主机/端口

+python -m hugegraph_llm.demo.rag_demo.app --host 127.0.0.1 --port 18001

+```

+

+#### 额外设置(可选)

+

+```bash

+# 下载 NLTK 停用词以优化文本处理

+python ./hugegraph_llm/operators/common_op/nltk_helper.py

+

+# 更新配置文件

+python -m hugegraph_llm.config.generate --update

+```

+

+> [!TIP]

+> 查看我们的[快速入门指南](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)获取详细用法示例和查询逻辑解释。

+

+## 💡 用法示例

+

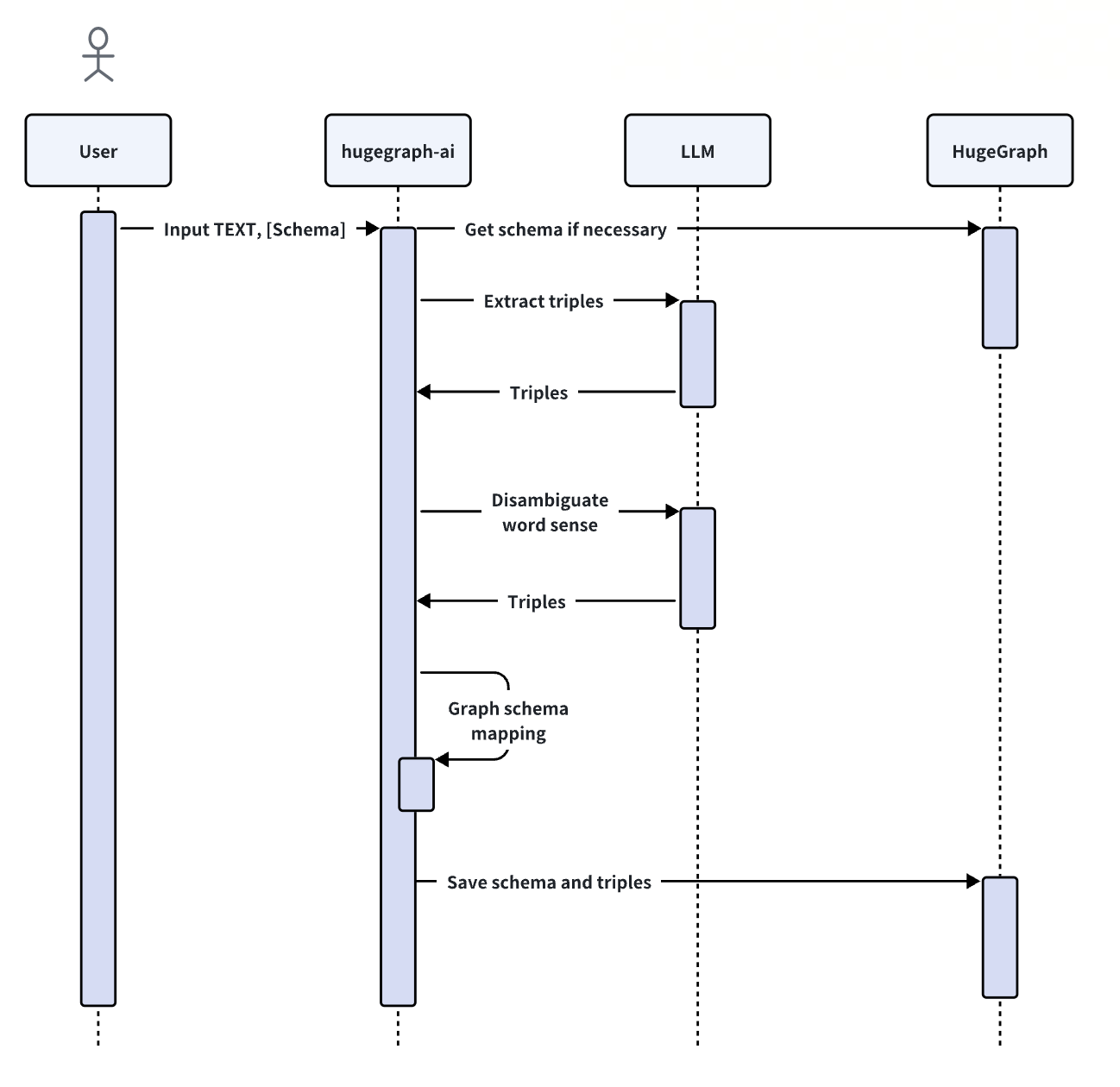

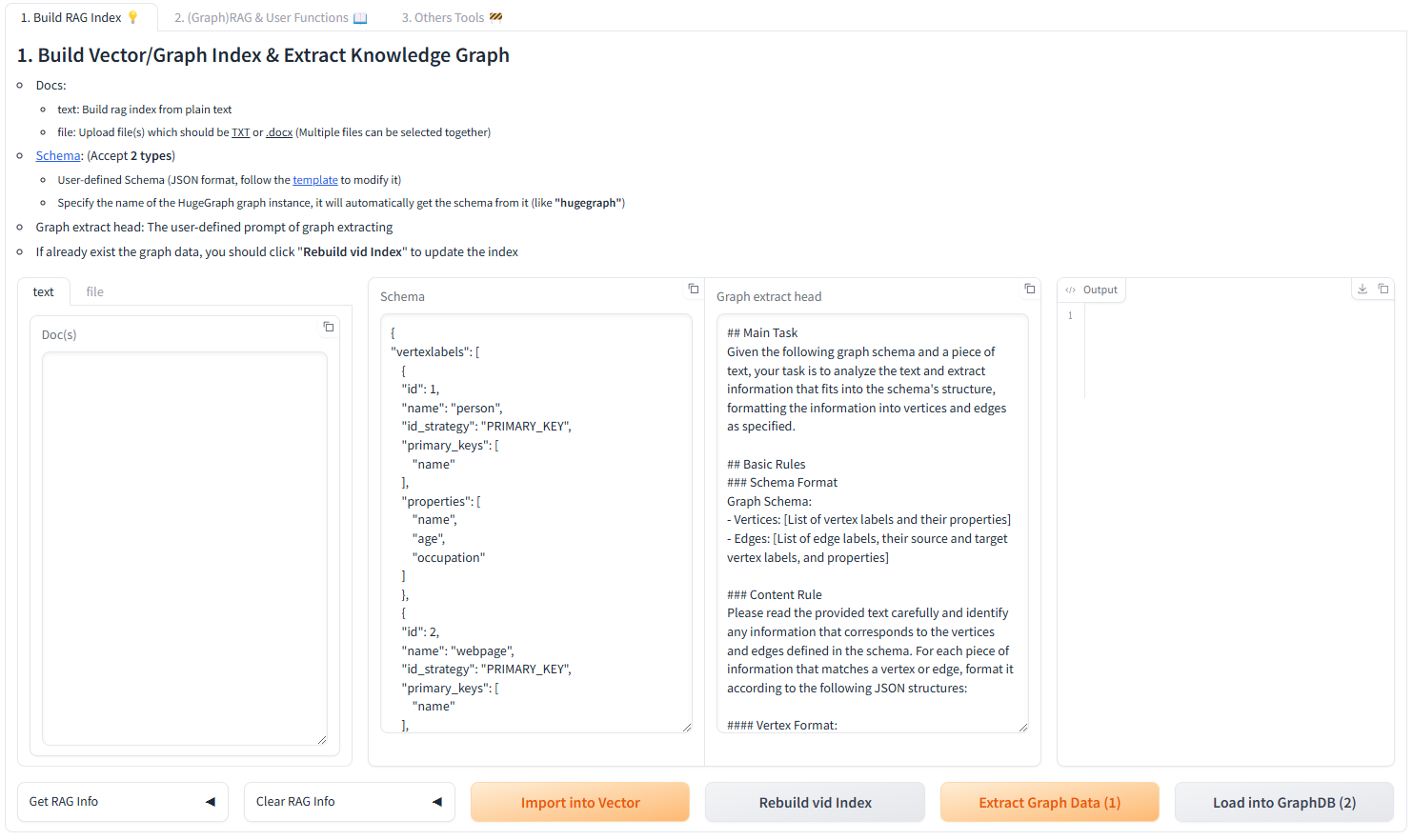

+### 知识图谱构建

+

+#### 交互式 Web 界面

+

+使用 Gradio 界面进行可视化知识图谱构建:

+

+**输入选项:**

+- **文本**:直接输入文本用于 RAG 索引创建

+- **文件**:上传 TXT 或 DOCX 文件(支持多选)

+

+**Schema 配置:**

+- **自定义 Schema**:遵循我们[模板](https://github.com/apache/incubator-hugegraph-ai/blob/aff3bbe25fa91c3414947a196131be812c20ef11/hugegraph-llm/src/hugegraph_llm/config/config_data.py#L125)的 JSON 格式

+- **HugeGraph Schema**:使用现有图实例的 Schema(例如,“hugegraph”)

+

+

+

+#### 代码构建

+

+使用 `KgBuilder` 类通过代码构建知识图谱:

+

+```python

+from hugegraph_llm.models.llms.init_llm import LLMs

+from hugegraph_llm.operators.kg_construction_task import KgBuilder

+

+# 初始化并链式操作

+TEXT = "在此处输入您的文本内容..."

+builder = KgBuilder(LLMs().get_chat_llm())

+

+(

+ builder

+ .import_schema(from_hugegraph="talent_graph").print_result()

+ .chunk_split(TEXT).print_result()

+ .extract_info(extract_type="property_graph").print_result()

+ .commit_to_hugegraph()

+ .run()

+)

+```

+

+**工作流:**

+```mermaid

+graph LR

+ A[导入 Schema] --> B[文本分块]

+ B --> C[提取信息]

+ C --> D[提交到 HugeGraph]

+ D --> E[执行工作流]

+

+ style A fill:#fff2cc

+ style B fill:#d5e8d4

+ style C fill:#dae8fc

+ style D fill:#f8cecc

+ style E fill:#e1d5e7

+```

+

+### 图增强 RAG

+

+利用 HugeGraph 进行检索增强生成:

+

+```python

+from hugegraph_llm.operators.graph_rag_task import RAGPipeline

+

+# 初始化 RAG 工作流

+graph_rag = RAGPipeline()

+

+# 执行 RAG 工作流

+(

+ graph_rag

+ .extract_keywords(text="给我讲讲 Al Pacino 的故事。")

+ .keywords_to_vid()

+ .query_graphdb(max_deep=2, max_graph_items=30)

+ .merge_dedup_rerank()

+ .synthesize_answer(vector_only_answer=False, graph_only_answer=True)

+ .run(verbose=True)

+)

+```

+

+**RAG 工作流:**

+```mermaid

+graph TD

+ A[用户查询] --> B[提取关键词]

+ B --> C[匹配图节点]

+ C --> D[检索图上下文]

+ D --> E[重排序结果]

+ E --> F[生成答案]

+

+ style A fill:#e3f2fd

+ style B fill:#f3e5f5

+ style C fill:#e8f5e8

+ style D fill:#fff3e0

+ style E fill:#fce4ec

+ style F fill:#e0f2f1

+```

+

+## 🔧 配置

+

+运行演示后,将自动生成配置文件:

+

+- **环境**:`hugegraph-llm/.env`

+- **提示**:`hugegraph-llm/src/hugegraph_llm/resources/demo/config_prompt.yaml`

+

+> [!NOTE]

+> 使用 Web 界面时,配置更改会自动保存。对于手动更改,刷新页面即可加载更新。

+

+**LLM 提供商支持**:本项目使用 [LiteLLM](https://docs.litellm.ai/docs/providers) 实现多提供商 LLM 支持。

+

+## 📚 其他资源

+

+- **图可视化**:使用 [HugeGraph Hubble](https://hub.docker.com/r/hugegraph/hubble) 进行数据分析和 Schema 管理

+- **API 文档**:浏览我们的 REST API 端点以进行集成

+- **社区**:加入我们的讨论并为项目做出贡献

+

+---

+

+**许可证**:Apache License 2.0 | **社区**:[Apache HugeGraph](https://hugegraph.apache.org/)
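The quick-start commands in the diff above pass `--host` and `--port` to the demo, and the removed text states defaults of `0.0.0.0` and `8001`. This commit does not show how the demo parses those flags, so the following is only a sketch of how such options could be handled with the standard `argparse` module. `parse_demo_args` is a hypothetical helper, not a function from hugegraph-llm.

```python
import argparse
from typing import List, Optional


def parse_demo_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Hypothetical parser; defaults mirror the values quoted in the docs."""
    parser = argparse.ArgumentParser(description="Illustrative web-demo launcher")
    parser.add_argument("--host", default="0.0.0.0", help="interface to bind to")
    parser.add_argument("--port", type=int, default=8001, help="TCP port to listen on")
    return parser.parse_args(argv)


# No flags: fall back to the documented defaults.
defaults = parse_demo_args([])

# Same flags as the "custom host/port" command in the quick start.
custom = parse_demo_args(["--host", "127.0.0.1", "--port", "18001"])

print(defaults.host, defaults.port)
print(custom.host, custom.port)
```

Binding to `0.0.0.0` exposes the service on every network interface, so `--host 127.0.0.1` is the safer choice on a shared machine.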

diff --git a/content/cn/docs/quickstart/hugegraph-ai/quick_start.md b/content/cn/docs/quickstart/hugegraph-ai/quick_start.md

index 8af42d37..6d8d22f9 100644

--- a/content/cn/docs/quickstart/hugegraph-ai/quick_start.md

+++ b/content/cn/docs/quickstart/hugegraph-ai/quick_start.md

@@ -1,7 +1,7 @@

---

title: "GraphRAG UI Details"

linkTitle: "GraphRAG UI Details"

-weight: 1

+weight: 4

---

> 接续[主文档](../)介绍基础 UI 功能及详情,欢迎随时更新和改进,谢谢
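The `weight` change above matters because Hugo lists sibling pages in ascending weight by default. The new `hugegraph-llm.md` page also uses `weight: 1`, so leaving `quick_start.md` at 1 would have left the two pages tied. The sketch below models only that ordering rule. The title tie-break is a simplification (Hugo falls back to other fields such as date), and `order_by_weight` is a hypothetical helper.

```python
from typing import Dict, List, Union

Page = Dict[str, Union[str, int]]


def order_by_weight(pages: List[Page]) -> List[str]:
    """Return page titles in ascending weight; ties fall back to the title."""
    ranked = sorted(pages, key=lambda page: (page["weight"], page["title"]))
    return [str(page["title"]) for page in ranked]


# Front matter as it would be if quick_start.md had kept weight 1.
before: List[Page] = [
    {"title": "HugeGraph-LLM", "weight": 1},
    {"title": "GraphRAG UI Details", "weight": 1},
]

# Front matter after this commit.
after: List[Page] = [
    {"title": "HugeGraph-LLM", "weight": 1},
    {"title": "GraphRAG UI Details", "weight": 4},
]

order_before = order_by_weight(before)
order_after = order_by_weight(after)
print(order_before)
print(order_after)
```

With distinct weights the HugeGraph-LLM page is guaranteed to come first, regardless of how ties would otherwise be resolved.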

diff --git a/content/en/docs/quickstart/hugegraph-ai/_index.md b/content/en/docs/quickstart/hugegraph-ai/_index.md

index 6be862fb..1095042b 100644

--- a/content/en/docs/quickstart/hugegraph-ai/_index.md

+++ b/content/en/docs/quickstart/hugegraph-ai/_index.md

@@ -4,226 +4,163 @@ linkTitle: "HugeGraph-AI"

weight: 3

---

-> Please refer to the AI repository

[README](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-llm#readme)

for the most up-to-date documentation, and the official website **regularly**

is updated and synchronized.

-

-> AI summarizes the project documentation: [](https://deepwiki.com/apache/incubator-hugegraph-ai)

-

-## 1. Summary

-

-The `hugegraph-llm` is a tool for the implementation and research related to

large language models.

-This project includes runnable demos, it can also be used as a third-party

library.

-

-As we know, graph systems can help large models address challenges like

timeliness and hallucination,

-while large models can help graph systems with cost-related issues.

-

-With this project, we aim to reduce the cost of using graph systems and

decrease the complexity of

-building knowledge graphs. This project will offer more applications and

integration solutions for

-graph systems and large language models.

-1. Construct knowledge graph by LLM + HugeGraph

-2. Use natural language to operate graph databases (Gremlin/Cypher)

-3. Knowledge graph supplements answer context (GraphRAG → Graph Agent)

-

-## 2. Environment Requirements

-> [!IMPORTANT]

-> - python 3.10+ (not tested in 3.12)

-> - hugegraph-server 1.3+ (better to use 1.5+)

-> - uv 0.7+

-

-## 3. Preparation

-

-### 3.1 Docker

-

-**Docker Deployment**

- Alternatively, you can deploy HugeGraph-AI using Docker:

- - Ensure you have Docker installed

- - We provide two container images:

- - **Image 1**:

[hugegraph/rag](https://hub.docker.com/r/hugegraph/rag/tags)

- For building and running RAG functionality for rapid deployment and

direct source code modification

- - **Image 2**:

[hugegraph/rag-bin](https://hub.docker.com/r/hugegraph/rag-bin/tags)

- A binary translation of C compiled with Nuitka, for better performance

and efficiency.

- - Pull the Docker images:

- ```bash

- docker pull hugegraph/rag:latest # Pull Image 1

- docker pull hugegraph/rag-bin:latest # Pull Image 2

- ```

- - Start the Docker container:

- ```bash

- docker run -it --name rag -v

path2project/hugegraph-llm/.env:/home/work/hugegraph-llm/.env -p 8001:8001

hugegraph/rag bash

- docker run -it --name rag-bin -v

path2project/hugegraph-llm/.env:/home/work/hugegraph-llm/.env -p 8001:8001

hugegraph/rag-bin bash

- ```

- - Start the Graph RAG demo:

- ```bash

- # For Image 1

- python ./src/hugegraph_llm/demo/rag_demo/app.py # or run python -m

hugegraph_llm.demo.rag_demo.app

-

- # For Image 2

- ./app.dist/app.bin

- ```

- - Access the interface at http://localhost:8001

-

-### 3.2 Build from Source

-

-1. Start the HugeGraph database, you can run it via

[Docker](https://hub.docker.com/r/hugegraph/hugegraph)/[Binary

Package](https://hugegraph.apache.org/docs/download/download/).

- There is a simple method by docker:

- ```bash

- docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

- ```

- You can refer to the detailed documents

[doc](/docs/quickstart/hugegraph/hugegraph-server/#31-use-docker-container-convenient-for-testdev)

for more guidance.

-

-2. Configuring the uv environment, Use the official installer to install uv,

See the [uv documentation](https://docs.astral.sh/uv/configuration/installer/)

for other installation methods

- ```bash

- # You could try pipx or pip to install uv when meet network issues, refer

the uv doc for more details

- curl -LsSf https://astral.sh/uv/install.sh | sh - # install the latest

version like 0.7.3+

- ```

-

-3. Clone this project

- ```bash

- git clone https://github.com/apache/incubator-hugegraph-ai.git

- ```

-4. Configuration dependency environment

- ```bash

- cd incubator-hugegraph-ai/hugegraph-llm

- uv venv && source .venv/bin/activate

- uv pip install -e .

- ```

- If dependency download fails or too slow due to network issues, it is

recommended to modify `hugegraph-llm/pyproject.toml`.

-

-5. To start the Gradio interactive demo for **Graph RAG**, run the following

command, then open http://127.0.0.1:8001 in your browser.

- ```bash

- python -m hugegraph_llm.demo.rag_demo.app # same as "uv run xxx"

- ```

- The default host is `0.0.0.0` and the port is `8001`. You can change them

by passing command line arguments`--host` and `--port`.

- ```bash

- python -m hugegraph_llm.demo.rag_demo.app --host 127.0.0.1 --port 18001

- ```

-

-6. After running the web demo, the config file `.env` will be automatically

generated at the path `hugegraph-llm/.env`. Additionally, a prompt-related

configuration file `config_prompt.yaml` will also be generated at the path

`hugegraph-llm/src/hugegraph_llm/resources/demo/config_prompt.yaml`.

- You can modify the content on the web page, and it will be automatically

saved to the configuration file after the corresponding feature is triggered.

You can also modify the file directly without restarting the web application;

refresh the page to load your latest changes.

- (Optional)To regenerate the config file, you can use `config.generate`

with `-u` or `--update`.

- ```bash

- python -m hugegraph_llm.config.generate --update

- ```

- Note: `Litellm` support multi-LLM provider, refer

[litellm.ai](https://docs.litellm.ai/docs/providers) to config it

-7. (__Optional__) You could use

-

[hugegraph-hubble](/docs/quickstart/toolchain/hugegraph-hubble/#21-use-docker-convenient-for-testdev)

- to visit the graph data, could run it via

[Docker/Docker-Compose](https://hub.docker.com/r/hugegraph/hubble)

- for guidance. (Hubble is a graph-analysis dashboard that includes data

loading/schema management/graph traverser/display).

-8. (__Optional__) offline download NLTK stopwords

- ```bash

- python ./hugegraph_llm/operators/common_op/nltk_helper.py

- ```

-> [!TIP]

-> You can also refer to our

[quick-start](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)

doc to understand how to use it & the basic query logic 🚧

-

-## 4. Examples

-

-### 4.1 Build a knowledge graph in HugeGraph through LLM

-

-#### 4.1.1 Build a knowledge graph through the gradio interactive interface

-

-**Parameter description:**

-

-- Docs:

- - text: Build rag index from plain text

- - file: Upload file(s) which should be <u>TXT</u> or <u>.docx</u> (Multiple

files can be selected together)

-- [Schema](https://hugegraph.apache.org/docs/clients/restful-api/schema/):

(Except **2 types**)

- - User-defined Schema (JSON format, follow the

[template](https://github.com/apache/incubator-hugegraph-ai/blob/aff3bbe25fa91c3414947a196131be812c20ef11/hugegraph-llm/src/hugegraph_llm/config/config_data.py#L125)

- to modify it)

- - Specify the name of the HugeGraph graph instance, it will automatically

get the schema from it (like

- **"hugegraph"**)

-- Graph extract head: The user-defined prompt of graph extracting

-- If it already exists the graph data, you should click "**Rebuild vid

Index**" to update the index

-

-

-

-#### 4.1.2 Build a knowledge graph through code

-

-The `KgBuilder` class is used to construct a knowledge graph. Here is a brief

usage guide:

-

-1. **Initialization**: The `KgBuilder` class is initialized with an instance

of a language model.

-This can be obtained from the `LLMs` class.

- Initialize the LLMs instance, get the LLM, and then create a task instance

`KgBuilder` for graph construction. `KgBuilder` defines multiple operators, and

users can freely combine them according to their needs. (tip: `print_result()`

can print the result of each step in the console, without affecting the overall

execution logic)

-

- ```python

- from hugegraph_llm.models.llms.init_llm import LLMs

- from hugegraph_llm.operators.kg_construction_task import KgBuilder

-

- TEXT = ""

- builder = KgBuilder(LLMs().get_chat_llm())

- (

- builder

- .import_schema(from_hugegraph="talent_graph").print_result()

- .chunk_split(TEXT).print_result()

- .extract_info(extract_type="property_graph").print_result()

- .commit_to_hugegraph()

- .run()

- )

- ```

-

-2. **Import Schema**: The `import_schema` method is used to import a schema

from a source. The source can be a HugeGraph instance, a user-defined schema,

or an extraction result. The method `print_result` can be chained to print the

result.

- ```python

- # Import schema from a HugeGraph instance

- builder.import_schema(from_hugegraph="xxx").print_result()

- # Import schema from an extraction result

- builder.import_schema(from_extraction="xxx").print_result()

- # Import schema from user-defined schema

- builder.import_schema(from_user_defined="xxx").print_result()

- ```

-3. **Chunk Split**: The `chunk_split` method is used to split the input text

into chunks. The text should be passed as a string argument to the method.

- ```python

- # Split the input text into documents

- builder.chunk_split(TEXT, split_type="document").print_result()

- # Split the input text into paragraphs

- builder.chunk_split(TEXT, split_type="paragraph").print_result()

- # Split the input text into sentences

- builder.chunk_split(TEXT, split_type="sentence").print_result()

- ```

-4. **Extract Info**: The `extract_info` method is used to extract info from a

text. The text should be passed as a string argument to the method.

- ```python

- TEXT = "Meet Sarah, a 30-year-old attorney, and her roommate, James, whom

she's shared a home with since 2010."

- # extract property graph from the input text

- builder.extract_info(extract_type="property_graph").print_result()

- # extract triples from the input text

- builder.extract_info(extract_type="property_graph").print_result()

- ```

-5. **Commit to HugeGraph**: The `commit_to_hugegraph` method is used to commit

the constructed knowledge graph to a HugeGraph instance.

- ```python

- builder.commit_to_hugegraph().print_result()

- ```

-6. **Run**: The `run` method is used to execute the chained operations.

- ```python

- builder.run()

- ```

- The methods of the `KgBuilder` class can be chained together to perform a

sequence of operations.

-

-### 4.2 Retrieval augmented generation (RAG) based on HugeGraph

-

-The `RAGPipeline` class is used to integrate HugeGraph with large language

models to provide retrieval-augmented generation capabilities.

-Here is a brief usage guide:

-

-1. **Extract Keyword**: Extract keywords and expand synonyms.

- ```python

- from hugegraph_llm.operators.graph_rag_task import RAGPipeline

- graph_rag = RAGPipeline()

- graph_rag.extract_keywords(text="Tell me about Al Pacino.").print_result()

- ```

-2. **Match Vid from Keywords**: Match the nodes with the keywords in the graph.

- ```python

- graph_rag.keywords_to_vid().print_result()

- ```

-3. **Query Graph for Rag**: Retrieve the corresponding keywords and their

multi-degree associated relationships from HugeGraph.

- ```python

- graph_rag.query_graphdb(max_deep=2, max_graph_items=30).print_result()

- ```

-4. **Rerank Searched Result**: Rerank the searched results based on the

similarity between the question and the results.

- ```python

- graph_rag.merge_dedup_rerank().print_result()

- ```

-5. **Synthesize Answer**: Summarize the results and organize the language to

answer the question.

- ```python

- graph_rag.synthesize_answer(vector_only_answer=False,

graph_only_answer=True).print_result()

- ```

-6. **Run**: The `run` method is used to execute the above operations.

- ```python

- graph_rag.run(verbose=True)

- ```

+[](https://www.apache.org/licenses/LICENSE-2.0.html)

+[](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+`hugegraph-ai` integrates [HugeGraph](https://github.com/apache/hugegraph)

with artificial intelligence capabilities, providing comprehensive support for

developers to build AI-powered graph applications.

+

+## ✨ Key Features

+

+- **GraphRAG**: Build intelligent question-answering systems with

graph-enhanced retrieval

+- **Knowledge Graph Construction**: Automated graph building from text using

LLMs

+- **Graph ML**: Integration with 20+ graph learning algorithms (GCN, GAT,

GraphSAGE, etc.)

+- **Python Client**: Easy-to-use Python interface for HugeGraph operations

+- **AI Agents**: Intelligent graph analysis and reasoning capabilities

+

+## 🚀 Quick Start

+

+> [!NOTE]

+> For a complete deployment guide and detailed examples, please refer to

[hugegraph-llm/README.md](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/README.md)

+

+### Prerequisites

+- Python 3.9+ (3.10+ recommended for hugegraph-llm)

+- [uv](https://docs.astral.sh/uv/) (recommended package manager)

+- HugeGraph Server 1.3+ (1.5+ recommended)

+- Docker (optional, for containerized deployment)

+

+### Option 1: Docker Deployment (Recommended)

+

+```bash

+# Clone the repository

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai

+

+# Set up environment and start services

+cp docker/env.template docker/.env

+# Edit docker/.env to set your PROJECT_PATH

+cd docker

+docker-compose -f docker-compose-network.yml up -d

+

+# Access services:

+# - HugeGraph Server: http://localhost:8080

+# - RAG Service: http://localhost:8001

+```

+

+### Option 2: Source Installation

+

+```bash

+# 1. Start HugeGraph Server

+docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

+

+# 2. Clone and set up the project

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai/hugegraph-llm

+

+# 3. Install dependencies

+uv venv && source .venv/bin/activate

+uv pip install -e .

+

+# 4. Start the demo

+python -m hugegraph_llm.demo.rag_demo.app

+# Visit http://127.0.0.1:8001

+```

+

+### Basic Usage Examples

+

+#### GraphRAG - Question Answering

+```python

+from hugegraph_llm.operators.graph_rag_task import RAGPipeline

+

+# Initialize RAG pipeline

+graph_rag = RAGPipeline()

+

+# Ask questions about your graph

+result = (graph_rag

+ .extract_keywords(text="Tell me about Al Pacino.")

+ .keywords_to_vid()

+ .query_graphdb(max_deep=2, max_graph_items=30)

+ .synthesize_answer()

+ .run())

+```

+

+#### Knowledge Graph Construction

+```python

+from hugegraph_llm.models.llms.init_llm import LLMs

+from hugegraph_llm.operators.kg_construction_task import KgBuilder

+

+# Build KG from text

+TEXT = "Your text content here..."

+builder = KgBuilder(LLMs().get_chat_llm())

+

+(builder

+ .import_schema(from_hugegraph="hugegraph")

+ .chunk_split(TEXT)

+ .extract_info(extract_type="property_graph")

+ .commit_to_hugegraph()

+ .run())

+```

+

+#### Graph Machine Learning

+```python

+from pyhugegraph.client import PyHugeClient

+# Connect to HugeGraph and run ML algorithms

+# See hugegraph-ml documentation for detailed examples

+```

+

+## 📦 Modules

+

+### [hugegraph-llm](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-llm) [](https://deepwiki.com/apache/incubator-hugegraph-ai)

+Large language model integration for graph applications:

+- **GraphRAG**: Retrieval-augmented generation with graph data

+- **Knowledge Graph Construction**: Build KGs from text automatically

+- **Natural Language Interface**: Query graphs using natural language

+- **AI Agents**: Intelligent graph analysis and reasoning

+

+### [hugegraph-ml](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-ml)

+Graph machine learning with 20+ implemented algorithms:

+- **Node Classification**: GCN, GAT, GraphSAGE, APPNP, etc.

+- **Graph Classification**: DiffPool, P-GNN, etc.

+- **Graph Embedding**: DeepWalk, Node2Vec, GRACE, etc.

+- **Link Prediction**: SEAL, GATNE, etc.

+

+### [hugegraph-python-client](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-python-client)

+Python client for HugeGraph operations:

+- **Schema Management**: Define vertex/edge labels and properties

+- **CRUD Operations**: Create, read, update, delete graph data

+- **Gremlin Queries**: Execute graph traversal queries

+- **REST API**: Complete HugeGraph REST API coverage

+

+## 📚 Learn More

+

+- [Project

Homepage](https://hugegraph.apache.org/docs/quickstart/hugegraph-ai/)

+- [LLM Quick Start

Guide](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)

+- [DeepWiki AI

Documentation](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+## 🔗 Related Projects

+

+- [hugegraph](https://github.com/apache/hugegraph) - Core graph database

+- [hugegraph-toolchain](https://github.com/apache/hugegraph-toolchain) -

Development tools (Loader, Dashboard, etc.)

+- [hugegraph-computer](https://github.com/apache/hugegraph-computer) - Graph

computing system

+

+## 🤝 Contributing

+

+We welcome contributions! Please see our [contribution

guidelines](https://hugegraph.apache.org/docs/contribution-guidelines/) for

details.

+

+**Development Setup:**

+- Use [GitHub Desktop](https://desktop.github.com/) for easier PR management

+- Run `./style/code_format_and_analysis.sh` before submitting PRs

+- Check existing issues before reporting bugs

+

+[](https://github.com/apache/incubator-hugegraph-ai/graphs/contributors)

+

+## 📄 License

+

+hugegraph-ai is licensed under [Apache 2.0

License](https://github.com/apache/incubator-hugegraph-ai/blob/main/LICENSE).

+

+## 📞 Contact Us

+

+- **GitHub Issues**: [Report bugs or request

features](https://github.com/apache/incubator-hugegraph-ai/issues) (fastest

response)

+- **Email**: [d...@hugegraph.apache.org](mailto:d...@hugegraph.apache.org)

([subscription

required](https://hugegraph.apache.org/docs/contribution-guidelines/subscribe/))

+- **WeChat**: Follow "Apache HugeGraph" official account

+

+<img

src="https://raw.githubusercontent.com/apache/hugegraph-doc/master/assets/images/wechat.png"

alt="Apache HugeGraph WeChat QR Code" width="200"/>

diff --git a/content/en/docs/quickstart/hugegraph-ai/hugegraph-llm.md

b/content/en/docs/quickstart/hugegraph-ai/hugegraph-llm.md

new file mode 100644

index 00000000..b64b1fa7

--- /dev/null

+++ b/content/en/docs/quickstart/hugegraph-ai/hugegraph-llm.md

@@ -0,0 +1,237 @@

+---

+title: "HugeGraph-LLM"

+linkTitle: "HugeGraph-LLM"

+weight: 1

+---

+

+> Please refer to the AI repository

[README](https://github.com/apache/incubator-hugegraph-ai/tree/main/hugegraph-llm#readme)

for the most up-to-date documentation; the official website is updated and synchronized **regularly**.

+

+> **Bridge the gap between Graph Databases and Large Language Models**

+

+> AI-generated summary of the project documentation: [DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai)

+

+

+## 🎯 Overview

+

+HugeGraph-LLM is a comprehensive toolkit that combines the power of graph

databases with large language models.

+It enables seamless integration between HugeGraph and LLMs for building

intelligent applications.

+

+### Key Features

+- 🏗️ **Knowledge Graph Construction** - Build KGs automatically using LLMs +

HugeGraph

+- 🗣️ **Natural Language Querying** - Operate graph databases using natural

language (Gremlin/Cypher)

+- 🔍 **Graph-Enhanced RAG** - Leverage knowledge graphs to improve answer

accuracy (GraphRAG & Graph Agent)

+

+For detailed source code documentation, visit our

[DeepWiki](https://deepwiki.com/apache/incubator-hugegraph-ai) page (recommended).

+

+## 📋 Prerequisites

+

+> [!IMPORTANT]

+> - **Python**: 3.10+ (not tested on 3.12)

+> - **HugeGraph Server**: 1.3+ (recommended: 1.5+)

+> - **UV Package Manager**: 0.7+

+

+## 🚀 Quick Start

+

+Choose your preferred deployment method:

+

+### Option 1: Docker Compose (Recommended)

+

+The fastest way to get started with both HugeGraph Server and RAG Service:

+

+```bash

+# 1. Set up environment

+cp docker/env.template docker/.env

+# Edit docker/.env and set PROJECT_PATH to your actual project path

+

+# 2. Deploy services

+cd docker

+docker-compose -f docker-compose-network.yml up -d

+

+# 3. Verify deployment

+docker-compose -f docker-compose-network.yml ps

+

+# 4. Access services

+# HugeGraph Server: http://localhost:8080

+# RAG Service: http://localhost:8001

+```
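Once the containers are up, it can help to confirm that the two ports listed above actually accept connections. The following is a minimal sketch using only the Python standard library; the helper name `is_port_open` is hypothetical, and the ports are taken from the comments in the block above. It only checks that a TCP connection can be opened, not that the services are healthy.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved hosts.
        return False


if __name__ == "__main__":
    # Ports as documented above; adjust them if your compose file maps others.
    services = {"HugeGraph Server": 8080, "RAG Service": 8001}
    for name, port in services.items():
        status = "reachable" if is_port_open("localhost", port) else "not reachable"
        print(f"{name} (port {port}): {status}")
```

A "not reachable" result right after `up -d` may simply mean the service is still starting, so retrying after a few seconds is reasonable.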

+

+### Option 2: Individual Docker Containers

+

+For more control over individual components:

+

+#### Available Images

+- **`hugegraph/rag`** - Development image with source code access

+- **`hugegraph/rag-bin`** - Production-optimized binary (compiled with Nuitka)

+

+```bash

+# 1. Create network

+docker network create -d bridge hugegraph-net

+

+# 2. Start HugeGraph Server

+docker run -itd --name=server -p 8080:8080 --network hugegraph-net

hugegraph/hugegraph

+

+# 3. Start RAG Service

+docker pull hugegraph/rag:latest

+docker run -itd --name rag \

+ -v /path/to/your/hugegraph-llm/.env:/home/work/hugegraph-llm/.env \

+ -p 8001:8001 --network hugegraph-net hugegraph/rag

+

+# 4. Monitor logs

+docker logs -f rag

+```

+

+### Option 3: Build from Source

+

+For development and customization:

+

+```bash

+# 1. Start HugeGraph Server

+docker run -itd --name=server -p 8080:8080 hugegraph/hugegraph

+

+# 2. Install UV package manager

+curl -LsSf https://astral.sh/uv/install.sh | sh

+

+# 3. Clone and set up the project

+git clone https://github.com/apache/incubator-hugegraph-ai.git

+cd incubator-hugegraph-ai/hugegraph-llm

+

+# 4. Create virtual environment and install dependencies

+uv venv && source .venv/bin/activate

+uv pip install -e .

+

+# 5. Launch RAG demo

+python -m hugegraph_llm.demo.rag_demo.app

+# Access at: http://127.0.0.1:8001

+

+# 6. (Optional) Custom host/port

+python -m hugegraph_llm.demo.rag_demo.app --host 127.0.0.1 --port 18001

+```

+

+#### Additional Setup (Optional)

+

+```bash

+# Download NLTK stopwords for better text processing

+python ./hugegraph_llm/operators/common_op/nltk_helper.py

+

+# Update configuration files

+python -m hugegraph_llm.config.generate --update

+```

+

+> [!TIP]

+> Check our [Quick Start

Guide](https://github.com/apache/incubator-hugegraph-ai/blob/main/hugegraph-llm/quick_start.md)

for detailed usage examples and query logic explanations.

+

+## 💡 Usage Examples

+

+### Knowledge Graph Construction

+

+#### Interactive Web Interface

+

+Use the Gradio interface for visual knowledge graph building:

+

+**Input Options:**

+- **Text**: Direct text input for RAG index creation

+- **Files**: Upload TXT or DOCX files (multiple selection supported)

+

+**Schema Configuration:**

+- **Custom Schema**: JSON format following our

[template](https://github.com/apache/incubator-hugegraph-ai/blob/aff3bbe25fa91c3414947a196131be812c20ef11/hugegraph-llm/src/hugegraph_llm/config/config_data.py#L125)

+- **HugeGraph Schema**: Use existing graph instance schema (e.g., "hugegraph")

+

+

+

+#### Programmatic Construction

+

+Build knowledge graphs with code using the `KgBuilder` class:

+

+```python

+from hugegraph_llm.models.llms.init_llm import LLMs

+from hugegraph_llm.operators.kg_construction_task import KgBuilder

+

+# Initialize and chain operations

+TEXT = "Your input text here..."

+builder = KgBuilder(LLMs().get_chat_llm())

+

+(

+ builder

+ .import_schema(from_hugegraph="talent_graph").print_result()

+ .chunk_split(TEXT).print_result()

+ .extract_info(extract_type="property_graph").print_result()

+ .commit_to_hugegraph()

+ .run()

+)

+```
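The chained style above queues steps and executes them only when `run()` is called. The sketch below illustrates that general pattern with a hypothetical `MiniPipeline` class; it is not the `KgBuilder` source, and its `chunk_split` and `count_words` steps are simplified stand-ins chosen so the example runs without HugeGraph or an LLM.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Operator = Callable[[Context], Context]


class MiniPipeline:
    """Hypothetical chainable pipeline; illustrates deferred execution only."""

    def __init__(self) -> None:
        self._operators: List[Operator] = []

    def _add(self, op: Operator) -> "MiniPipeline":
        # Queue the operator and return self so calls can be chained.
        self._operators.append(op)
        return self

    def chunk_split(self, text: str) -> "MiniPipeline":
        # Simplified splitter: paragraphs are separated by blank lines.
        def op(ctx: Context) -> Context:
            chunks = [p.strip() for p in text.split("\n\n") if p.strip()]
            return {**ctx, "chunks": chunks}

        return self._add(op)

    def count_words(self) -> "MiniPipeline":
        # Stand-in for an extraction step: consumes the previous step's output.
        def op(ctx: Context) -> Context:
            return {**ctx, "word_counts": [len(c.split()) for c in ctx["chunks"]]}

        return self._add(op)

    def run(self) -> Context:
        # Nothing executes until run(); each operator receives the shared context.
        ctx: Context = {}
        for op in self._operators:
            ctx = op(ctx)
        return ctx


result = (
    MiniPipeline()
    .chunk_split("First paragraph here.\n\nSecond one.")
    .count_words()
    .run()
)
print(result["chunks"])       # ['First paragraph here.', 'Second one.']
print(result["word_counts"])  # [3, 2]
```

Passing a shared context dictionary between steps keeps each operator independent of the others, which is one common way to make such a chain easy to reorder or extend.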

+

+**Pipeline Workflow:**

+```mermaid

+graph LR

+ A[Import Schema] --> B[Chunk Split]

+ B --> C[Extract Info]

+ C --> D[Commit to HugeGraph]

+ D --> E[Execute Pipeline]

+

+ style A fill:#fff2cc

+ style B fill:#d5e8d4

+ style C fill:#dae8fc

+ style D fill:#f8cecc

+ style E fill:#e1d5e7

+```

+

+### Graph-Enhanced RAG

+

+Leverage HugeGraph for retrieval-augmented generation:

+

+```python

+from hugegraph_llm.operators.graph_rag_task import RAGPipeline

+

+# Initialize RAG pipeline

+graph_rag = RAGPipeline()

+

+# Execute RAG workflow

+(

+ graph_rag

+ .extract_keywords(text="Tell me about Al Pacino.")

+ .keywords_to_vid()

+ .query_graphdb(max_deep=2, max_graph_items=30)

+ .merge_dedup_rerank()

+ .synthesize_answer(vector_only_answer=False, graph_only_answer=True)

+ .run(verbose=True)

+)

+```

+

+**RAG Pipeline Flow:**

+```mermaid

+graph TD

+ A[User Query] --> B[Extract Keywords]

+ B --> C[Match Graph Nodes]

+ C --> D[Retrieve Graph Context]

+ D --> E[Rerank Results]

+ E --> F[Generate Answer]

+

+ style A fill:#e3f2fd

+ style B fill:#f3e5f5

+ style C fill:#e8f5e8

+ style D fill:#fff3e0

+ style E fill:#fce4ec

+ style F fill:#e0f2f1

+```
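The "Rerank Results" step orders retrieved items by how similar they are to the question. As a rough illustration of that idea, the sketch below merges two result lists, removes duplicates, and ranks by Jaccard token overlap. The function `merge_dedup_rerank_sketch` and the overlap metric are assumptions made for this example; they are not how `merge_dedup_rerank()` is implemented, and a real pipeline would more likely use embeddings or a dedicated reranker model.

```python
import re
from typing import List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def merge_dedup_rerank_sketch(
    question: str,
    graph_results: List[str],
    vector_results: List[str],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    # Merge both sources and drop exact duplicates while preserving order.
    merged = list(dict.fromkeys(graph_results + vector_results))
    question_tokens = tokenize(question)
    scored = [(item, jaccard(question_tokens, tokenize(item))) for item in merged]
    # Python's sort is stable, so ties keep their merged order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


ranked = merge_dedup_rerank_sketch(
    "Tell me about Al Pacino.",
    graph_results=[
        "Al Pacino acted in The Godfather",
        "The Godfather was released in 1972",
    ],
    vector_results=[
        "Al Pacino acted in The Godfather",
        "Robert De Niro is an actor",
    ],
)
for text, score in ranked:
    print(f"{score:.3f}  {text}")
```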

+

+## 🔧 Configuration

+

+After running the demo, configuration files are automatically generated:

+

+- **Environment**: `hugegraph-llm/.env`

+- **Prompts**:

`hugegraph-llm/src/hugegraph_llm/resources/demo/config_prompt.yaml`

+

+> [!NOTE]

+> Configuration changes are automatically saved when using the web interface.

For manual changes, simply refresh the page to load updates.

+

+**LLM Provider Support**: This project uses

[LiteLLM](https://docs.litellm.ai/docs/providers) for multi-provider LLM

support.

+

+## 📚 Additional Resources

+

+- **Graph Visualization**: Use [HugeGraph

Hubble](https://hub.docker.com/r/hugegraph/hubble) for data analysis and schema

management

+- **API Documentation**: Explore our REST API endpoints for integration

+- **Community**: Join our discussions and contribute to the project

+

+---

+

+**License**: Apache License 2.0 | **Community**: [Apache

HugeGraph](https://hugegraph.apache.org/)

diff --git a/content/en/docs/quickstart/hugegraph-ai/quick_start.md

b/content/en/docs/quickstart/hugegraph-ai/quick_start.md

index 31290e3f..58e36778 100644

--- a/content/en/docs/quickstart/hugegraph-ai/quick_start.md

+++ b/content/en/docs/quickstart/hugegraph-ai/quick_start.md

@@ -1,7 +1,7 @@

---

title: "GraphRAG UI Details"

linkTitle: "GraphRAG UI Details"

-weight: 1

+weight: 4

---

> Following the [main doc](../), this page introduces the basic UI functions and details. Updates and improvements are welcome at any time, thanks.