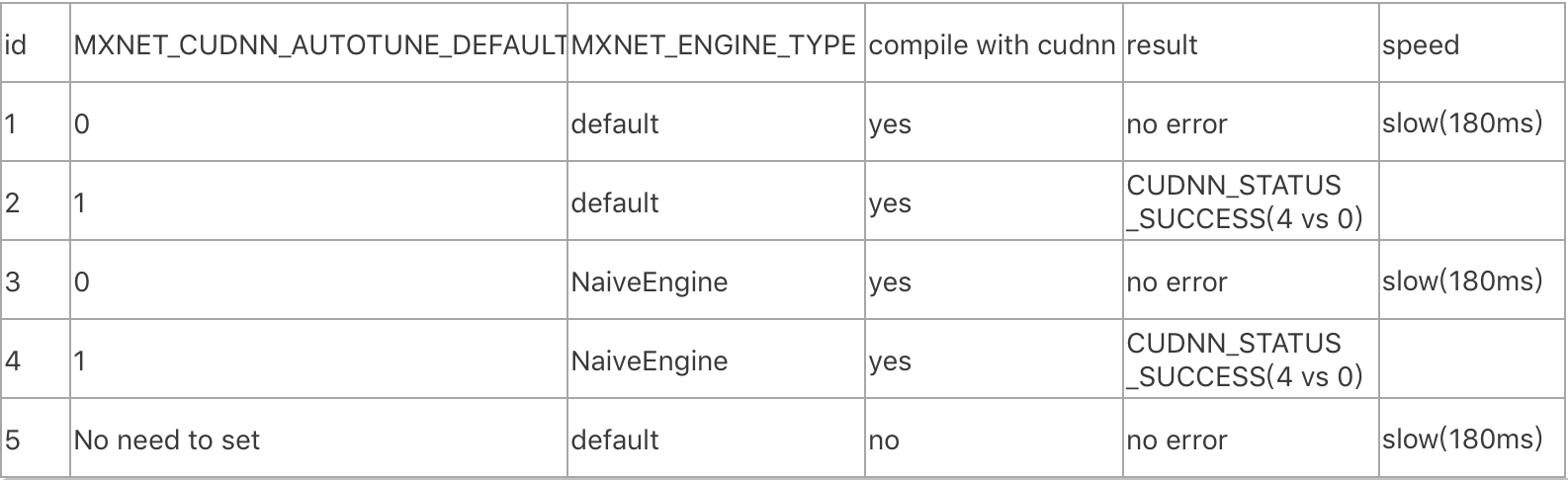

yanhn commented on issue #9612: CUDNN_STATUS_SUCCESS (4 vs. 0) cuDNN: CUDNN_STATUS_INTERNAL_ERROR on jetson TX2
URL: https://github.com/apache/incubator-mxnet/issues/9612#issuecomment-361805409

Hi @larroy, thank you for replying. As you suggested, I tried the SSD demo (`python demo.py --gpu 0`) under `XX/incubator-mxnet/example/ssd`, and I still get the same error. I also set MXNET_ENGINE_TYPE to NaiveEngine. The result is below:

nvidia@tegra-ubuntu:~/Workspace/incubator-mxnet/example/ssd$ export MXNET_ENGINE_TYPE=NaiveEngine
nvidia@tegra-ubuntu:~/Workspace/incubator-mxnet/example/ssd$ python demo.py --gpu 0
[01:25:38] src/nnvm/legacy_json_util.cc:190: Loading symbol saved by previous version v0.10.1. Attempting to upgrade...
[01:25:38] src/nnvm/legacy_json_util.cc:198: Symbol successfully upgraded!
[01:25:38] src/engine/engine.cc:55: MXNet start using engine: NaiveEngine
[01:25:43] src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:107: Running performance tests to find the best convolution algorithm, this can take a while... (setting env variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
Traceback (most recent call last):
  File "demo.py", line 155, in <module>
    ctx, len(class_names), args.nms_thresh, args.force_nms)
  File "demo.py", line 60, in get_detector
    detector = Detector(net, prefix, epoch, data_shape, mean_pixels, ctx=ctx)
  File "/home/nvidia/Workspace/incubator-mxnet/example/ssd/detect/detector.py", line 58, in __init__
    self.mod.bind(data_shapes=[('data', (batch_size, 3, data_shape[0], data_shape[1]))])
  File "/home/nvidia/Workspace/incubator-mxnet/python/mxnet/module/module.py", line 429, in bind
    state_names=self._state_names)
  File "/home/nvidia/Workspace/incubator-mxnet/python/mxnet/module/executor_group.py", line 264, in __init__
    self.bind_exec(data_shapes, label_shapes, shared_group)
  File "/home/nvidia/Workspace/incubator-mxnet/python/mxnet/module/executor_group.py", line 360, in bind_exec
    shared_group))
  File "/home/nvidia/Workspace/incubator-mxnet/python/mxnet/module/executor_group.py", line 638, in _bind_ith_exec
    shared_buffer=shared_data_arrays, **input_shapes)
  File "/home/nvidia/Workspace/incubator-mxnet/python/mxnet/symbol/symbol.py", line 1518, in simple_bind
    raise RuntimeError(error_msg)
RuntimeError: simple_bind error. Arguments:
data: (1, 3, 512, 512)
[01:25:57] src/operator/nn/./cudnn/cudnn_convolution-inl.h:628: Check failed: e == CUDNN_STATUS_SUCCESS (4 vs. 0) cuDNN: CUDNN_STATUS_INTERNAL_ERROR

Stack trace returned 10 entries:
[bt] (0) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(dmlc::StackTrace[abi:cxx11]()+0x58) [0x7f7b86e190]
[bt] (1) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x44) [0x7f7b86ec8c]
[bt] (2) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(mxnet::op::CuDNNConvolutionOp<float>::SelectAlgo(mxnet::Context const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, cudnnDataType_t, cudnnDataType_t)::{lambda(mxnet::RunContext, mxnet::engine::CallbackOnComplete)#1}::operator()(mxnet::RunContext, mxnet::engine::CallbackOnComplete) const+0x53c) [0x7f7e1daa7c]
[bt] (3) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(std::_Function_handler<void (mxnet::RunContext, mxnet::engine::CallbackOnComplete), mxnet::op::CuDNNConvolutionOp<float>::SelectAlgo(mxnet::Context const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, cudnnDataType_t, cudnnDataType_t)::{lambda(mxnet::RunContext, mxnet::engine::CallbackOnComplete)#1}>::_M_invoke(std::_Any_data const&, mxnet::RunContext&&, mxnet::engine::CallbackOnComplete&&)+0x2c) [0x7f7e1db8ec]
[bt] (4) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(mxnet::engine::NaiveEngine::PushAsync(std::function<void (mxnet::RunContext, mxnet::engine::CallbackOnComplete)>, mxnet::Context, std::vector<mxnet::engine::Var*, std::allocator<mxnet::engine::Var*> > const&, std::vector<mxnet::engine::Var*, std::allocator<mxnet::engine::Var*> > const&, mxnet::FnProperty, int, char const*)+0x3a8) [0x7f7d6a6818]
[bt] (5) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(mxnet::op::CuDNNConvolutionOp<float>::SelectAlgo(mxnet::Context const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, cudnnDataType_t, cudnnDataType_t)+0x2b8) [0x7f7e1d92f0]
[bt] (6) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(mxnet::Operator* mxnet::op::CreateOp<mshadow::gpu>(mxnet::op::ConvolutionParam, int, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> >*, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> >*, mxnet::Context)+0xa08) [0x7f7e1c8548]
[bt] (7) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(mxnet::op::ConvolutionProp::CreateOperatorEx(mxnet::Context, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> >*, std::vector<int, std::allocator<int> >*) const+0xb0) [0x7f7d1bd178]
[bt] (8) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(mxnet::op::OpPropCreateLayerOp(nnvm::NodeAttrs const&, mxnet::Context, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<int, std::allocator<int> > const&)+0x2b0) [0x7f7d2b5a20]
[bt] (9) /home/nvidia/Workspace/incubator-mxnet/python/mxnet/../../lib/libmxnet.so(std::_Function_handler<mxnet::OpStatePtr (nnvm::NodeAttrs const&, mxnet::Context, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<int, std::allocator<int> > const&), mxnet::OpStatePtr (*)(nnvm::NodeAttrs const&, mxnet::Context, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<int, std::allocator<int> > const&)>::_M_invoke(std::_Any_data const&, nnvm::NodeAttrs const&, mxnet::Context&&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<int, std::allocator<int> > const&)+0x40) [0x7f7b98df80]
[01:25:58] src/engine/naive_engine.cc:55: Engine shutdown

But when I set MXNET_CUDNN_AUTOTUNE_DEFAULT to 0, it runs without errors. However, the speed is the same as with MXNet compiled without cuDNN.
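The runs described above differ only in environment variables that MXNet reads at startup. Below is a minimal sketch of launching the demo with `MXNET_ENGINE_TYPE=NaiveEngine` and an optional `MXNET_CUDNN_AUTOTUNE_DEFAULT` override. It assumes it is run from `example/ssd` and that `demo.py` accepts `--gpu` as in the commands above. `build_env` and `run_demo` are hypothetical helper names, not part of MXNet.

```python
import os
import subprocess
import sys


def build_env(autotune=None, engine="NaiveEngine"):
    """Return a copy of the current environment with MXNet debug settings.

    autotune: None leaves MXNET_CUDNN_AUTOTUNE_DEFAULT as inherited;
              0 disables the cuDNN convolution autotuning that fails above.
    engine:   NaiveEngine runs operators synchronously, so errors surface
              at the call that caused them.
    """
    env = dict(os.environ)  # copy, so the parent process is not modified
    env["MXNET_ENGINE_TYPE"] = engine
    if autotune is not None:
        env["MXNET_CUDNN_AUTOTUNE_DEFAULT"] = str(autotune)
    return env


def run_demo(autotune=None):
    """Hypothetical wrapper: run `python demo.py --gpu 0` with the chosen settings."""
    cmd = [sys.executable, "demo.py", "--gpu", "0"]
    return subprocess.run(cmd, env=build_env(autotune)).returncode
```

For example, `run_demo()` would reproduce the autotune failure and `run_demo(0)` would mirror the configuration that runs without errors. Passing a copied environment to each child process keeps the two experiments from leaking settings into each other.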
Some test results are summarized below. My main suspicion is that I didn't build MXNet properly. Someone suggested adding `ADD_CFLAGS=-DMSHADOW_USE_SSE=0` in mshadow.mk. I don't know why, but I'll give it a try. I'll also try `pip install mxnet-jetson-tx2` to see what happens.
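A minimal sketch of the suggested build change follows. It assumes the flag is passed on the `make` command line rather than edited into `mshadow.mk`. SSE is an x86 instruction-set extension, while the Jetson TX2 CPU is 64-bit ARM (`aarch64`), which may be why the suggestion comes up for this board. `sse_cflags` and `make_command` are hypothetical helpers. `USE_CUDA`/`USE_CUDNN` are assumed to be the relevant MXNet make options for this build.

```python
import platform

# Machine names for which SSE intrinsics are available
X86_MACHINES = {"x86_64", "amd64", "i386", "i686"}


def sse_cflags(machine=None):
    """Return extra compiler flags that disable mshadow's SSE path off x86."""
    machine = (machine or platform.machine()).lower()
    if machine in X86_MACHINES:
        return ""
    return "-DMSHADOW_USE_SSE=0"


def make_command(machine=None, jobs=4):
    """Hypothetical build line; adjust the options to match your config.mk."""
    cmd = ["make", f"-j{jobs}", "USE_CUDA=1", "USE_CUDNN=1"]
    flags = sse_cflags(machine)
    if flags:
        cmd.append(f"ADD_CFLAGS={flags}")
    return cmd
```

For example, `make_command("aarch64")` returns `['make', '-j4', 'USE_CUDA=1', 'USE_CUDNN=1', 'ADD_CFLAGS=-DMSHADOW_USE_SSE=0']`, while on an x86_64 host the `ADD_CFLAGS` entry is omitted.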

---------------------------------------------------------------- This is an automated message from the Apache Git Service. To respond to the message, please log on GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services