imzs opened a new issue, #5866: URL: https://github.com/apache/rocketmq/issues/5866

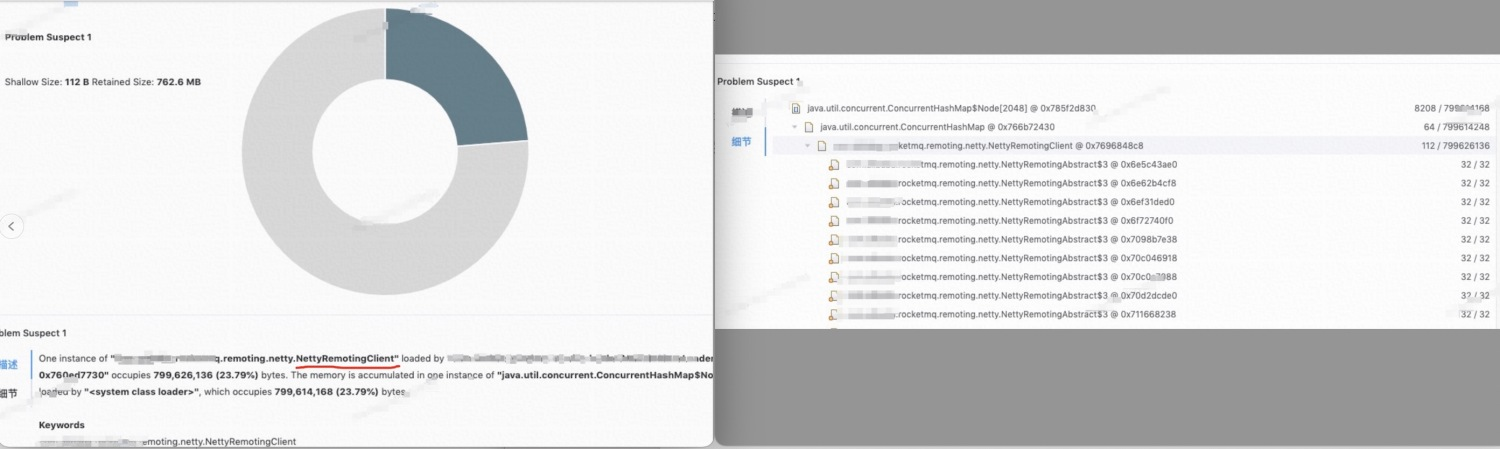

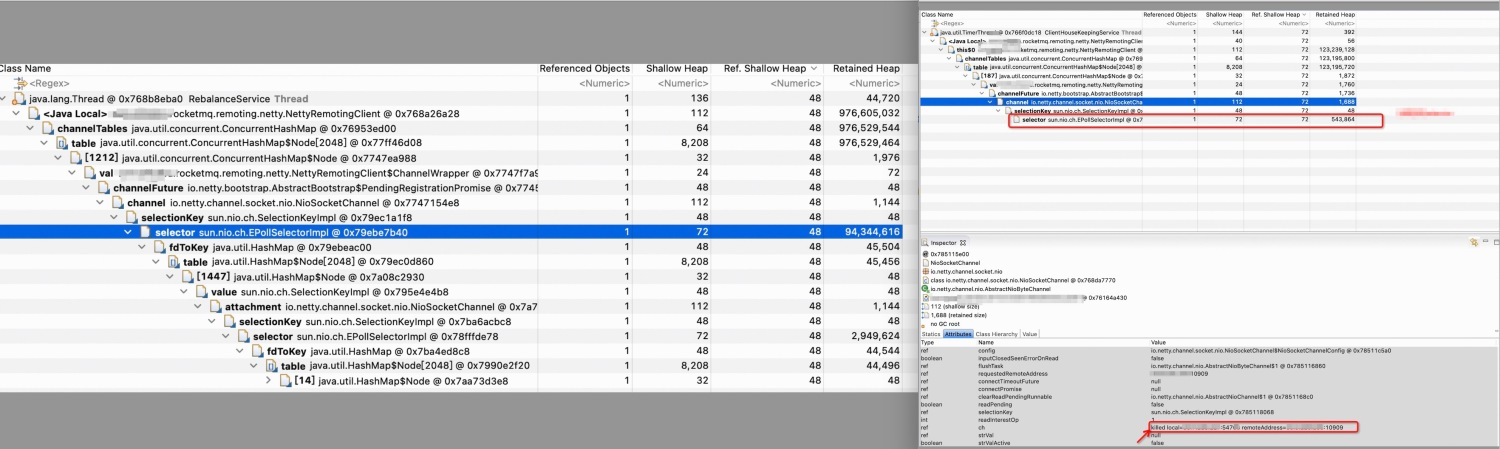

### [Abstract]

Although the server and the client both have idle connection management, we found a client-side memory leak of inactive channels associated with port 10909, and it is hard to describe it as a corner case.

### [Detail]

Here is a case in point. The screenshots above show NettyRemotingClient occupying a large share of heap memory. Normally, a singleton instance should not look like this. Analysing the heap dump with MAT, it is easy to find a memory leak in channelTables. Too many closed channels are still held in the channel table, which prevents garbage collection of the channels themselves and of related objects such as instances of ChannelWrapper and SelectionKeyImpl.

<img width="600" alt="image" src="https://user-images.githubusercontent.com/7539566/211759511-28a0349a-9718-44d1-a89b-2445bab0e3df.png" style="max-width: 100%;">

Use OQL:

`select * from sun.nio.ch.SocketChannelImpl t where t.state = 4 // ST_KILLED`

`select * from sun.nio.ch.SocketChannelImpl t where t.state = 4 and toString(t.remoteAddress).endsWith(":10909")`

The two queries return almost the same number of entries, and that number is much larger than the number of active channels (ST_CONNECTED). This indicates that the application creates many socket connections and never cleans up the resources. Server port 10909 is used as the fast remoting port. Notably, among those port-10909 TCP sockets there is one active connection and many inactive connections to the same remote broker address.

Is channel reuse not working? No, the answer is simpler: the created channel is somehow closed, the client has to create a new one, and the old one is leaked. This situation only happens when an IdleStateEvent is triggered for the channel in the broker and the broker closes the channel directly. For example, a producer connects to the broker but sends messages infrequently; by default the broker closes a channel that has not performed a read or write operation for 120 seconds.
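To make the timing concrete, here is a minimal sketch in plain Java (no Netty, no RocketMQ) that counts how many connections a client has to open when its send interval is longer than the server's idle timeout. The class and method names are hypothetical, and the model assumes the server closes a connection as soon as the idle time exceeds the timeout; real idle detection runs on timers, so exact timing will differ.

```java
public class IdleReconnectModel {

    /**
     * Counts how many connections a client opens when it sends one message
     * every sendIntervalSec seconds for runSec seconds, while the server
     * closes any connection that has been idle longer than idleTimeoutSec.
     * This is a simplified model, not RocketMQ code.
     */
    public static int connectionsOpened(int sendIntervalSec, int idleTimeoutSec, int runSec) {
        int opened = 0;
        int lastActivity = -1; // -1 means no connection has been opened yet
        for (int now = 0; now <= runSec; now += sendIntervalSec) {
            boolean closedByServer = lastActivity >= 0 && now - lastActivity > idleTimeoutSec;
            if (lastActivity < 0 || closedByServer) {
                opened++; // the client has to connect again before sending
            }
            lastActivity = now;
        }
        return opened;
    }

    public static void main(String[] args) {
        int oneDay = 24 * 60 * 60;
        // Send interval longer than the 120-second idle timeout.
        System.out.println(connectionsOpened(125, 120, oneDay));
        // Send interval shorter than the idle timeout.
        System.out.println(connectionsOpened(60, 120, oneDay));
    }
}
```

In this model, one day of sending every 125 seconds opens 692 connections, while sending every 60 seconds keeps a single connection alive. If every replaced connection stays referenced on the client, the number of dead channels grows by one per send.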
So the reproduction procedure is simple: start a local producer and send a message every 125 seconds. A full GC (FGC) eventually occurs. That is why we say this is not a corner case, although it only causes serious consequences after a long period of running.

### [Bug Fix]

The root cause is that the inactive event is an inbound event: the channel that the ChannelHandlerContext was registered with is now inactive and has reached the end of its lifetime. channelInactive(ChannelHandlerContext ctx) is invoked when a channel leaves the active state and is no longer connected to its remote peer. So the solution is to implement the channelInactive() method and close the channel on the client side.

### [Furthermore]

What about the client's channel connections to the name server? They are not affected in the same way, because the client instance updates the topic route every 30 seconds even if no messages are sent at all.
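As an illustration of the idea proposed under [Bug Fix], the following plain-Java sketch models a client that tracks the channels it creates and compares the behaviour with and without cleanup on the inactive event. Everything here is hypothetical: `FakeChannel`, `Client`, and `onInactive` are stand-ins, not Netty or RocketMQ types, and the tracking set is only an assumption standing in for the real channel table, whose structure this issue does not describe. In Netty the corresponding hook would be `channelInactive(ChannelHandlerContext ctx)`.

```java
import java.util.HashSet;
import java.util.Set;

public class InactiveCleanupModel {

    /** Hypothetical stand-in for a connection; this is not Netty's Channel. */
    static final class FakeChannel {
        private boolean open = true;
        boolean isOpen() { return open; }
        void close() { open = false; }
    }

    /** Hypothetical client that keeps a reference to every channel it creates. */
    static final class Client {
        private final Set<FakeChannel> tracked = new HashSet<>();
        private final boolean cleanUpOnInactive;

        Client(boolean cleanUpOnInactive) {
            this.cleanUpOnInactive = cleanUpOnInactive;
        }

        FakeChannel connect() {
            FakeChannel ch = new FakeChannel();
            tracked.add(ch);
            return ch;
        }

        /** Called when the remote side has dropped the connection. */
        void onInactive(FakeChannel ch) {
            if (cleanUpOnInactive) {
                ch.close();          // release the local side of the connection
                tracked.remove(ch);  // drop the reference so it can be collected
            }
            // Without the cleanup, the dead channel stays referenced.
        }

        int trackedCount() {
            return tracked.size();
        }
    }

    /** Simulates `cycles` rounds of connect followed by a remote idle close. */
    public static int trackedAfter(int cycles, boolean cleanUpOnInactive) {
        Client client = new Client(cleanUpOnInactive);
        for (int i = 0; i < cycles; i++) {
            FakeChannel ch = client.connect();
            client.onInactive(ch);
        }
        return client.trackedCount();
    }

    public static void main(String[] args) {
        System.out.println(trackedAfter(1000, false)); // no cleanup on inactive
        System.out.println(trackedAfter(1000, true));  // cleanup on inactive
    }
}
```

In this model, 1000 reconnect cycles leave 1000 dead channels referenced when the inactive event is ignored, and none when the handler closes and removes the channel.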