This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 202fe11f [SPARK-31030][SQL] Backward Compatibility for Parsing and formatting Datetime

202fe11f is described below

commit 202fe11fa1d182fb86c495cada9c9568a5be6019

Author: Yuanjian Li <[email protected]>

AuthorDate: Wed Mar 11 14:11:13 2020 +0800

[SPARK-31030][SQL] Backward Compatibility for Parsing and formatting Datetime

In Spark 2.4 and earlier, datetime parsing, formatting, and conversion use
the hybrid calendar (Julian + Gregorian). Because the Proleptic Gregorian
calendar is the de facto calendar worldwide and the one chosen by the ANSI SQL
standard, Spark 3.0 switches to it by using the Java 8 API classes (the
java.time packages, which are based on the ISO chronology). The switch was
completed in SPARK-26651.

After the switch, however, some patterns are not compatible between Java 8
and Java 7, so Spark needs its own definition of the patterns rather than
depending on the Java API.

In this PR, we achieve this by documenting the patterns and shadowing the
incompatible letters. See more details in
[SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030).

For backward compatibility.

No.

Once Spark defines its own datetime parsing and formatting patterns, the
behavior is the same as in older Spark versions.

Existing and newly added unit tests.

Locally document test:

Closes #27830 from xuanyuanking/SPARK-31030.

Authored-by: Yuanjian Li <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

(cherry picked from commit 3493162c78822de0563f7d736040aebdb81e936b)

Signed-off-by: Wenchen Fan <[email protected]>

---

R/pkg/R/functions.R | 4 +-

docs/_data/menu-sql.yaml | 2 +

docs/sql-ref-datetime-pattern.md | 220 +++++++++++++++++++++

python/pyspark/sql/functions.py | 6 +-

python/pyspark/sql/readwriter.py | 16 +-

python/pyspark/sql/streaming.py | 8 +-

.../spark/sql/catalyst/catalog/interface.scala | 2 +-

.../catalyst/expressions/datetimeExpressions.scala | 6 +-

.../spark/sql/catalyst/util/DateFormatter.scala | 4 +-

.../catalyst/util/DateTimeFormatterHelper.scala | 5 +-

.../spark/sql/catalyst/util/DateTimeUtils.scala | 62 ++++--

.../sql/catalyst/util/TimestampFormatter.scala | 4 +-

.../sql/catalyst/util/DateTimeUtilsSuite.scala | 18 +-

.../org/apache/spark/sql/DataFrameReader.scala | 8 +-

.../org/apache/spark/sql/DataFrameWriter.scala | 8 +-

.../execution/datasources/PartitioningUtils.scala | 2 +-

.../scala/org/apache/spark/sql/functions.scala | 18 +-

.../spark/sql/streaming/DataStreamReader.scala | 8 +-

.../native/stringCastAndExpressions.sql.out | 6 +-

19 files changed, 341 insertions(+), 66 deletions(-)

diff --git a/R/pkg/R/functions.R b/R/pkg/R/functions.R

index 48f69d5..89c7cbe 100644

--- a/R/pkg/R/functions.R

+++ b/R/pkg/R/functions.R

@@ -2776,7 +2776,7 @@ setMethod("format_string", signature(format =

"character", x = "Column"),

#' head(tmp)}

#' @note from_unixtime since 1.5.0

setMethod("from_unixtime", signature(x = "Column"),

- function(x, format = "uuuu-MM-dd HH:mm:ss") {

+ function(x, format = "yyyy-MM-dd HH:mm:ss") {

jc <- callJStatic("org.apache.spark.sql.functions",

"from_unixtime",

x@jc, format)

@@ -3062,7 +3062,7 @@ setMethod("unix_timestamp", signature(x = "Column",

format = "missing"),

#' @aliases unix_timestamp,Column,character-method

#' @note unix_timestamp(Column, character) since 1.5.0

setMethod("unix_timestamp", signature(x = "Column", format = "character"),

- function(x, format = "uuuu-MM-dd HH:mm:ss") {

+ function(x, format = "yyyy-MM-dd HH:mm:ss") {

jc <- callJStatic("org.apache.spark.sql.functions",

"unix_timestamp", x@jc, format)

column(jc)

})

diff --git a/docs/_data/menu-sql.yaml b/docs/_data/menu-sql.yaml

index 38a5cf6..c17bfd3 100644

--- a/docs/_data/menu-sql.yaml

+++ b/docs/_data/menu-sql.yaml

@@ -223,3 +223,5 @@

url: sql-ref-syntax-aux-resource-mgmt-list-file.html

- text: LIST JAR

url: sql-ref-syntax-aux-resource-mgmt-list-jar.html

+ - text: Datetime Pattern

+ url: sql-ref-datetime-pattern.html

diff --git a/docs/sql-ref-datetime-pattern.md b/docs/sql-ref-datetime-pattern.md

new file mode 100644

index 0000000..429d781

--- /dev/null

+++ b/docs/sql-ref-datetime-pattern.md

@@ -0,0 +1,220 @@

+---

+layout: global

+title: Datetime patterns

+displayTitle: Datetime Patterns for Formatting and Parsing

+license: |

+ Licensed to the Apache Software Foundation (ASF) under one or more

+ contributor license agreements. See the NOTICE file distributed with

+ this work for additional information regarding copyright ownership.

+ The ASF licenses this file to You under the Apache License, Version 2.0

+ (the "License"); you may not use this file except in compliance with

+ the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+---

+

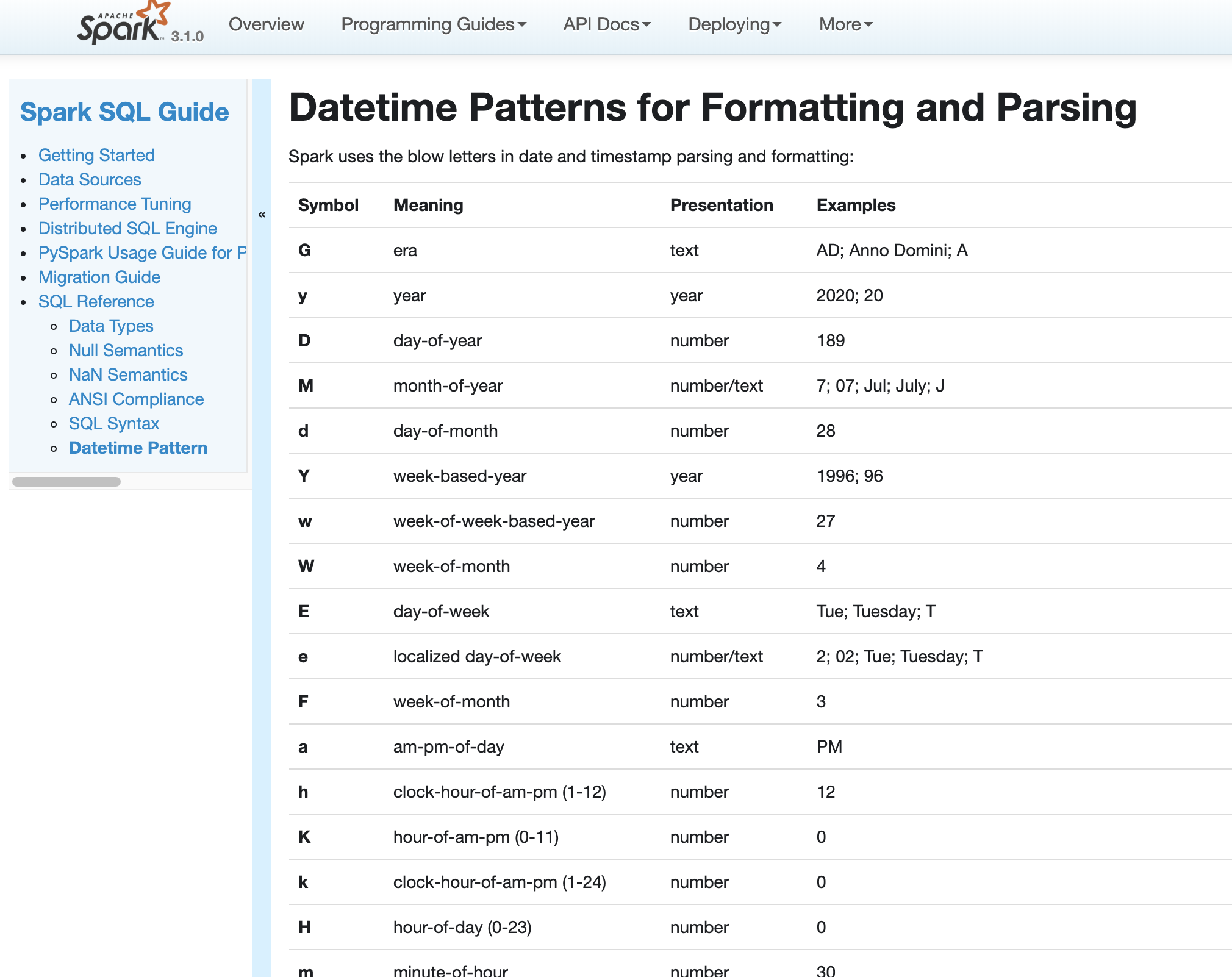

+There are several common scenarios for datetime usage in Spark:

+

+- CSV/JSON datasources use the pattern string for parsing and formatting time

content.

+

+- Datetime functions that convert strings to/from `DateType` or

`TimestampType`. For example, unix_timestamp, date_format, to_unix_timestamp,

from_unixtime, to_date, to_timestamp, from_utc_timestamp, to_utc_timestamp, etc.

+

+Spark uses the below letters in date and timestamp parsing and formatting:

+<table class="table">

+<tr>

+ <th> <b>Symbol</b> </th>

+ <th> <b>Meaning</b> </th>

+ <th> <b>Presentation</b> </th>

+ <th> <b>Examples</b> </th>

+</tr>

+<tr>

+ <td> <b>G</b> </td>

+ <td> era </td>

+ <td> text </td>

+ <td> AD; Anno Domini; A </td>

+</tr>

+<tr>

+ <td> <b>y</b> </td>

+ <td> year </td>

+ <td> year </td>

+ <td> 2020; 20 </td>

+</tr>

+<tr>

+ <td> <b>D</b> </td>

+ <td> day-of-year </td>

+ <td> number </td>

+ <td> 189 </td>

+</tr>

+<tr>

+ <td> <b>M</b> </td>

+ <td> month-of-year </td>

+ <td> number/text </td>

+ <td> 7; 07; Jul; July; J </td>

+</tr>

+<tr>

+ <td> <b>d</b> </td>

+ <td> day-of-month </td>

+ <td> number </td>

+ <td> 28 </td>

+</tr>

+<tr>

+ <td> <b>Y</b> </td>

+ <td> week-based-year </td>

+ <td> year </td>

+ <td> 1996; 96 </td>

+</tr>

+<tr>

+ <td> <b>w</b> </td>

+ <td> week-of-week-based-year </td>

+ <td> number </td>

+ <td> 27 </td>

+</tr>

+<tr>

+ <td> <b>W</b> </td>

+ <td> week-of-month </td>

+ <td> number </td>

+ <td> 4 </td>

+</tr>

+<tr>

+ <td> <b>E</b> </td>

+ <td> day-of-week </td>

+ <td> text </td>

+ <td> Tue; Tuesday; T </td>

+</tr>

+<tr>

+ <td> <b>e</b> </td>

+ <td> localized day-of-week </td>

+ <td> number/text </td>

+ <td> 2; 02; Tue; Tuesday; T </td>

+</tr>

+<tr>

+ <td> <b>F</b> </td>

+ <td> week-of-month </td>

+ <td> number </td>

+ <td> 3 </td>

+</tr>

+<tr>

+ <td> <b>a</b> </td>

+ <td> am-pm-of-day </td>

+ <td> text </td>

+ <td> PM </td>

+</tr>

+<tr>

+ <td> <b>h</b> </td>

+ <td> clock-hour-of-am-pm (1-12) </td>

+ <td> number </td>

+ <td> 12 </td>

+</tr>

+<tr>

+ <td> <b>K</b> </td>

+ <td> hour-of-am-pm (0-11) </td>

+ <td> number </td>

+ <td> 0 </td>

+</tr>

+<tr>

+ <td> <b>k</b> </td>

+ <td> clock-hour-of-day (1-24) </td>

+ <td> number </td>

+ <td> 24 </td>

+</tr>

+<tr>

+ <td> <b>H</b> </td>

+ <td> hour-of-day (0-23) </td>

+ <td> number </td>

+ <td> 0 </td>

+</tr>

+<tr>

+ <td> <b>m</b> </td>

+ <td> minute-of-hour </td>

+ <td> number </td>

+ <td> 30 </td>

+</tr>

+<tr>

+ <td> <b>s</b> </td>

+ <td> second-of-minute </td>

+ <td> number </td>

+ <td> 55 </td>

+</tr>

+<tr>

+ <td> <b>S</b> </td>

+ <td> fraction-of-second </td>

+ <td> fraction </td>

+ <td> 978 </td>

+</tr>

+<tr>

+ <td> <b>z</b> </td>

+ <td> time-zone name </td>

+ <td> zone-name </td>

+ <td> Pacific Standard Time; PST </td>

+</tr>

+<tr>

+ <td> <b>O</b> </td>

+ <td> localized zone-offset </td>

+ <td> offset-O </td>

+ <td> GMT+8; GMT+08:00; UTC-08:00; </td>

+</tr>

+<tr>

+ <td> <b>X</b> </td>

+ <td> zone-offset 'Z' for zero </td>

+ <td> offset-X </td>

+ <td> Z; -08; -0830; -08:30; -083015; -08:30:15; </td>

+</tr>

+<tr>

+ <td> <b>x</b> </td>

+ <td> zone-offset </td>

+ <td> offset-x </td>

+ <td> +0000; -08; -0830; -08:30; -083015; -08:30:15; </td>

+</tr>

+<tr>

+ <td> <b>Z</b> </td>

+ <td> zone-offset </td>

+ <td> offset-Z </td>

+ <td> +0000; -0800; -08:00; </td>

+</tr>

+<tr>

+ <td> <b>'</b> </td>

+ <td> escape for text </td>

+ <td> delimiter </td>

+ <td></td>

+</tr>

+<tr>

+ <td> <b>''</b> </td>

+ <td> single quote </td>

+ <td> literal </td>

+ <td> ' </td>

+</tr>

+</table>

+

+The count of pattern letters determines the format.

+

+- Text: The text style is determined based on the number of pattern letters

used. Less than 4 pattern letters will use the short form. Exactly 4 pattern

letters will use the full form. Exactly 5 pattern letters will use the narrow

form. Six or more letters will fail.

+

+- Number: If the count of letters is one, then the value is output using the

minimum number of digits and without padding. Otherwise, the count of digits is

used as the width of the output field, with the value zero-padded as necessary.

The following pattern letters have constraints on the count of letters. Only

one letter 'F' can be specified. Up to two letters of 'd', 'H', 'h', 'K', 'k',

'm', and 's' can be specified. Up to three letters of 'D' can be specified.

+

+- Number/Text: If the count of pattern letters is 3 or greater, use the Text

rules above. Otherwise use the Number rules above.

+

+- Fraction: Outputs the micro-of-second field as a fraction-of-second. The

micro-of-second value has six digits, thus the count of pattern letters is from

1 to 6. If it is less than 6, then the micro-of-second value is truncated, with

only the most significant digits being output.

+

+- Year: The count of letters determines the minimum field width below which

padding is used. If the count of letters is two, then a reduced two digit form

is used. For printing, this outputs the rightmost two digits. For parsing, this

will parse using the base value of 2000, resulting in a year within the range

2000 to 2099 inclusive. If the count of letters is less than four (but not

two), then the sign is only output for negative years. Otherwise, the sign is

output if the pad width is [...]

+

+- Zone names: This outputs the display name of the time-zone ID. If the count

of letters is one, two or three, then the short name is output. If the count of

letters is four, then the full name is output. Five or more letters will fail.

+

+- Offset X and x: This formats the offset based on the number of pattern

letters. One letter outputs just the hour, such as '+01', unless the minute is

non-zero in which case the minute is also output, such as '+0130'. Two letters

outputs the hour and minute, without a colon, such as '+0130'. Three letters

outputs the hour and minute, with a colon, such as '+01:30'. Four letters

outputs the hour and minute and optional second, without a colon, such as

'+013015'. Five letters outputs the [...]

+

+- Offset O: This formats the localized offset based on the number of pattern

letters. One letter outputs the short form of the localized offset, which is

localized offset text, such as 'GMT', with hour without leading zero, optional

2-digit minute and second if non-zero, and colon, for example 'GMT+8'. Four

letters outputs the full form, which is localized offset text, such as 'GMT',

with 2-digit hour and minute field, optional second field if non-zero, and

colon, for example 'GMT+08:00'. [...]

+

+- Offset Z: This formats the offset based on the number of pattern letters.

One, two or three letters outputs the hour and minute, without a colon, such as

'+0130'. The output will be '+0000' when the offset is zero. Four letters

outputs the full form of localized offset, equivalent to four letters of

Offset-O. The output will be the corresponding localized offset text if the

offset is zero. Five letters outputs the hour, minute, with optional second if

non-zero, with colon. It outputs ' [...]

+

+More details for the text style:

+

+- Short Form: Short text, typically an abbreviation. For example, day-of-week

Monday might output "Mon".

+

+- Full Form: Full text, typically the full description. For example,

day-of-week Monday might output "Monday".

+

+- Narrow Form: Narrow text, typically a single letter. For example,

day-of-week Monday might output "M".
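
The letter-count rules for the Text, Number, and Fraction presentations described in the new document can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `text_style`, `format_number`, and `format_fraction` are hypothetical and are not part of Spark or `java.time`, and the sketch ignores locale, sign handling, and the per-letter limits on the count.

```python
def text_style(count: int) -> str:
    """Map a pattern-letter count to the text style (hypothetical helper)."""
    if count < 1:
        raise ValueError("at least one pattern letter is required")
    if count < 4:
        return "short"   # e.g. "Mon"
    if count == 4:
        return "full"    # e.g. "Monday"
    if count == 5:
        return "narrow"  # e.g. "M"
    raise ValueError("six or more pattern letters are not allowed")


def format_number(value: int, count: int) -> str:
    """One letter: no padding. Otherwise zero-pad to a width of `count`."""
    if count == 1:
        return str(value)
    return str(value).zfill(count)


def format_fraction(micros: int, count: int) -> str:
    """Keep only the `count` most significant digits of micro-of-second."""
    if not 1 <= count <= 6:
        raise ValueError("the count of 'S' letters must be from 1 to 6")
    return f"{micros:06d}"[:count]


print(text_style(3), text_style(4), text_style(5))  # short full narrow
print(format_number(7, 1), format_number(7, 2))     # 7 07
print(format_fraction(978000, 3))                   # 978
```

The fraction helper truncates rather than rounds, matching the statement that only the most significant digits are output.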

diff --git a/python/pyspark/sql/functions.py b/python/pyspark/sql/functions.py

index 5bf8165..c23cc2b 100644

--- a/python/pyspark/sql/functions.py

+++ b/python/pyspark/sql/functions.py

@@ -1249,7 +1249,7 @@ def last_day(date):

@ignore_unicode_prefix

@since(1.5)

-def from_unixtime(timestamp, format="uuuu-MM-dd HH:mm:ss"):

+def from_unixtime(timestamp, format="yyyy-MM-dd HH:mm:ss"):

"""

Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC)

to a string

representing the timestamp of that moment in the current system time zone

in the given

@@ -1266,9 +1266,9 @@ def from_unixtime(timestamp, format="uuuu-MM-dd

HH:mm:ss"):

@since(1.5)

-def unix_timestamp(timestamp=None, format='uuuu-MM-dd HH:mm:ss'):

+def unix_timestamp(timestamp=None, format='yyyy-MM-dd HH:mm:ss'):

"""

- Convert time string with given pattern ('uuuu-MM-dd HH:mm:ss', by default)

+ Convert time string with given pattern ('yyyy-MM-dd HH:mm:ss', by default)

to Unix time stamp (in seconds), using the default timezone and the default

locale, return null if fail.

diff --git a/python/pyspark/sql/readwriter.py b/python/pyspark/sql/readwriter.py

index 2db2587..5904688 100644

--- a/python/pyspark/sql/readwriter.py

+++ b/python/pyspark/sql/readwriter.py

@@ -223,12 +223,12 @@ class DataFrameReader(OptionUtils):

:param dateFormat: sets the string that indicates a date format.

Custom date formats

follow the formats at

``java.time.format.DateTimeFormatter``. This

applies to date type. If None is set, it uses the

- default value, ``uuuu-MM-dd``.

+ default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

Custom date formats follow the formats at

``java.time.format.DateTimeFormatter``.

This applies to timestamp type. If None is

set, it uses the

- default value,

``uuuu-MM-dd'T'HH:mm:ss.SSSXXX``.

+ default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param multiLine: parse one record, which may span multiple lines, per

file. If None is

set, it uses the default value, ``false``.

:param allowUnquotedControlChars: allows JSON Strings to contain

unquoted control

@@ -432,12 +432,12 @@ class DataFrameReader(OptionUtils):

:param dateFormat: sets the string that indicates a date format.

Custom date formats

follow the formats at

``java.time.format.DateTimeFormatter``. This

applies to date type. If None is set, it uses the

- default value, ``uuuu-MM-dd``.

+ default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

Custom date formats follow the formats at

``java.time.format.DateTimeFormatter``.

This applies to timestamp type. If None is

set, it uses the

- default value,

``uuuu-MM-dd'T'HH:mm:ss.SSSXXX``.

+ default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param maxColumns: defines a hard limit of how many columns a record

can have. If None is

set, it uses the default value, ``20480``.

:param maxCharsPerColumn: defines the maximum number of characters

allowed for any given

@@ -852,12 +852,12 @@ class DataFrameWriter(OptionUtils):

:param dateFormat: sets the string that indicates a date format.

Custom date formats

follow the formats at

``java.time.format.DateTimeFormatter``. This

applies to date type. If None is set, it uses the

- default value, ``uuuu-MM-dd``.

+ default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

Custom date formats follow the formats at

``java.time.format.DateTimeFormatter``.

This applies to timestamp type. If None is

set, it uses the

- default value,

``uuuu-MM-dd'T'HH:mm:ss.SSSXXX``.

+ default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param encoding: specifies encoding (charset) of saved json files. If

None is set,

the default UTF-8 charset will be used.

:param lineSep: defines the line separator that should be used for

writing. If None is

@@ -957,12 +957,12 @@ class DataFrameWriter(OptionUtils):

:param dateFormat: sets the string that indicates a date format.

Custom date formats

follow the formats at

``java.time.format.DateTimeFormatter``. This

applies to date type. If None is set, it uses the

- default value, ``uuuu-MM-dd``.

+ default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

Custom date formats follow the formats at

``java.time.format.DateTimeFormatter``.

This applies to timestamp type. If None is

set, it uses the

- default value,

``uuuu-MM-dd'T'HH:mm:ss.SSSXXX``.

+ default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param ignoreLeadingWhiteSpace: a flag indicating whether or not

leading whitespaces from

values being written should be

skipped. If None is set, it

uses the default value, ``true``.

diff --git a/python/pyspark/sql/streaming.py b/python/pyspark/sql/streaming.py

index f989cb3..6a7624f 100644

--- a/python/pyspark/sql/streaming.py

+++ b/python/pyspark/sql/streaming.py

@@ -461,12 +461,12 @@ class DataStreamReader(OptionUtils):

:param dateFormat: sets the string that indicates a date format.

Custom date formats

follow the formats at

``java.time.format.DateTimeFormatter``. This

applies to date type. If None is set, it uses the

- default value, ``uuuu-MM-dd``.

+ default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

Custom date formats follow the formats at

``java.time.format.DateTimeFormatter``.

This applies to timestamp type. If None is

set, it uses the

- default value,

``uuuu-MM-dd'T'HH:mm:ss.SSSXXX``.

+ default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param multiLine: parse one record, which may span multiple lines, per

file. If None is

set, it uses the default value, ``false``.

:param allowUnquotedControlChars: allows JSON Strings to contain

unquoted control

@@ -673,12 +673,12 @@ class DataStreamReader(OptionUtils):

:param dateFormat: sets the string that indicates a date format.

Custom date formats

follow the formats at

``java.time.format.DateTimeFormatter``. This

applies to date type. If None is set, it uses the

- default value, ``uuuu-MM-dd``.

+ default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

Custom date formats follow the formats at

``java.time.format.DateTimeFormatter``.

This applies to timestamp type. If None is

set, it uses the

- default value,

``uuuu-MM-dd'T'HH:mm:ss.SSSXXX``.

+ default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param maxColumns: defines a hard limit of how many columns a record

can have. If None is

set, it uses the default value, ``20480``.

:param maxCharsPerColumn: defines the maximum number of characters

allowed for any given

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

index 81561c5..6e965ef 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

@@ -522,7 +522,7 @@ object CatalogColumnStat extends Logging {

val VERSION = 2

private def getTimestampFormatter(): TimestampFormatter = {

- TimestampFormatter(format = "uuuu-MM-dd HH:mm:ss.SSSSSS", zoneId =

ZoneOffset.UTC)

+ TimestampFormatter(format = "yyyy-MM-dd HH:mm:ss.SSSSSS", zoneId =

ZoneOffset.UTC)

}

/**

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

index d92d700..1dad440 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

@@ -671,7 +671,7 @@ case class DateFormatClass(left: Expression, right:

Expression, timeZoneId: Opti

Arguments:

* timeExp - A date/timestamp or string which is returned as a UNIX

timestamp.

* format - Date/time format pattern to follow. Ignored if `timeExp` is

not a string.

- Default value is "uuuu-MM-dd HH:mm:ss". See

`java.time.format.DateTimeFormatter`

+ Default value is "yyyy-MM-dd HH:mm:ss". See

`java.time.format.DateTimeFormatter`

for valid date and time format patterns.

""",

examples = """

@@ -705,7 +705,7 @@ case class ToUnixTimestamp(

* Converts time string with given pattern to Unix time stamp (in seconds),

returns null if fail.

* See

[https://docs.oracle.com/javase/8/docs/api/java/time/format/DateTimeFormatter.html].

* Note that hive Language Manual says it returns 0 if fail, but in fact it

returns null.

- * If the second parameter is missing, use "uuuu-MM-dd HH:mm:ss".

+ * If the second parameter is missing, use "yyyy-MM-dd HH:mm:ss".

* If no parameters provided, the first parameter will be current_timestamp.

* If the first parameter is a Date or Timestamp instead of String, we will

ignore the

* second parameter.

@@ -716,7 +716,7 @@ case class ToUnixTimestamp(

Arguments:

* timeExp - A date/timestamp or string. If not provided, this defaults

to current time.

* format - Date/time format pattern to follow. Ignored if `timeExp` is

not a string.

- Default value is "uuuu-MM-dd HH:mm:ss". See

`java.time.format.DateTimeFormatter`

+ Default value is "yyyy-MM-dd HH:mm:ss". See

`java.time.format.DateTimeFormatter`

for valid date and time format patterns.

""",

examples = """

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateFormatter.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateFormatter.scala

index caf58c1..d2e4e8b 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateFormatter.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateFormatter.scala

@@ -95,9 +95,7 @@ object DateFormatter {

val defaultLocale: Locale = Locale.US

- def defaultPattern(): String = {

- if (SQLConf.get.legacyTimeParserPolicy == LEGACY) "yyyy-MM-dd" else

"uuuu-MM-dd"

- }

+ val defaultPattern: String = "yyyy-MM-dd"

private def getFormatter(

format: Option[String],

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

index 33aa733..fab5041 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

@@ -52,10 +52,11 @@ trait DateTimeFormatterHelper {

// less synchronised.

// The Cache.get method is not used here to avoid creation of additional

instances of Callable.

protected def getOrCreateFormatter(pattern: String, locale: Locale):

DateTimeFormatter = {

- val key = (pattern, locale)

+ val newPattern = DateTimeUtils.convertIncompatiblePattern(pattern)

+ val key = (newPattern, locale)

var formatter = cache.getIfPresent(key)

if (formatter == null) {

- formatter = buildFormatter(pattern, locale)

+ formatter = buildFormatter(newPattern, locale)

cache.put(key, formatter)

}

formatter

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

index 6811bfc..2427a71 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

@@ -819,9 +819,9 @@ object DateTimeUtils {

/**

* Extracts special values from an input string ignoring case.

- * @param input - a trimmed string

- * @param zoneId - zone identifier used to get the current date.

- * @return some special value in lower case or None.

+ * @param input A trimmed string

+ * @param zoneId Zone identifier used to get the current date.

+ * @return Some special value in lower case or None.

*/

private def extractSpecialValue(input: String, zoneId: ZoneId):

Option[String] = {

def isValid(value: String, timeZoneId: String): Boolean = {

@@ -848,9 +848,9 @@ object DateTimeUtils {

/**

* Converts notational shorthands that are converted to ordinary timestamps.

- * @param input - a trimmed string

- * @param zoneId - zone identifier used to get the current date.

- * @return some of microseconds since the epoch if the conversion completed

+ * @param input A trimmed string

+ * @param zoneId Zone identifier used to get the current date.

+ * @return Some of microseconds since the epoch if the conversion completed

* successfully otherwise None.

*/

def convertSpecialTimestamp(input: String, zoneId: ZoneId):

Option[SQLTimestamp] = {

@@ -874,9 +874,9 @@ object DateTimeUtils {

/**

* Converts notational shorthands that are converted to ordinary dates.

- * @param input - a trimmed string

- * @param zoneId - zone identifier used to get the current date.

- * @return some of days since the epoch if the conversion completed

successfully otherwise None.

+ * @param input A trimmed string

+ * @param zoneId Zone identifier used to get the current date.

+ * @return Some of days since the epoch if the conversion completed

successfully otherwise None.

*/

def convertSpecialDate(input: String, zoneId: ZoneId): Option[SQLDate] = {

extractSpecialValue(input, zoneId).flatMap {

@@ -898,9 +898,9 @@ object DateTimeUtils {

/**

* Subtracts two dates.

- * @param endDate - the end date, exclusive

- * @param startDate - the start date, inclusive

- * @return an interval between two dates. The interval can be negative

+ * @param endDate The end date, exclusive

+ * @param startDate The start date, inclusive

+ * @return An interval between two dates. The interval can be negative

* if the end date is before the start date.

*/

def subtractDates(endDate: SQLDate, startDate: SQLDate): CalendarInterval = {

@@ -911,4 +911,42 @@ object DateTimeUtils {

val days = period.getDays

new CalendarInterval(months, days, 0)

}

+

+ /**

+ * In Spark 3.0, we switch to the Proleptic Gregorian calendar and use

DateTimeFormatter for

+ * parsing/formatting datetime values. The pattern string is incompatible

with the one defined

+ * by SimpleDateFormat in Spark 2.4 and earlier. This function converts all

incompatible pattern

+ * for the new parser in Spark 3.0. See more details in SPARK-31030.

+ * @param pattern The input pattern.

+ * @return The pattern for new parser

+ */

+ def convertIncompatiblePattern(pattern: String): String = {

+ val eraDesignatorContained = pattern.split("'").zipWithIndex.exists {

+ case (patternPart, index) =>

+ // Text can be quoted using single quotes, we only check the non-quote

parts.

+ index % 2 == 0 && patternPart.contains("G")

+ }

+ pattern.split("'").zipWithIndex.map {

+ case (patternPart, index) =>

+ if (index % 2 == 0) {

+ // The meaning of 'u' was day number of week in SimpleDateFormat, it

was changed to year

+ // in DateTimeFormatter. Substitute 'u' to 'e' and use

DateTimeFormatter to parse the

+ // string. If parsable, return the result; otherwise, fall back to

'u', and then use the

+ // legacy SimpleDateFormat parser to parse. When it is successfully

parsed, throw an

+ // exception and ask users to change the pattern strings or turn on

the legacy mode;

+ // otherwise, return NULL as what Spark 2.4 does.

+ val res = patternPart.replace("u", "e")

+ // In DateTimeFormatter, 'u' supports negative years. We substitute

'y' to 'u' here for

+ // keeping the support in Spark 3.0. If parse failed in Spark 3.0,

fall back to 'y'.

+ // We only do this substitution when there is no era designator

found in the pattern.

+ if (!eraDesignatorContained) {

+ res.replace("y", "u")

+ } else {

+ res

+ }

+ } else {

+ patternPart

+ }

+ }.mkString("'")

+ }

}
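
The substitution performed by `convertIncompatiblePattern` can be mirrored in a short Python sketch to make the quoting logic easier to follow. This is an illustrative port, not Spark code: the name `convert_incompatible_pattern` is hypothetical, and Python's `str.split` keeps trailing empty strings whereas Java's `String.split` drops them, so results may differ from the Scala version for patterns that end with a single quote.

```python
def convert_incompatible_pattern(pattern: str) -> str:
    """Illustrative port of the 'u' -> 'e' and 'y' -> 'u' substitution."""
    parts = pattern.split("'")
    # Even-indexed parts lie outside single quotes; odd-indexed parts are
    # quoted literal text and must be left untouched.
    era_designator_contained = any(
        index % 2 == 0 and "G" in part for index, part in enumerate(parts)
    )
    converted = []
    for index, part in enumerate(parts):
        if index % 2 == 0:
            # 'u' meant day number of week in SimpleDateFormat.
            res = part.replace("u", "e")
            # Map 'y' to 'u' only when no era designator is present.
            if not era_designator_contained:
                res = res.replace("y", "u")
            converted.append(res)
        else:
            converted.append(part)
    return "'".join(converted)


print(convert_incompatible_pattern("MM-DD-u"))
# MM-DD-e
print(convert_incompatible_pattern("yyyy-MM-dd-u'T'HH:mm:ss.SSSz"))
# uuuu-MM-dd-e'T'HH:mm:ss.SSSz
print(convert_incompatible_pattern("yyyy-MM-dd'T'HH:mm:ss.SSSz G"))
# yyyy-MM-dd'T'HH:mm:ss.SSSz G
```

The order of the two replacements matters: replacing `u` with `e` first ensures that the `u` letters introduced by the `y` substitution are not rewritten again.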

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/TimestampFormatter.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/TimestampFormatter.scala

index 866f81b..99139ca 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/TimestampFormatter.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/TimestampFormatter.scala

@@ -87,7 +87,7 @@ class Iso8601TimestampFormatter(

}

/**

- * The formatter parses/formats timestamps according to the pattern

`uuuu-MM-dd HH:mm:ss.[..fff..]`

+ * The formatter parses/formats timestamps according to the pattern

`yyyy-MM-dd HH:mm:ss.[..fff..]`

* where `[..fff..]` is a fraction of second up to microsecond resolution. The

formatter does not

* output trailing zeros in the fraction. For example, the timestamp

`2019-03-05 15:00:01.123400` is

* formatted as the string `2019-03-05 15:00:01.1234`.

@@ -193,7 +193,7 @@ object TimestampFormatter {

val defaultLocale: Locale = Locale.US

- def defaultPattern(): String = s"${DateFormatter.defaultPattern()} HH:mm:ss"

+ def defaultPattern(): String = s"${DateFormatter.defaultPattern} HH:mm:ss"

private def getFormatter(

format: Option[String],

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

index 7685b62..d98cd0a 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

@@ -89,7 +89,7 @@ class DateTimeUtilsSuite extends SparkFunSuite with Matchers

with SQLHelper {

test("SPARK-6785: java date conversion before and after epoch") {

def format(d: Date): String = {

- TimestampFormatter("uuuu-MM-dd",

defaultTimeZone().toZoneId).format(fromMillis(d.getTime))

+ TimestampFormatter("yyyy-MM-dd",

defaultTimeZone().toZoneId).format(fromMillis(d.getTime))

}

def checkFromToJavaDate(d1: Date): Unit = {

val d2 = toJavaDate(fromJavaDate(d1))

@@ -667,4 +667,20 @@ class DateTimeUtilsSuite extends SparkFunSuite with

Matchers with SQLHelper {

assert(toDate("tomorrow CET ", zoneId).get === today + 1)

}

}

+

+ test("check incompatible pattern") {

+ assert(convertIncompatiblePattern("MM-DD-u") === "MM-DD-e")

+ assert(convertIncompatiblePattern("yyyy-MM-dd'T'HH:mm:ss.SSSz")

+ === "uuuu-MM-dd'T'HH:mm:ss.SSSz")

+ assert(convertIncompatiblePattern("yyyy-MM'y contains in quoted

text'HH:mm:ss")

+ === "uuuu-MM'y contains in quoted text'HH:mm:ss")

+ assert(convertIncompatiblePattern("yyyy-MM-dd-u'T'HH:mm:ss.SSSz")

+ === "uuuu-MM-dd-e'T'HH:mm:ss.SSSz")

+ assert(convertIncompatiblePattern("yyyy-MM'u contains in quoted

text'HH:mm:ss")

+ === "uuuu-MM'u contains in quoted text'HH:mm:ss")

+ assert(convertIncompatiblePattern("yyyy-MM'u contains in quoted

text'''''HH:mm:ss")

+ === "uuuu-MM'u contains in quoted text'''''HH:mm:ss")

+ assert(convertIncompatiblePattern("yyyy-MM-dd'T'HH:mm:ss.SSSz G")

+ === "yyyy-MM-dd'T'HH:mm:ss.SSSz G")

+ }

}

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

index 6cce720..9416126 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

@@ -390,10 +390,10 @@ class DataFrameReader private[sql](sparkSession: SparkSession) extends Logging {

* <li>`columnNameOfCorruptRecord` (default is the value specified in

* `spark.sql.columnNameOfCorruptRecord`): allows renaming the new field having malformed string

* created by `PERMISSIVE` mode. This overrides `spark.sql.columnNameOfCorruptRecord`.</li>

- * <li>`dateFormat` (default `uuuu-MM-dd`): sets the string that indicates a date format.

+ * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

* Custom date formats follow the formats at `java.time.format.DateTimeFormatter`.

* This applies to date type.</li>

- * <li>`timestampFormat` (default `uuuu-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

+ * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

* indicates a timestamp format. Custom date formats follow the formats at

* `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

* <li>`multiLine` (default `false`): parse one record, which may span multiple lines,

@@ -615,10 +615,10 @@ class DataFrameReader private[sql](sparkSession: SparkSession) extends Logging {

* value.</li>

* <li>`negativeInf` (default `-Inf`): sets the string representation of a negative infinity

* value.</li>

- * <li>`dateFormat` (default `uuuu-MM-dd`): sets the string that indicates a date format.

+ * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

* Custom date formats follow the formats at `java.time.format.DateTimeFormatter`.

* This applies to date type.</li>

- * <li>`timestampFormat` (default `uuuu-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

+ * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

* indicates a timestamp format. Custom date formats follow the formats at

* `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

* <li>`maxColumns` (default `20480`): defines a hard limit of how many columns

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

index fff1f4b..22b26ca 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

@@ -748,10 +748,10 @@ final class DataFrameWriter[T] private[sql](ds: Dataset[T]) {

* <li>`compression` (default `null`): compression codec to use when saving to file. This can be

* one of the known case-insensitive shorten names (`none`, `bzip2`, `gzip`, `lz4`,

* `snappy` and `deflate`). </li>

- * <li>`dateFormat` (default `uuuu-MM-dd`): sets the string that indicates a date format.

+ * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

* Custom date formats follow the formats at `java.time.format.DateTimeFormatter`.

* This applies to date type.</li>

- * <li>`timestampFormat` (default `uuuu-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

+ * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

* indicates a timestamp format. Custom date formats follow the formats at

* `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

* <li>`encoding` (by default it is not set): specifies encoding (charset) of saved json

@@ -869,10 +869,10 @@ final class DataFrameWriter[T] private[sql](ds: Dataset[T]) {

* <li>`compression` (default `null`): compression codec to use when saving to file. This can be

* one of the known case-insensitive shorten names (`none`, `bzip2`, `gzip`, `lz4`,

* `snappy` and `deflate`). </li>

- * <li>`dateFormat` (default `uuuu-MM-dd`): sets the string that indicates a date format.

+ * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

* Custom date formats follow the formats at `java.time.format.DateTimeFormatter`.

* This applies to date type.</li>

- * <li>`timestampFormat` (default `uuuu-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

+ * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

* indicates a timestamp format. Custom date formats follow the formats at

* `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

* <li>`ignoreLeadingWhiteSpace` (default `true`): a flag indicating whether or not leading

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/PartitioningUtils.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/PartitioningUtils.scala

index fdad43b2..b0ec24e 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/PartitioningUtils.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/PartitioningUtils.scala

@@ -60,7 +60,7 @@ object PartitionSpec {

object PartitioningUtils {

- val timestampPartitionPattern = "uuuu-MM-dd HH:mm:ss[.S]"

+ val timestampPartitionPattern = "yyyy-MM-dd HH:mm:ss[.S]"

private[datasources] case class PartitionValues(columnNames: Seq[String], literals: Seq[Literal])

{

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

index 7a58957..f280ec3 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

@@ -2635,8 +2635,8 @@ object functions {

* See [[java.time.format.DateTimeFormatter]] for valid date and time format patterns

*

* @param dateExpr A date, timestamp or string. If a string, the data must be in a format that

- * can be cast to a timestamp, such as `uuuu-MM-dd` or `uuuu-MM-dd HH:mm:ss.SSSS`

- * @param format A pattern `dd.MM.uuuu` would return a string like `18.03.1993`

+ * can be cast to a timestamp, such as `yyyy-MM-dd` or `yyyy-MM-dd HH:mm:ss.SSSS`

+ * @param format A pattern `dd.MM.yyyy` would return a string like `18.03.1993`

* @return A string, or null if `dateExpr` was a string that could not be cast to a timestamp

* @note Use specialized functions like [[year]] whenever possible as they benefit from a

* specialized implementation.

@@ -2873,7 +2873,7 @@ object functions {

/**

* Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a string

* representing the timestamp of that moment in the current system time zone in the

- * uuuu-MM-dd HH:mm:ss format.

+ * yyyy-MM-dd HH:mm:ss format.

*

* @param ut A number of a type that is castable to a long, such as string or integer. Can be

* negative for timestamps before the unix epoch

@@ -2918,11 +2918,11 @@ object functions {

}

/**

- * Converts time string in format uuuu-MM-dd HH:mm:ss to Unix timestamp (in seconds),

+ * Converts time string in format yyyy-MM-dd HH:mm:ss to Unix timestamp (in seconds),

* using the default timezone and the default locale.

*

* @param s A date, timestamp or string. If a string, the data must be in the

- * `uuuu-MM-dd HH:mm:ss` format

+ * `yyyy-MM-dd HH:mm:ss` format

* @return A long, or null if the input was a string not of the correct format

* @group datetime_funcs

* @since 1.5.0

@@ -2937,7 +2937,7 @@ object functions {

* See [[java.time.format.DateTimeFormatter]] for valid date and time format patterns

*

* @param s A date, timestamp or string. If a string, the data must be in a format that can be

- * cast to a date, such as `uuuu-MM-dd` or `uuuu-MM-dd HH:mm:ss.SSSS`

+ * cast to a date, such as `yyyy-MM-dd` or `yyyy-MM-dd HH:mm:ss.SSSS`

* @param p A date time pattern detailing the format of `s` when `s` is a string

* @return A long, or null if `s` was a string that could not be cast to a date or `p` was

* an invalid format

@@ -2950,7 +2950,7 @@ object functions {

* Converts to a timestamp by casting rules to `TimestampType`.

*

* @param s A date, timestamp or string. If a string, the data must be in a format that can be

- * cast to a timestamp, such as `uuuu-MM-dd` or `uuuu-MM-dd HH:mm:ss.SSSS`

+ * cast to a timestamp, such as `yyyy-MM-dd` or `yyyy-MM-dd HH:mm:ss.SSSS`

* @return A timestamp, or null if the input was a string that could not be cast to a timestamp

* @group datetime_funcs

* @since 2.2.0

@@ -2965,7 +2965,7 @@ object functions {

* See [[java.time.format.DateTimeFormatter]] for valid date and time format patterns

*

* @param s A date, timestamp or string. If a string, the data must be in a format that can be

- * cast to a timestamp, such as `uuuu-MM-dd` or `uuuu-MM-dd HH:mm:ss.SSSS`

+ * cast to a timestamp, such as `yyyy-MM-dd` or `yyyy-MM-dd HH:mm:ss.SSSS`

* @param fmt A date time pattern detailing the format of `s` when `s` is a string

* @return A timestamp, or null if `s` was a string that could not be cast to a timestamp or

* `fmt` was an invalid format

@@ -2990,7 +2990,7 @@ object functions {

* See [[java.time.format.DateTimeFormatter]] for valid date and time format patterns

*

* @param e A date, timestamp or string. If a string, the data must be in a format that can be

- * cast to a date, such as `uuuu-MM-dd` or `uuuu-MM-dd HH:mm:ss.SSSS`

+ * cast to a date, such as `yyyy-MM-dd` or `yyyy-MM-dd HH:mm:ss.SSSS`

* @param fmt A date time pattern detailing the format of `e` when `e`is a string

* @return A date, or null if `e` was a string that could not be cast to a date or `fmt` was an

* invalid format

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

index 0eb4776..6848be1 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

@@ -252,10 +252,10 @@ final class DataStreamReader private[sql](sparkSession: SparkSession) extends Lo

* <li>`columnNameOfCorruptRecord` (default is the value specified in

* `spark.sql.columnNameOfCorruptRecord`): allows renaming the new field having malformed string

* created by `PERMISSIVE` mode. This overrides `spark.sql.columnNameOfCorruptRecord`.</li>

- * <li>`dateFormat` (default `uuuu-MM-dd`): sets the string that indicates a date format.

+ * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

* Custom date formats follow the formats at `java.time.format.DateTimeFormatter`.

* This applies to date type.</li>

- * <li>`timestampFormat` (default `uuuu-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

+ * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

* indicates a timestamp format. Custom date formats follow the formats at

* `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

* <li>`multiLine` (default `false`): parse one record, which may span multiple lines,

@@ -318,10 +318,10 @@ final class DataStreamReader private[sql](sparkSession: SparkSession) extends Lo

* value.</li>

* <li>`negativeInf` (default `-Inf`): sets the string representation of a negative infinity

* value.</li>

- * <li>`dateFormat` (default `uuuu-MM-dd`): sets the string that indicates a date format.

+ * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

* Custom date formats follow the formats at `java.time.format.DateTimeFormatter`.

* This applies to date type.</li>

- * <li>`timestampFormat` (default `uuuu-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

+ * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the string that

* indicates a timestamp format. Custom date formats follow the formats at

* `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

* <li>`maxColumns` (default `20480`): defines a hard limit of how many columns

diff --git a/sql/core/src/test/resources/sql-tests/results/typeCoercion/native/stringCastAndExpressions.sql.out b/sql/core/src/test/resources/sql-tests/results/typeCoercion/native/stringCastAndExpressions.sql.out

index 7b419c6..5c56eff 100644

--- a/sql/core/src/test/resources/sql-tests/results/typeCoercion/native/stringCastAndExpressions.sql.out

+++ b/sql/core/src/test/resources/sql-tests/results/typeCoercion/native/stringCastAndExpressions.sql.out

@@ -144,7 +144,7 @@ NULL

-- !query

select to_unix_timestamp(a) from t

-- !query schema

-struct<to_unix_timestamp(a, uuuu-MM-dd HH:mm:ss):bigint>

+struct<to_unix_timestamp(a, yyyy-MM-dd HH:mm:ss):bigint>

-- !query output

NULL

@@ -160,7 +160,7 @@ NULL

-- !query

select unix_timestamp(a) from t

-- !query schema

-struct<unix_timestamp(a, uuuu-MM-dd HH:mm:ss):bigint>

+struct<unix_timestamp(a, yyyy-MM-dd HH:mm:ss):bigint>

-- !query output

NULL

@@ -176,7 +176,7 @@ NULL

-- !query

select from_unixtime(a) from t

-- !query schema

-struct<from_unixtime(CAST(a AS BIGINT), uuuu-MM-dd HH:mm:ss):string>

+struct<from_unixtime(CAST(a AS BIGINT), yyyy-MM-dd HH:mm:ss):string>

-- !query output

NULL
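
Note on the `check incompatible pattern` test in DateTimeUtilsSuite above: its assertions imply a rewriting rule for user-facing patterns. Outside single-quoted literal text, `u` (day number of week in `SimpleDateFormat`) becomes `e`, and `y` becomes `u` unless the pattern contains the era designator `G`. The following is only a minimal sketch that satisfies those assertions, not the implementation from this commit. The object name `PatternSketch` and the character-by-character quote tracking are assumptions made for illustration.

```scala
object PatternSketch {
  /**
   * Hypothetical re-implementation of the behavior asserted by the test:
   * rewrites pattern letters outside single-quoted literal text only.
   */
  def convertIncompatiblePattern(pattern: String): String = {
    // First pass: look for an era designator 'G' outside quoted text.
    var inQuote = false
    var hasEra = false
    pattern.foreach { c =>
      if (c == '\'') inQuote = !inQuote
      else if (!inQuote && c == 'G') hasEra = true
    }

    // Second pass: copy the pattern, substituting letters outside quotes.
    // Toggling on every quote also handles the escaped quote '' correctly,
    // because two toggles restore the previous state.
    inQuote = false
    val sb = new StringBuilder
    pattern.foreach { c =>
      if (c == '\'') {
        inQuote = !inQuote
        sb.append(c)
      } else if (inQuote) {
        sb.append(c)
      } else c match {
        case 'u' => sb.append('e')            // old day-of-week letter
        case 'y' if !hasEra => sb.append('u') // year letter, kept when 'G' is present
        case other => sb.append(other)
      }
    }
    sb.toString
  }

  def main(args: Array[String]): Unit = {
    println(convertIncompatiblePattern("MM-DD-u"))
    println(convertIncompatiblePattern("yyyy-MM-dd'T'HH:mm:ss.SSSz G"))
  }
}
```

Scanning one character at a time, rather than splitting the pattern on quotes, is a design choice of this sketch: it preserves trailing quotes and keeps escaped quotes intact.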
