This is an automated email from the ASF dual-hosted git repository.

yikun pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark-docker.git

The following commit(s) were added to refs/heads/master by this push:

new f488d73 [SPARK-40519] Add "Publish" workflow to help release apache/spark image

f488d73 is described below

commit f488d732d254caa78c1e1a2ef74958e6c867dad6

Author: Yikun Jiang <[email protected]>

AuthorDate: Tue Nov 15 21:32:30 2022 +0800

[SPARK-40519] Add "Publish" workflow to help release apache/spark image

### What changes were proposed in this pull request?

The publish workflow consists of three steps:

1. Build the image locally.

2. Run the related tests (K8s test / Standalone test) against the image built in step 1.

3. Once all tests pass, publish the image to `ghcr` (which may help with RC testing) or `dockerhub`.

Publishing all images takes about 30-40 minutes.

Add "Publish" workflow to help release apache/spark image.
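The build, test, publish ordering described above can be modeled in a few lines of Python. This is only an illustrative sketch, not part of the commit: `run_release` and the `build`, `tests` and `push` callables are hypothetical stand-ins for workflow steps. In the real workflow the same gating comes from steps stopping on failure and from `if: ${{ inputs.publish }}` on the publish steps.

```python
from typing import Callable, List


def run_release(
    build: Callable[[], str],
    tests: List[Callable[[str], bool]],
    push: Callable[[str], None],
    publish: bool,
) -> bool:
    """Model the build -> test -> publish flow; return True if the image was pushed."""
    # Step 1: build the local image (here, just obtain an image reference).
    image = build()
    # Step 2: run every test (e.g. K8s, standalone) against that same image.
    # As with failing workflow steps, the first failure stops the run.
    for test in tests:
        if not test(image):
            return False
    # Step 3: push only when publishing was requested
    # (mirrors `if: ${{ inputs.publish }}` on the publish steps).
    if publish:
        push(image)
        return True
    return False


# Usage: record pushes in a list instead of talking to a registry.
pushed: List[str] = []
run_release(
    lambda: "spark:test",
    [lambda img: True, lambda img: True],
    pushed.append,
    publish=True,
)
print(pushed)  # ['spark:test']
```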

### Why are the changes needed?

It enables one-click creation of the `apache/spark` image.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

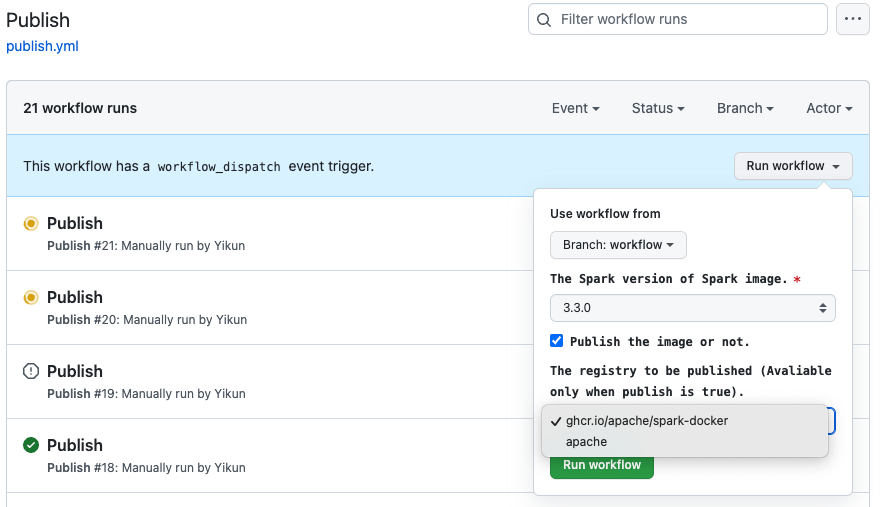

1. Set the default branch in my fork repo.

2. Run the workflow manually:

https://github.com/Yikun/spark-docker/actions/workflows/publish.yml?query=is%3Asuccess

Closes #23 from Yikun/workflow.

Authored-by: Yikun Jiang <[email protected]>

Signed-off-by: Yikun Jiang <[email protected]>

---

.github/workflows/main.yml | 43 +++++++++++++++++++++++

.github/workflows/publish.yml | 66 ++++++++++++++++++++++++++++++++++

tools/manifest.py | 82 +++++++++++++++++++++++++++++++++++++++++++

versions.json | 64 +++++++++++++++++++++++++++++++++

4 files changed, 255 insertions(+)

diff --git a/.github/workflows/main.yml b/.github/workflows/main.yml

index accf8ae..dfb99e9 100644

--- a/.github/workflows/main.yml

+++ b/.github/workflows/main.yml

@@ -37,6 +37,16 @@ on:

required: true

type: string

default: 11

+ publish:

+ description: Publish the image or not.

+ required: false

+ type: boolean

+ default: false

+ repository:

+ description: The registry to be published (Available only when publish is selected).

+ required: false

+ type: string

+ default: ghcr.io/apache/spark-docker

jobs:

main:

@@ -83,6 +93,9 @@ jobs:

UNIQUE_IMAGE_TAG=${{ matrix.spark_version }}-$TAG

IMAGE_URL=$TEST_REPO/$IMAGE_NAME:$UNIQUE_IMAGE_TAG

+ PUBLISH_REPO=${{ inputs.repository }}

+ PUBLISH_IMAGE_URL=`tools/manifest.py tags -i ${PUBLISH_REPO}/${IMAGE_NAME} -p ${{ matrix.spark_version }}/${TAG}`

+

# Unique image tag in each version: 3.3.0-scala2.12-java11-python3-ubuntu

echo "UNIQUE_IMAGE_TAG=${UNIQUE_IMAGE_TAG}" >> $GITHUB_ENV

# Test repo: ghcr.io/apache/spark-docker

@@ -94,6 +107,9 @@ jobs:

# Image URL: ghcr.io/apache/spark-docker/spark:3.3.0-scala2.12-java11-python3-ubuntu

echo "IMAGE_URL=${IMAGE_URL}" >> $GITHUB_ENV

+ echo "PUBLISH_REPO=${PUBLISH_REPO}" >> $GITHUB_ENV

+ echo "PUBLISH_IMAGE_URL=${PUBLISH_IMAGE_URL}" >> $GITHUB_ENV

+

- name: Print Image tags

run: |

echo "UNIQUE_IMAGE_TAG: "${UNIQUE_IMAGE_TAG}

@@ -102,6 +118,9 @@ jobs:

echo "IMAGE_PATH: "${IMAGE_PATH}

echo "IMAGE_URL: "${IMAGE_URL}

+ echo "PUBLISH_REPO:"${PUBLISH_REPO}

+ echo "PUBLISH_IMAGE_URL:"${PUBLISH_IMAGE_URL}

+

- name: Build and push test image

uses: docker/build-push-action@v2

with:

@@ -221,3 +240,27 @@ jobs:

with:

name: spark-on-kubernetes-it-log

path: "**/target/integration-tests.log"

+

+ - name: Publish - Login to GitHub Container Registry

+ if: ${{ inputs.publish }}

+ uses: docker/login-action@v2

+ with:

+ registry: ghcr.io

+ username: ${{ github.actor }}

+ password: ${{ secrets.GITHUB_TOKEN }}

+

+ - name: Publish - Login to Dockerhub Registry

+ if: ${{ inputs.publish }}

+ uses: docker/login-action@v2

+ with:

+ username: ${{ secrets.DOCKERHUB_USER }}

+ password: ${{ secrets.DOCKERHUB_TOKEN }}

+

+ - name: Publish - Push Image

+ if: ${{ inputs.publish }}

+ uses: docker/build-push-action@v2

+ with:

+ context: ${{ env.IMAGE_PATH }}

+ push: true

+ tags: ${{ env.PUBLISH_IMAGE_URL }}

+ platforms: linux/amd64,linux/arm64

diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

new file mode 100644

index 0000000..a44153b

--- /dev/null

+++ b/.github/workflows/publish.yml

@@ -0,0 +1,66 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+name: "Publish"

+

+on:

+ workflow_dispatch:

+ inputs:

+ spark:

+ description: 'The Spark version of Spark image.'

+ required: true

+ default: '3.3.0'

+ type: choice

+ options:

+ - 3.3.0

+ - 3.3.1

+ publish:

+ description: 'Publish the image or not.'

+ default: false

+ type: boolean

+ required: true

+ repository:

+ description: The registry to be published (Available only when publish is true).

+ required: false

+ default: ghcr.io/apache/spark-docker

+ type: choice

+ options:

+ # GHCR: This requires write permission on apache/spark-docker (Spark Committer)

+ - ghcr.io/apache/spark-docker

+ # Dockerhub: This requires DOCKERHUB_TOKEN and DOCKERHUB_USER (Spark Committer)

+ - apache

+

+jobs:

+ run-build:

+ # if: startsWith(inputs.spark, '3.3')

+ strategy:

+ matrix:

+ scala: [2.12]

+ java: [11]

+ permissions:

+ packages: write

+ name: Run

+ secrets: inherit

+ uses: ./.github/workflows/main.yml

+ with:

+ spark: ${{ inputs.spark }}

+ scala: ${{ matrix.scala }}

+ java: ${{ matrix.java }}

+ publish: ${{ inputs.publish }}

+ repository: ${{ inputs.repository }}
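GitHub Actions expands `strategy.matrix` into the Cartesian product of its value lists and runs the called workflow once per combination. A rough Python model of that expansion follows; `expand_matrix` is a hypothetical helper, matrix values are written as strings for simplicity, and `include`/`exclude` handling is deliberately ignored.

```python
from itertools import product
from typing import Dict, List


def expand_matrix(matrix: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Return one dict per job: the Cartesian product of the matrix values."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[k] for k in keys))]


# The Publish workflow's matrix (scala: [2.12], java: [11]) yields a single
# call of main.yml; spark/publish/repository come from the dispatch inputs.
jobs = expand_matrix({"scala": ["2.12"], "java": ["11"]})
print(jobs)  # [{'scala': '2.12', 'java': '11'}]
```

Adding more values to either list would multiply the number of reusable-workflow runs accordingly.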

diff --git a/tools/manifest.py b/tools/manifest.py

new file mode 100755

index 0000000..fbfad6f

--- /dev/null

+++ b/tools/manifest.py

@@ -0,0 +1,82 @@

+#!/usr/bin/env python3

+

+#

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+from argparse import ArgumentParser

+import json

+from statistics import mode

+

+

+def parse_opts():

+ parser = ArgumentParser(prog="manifest.py")

+

+ parser.add_argument(

+ dest="mode",

+ choices=["tags"],

+ type=str,

+ help="The print mode of script",

+ )

+

+ parser.add_argument(

+ "-p",

+ "--path",

+ type=str,

+ help="The path to specific dockerfile",

+ )

+

+ parser.add_argument(

+ "-i",

+ "--image",

+ type=str,

+ help="The complete image registry url (such as `apache/spark`)",

+ )

+

+ parser.add_argument(

+ "-f",

+ "--file",

+ type=str,

+ default="versions.json",

+ help="The version json of image meta.",

+ )

+

+ args, unknown = parser.parse_known_args()

+ if unknown:

+ parser.error("Unsupported arguments: %s" % " ".join(unknown))

+ return args

+

+

+def main():

+ opts = parse_opts()

+ filepath = opts.path

+ image = opts.image

+ mode = opts.mode

+ version_file = opts.file

+

+ if mode == "tags":

+ tags = []

+ with open(version_file, "r") as f:

+ versions = json.load(f).get("versions")

+ # Filter the specific dockerfile

+ versions = list(filter(lambda x: x.get("path") == filepath, versions))

+ # Get matched version's tags

+ tags = versions[0]["tags"] if versions else []

+ print(",".join(["%s:%s" % (image, t) for t in tags]))

+

+

+if __name__ == "__main__":

+ main()
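The `tags` mode boils down to a lookup from a Dockerfile path to its tag list, joined into the comma-separated string that the workflow passes to `docker/build-push-action` as `tags`. The sketch below reproduces that logic without touching the filesystem; `image_tags` is a hypothetical helper name (not part of the script), and it uses an inline excerpt of `versions.json`.

```python
import json

# Excerpt of versions.json, inlined so the sketch runs without the repository.
VERSIONS_JSON = """
{
  "versions": [
    {
      "path": "3.3.1/scala2.12-java11-r-ubuntu",
      "tags": ["3.3.1-scala2.12-java11-r-ubuntu", "3.3.1-r", "r"]
    }
  ]
}
"""


def image_tags(versions_doc: str, image: str, path: str) -> str:
    """Mirror manifest.py's `tags` mode: full image refs for one Dockerfile path."""
    versions = json.loads(versions_doc).get("versions", [])
    # Keep only the entry whose "path" matches the requested Dockerfile directory.
    matched = [v for v in versions if v.get("path") == path]
    tags = matched[0]["tags"] if matched else []
    # An unknown path yields an empty string, like the script's empty output line.
    return ",".join("%s:%s" % (image, t) for t in tags)


print(image_tags(VERSIONS_JSON, "apache/spark", "3.3.1/scala2.12-java11-r-ubuntu"))
# apache/spark:3.3.1-scala2.12-java11-r-ubuntu,apache/spark:3.3.1-r,apache/spark:r
```

Because an unmatched path produces an empty tag list rather than an error, a caller may want to treat empty output as a failure before pushing.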

diff --git a/versions.json b/versions.json

new file mode 100644

index 0000000..d42076d

--- /dev/null

+++ b/versions.json

@@ -0,0 +1,64 @@

+{

+ "versions": [

+ {

+ "path": "3.3.1/scala2.12-java11-python3-ubuntu",

+ "tags": [

+ "3.3.1-scala2.12-java11-python3-ubuntu",

+ "3.3.1-python3",

+ "3.3.1",

+ "python3",

+ "latest"

+ ]

+ },

+ {

+ "path": "3.3.1/scala2.12-java11-r-ubuntu",

+ "tags": [

+ "3.3.1-scala2.12-java11-r-ubuntu",

+ "3.3.1-r",

+ "r"

+ ]

+ },

+ {

+ "path": "3.3.1/scala2.12-java11-ubuntu",

+ "tags": [

+ "3.3.1-scala2.12-java11-ubuntu",

+ "3.3.1-scala",

+ "scala"

+ ]

+ },

+ {

+ "path": "3.3.1/scala2.12-java11-python3-r-ubuntu",

+ "tags": [

+ "3.3.1-scala2.12-java11-python3-r-ubuntu"

+ ]

+ },

+ {

+ "path": "3.3.0/scala2.12-java11-python3-ubuntu",

+ "tags": [

+ "3.3.0-scala2.12-java11-python3-ubuntu",

+ "3.3.0-python3",

+ "3.3.0"

+ ]

+ },

+ {

+ "path": "3.3.0/scala2.12-java11-r-ubuntu",

+ "tags": [

+ "3.3.0-scala2.12-java11-r-ubuntu",

+ "3.3.0-r"

+ ]

+ },

+ {

+ "path": "3.3.0/scala2.12-java11-ubuntu",

+ "tags": [

+ "3.3.0-scala2.12-java11-ubuntu",

+ "3.3.0-scala"

+ ]

+ },

+ {

+ "path": "3.3.0/scala2.12-java11-python3-r-ubuntu",

+ "tags": [

+ "3.3.0-scala2.12-java11-python3-r-ubuntu"

+ ]

+ }

+ ]

+}
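Every entry's tags are pushed under the same image name, so a tag listed under two paths would be overwritten by whichever image is published last. A small consistency check over the parsed `versions.json` can catch that. This is a hypothetical helper, not part of the commit, shown here on a two-entry excerpt.

```python
from collections import defaultdict
from typing import Dict, List


def duplicate_tags(doc: dict) -> Dict[str, List[str]]:
    """Map each tag that appears under more than one path to those paths."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for entry in doc.get("versions", []):
        for tag in entry.get("tags", []):
            owners[tag].append(entry["path"])
    return {tag: paths for tag, paths in owners.items() if len(paths) > 1}


# Usage sketch on an excerpt of versions.json: "latest" is claimed only once.
doc = {
    "versions": [
        {
            "path": "3.3.1/scala2.12-java11-python3-ubuntu",
            "tags": ["3.3.1-scala2.12-java11-python3-ubuntu", "3.3.1-python3",
                     "3.3.1", "python3", "latest"],
        },
        {
            "path": "3.3.0/scala2.12-java11-python3-ubuntu",
            "tags": ["3.3.0-scala2.12-java11-python3-ubuntu", "3.3.0-python3", "3.3.0"],
        },
    ]
}
print(duplicate_tags(doc))  # {}
```

Against the real file, the same function can be called on `json.load(open("versions.json"))`.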
