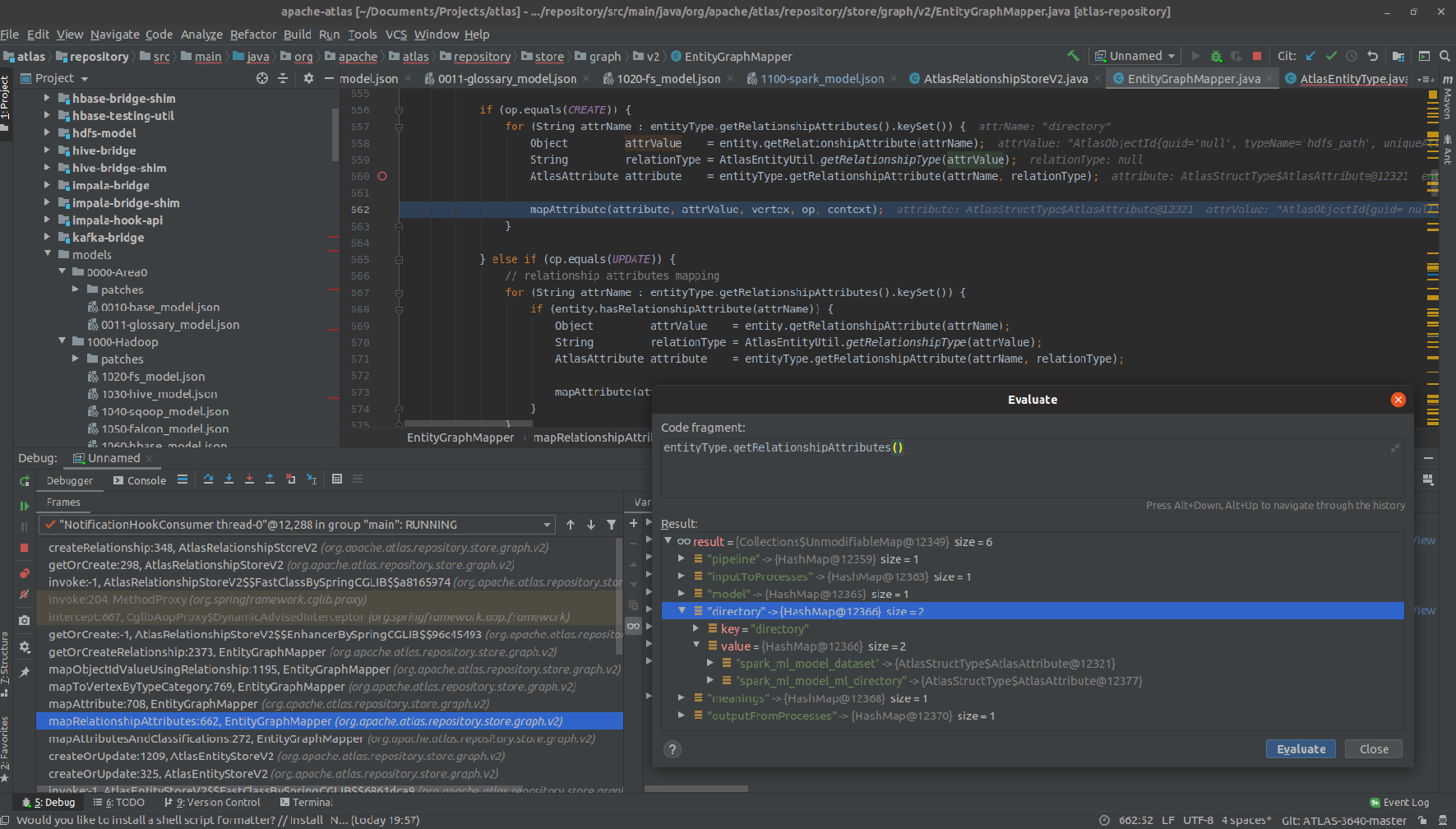

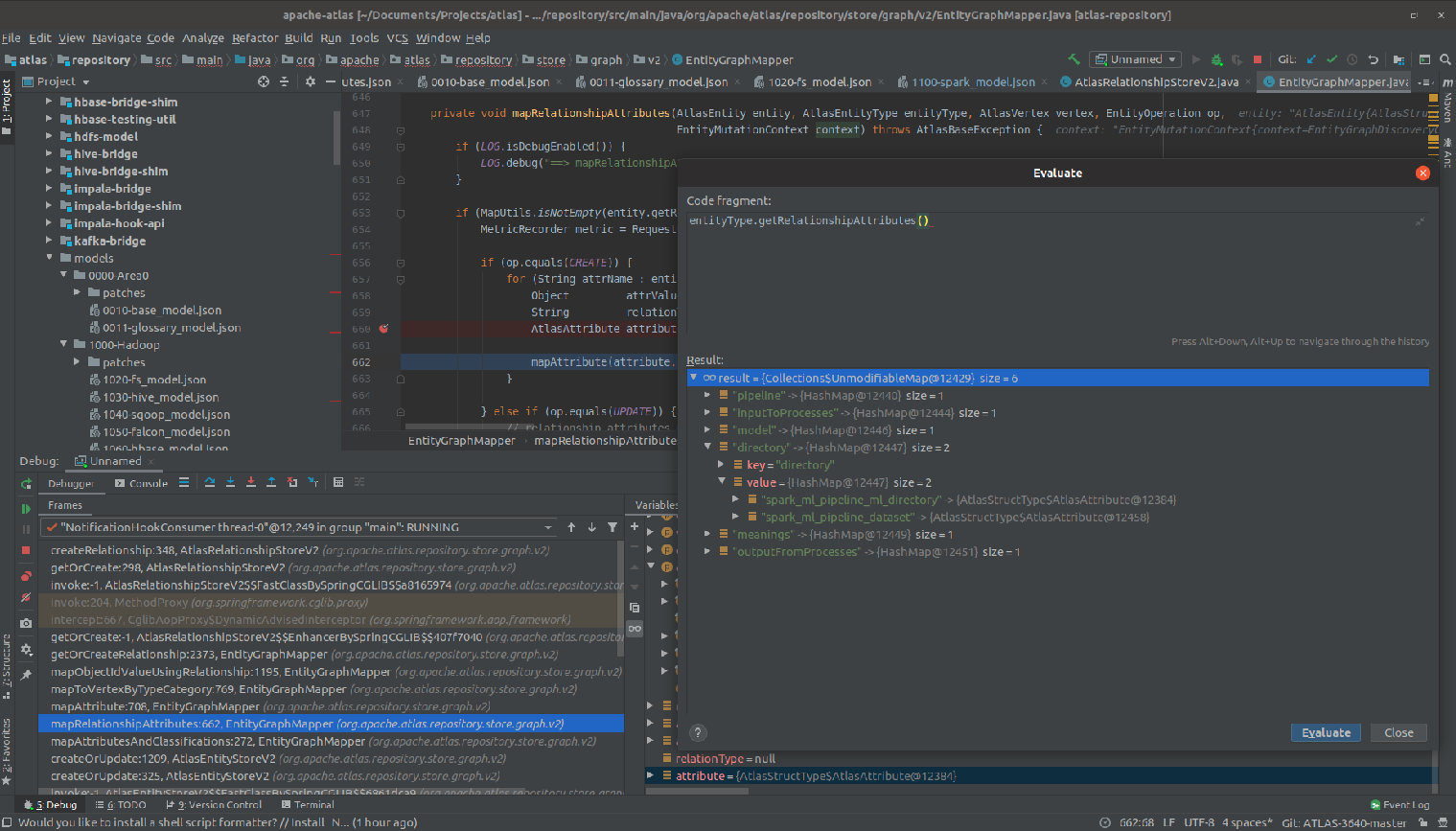

vladhlinsky commented on issue #88: ATLAS-3640 Update 'spark_ml_model_ml_directory' and 'spark_ml_pipeline_ml_directory' relationship definitions
URL: https://github.com/apache/atlas/pull/88#issuecomment-592699723

Thanks, @HeartSaVioR! As it turned out, the proposed changes do not work correctly for an upgrade to Spark models 1.1. I tested the changes only on a new installation (with no existing entities in HBase). I guess `relationshipCategory` can be updated only via a patch. An attempt to upgrade with the proposed changes leads to:

```
2020-02-28 09:57:11,783 ERROR - [main:] ~ graph rollback due to exception (GraphTransactionInterceptor:167)
org.apache.atlas.exception.AtlasBaseException: invalid update for relationship spark_ml_model_ml_directory: new relationshipDef category AGGREGATION, existing relationshipDef category COMPOSITION
    at org.apache.atlas.repository.store.graph.v2.AtlasRelationshipDefStoreV2.preUpdateCheck(AtlasRelationshipDefStoreV2.java:432)
```

It's possible to use the following patch to update this property:

```
{
  "patches": [
    {
      "id": "TYPEDEF_PATCH_1000_015_001",
      "description": "Update relationshipCategory to AGGREGATION",
      "action": "REMOVE_LEGACY_REF_ATTRIBUTES",
      "typeName": "spark_ml_model_ml_directory",
      "applyToVersion": "1.0",
      "updateToVersion": "1.1",
      "params": {
        "relationshipCategory": "AGGREGATION"
      }
    },
    ...
  ]
}
```

However, there is no way to update the `endDefs` types.
I could not find a patch action for this purpose, and an attempt to update it directly in the model file leads to:

```
2020-02-28 12:14:05,151 INFO - [main:] ~ GraphTransaction intercept for org.apache.atlas.repository.store.graph.v2.AtlasTypeDefGraphStoreV2.createUpdateTypesDef (GraphTransactionAdvisor$1:41)
2020-02-28 12:14:05,213 ERROR - [main:] ~ graph rollback due to exception (GraphTransactionInterceptor:167)
org.apache.atlas.exception.AtlasBaseException: invalid update for relationshipDef spark_ml_model_ml_directory: new end2 AtlasRelationshipEndDef{type='DataSet', name==>'model', description==>'null', isContainer==>'false', cardinality==>'SINGLE', isLegacyAttribute==>'false'}, existing end2 AtlasRelationshipEndDef{type='spark_ml_directory', name==>'model', description==>'null', isContainer==>'false', cardinality==>'SINGLE', isLegacyAttribute==>'false'}
    at org.apache.atlas.repository.store.graph.v2.AtlasRelationshipDefStoreV2.preUpdateCheck(AtlasRelationshipDefStoreV2.java:457)
```

Thus, it seems that the safest way to resolve this issue is to **create a new relationship**.
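
Both errors come from `AtlasRelationshipDefStoreV2.preUpdateCheck`. Judging only from the log messages, an update is rejected when the category or an end definition differs from the stored one. Below is a minimal Java model of those two checks; `EndDef`, `RelDef`, and `checkUpdate` are hypothetical names, this is not the Atlas implementation, ends are compared only by type and name, and the `end1` value is an assumption (only `end2` appears in the log).

```java
import java.util.Optional;

// Hypothetical model of the update checks seen in the logs; not the Atlas implementation.
public class UpdateCheckSketch {

    // An end of a relationshipDef, reduced to the fields compared here.
    record EndDef(String type, String name) {}

    record RelDef(String name, String category, EndDef end1, EndDef end2) {}

    // Returns a reason if the update changes the category or an end, else empty.
    static Optional<String> checkUpdate(RelDef existing, RelDef updated) {
        if (!existing.category().equals(updated.category())) {
            return Optional.of("category change " + existing.category() + " -> " + updated.category());
        }
        if (!existing.end1().equals(updated.end1())) {
            return Optional.of("end1 change " + existing.end1() + " -> " + updated.end1());
        }
        if (!existing.end2().equals(updated.end2())) {
            return Optional.of("end2 change " + existing.end2() + " -> " + updated.end2());
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // end1 is assumed; end2 matches the "existing end2" in the second log.
        EndDef end1 = new EndDef("spark_ml_model", "directory");
        RelDef existing = new RelDef("spark_ml_model_ml_directory", "COMPOSITION",
                end1, new EndDef("spark_ml_directory", "model"));

        RelDef newCategory = new RelDef(existing.name(), "AGGREGATION", end1, existing.end2());
        RelDef newEnd2 = new RelDef(existing.name(), "COMPOSITION", end1, new EndDef("DataSet", "model"));

        System.out.println(checkUpdate(existing, newCategory).isPresent()); // true: rejected
        System.out.println(checkUpdate(existing, newEnd2).isPresent());     // true: rejected
        System.out.println(checkUpdate(existing, existing).isPresent());    // false: no change
    }
}
```

Since any change to the category or the ends of `spark_ml_model_ml_directory` trips one of these checks, adding a relationshipDef under a new name avoids them altogether.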
I tried adding the following relationship defs, which use the same end name `directory`:

```
{
  "name": "spark_ml_model_dataset",
  "serviceType": "spark",
  "typeVersion": "1.0",
  "relationshipCategory": "AGGREGATION",
  "endDef1": {
    "type": "spark_ml_model",
    "name": "directory",
    "isContainer": true,
    "cardinality": "SINGLE"
  },
  "endDef2": {
    "type": "DataSet",
    "name": "model",
    "isContainer": false,
    "cardinality": "SINGLE"
  },
  "propagateTags": "NONE"
},
{
  "name": "spark_ml_pipeline_dataset",
  "serviceType": "spark",
  "typeVersion": "1.0",
  "relationshipCategory": "AGGREGATION",
  "endDef1": {
    "type": "spark_ml_pipeline",
    "name": "directory",
    "isContainer": true,
    "cardinality": "SINGLE"
  },
  "endDef2": {
    "type": "DataSet",
    "name": "pipeline",
    "isContainer": false,
    "cardinality": "SINGLE"
  },
  "propagateTags": "NONE"
}
```

It works perfectly fine for `spark_ml_model` but fails for `spark_ml_pipeline` with the following error:

```
2020-02-28 21:34:00,933 WARN - [NotificationHookConsumer thread-0:] ~ Max retries exceeded for message {"version":{"version":"1.0.0","versionParts":[1]},"msgCompressionKind":"NONE","msgSplitIdx":1,"msgSplitCount":1,"msgCreationTime":1582918440918,"message":{"type":"ENTITY_CREATE_V2","user":"test","entities":{"entities":[{"typeName":"spark_ml_model","attributes":{"qualifiedName":"model_with_path8","name":"model_with_path8"},"guid":"-386799758271978","isIncomplete":false,"provenanceType":0,"version":0,"relationshipAttributes":{"directory":{"typeName":"hdfs_path","uniqueAttributes":{"qualifiedName":"path8"}}},"proxy":false}]}}} (NotificationHookConsumer$HookConsumer:793)
org.apache.atlas.exception.AtlasBaseException: invalid relationshipDef: spark_ml_model_ml_directory: end type 1: spark_ml_directory, end type 2: spark_ml_model
    at org.apache.atlas.repository.store.graph.v2.AtlasRelationshipStoreV2.validateRelationship(AtlasRelationshipStoreV2.java:657)
```

Debugging shows that [AtlasEntityUtil.getRelationshipType](https://github.com/apache/atlas/blob/master/repository/src/main/java/org/apache/atlas/repository/store/graph/v2/EntityGraphMapper.java#L659) returns `null` for the `hdfs_path` attribute (`hdfs_path` is a child type of `DataSet`), which makes [entityType.getRelationshipAttribute](https://github.com/apache/atlas/blob/master/intg/src/main/java/org/apache/atlas/type/AtlasEntityType.java#L459) return the first value of a HashMap. For `spark_ml_model` that happens to be the right relationship, but for `spark_ml_pipeline` it is the wrong one. See the screenshots (image 1 and image 2).
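
The underlying problem is an ambiguous lookup: two relationshipDefs expose an attribute named `directory` on `spark_ml_pipeline`, and when the relationship type is unknown, taking the first HashMap entry depends on iteration order rather than on the value's type. The Java sketch below reproduces that in isolation and contrasts it with a type-aware choice. All names are hypothetical, the supertype table holds only the `hdfs_path` to `DataSet` link mentioned above, and `spark_ml_pipeline_ml_directory` is assumed to point `directory` at `spark_ml_directory` by analogy with the model def. It is an illustration, not a proposed Atlas change.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

// Hypothetical model of resolving an ambiguous relationship attribute; not Atlas code.
public class RelationshipLookupSketch {

    // Only the supertype link mentioned in the discussion: hdfs_path is a DataSet.
    static final Map<String, Set<String>> SUPER_TYPES = Map.of("hdfs_path", Set.of("DataSet"));

    static boolean isAssignable(String valueType, String endType) {
        return valueType.equals(endType)
                || SUPER_TYPES.getOrDefault(valueType, Set.of()).contains(endType);
    }

    // Mirrors the fallback described above: with no relationship type, take the
    // first key of the (non-empty) map. The result depends on HashMap iteration
    // order, not on the type of the value being assigned.
    static String naiveResolve(Map<String, String> endTypeByRelationship) {
        return endTypeByRelationship.keySet().iterator().next();
    }

    // Type-aware alternative: prefer a unique exact end-type match, else a unique
    // supertype match; return empty when there is no match or the choice is ambiguous.
    static Optional<String> resolveByType(Map<String, String> endTypeByRelationship, String valueType) {
        List<String> exact = new ArrayList<>();
        List<String> assignable = new ArrayList<>();
        for (Map.Entry<String, String> e : endTypeByRelationship.entrySet()) {
            if (e.getValue().equals(valueType)) {
                exact.add(e.getKey());
            }
            if (isAssignable(valueType, e.getValue())) {
                assignable.add(e.getKey());
            }
        }
        if (exact.size() == 1) {
            return Optional.of(exact.get(0));
        }
        if (exact.isEmpty() && assignable.size() == 1) {
            return Optional.of(assignable.get(0));
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Relationship type -> type of the end that spark_ml_pipeline's "directory"
        // attribute points to (the first entry is assumed by analogy with the model def).
        Map<String, String> directory = new HashMap<>();
        directory.put("spark_ml_pipeline_ml_directory", "spark_ml_directory");
        directory.put("spark_ml_pipeline_dataset", "DataSet");

        System.out.println("naive: " + naiveResolve(directory));
        System.out.println("typed: " + resolveByType(directory, "hdfs_path").orElse("none")); // typed: spark_ml_pipeline_dataset
    }
}
```

Resolving by the value's type picks `spark_ml_pipeline_dataset` for an `hdfs_path` value regardless of map order, and returning empty on ambiguity avoids silently binding the wrong relationship.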

----------------------------------------------------------------
This is an automated message from the Apache Git Service.