timyuer commented on PR #984: URL: https://github.com/apache/bigtop/pull/984#issuecomment-1217757517

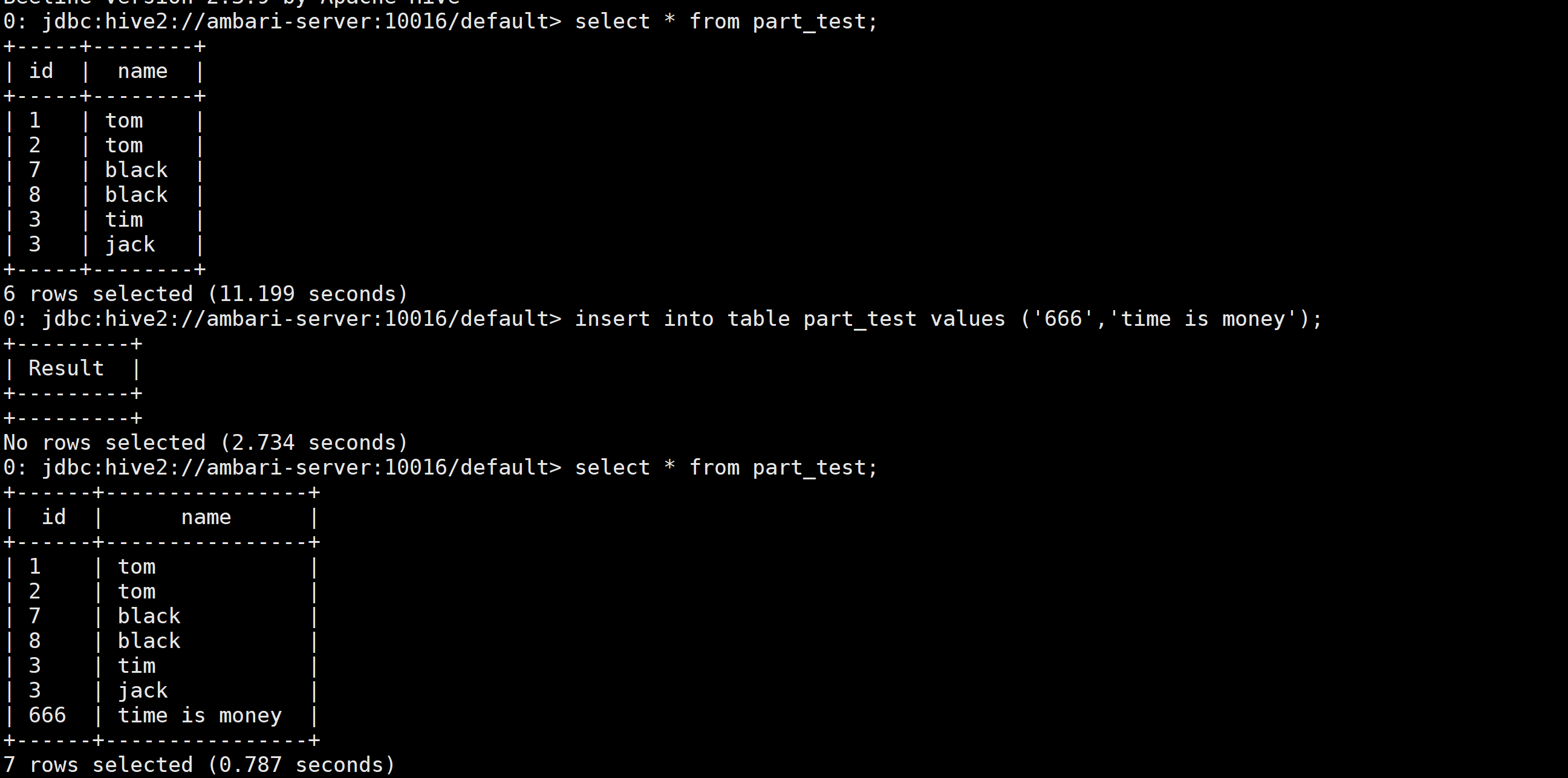

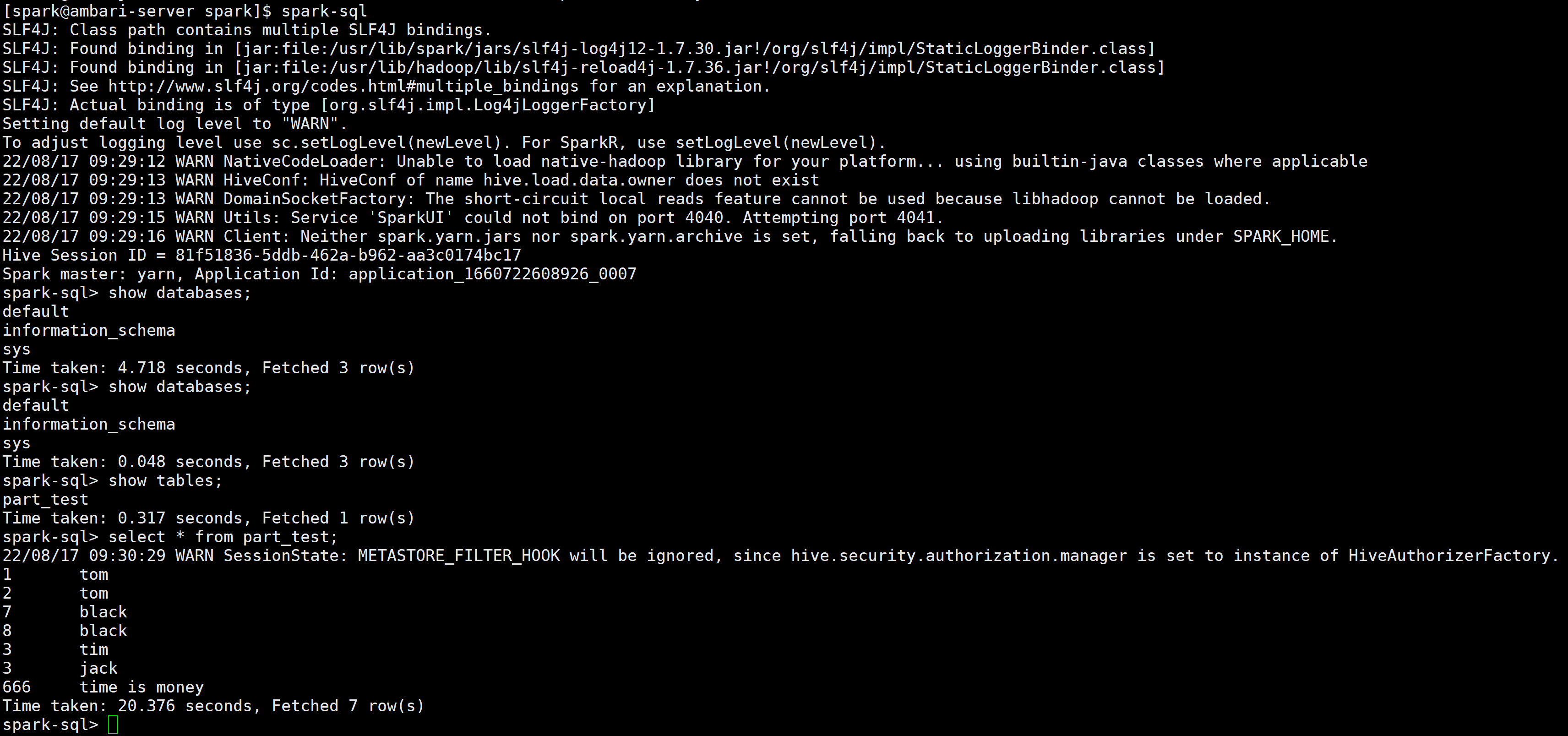

Spark Thrift Server Check

Spark submit Check

```bash
[spark@ambari-server spark]$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode client --queue default examples/jars/spark-examples.jar 10
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/lib/spark/jars/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/lib/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
22/08/17 09:08:03 INFO SparkContext: Running Spark version 3.2.1
22/08/17 09:08:03 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
22/08/17 09:08:03 INFO ResourceUtils: ==============================================================
22/08/17 09:08:03 INFO ResourceUtils: No custom resources configured for spark.driver.
22/08/17 09:08:03 INFO ResourceUtils: ==============================================================
22/08/17 09:08:03 INFO SparkContext: Submitted application: Spark Pi
22/08/17 09:08:03 INFO ResourceProfile: Default ResourceProfile created, executor resources: Map(cores -> name: cores, amount: 1, script: , vendor: , memory -> name: memory, amount: 1024, script: , vendor: , offHeap -> name: offHeap, amount: 0, script: , vendor: ), task resources: Map(cpus -> name: cpus, amount: 1.0)
22/08/17 09:08:03 INFO ResourceProfile: Limiting resource is cpus at 1 tasks per executor
22/08/17 09:08:03 INFO ResourceProfileManager: Added ResourceProfile id: 0
22/08/17 09:08:03 INFO SecurityManager: Changing view acls to: spark
22/08/17 09:08:03 INFO SecurityManager: Changing modify acls to: spark
22/08/17 09:08:03 INFO SecurityManager: Changing view acls groups to:
22/08/17 09:08:03 INFO SecurityManager: Changing modify acls groups to:
22/08/17 09:08:03 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); groups with view permissions: Set(); users with modify permissions: Set(spark); groups with modify permissions: Set()
22/08/17 09:08:04 INFO Utils: Successfully started service 'sparkDriver' on port 41738.
22/08/17 09:08:04 INFO SparkEnv: Registering MapOutputTracker
22/08/17 09:08:04 INFO SparkEnv: Registering BlockManagerMaster
22/08/17 09:08:04 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
22/08/17 09:08:04 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
22/08/17 09:08:04 INFO SparkEnv: Registering BlockManagerMasterHeartbeat
22/08/17 09:08:04 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-89afb528-910d-47a3-92a9-d3c329250a5a
22/08/17 09:08:04 INFO MemoryStore: MemoryStore started with capacity 366.3 MiB
22/08/17 09:08:04 INFO SparkEnv: Registering OutputCommitCoordinator
22/08/17 09:08:04 INFO log: Logging initialized @3291ms to org.sparkproject.jetty.util.log.Slf4jLog
22/08/17 09:08:04 INFO Server: jetty-9.4.43.v20210629; built: 2021-06-30T11:07:22.254Z; git: 526006ecfa3af7f1a27ef3a288e2bef7ea9dd7e8; jvm 1.8.0_332-b09
22/08/17 09:08:04 INFO Server: Started @3449ms
22/08/17 09:08:04 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.
22/08/17 09:08:04 INFO AbstractConnector: Started ServerConnector@39aa45a1{HTTP/1.1, (http/1.1)}{0.0.0.0:4041}
22/08/17 09:08:04 INFO Utils: Successfully started service 'SparkUI' on port 4041.
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@14faa38c{/jobs,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@759d81f3{/jobs/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5a4c638d{/jobs/job,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@1f12e153{/jobs/job/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5a101b1c{/stages,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@29f0802c{/stages/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@60f2e0bd{/stages/stage,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@203dd56b{/stages/stage/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6d64b553{/stages/pool,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@1d3e6d34{/stages/pool/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@26a94fa5{/storage,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@2873d672{/storage/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@577f9109{/storage/rdd,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@757529a4{/storage/rdd/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5c41d037{/environment,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5ec77191{/environment/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@1450078a{/executors,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@69c6161d{/executors/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@2e1792e7{/executors/threadDump,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@3eb631b8{/executors/threadDump/json,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6bff19ff{/static,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@3b0ca5e1{/,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@54dcbb9f{/api,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5bb8f9e2{/jobs/job/kill,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5f78de22{/stages/stage/kill,null,AVAILABLE,@Spark}
22/08/17 09:08:04 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://ambari-server:4041
22/08/17 09:08:04 INFO SparkContext: Added JAR file:/usr/lib/spark/examples/jars/spark-examples_2.12-3.2.1.jar at spark://ambari-server:41738/jars/spark-examples_2.12-3.2.1.jar with timestamp 1660727283432
22/08/17 09:08:05 INFO FairSchedulableBuilder: Creating Fair Scheduler pools from file:////etc/spark/conf/spark-thrift-fairscheduler.xml
22/08/17 09:08:05 INFO FairSchedulableBuilder: Created pool: default, schedulingMode: FAIR, minShare: 2, weight: 1
22/08/17 09:08:05 INFO DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at ambari-agent-02/172.18.0.4:8050
22/08/17 09:08:06 INFO AHSProxy: Connecting to Application History server at localhost/127.0.0.1:10200
22/08/17 09:08:06 INFO Client: Requesting a new application from cluster with 2 NodeManagers
22/08/17 09:08:07 WARN DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.
22/08/17 09:08:07 INFO Configuration: resource-types.xml not found
22/08/17 09:08:07 INFO ResourceUtils: Unable to find 'resource-types.xml'.
22/08/17 09:08:07 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (15360 MB per container)
22/08/17 09:08:07 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead
22/08/17 09:08:07 INFO Client: Setting up container launch context for our AM
22/08/17 09:08:07 INFO Client: Setting up the launch environment for our AM container
22/08/17 09:08:07 INFO Client: Preparing resources for our AM container
22/08/17 09:08:07 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
22/08/17 09:08:12 INFO Client: Uploading resource file:/tmp/spark-9f1844ca-32b6-49b1-a545-cfe3e9e12f40/__spark_libs__3058775863316389856.zip -> hdfs://ambari-agent-01:8020/user/spark/.sparkStaging/application_1660722608926_0006/__spark_libs__3058775863316389856.zip
22/08/17 09:08:15 INFO Client: Uploading resource file:/tmp/spark-9f1844ca-32b6-49b1-a545-cfe3e9e12f40/__spark_conf__3875795998828899735.zip -> hdfs://ambari-agent-01:8020/user/spark/.sparkStaging/application_1660722608926_0006/__spark_conf__.zip
22/08/17 09:08:16 INFO SecurityManager: Changing view acls to: spark
22/08/17 09:08:16 INFO SecurityManager: Changing modify acls to: spark
22/08/17 09:08:16 INFO SecurityManager: Changing view acls groups to:
22/08/17 09:08:16 INFO SecurityManager: Changing modify acls groups to:
22/08/17 09:08:16 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); groups with view permissions: Set(); users with modify permissions: Set(spark); groups with modify permissions: Set()
22/08/17 09:08:16 INFO Client: Submitting application application_1660722608926_0006 to ResourceManager
22/08/17 09:08:16 INFO YarnClientImpl: Submitted application application_1660722608926_0006
22/08/17 09:08:17 INFO Client: Application report for application_1660722608926_0006 (state: ACCEPTED)
22/08/17 09:08:17 INFO Client:
     client token: N/A
     diagnostics: AM container is launched, waiting for AM container to Register with RM
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1660727296266
     final status: UNDEFINED
     tracking URL: http://ambari-agent-02:8088/proxy/application_1660722608926_0006/
     user: spark
22/08/17 09:08:18 INFO Client: Application report for application_1660722608926_0006 (state: ACCEPTED)
22/08/17 09:08:19 INFO Client: Application report for application_1660722608926_0006 (state: ACCEPTED)
22/08/17 09:08:20 INFO Client: Application report for application_1660722608926_0006 (state: ACCEPTED)
22/08/17 09:08:21 INFO Client: Application report for application_1660722608926_0006 (state: ACCEPTED)
22/08/17 09:08:22 INFO Client: Application report for application_1660722608926_0006 (state: ACCEPTED)
22/08/17 09:08:23 INFO Client: Application report for application_1660722608926_0006 (state: RUNNING)
22/08/17 09:08:23 INFO Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: 172.18.0.4
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1660727296266
     final status: UNDEFINED
     tracking URL: http://ambari-agent-02:8088/proxy/application_1660722608926_0006/
     user: spark
22/08/17 09:08:23 INFO YarnClientSchedulerBackend: Application application_1660722608926_0006 has started running.
22/08/17 09:08:23 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 35127.
22/08/17 09:08:23 INFO NettyBlockTransferService: Server created on ambari-server:35127
22/08/17 09:08:23 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
22/08/17 09:08:23 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, ambari-server, 35127, None)
22/08/17 09:08:23 INFO BlockManagerMasterEndpoint: Registering block manager ambari-server:35127 with 366.3 MiB RAM, BlockManagerId(driver, ambari-server, 35127, None)
22/08/17 09:08:23 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, ambari-server, 35127, None)
22/08/17 09:08:23 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, ambari-server, 35127, None)
22/08/17 09:08:23 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@388623ad{/metrics/json,null,AVAILABLE,@Spark}
22/08/17 09:08:23 INFO SingleEventLogFileWriter: Logging events to hdfs:/spark-history/application_1660722608926_0006.inprogress
22/08/17 09:08:23 INFO YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> ambari-agent-02, PROXY_URI_BASES -> http://ambari-agent-02:8088/proxy/application_1660722608926_0006), /proxy/application_1660722608926_0006
22/08/17 09:08:24 INFO YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(spark-client://YarnAM)
22/08/17 09:08:33 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.0.2:38336) with ID 1, ResourceProfileId 0
22/08/17 09:08:33 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.0.2:38334) with ID 2, ResourceProfileId 0
22/08/17 09:08:33 INFO YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8
22/08/17 09:08:33 INFO BlockManagerMasterEndpoint: Registering block manager ambari-server:38665 with 366.3 MiB RAM, BlockManagerId(1, ambari-server, 38665, None)
22/08/17 09:08:33 INFO BlockManagerMasterEndpoint: Registering block manager ambari-server:42731 with 366.3 MiB RAM, BlockManagerId(2, ambari-server, 42731, None)
22/08/17 09:08:34 INFO SparkContext: Starting job: reduce at SparkPi.scala:38
22/08/17 09:08:34 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions
22/08/17 09:08:34 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)
22/08/17 09:08:34 INFO DAGScheduler: Parents of final stage: List()
22/08/17 09:08:34 INFO DAGScheduler: Missing parents: List()
22/08/17 09:08:34 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents
22/08/17 09:08:34 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 4.0 KiB, free 366.3 MiB)
22/08/17 09:08:34 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 2.3 KiB, free 366.3 MiB)
22/08/17 09:08:34 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on ambari-server:35127 (size: 2.3 KiB, free: 366.3 MiB)
22/08/17 09:08:34 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1478
22/08/17 09:08:34 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))
22/08/17 09:08:34 INFO YarnScheduler: Adding task set 0.0 with 10 tasks resource profile 0
22/08/17 09:08:34 INFO FairSchedulableBuilder: Added task set TaskSet_0.0 tasks to pool default
22/08/17 09:08:34 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0) (ambari-server, executor 1, partition 0, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:34 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1) (ambari-server, executor 2, partition 1, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:34 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on ambari-server:38665 (size: 2.3 KiB, free: 366.3 MiB)
22/08/17 09:08:34 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on ambari-server:42731 (size: 2.3 KiB, free: 366.3 MiB)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2) (ambari-server, executor 2, partition 2, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 965 ms on ambari-server (executor 2) (1/10)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3) (ambari-server, executor 1, partition 3, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1007 ms on ambari-server (executor 1) (2/10)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4) (ambari-server, executor 2, partition 4, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 37 ms on ambari-server (executor 2) (3/10)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5) (ambari-server, executor 1, partition 5, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6) (ambari-server, executor 2, partition 6, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 48 ms on ambari-server (executor 1) (4/10)
22/08/17 09:08:35 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 32 ms on ambari-server (executor 2) (5/10)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7) (ambari-server, executor 1, partition 7, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 29 ms on ambari-server (executor 1) (6/10)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8) (ambari-server, executor 2, partition 8, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 29 ms on ambari-server (executor 2) (7/10)
22/08/17 09:08:35 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9) (ambari-server, executor 2, partition 9, PROCESS_LOCAL, 4589 bytes) taskResourceAssignments Map()
22/08/17 09:08:35 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 26 ms on ambari-server (executor 2) (8/10)
22/08/17 09:08:35 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 41 ms on ambari-server (executor 1) (9/10)
22/08/17 09:08:35 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 25 ms on ambari-server (executor 2) (10/10)
22/08/17 09:08:35 INFO YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool default
22/08/17 09:08:35 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 1.348 s
22/08/17 09:08:35 INFO DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job
22/08/17 09:08:35 INFO YarnScheduler: Killing all running tasks in stage 0: Stage finished
22/08/17 09:08:35 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 1.423045 s
Pi is roughly 3.1396031396031394
22/08/17 09:08:35 INFO AbstractConnector: Stopped Spark@39aa45a1{HTTP/1.1, (http/1.1)}{0.0.0.0:4041}
22/08/17 09:08:35 INFO SparkUI: Stopped Spark web UI at http://ambari-server:4041
22/08/17 09:08:35 INFO YarnClientSchedulerBackend: Interrupting monitor thread
22/08/17 09:08:35 INFO YarnClientSchedulerBackend: Shutting down all executors
22/08/17 09:08:35 INFO YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down
22/08/17 09:08:35 INFO YarnClientSchedulerBackend: YARN client scheduler backend Stopped
22/08/17 09:08:35 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
22/08/17 09:08:35 INFO MemoryStore: MemoryStore cleared
22/08/17 09:08:35 INFO BlockManager: BlockManager stopped
22/08/17 09:08:35 INFO BlockManagerMaster: BlockManagerMaster stopped
22/08/17 09:08:35 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
22/08/17 09:08:35 INFO SparkContext: Successfully stopped SparkContext
22/08/17 09:08:35 INFO ShutdownHookManager: Shutdown hook called
22/08/17 09:08:35 INFO ShutdownHookManager: Deleting directory /tmp/spark-2e1ee283-8ac9-4187-9cb4-3e8b50e79617
22/08/17 09:08:35 INFO ShutdownHookManager: Deleting directory /tmp/spark-9f1844ca-32b6-49b1-a545-cfe3e9e12f40
```

Spark Sql Check