Git-Yang opened a new issue #697:
URL: https://github.com/apache/rocketmq-externals/issues/697

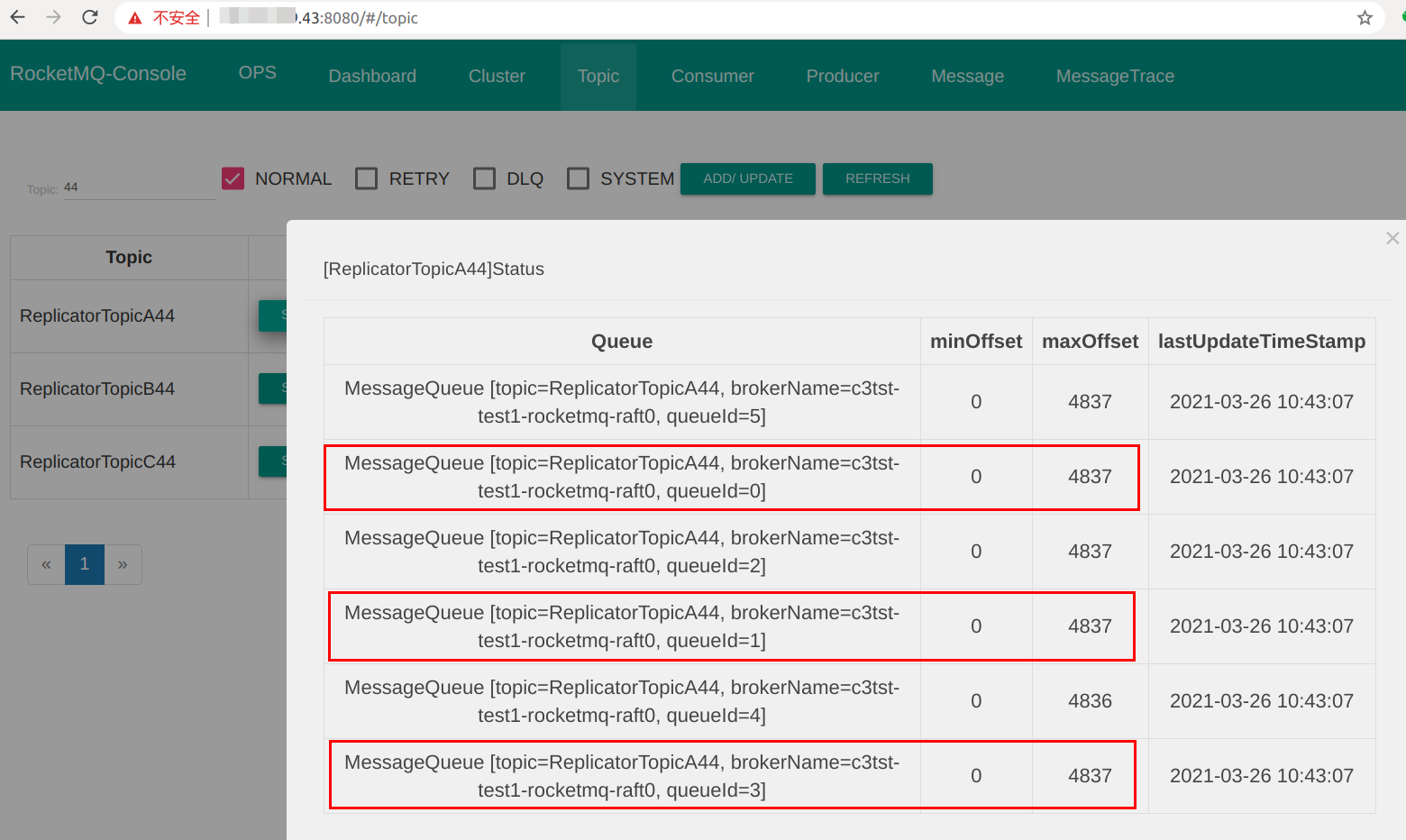

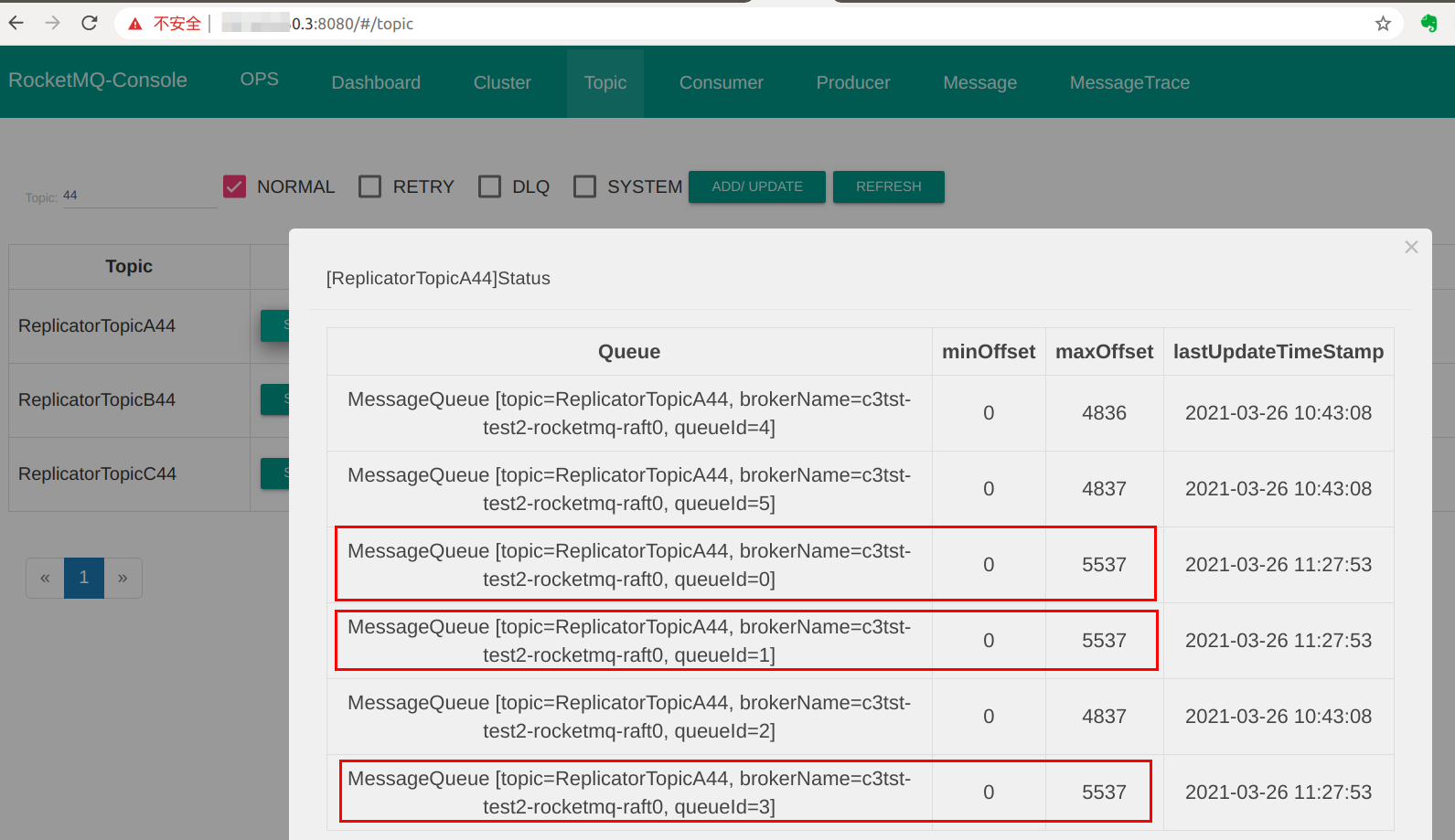

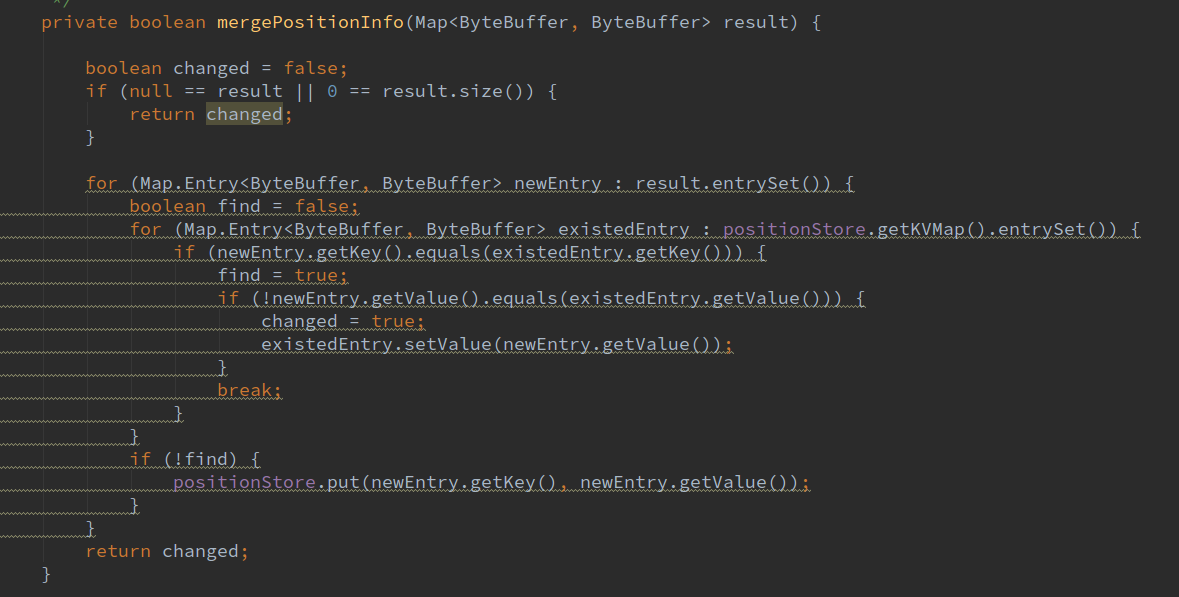

**BUG REPORT**

1. Please describe the issue you observed:

**Source Cluster:**

**Target Cluster:**

**Condition:**

a. Two worker nodes
b. The number of concurrent tasks is 6
c. The task was restarted multiple times during the synchronization process.

**Result:** The number of queue messages in the source cluster and the target cluster is inconsistent.

**Analysis:** When positions are synchronized between multiple workers, a worker that finds its local positions inconsistent with the received ones overwrites its local data directly, so the latest position may be overwritten by old data.

- What did you do (The steps to reproduce)?

1. Each worker instance records the current positions that have been updated locally.
2. When the current worker node synchronizes data with other worker nodes, only the locally updated positions are synchronized.

- What did you expect to see?

Each worker node sends only its locally updated positions to the topic, so that other workers synchronize only the positions that need to be updated.

- What did you see instead?

Each worker node consumes the full set of positions of the other worker nodes from the topic, including old positions.

2. Please tell us about your environment:

openjdk1.8
CentOS7.3

3. Other information (e.g. detailed explanation, logs, related issues, suggestions how to fix, etc):

Based on the changes in #693 and #695.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: [email protected]
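
To make the expected behavior in this report concrete, here is a minimal sketch in which each worker tracks which positions it updated itself and publishes only those, so a stale copy held by one worker cannot overwrite a newer value on another. The class `PositionStore`, its methods, and the `String`/`Long` key and offset types are hypothetical and chosen for illustration only; they are not the rocketmq-externals API and not necessarily how the fix is implemented.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical per-worker position store (illustrative names, not the project's API). */
class PositionStore {
    // Full local view: partition key -> last known offset.
    private final Map<String, Long> positions = new HashMap<>();
    // Keys that this worker itself has updated since the last sync.
    private final Set<String> updatedLocally = new HashSet<>();

    /** Called when a task on this worker commits progress. */
    synchronized void putLocal(String partition, long offset) {
        positions.put(partition, offset);
        updatedLocally.add(partition);
    }

    /** Returns only the locally updated entries and resets the tracking set. */
    synchronized Map<String, Long> drainUpdated() {
        Map<String, Long> delta = new HashMap<>();
        for (String key : updatedLocally) {
            delta.put(key, positions.get(key));
        }
        updatedLocally.clear();
        return delta;
    }

    /** Applies a delta received from another worker; keys absent from it are untouched. */
    synchronized void mergeRemote(Map<String, Long> delta) {
        positions.putAll(delta);
    }

    synchronized Long get(String partition) {
        return positions.get(partition);
    }
}
```

With this shape, a worker that only holds an old copy of a position never republishes it, because entries received through `mergeRemote` are not marked as locally updated.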