This is an automated email from the ASF dual-hosted git repository.

cdmikechen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/submarine.git

The following commit(s) were added to refs/heads/master by this push:

new 61716e2d SUBMARINE-1318. Add documentation for serve

61716e2d is described below

commit 61716e2db25641f51d22116260c162246ffc834f

Author: cdmikechen <cdmikec...@hotmail.com>

AuthorDate: Sun Sep 18 14:14:23 2022 +0800

SUBMARINE-1318. Add documentation for serve

### What is this PR for?

Add documentation for serve and revise some text.

### What type of PR is it?

Documentation

### Todos

* [x] - Serve document

### What is the Jira issue?

https://issues.apache.org/jira/projects/SUBMARINE/issues/SUBMARINE-1318

### How should this be tested?

No.

### Screenshots (if appropriate)

### Questions:

* Do the license files need updating? No

* Are there breaking changes for older versions? No

* Does this need new documentation? Yes

Author: cdmikechen <cdmikec...@hotmail.com>

Signed-off-by: cdmikechen <cdmikec...@apache.org>

Closes #996 from cdmikechen/SUBMARINE-1318 and squashes the following commits:

b012e1fe [cdmikechen] Fix example link

10e1828e [cdmikechen] Add documentation for serve

---

dev-support/examples/quickstart/train.py          |   2 +-
submarine-serve/installation/seldon-secret.yaml   |   2 +-
website/docs/gettingStarted/quickstart.md         | 199 +++++++++++++++-------
website/static/img/quickstart-artifacts.png       | Bin 0 -> 46012 bytes
website/static/img/submarine-model-registry.png   | Bin 0 -> 75839 bytes
website/static/img/submarine-register-model.png   | Bin 0 -> 71220 bytes
website/static/img/submarine-serve-model.png      | Bin 0 -> 129247 bytes
website/static/img/submarine-serve-prediction.png | Bin 0 -> 159760 bytes
8 files changed, 140 insertions(+), 63 deletions(-)

diff --git a/dev-support/examples/quickstart/train.py b/dev-support/examples/quickstart/train.py

index 5653fa97..fd4eb42d 100644

--- a/dev-support/examples/quickstart/train.py

+++ b/dev-support/examples/quickstart/train.py

@@ -14,7 +14,7 @@

# ==============================================================================

"""

An example of multi-worker training with Keras model using Strategy API.

-https://github.com/kubeflow/tf-operator/blob/master/examples/v1/distribution_strategy/keras-API/multi_worker_strategy-with-keras.py

+https://github.com/kubeflow/training-operator/blob/master/examples/tensorflow/distribution_strategy/keras-API/multi_worker_strategy-with-keras.py

"""

import tensorflow as tf

import tensorflow_datasets as tfds

diff --git a/submarine-serve/installation/seldon-secret.yaml b/submarine-serve/installation/seldon-secret.yaml

index 4a7d0c37..64aed2f4 100644

--- a/submarine-serve/installation/seldon-secret.yaml

+++ b/submarine-serve/installation/seldon-secret.yaml

@@ -18,7 +18,7 @@ apiVersion: v1

kind: Secret

metadata:

name: submarine-serve-secret

- namespace: default

+ namespace: submarine-user-test

type: Opaque

stringData:

RCLONE_CONFIG_S3_TYPE: s3

diff --git a/website/docs/gettingStarted/quickstart.md b/website/docs/gettingStarted/quickstart.md

index 44d1bd27..0ad835ef 100644

--- a/website/docs/gettingStarted/quickstart.md

+++ b/website/docs/gettingStarted/quickstart.md

@@ -37,7 +37,7 @@ This document gives you a quick view on the basic usage of Submarine platform. Y

2. Start minikube cluster and install Istio

-```

+```bash

minikube start --vm-driver=docker --cpus 8 --memory 8192 --kubernetes-version v1.21.2
istioctl install -y
# Or if you want to support Pod Security Policy (https://minikube.sigs.k8s.io/docs/tutorials/using_psp), you can use the following command to start cluster

@@ -48,14 +48,14 @@ minikube start --extra-config=apiserver.enable-admission-plugins=PodSecurityPoli

1. Clone the project

-```

+```bash

git clone https://github.com/apache/submarine.git

cd submarine

```

2. Create necessary namespaces

-```

+```bash

kubectl create namespace submarine

kubectl create namespace submarine-user-test

kubectl label namespace submarine istio-injection=enabled

@@ -64,28 +64,34 @@ kubectl label namespace submarine-user-test istio-injection=enabled

3. Install the submarine operator and dependencies by helm chart

-```

+```bash

helm install submarine ./helm-charts/submarine -n submarine

```

4. Create a Submarine custom resource and the operator will create the submarine server, database, etc. for us.

-```

+```bash

kubectl apply -f submarine-cloud-v2/artifacts/examples/example-submarine.yaml -n submarine-user-test

```

-### Ensure submarine is ready

+5. Install submarine serve package istio and seldon-core

+```bash

+./submarine-serve/installation/install.sh

```

+

+### Ensure submarine is ready

+

+```bash

$ kubectl get pods -n submarine

NAME                                              READY   STATUS    RESTARTS   AGE
notebook-controller-deployment-66d85984bf-x562z   1/1     Running   0          7h7m
-pytorch-operator-7d778f4859-g7xph                2/2     Running   0          7h7m
-tf-job-operator-7d895bf77c-75n72                 2/2     Running   0          7h7m
+training-operator-6dcd5b9c64-nxwr2               1/1     Running   0          7h7m
+submarine-operator-9cb7bc84d-brddz               1/1     Running   0          7h7m

$ kubectl get pods -n submarine-user-test

NAME                                     READY   STATUS    RESTARTS   AGE
-submarine-database-bdcb77549-rq2ds      2/2     Running   0          7h6m
+submarine-database-0                    1/1     Running   0          7h6m
submarine-minio-686b8777ff-zg4d2         2/2     Running   0          7h6m
submarine-mlflow-68c5559dcb-lkq4g        2/2     Running   0          7h6m
submarine-server-7c6d7bcfd8-5p42w        2/2     Running   0          9m33s

@@ -96,7 +102,7 @@ submarine-tensorboard-57c5b64778-t4lww 2/2 Running 0 7h6m

1. Exposing service

-```

+```bash

kubectl port-forward --address 0.0.0.0 -n istio-system service/istio-ingressgateway 32080:80

```

@@ -116,75 +122,86 @@ Take a simple mnist tensorflow script as an example. We choose `MultiWorkerMirro

```python

"""

./dev-support/examples/quickstart/train.py

-Reference: https://github.com/kubeflow/tf-operator/blob/master/examples/v1/distribution_strategy/keras-API/multi_worker_strategy-with-keras.py
+Reference: https://github.com/kubeflow/training-operator/blob/master/examples/tensorflow/distribution_strategy/keras-API/multi_worker_strategy-with-keras.py

"""

-import tensorflow_datasets as tfds

import tensorflow as tf

+import tensorflow_datasets as tfds

+from packaging.version import Version

from tensorflow.keras import layers, models

+

import submarine

+

def make_datasets_unbatched():

-  BUFFER_SIZE = 10000
+    BUFFER_SIZE = 10000
-  # Scaling MNIST data from (0, 255] to (0., 1.]
-  def scale(image, label):
-    image = tf.cast(image, tf.float32)
-    image /= 255
-    return image, label
+    # Scaling MNIST data from (0, 255] to (0., 1.]
+    def scale(image, label):
+        image = tf.cast(image, tf.float32)
+        image /= 255
+        return image, label
-  datasets, _ = tfds.load(name='mnist', with_info=True, as_supervised=True)
+    # If we use tensorflow_datasets > 3.1.0, we need to disable GCS
+    # https://github.com/tensorflow/datasets/issues/2761#issuecomment-1187413141
+    if Version(tfds.__version__) > Version("3.1.0"):
+        tfds.core.utils.gcs_utils._is_gcs_disabled = True
+    datasets, _ = tfds.load(name="mnist", with_info=True, as_supervised=True)
-  return datasets['train'].map(scale).cache().shuffle(BUFFER_SIZE)
+    return datasets["train"].map(scale).cache().shuffle(BUFFER_SIZE)

def build_and_compile_cnn_model():

-  model = models.Sequential()
-  model.add(
-      layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))
-  model.add(layers.MaxPooling2D((2, 2)))
-  model.add(layers.Conv2D(64, (3, 3), activation='relu'))
-  model.add(layers.MaxPooling2D((2, 2)))
-  model.add(layers.Conv2D(64, (3, 3), activation='relu'))
-  model.add(layers.Flatten())
-  model.add(layers.Dense(64, activation='relu'))
-  model.add(layers.Dense(10, activation='softmax'))
-
-  model.summary()
-
-  model.compile(optimizer='adam',
-                loss='sparse_categorical_crossentropy',
-                metrics=['accuracy'])
-
-  return model
-
-def main():
-  strategy = tf.distribute.experimental.MultiWorkerMirroredStrategy(
-      communication=tf.distribute.experimental.CollectiveCommunication.AUTO)
+    model = models.Sequential()
+    model.add(layers.Conv2D(32, (3, 3), activation="relu", input_shape=(28, 28, 1)))
+    model.add(layers.MaxPooling2D((2, 2)))
+    model.add(layers.Conv2D(64, (3, 3), activation="relu"))
+    model.add(layers.MaxPooling2D((2, 2)))
+    model.add(layers.Conv2D(64, (3, 3), activation="relu"))
+    model.add(layers.Flatten())
+    model.add(layers.Dense(64, activation="relu"))
+    model.add(layers.Dense(10, activation="softmax"))
-  BATCH_SIZE_PER_REPLICA = 4
-  BATCH_SIZE = BATCH_SIZE_PER_REPLICA * strategy.num_replicas_in_sync
+    model.summary()
-  with strategy.scope():
-    ds_train = make_datasets_unbatched().batch(BATCH_SIZE).repeat()
-    options = tf.data.Options()
-    options.experimental_distribute.auto_shard_policy = \
-        tf.data.experimental.AutoShardPolicy.DATA
-    ds_train = ds_train.with_options(options)
-    # Model building/compiling need to be within `strategy.scope()`.
-    multi_worker_model = build_and_compile_cnn_model()
+    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
-  class MyCallback(tf.keras.callbacks.Callback):
-    def on_epoch_end(self, epoch, logs=None):
-      # monitor the loss and accuracy
-      print(logs)
-      submarine.log_metrics({"loss": logs["loss"], "accuracy": logs["accuracy"]}, epoch)
+    return model
-  multi_worker_model.fit(ds_train, epochs=10, steps_per_epoch=70, callbacks=[MyCallback()])

+def main():
+    strategy = tf.distribute.experimental.MultiWorkerMirroredStrategy(
+        communication=tf.distribute.experimental.CollectiveCommunication.AUTO
+    )
+
+    BATCH_SIZE_PER_REPLICA = 4
+    BATCH_SIZE = BATCH_SIZE_PER_REPLICA * strategy.num_replicas_in_sync
+
+    with strategy.scope():
+        ds_train = make_datasets_unbatched().batch(BATCH_SIZE).repeat()
+        options = tf.data.Options()
+        options.experimental_distribute.auto_shard_policy = (
+            tf.data.experimental.AutoShardPolicy.DATA
+        )
+        ds_train = ds_train.with_options(options)
+        # Model building/compiling need to be within `strategy.scope()`.
+        multi_worker_model = build_and_compile_cnn_model()
+
+    class MyCallback(tf.keras.callbacks.Callback):
+        def on_epoch_end(self, epoch, logs=None):
+            # monitor the loss and accuracy
+            print(logs)
+            submarine.log_metric("loss", logs["loss"], epoch)
+            submarine.log_metric("accuracy", logs["accuracy"], epoch)
+
+    multi_worker_model.fit(ds_train, epochs=10, steps_per_epoch=70, callbacks=[MyCallback()])
+    # save model
+    submarine.save_model(multi_worker_model, "tensorflow")
+
+
+if __name__ == "__main__":
+    main()
-if __name__ == '__main__':
-  main()

```

### 2. Prepare an environment compatible with the training

@@ -218,4 +235,64 @@ eval $(minikube docker-env)

-### 5. Serve the model (In development)

+### 5. Serve the model

+

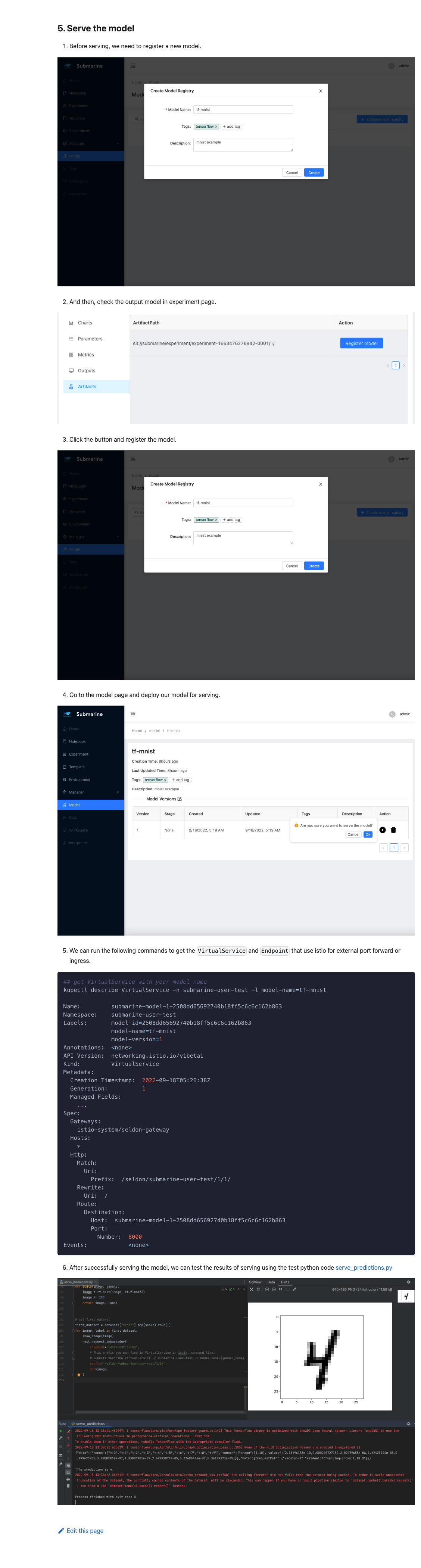

+1. Before serving, we need to register a new model.

+

+

+

+2. And then, check the output model in experiment page.

+

+

+

+3. Click the button and register the model.

+

+

+

+4. Go to the model page and deploy our model for serving.

+

+

+

+5. We can run the following commands to get the `VirtualService` and `Endpoint` that use istio for external port forward or ingress.

+

+```bash

+## get VirtualService with your model name

+kubectl describe VirtualService -n submarine-user-test -l model-name=tf-mnist

+

+Name:         submarine-model-1-2508dd65692740b18ff5c6c6c162b863
+Namespace:    submarine-user-test
+Labels:       model-id=2508dd65692740b18ff5c6c6c162b863
+              model-name=tf-mnist
+              model-version=1
+Annotations:  <none>
+API Version:  networking.istio.io/v1beta1
+Kind:         VirtualService
+Metadata:
+  Creation Timestamp:  2022-09-18T05:26:38Z
+  Generation:          1
+  Managed Fields:
+    ...
+Spec:
+  Gateways:
+    istio-system/seldon-gateway
+  Hosts:
+    *
+  Http:
+    Match:
+      Uri:
+        Prefix:  /seldon/submarine-user-test/1/1/
+    Rewrite:
+      Uri:  /
+    Route:
+      Destination:
+        Host:  submarine-model-1-2508dd65692740b18ff5c6c6c162b863
+        Port:
+          Number:  8000
+Events:            <none>

+```

+

+6. After successfully serving the model, we can test the results of serving using the test python code [serve_predictions.py](https://github.com/apache/submarine/blob/master/dev-support/examples/quickstart/serve_predictions.py)

+

+

+

+

\ No newline at end of file

diff --git a/website/static/img/quickstart-artifacts.png b/website/static/img/quickstart-artifacts.png
new file mode 100644
index 00000000..61be8593
Binary files /dev/null and b/website/static/img/quickstart-artifacts.png differ
diff --git a/website/static/img/submarine-model-registry.png b/website/static/img/submarine-model-registry.png
new file mode 100644
index 00000000..cf6f2819
Binary files /dev/null and b/website/static/img/submarine-model-registry.png differ
diff --git a/website/static/img/submarine-register-model.png b/website/static/img/submarine-register-model.png
new file mode 100644
index 00000000..a520be6c
Binary files /dev/null and b/website/static/img/submarine-register-model.png differ
diff --git a/website/static/img/submarine-serve-model.png b/website/static/img/submarine-serve-model.png
new file mode 100644
index 00000000..19683325
Binary files /dev/null and b/website/static/img/submarine-serve-model.png differ
diff --git a/website/static/img/submarine-serve-prediction.png b/website/static/img/submarine-serve-prediction.png
new file mode 100644
index 00000000..7286e639
Binary files /dev/null and b/website/static/img/submarine-serve-prediction.png differ
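
Note on the serving steps documented in this commit: the quickstart forwards `istio-ingressgateway` to local port 32080, and the `VirtualService` output lists the URI prefix `/seldon/submarine-user-test/1/1/`. A minimal sketch of how those two values might be combined into the base URL of the served model follows. The helper name `model_base_url` and the host `localhost` are assumptions made for illustration and are not part of the commit; the protocol-specific prediction path after the prefix is deliberately omitted, see `serve_predictions.py` for the actual request.

```python
def model_base_url(gateway: str, prefix: str) -> str:
    """Join a gateway address and a VirtualService URI prefix (hypothetical helper)."""
    # Normalise slashes so exactly one "/" separates the two parts and the
    # result ends with "/", like the prefix printed by kubectl.
    return gateway.rstrip("/") + "/" + prefix.strip("/") + "/"


if __name__ == "__main__":
    # Assumed values: port 32080 is taken from the quickstart port-forward,
    # the prefix from the `kubectl describe VirtualService` output.
    print(model_base_url("http://localhost:32080", "/seldon/submarine-user-test/1/1/"))
```

Taking the prefix as an opaque string avoids guessing what its trailing path segments mean; it can be copied from the `kubectl describe` output as is.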
