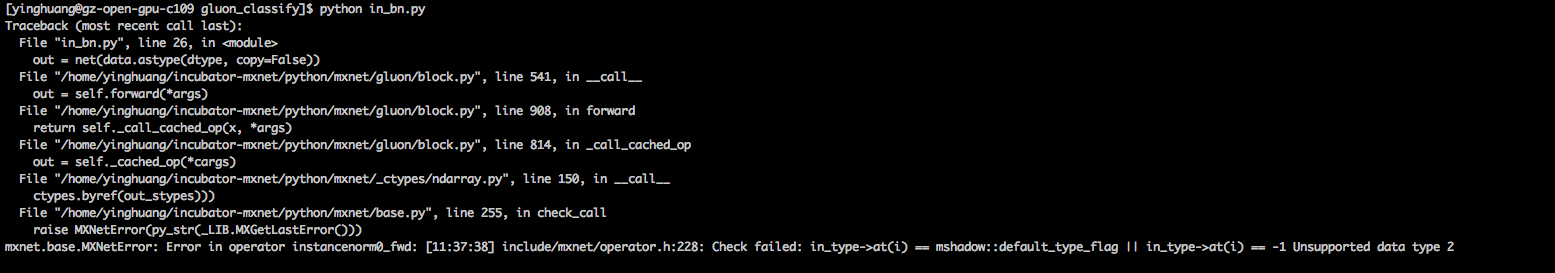

@ankkhedia Hi ankkhedia, thank you for the reply. However, ours is not a how-to question. We run float16 training with an InstanceNorm layer, but it fails with an error log like this.

I tried adding an inferType method to the InstanceNorm operator, but it still does not work. We found that the BN operator implements float16 training by using float32 as the actual computation precision. Will the InstanceNorm operator support float16 training in the same way?

[ Full content available at: https://github.com/apache/incubator-mxnet/issues/12302 ]
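To make the request concrete, here is a minimal NumPy sketch of the behavior we have in mind: float16 input and output, with the statistics and normalization computed in float32. This is not MXNet code. The function name `instance_norm_fp16`, the NCHW layout, and the `eps` value are assumptions made only for illustration.

```python
import numpy as np

def instance_norm_fp16(x, gamma, beta, eps=1e-3):
    """Hypothetical helper: instance norm on a float16 (N, C, ...) array,
    doing all internal math in float32 and casting the result back."""
    x32 = x.astype(np.float32)                  # upcast so mean/var do not lose precision
    axes = tuple(range(2, x32.ndim))            # spatial axes, per sample and per channel
    mean = x32.mean(axis=axes, keepdims=True)
    var = x32.var(axis=axes, keepdims=True)
    shape = (1, -1) + (1,) * (x32.ndim - 2)     # broadcast per-channel scale and shift
    g = gamma.astype(np.float32).reshape(shape)
    b = beta.astype(np.float32).reshape(shape)
    y32 = (x32 - mean) / np.sqrt(var + eps) * g + b
    return y32.astype(x.dtype)                  # downcast to the input dtype (float16)

x = np.random.RandomState(0).randn(2, 3, 8, 8).astype(np.float16)
y = instance_norm_fp16(x, np.ones(3, np.float16), np.zeros(3, np.float16))
```

The output keeps the float16 dtype of the input, so neighboring layers would still see float16 tensors; only the internal accumulation is widened.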