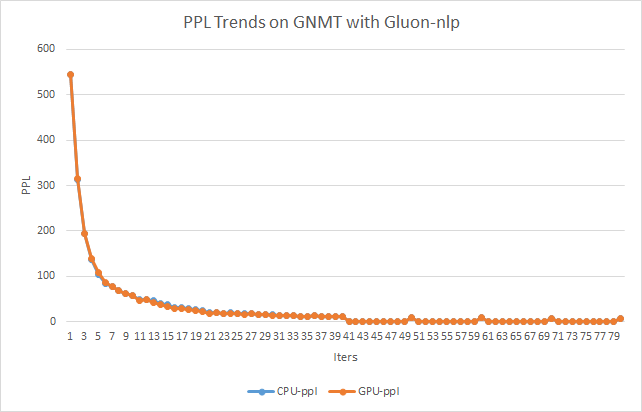

**RNN-related data, covering both accuracy and performance benchmarking.**

**Accuracy**

1. GNMT model implemented in gluon-nlp (`scripts/nmt/train_gnmt.py`), trained on the IWSLT2015 dataset for en-vi translation. The encoder and decoder are 2-layer LSTMs. The model implementation uses gluon.rnncell, which is an unfused kernel, so this run also covers the MKLDNN FC (FullyConnected) operator. The figure shows the perplexity (ppl) trends collected on GPU and on CPU with the same hyper-parameters. The two curves align very well.
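One way to make "the two curves align" concrete is sketched below. Perplexity is the exponential of the average per-token negative log-likelihood (cross-entropy). The two per-epoch ppl curves are then compared within a relative tolerance. This is a minimal sketch, not code from gluon-nlp or this PR. The function names and the 2% tolerance are hypothetical choices for illustration.

```python
import math


def perplexity(total_nll: float, num_tokens: int) -> float:
    """Perplexity = exp(average per-token negative log-likelihood)."""
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    return math.exp(total_nll / num_tokens)


def curves_aligned(ppl_a, ppl_b, rel_tol=0.02):
    """Return True if two per-epoch ppl curves agree within rel_tol at every epoch.

    Hypothetical helper: rel_tol=0.02 (2%) is an illustrative threshold,
    not a value taken from the original benchmark.
    """
    if len(ppl_a) != len(ppl_b):
        return False  # curves must cover the same number of epochs
    return all(math.isclose(a, b, rel_tol=rel_tol) for a, b in zip(ppl_a, ppl_b))


if __name__ == "__main__":
    # Toy inputs only (not measured results): a GPU-like and a CPU-like curve.
    gpu_curve = [100.0, 50.0]
    cpu_curve = [101.0, 50.5]
    print(curves_aligned(gpu_curve, cpu_curve))  # True: within 2% at each epoch
```

A relative tolerance fits here because perplexity values shrink by orders of magnitude over training. A fixed absolute threshold would be too loose late in training and too strict early on.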

[ Full content available at: https://github.com/apache/incubator-mxnet/pull/12591 ]