bkietz commented on a change in pull request #10364:

URL: https://github.com/apache/arrow/pull/10364#discussion_r646620882

##########

File path: cpp/src/arrow/compute/kernels/scalar_arithmetic.cc

##########

@@ -451,8 +604,45 @@ struct ArithmeticFunction : ScalarFunction {

if (auto kernel = DispatchExactImpl(this, *values)) return kernel;

return arrow::compute::detail::NoMatchingKernel(this, *values);

}

+

+ Result<const Kernel*> DispatchDecimal(std::vector<ValueDescr>* values) const

{

+ if (values->size() == 2) {

+ std::vector<std::shared_ptr<DataType>> replaced;

+ RETURN_NOT_OK(GetDecimalBinaryOutput(name(), *values, &replaced));

+ (*values)[0].type = std::move(replaced[0]);

+ (*values)[1].type = std::move(replaced[1]);

+ }

+

+ using arrow::compute::detail::DispatchExactImpl;

+ if (auto kernel = DispatchExactImpl(this, *values)) return kernel;

+ return arrow::compute::detail::NoMatchingKernel(this, *values);

+ }

+};

+

+// resolve decimal operation output type

+struct DecimalBinaryOutputResolver {

+ std::string func_name;

+

+ DecimalBinaryOutputResolver(std::string func_name) :

func_name(std::move(func_name)) {}

+

+ Result<ValueDescr> operator()(KernelContext*, const std::vector<ValueDescr>&

args) {

+ ARROW_ASSIGN_OR_RAISE(auto out_type, GetDecimalBinaryOutput(func_name,

args));

Review comment:

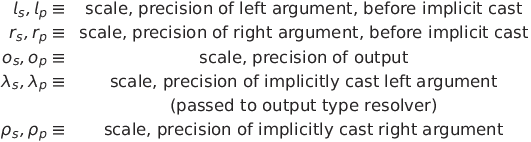

Breaking the definitions out for efficient algebra:

<details>

<pre>

l_s, l_p \equiv& \text{scale, precision of left argument, before implicit

cast} \\

r_s, r_p \equiv& \text{scale, precision of right argument, before implicit

cast} \\

o_s, o_p \equiv& \text{scale, precision of output} \\

\lambda_s, \lambda_p

\equiv&

\text{scale, precision of implicitly cast left argument} \\

&\text{(passed to output type resolver)} \\

\rho_s, \rho_p

\equiv& \text{scale, precision of implicitly cast right argument}

</pre>

</details>

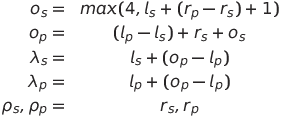

For division, the output precision and scale are:

<details>

<pre>

o_s =& max(4, l_s + (r_p - r_s) + 1) \\

o_p =& (l_p - l_s) + r_s + o_s \\

\lambda_s =& l_s + (o_p - l_p) \\

\lambda_p =& l_p + (o_p - l_p) \\

\rho_s, \rho_p =& r_s, r_p

</pre>

</details>

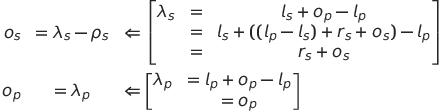

Since the post-implicit-cast types are the only types available to the

output type resolver, we need to compute the output scale and precision from

those implicitly cast types:

<details>

<pre>

o_s &= \lambda_s - \rho_s &\Leftarrow

\begin{bmatrix}

\lambda_s

&=& l_s + o_p - l_p \\

&=& l_s + ((l_p - l_s) + r_s + o_s) - l_p \\

&=& r_s + o_s

\end{bmatrix}

\\

o_p &= \lambda_p &\Leftarrow

\begin{bmatrix}

\lambda_p

&= l_p + o_p - l_p\\

&= o_p

\end{bmatrix}

</pre>

</details>

So in prose: the output precision is identical to the precision of the

implicitly cast left argument and the output scale is the implicitly cast left

argument's scale minus the right argument's scale. The output resolver can be

written as:

```c++

template <typename Op>

void AddDecimalBinaryKernels(const std::string& name,

std::shared_ptr<ArithmeticFunction>* func) {

OutputType out_type;

if (name.find("divide") == 0) {

out_type = [](KernelContext*, const std::vector<ValueDescr>& args) ->

Result<ValueDescr> {

// validation...

auto left = checked_cast<const DecimalType*>(args[0].type.get());

auto right = checked_cast<const DecimalType*>(args[1].type.get());

auto precision = left.precision();

auto scale = left.scale() - right.scale();

auto out_type = DecimalType::Make(left->id(), precision, scale);

return ValueDescr(std::move(out_type), GetBroadcastShape(args));

};

}

```

Similar resolvers can be written for the other operations. Does that make

sense?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

[email protected]