wenbingshen opened a new issue #2941: URL: https://github.com/apache/bookkeeper/issues/2941

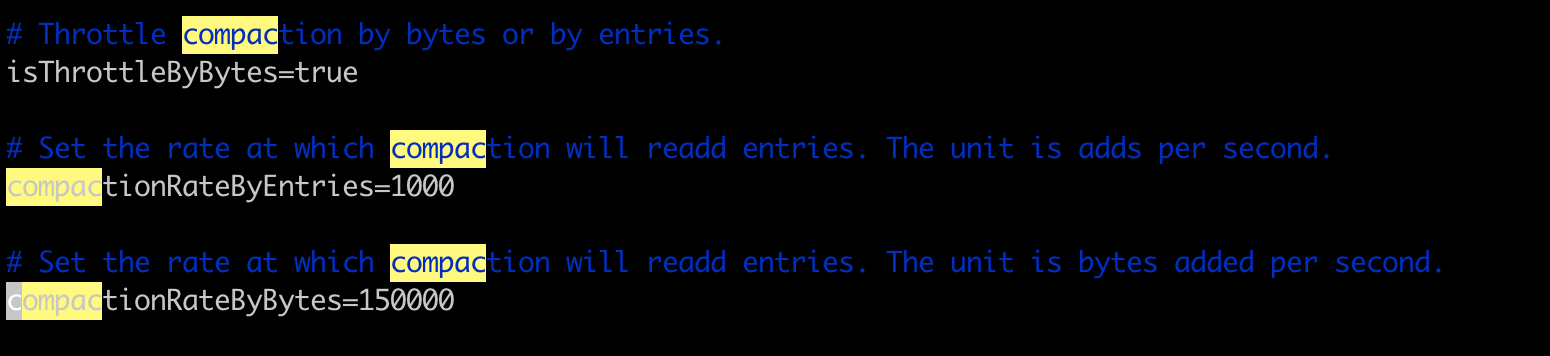

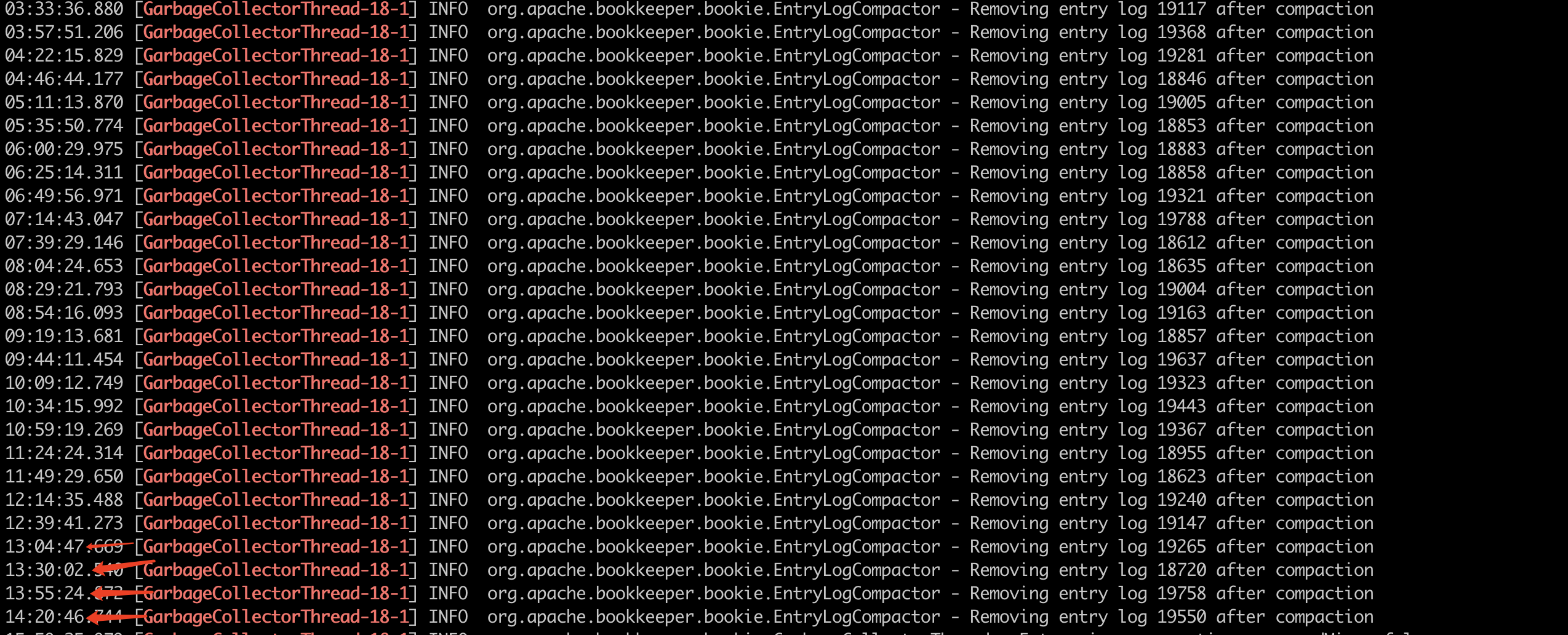

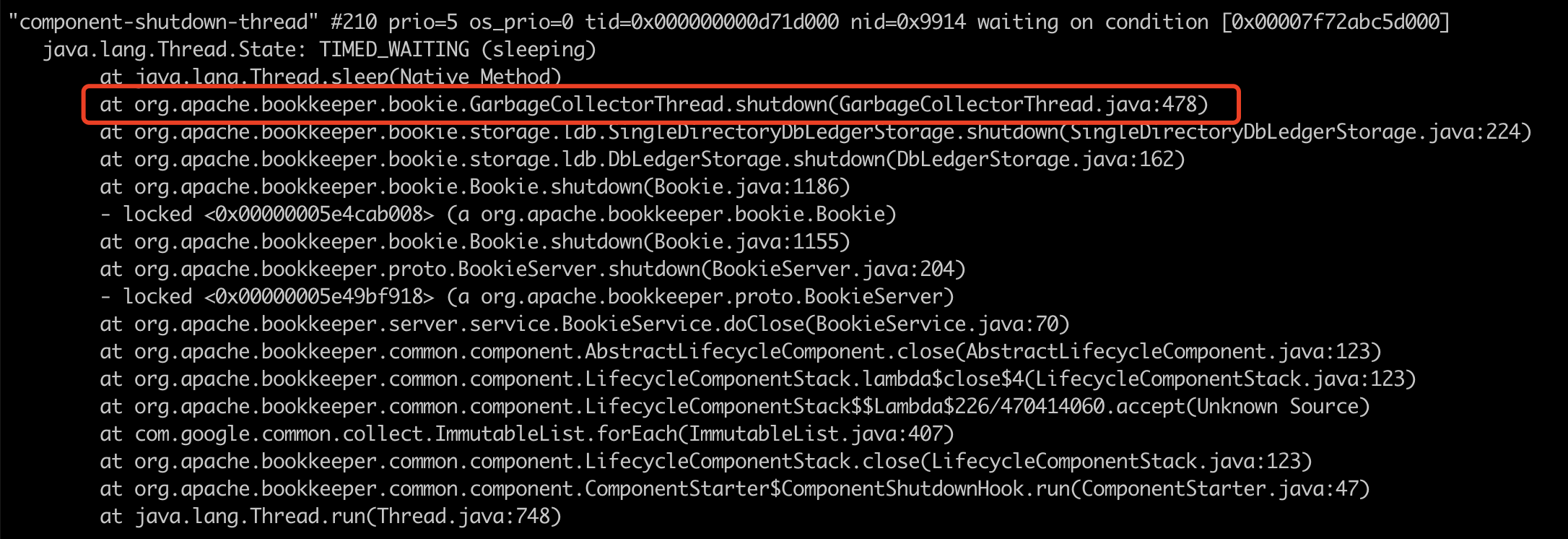

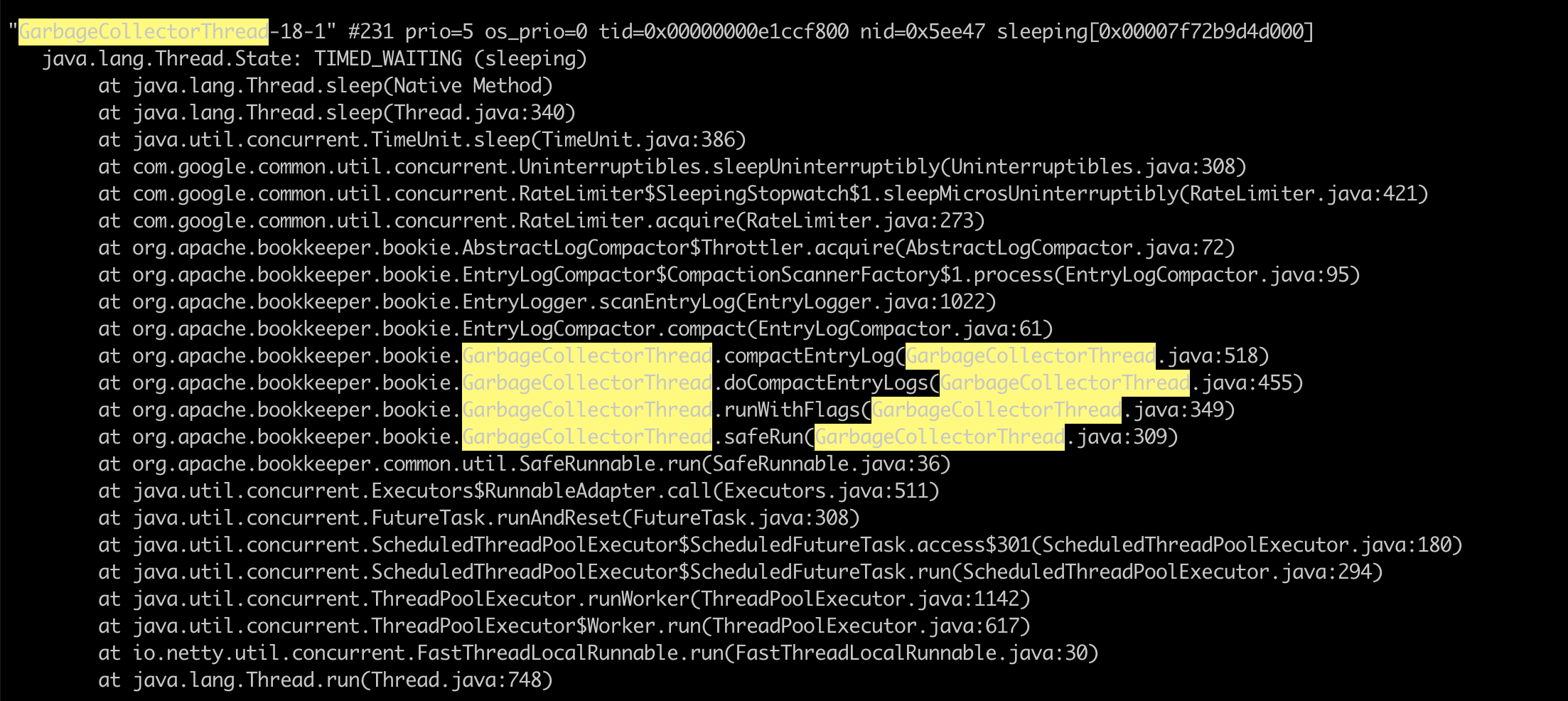

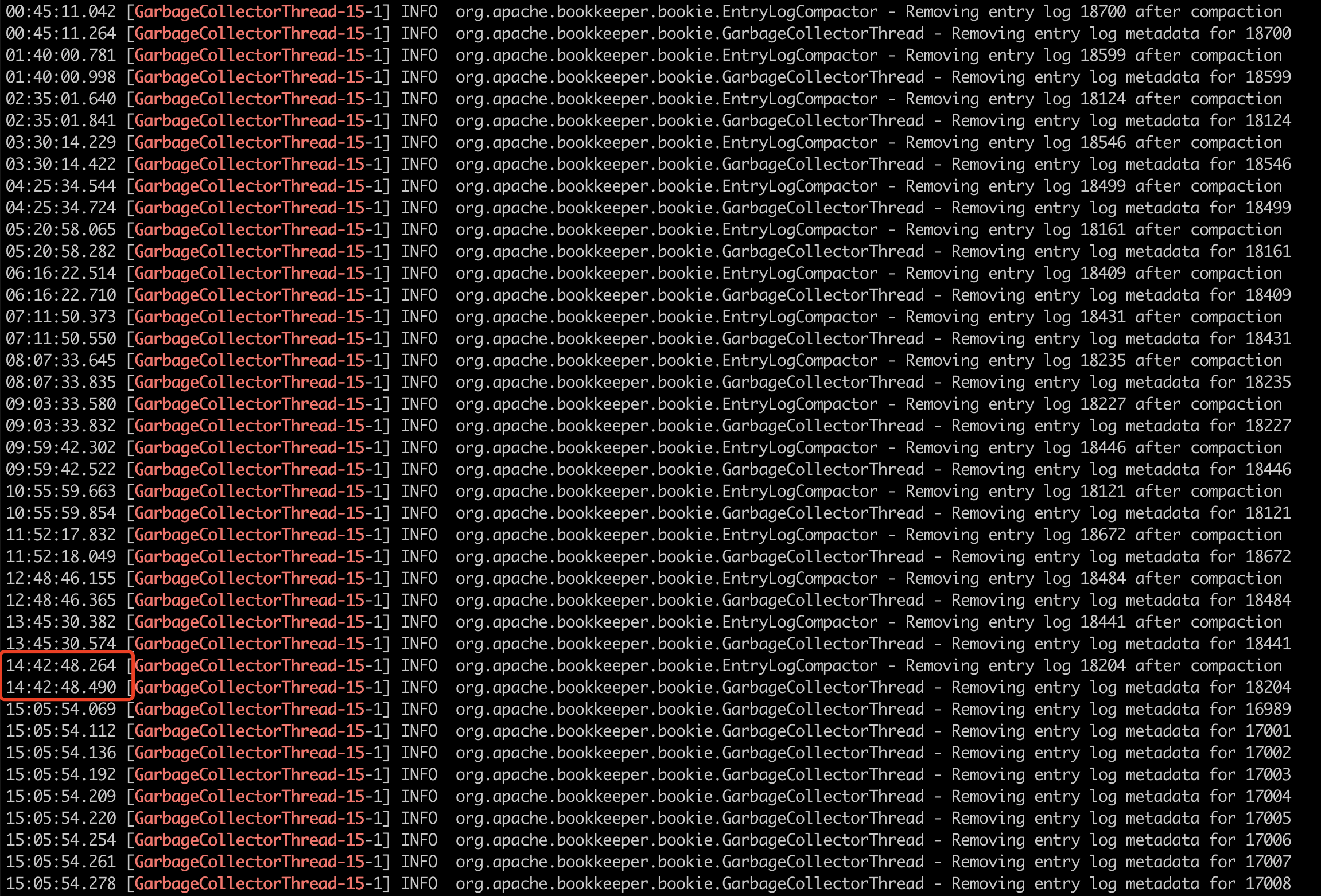

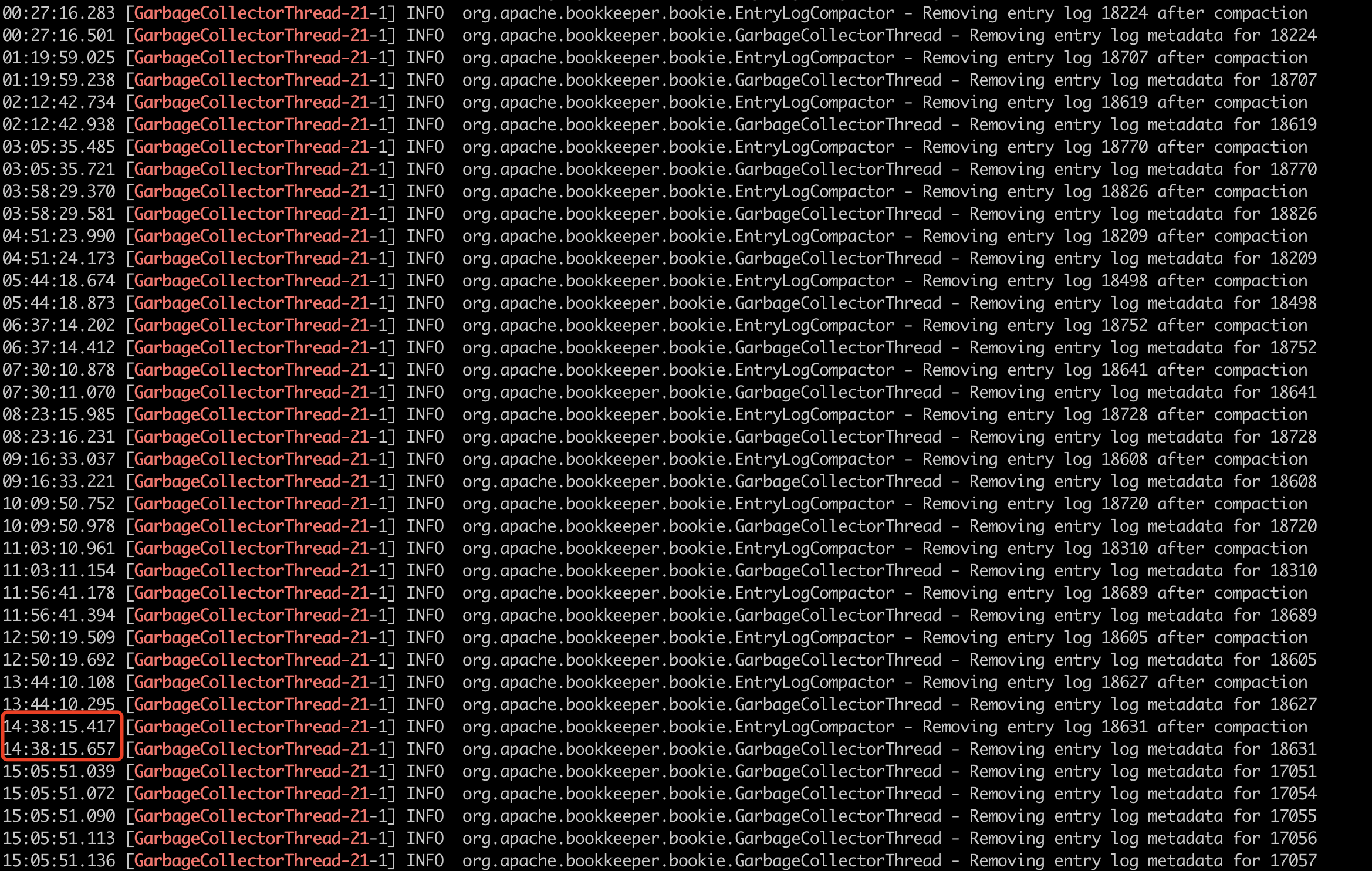

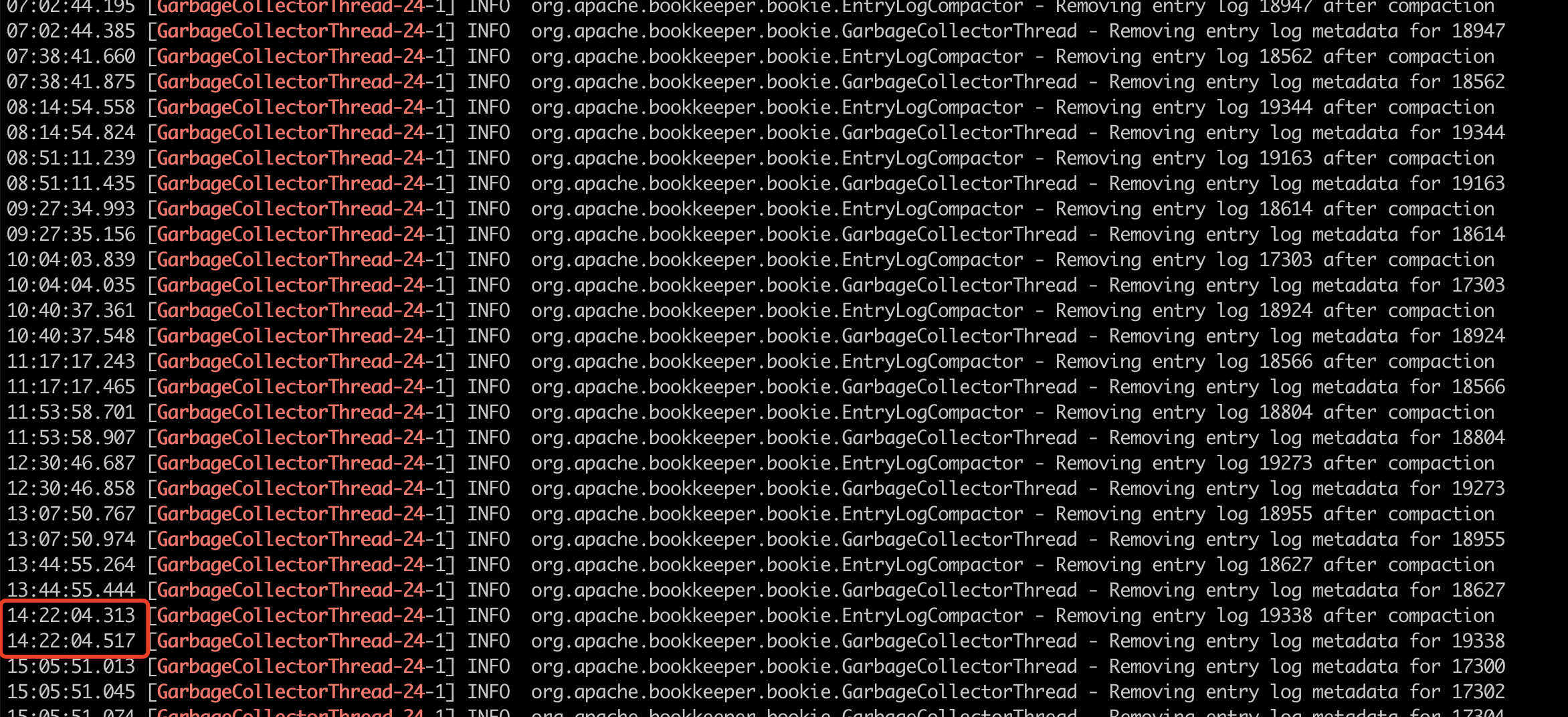

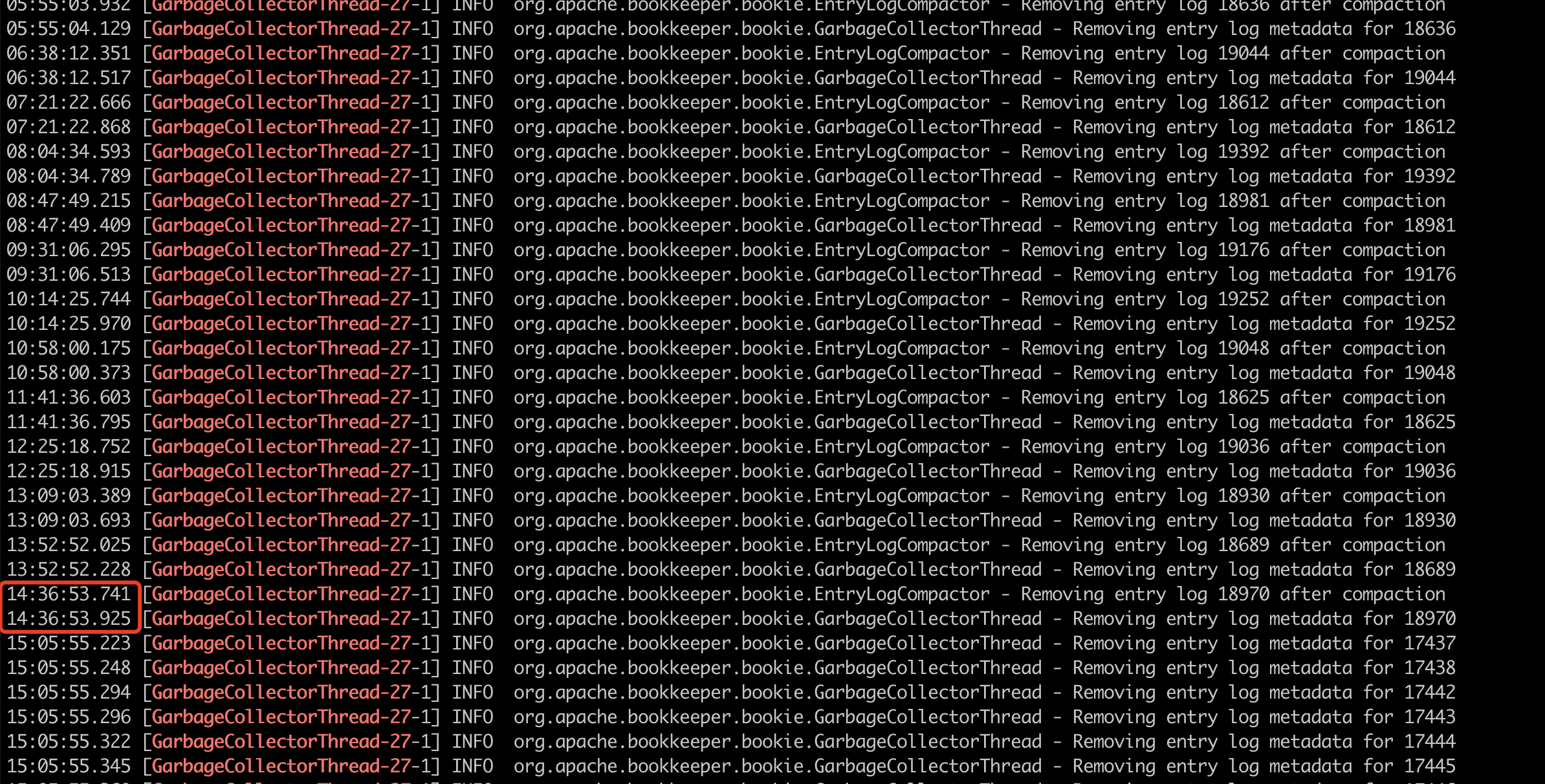

**BUG REPORT**

***Describe the bug***

Our compaction rate-limiting configuration is shown below. The configured rates are very low.

**bookkeeper.conf**

- `isThrottleByBytes=true`
- `compactionRateByEntries=1000`
- `compactionRateByBytes=150000`

Our BookKeeper cluster has been running stably, but when we needed to restart a bookie, it took half an hour to stop a single bookie node. The relevant logs are shown in the screenshots. While investigating the logs, we found that one major compaction task in a GC thread of the bookie had been running for two days without completing. Successive entry-log removals within that task were 20 to 30 minutes apart.

When we run the command-line tool to stop the bookie, the `component-shutdown-thread` starts shutting down components, but it stays blocked in the shutdown logic of the GC thread. Meanwhile, the `GarbageCollectorThread` remains blocked in `RateLimiter.acquire`.

We stopped the bookie at 14:10, but it did not actually stop until 14:42. The final stop time is determined by the last GC thread to return from the rate limiter:

- **`GarbageCollectorThread-15-1`** stopped at 14:42:48
- **`GarbageCollectorThread-21-1`** stopped at 14:38:15
- **`GarbageCollectorThread-24-1`** stopped at 14:22:04
- **`GarbageCollectorThread-27-1`** stopped at 14:36:53

***To Reproduce***

Steps to reproduce the behavior:

1. Use the same compaction rate-limiting configuration as ours.
2. Continuously write 30 MB/s of traffic.
3. After running for a few days, trigger the TTL on the Pulsar broker.
4. Try to shut down the bookie node.

***Expected behavior***

If the compactor is being throttled, shutdown should take priority over waiting in `RateLimiter.acquire`.
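The expected behavior can be illustrated with a minimal, self-contained Java sketch. It assumes the compactor's throttle is a token-bucket limiter such as Guava's `RateLimiter`, whose `acquire` sleeps uninterruptibly, so nothing short of the permit being granted ends the wait. The hypothetical `ShutdownAwareThrottle` class below is not a BookKeeper or Guava API. It waits on a `Condition` that `shutdown()` signals, so a thread blocked in `acquire` returns `false` as soon as shutdown begins.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

/**
 * Hypothetical shutdown-aware throttle for illustration only; this is not
 * BookKeeper's or Guava's API. Permits are granted at a fixed rate, and the
 * cost of each acquire is paid by the caller that comes after it.
 */
public class ShutdownAwareThrottle {
    private final long permitsPerSecond;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition stateChanged = lock.newCondition();
    private long nextFreeNanos = System.nanoTime(); // when the next reservation may start
    private boolean running = true;

    public ShutdownAwareThrottle(long permitsPerSecond) {
        if (permitsPerSecond <= 0) {
            throw new IllegalArgumentException("permitsPerSecond must be positive");
        }
        this.permitsPerSecond = permitsPerSecond;
    }

    /**
     * Blocks until the permits are available or shutdown() is called.
     * Returns true if the permits were granted, false if the throttle was shut down.
     */
    public boolean acquire(int permits) throws InterruptedException {
        lock.lock();
        try {
            long now = System.nanoTime();
            // Start after the previous reservation ends, or now if the throttle is idle.
            long start = (nextFreeNanos - now > 0) ? nextFreeNanos : now;
            nextFreeNanos = start + TimeUnit.SECONDS.toNanos(permits) / permitsPerSecond;
            long remaining = start - now;
            // awaitNanos releases the lock while waiting and wakes early on signalAll().
            while (running && remaining > 0) {
                remaining = stateChanged.awaitNanos(remaining);
            }
            return running;
        } finally {
            lock.unlock();
        }
    }

    /** Stops the throttle and wakes every thread blocked in acquire(). */
    public void shutdown() {
        lock.lock();
        try {
            running = false;
            stateChanged.signalAll();
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws Exception {
        ShutdownAwareThrottle throttle = new ShutdownAwareThrottle(1); // 1 permit per second
        // Granted immediately, but reserves the next hour of capacity.
        boolean first = throttle.acquire(3600);
        Thread gc = new Thread(() -> {
            try {
                // Without shutdown(), this call would block for about an hour.
                System.out.println("granted=" + throttle.acquire(1));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        long begin = System.nanoTime();
        gc.start();
        Thread.sleep(100);
        throttle.shutdown();
        gc.join();
        long elapsedMs = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - begin);
        System.out.println("first=" + first + ", worker released after " + elapsedMs + " ms");
    }
}
```

In the demo, the first `acquire(3600)` is granted immediately but books an hour of capacity at 1 permit/s, so the worker's next `acquire(1)` would otherwise block for about an hour; `shutdown()` releases it within milliseconds. A caller such as a compactor would still need to check the `false` result and abandon its current pass, which this sketch does not show.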