RaulGracia opened a new issue, #3258: URL: https://github.com/apache/bookkeeper/issues/3258

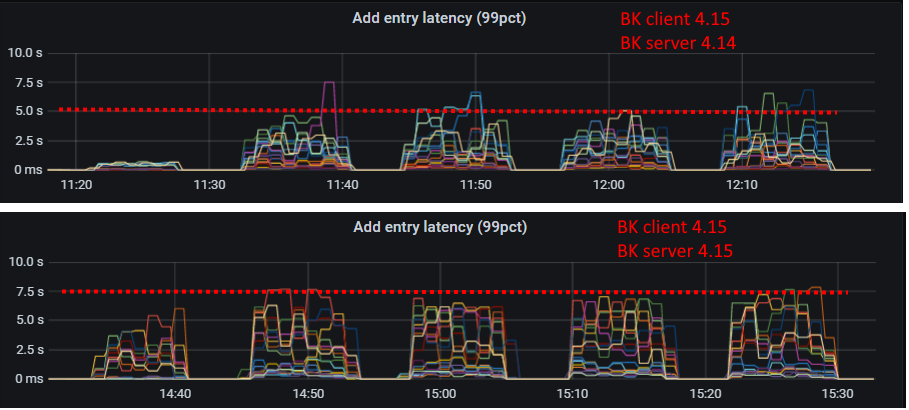

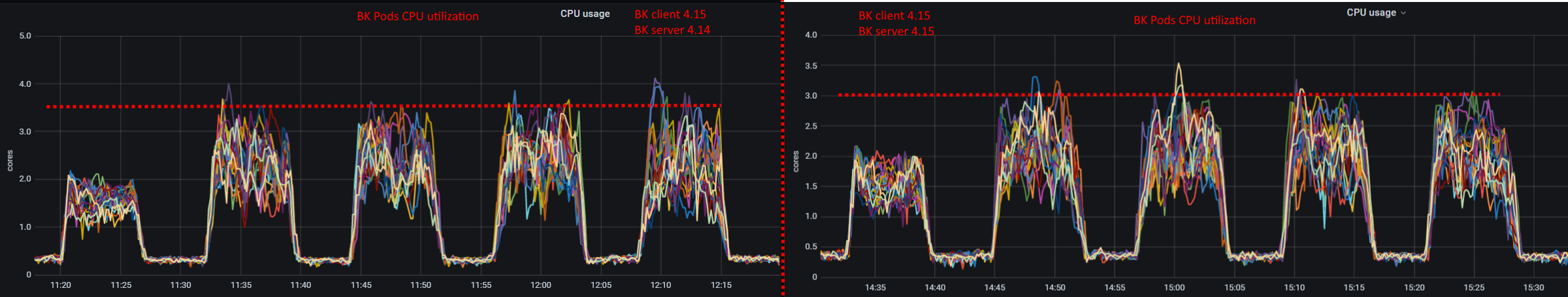

**BUG REPORT**

***Describe the bug***

We have run performance regression tests on the Bookkeeper 4.15 RC and compared it with Bookkeeper 4.14. We detected a write-throughput regression of around 20% in the new release candidate (the same regression may also affect current master).

We used the following configurations:

- BK client 4.14 / BK server 4.14
- BK client 4.15 / BK server 4.14
- BK client 4.15 / BK server 4.15

The problem is only visible when using the Bookkeeper 4.15 server, so we can rule out the client as the cause.

The benchmark consists of a write-only workload against a Pravega (https://github.com/pravega/pravega) cluster using the above combinations of Bookkeeper client and server. The Pravega and Bookkeeper configurations were kept the same across tests.

- The internal BK write latency metrics chart shows higher latency for Bookkeeper 4.15.
- In the BK drive stats we observed much lower IOPS for BK server 4.15, while the average write size on the drive is similar for both BK server versions.
- BK pod CPU utilization is slightly higher for BK 4.14 because of its higher throughput (this is expected).

***To Reproduce***

We ran the experiments on a high-end cluster (Kubernetes, 18 Bookies with 1 journal drive and 1 ledger drive, NVMes). However, the performance problem is likely to be visible in much smaller-scale tests as well.

***Expected behavior***

Bookkeeper 4.15 should be at least as fast as Bookkeeper 4.14.

***Screenshots***

Posted above.

***Additional context***

We will start searching for the commit that could have caused the regression. We will also provide profiling information to help fix the performance problem.

Credits to @OlegKashtanov.
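The drive-stats observation ties directly to the throughput drop. At a fixed average write size, drive-level bytes written per second scale linearly with IOPS, so lower IOPS at a similar write size means proportionally lower write throughput. The minimal Python sketch below shows that arithmetic. The function names and the numbers in the usage block are hypothetical placeholders, not measurements from this report.

```python
def drive_throughput(iops: float, avg_write_bytes: float) -> float:
    """Drive-level write throughput in bytes/s: operations per second times bytes per operation."""
    return iops * avg_write_bytes


def relative_change(baseline: float, candidate: float) -> float:
    """Fractional change of candidate vs. baseline (negative means a drop)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (candidate - baseline) / baseline


if __name__ == "__main__":
    # Hypothetical placeholder numbers, NOT measurements from this issue.
    avg_write = 256 * 1024  # same average write size for both server versions
    old = drive_throughput(iops=10_000, avg_write_bytes=avg_write)  # e.g. server 4.14
    new = drive_throughput(iops=8_000, avg_write_bytes=avg_write)   # e.g. server 4.15
    # With the write size held constant, the throughput change equals the IOPS change.
    print(f"{relative_change(old, new):+.0%}")  # prints "-20%"
```

Because the write size cancels out, a ratio of IOPS alone is enough to estimate the throughput regression, provided the average write size really is unchanged between versions.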