StephanEwen commented on pull request #15119:

URL: https://github.com/apache/flink/pull/15119#issuecomment-810455050

I only took a quick look at this.

The main thing I looked at is the performance critical path:

- the wrapping calls

- the counter (which determines whether to draw a sample).

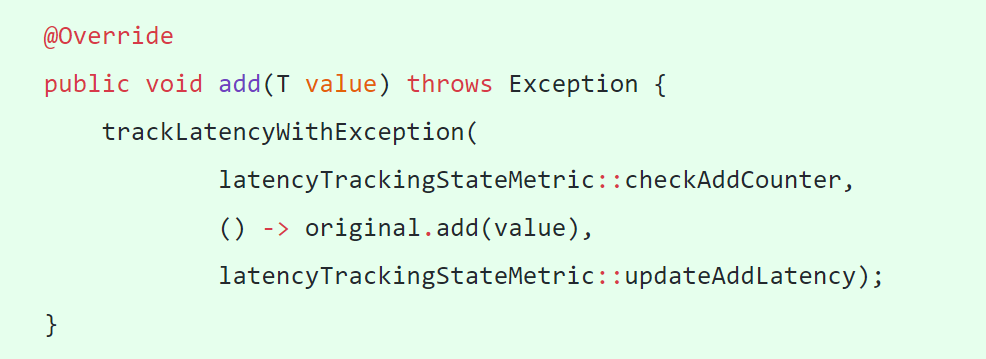

I think we can improve this. Let's look at this code here, for a list state

value adding.

If my knowledge of Java is correct, this created three capturing lambda

instances. The first and the third capture the reference to the instance of the

`StateLatencyMetricBase`, the second captures the `value` parameter. This means

three objects for every access here, regardless of whether we end up drawing

the sample. Maybe the JIT will do some magic stack allocation or so, otherwise

it remains overhead on every call.

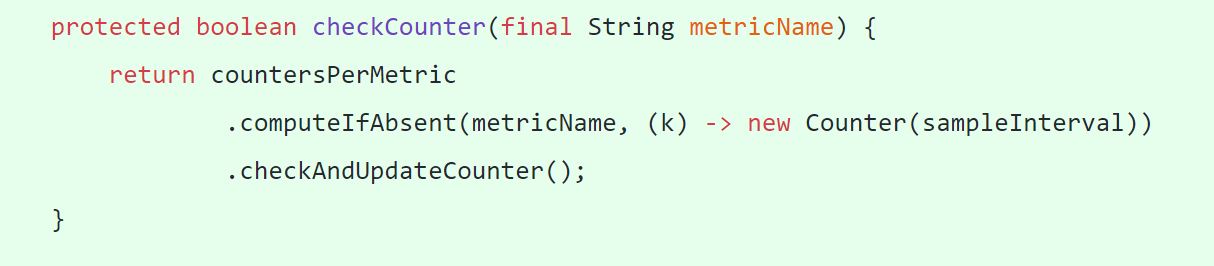

The next part is the counter to check whether it is sample time, here at the

example of checking the counter for "clear()".

Here we have a HashMap lookup every time, and also another capturing lambda

(object instantiation).

So every call now has four extra objects and one extra HashMap, and a bunch

of extra virtual method calls.

Again, maybe the JIT gets rid of some of that, but I am wondering if love of

lambdas and abstraction is a bit too far here, given that this is part of the

most performance critical code in the system.

What happens if we rewrite the code like this instead:

- Keep a primitive fields directly for each counter in the class where

action is defined

- Inline the counter checks, and only use lambdas for the actual sample

measurement

```java

private int clearCounter = sampleSize;

@Override

public void clear() {

if (--clearCounter > 0) {

original.clear();

} else {

clearCounter = sampleSize;

takeSample(originalState::clear, STATE_CLEAR_LATENCY);

}

}

```

Would be interesting to see if this can reduce the overhead. Probably most

measurable with the Heap Backend.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

[email protected]