GitHub user wenjin272 created a discussion: Memory Framework Investigation

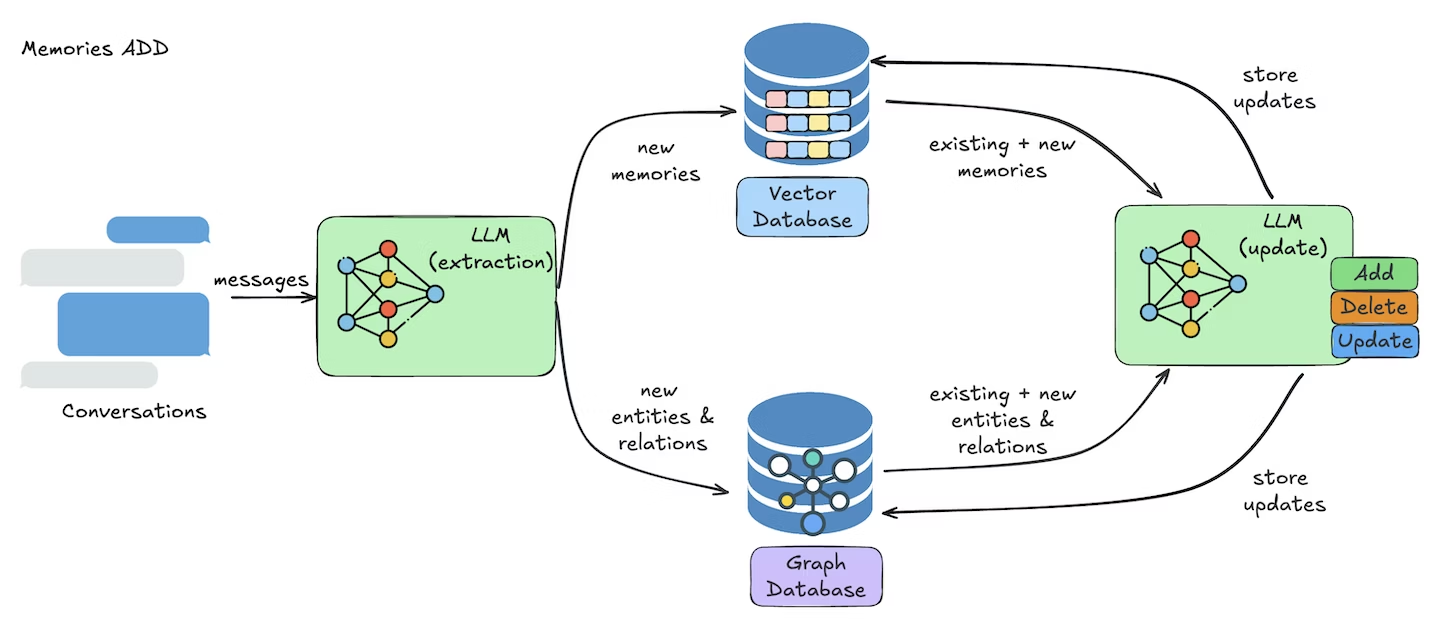

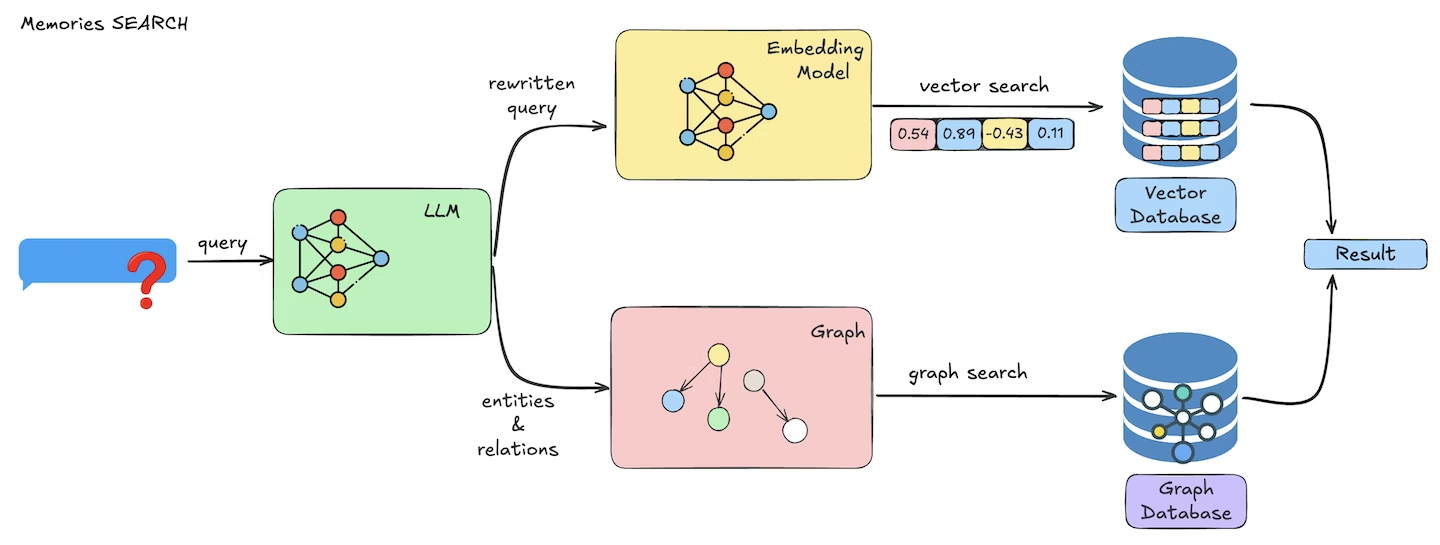

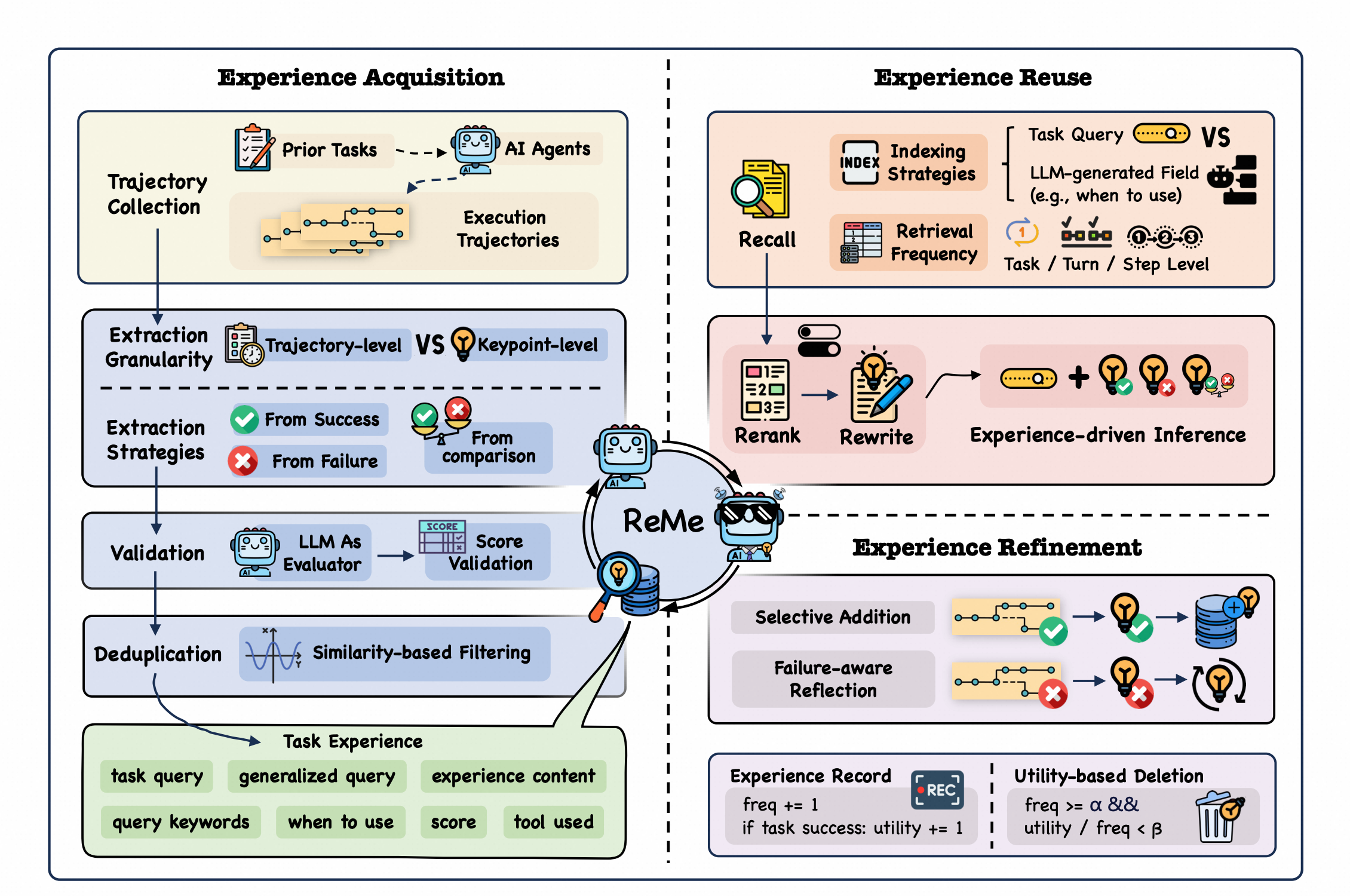

# Memory Framework Investigation

### Mem0 (46.6k stars)

Mem0 is an intelligent memory layer designed for AI agents, supporting both short-term and long-term memory.

#### Working principle

##### Add Memory

1. **Information extraction**: Mem0 sends the messages through an LLM that pulls out key facts, decisions, or preferences to remember.
2. **Conflict resolution**: Existing memories are checked for duplicates or contradictions so the latest truth wins.
3. **Storage**: The resulting memories land in managed vector storage (and optional graph storage) so future searches return them quickly.

##### Search Memory

1. **Query processing**: Mem0 cleans and enriches your natural-language query so the downstream embedding search is accurate.
2. **Vector search**: Embeddings locate the closest memories using cosine similarity across your scoped dataset.
3. **Filtering & reranking**: Logical filters narrow candidates; rerankers or thresholds fine-tune ordering.
4. **Results delivery**: Formatted memories (with metadata and timestamps) return to your agent or calling service.

#### Supported Operations

* add
* get by id/get all
* search
* update by id
* delete by id/delete all
* history: Displays the modification history of a memory item.

#### Using Mem0 as the Flink-Agents LTM backend

* **Dependency**: Currently, using Mem0 requires providing a **ChatModel**, an **EmbeddingModel**, and a **VectorStore**, which is identical to the existing implementation of Flink-Agents LTM.
* **Operation**: The supported operations **cover** the interfaces of Flink-Agents LTM.
* **Value Type**: Mem0 supports only **string** values. Flink-Agents currently supports both str and ChatMessage; in practice, however, ChatMessage is also serialized into str, so this is not a problem.
* **Visibility**:
  * You are allowed to set **user\_id**, **agent\_id**, and **run\_id**.
These three identifiers share the same namespace, meaning that the data is ultimately written into the same collection in the vector store. However, when performing operations (such as get\_all, delete\_all, or search), you can use user\_id, agent\_id, and run\_id to filter the data, ensuring that only data associated with the same user\_id, agent\_id, and run\_id is visible.

**In summary, implementing Flink-Agents’ LTM using Mem0 is feasible.**

### ReMe (939 stars)

ReMe (Remember Me, Refine Me) is a comprehensive framework for experience-driven agent evolution. [https://arxiv.org/pdf/2512.10696](https://arxiv.org/pdf/2512.10696)

ReMe views agent memory as:

```plaintext
Agent Memory = Long-Term Memory + Short-Term Memory
             = (Personal + Task + Tool) Memory + (Working Memory)
```

* **Personal Memory:** Understand user preferences and adapt to context
* **Task Memory:** Learn from experience and perform better on similar tasks
* **Tool Memory:** Optimize tool selection and parameter usage based on historical performance
* **Working Memory:** Manage short-term context for long-running agents without context overflow

#### Working principle

1. **Experience Acquisition:** The system first constructs the initial experience pool from the agent’s past trajectories.
2. **Reuse:** For new tasks, relevant experiences are recalled and reorganized to guide agent inference.
3. **Refinement:** After task execution, ReMe updates the pool, selectively adding new insights and removing outdated ones.

#### Supported Operations

ReMe provides a set of independent operations, each of which is a separate class rather than a method. By combining these operations, ReMe offers two flows: summary and retrieve. Take personal memory as an example.

##### summary\_personal\_memory

```yaml
summary_personal_memory:
  flow_content: info_filter_op >> (get_observation_op | get_observation_with_time_op | load_today_memory_op) >> contra_repeat_op >> update_vector_store_op
```

This flow performs the following operations:

1. `info_filter_op`: Filters incoming information to extract relevant personal details
2. Parallel paths for observation extraction:
   * `get_observation_op`: Extracts general observations
   * `get_observation_with_time_op`: Extracts observations with time context
   * `load_today_memory_op`: Loads memories from the current day
3. `contra_repeat_op`: Removes contradictions and repetitions
4. `update_vector_store_op`: Stores the processed memories in the vector database

##### retrieve\_personal\_memory

```yaml
retrieve_personal_memory:
  flow_content: set_query_op >> (extract_time_op | (retrieve_memory_op >> semantic_rank_op)) >> fuse_rerank_op
```

This flow performs the following operations:

1. `set_query_op`: Prepares the query for memory retrieval
2. Parallel paths:
   * `extract_time_op`: Extracts time-related information from the query
   * `retrieve_memory_op >> semantic_rank_op`: Retrieves memories and ranks them semantically
3. `fuse_rerank_op`: Combines and reranks the results for final output

#### As Flink-Agents LTM backend

Similar to Mem0, but it doesn't provide an interface for retrieving or deleting data by memory ID.

### LangMem (1.3k stars)

LangMem is similar to the long-term memory of LangGraph covered in our previous research, and it hasn't been updated for four months.

### AgentFS (2.2k stars)

AgentFS provides an abstract file system for Agent execution and also offers key-value storage capabilities.
On top of this foundation, it delivers the following features:

* **Copy-on-Write Isolation**: Run agents in sandboxed environments where changes are isolated from your source tree
* **Single File Storage**: Everything stored in one portable SQLite database for easy sharing and snapshotting
* **Built-in Auditing**: Every file operation is recorded and queryable
* **Cloud Sync**: Optionally sync agent state to Turso Cloud

I think the primary function of AgentFS is to serve as a sandbox, providing isolation, auditing, and state transition capabilities.

#### Store Memory in File

AgentFS provides KV read/write and file read/write capabilities, and supports storing context data such as session information. However, **it does not natively offer features like summary or similarity search**. If you use it as the backend for LTM, you’ll need to build these functionalities on top of it.

Some agents do store memory in files, such as the two general-purpose agent products Manus and OpenClaw.

* Manus treats the file system as an infinite context, and the Agent makes autonomous decisions to read and write files on demand. They argue that compression-based strategies can lead to information loss that is impossible to quantify, since information currently deemed insignificant might later turn out to be critically important. [https://manus.im/zh-cn/blog/Context-Engineering-for-AI-Agents-Lessons-from-Building-Manus](https://manus.im/zh-cn/blog/Context-Engineering-for-AI-Agents-Lessons-from-Building-Manus)
* OpenClaw generates some Markdown files locally:
  * `memory/YYYY-MM-DD.md`: Stores the raw daily conversation records as well as the compressed context. Each file, upon being saved, is also chunked and imported into sqlite-vec to enable vector retrieval and keyword-based search capabilities.
  * `Memory.md` and `User.md`: Knowledge such as facts and preferences is regularly extracted from the daily memory into these files.
  * One notable feature:
    * Hybrid Search: Vector Search + Keyword Search

GitHub link: https://github.com/apache/flink-agents/discussions/537
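
To make the "Hybrid Search: Vector Search + Keyword Search" idea from the OpenClaw notes more concrete, here is a minimal sketch in plain Python. It is not OpenClaw's or sqlite-vec's actual implementation: the trigram-hashing `embed` function is a toy stand-in for a real embedding model, and the names `hybrid_search`, `keyword_score`, and the `alpha` weight are all hypothetical, chosen only for illustration.

```python
import math
import re
import zlib


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def embed(text: str, dims: int = 64) -> list[float]:
    """Toy embedding (assumption): hash character trigrams into a count vector.

    A real system would call an embedding model here instead.
    """
    vec = [0.0] * dims
    lowered = text.lower()
    for i in range(len(lowered) - 2):
        bucket = zlib.crc32(lowered[i:i + 3].encode("utf-8")) % dims
        vec[bucket] += 1.0
    return vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, doc: str) -> float:
    """Fraction of distinct query terms that appear in the document."""
    query_terms = set(tokenize(query))
    if not query_terms:
        return 0.0
    return len(query_terms & set(tokenize(doc))) / len(query_terms)


def hybrid_search(query: str, docs: list[str], alpha: float = 0.5, top_k: int = 3):
    """Rank docs by a weighted sum of vector similarity and keyword overlap.

    alpha weights the vector part; (1 - alpha) weights the keyword part.
    Returns up to top_k (score, doc) pairs, best first.
    """
    query_vec = embed(query)
    scored = []
    for doc in docs:
        vector_part = cosine(query_vec, embed(doc))
        keyword_part = keyword_score(query, doc)
        scored.append((alpha * vector_part + (1 - alpha) * keyword_part, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

Calling `hybrid_search("dark mode", chunks)` on a list of memory chunks returns the best-scoring chunks first. A weighted sum is only one way to fuse the two signals; rank-based fusion is a common alternative, and which one suits an LTM backend would need to be evaluated separately.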