ashoksri0 opened a new issue #3526:

URL: https://github.com/apache/iceberg/issues/3526

I'm using Hadoop 3.x, Spark 3.1.2, Iceberg 0.12.0, and Trino 364.

I ingest the Parquet data with the code below:

`

import org.apache.spark.sql.SparkSession

def main(args: Array[String]): Unit = {
  // S3 bucket used both as the warehouse root and for object-storage data files
  val s3_url = "s3a://lwa-dev/"

  val spark = SparkSession
    .builder()
    .master("spark://Ashok:7077")
    .appName("SparkByExample")
    .appName("Spark Hive Example") // overrides the name set on the previous line
    .config("packages", "org.apache.iceberg:iceberg-spark3-runtime:0.12.0")
    .config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions")
    .config("spark.sql.catalog.spark_catalog", "org.apache.iceberg.spark.SparkSessionCatalog")
    .config("spark.sql.catalog.spark_catalog.type", "hive")
    .config("spark.sql.catalog.spark_catalog.warehouse", s3_url)
    .config("fs.s3a.access.key", "***********")
    .config("fs.s3a.secret.key", "**********")
    .config("fs.s3a.endpoint", "s3.amazonaws.com")
    .config("spark.sql.catalog.my_catalog.io-impl", "org.apache.iceberg.aws.s3.S3FileIO")
    .config("spark.sql.warehouse.dir", s3_url)
    .config("iceberg.engine.hive.enabled", "true")
    .enableHiveSupport()
    .getOrCreate()

  // Create the Iceberg table with the object-storage layout, partitioned by STATE and CITY
  spark.sql("CREATE TABLE spark_catalog.poc.address ( ADDR_ID bigint , IS_PROV boolean , ADDR_TYPE string , ADDRESS string , CITY string , " +
    "STATE string , ZIP long , PHONE string , FAX string , EMAIL string ) USING iceberg " +
    " OPTIONS ( 'write.object-storage.enabled'=true, 'write.object-storage.path'= '" + s3_url + "poc/address/data' ) PARTITIONED BY (STATE,CITY)")

  // Read the staged Parquet files from HDFS and append them to the Iceberg table
  val df = spark.read.parquet("hdfs://localhost:54310/stage/ADDRESS")
  df.writeTo("spark_catalog.poc.address").append()
}

`

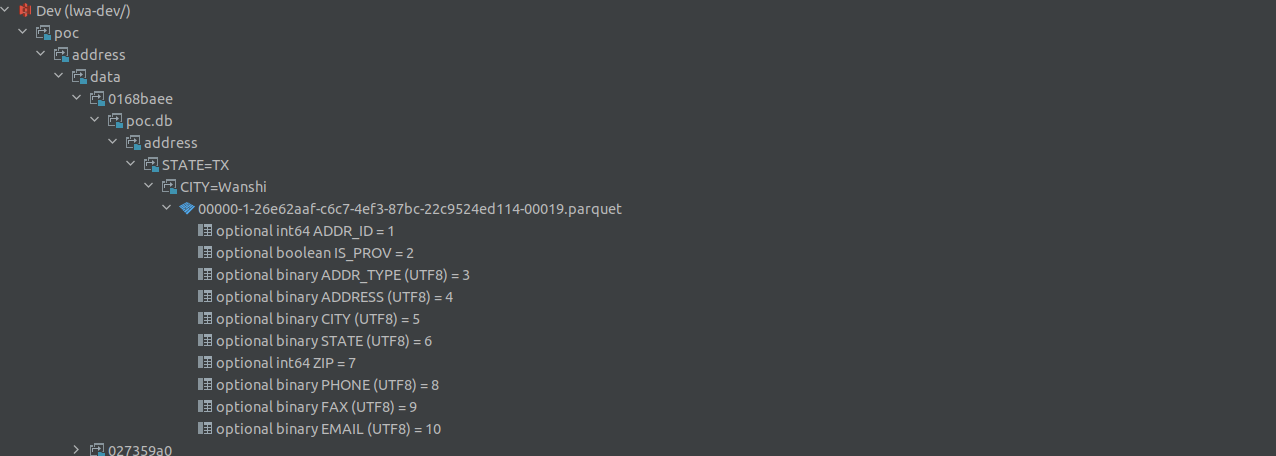

This is what ends up in S3 after ingestion (screenshot above).

When I try to read the table with Trino, I get the following error:

`

org.jkiss.dbeaver.model.exec.DBCException: SQL Error [16777232]: Query failed (#20211110_115905_00010_3gg39): Error reading tail from s3a://lwa-dev/poc/address/data/4807d66c/poc.db/address/STATE=MD/CITY=Melres/00000-1-26e62aaf-c6c7-4ef3-87bc-22c9524ed114-00043.parquet with length 3014
    at org.jkiss.dbeaver.model.impl.jdbc.exec.JDBCResultSetImpl.nextRow(JDBCResultSetImpl.java:183)
    at org.jkiss.dbeaver.model.impl.jdbc.struct.JDBCTable.readData(JDBCTable.java:198)
    at org.jkiss.dbeaver.ui.controls.resultset.ResultSetJobDataRead.lambda$0(ResultSetJobDataRead.java:118)
    at org.jkiss.dbeaver.model.exec.DBExecUtils.tryExecuteRecover(DBExecUtils.java:167)
    at org.jkiss.dbeaver.ui.controls.resultset.ResultSetJobDataRead.run(ResultSetJobDataRead.java:116)
    at org.jkiss.dbeaver.ui.controls.resultset.ResultSetViewer$ResultSetDataPumpJob.run(ResultSetViewer.java:4522)
    at org.jkiss.dbeaver.model.runtime.AbstractJob.run(AbstractJob.java:105)
    at org.eclipse.core.internal.jobs.Worker.run(Worker.java:63)
Caused by: java.sql.SQLException: Query failed (#20211110_115905_00010_3gg39): Error reading tail from s3a://lwa-dev/poc/address/data/4807d66c/poc.db/address/STATE=MD/CITY=Melres/00000-1-26e62aaf-c6c7-4ef3-87bc-22c9524ed114-00043.parquet with length 3014
    at io.trino.jdbc.AbstractTrinoResultSet.resultsException(AbstractTrinoResultSet.java:1912)
    at io.trino.jdbc.TrinoResultSet$ResultsPageIterator.computeNext(TrinoResultSet.java:219)
    at io.trino.jdbc.TrinoResultSet$ResultsPageIterator.computeNext(TrinoResultSet.java:179)
    at io.trino.jdbc.$internal.guava.collect.AbstractIterator.tryToComputeNext(AbstractIterator.java:141)
    at io.trino.jdbc.$internal.guava.collect.AbstractIterator.hasNext(AbstractIterator.java:136)
    at java.base/java.util.Spliterators$IteratorSpliterator.tryAdvance(Spliterators.java:1811)
    at java.base/java.util.stream.StreamSpliterators$WrappingSpliterator.lambda$initPartialTraversalState$0(StreamSpliterators.java:294)
    at java.base/java.util.stream.StreamSpliterators$AbstractWrappingSpliterator.fillBuffer(StreamSpliterators.java:206)
    at java.base/java.util.stream.StreamSpliterators$AbstractWrappingSpliterator.doAdvance(StreamSpliterators.java:161)
    at java.base/java.util.stream.StreamSpliterators$WrappingSpliterator.tryAdvance(StreamSpliterators.java:300)
    at java.base/java.util.Spliterators$1Adapter.hasNext(Spliterators.java:681)
    at io.trino.jdbc.TrinoResultSet$AsyncIterator.lambda$new$0(TrinoResultSet.java:125)
    at java.base/java.util.concurrent.CompletableFuture$AsyncRun.run(CompletableFuture.java:1736)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
    at java.base/java.lang.Thread.run(Thread.java:829)
Caused by: io.trino.spi.TrinoException: Error reading tail from s3a://lwa-dev/poc/address/data/4807d66c/poc.db/address/STATE=MD/CITY=Melres/00000-1-26e62aaf-c6c7-4ef3-87bc-22c9524ed114-00043.parquet with length 3014
    at io.trino.plugin.hive.parquet.HdfsParquetDataSource.readTail(HdfsParquetDataSource.java:113)
    at io.trino.parquet.reader.MetadataReader.readFooter(MetadataReader.java:94)
    at io.trino.plugin.iceberg.IcebergPageSourceProvider.lambda$createParquetPageSource$10(IcebergPageSourceProvider.java:472)
    at io.trino.plugin.hive.authentication.NoHdfsAuthentication.doAs(NoHdfsAuthentication.java:25)
    at io.trino.plugin.hive.HdfsEnvironment.doAs(HdfsEnvironment.java:97)
    at io.trino.plugin.iceberg.IcebergPageSourceProvider.createParquetPageSource(IcebergPageSourceProvider.java:472)
    at io.trino.plugin.iceberg.IcebergPageSourceProvider.createDataPageSource(IcebergPageSourceProvider.java:237)
    at io.trino.plugin.iceberg.IcebergPageSourceProvider.createPageSource(IcebergPageSourceProvider.java:178)
    at io.trino.plugin.base.classloader.ClassLoaderSafeConnectorPageSourceProvider.createPageSource(ClassLoaderSafeConnectorPageSourceProvider.java:49)
    at io.trino.split.PageSourceManager.createPageSource(PageSourceManager.java:68)
    at io.trino.operator.TableScanOperator.getOutput(TableScanOperator.java:308)
    at io.trino.operator.Driver.processInternal(Driver.java:388)
    at io.trino.operator.Driver.lambda$processFor$9(Driver.java:292)
    at io.trino.operator.Driver.tryWithLock(Driver.java:685)
    at io.trino.operator.Driver.processFor(Driver.java:285)
    at io.trino.execution.SqlTaskExecution$DriverSplitRunner.processFor(SqlTaskExecution.java:1078)
    at io.trino.execution.executor.PrioritizedSplitRunner.process(PrioritizedSplitRunner.java:163)
    at io.trino.execution.executor.TaskExecutor$TaskRunner.run(TaskExecutor.java:484)
    at io.trino.$gen.Trino_364____20211110_115659_2.run(Unknown Source)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
    at java.base/java.lang.Thread.run(Thread.java:829)
Caused by: io.trino.plugin.hive.s3.TrinoS3FileSystem.UnrecoverableS3OperationException: com.amazonaws.services.s3.model.AmazonS3Exception: The specified bucket does not exist (Service: Amazon S3; Status Code: 404; Error Code: NoSuchBucket; Request ID: 7Q5TMG8B3CWVXD2B; S3 Extended Request ID: c7Kj3MTJEWqk5+6n/JG+JZAEb6Vc6k19PHKvrd5geKDWaT9Dx/tDN1SXo5WUIddXT2jCJ/Bgncg=; Proxy: null), S3 Extended Request ID: c7Kj3MTJEWqk5+6n/JG+JZAEb6Vc6k19PHKvrd5geKDWaT9Dx/tDN1SXo5WUIddXT2jCJ/Bgncg= (Path: s3a://lwa-dev/poc/address/data/4807d66c/poc.db/address/STATE=MD/CITY=Melres/00000-1-26e62aaf-c6c7-4ef3-87bc-22c9524ed114-00043.parquet)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.lambda$openStream$2(TrinoS3FileSystem.java:1295)
    at io.trino.plugin.hive.util.RetryDriver.run(RetryDriver.java:130)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.openStream(TrinoS3FileSystem.java:1273)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.openStream(TrinoS3FileSystem.java:1258)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.seekStream(TrinoS3FileSystem.java:1251)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.lambda$read$1(TrinoS3FileSystem.java:1195)
    at io.trino.plugin.hive.util.RetryDriver.run(RetryDriver.java:130)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.read(TrinoS3FileSystem.java:1194)
    at java.base/java.io.BufferedInputStream.fill(BufferedInputStream.java:252)
    at java.base/java.io.BufferedInputStream.read1(BufferedInputStream.java:292)
    at java.base/java.io.BufferedInputStream.read(BufferedInputStream.java:351)
    at java.base/java.io.DataInputStream.read(DataInputStream.java:149)
    at java.base/java.io.DataInputStream.read(DataInputStream.java:149)
    at io.trino.plugin.hive.util.FSDataInputStreamTail.readTail(FSDataInputStreamTail.java:59)
    at io.trino.plugin.hive.parquet.HdfsParquetDataSource.readTail(HdfsParquetDataSource.java:109)
    ... 21 more
Caused by: com.amazonaws.services.s3.model.AmazonS3Exception: The specified bucket does not exist (Service: Amazon S3; Status Code: 404; Error Code: NoSuchBucket; Request ID: 7Q5TMG8B3CWVXD2B; S3 Extended Request ID: c7Kj3MTJEWqk5+6n/JG+JZAEb6Vc6k19PHKvrd5geKDWaT9Dx/tDN1SXo5WUIddXT2jCJ/Bgncg=; Proxy: null)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.handleErrorResponse(AmazonHttpClient.java:1862)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.handleServiceErrorResponse(AmazonHttpClient.java:1415)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeOneRequest(AmazonHttpClient.java:1384)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeHelper(AmazonHttpClient.java:1154)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.doExecute(AmazonHttpClient.java:811)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeWithTimer(AmazonHttpClient.java:779)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.execute(AmazonHttpClient.java:753)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutor.access$500(AmazonHttpClient.java:713)
    at com.amazonaws.http.AmazonHttpClient$RequestExecutionBuilderImpl.execute(AmazonHttpClient.java:695)
    at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:559)
    at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:539)
    at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5445)
    at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5392)
    at com.amazonaws.services.s3.AmazonS3Client.getObject(AmazonS3Client.java:1520)
    at io.trino.plugin.hive.s3.TrinoS3FileSystem$TrinoS3InputStream.lambda$openStream$2(TrinoS3FileSystem.java:1278)
    ... 35 more

`
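
The innermost cause in the trace is `NoSuchBucket` for the data file path that the table metadata points to. As a small debugging aid, the sketch below splits that path into the bucket name and object key it encodes, using only `java.net.URI`. The names `S3PathParts` and `split` are hypothetical and are not part of Iceberg, Spark, or Trino. This only shows what the path itself contains; it does not reproduce how Trino resolves buckets, regions, or endpoints.

```scala
import java.net.URI

// Hypothetical helper for illustration only; not an Iceberg, Spark, or Trino API.
object S3PathParts {

  /** Splits an s3/s3a/s3n URI into (bucket, key). */
  def split(path: String): (String, String) = {
    val uri = new URI(path)
    require(
      uri.getScheme != null && uri.getScheme.startsWith("s3"),
      s"not an S3 URI: $path"
    )
    // In s3a://bucket/key the URI authority is the bucket name.
    val bucket = uri.getAuthority
    // Drop the leading '/' so the remainder is the object key.
    val key = uri.getPath.stripPrefix("/")
    (bucket, key)
  }

  def main(args: Array[String]): Unit = {
    // Path copied from the error message above.
    val failing =
      "s3a://lwa-dev/poc/address/data/4807d66c/poc.db/address/STATE=MD/CITY=Melres/" +
        "00000-1-26e62aaf-c6c7-4ef3-87bc-22c9524ed114-00043.parquet"
    val (bucket, key) = split(failing)
    println(s"bucket = $bucket")
    println(s"key    = $key")
  }
}
```

For the path in the error this yields the bucket `lwa-dev`, the same bucket Spark wrote to through `s3_url`, so the bucket name in the path matches the one configured for ingestion.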

--

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.
