hit-lacus commented on pull request #1351: URL: https://github.com/apache/kylin/pull/1351#issuecomment-671059037

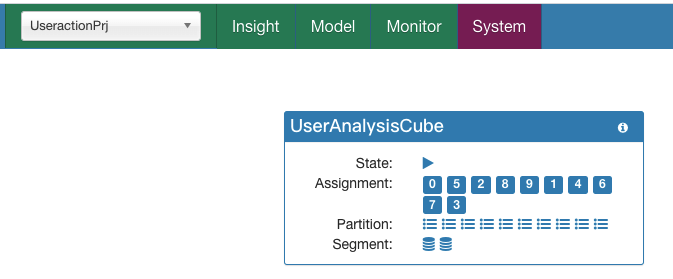

Let's run a test to see what we should do when we need to scale up a topic's partitions. Suppose we have a topic `useraction_xxyu` with ten partitions.

<img width="1336" alt="image" src="https://user-images.githubusercontent.com/14030549/89734596-ae705f80-da8f-11ea-8777-afaad8556817.png">

```sh
[root@cdh-client xiaoxiang.yu]# kafka-topics --describe --topic useraction_xxyu --zookeeper cdh-master:2181
... ...
20/08/09 22:19:36 INFO zookeeper.ZooKeeperClient: [ZooKeeperClient] Connected.
Topic:useraction_xxyu   PartitionCount:10   ReplicationFactor:1   Configs:
    Topic: useraction_xxyu   Partition: 0   Leader: 116   Replicas: 116   Isr: 116
    Topic: useraction_xxyu   Partition: 1   Leader: 114   Replicas: 114   Isr: 114
    Topic: useraction_xxyu   Partition: 2   Leader: 115   Replicas: 115   Isr: 115
    Topic: useraction_xxyu   Partition: 3   Leader: 116   Replicas: 116   Isr: 116
    Topic: useraction_xxyu   Partition: 4   Leader: 114   Replicas: 114   Isr: 114
    Topic: useraction_xxyu   Partition: 5   Leader: 115   Replicas: 115   Isr: 115
    Topic: useraction_xxyu   Partition: 6   Leader: 116   Replicas: 116   Isr: 116
    Topic: useraction_xxyu   Partition: 7   Leader: 114   Replicas: 114   Isr: 114
    Topic: useraction_xxyu   Partition: 8   Leader: 115   Replicas: 115   Isr: 115
    Topic: useraction_xxyu   Partition: 9   Leader: 116   Replicas: 116   Isr: 116
```