hit-lacus edited a comment on pull request #1485: URL: https://github.com/apache/kylin/pull/1485#issuecomment-737050880

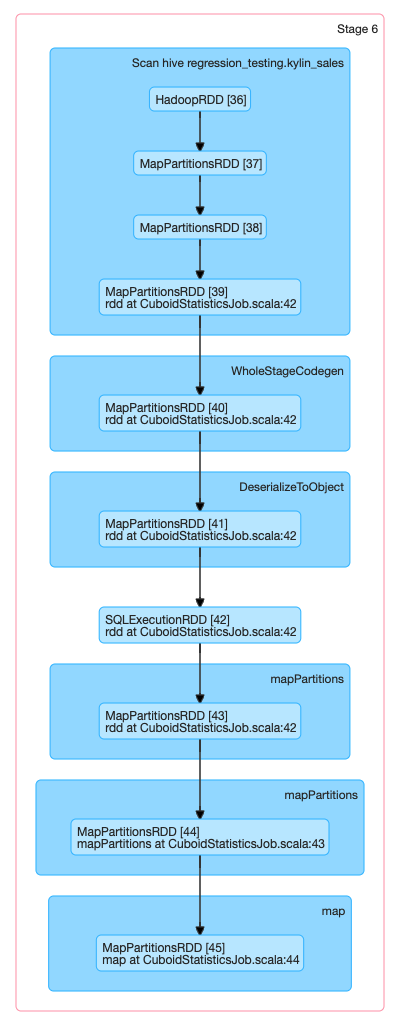

## Summary

- Use Spark to calculate each cuboid's HLLCounter for the first segment and persist it to HDFS.
- Re-enable cube planner by default, but without support for cube planner phase two.
- Do not merge cuboid statistics (HLLCounter) when merging segments.
- By default, only calculate cuboid statistics for the **FIRST** segment. (Statistics for later segments are not necessary because phase two is not supported.)
- Cuboid statistics for HLLCounter use precision **14**.
- Cuboid statistics are calculated from **100%** of the input flat table data. (A sample of the input RDD may be used in the future.)
- Persist LayoutEntity (cuboid, row count, bytes) to HDFS.

## Test result

### Cuboid purge result

- Before purge

<img width="1071" alt="image" src="https://user-images.githubusercontent.com/14030549/101027090-a5f9e280-35b2-11eb-834f-586e0b361d93.png">

- After cube planner phase one

<img width="1007" alt="image" src="https://user-images.githubusercontent.com/14030549/101041364-59fd6c80-35b7-11eb-9746-3ab39a1d03fb.png">

- Building Job

<img width="1392" alt="image" src="https://user-images.githubusercontent.com/14030549/101041987-a21c8f00-35b7-11eb-834a-3032378b4a88.png">

- Segment Info

<img width="1070" alt="image" src="https://user-images.githubusercontent.com/14030549/101042032-aea0e780-35b7-11eb-98e7-394cd43f8e99.png">

### Spark UI for the newly added Spark job

<img width="747" alt="image" src="https://user-images.githubusercontent.com/14030549/101045288-2290bf00-35bb-11eb-9492-d5aeae33c843.png">
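
### Illustration: what HLL precision 14 implies

As a rough illustration of the cuboid statistics described in the summary, here is a minimal single-process sketch in plain Python. It is not the Spark job from this pull request and not Kylin's `HLLCounter`: `TinyHLL`, `standard_error`, and `cuboid_row_counts` are hypothetical names, the SHA-1 based hash is an assumption, and cuboids are represented as tuples of column indexes purely for readability. It only shows that precision 14 means 2^14 = 16384 registers, a theoretical relative error of about 1.04 / sqrt(16384) ≈ 0.81%, and that a cuboid's row count is the number of distinct dimension-value combinations in the flat table.

```python
import hashlib
import math
from itertools import combinations

PRECISION = 14  # precision stated in the summary


class TinyHLL:
    """Toy HyperLogLog counter (illustrative only, not Kylin's HLLCounter)."""

    def __init__(self, precision=PRECISION):
        self.p = precision
        self.m = 1 << precision            # 2^14 = 16384 registers
        self.registers = bytearray(self.m)

    def add(self, value):
        # Assumed hash: first 64 bits of SHA-1; a real implementation differs.
        digest = hashlib.sha1(value.encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")
        idx = h >> (64 - self.p)                      # top p bits pick a register
        rest = h & ((1 << (64 - self.p)) - 1)         # remaining 64 - p bits
        # 1-based position of the first 1-bit in the remaining bits
        rank = (64 - self.p) - rest.bit_length() + 1
        if rank > self.registers[idx]:
            self.registers[idx] = rank

    def estimate(self):
        alpha = 0.7213 / (1 + 1.079 / self.m)
        raw = alpha * self.m * self.m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * self.m and zeros:
            # Linear counting correction for small cardinalities
            return self.m * math.log(self.m / zeros)
        return raw


def standard_error(precision=PRECISION):
    """Theoretical relative standard error of HyperLogLog: 1.04 / sqrt(m)."""
    return 1.04 / math.sqrt(1 << precision)


def cuboid_row_counts(rows, n_dims):
    """Estimate the row count of every cuboid (non-empty subset of dimensions)."""
    counters = {
        cuboid: TinyHLL()
        for size in range(1, n_dims + 1)
        for cuboid in combinations(range(n_dims), size)
    }
    for row in rows:  # one pass over 100% of the flat table rows
        for cuboid, hll in counters.items():
            hll.add("|".join(str(row[i]) for i in cuboid))
    return {cuboid: hll.estimate() for cuboid, hll in counters.items()}


if __name__ == "__main__":
    flat_table = [(i % 10, i % 7, i % 3) for i in range(2000)]
    for cuboid, rows_est in sorted(cuboid_row_counts(flat_table, 3).items()):
        print(cuboid, round(rows_est))
    print("relative error at precision 14:", standard_error())
```

In this toy flat table the cuboid over columns 0 and 1 has exactly 70 distinct combinations and the full cuboid has 210, so the estimates can be compared with known values. In the real job the per-row updates would run inside Spark tasks, with the per-partition counters combined afterwards.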