jeou opened a new issue #20314:

URL: https://github.com/apache/incubator-mxnet/issues/20314

## Description

```

resume = _validate_checkpoint('fcn',

'fcn_resnet50_Cityscapes_11_06_23_42_45_best.params')

net1 = get_model_by_name('fcn', ctx=ctx, model_kwargs=model_kwargs)

net1.load_parameters(resume, ctx=ctx, ignore_extra=True)

net2 = get_model_by_name('fcn', ctx=ctx, model_kwargs=model_kwargs)

net2.load_parameters(resume, ctx=ctx, ignore_extra=True)

train_iter, num_train = _data_iter('Cityscapes', batch_size=1, shuffle=False,

last_batch='keep',

root=get_dataset_info('Cityscapes')[0], split='train',

mode='val', base_size=2048, crop_size=224)

from gluoncv.loss import SoftmaxCrossEntropyLoss

loss1 = SoftmaxCrossEntropyLoss()

loss2 = SoftmaxCrossEntropyLoss()

for i, (data, target) in enumerate(train_iter):

with autograd.record(True):

# for comparison, remember to set dropout layer to None

loss_1 = 0.5 * loss1(*net1(data), target)

loss_2 = loss2(*net2(data), target)

autograd.backward([loss_1, loss_2])

params1 = net1.collect_params()

params2 = net2.collect_params()

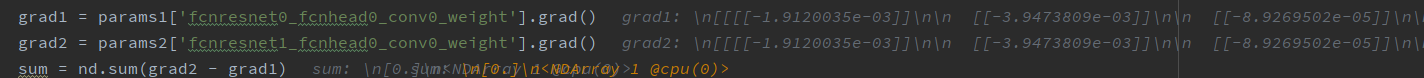

grad1 = params1['fcnresnet0_fcnhead0_conv0_weight'].grad()

grad2 = params2['fcnresnet1_fcnhead0_conv0_weight'].grad()

sum = nd.sum(grad2 - grad1)

t = grad2 / grad1

```

The snippet above uses two identical models and two identical losses (`SoftmaxCrossEntropyLoss` from gluoncv).

Note that I have already removed the dropout layers from both models so that they can be compared.

Why does the forward pass produce different loss values while the gradients are the same?

The debugger screenshot (image 1) shows the values.

## Occurrences

The forward pass yields a `loss_1` that is equal to `0.5 * loss_2` in value.

But after `autograd.backward`, `grad1` is equal to `grad2`.

The multiplication by 0.5 seems to have no effect on the backward gradients in the network.
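
For reference, the expectation behind this report is just the chain rule: if a loss is scaled by a constant, its gradient should be scaled by the same constant. The following is a minimal plain-Python sketch of that expectation, not MXNet or GluonCV code; `softmax_cross_entropy` and `numerical_grad` are hypothetical helpers written only for this illustration, and the logits are made-up values.

```python
import math

def softmax_cross_entropy(logits, label):
    """Cross-entropy of softmax(logits) against an integer class label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]

def numerical_grad(f, x, eps=1e-6):
    """Central-difference gradient of the scalar function f at the list x."""
    grad = []
    for i in range(len(x)):
        hi = x[:i] + [x[i] + eps] + x[i + 1:]
        lo = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad

# Made-up inputs, chosen only for illustration.
logits = [2.0, -1.0, 0.5]
label = 0

grad_full = numerical_grad(lambda z: softmax_cross_entropy(z, label), logits)
grad_half = numerical_grad(lambda z: 0.5 * softmax_cross_entropy(z, label), logits)

# Element-wise ratio of the scaled-loss gradient to the unscaled-loss gradient.
ratios = [h / f for h, f in zip(grad_half, grad_full)]
print(ratios)  # each entry should be close to 0.5
```

In the snippet from the Description, the analogous quantity `t = grad2 / grad1` would therefore be expected to be close to 2 everywhere, whereas the observed gradients are equal.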

I'm stuck; please help if you know anything that may cause this.

