lizthegrey edited a comment on pull request #10326: URL: https://github.com/apache/kafka/pull/10326#issuecomment-804461064

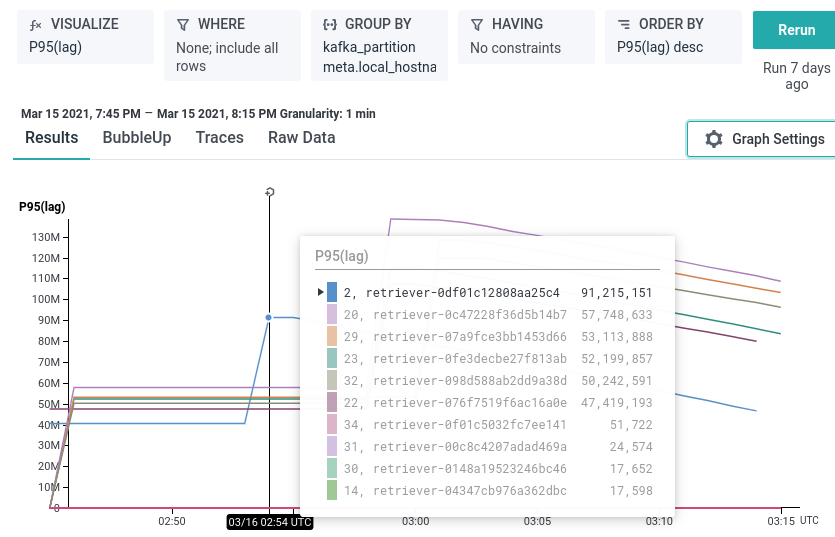

> When you say that the consumer was stuck. Are you saying that the consumer was trying to fetch an offset that the newly added replica did not have yet? Say that consumer's fetch offset was 100 and newly added replica's log end offset was 10 because it was still catching up. I'll see what I can grab from the logs. Consumer: ``` time="2021-03-16T01:38:56.753761544Z" level=info msg="Started consumer" offset=473596222274 partition=2 topic=honeycomb-prod.retriever-mutation ``` Replica: ``` [2021-03-16 01:34:21,532] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Incrementing log start offset to 472751048791 due to leader offset increment (kafka.log.Log) ... [2021-03-16 01:38:40,961] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager) [2021-03-16 01:38:40,961] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 1 ms. (kafka.log.Log) [2021-03-16 01:39:10,038] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636847643 (kafka.log.ProducerStateManager) ``` Leader: ``` [2021-03-16 01:38:16,101] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636209826 (kafka.log.ProducerStateManager) [2021-03-16 01:38:16,102] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636209826 in 0 ms. (kafka.log.Log) [2021-03-16 01:38:16,906] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, baseOffset=473635891179, endOffset=473636209825, maxTimestamp=1615858696093, size=104840863, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask) [2021-03-16 01:38:19,847] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 1900ms (kafka.tier.tasks.archive.ArchiveTask) [2021-03-16 01:38:20,865] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 10 ms (kafka.tier.tasks.archive.ArchiveTask) [2021-03-16 01:38:40,958] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager) [2021-03-16 01:38:40,959] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 0 ms. (kafka.log.Log) [2021-03-16 01:38:41,561] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, baseOffset=473636209826, endOffset=473636529288, maxTimestamp=1615858720963, size=104848877, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask) [2021-03-16 01:38:44,823] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 2221ms (kafka.tier.tasks.archive.ArchiveTask) [2021-03-16 01:38:45,866] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 7 ms (kafka.tier.tasks.archive.ArchiveTask) ``` Full logs at: https://gist.github.com/lizthegrey/58272cb6f52ab3c6b4a20035dbbdffff You'll note that 473596222274 is between low water mark 472751048791 and high water mark 473636529289. About 20 minutes later, the consumer in us-east-1b, which was reading off a leader in us-east-1d (since it had no local replica), successfully managed to read offsets 473652309773 and beyond. The consumer in us-east-1a was still stuck. Not until 0254 UTC when the replica in us-east-1b reached a total lag of 0 and there was a new leader election, perhaps, did the consumer in us-east-1a begin being able to consume from the follower in us-east-1a. Leader (1219): ``` [2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1212] Removed fetcher for partitions Set(honeycomb-prod.retriever-mutation-2) (kafka.server.ReplicaFetcherManager) [2021-03-16 02:54:28,094] INFO retrying DeletionTask(Ljl8q6qISdGMmgLgfP3pZQ:hone ycomb-prod.retriever-mutation-2, state=State(currentState: kafka.tier.tasks.dele te.DeletionTask$CollectDeletableSegments metadata: DeleteAsLeaderMetadata(leader Epoch: 439)), cancelled=false) after 1000ms (kafka.tier.tasks.delete.DeletionTas k) kafka.tier.exceptions.TierMetadataRetriableException: Leadership not established for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2. Backing off. [2021-03-16 02:54:28,110] INFO established leadership for Ljl8q6qISdGMmgLgfP3pZQ :honeycomb-prod.retriever-mutation-2 (kafka.tier.tasks.archive.ArchiveTask) ``` Follower (1212): ``` [2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Removed fe tcher for partitions HashSet(honeycomb-prod.retriever-mutation-2) (kafka.server. ReplicaFetcherManager) [2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Added fetc her to broker 1212 for partitions Map(honeycomb-prod.retriever-mutation-2 -> (of fset=473687483041, leaderEpoch=439)) (kafka.server.ReplicaFetcherManager) ```   -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org