This is an automated email from the ASF dual-hosted git repository.

bzp2010 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/apisix-website.git

The following commit(s) were added to refs/heads/master by this push:

new eba8183 docs: add EN version of usercases (#710)

eba8183 is described below

commit eba81830daa37570f66b6a0c4fcf1562b99ee03c

Author: Sylvia

AuthorDate: Tue Nov 9 15:22:03 2021 +0800

docs: add EN version of usercases (#710)

---

website/blog/2021/09/07/iQIYI-usercase.md | 132 ++++++++++----------

website/blog/2021/09/14/youzan.md | 158 ++++++++++++------------

website/blog/2021/09/18/xiaodian-usercase.md | 151 +++++++++++-----------

website/blog/2021/09/24/youpaicloud-usercase.md | 155 ++++++++++++-----------

website/blog/2021/09/28/WPS-usercase.md | 122 +++++++++---------

5 files changed, 356 insertions(+), 362 deletions(-)

diff --git a/website/blog/2021/09/07/iQIYI-usercase.md b/website/blog/2021/09/07/iQIYI-usercase.md

index f6d6548..014fe9b 100644

--- a/website/blog/2021/09/07/iQIYI-usercase.md

+++ b/website/blog/2021/09/07/iQIYI-usercase.md

@@ -1,126 +1,124 @@

---

-title: "基于 Apache APISIX,爱奇艺 API 网关的更新与落地实践"

-author: "何聪"

+title: "iQIYI's API Gateway Upgrade and Deployment Practice Based on Apache APISIX"

+author: "Cong He"

keywords:

-- APISIX

-- 爱奇艺

-- API 网关

-- 服务发现

-description: 本文整理自爱奇艺高级研发师何聪在 Apache APISIX Meetup 上海站的演讲,通过阅读本文,您可以了解到基于

Apache APISIX 网关,爱奇艺技术团队是如何进行公司架构的更新与融合,打造出全新的网关服务。

+- Apache APISIX

+- iQIYI

+- API Gateway

+- Service discovery

+description: Based on a talk given by Cong He, senior R&D engineer at iQIYI, at the Apache APISIX Meetup in Shanghai, this article describes how iQIYI's technical team upgraded and integrated the company's architecture on top of Apache APISIX to build a brand-new gateway service.

tags: [User Case]

---

-> 爱奇艺在之前有开发了一款网关——Skywalker,它是基于 Kong 做的二次开发,目前流量使用也是比较大的,网关存量业务日常峰值为百万级别

QPS,API

路由数量上万。但这款产品的不足随着使用也开始逐步体现。今年在交接到此项目后,我们根据上述问题和困境,开始对相关网关类产品做了一些调研,然后发现了 Apache

APISIX。在选择 Apache APISIX 之前,爱奇艺平台已经在使用 Kong 了,但是后来 Kong 被放弃了。

+> This article describes how iQIYI's technical team upgraded and integrated the company's architecture on top of Apache APISIX to build a brand-new gateway service.

<!--truncate-->

-## 背景描述

+## Background

-爱奇艺在之前有开发了一款网关——Skywalker,它是基于 Kong 做的二次开发,目前流量使用也是比较大的,网关存量业务**日常峰值为百万级别

QPS,API 路由数量上万**。但这款产品的不足随着使用也开始逐步体现。

+iQIYI previously developed a gateway called Skywalker as a secondary development of Kong. It carries substantial traffic: the existing business on the gateway peaks at millions of QPS daily, across tens of thousands of API routes. However, the product's shortcomings gradually became apparent as its use grew:

-1. 性能差强人意,因为业务量大,每天收到很多 CPU IDLE 过低的告警

+1. Unsatisfactory performance: because of the heavy business volume, we received many low CPU idle alerts every day

+2. The system architecture depends on many components

+3. High development and operations costs

-2. 系统架构的组件依赖多

+After taking over the project this year, we began researching gateway products to address these problems, and that is how we found Apache APISIX.

-3. 运维开发成本较高

+## Advantages of Apache APISIX

-今年在交接到此项目后,我们根据上述问题和困境,开始对相关网关类产品做了一些调研,然后发现了 Apache APISIX。

+Before choosing Apache APISIX, the iQIYI platform had been using Kong, but it later abandoned Kong.

-## Apache APISIX 优势

+### Why We Abandoned Kong

-在选择 Apache APISIX 之前,爱奇艺平台已经在使用 Kong 了,但是后来 Kong 被放弃了。

+

-### 为什么放弃 Kong

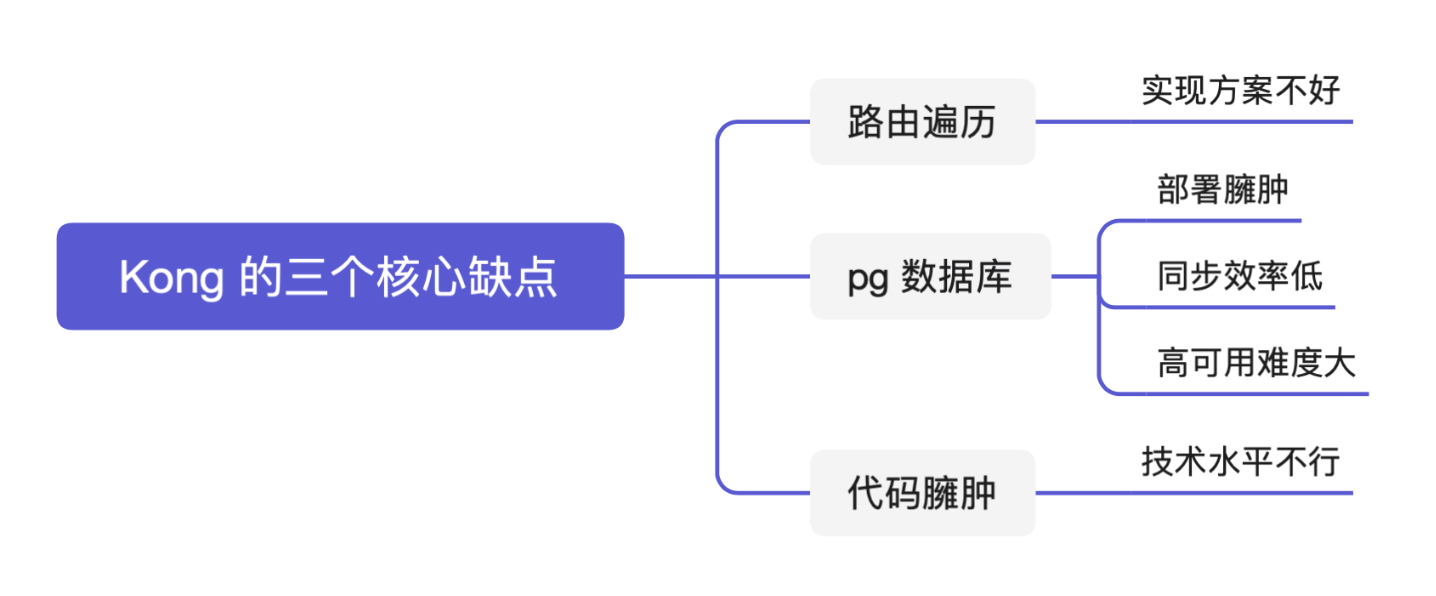

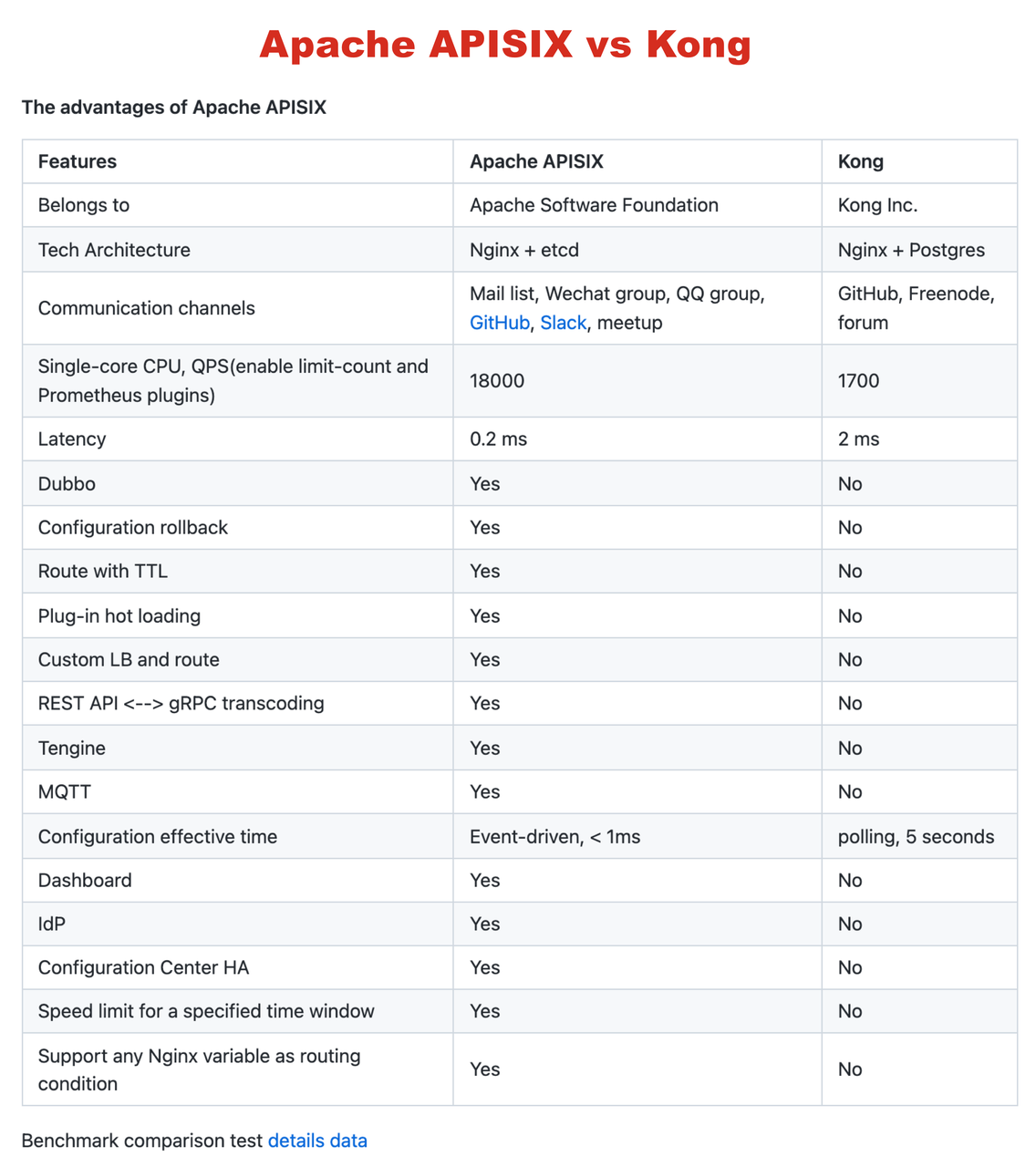

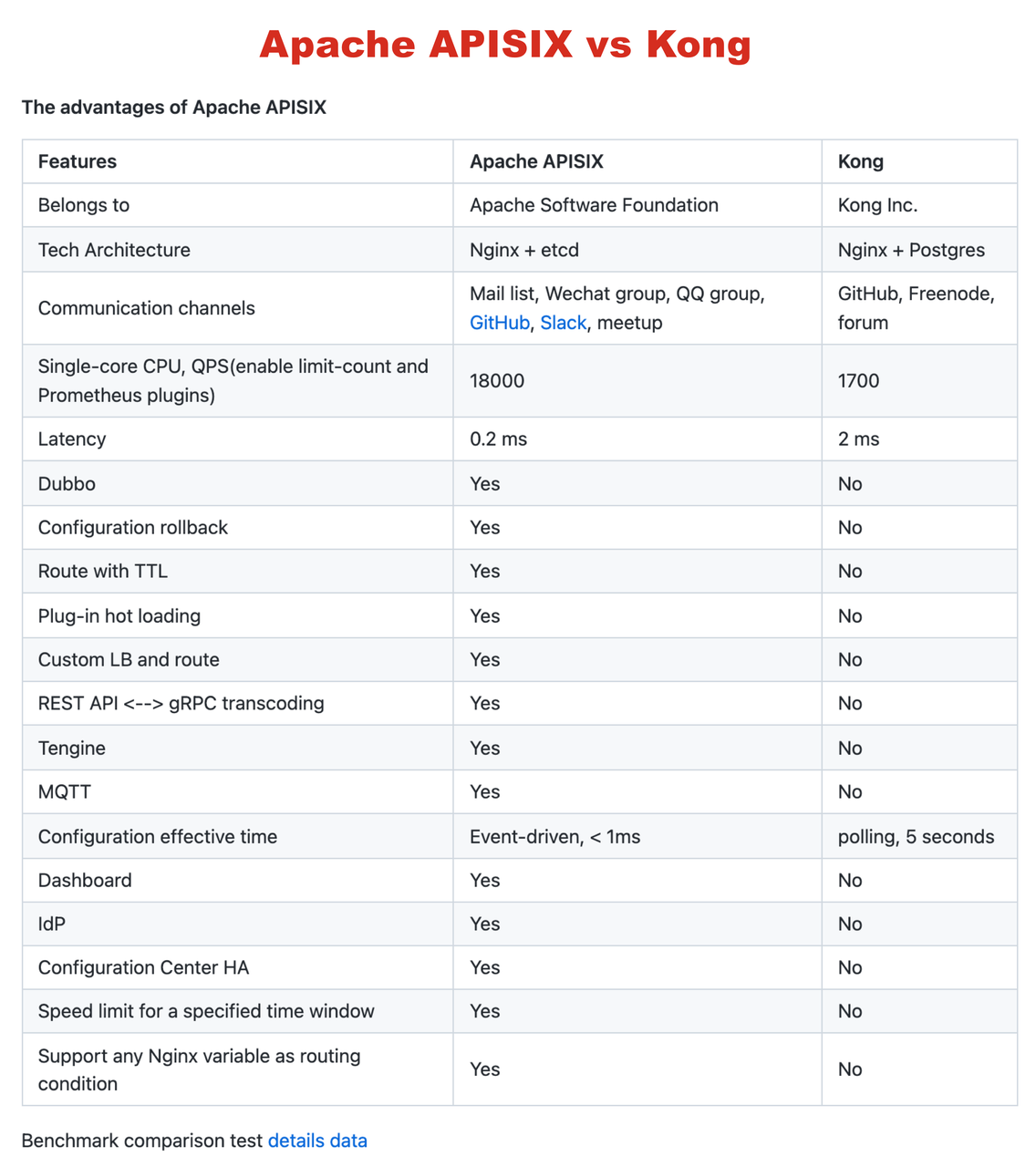

+Kong uses PostgreSQL to store its information, which is clearly not ideal. During our research we also compared the performance of Apache APISIX with that of Kong, and were pleasantly surprised to find that Apache APISIX performed 10 times better. We also compared several major gateway products: the response latency of Apache APISIX was more than 50% lower than that of the other gateways, and Apache APISIX kept running stably even when CPU usage exceeded 70%.

-

+We also found that Apache APISIX, like Kong, is built on OpenResty, so the cost of migrating between them is relatively low. Apache APISIX also adapts well to different environments and can be easily deployed in many of them, including cloud computing platforms.

-Kong 使用 PostgreSQL 来存储它的信息,这显然不是一个好方式。 同时在调研过程中我们查看了 Apache APISIX 与 Kong

的性能的对比,在性能优化方面 Apache APISIX 比 Kong 提升了 10 倍,这个指标是非常让人惊喜的。

同时我们也比较了一些主要的网关产品,Apache APISIX 的响应延迟比其它网关低 50% 以上,在 CPU 达到 70% 以上时 Apache

APISIX 仍能稳定运转。

+We also saw that the Apache APISIX open source project is very active and handles issues very quickly, and that its cloud native architecture fits our future plans, so we chose Apache APISIX.

-我们也发现 Apache APISIX 其实和 Kong 一样,都是基于 OpenResty 技术层面做的开发,所以在技术层面的迁移成本就比较低。而且

Apache APISIX 具有良好的环境适应性,能够被轻易地部署在包括云计算平台在内的各种环境上。

+## iQIYI Gateway Architecture Based on Apache APISIX

-同时也看到 Apache APISIX 整个开源项目的活跃度非常高,对问题的处理非常迅速,并且项目的云原生架构也符合我司后续规划,所以我们选择了

Apache APISIX。

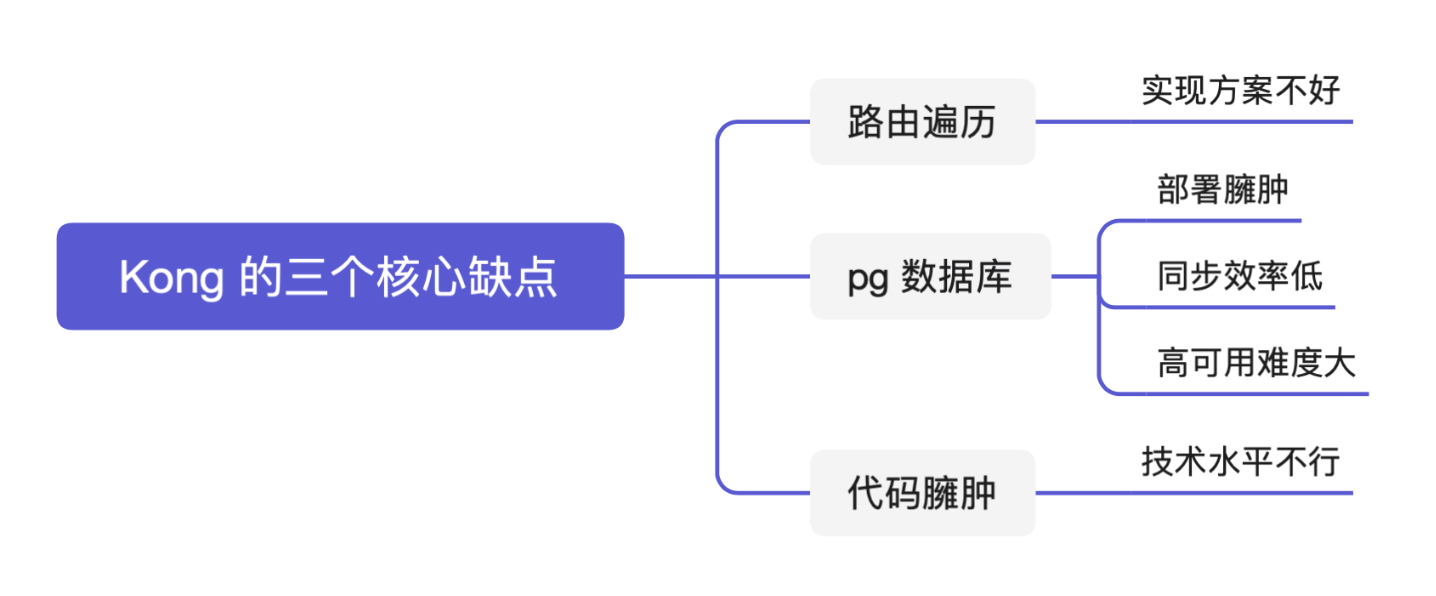

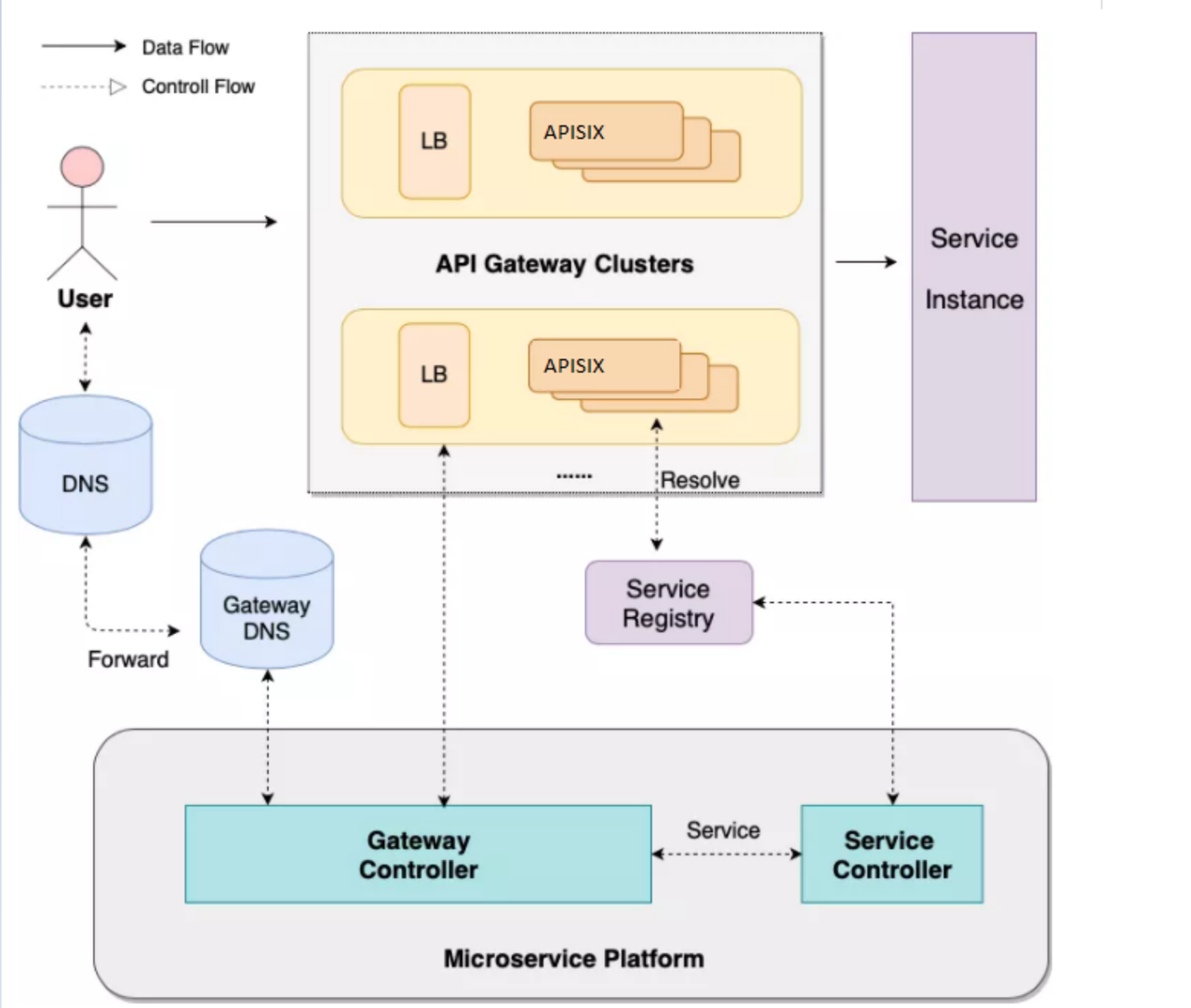

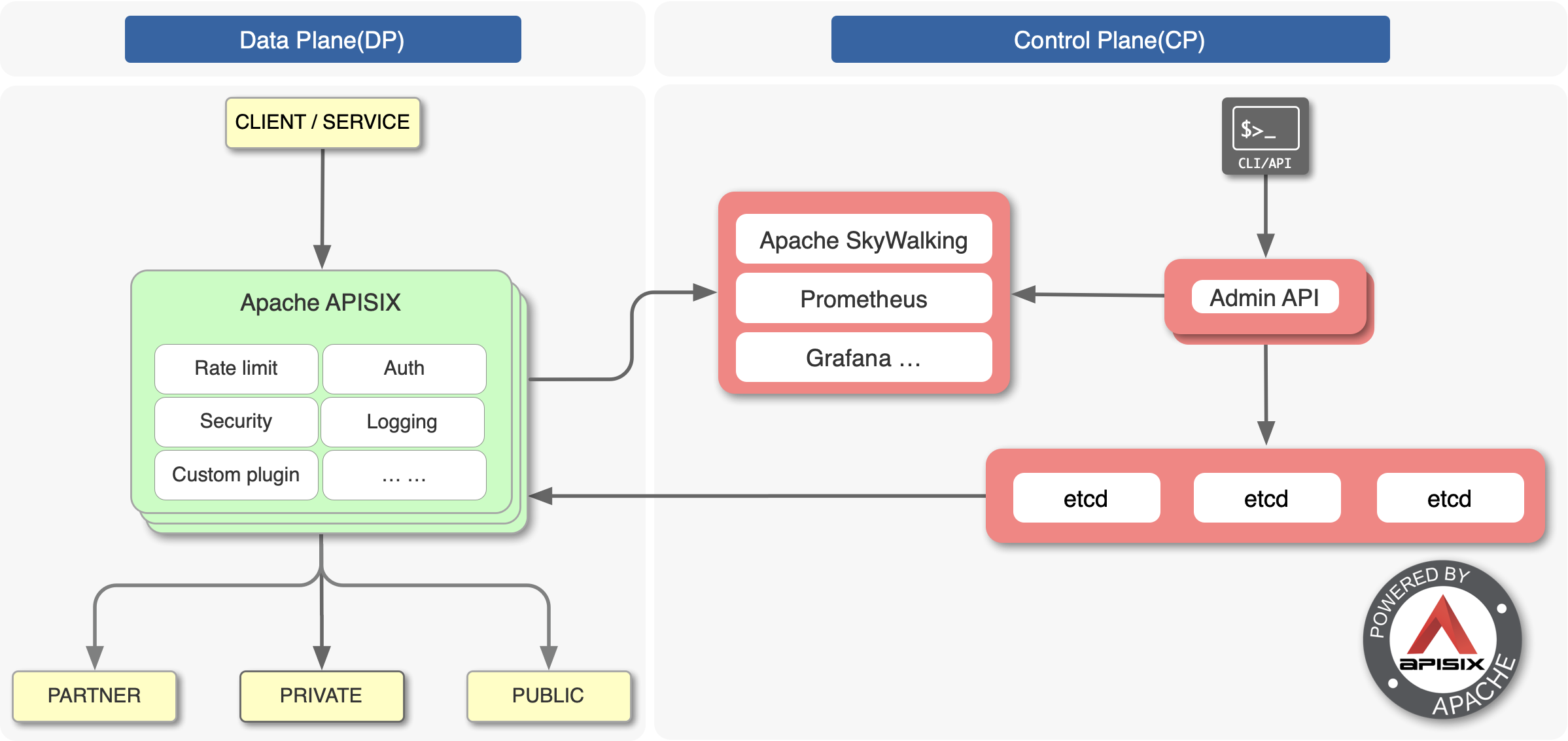

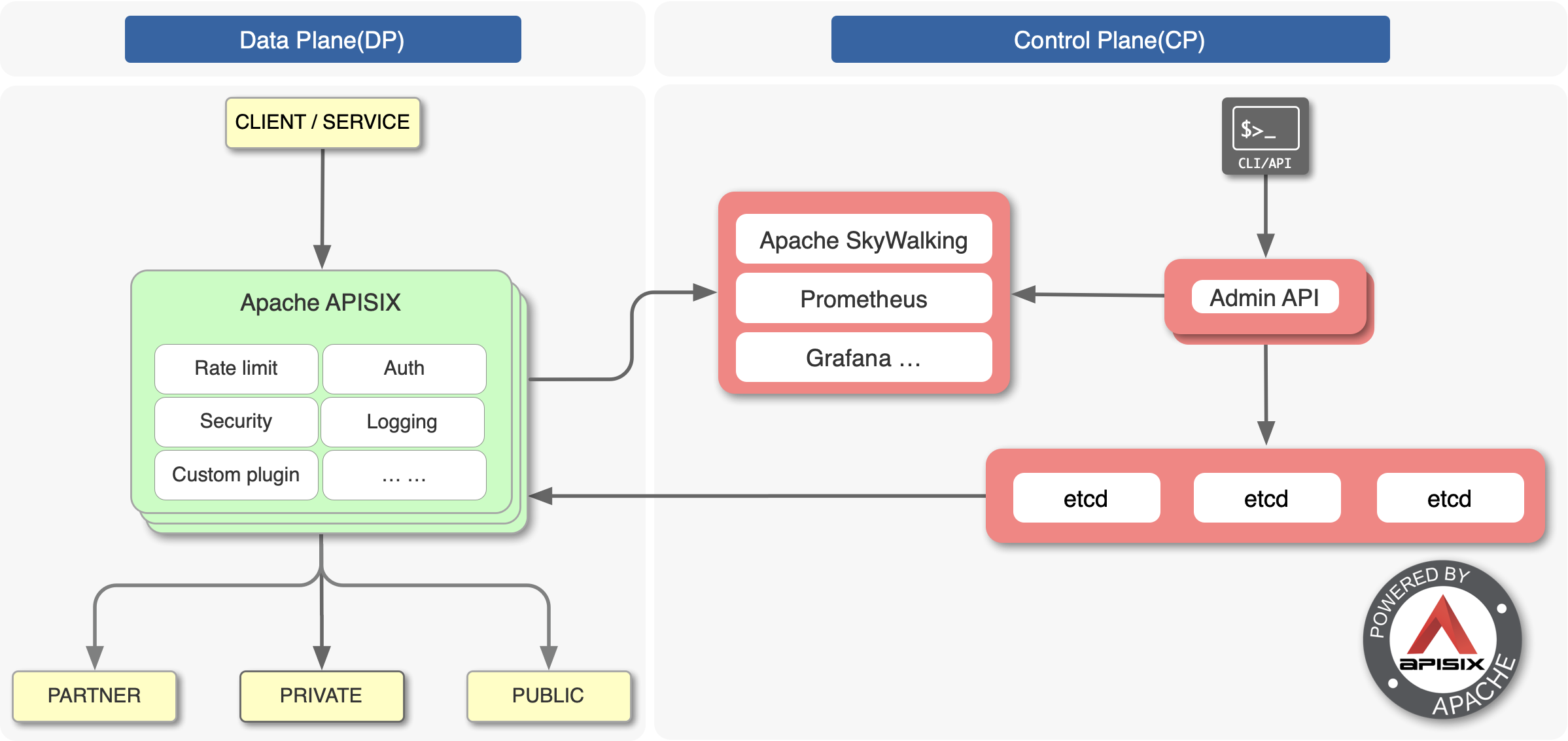

+The overall architecture of the iQIYI gateway is shown below, covering domain names, the path from the gateway to service instances, and monitoring and alerting. DPVS is an open source project developed in-house based on LVS; Hubble, our monitoring and alerting system, is a deeply customized version of an open source project; and we have optimized Consul for performance and high availability.

-## 基于 Apache APISIX 下的爱奇艺网关架构

+

-爱奇艺网关的总体架构如下图所示,包含域名、网关到服务实例和监控告警。DPVS 是公司内部基于 LVS 做的一个开源项目,Hubble

监控告警也是基于开源项目做的深度二次开发,Consul 这块做了些性能和高可用性方面的优化。

+### Scenario 1: Microservice Gateway

-

+Here is a brief introduction to the gateway from the perspectives of the control plane and the data plane.

-### 应用场景一:微服务网关

+

-关于网关这块,简单从控制面和数据面介绍一下。

+The data plane mainly serves front-end users. The whole architecture, from the load balancer to the gateway, is deployed across multiple locations and links for disaster recovery, with nodes placed so that users connect to the nearest one.

-

+On the control plane side, because the system consists of multiple clusters, a microservice platform handles cluster management and service management. The microservice platform gives users a one-stop experience for exposing services: they can start using it immediately without submitting a ticket. The control plane back end includes a Gateway Controller, which controls the creation of all APIs, plugins, and related configuration, and a Service Controller, which handles service registration, deregistration, and health checks.

-数据面主要面向前端用户,从 LB 到网关整个架构都是多地多链路灾备部署,以用户就近接入原则进行布点。

+### Scenario 2: Basic Functions

-从控制面的角度来说,因为多集群构成,会存在一个微服务平台,去做集群管理和服务管理。微服务平台可以让用户体验服务暴露的一站式服务,立即使用,无需提交工单。控制面后端会有

Gateway Controller 和 Service Controller,前者主要控制所有的 API

的创建、插件等相关配置,后者则是控制服务注册注销和健康检查。

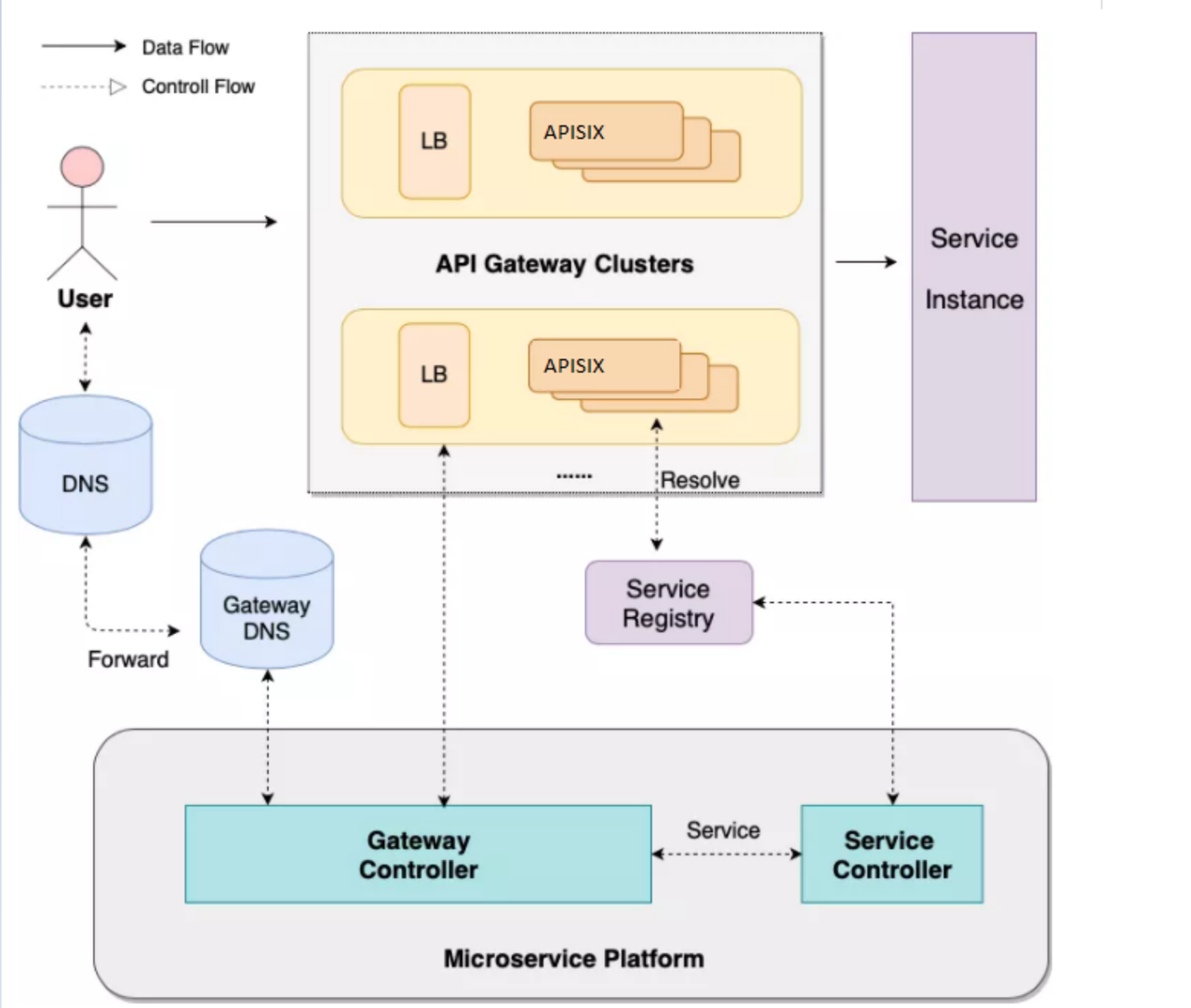

+At present, the API architecture adapted to Apache APISIX implements several basic functions, such as rate limiting, authentication, alerting, and monitoring.

-### 应用场景二:基础功能

+

-目前阶段,基于 Apache APISIX 调整后的 API 架构实现了一些基础功能,如限流、认证、报警、监控等。

+First, HTTPS. For security reasons, iQIYI does not store certificates and keys on the gateway machines; they are kept on a dedicated remote server. We did not support this when using Kong and instead placed an Nginx layer in front for HTTPS offloading. After migrating to Apache APISIX, we implemented this feature in Apache APISIX itself, which removes one forwarding hop from the request path.

-

+For rate limiting, in addition to basic rate limiting, we added precise rate limiting and per-user rate limiting. For authentication, in addition to basic methods such as API key authentication, we provide Passport authentication for specific services. To filter out malicious traffic from fraud operations, we integrated the company's WAF security cloud.
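iQIYI's precise and per-user limiters are internal and not described in detail. As a rough illustration of per-user granularity only, the sketch below keeps one fixed-window counter per user; the class name, limits, and injectable clock are assumptions for the example. Apache APISIX's bundled `limit-count` plugin offers fixed-window counting that can be keyed on `consumer_name`.

```python
import time
from typing import Callable, Dict, Tuple


class PerUserFixedWindowLimiter:
    """Hypothetical limiter: at most `limit` requests per user in each `window`-second window."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        # user -> (index of the window being counted, requests counted in it)
        self.counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, user: str) -> bool:
        current = int(self.clock() // self.window)
        window_id, count = self.counters.get(user, (current, 0))
        if window_id != current:
            # A new window has started for this user, so the count starts over.
            window_id, count = current, 0
        if count >= self.limit:
            return False
        self.counters[user] = (window_id, count + 1)
        return True


now = [0.0]  # fake clock so the example is deterministic
limiter = PerUserFixedWindowLimiter(limit=2, window=1.0, clock=lambda: now[0])
print([limiter.allow("alice") for _ in range(3)])  # [True, True, False]
print(limiter.allow("bob"))                         # True: bob has his own counter
now[0] = 1.0
print(limiter.allow("alice"))                       # True: new window
```

Keying the counter on the user rather than the client IP is what makes the limit per-user; a fixed window is the simplest choice, at the cost of allowing short bursts at window boundaries.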

-首先是 HTTPS 部分,爱奇艺方面出于对安全性的考虑,证书和密钥是不会存放在网关机器上,会放在一个专门的远程服务器上。之前使用 Kong

时我们没有在这方面做相关支持,采用前置 Nginx 做 HTTPS Offload,此次迁移到 Apache APISIX 后,我们在 Apache

APISIX 上实现了该功能,从链路上来说就少了一层转发。

+Monitoring is currently implemented with the Prometheus plugin bundled with Apache APISIX, and the metrics feed directly into the company's monitoring system. Apache APISIX also supports our logging and call-chain analysis.

-在限流功能上,除了基础的限流,还添加了精准限流以及针对用户粒度的限流。认证功能上,除了基本的 API Key 等认证,针对专门业务也提供了相关的

Passport 认证。对于黑产的过滤,则接入了公司的 WAF 安全云。

+### Scenario 3: Service Discovery

-监控功能的实现目前是使用了 Apache APISIX 自带插件——Prometheus,指标数据会直接对接公司的监控系统。日志和调用链分析也通过

Apache APISIX 得到了相关功能支持。

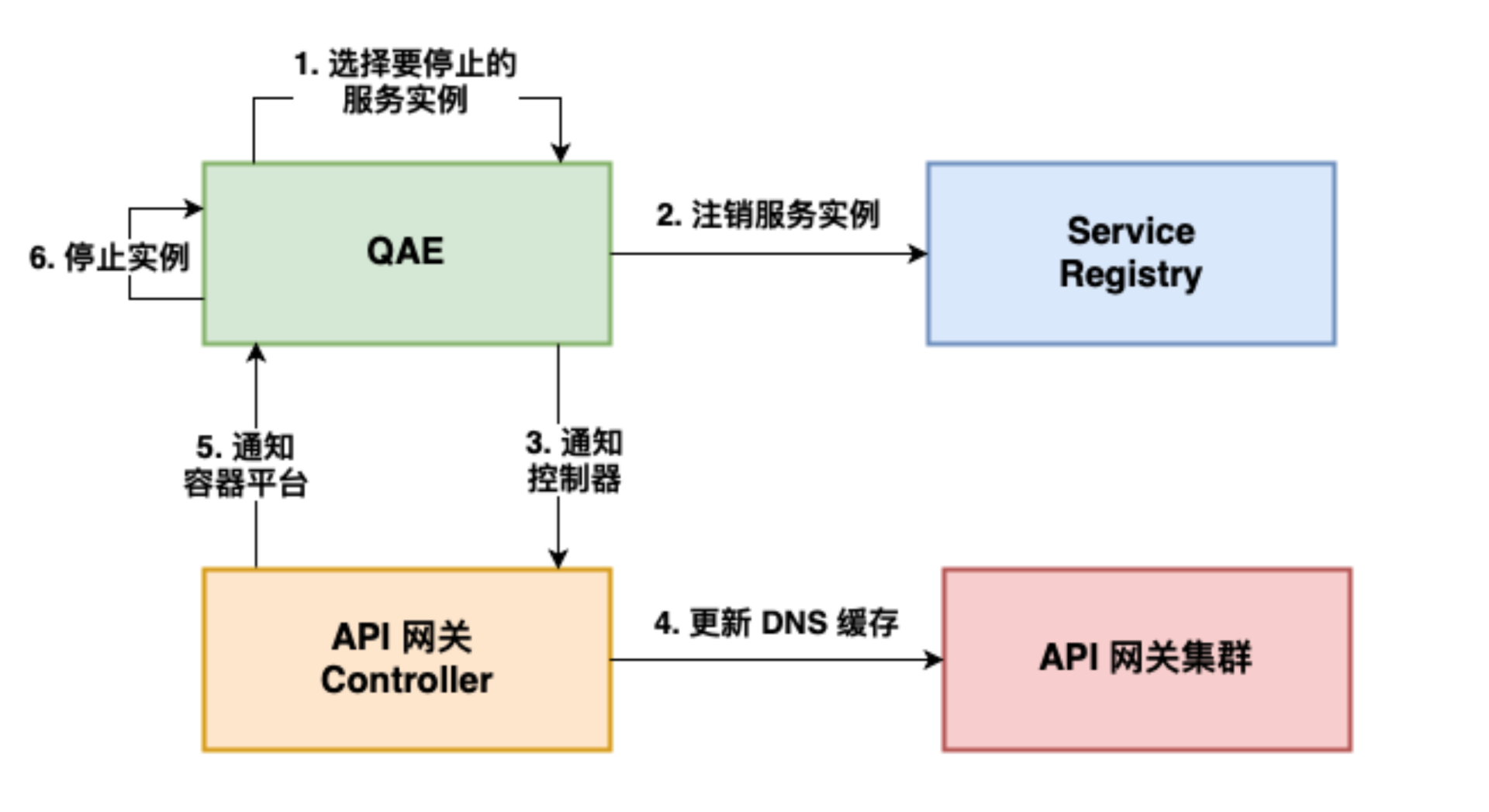

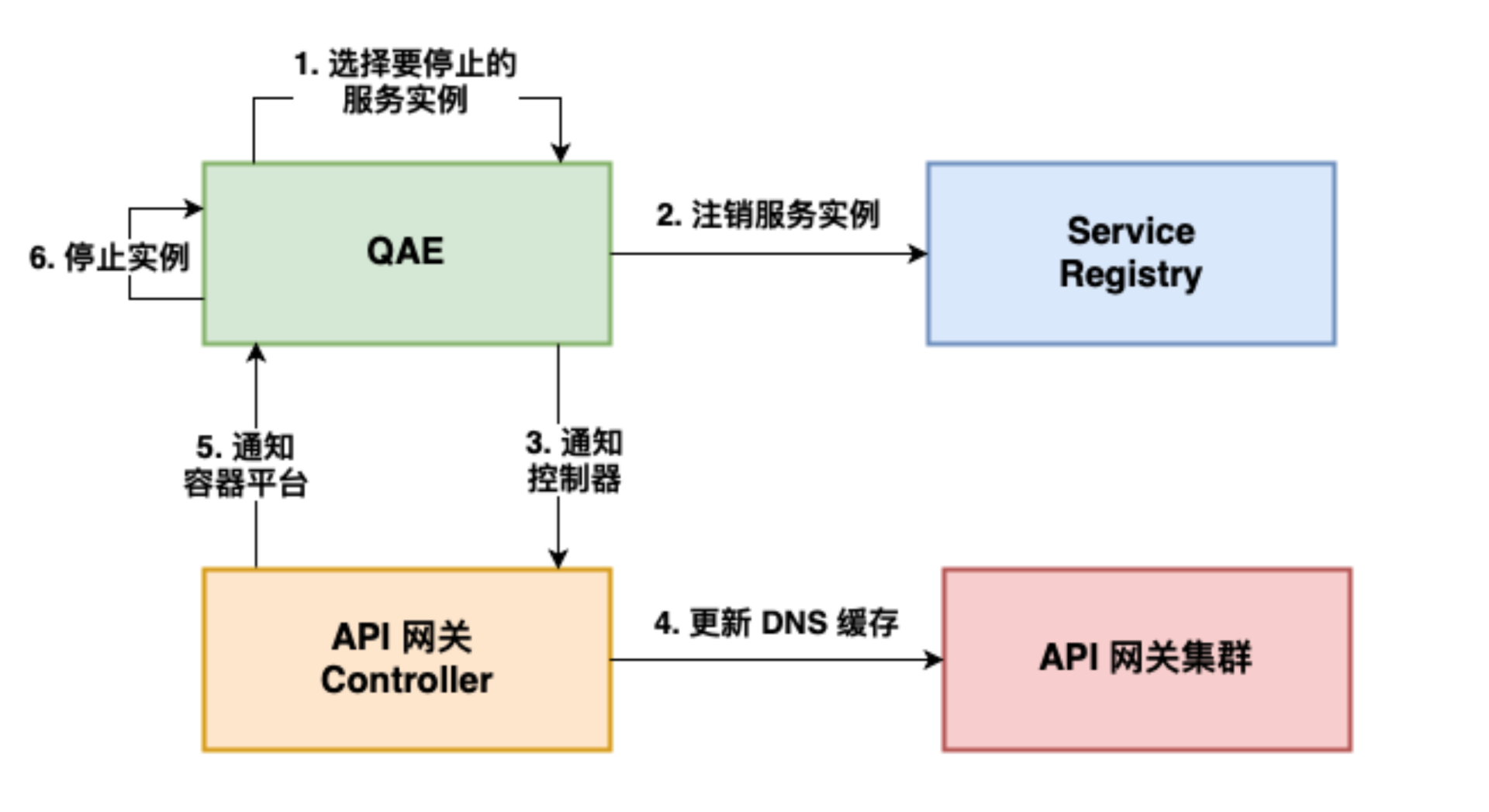

+For the service discovery mentioned above, services are registered to the Consul cluster through the service center, and updates are applied dynamically through DNS-based service discovery. QAE is our company's internal microservice platform. Here is a simple example of the general flow when a back-end application instance is updated.

-### 应用场景三:服务发现

+

-关于前面提到的服务发现,主要是通过服务中心把服务注册到 Consul 集群,然后通过 DNS 服务发现的方式去做动态更新,其中 QAE

是我们公司内部的一个微服务平台。简单举例说明一下更新后端应用实例时的大体流程。

+When an instance changes, the corresponding node is first deregistered from Consul, and the API Gateway Controller sends the gateway a request to update its DNS cache. Once the cache update succeeds, the Controller tells the QAE platform that the related back-end application node can be stopped, so that business traffic is no longer forwarded to the offline node.
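The ordering in this flow is what keeps traffic away from a node that is about to stop. A minimal sketch, assuming hypothetical callbacks that stand in for the Consul API, the API Gateway Controller, and the QAE platform (none of their real interfaces are shown in the article):

```python
from typing import Callable, List


def drain_instance(
    node: str,
    deregister: Callable[[str], None],
    refresh_gateway_dns: Callable[[str], bool],
    stop_node: Callable[[str], None],
) -> bool:
    """Take a back-end node offline only after the gateway has stopped routing to it."""
    deregister(node)                   # 1. remove the node from service discovery (Consul)
    if not refresh_gateway_dns(node):  # 2. ask the gateway to refresh its DNS cache
        return False                   #    refresh failed: leave the node running
    stop_node(node)                    # 3. only now let the platform stop the instance
    return True


events: List[str] = []


def fake_refresh(node: str) -> bool:
    events.append(f"refresh {node}")
    return True


ok = drain_instance(
    "10.0.0.7:8080",
    deregister=lambda n: events.append(f"deregister {n}"),
    refresh_gateway_dns=fake_refresh,
    stop_node=lambda n: events.append(f"stop {n}"),
)
print(ok, events)
```

If the cache refresh fails, the sketch deliberately keeps the node alive, since stopping it while the gateway may still resolve to it is exactly what the flow is meant to avoid.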

-

+### Scenario 4: Directed Routing

-实例变更时,首先会从 Consul 中注销对应节点,并通过 API 网关 Controller 向网关发送更新 DNS

缓存的请求。缓存更新成功后,Controller 再反馈 QAE 平台可以停止相关后端应用节点,避免业务流量再转发到已下线节点。

+

-### 应用场景四:定向路由

+The gateway is deployed in multiple locations, with a full set of mutually backed-up multi-site links built in advance, and we recommend that users also deploy their back-end services in multiple locations close to their users. A user then creates an API service on the Skywalker gateway platform, the Controller deploys the API routes to the gateway clusters in all DCs, and the business domain name is CNAMEd to the unified gateway domain name by default.

-

+This gives businesses nearest-site access and disaster failover directly, and user-defined resolution routing is also supported. For their own failover traffic switching, blue-green deployments, and canary releases, users can customize resolution routing through a UUID domain name, and custom scheduling for back-end service discovery is supported as well.

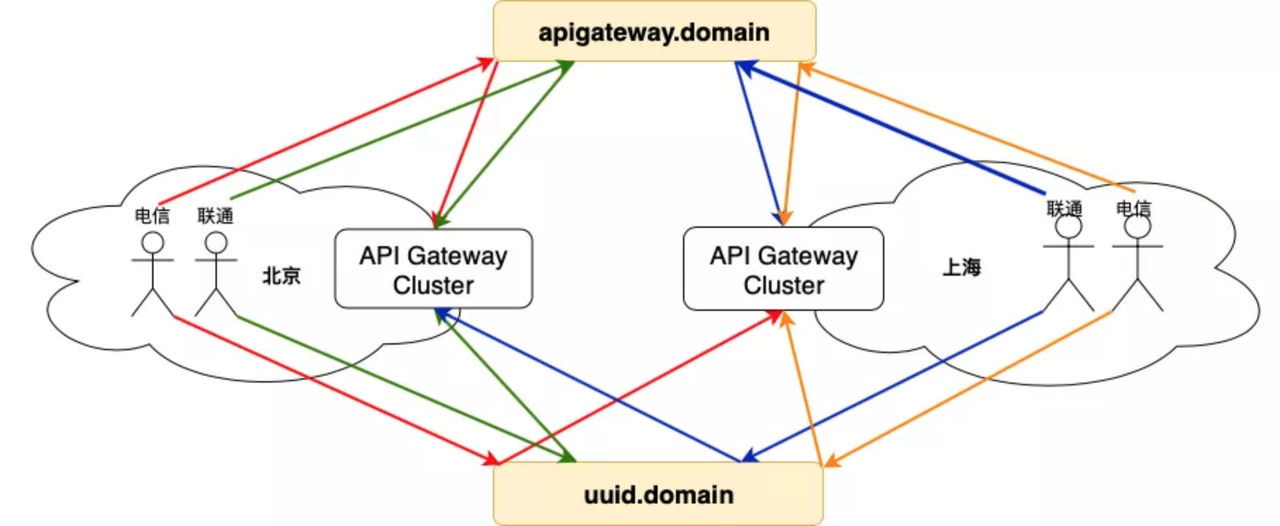

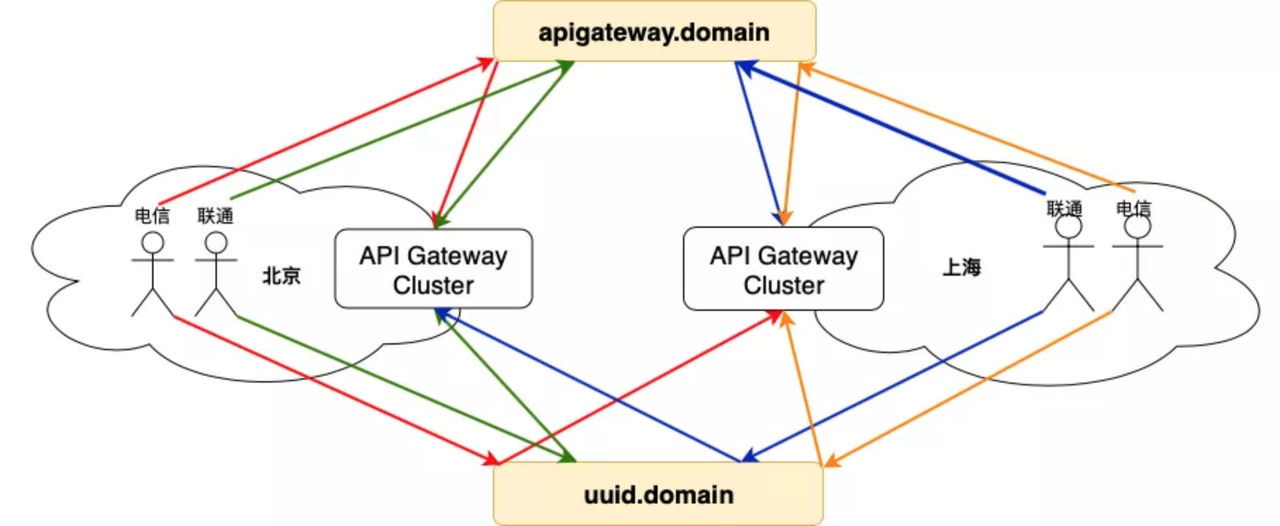

-网关是多地部署的,事先搭建好一整套多地互备链路,同时建议用户后端服务也是多地就近部署。随后用户在 Skywalker 网关平台上创建一个 API

服务,Controller 会在全 DC 网关集群上都部署好 API 路由,同时业务域名默认 CNAME 到统一的网关域名上。

+### Scenario 5: Multi-site Multi-level Disaster Tolerance

-直接为业务提供多地就近接入、故障灾备切换能力,同时也支持用户自定义解析路由。针对用户自身的故障切流、蓝绿部署、灰度发布等需求,用户可以通过采用 uuid

域名来自定义解析路由配置,同时也支持后端服务发现的自定义调度。

+As mentioned earlier, because of our large business volume, many clusters, and broad client base, we need nearest-site access and disaster recovery at the business level.

-### 应用场景五:多地多级容灾

+For disaster recovery, besides multi-site, multi-link mutual backup, we also have to consider failures at multiple levels and nodes: the closer a failed node is to the client, the more business and traffic it affects.

-前边我们也提到过,针对业务量大、集群多,还有客户端受众广的情况,在业务层面我们会有业务就近接入和灾备的需求。

+1. If the failure is in a back-end service node, the farthest level, the health check mechanisms of the gateway and the service center can circuit-break the single failed node or fail over from the failed DC. The impact is limited to the affected service, and users do not notice.

+2. If the failure is at the gateway level, we rely on the health checks of L4 DPVS to circuit-break the failed gateway node. The impact is small, and users do not notice.

+3. If a failure cannot be fixed by the circuit-breaking measures above, we rely on multi-point availability probing of each domain name from the public network to fail over automatically at the DNS resolution level. This recovers relatively slowly and affects more services, so users notice it.
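The node-level circuit breaking in items 1 and 2 relies on health checks. A minimal passive-check sketch, assuming a hypothetical `NodePool` that ejects a node after a fixed number of consecutive failed requests, looks like this. Production checkers, including the active and passive upstream health checks in Apache APISIX, also re-probe ejected nodes so they can recover; this sketch leaves that probing out.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class NodePool:
    """Hypothetical pool: a node is ejected after `max_failures` consecutive failures."""
    nodes: List[str]
    max_failures: int = 3
    failures: Dict[str, int] = field(default_factory=dict)

    def report(self, node: str, ok: bool) -> None:
        # A success resets the node's failure streak; a failure extends it.
        self.failures[node] = 0 if ok else self.failures.get(node, 0) + 1

    def healthy(self) -> List[str]:
        # Only nodes below the threshold keep receiving traffic.
        return [n for n in self.nodes if self.failures.get(n, 0) < self.max_failures]


pool = NodePool(nodes=["dc1-a", "dc1-b", "dc2-a"], max_failures=2)
pool.report("dc1-a", ok=False)
print(pool.healthy())  # ['dc1-a', 'dc1-b', 'dc2-a']: one failure is tolerated
pool.report("dc1-a", ok=False)
print(pool.healthy())  # ['dc1-b', 'dc2-a']
```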

-针对灾备,除了要多地多链路互备,还要考虑多级多节点问题,故障节点越向客户端靠近,受影响的业务和流量越大。

+## Problems Encountered during Migration

-1. 如果是最远的后端服务节点故障,依靠网关和服务中心的健康检查机制,可以实现故障单节点的熔断或者故障 DC 的切换,影响范围限制在指定业务上,用户无感知。

-2. 如果是网关级别故障,需要依靠 L4 DPVS 的健康检查机制,熔断故障网关节点,影响范围小,用户无感知。

-3.

如果故障点并非上述述熔断措施所能修复,就需要依靠域名粒度的外网多点可用性拨测,实现域名解析级别的故障自动切换,这种方式故障修复速度相对较慢,影响业务多,用户可感知。

+During our migration from Kong to Apache APISIX, we resolved and optimized some known architectural issues, but we also ran into some new ones.

-## 迁移过程中遇到的问题

+### Result 1: Resolved Compatibility With Clients That Do Not Support SNI

-在我们从 Kong 到 Apache APISIX 的迁移实践过程中,我们解决、优化了一些架构存在的已知问题,但同时也遇到了一些新的问题。

+Most clients now support SNI, but a few cannot pass the hostname during the SSL handshake. To stay compatible with them, we select the certificate by matching the port instead.
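The port-based fallback can be illustrated as a two-step certificate lookup: use the SNI hostname when the client sends one, otherwise use the certificate bound to the port the client connected to. This is a minimal sketch, not iQIYI's code; `CertStore`, the hostnames, and the ports are hypothetical, and certificates are plain strings standing in for real key material.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class CertStore:
    """Hypothetical certificate index: by SNI hostname, with a per-port fallback."""
    by_host: Dict[str, str] = field(default_factory=dict)
    by_port: Dict[int, str] = field(default_factory=dict)

    def select(self, sni: Optional[str], port: int) -> Optional[str]:
        # Prefer the hostname sent in the TLS ClientHello (SNI).
        if sni:
            cert = self.by_host.get(sni.lower())
            if cert is not None:
                return cert
        # Clients that send no (or an unknown) hostname are matched by port.
        return self.by_port.get(port)


store = CertStore(
    by_host={"api.example.com": "cert-api"},
    by_port={8443: "cert-legacy-8443"},
)
print(store.select("api.example.com", 443))  # cert-api
print(store.select(None, 8443))              # cert-legacy-8443
```

Dedicating a port to each certificate that SNI-less clients need is what makes the port a usable lookup key.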

-### 成果一:解决了前端不支持 SNI 的兼容问题

+### Result 2: Optimized Matching for a Large Number of API Routes

-现在大部分前端都是支持 SNI 的,但也会碰到有一些前端在 SSL 过程中无法传递

hostname。目前我们针对这种情况做了一个兼容,采取端口匹配的方式进行相关证书获取。

+As mentioned earlier, we currently run more than 9,000 APIs directly in production, and the number may grow. To address this, we optimized performance by deciding, per API, whether to match the domain name or the path first.
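One way to picture "deciding per API whether to match the domain name or the path first" is a two-level index whose key order is chosen route by route. The sketch assumes exact-match hosts and paths and a hypothetical `host_first` flag; it is not the iQIYI implementation, and the router built into Apache APISIX (based on a radix tree) is more elaborate.

```python
from collections import defaultdict
from typing import Dict, Optional


class TwoLevelRouter:
    """Hypothetical router in which each route chooses whether host or path is matched first."""

    def __init__(self) -> None:
        # host -> path -> route id, for routes that match the domain first
        self.by_host: Dict[str, Dict[str, str]] = defaultdict(dict)
        # path -> host (None means any host) -> route id, for routes that match the path first
        self.by_path: Dict[str, Dict[Optional[str], str]] = defaultdict(dict)

    def add(self, route_id: str, path: str, host: Optional[str] = None,
            host_first: bool = True) -> None:
        if host_first and host is not None:
            self.by_host[host][path] = route_id
        else:
            self.by_path[path][host] = route_id

    def match(self, host: str, path: str) -> Optional[str]:
        # Domain-first routes: one lookup narrows the candidates to a single host.
        paths = self.by_host.get(host)
        if paths is not None and path in paths:
            return paths[path]
        # Path-first routes: narrow by path, then prefer an exact host over "any host".
        hosts = self.by_path.get(path)
        if hosts is not None:
            return hosts.get(host, hosts.get(None))
        return None


router = TwoLevelRouter()
router.add("play", "/play", host="video.example.com")
router.add("health-any", "/health", host_first=False)
router.add("health-ops", "/health", host="ops.example.com", host_first=False)
print(router.match("video.example.com", "/play"))  # play
print(router.match("www.example.com", "/health"))  # health-any
```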

-### 成果二:优化了大量 API 路由匹配问题

+### Result 3: Resolved the etcd Interface Limit

-前边也说过,目前我们线上直接运行的 API 业务数量就有 9000 多个,后续可能还会增加。针对这一问题我们进行了相关性能上的优化,根据 API

来决定优先匹配域名还是路径。

+After adopting Apache APISIX, the etcd interface limitation has also been resolved, and the 4 MB limit has been lifted.

-### 成果三:解决了 ETCD 接口限制问题

+### Result 4: Optimized Performance Issues Caused by the Number of etcd Connections

-在接入 Apache APISIX 后,ETCD 接口的限制问题也已经解决,目前已经解除了 4M 的限制。

+Currently, every Apache APISIX worker connects to etcd, and each watched directory gets its own connection. For example, on an 80-core physical machine with 10 watched directories, a single gateway server holds 800 connections. With 10 servers in a gateway cluster, the resulting 8,000 connections put considerable pressure on etcd. Our optimization is to have only one worker watch a limited set of necessary directories and share the information with the other workers.
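The connection counts above come from multiplying servers, watching workers, and watched directories. The sketch assumes one worker per core (80 workers per server) and, for the optimized case, that the single watching worker still watches all 10 directories, so its result is an upper bound; the function name is hypothetical.

```python
def etcd_watch_connections(servers: int, watching_workers: int, watched_dirs: int) -> int:
    """One etcd connection per watched directory, per watching worker, per server."""
    return servers * watching_workers * watched_dirs


per_server = etcd_watch_connections(servers=1, watching_workers=80, watched_dirs=10)
before = etcd_watch_connections(servers=10, watching_workers=80, watched_dirs=10)
# After the optimization, one worker per server watches and shares updates with the rest.
after = etcd_watch_connections(servers=10, watching_workers=1, watched_dirs=10)
print(per_server, before, after)  # 800 8000 100
```

Because the count scales linearly with the number of watching workers, cutting them from 80 to 1 per server reduces the load on etcd by the same factor of 80.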

-### 成果四:优化了 ETCD 连接数量的性能问题

+### To Be Optimized

-目前是 Apache APISIX 的每个 worker 都会跟 ETCD 连接,每一个监听目录都会去建一个连接。比如一台物理机是 80

core,监听目录有 10 个,单台网关服务器就有 800 个连接。一个网关集群 10 台的话,8000 个连接对 ETCD

压力蛮大的。我们做的优化是只拿一个 worker 去监听有限的必要目录,和其余 worker 之间共享信息。

+In addition to the problems above, we are still working on several other optimizations.

-### 待优化

+1. Large numbers of APIs: even if APISIX can handle them, we must consider bottlenecks in other dependent components, such as the etcd, Prometheus monitoring, and logging services mentioned above. In the future, we may therefore deploy services in a mix of large shared clusters and small independent clusters; for example, some important services will be deployed in small clusters.

+2. We will trim and optimize the Prometheus monitoring metrics and further optimize DNS service discovery.

-除了上述问题,还有一些问题也正在努力优化中。

+## Expectations for Apache APISIX

-1. 大量 API 的问题,即使 APISIX 能够支持,我们也要考虑其他依赖组件的瓶颈。比如上述的 ETCD、Prometheus

监控和日志服务。所以后续我们可能会采取大集群共享和小集群独立这两种方式来混合部署业务,比如一些重要业务我们会以小集群方式进行部署。

-2. 关于 Prometheus 监控指标,后续我们也会进行相应的缩减和优化,对 DNS 服务发现这块做更多的优化。

-

-## 对 Apache APISIX 的期望

-

-我们希望 Apache APISIX 能在后续的开发更新中,除了支持更多的功能,也可以一直保持性能的高效和稳定。

+We hope that, as Apache APISIX continues to evolve, it will not only support more features but also keep its performance high and stable.

diff --git a/website/blog/2021/09/14/youzan.md b/website/blog/2021/09/14/youzan.md

index cfc7230..d1c6abd 100644

--- a/website/blog/2021/09/14/youzan.md

+++ b/website/blog/2021/09/14/youzan.md

@@ -1,149 +1,147 @@

---

-title: "Apache APISIX 助力有赞云原生 PaaS 平台,实现全面微服务治理"

-author: "戴诺璟"

+title: "Apache APISIX Powers Youzan's Cloud Native PaaS Platform for Comprehensive Microservice Governance"

+author: "Nuojing Dai"

keywords:

- Apache APISIX

-- 有赞

-- 微服务治理

-- 云原生

-description: 本文主要介绍了有赞云原生 PaaS 平台使用 Apache APISIX 的企业案例,以及如何使用 Apache APISIX

作为产品流量网关的具体实例。

+- Youzan

+- Microservice governance

+- Cloud native

+description: This article presents how Youzan uses Apache APISIX in its cloud native PaaS platform, with a concrete example of Apache APISIX serving as the product's traffic gateway.

tags: [User Case]

---

-> 本文主要介绍了有赞云原生 PaaS 平台使用 Apache APISIX 的企业案例,以及如何使用 Apache APISIX

作为产品流量网关的具体实例。

+> This article presents how Youzan uses Apache APISIX in its cloud native PaaS platform, with a concrete example of Apache APISIX serving as the product's traffic gateway.

<!--truncate-->

-作者戴诺璟,来自有赞技术中台,运维平台开发工程师

+Youzan is a retail technology SaaS provider that helps merchants open online stores, run social marketing, improve customer retention and repeat purchases, and expand omnichannel new retail. This year, Youzan's technology middle platform team began designing and implementing a new cloud native PaaS platform that uses a common model to manage releases and microservice governance for all kinds of applications. Apache APISIX plays a key role in it.

-有赞是一家主要从事零售科技 SaaS

服务的企业,帮助商家进行网上开店、社交营销、提高留存复购,拓展全渠道新零售业务。在今年,有赞技术中台开始设计实现新的云原生 PaaS

平台,希望通过一套通用模型来进行各种应用的发布管理和微服务相关治理。而 Apache APISIX 在其中起到了非常关键的作用。

+## Why We Need a Traffic Gateway

-## 为什么需要流量网关

+### Youzan OPS Platform

-### 有赞 OPS 平台

+The Youzan OPS platform started as a monolithic Flask application that mainly supported the business. Over time, many services went online and a lot of business code was deployed, and the platform entered the containerization phase. At that time, the gateway was just one feature inside the Flask application: there was no clear concept of a gateway, and it only forwarded traffic for business applications. The following figure shows the Gateway 1.0 business structure of that time.

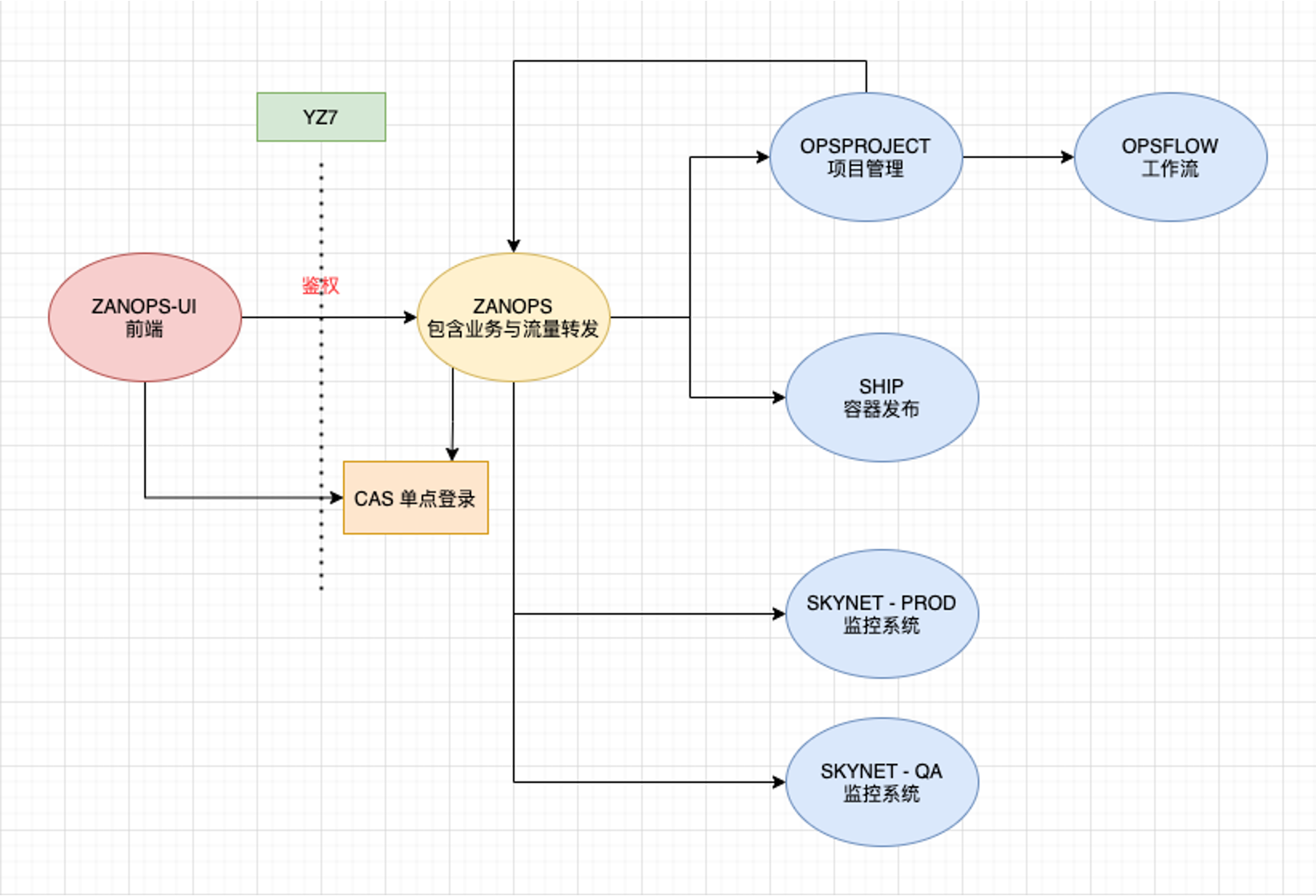

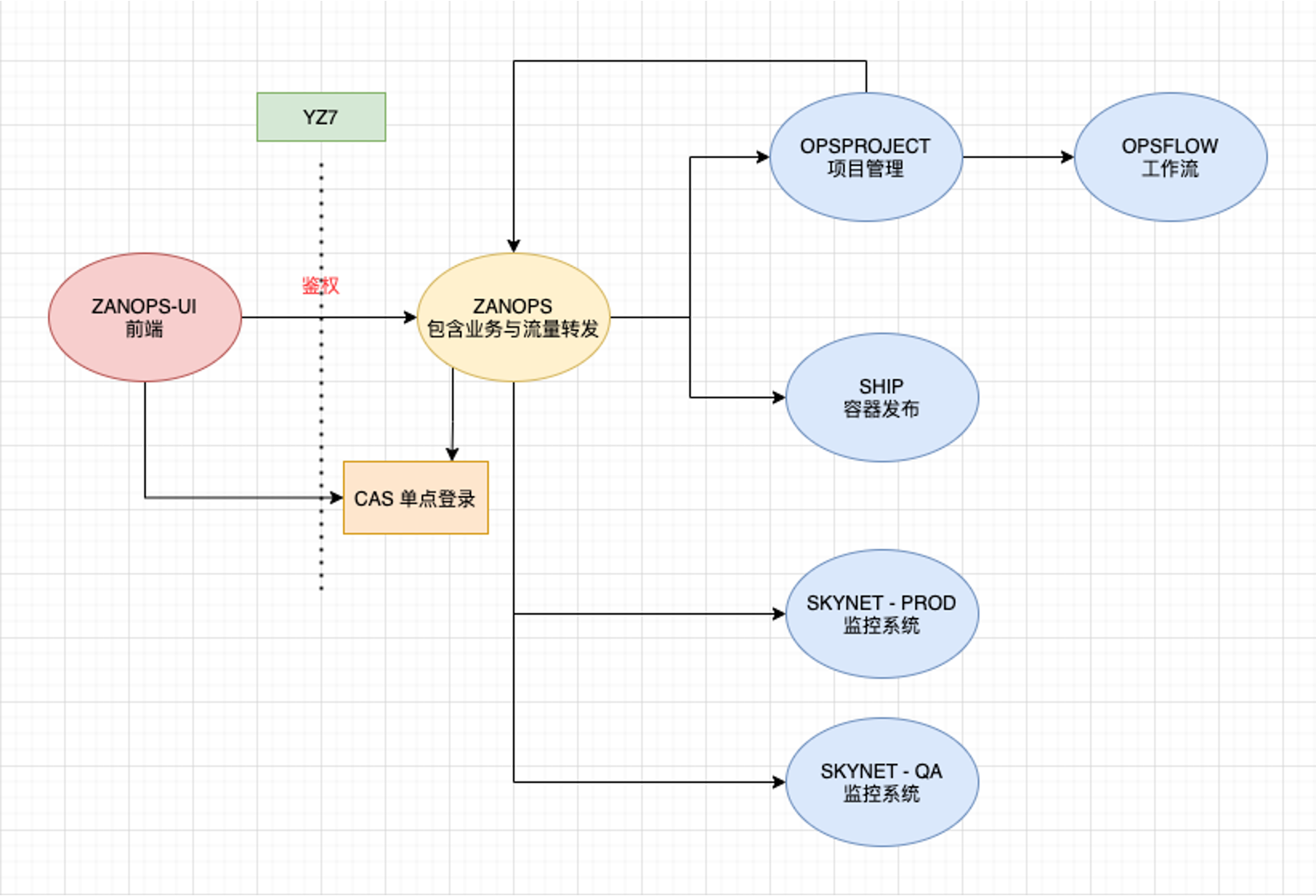

-有赞 OPS 平台是前期基于 FLASK 的单体应用,主要以支持业务为主。后来逐渐上线了很多业务,部署了很多业务端代码,进入容器化阶段。网关在当时只是内部

FLASK 应用的一部分功能,且没有一个明确的网关概念,仅作为业务应用的流量转发功能使用。下图展示的就是当时的网关 1.0 业务结构。

+

-

+Because the whole system focused mainly on the business in the early days, there was little motivation to rebuild it. Starting in 2018, internal discussions made us realize that without good governance at the gateway layer, we would hit increasingly obvious bottlenecks in implementing product features and onboarding services.

-由于前期整个体系主要着重于业务方向,所以没有产生太多的动力去进行改造。从 2018

年开始,通过内部交流我们发现,如果没有一个很好的网关层治理,对后续产品功能的实现和业务接入度上会带来越来越明显的瓶颈。

+### Problems Without Gateway-Layer Governance

-### 没有网关层治理出现的问题

+#### Performance

-#### 问题一:性能方面

+1. Every new back-end service required a code change

+2. The traffic forwarding code was a simple Python implementation and was not designed to meet the requirements of a "gateway"

+3. The performance limits of the Flask framework capped throughput at 120-150 QPS per machine

+4. Reinventing the wheel: each business requirement produced its own set of entry points

+5. Management was cumbersome and operations were complex

-1. 每次新增后端服务,都需要进行编码变更

-2. 流量转发的代码用 Python 简单实现,未按“网关”要求进行设计

-3. Flask 框架的性能限制,单机 QPS 范围局限在 120-150

-4. 重复造轮子:不同的业务需求都生产一套对应入口

-5. 管理麻烦,运维复杂

+Given these problems, our direction was clear: leave specialized work to specialized systems.

-基于这个问题,我们的行动方向是:专业的工作交给专业的系统去做。

+#### Internal Services

-#### 问题二:内部业务方面

+

-

+1. A very large number of internal services to manage (hundreds)

+2. Some services did not integrate with CAS for authentication

+3. Integrating each new service with CAS carried a cost, and the repeated development was time-consuming and laborious

+4. All services were configured directly at the access layer, with no specifications or best practices for internal services

-1. 需要管理的内部服务数量非常多(上百)

-2. 部分服务未对接 CAS 实现鉴权

-3. 新的服务对接 CAS 存在对接成本,重复开发耗时耗力

-4. 所有服务直接配置在接入层,没有内部服务的规范及最佳实践

+With these two sets of problems in mind, we started researching gateway products.

-带着以上这两方面问题,我们就开始对网关类产品进行了相关的调研。

+## Why Apache APISIX

-## 为什么选择了 Apache APISIX

+We initially investigated many gateway systems, such as Apache APISIX, Kong, Traefik, and MOSN, as well as two related internal projects, YZ7 and Tether.

-最早我们也调研了很多的网关系统,比如 Apache APISIX、Kong、traefik 和 MOSN,还有我们公司内部的两个相关项目 YZ7 和

Tether。

+

-

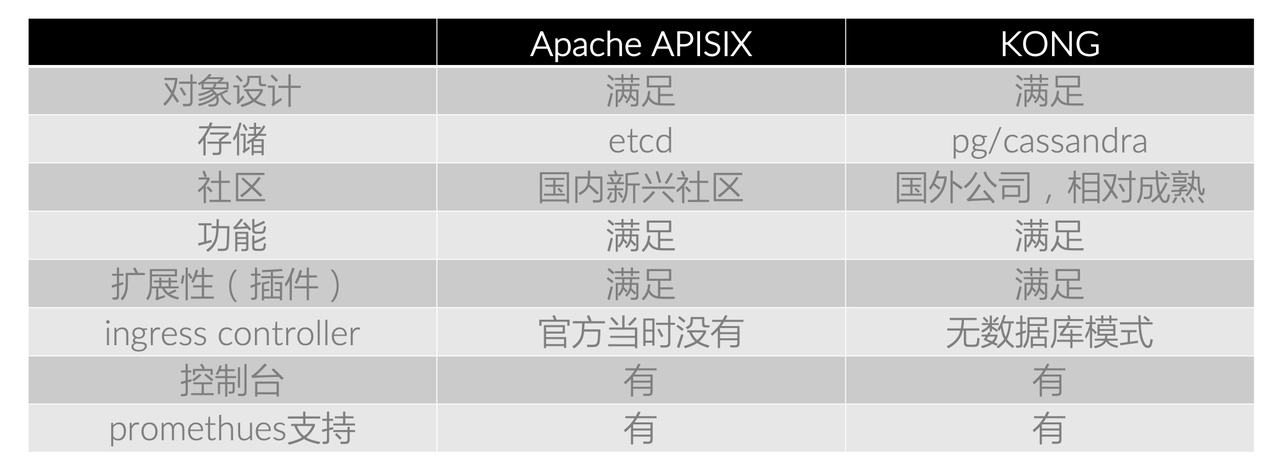

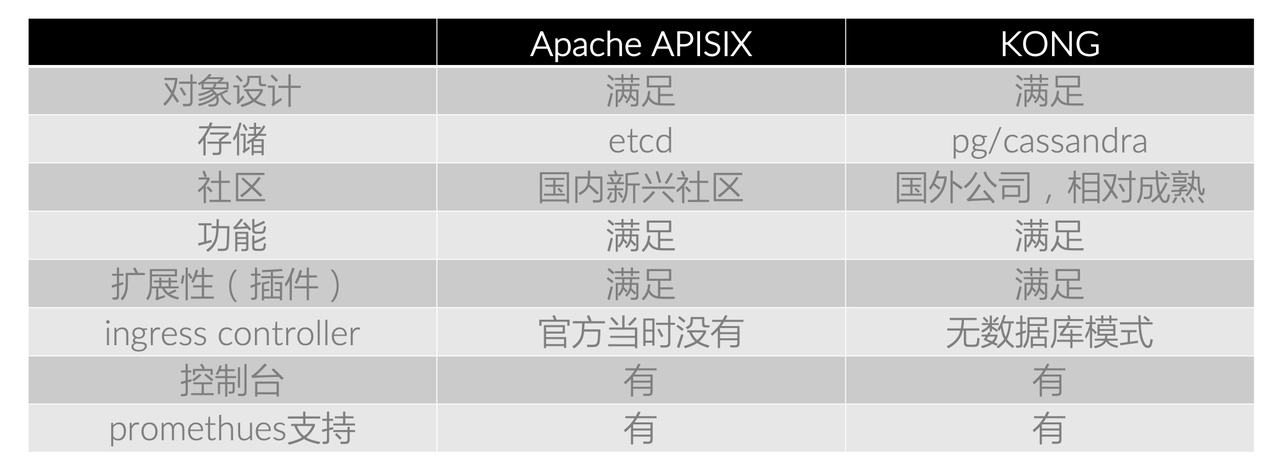

+Considering product maturity and extensibility, we narrowed the final choice down to Kong and Apache APISIX.

-考虑到产品成熟度和可拓展性,最终我们在 Kong 和 Apache APISIX 中进行对比选择。

+

-

+As the comparison above shows, the two are roughly on par in many respects, so storage became our key consideration. Because etcd is already mature in our company's internal operations system, Apache APISIX had the edge over Kong.

-从上图对比中可以发现,两者在很多方面基本不相上下,所以存储端成为我们重点考虑的一点。因为 etcd 在我们公司内部的运维体系上已经比较成熟,所以

Apache APISIX 相较 Kong 则略胜一筹。

+As an open source project, Apache APISIX also offers excellent communication within the Chinese community and fast issue follow-up, and its plugin system is rich and comprehensive, fitting our needs at each stage of use.

-同时考虑到在开源项目层面,Apache APISIX 的国内交流与跟进处理速度上都非常优秀,项目的插件体系比较丰富全面,对各个阶段的使用类型都比较契合。

+So after our research in 2020, we chose [Apache APISIX](https://github.com/apache/apisix) as the traffic gateway for Youzan's upcoming cloud native PaaS platform.

-所以在 2020 年调研之后,最终选择了 [Apache APISIX](https://github.com/apache/apisix)

作为有赞即将推出云原生 PaaS 平台的流量网关。

+## The Product After Adopting Apache APISIX

-## 使用 Apache APISIX 后的产品新貌

+Once we adopted Apache APISIX, the two sets of problems described above were solved one by one.

-当我们开始接入 Apache APISIX 后,前文提到的两方面问题逐一得到了解决。

+### Effect 1: Optimized Architecture Performance

-### 效果一:优化了架构性能

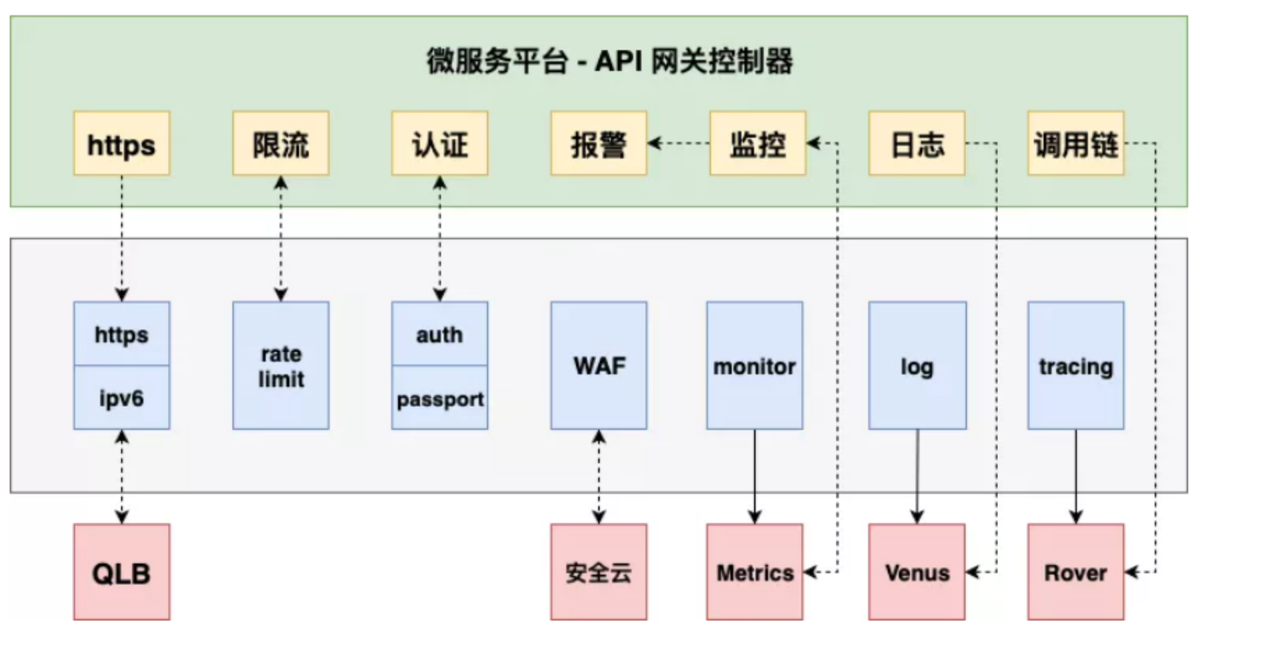

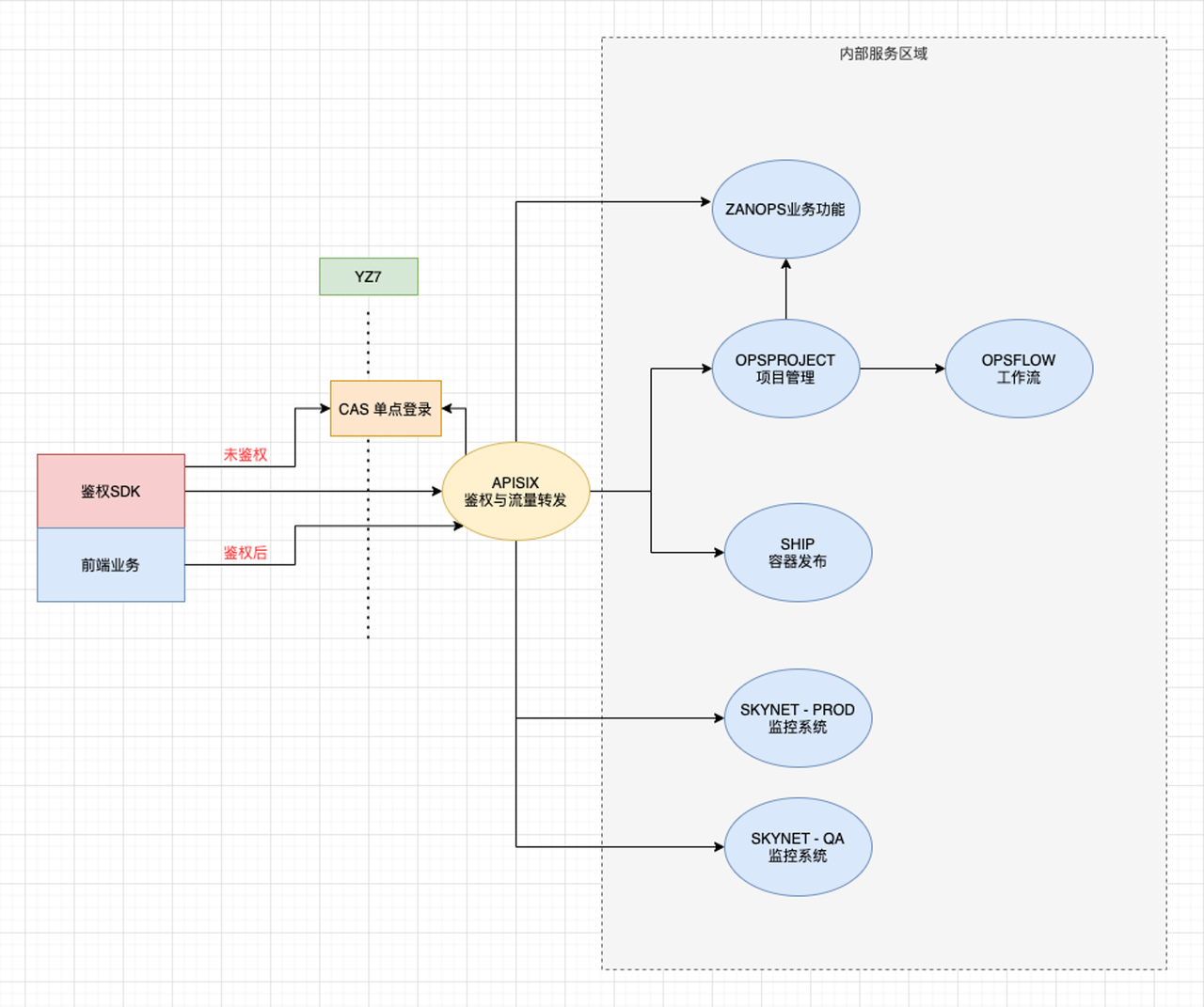

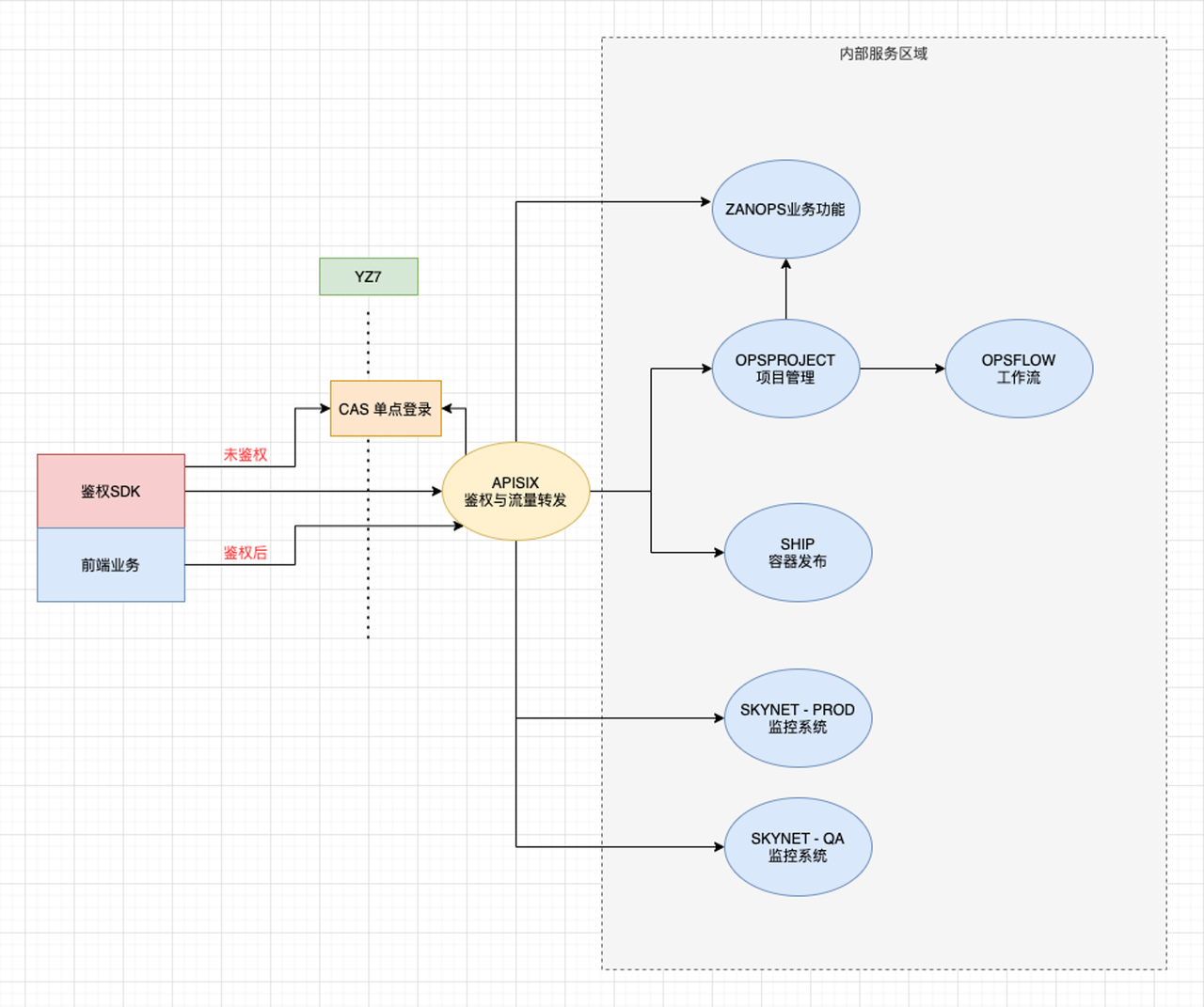

+Apache APISIX is deployed as the entry gateway at the edge of the internal service zone, and all front-end requests pass through it. We used the Apache APISIX plugin mechanism to integrate with the company's internal CAS single sign-on system. On the front end, we provide an authentication SDK that works with the Apache APISIX authentication interface, forming a complete, automated workflow.

-Apache APISIX 作为入口网关部署在内部服务区域边缘,前端的所有请求都会经过它。同时我们通过 Apache APISIX

的插件功能实现了与公司内部 CAS 单点登录系统的对接,之前负责流量转发的账号变为纯业务系统。同时在前端我们提供了一个负责鉴权的 SDK 与 Apache

APISIX 鉴权接口进行对接,达成一套完整又自动化的流程体系。

+

-

+As a result, the problems were solved:

-于是问题得到了解决:

+1. Adding a new back-end service only requires calling the Apache APISIX Admin API to write the new service configuration

+2. Traffic forwarding is handled by Apache APISIX, which excels at the work a gateway should do

+3. The gateway is no longer a performance bottleneck in the architecture

+4. Different business requirements can all be served by the same gateway, and differences in business details can be handled by plugins
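Item 1 in the list above amounts to one Admin API call per new service. The sketch below uses only the Python standard library and builds the request without sending it. It assumes the APISIX 2.x default Admin API address `http://127.0.0.1:9080/apisix/admin`, a placeholder admin key, and a hypothetical route ID, URI, and upstream node.

```python
import json
import urllib.request

ADMIN_API = "http://127.0.0.1:9080/apisix/admin"  # assumption: APISIX 2.x default address
ADMIN_KEY = "replace-with-your-admin-key"         # placeholder, not a real key


def build_route_request(route_id: str, uri: str, nodes: dict) -> urllib.request.Request:
    """Build (but do not send) a PUT request that registers a route for a new service."""
    body = {
        "uri": uri,
        "upstream": {
            "type": "roundrobin",
            "nodes": nodes,  # {"host:port": weight}
        },
    }
    return urllib.request.Request(
        url=f"{ADMIN_API}/routes/{route_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"X-API-KEY": ADMIN_KEY, "Content-Type": "application/json"},
        method="PUT",
    )


req = build_route_request("1", "/orders/*", {"10.0.0.12:8080": 1})
print(req.get_method(), req.full_url)  # PUT http://127.0.0.1:9080/apisix/admin/routes/1
# urllib.request.urlopen(req) would send it to a running APISIX instance.
```

Because `PUT` with an explicit ID is idempotent, re-running the same onboarding step overwrites the route instead of creating duplicates.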

-1. 每次增加新的后端服务,只需调用 Apache APISIX 接口,将新的服务配置写入

-2. 流量转发通过 Apache APISIX 完成,在网关该做的事情上,它完成得十分优秀

-3. 网关不再是架构中的性能瓶颈

-4. 对不同的业务需求,可以统一使用同一个网关来实现;业务细节有差异,可以通过插件实现

+### Effect 2: Internal Service Access Standardization

-### 效果二:内部服务接入标准化

+With Apache APISIX in place, new internal services get authentication built in, so onboarding costs are very low and teams can start writing business code right away. When a new service is onboarded, its routes are configured according to the internal service specification, and back-end services uniformly receive the authenticated user identity, saving time and effort.

-接入 Apache APISIX

后,公司新的内部服务接入时将自带鉴权功能,接入成本极低,业务方可以直接开始开发业务代码。同时在新服务接入时,按内部服务的规范进行相关路由配置,后端服务可以统一拿到鉴权后的用户身份,省时省力。

+Here is a brief overview of some adjustments we made for internal services.

-具体关于内部服务的一些调整细节这里简单介绍一下。

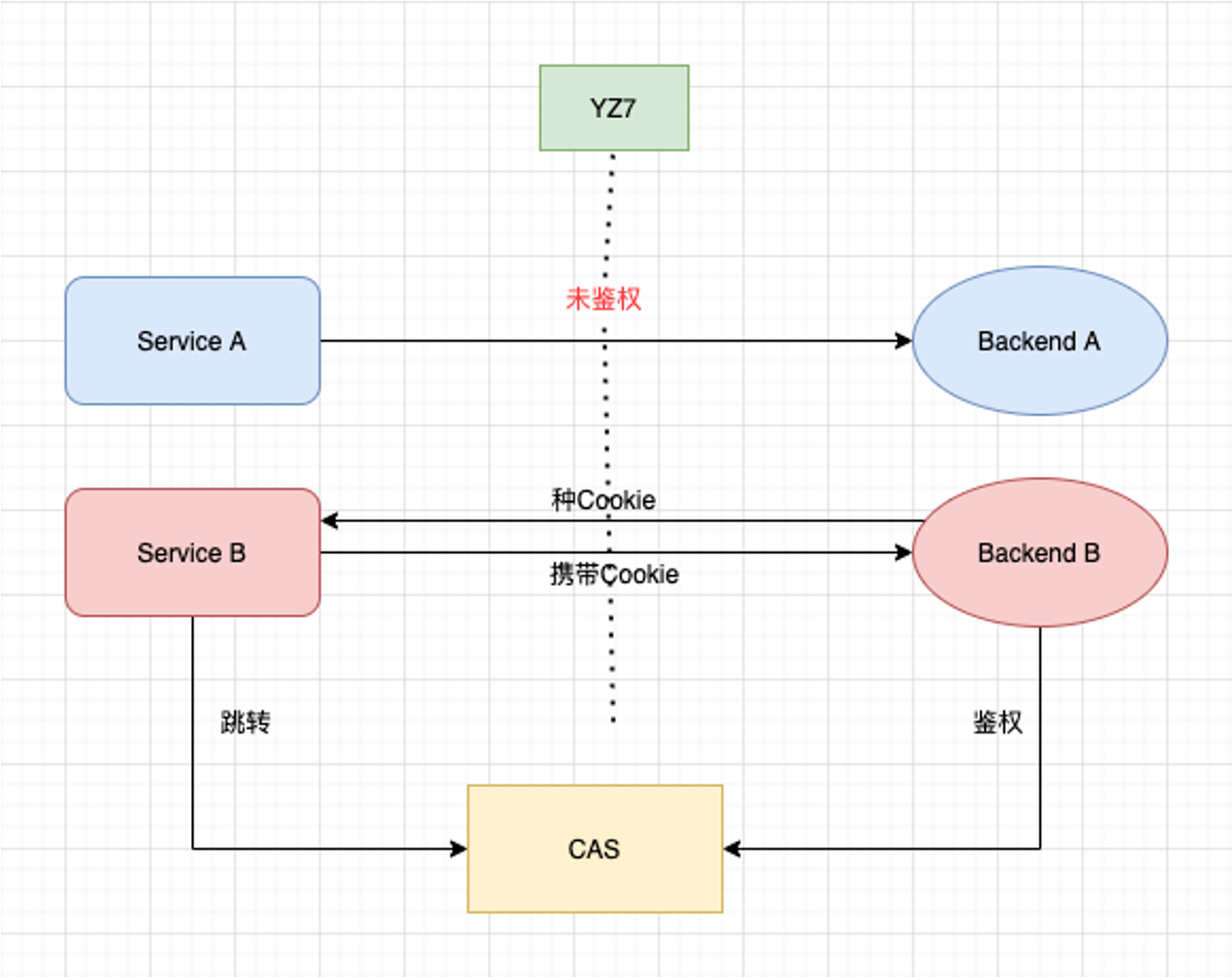

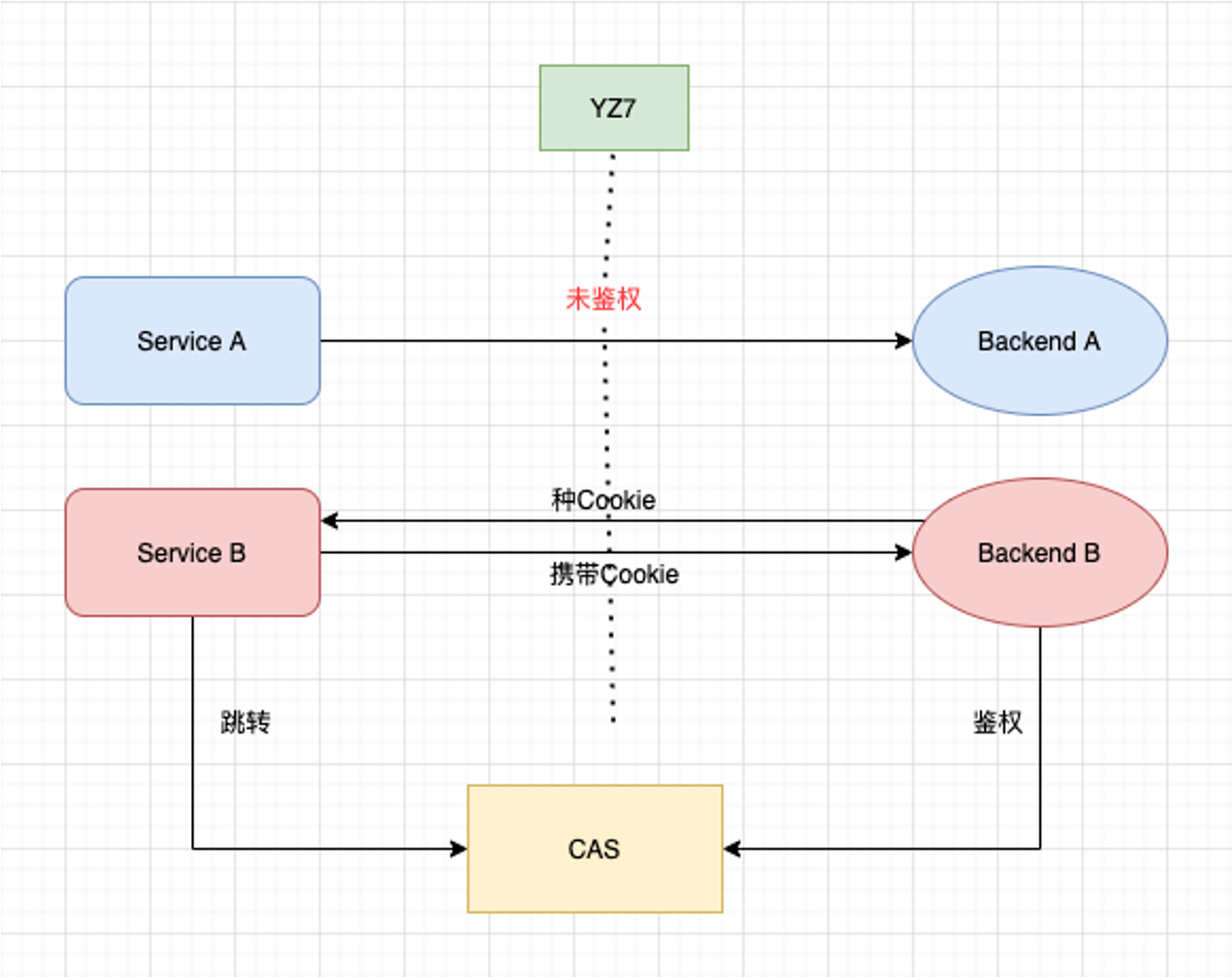

+#### Authentication Plugin OPS-JWT-Auth

-#### 鉴权插件 OPS-JWT-Auth

+The authentication plugin is built on JWT authentication. When a user visits the front end, the front end first calls the SDK to obtain a usable JWT token locally. It then retrieves the user's valid information through the path shown below and keeps it in front-end storage, completing login authentication.

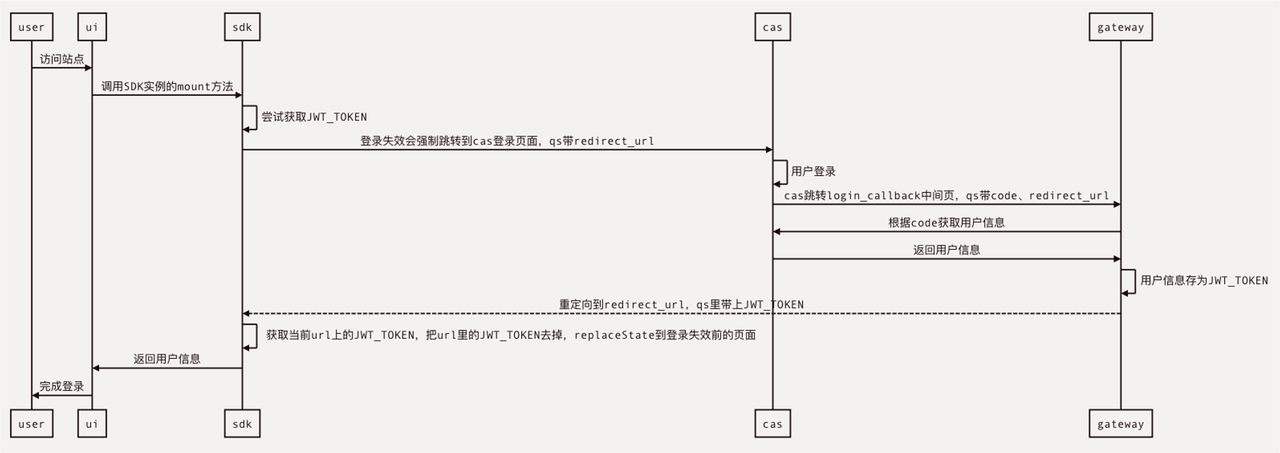

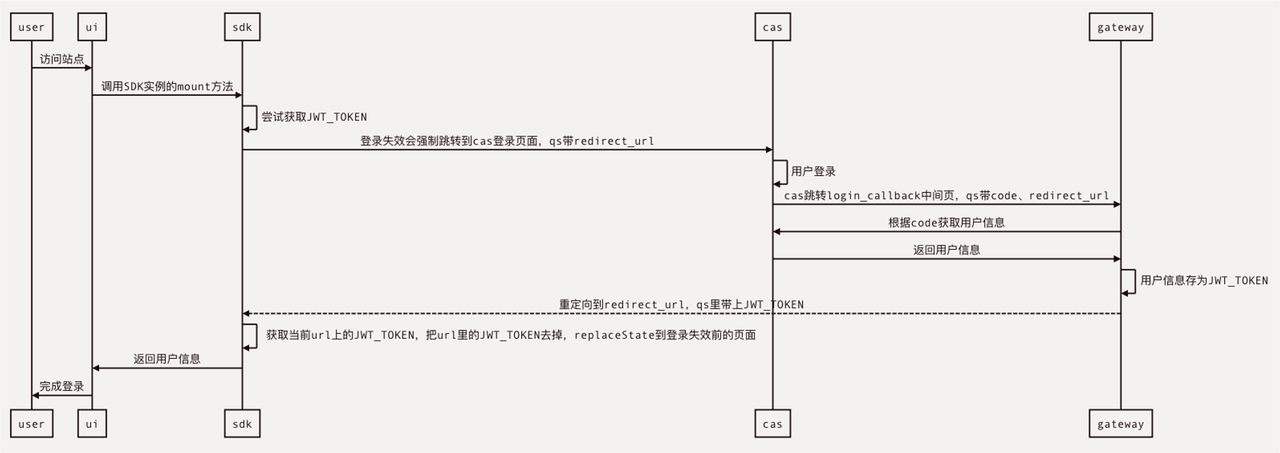

-鉴权插件是基于 JWT-Auth 协议去开发的,用户访问前端时,前端会先去调用 SDK,去前端本地获取可用的

JWT-Token。然后通过下图的路径获得用户有效信息,放在前端的某个存储里,完成登录鉴权。

+

-

+#### Deployment Configuration Upgrade

-#### 部署配置升级

+At the deployment level, we arrived at the current multi-cluster deployment after three iterations, starting from a simple version.

-在部署层面,我们从简单版本经历三次迭代后实现了目前的多集群配置部署。

+- Version 1: 4 independent nodes across two data centers, with the management program writing to each node's etcd separately

+- Version 2: 4 independent nodes across two data centers, with a three-node etcd cluster in the primary data center

+- Version 3: 6 independent nodes across three data centers, with an etcd cluster spanning all three data centers

-* 版本一:双机房 4 个独立节点,管理程序分别写入每个节点的 etcd

-* 版本二: 双机房 4 个独立节点,主机房三节点 etcd 集群

-* 版本三: 三机房 6 个独立节点,三机房 etcd 集群

+For now, computing and storage are still deployed together. Later, we will deploy a truly highly available etcd cluster so that the Apache APISIX runtime can be separated from the control plane and deployed in stateless mode.

-目前我们还是计算与存储混合部署在一起,后续我们会去部署一个真正高可用的 etcd 集群,这样在管控平面 Apache APISIX

运行时就可以分离出来,以无状态模式部署。

+#### New Authentication Plugin PAT-Auth

-#### 新增鉴权插件 PAT-Auth

+This year we added a Personal Access Token (PAT) authentication plugin. Similar to calling the GitHub Open API, it generates a personal token that can call the Open API as an individual.

-在今年我们又新增了 Person Access Token(PAT)的鉴权插件,这个功能类似于在 GitHub 上去调用 Open API

一样,会生成一个个人 Token,可以以个人身份去调用 Open API。

+Our own operations platform has similar needs: for example, when local development plugins need to access interfaces on the cloud platform as an individual, personal tokens are convenient because they let developers authorize themselves.
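The PAT-Auth plugin is internal to Youzan, and the article does not describe how tokens are stored. A common approach, sketched here under that assumption, is to show the token to its owner once and keep only its SHA-256 digest on the server; the `PersonalTokenStore` class and the `pat_` prefix are hypothetical.

```python
import hashlib
import secrets
from typing import Dict, Optional


class PersonalTokenStore:
    """Hypothetical PAT store: tokens are shown once; only their SHA-256 digests are kept."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}  # hex digest -> user name

    @staticmethod
    def _digest(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, user: str) -> str:
        token = "pat_" + secrets.token_urlsafe(32)  # 32 random bytes, URL-safe text
        self._owners[self._digest(token)] = user
        return token  # hand this to the user; the server cannot recover it later

    def authenticate(self, token: str) -> Optional[str]:
        """Return the owner of the token, or None if it is unknown."""
        return self._owners.get(self._digest(token))


store = PersonalTokenStore()
token = store.issue("alice")
print(store.authenticate(token))        # alice
print(store.authenticate("pat_guess"))  # None
```

Storing digests instead of raw tokens means a leak of the token table does not hand out working credentials.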

-因为我们自己的运维平台也有一些这样的需求,比如本地的一些开发插件需要以个人身份去访问云平台上的接口时,这种情况下个人 Token

方式就比较方便,允许开发自己给自己授权。

+Since Apache APISIX 2.2, multiple auth plugins can be used together, so a single Consumer can now have several auth plugins configured.
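For illustration, a consumer carrying credentials for two built-in auth plugins, `key-auth` and `jwt-auth`, could be described by the body built below. Youzan's PAT-Auth and OPS-JWT-Auth plugins are not public, so the built-in plugins stand in for them; the username and credentials are placeholders.

```python
import json

# Hypothetical consumer with credentials for two authentication plugins at once.
consumer = {
    "username": "ops_dev",
    "plugins": {
        "key-auth": {"key": "ops-dev-key"},                          # placeholder API key
        "jwt-auth": {"key": "ops-dev-jwt", "secret": "replace-me"},  # placeholder JWT credentials
    },
}

# Payload for PUT /apisix/admin/consumers (consumers are identified by username).
body = json.dumps(consumer, indent=2)
print(body)
```

Each route then enables whichever auth plugin it needs, and requests authenticated by either plugin resolve to the same consumer.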

-目前 Apache APISIX 2.2 版本后已支持多个 Auth 插件使用,现在可以支持一个 Consumer 运行多个 Auth 插件的场景实现。

+## More Plans to Explore

-## 更多玩法待开发

+### Automating Upgrades and Operations

-### 升级运维自动化

+We went through several version upgrades while using Apache APISIX, and each upgrade required more or less rework for compatibility, followed by production changes, which made operations inefficient. Next, we will try to deploy a three-data-center etcd cluster on the storage plane while containerizing the Apache APISIX runtime plane for automated releases.

-在使用 Apache APISIX

的过程中,我们也经历了几次版本变动。但每次升级,都或多或少出现因为兼容性而导致改造开发,完成后进行线上变更,运维效率较低。所以后续我们会尝试在存储面部署三机房

etcd 集群的同时,将 Apache APISIX 运行面容器化实现自动发布。

+### Use the Traffic Split Plugin

-### traffic split 插件使用

-

-[traffic

split](https://github.com/apache/apisix/blob/master/docs/en/latest/plugins/traffic-split.md)

是 Apache APISIX 在最近几个版本中引入的插件,主要功能是进行流量分离。有了这个插件后,我们可以根据一些流量头上的特征,利用它去自动完成相关操作。

+[traffic split](https://github.com/apache/apisix/blob/master/docs/en/latest/plugins/traffic-split.md) is a plugin introduced in recent Apache APISIX releases whose main function is to split traffic. With it, we can automatically perform the corresponding operations based on characteristics in request headers.

-如上图在路由配置上引入 traffic split 插件,如果当有 Region=Region1 的情况,便将其路由到

Upstream1。通过这样的规则配置,完成流量管控的操作。

+As shown above, the traffic split plugin is added to the route configuration so that requests carrying Region=Region1 are routed to Upstream1. Rules like this provide traffic control.
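A route configured this way might look like the body built below. The plugin name and its `rules`, `match`, `vars`, and `weighted_upstreams` fields come from the traffic-split plugin, while the upstream IDs, the `Region` header value, and the `pick_upstream` helper are hypothetical. The helper is a simplified local simulation of the rule, not how APISIX evaluates it.

```python
import json
from typing import Dict, List

# Route body: requests whose "Region" header equals "Region1" go to upstream 1;
# all other requests use the route's default upstream.
route = {
    "uri": "/*",
    "upstream_id": 2,  # hypothetical default upstream
    "plugins": {
        "traffic-split": {
            "rules": [
                {
                    "match": [{"vars": [["http_region", "==", "Region1"]]}],
                    "weighted_upstreams": [{"upstream_id": 1, "weight": 1}],
                }
            ]
        }
    },
}
body = json.dumps(route)  # payload for PUT /apisix/admin/routes/<id>


def pick_upstream(route: dict, headers: Dict[str, str]) -> int:
    """Simplified local simulation: only '==' is handled and weights are ignored."""
    # Nginx exposes request headers as http_<lower-case name with '-' replaced by '_'>.
    variables = {"http_" + k.lower().replace("-", "_"): v for k, v in headers.items()}

    def holds(condition: List[str]) -> bool:
        var, op, value = condition
        if op != "==":
            raise NotImplementedError(f"operator {op!r} is not simulated")
        return variables.get(var) == value

    for rule in route["plugins"]["traffic-split"]["rules"]:
        # This sketch treats match entries as alternatives and vars within one entry as all-required.
        if any(all(holds(c) for c in m["vars"]) for m in rule["match"]):
            return rule["weighted_upstreams"][0]["upstream_id"]
    return route["upstream_id"]


print(pick_upstream(route, {"Region": "Region1"}))  # 1
print(pick_upstream(route, {"Region": "Region2"}))  # 2
```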

-### 东西向流量管理

+### East-West Traffic Management

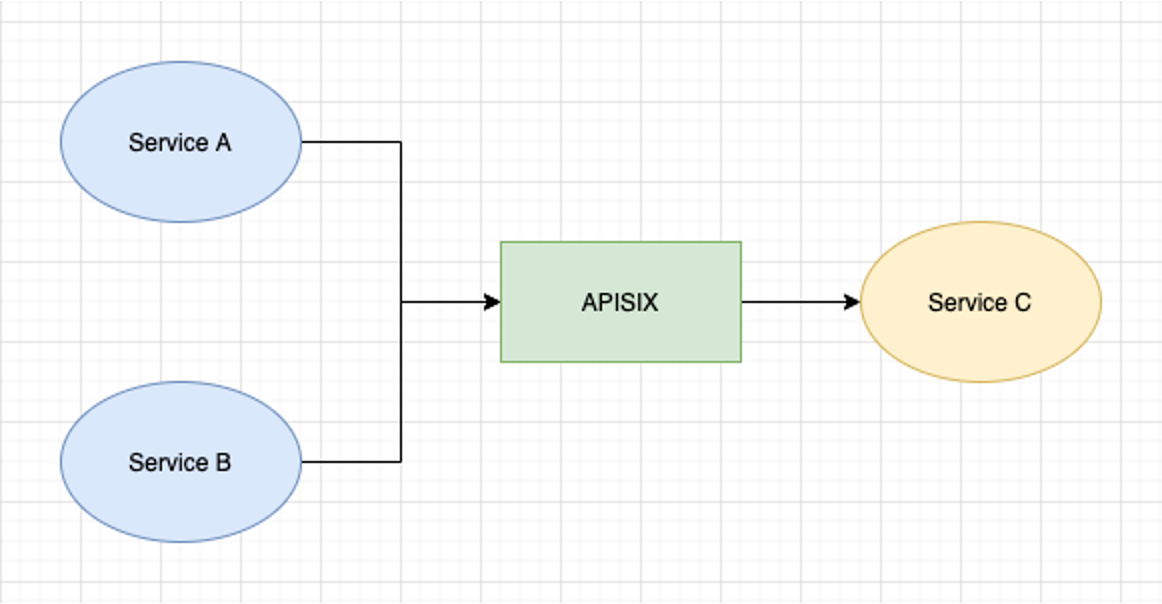

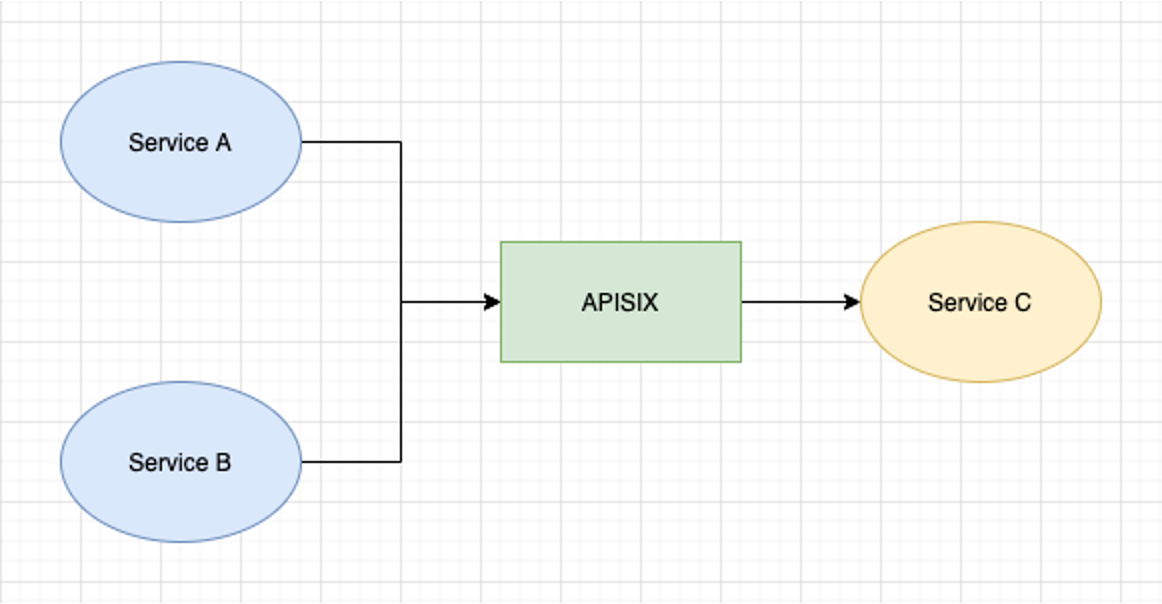

-我们的使用场景中更多是涉及到在内网多个服务之间去做服务,调用鉴权时可以依靠 Apache APISIX 做流量管理。服务 A、服务 B

都可以通过它去调用服务 C,中间还可以加入鉴权的插件,设定其调用对象范围、环境范围或者速率和熔断限流等,做出类似这样的流量管控。

+Our scenarios mostly involve calls between multiple services on the internal network, and we can rely on Apache APISIX for traffic management and call authentication. Services A and B can both call service C through it, and authentication plugins can be added in between to restrict the range of callers, the range of environments, or the call rate, and to apply circuit breaking and rate limiting.

-

+

-### 内部权限系统对接

+### Integrating With the Internal Permission System

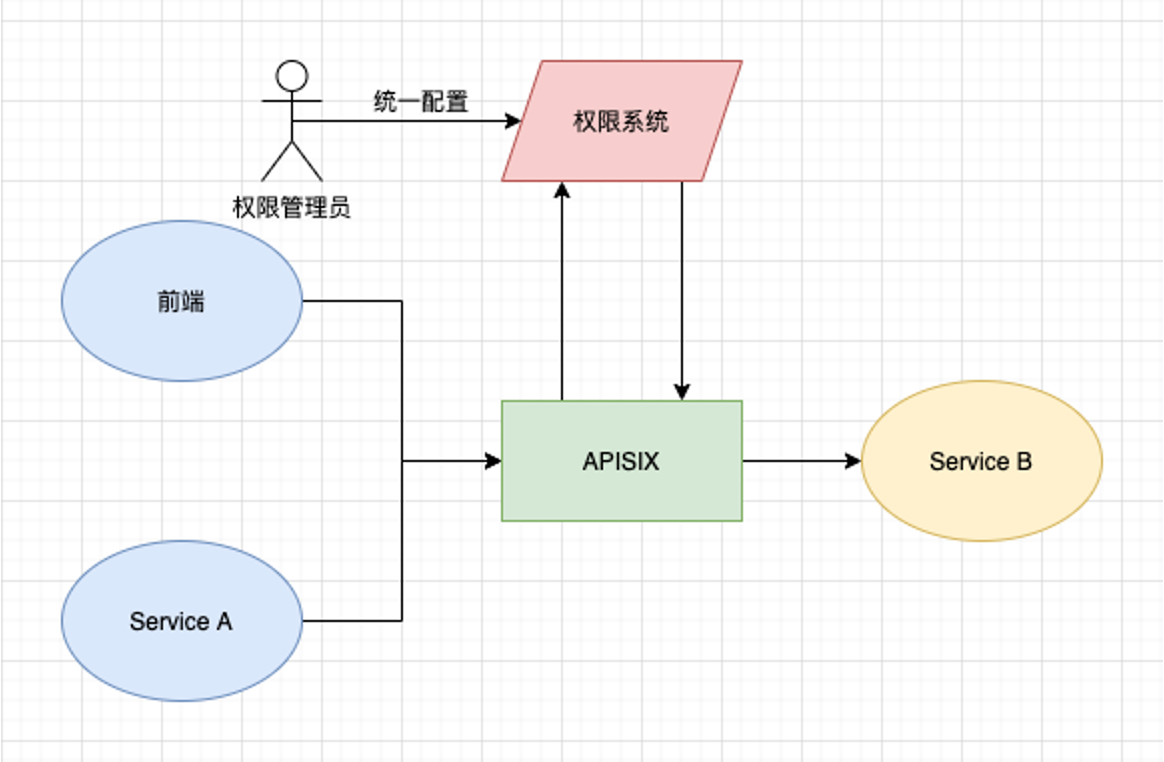

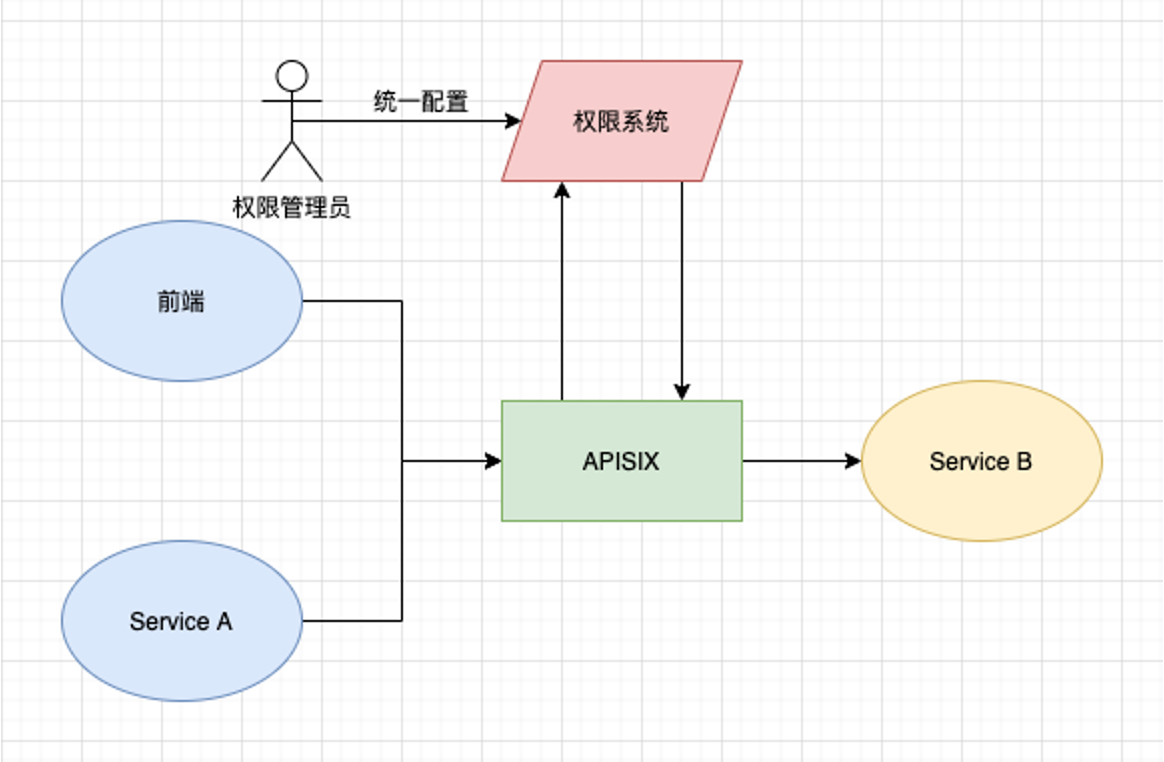

-之后我们也打算将公司的权限系统与 Apache APISIX

进行对接,鉴权通过后,判定用户是否有权限去访问后端的某个资源,权限的管理员只需在管控平面上做统一配置即可。

+Next, we plan to integrate Apache APISIX with the company's permission system. After authentication, the gateway will determine whether the user may access a given back-end resource, and permission administrators only need to configure it once, uniformly, on the control plane.

-

+

-这样带来的一个好处就是后端的所有服务不需要各自去实现权限管控,因为当下所有流量都是经过网关层处理。

+One benefit is that back-end services no longer need to implement permission control individually, since all traffic now passes through the gateway layer.

-### Go Plugin 开发

+### Go Plugin Development

-目前 Apache APISIX 在计算语言层面已支持多计算语言,比如 Java、Go 以及 Python。刚好我们最近实现的云原生 PaaS

平台,也开始把技术栈从 Python 往 Go 上转移。

+Apache APISIX now supports plugins written in multiple programming languages, such as Java, Go, and Python. Coincidentally, our recently built cloud native PaaS platform is also moving its technology stack from Python to Go.

-希望后续在使用 Apache APISIX 的过程中,可以用 Go 去更新一些我们已经实现了的插件,期待在后续的迭代中给有赞产品带来更多的好处。

+We hope to rewrite some of our existing plugins in Go as we continue using Apache APISIX, and we look forward to the benefits this will bring to Youzan's products in future iterations.

diff --git a/website/blog/2021/09/18/xiaodian-usercase.md b/website/blog/2021/09/18/xiaodian-usercase.md

index 44354fa..9366912 100644

--- a/website/blog/2021/09/18/xiaodian-usercase.md

+++ b/website/blog/2021/09/18/xiaodian-usercase.md

@@ -1,144 +1,143 @@

---

-title: "Apache APISIX 助力便利充电创领者小电,实现云原生方案"

-author: "孙冉"

+title: "Apache APISIX Helps DIAN, a Pioneer in Convenient Charging, Implement a Cloud Native Solution"

+author: "Ran Sun"

keywords:

- Apache APISIX

-- 小电

-- 云原生

-- 容器化

-description: 本文介绍了国内便利充电创领者——小电通过应用 Apache APISIX,进行公司产品架构的云原生项目搭建的相关背景和实践介绍

+- DIAN

+- Cloud Native

+- Containerization

+description: This article introduces the background and practice of DIAN, a leading convenient-charging provider in China, using Apache APISIX to build a cloud native architecture for its products.

tags: [User Case]

---

-> 本文介绍了国内便利充电创领者——小电通过应用 Apache APISIX,进行公司产品架构的云原生项目搭建的相关背景和实践介绍

-> 作者孙冉,运维专家。目前就职于小电平台架构部,主要负责 K8s 集群和 API 网关的相关部署。

+> This article introduces the background and practice of using Apache APISIX to build a cloud native project at DIAN. The author, Ran Sun, is an operations expert in DIAN's platform architecture department, mainly responsible for deploying K8s clusters and the API gateway.

<!--truncate-->

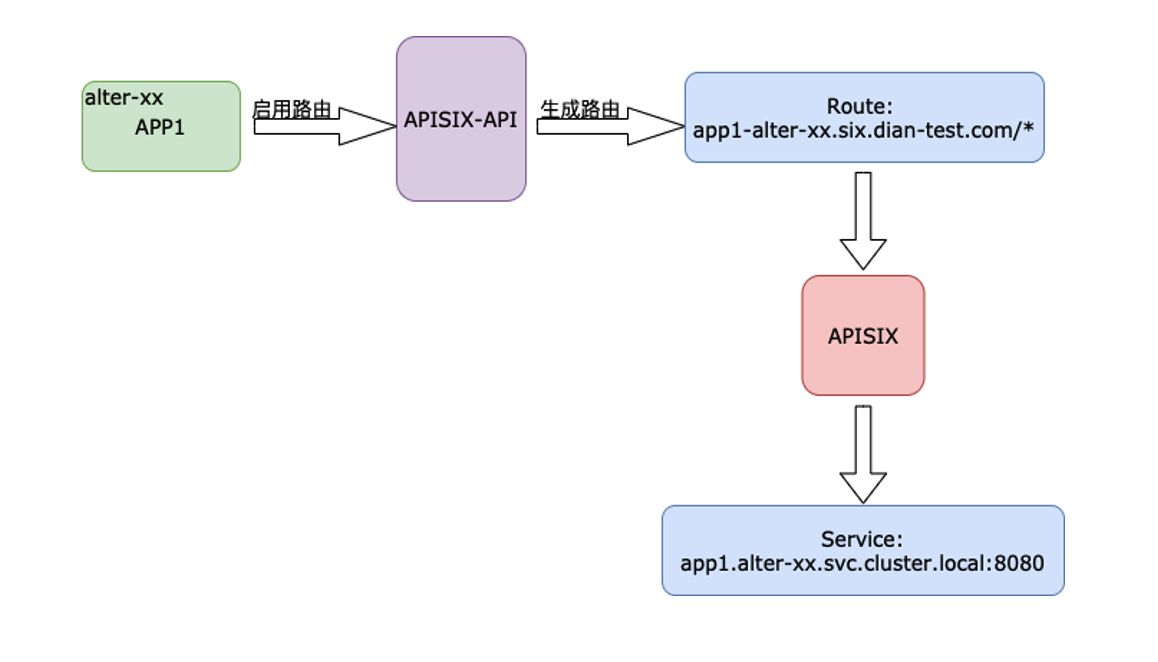

-## 业务背景

+## Background

-小电作为国内共享充电宝服务平台,目前还处于初创阶段。从运维体系、测试环境等方面来讲,当下产品的业务主要面临了以下几个问题:

+As a power bank sharing platform in China, DIAN is still at an early stage. In terms of its operations system, test environments, and so on, the business currently faces the following problems:

-- VM 传统模式部署,利用率低且不易扩展

-- 开发测试资源抢占

-- 多套独立的测试环境(k8s),每次部署维护步骤重复效率低

-- 使用 Nginx 配置管理,运维成本极高

+- Traditional VM-based deployment, with low utilization and poor scalability
+- Developers and QA engineers compete for the same resources
+- Multiple independent test environments (K8s), where repeating the same maintenance steps for every deployment is inefficient
+- Routing is managed through Nginx configuration, which makes operating costs extremely high

-在 2020 年初,我们决定启动容器化项目,打算寻找一个现有方案来进行上述问题的解决。

+At the beginning of 2020, we decided to launch a containerization project and to look for an existing solution to the problems above.

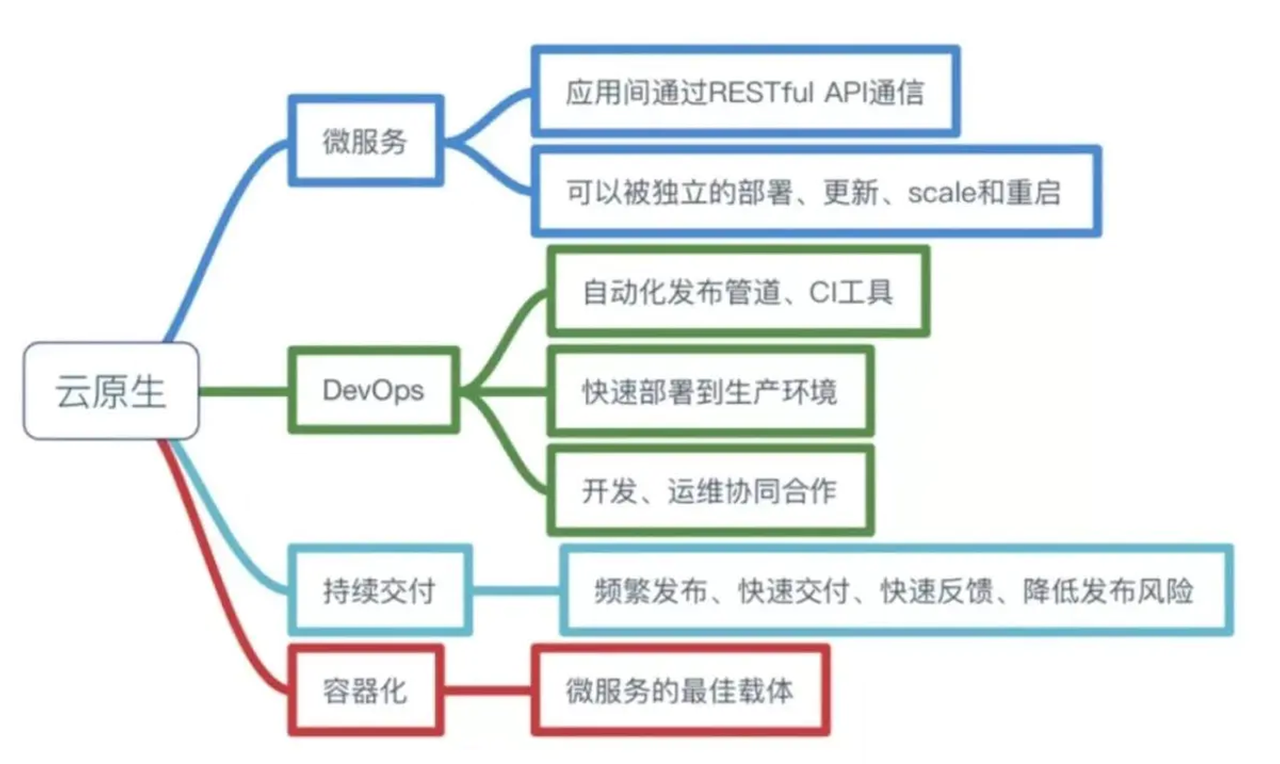

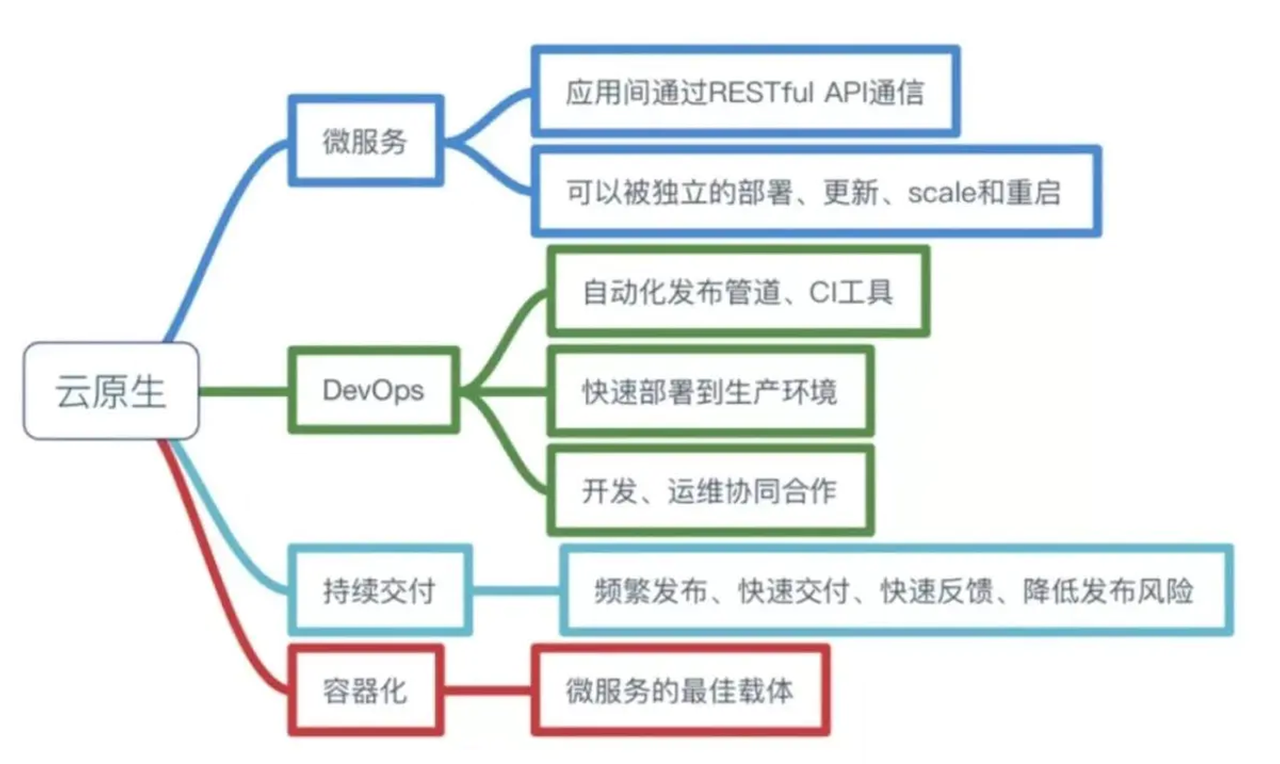

-目前公司是以「拥抱云原生」的态度来进行后续业务的方案选择,主要看重云原生模式下的微服务改造、DevOps、持续交付以及最重要的容器化特性。

+The company now chooses solutions for future business with an "embrace cloud native" attitude, focusing on microservice transformation in cloud native mode, DevOps, continuous delivery and, most importantly, containerization.

-

+

-## 为什么需要 Apache APISIX

+## Why Apache APISIX

-基于上述云原生模式的选择,我们开启了容器化方案搭建。方案主要有三部分组成:

+Having chosen the cloud native model above, we started building a containerization solution. The solution has three main components:

-1. **自研 Devops 平台 - DNA**:这个平台主要是用于项目管理、变更管理(预发、生产环境)、应用生命周期管理(DNA Operator)和

CI/CD 相关功能的嵌入。

-2. **基于 k8s Namespace

的隔离**:之前我们所有的开发项目环境,包括变更环境等都全部注册在一起,所以环境与环境之间的相互隔离成为我们必要的处理过程。

-3. **动态管理路由的网关接入层**:考虑到内部的多应用和多环境,这时就需要有一个动态管理的网关接入层来进行相关的操作处理。

+1. **Self-developed DevOps platform, DNA**: this platform is mainly used for project management, change management (pre-release and production environments), application lifecycle management (DNA Operator), and embedding CI/CD-related functionality.
+2. **Isolation based on K8s Namespaces**: previously, all of our development project environments, including change environments, were registered together, so isolating environments from one another became a necessary step.
+3. **A gateway access layer with dynamic route management**: given our many internal applications and environments, we need a dynamically managed gateway access layer to handle the related operations.

-### 网关选择

+### Gateway Selection

-在网关选择上,我们对比了以下几个产品:OpenShift Route、Nginx Ingress 和 Apache APISIX。

+For gateway selection, we compared OpenShift Route, Nginx Ingress, and Apache

APISIX.

-OpenShift 3.0 开始引入 OpenShift Route ,作用是通过 Ingress Operator 为 Kubernetes 提供

Ingress Controller,以此来实现外部入栈请求的流量路由。但是在后续测试中,功能支持方面不完善且维护成本很高。同时 Nginx Ingres

也存在类似的问题,使用成本和运维成本偏高。

+OpenShift 3.0 introduced OpenShift Route, which provides an Ingress Controller for Kubernetes through the Ingress Operator to route inbound traffic from external requests. However, in subsequent tests we found its feature support incomplete and its maintenance costs very high. Nginx Ingress had similar problems, with high usage and operating costs.

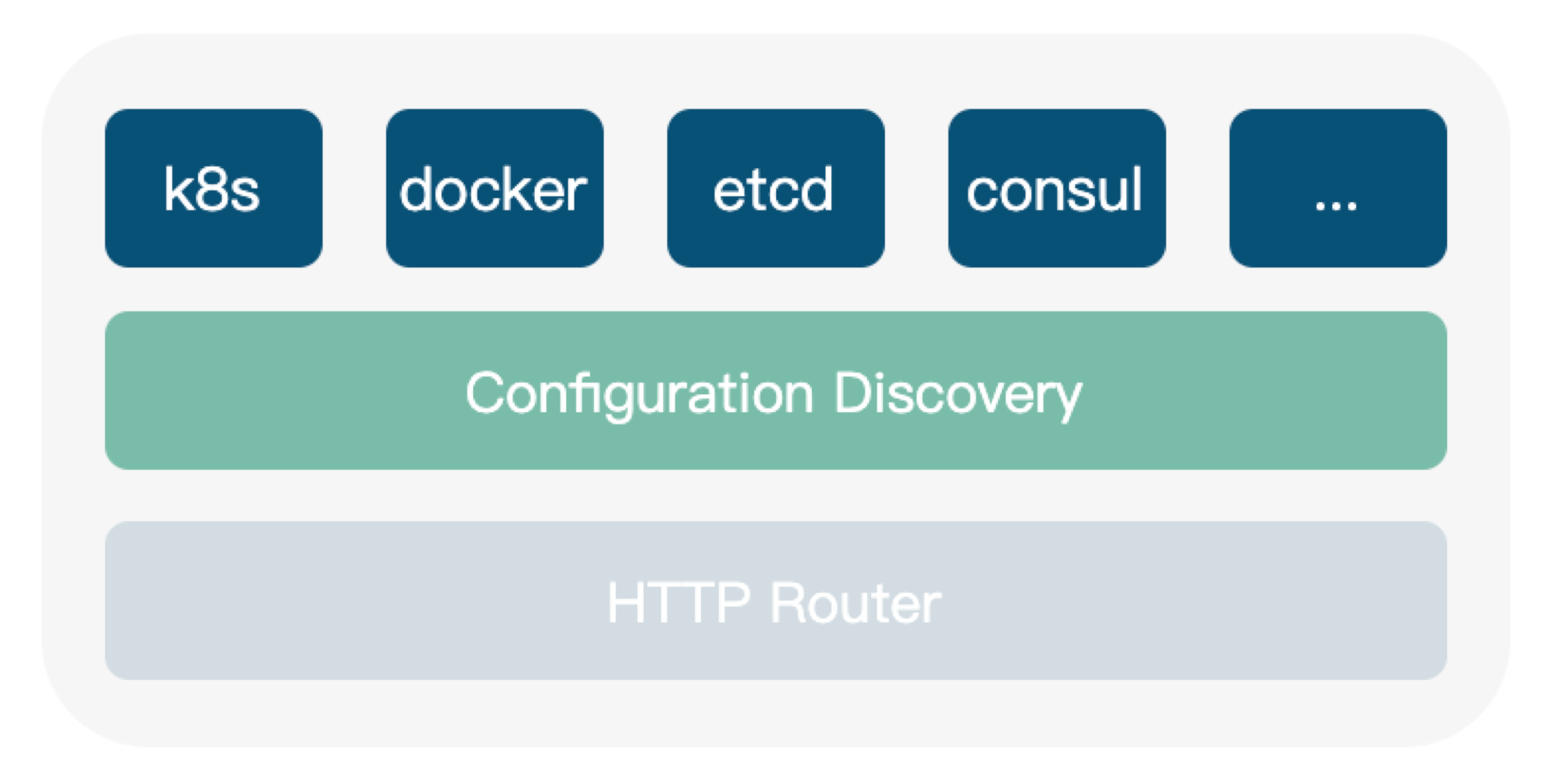

-在参与 Apache APISIX 的调研过程中我们发现,Apache APISIX 的核心就是提供路由和负载均衡相关功能,同时还支持:

+While researching Apache APISIX, we found that its core job is to provide routing and load balancing, and that it also supports:

-- 动态路由加载、实时更新

-- etcd 存储下的无状态高可用模式

-- 横向扩展

-- 跨源资源共享(CORS)、Proxy Rewrite 插件

-- API 调用和自动化设置

-- Dashboard 清晰易用

+- Dynamic route loading and real-time updates
+- Stateless high availability with etcd as storage
+- Horizontal scaling
+- Cross-origin resource sharing (CORS) and Proxy Rewrite plugins
+- API calls and automated configuration
+- A clear, easy-to-use Dashboard

-当然,作为一个开源项目,Apache APISIX 有着非常高的社区活跃度,也符合我们追求云原生的趋势,综合考虑我们的应用场景和 Apache APISIX

的产品优势,最终将项目环境中所有路由都替换为 Apache APISIX。

+As an open source project, Apache APISIX also has a very active community and fits our push toward cloud native. Weighing our application scenarios against Apache APISIX's strengths, we finally replaced all routes in our project environments with Apache APISIX.

-## 应用 Apache APISIX 后的变化

+## Changes with Apache APISIX

-### 整体架构

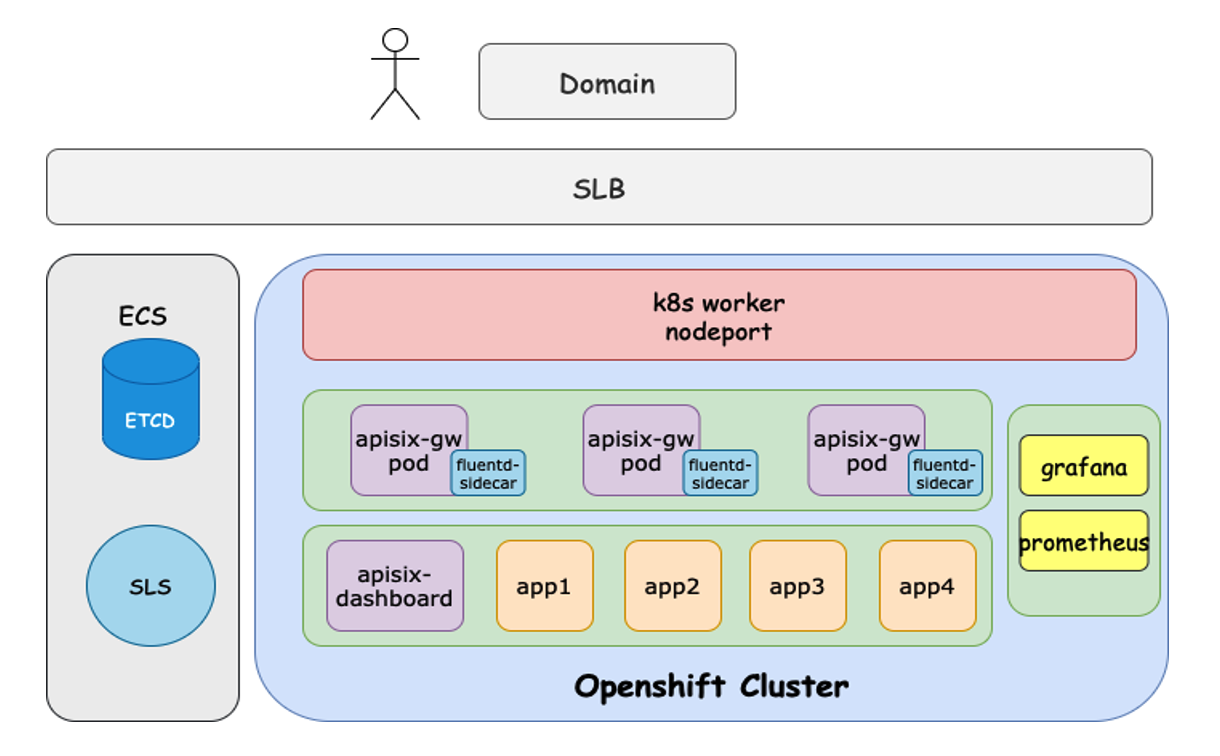

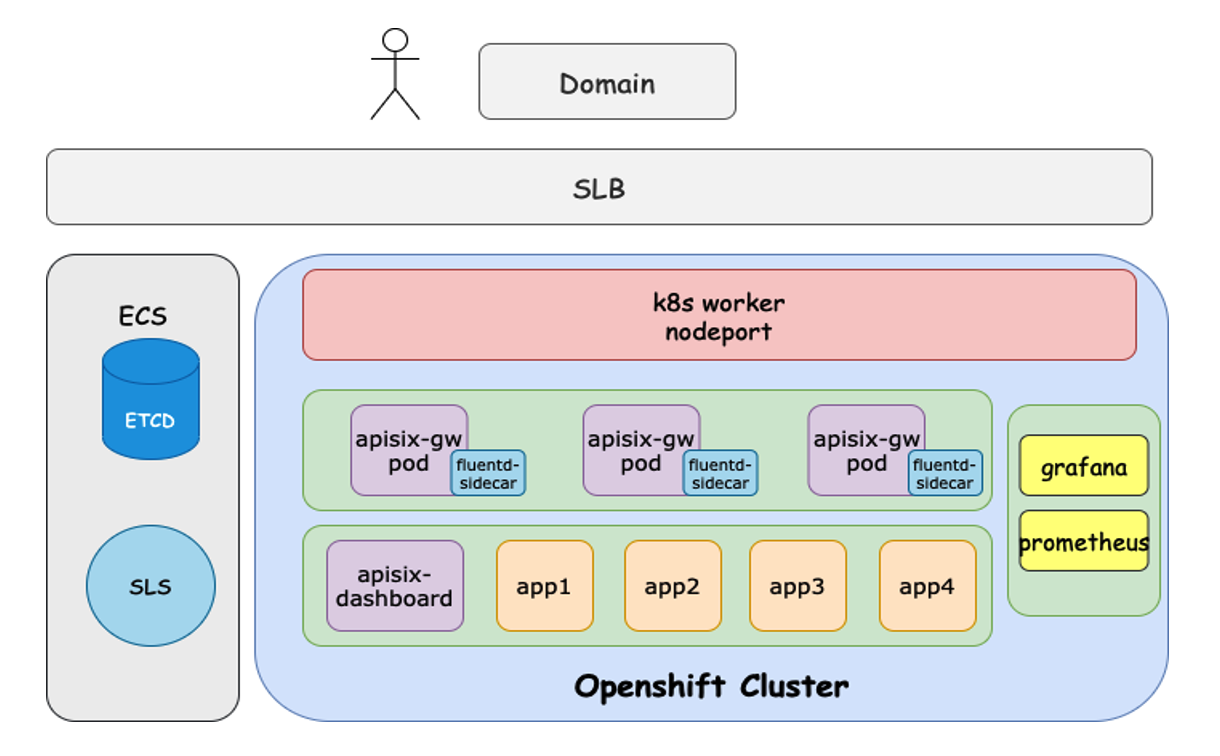

+### Overall Architecture

-我们目前的产品架构与在 K8s 中使用 Apache APISIX 大体类似。主要是将 Apache APISIX 的 Service 以

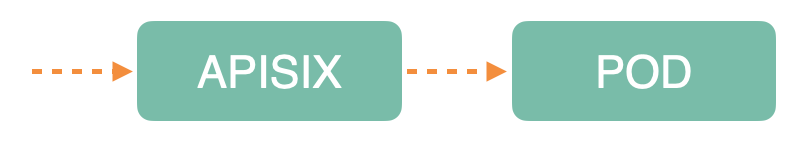

LoadBalancer 类型暴露到外部。然后用户通过请求访问传输到 Apache APISIX,再将路由转发到上游的相关服务中。

+Our current product architecture is broadly similar to the usual way of running Apache APISIX in K8s: the Apache APISIX Service is exposed externally as a LoadBalancer type. User requests reach Apache APISIX, which then routes them to the relevant upstream services.

-

+

-额外要提的一点是,为什么我们把 etcd 放在了技术栈外。一是因为早些版本解析域名时会出现偏差,二是因为在内部我们进行维护和备份的过程比较繁琐,所以就把

etcd 单独拿了出来。

+One more point worth explaining is why we run etcd outside the stack. First, earlier versions had problems resolving domain names. Second, our internal maintenance and backup process was cumbersome. For these reasons we deployed etcd separately.

-### 业务模型

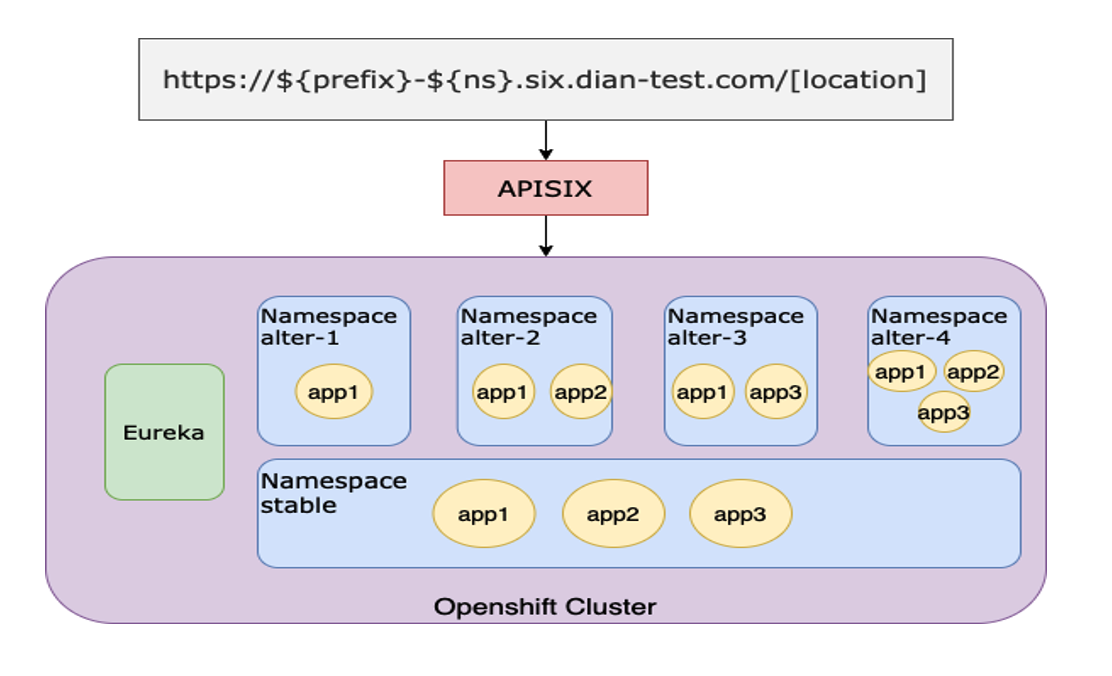

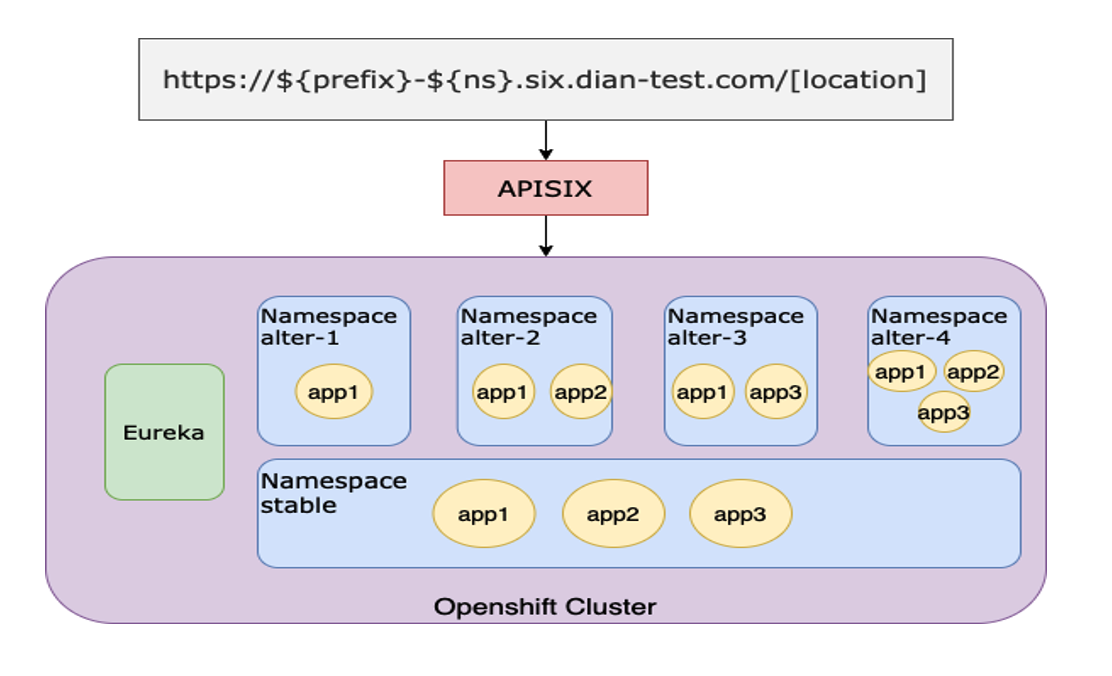

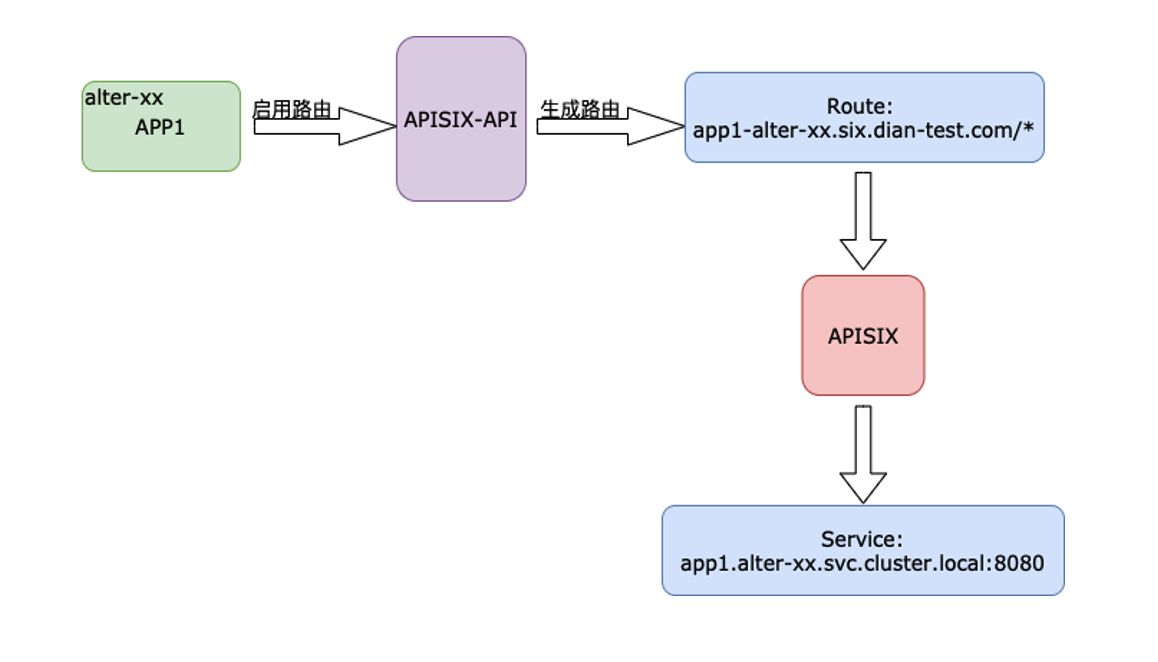

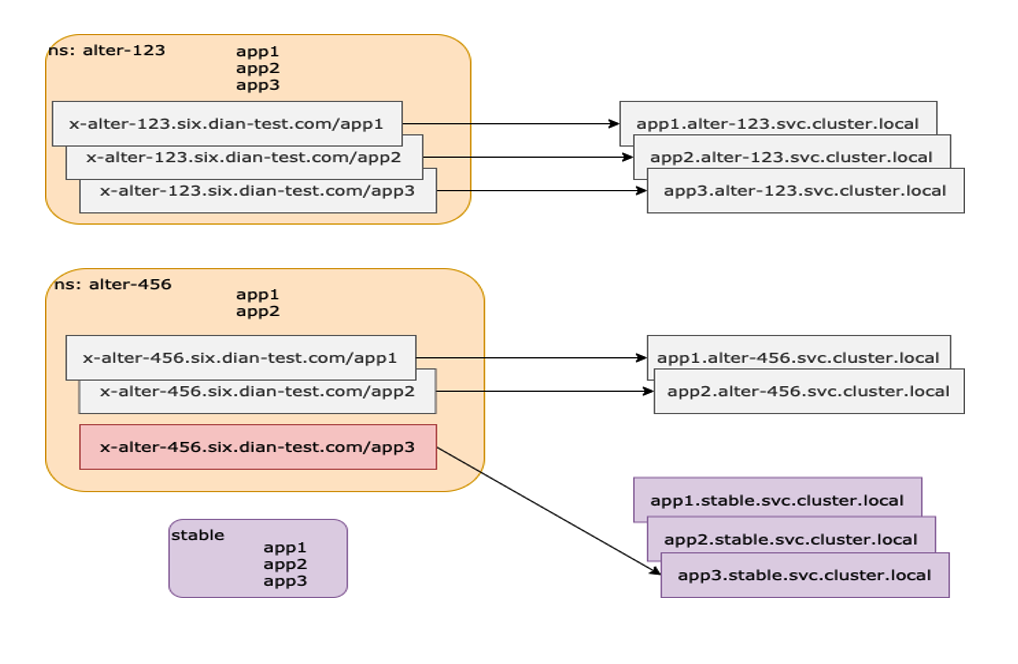

+### Business Model

-

+

-上图是接入 Apache APISIX 后的业务环境改造模型。每个开发或项目进行变更时,DNA 都会创建一个变更,同时转化为 k8s Namespace

资源。

+The figure above shows our business environment model after adopting Apache APISIX. Whenever a developer or project makes a change, DNA creates a change and converts it into a K8s Namespace resource.

-因为 k8s Namespace 本身就具备资源隔离的功能,所以在部署时我们基于 Namespace

提供了多套项目变更环境,同时包含所有应用副本并注册到同一个 Eureka。我们改造了 Eureka 使得它可以支持不同 Namespace

的应用副本隔离,保证互相不被调用。

+Because a K8s Namespace already provides resource isolation, at deployment time we provide multiple project change environments based on Namespaces. Each contains replicas of all applications, and all of them register to the same Eureka. We modified Eureka so that it isolates application replicas in different Namespaces and they never call each other.
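The article doesn't show how DNA names the Namespace it creates for each change. The following is therefore only a minimal sketch under our own assumptions: the `change_namespace` helper and the `chg-` prefix are hypothetical. It illustrates one constraint any such scheme must respect. Kubernetes Namespace names must be RFC 1123 labels: lowercase letters, digits and `-`, at most 63 characters, starting and ending with an alphanumeric character.

```sh
# Hypothetical helper (not DNA's real code): turn a free-form change ID
# into a Namespace name that satisfies the RFC 1123 label rules.
change_namespace() {
  local name
  # Lowercase, replace disallowed characters with "-", collapse "-" runs.
  name=$(printf '%s' "chg-$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -e 's/[^a-z0-9-]/-/g' -e 's/--*/-/g')
  # Namespace names may be at most 63 characters long.
  name=${name:0:63}
  # A label must end with an alphanumeric character.
  name=${name%-}
  printf '%s\n' "$name"
}
```

You could then pass the result to `kubectl create namespace "$(change_namespace "$CHANGE_ID")"`, where `CHANGE_ID` is likewise illustrative.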

-### 功能加持

+### Feature Enhancements

-将上述架构和业务模型实践起来后,每个项目变更都会产生对应的 Namespace 资源,同时 DNA Operator 就会去创建对应的 APP

资源,最后去生成相应的 Apache APISIX 路由规则。

+With this architecture and business model in place, each project change generates a corresponding Namespace resource. The DNA Operator then creates the corresponding APP resource and finally generates the matching Apache APISIX routing rules.

-#### 功能一:项目变更多环境

+#### Feature 1: Multiple Environments for Project Changes

-在变更环境中我们有两种场景,一个是点对点模式,即一个域名对应一个应用。开发只需要启用域名,DNA 里就会利用 Apache APISIX

去生成对应路由,这种就是单一路径的路由规则。

+Change environments involve two scenarios. The first is point-to-point mode, where one domain name corresponds to one application. A developer only needs to enable the domain name, and DNA uses Apache APISIX to generate the corresponding route, which is a single-path routing rule.
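As a rough illustration of the point-to-point case, the hypothetical helper below prints an Admin API route body that sends every path on one domain to one application's Service in a change Namespace. The field names (`uri`, `host`, `upstream.type`, `upstream.nodes`) follow the APISIX route object. The in-cluster DNS name and port 80 are assumptions, and this is not DNA's actual implementation.

```sh
# Hypothetical helper: print an APISIX route body that sends every path
# on one domain to a single application Service in a given Namespace.
single_route_json() {
  local domain=$1 app=$2 namespace=$3
  printf '{"uri":"/*","host":"%s","upstream":{"type":"roundrobin","nodes":{"%s.%s.svc.cluster.local:80":1}}}\n' \
    "$domain" "$app" "$namespace"
}
```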

-

+

-另一种场景就是多级路径路由。在这种场景下我们基于 Apache APISIX 将项目变更中需要的多个 APP 路由指向到当前 Namespace

环境,其关联 APP 路由则指向到一套稳定的 Namespace 环境中(通常为 Stable 环境)。

+The other scenario is multi-level path routing. Here we use Apache APISIX to point the routes of the APPs involved in a project change to the current Namespace environment. The routes of their associated APPs point to a stable Namespace environment (usually the Stable environment).
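Here is a minimal sketch of the multi-level routing decision. It assumes Services are addressed as `<app>.<namespace>.svc.cluster.local:80` and that the shared environment lives in a Namespace literally named `stable`; both are assumptions. Applications touched by the change resolve to the change Namespace, and everything else falls back to the stable one. The helper name is hypothetical.

```sh
# Hypothetical helper: apps touched by the change resolve to the change
# Namespace; every other app falls back to the shared "stable" Namespace.
# Usage: upstream_node_for <app> <change-namespace> <changed-app>...
upstream_node_for() {
  local app=$1 change_ns=$2
  shift 2
  local changed
  for changed in "$@"; do
    if [ "$changed" = "$app" ]; then
      printf '%s.%s.svc.cluster.local:80\n' "$app" "$change_ns"
      return 0
    fi
  done
  printf '%s.stable.svc.cluster.local:80\n' "$app"
}
```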

-

+

-#### 功能二:自动化流程

+#### Feature 2: Automated Workflow

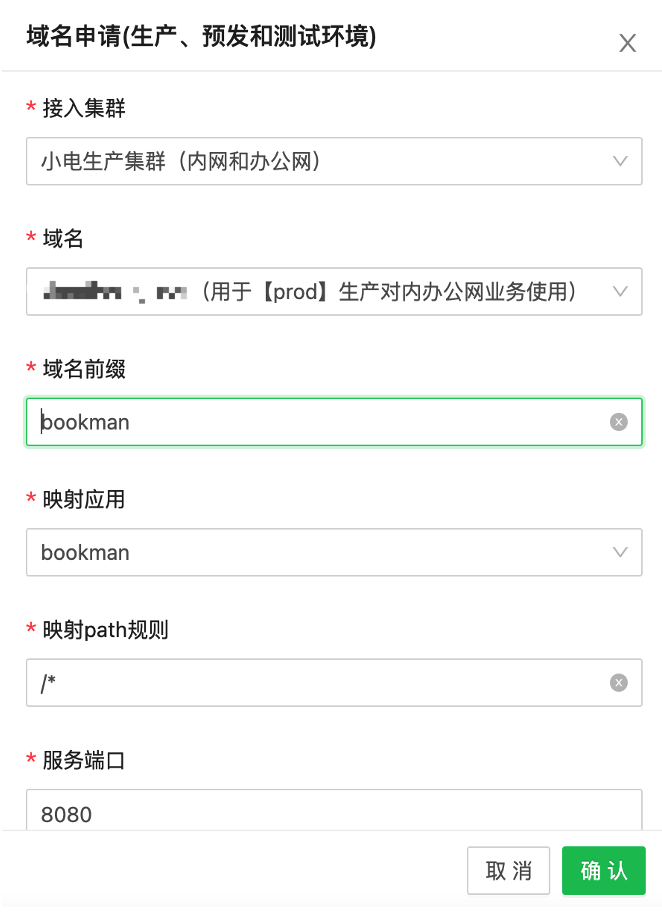

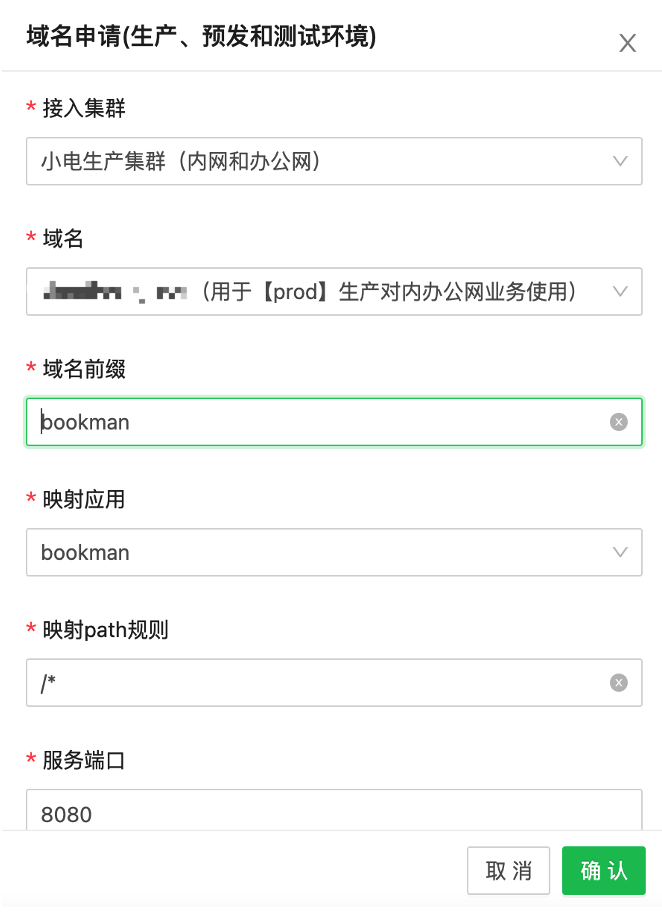

-基于上述项目环境的一些路由规则,搭配 Apache APISIX 的 API 调用功能做了一个控制中心,集合了一些包括域名前缀和对应的应用实例等功能。

+Building on the routing rules of these project environments, we used Apache APISIX's API call capability to build a control center. It brings together functions such as domain name prefixes and their corresponding application instances.

-比如某个新应用上线时,可以申请一条相应的路由规则,然后把规则加到控制中心中。需要请求路由时,就可以一键启用这条路由规则并自动同步到 Apache

APISIX。

+For example, when a new application goes live, you can apply for a corresponding routing rule and add it to the control center. When the route is needed, you can enable it with one click, and it is automatically synchronized to Apache APISIX.

-

+

-另外我们也提供了单一普通路由申请,包括线上环境和测试环境,或者一些对外公网的暴露与测试需求等,也可以调用 Apache APISIX 接口。

+We also support requests for individual ordinary routes, covering online and test environments as well as public network exposure and testing needs. These requests also call the Apache APISIX API.
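The control center's one-click enable ultimately comes down to an Admin API call. Here is a sketch under several assumptions. The Admin API address is the APISIX 2.x default, `http://127.0.0.1:9080/apisix/admin` (APISIX 3.x moved it to port 9180). The admin key is supplied in `APISIX_KEY`. The `enable_route` name and the overridable `CURL`/`APISIX_ADMIN` variables are our own illustration, not the control center's code.

```sh
# Hypothetical control-center step: create or overwrite route <id>
# through the APISIX Admin API. CURL can be overridden (e.g. for dry runs).
enable_route() {
  local id=$1 body=$2
  "${CURL:-curl}" -sS -X PUT \
    "${APISIX_ADMIN:-http://127.0.0.1:9080/apisix/admin}/routes/$id" \
    -H "X-API-KEY: ${APISIX_KEY:?set APISIX_KEY to your admin key}" \
    -H 'Content-Type: application/json' \
    -d "$body"
}
```

`PUT /routes/<id>` creates the route, or replaces an existing route with the same ID. Enabling the same rule twice therefore does no harm.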

-

+

-## 基于 Apache APISIX 的具体实践

+## Practice Based on Apache APISIX

-### 基于 OpenShift 部署

+### OpenShift Based Deployment

-OpenShift 具有非常严格的 SCC 机制,在利用 OpenShift 去部署 Apache APISIX

时遇到了很多问题,所以每次发版都要重新去做编译。

+OpenShift has a very strict SCC (Security Context Constraints) mechanism, and we ran into many problems deploying Apache APISIX on OpenShift. As a result, we have to recompile for every release.

-另外基于 Apache APISIX 提供的 Docker 镜像功能,我们将日常的一些基础软件进行了更新,比如调优和问题查看,通过 Image

Rebuild 功能上传到内部镜像仓库。

+In addition, based on the Docker image provided by Apache APISIX, we updated some of the basic software we use day to day, for tuning and troubleshooting for example. We then uploaded the result to our internal image registry via Image Rebuild.

-### 跨版本平滑处理

+### Smooth Cross-version Upgrades

-我们一开始使用的 Apache APISIX 为 1.5 版本,在更新到最新版本的过程中,我们经历了类似 etcd v2 版本性能下降、增加了 CORS

插件强校验等情况。

+We started with Apache APISIX 1.5. While upgrading to the latest version, we ran into issues such as degraded performance with etcd v2 and stricter validation added to the CORS plugin.

-

+

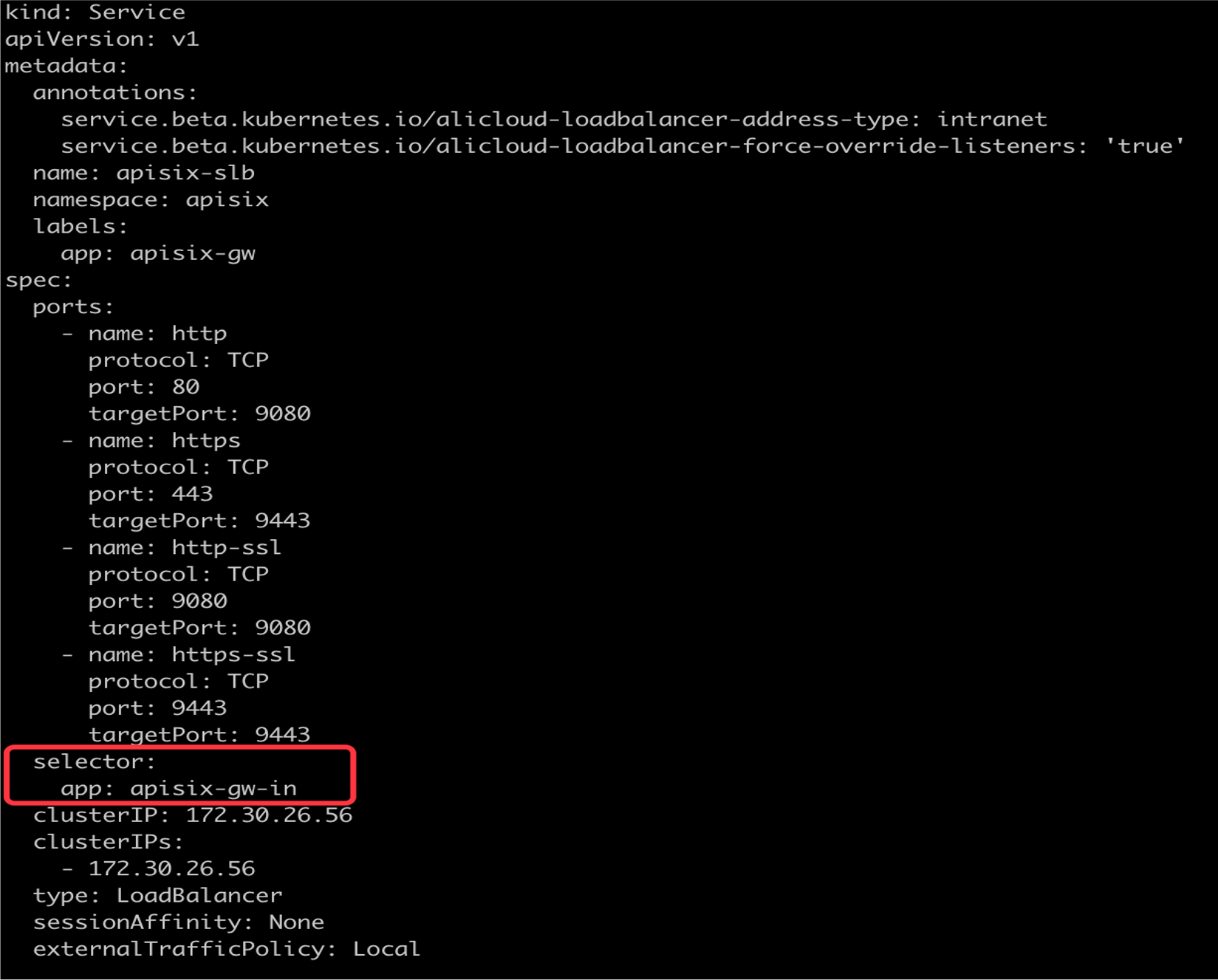

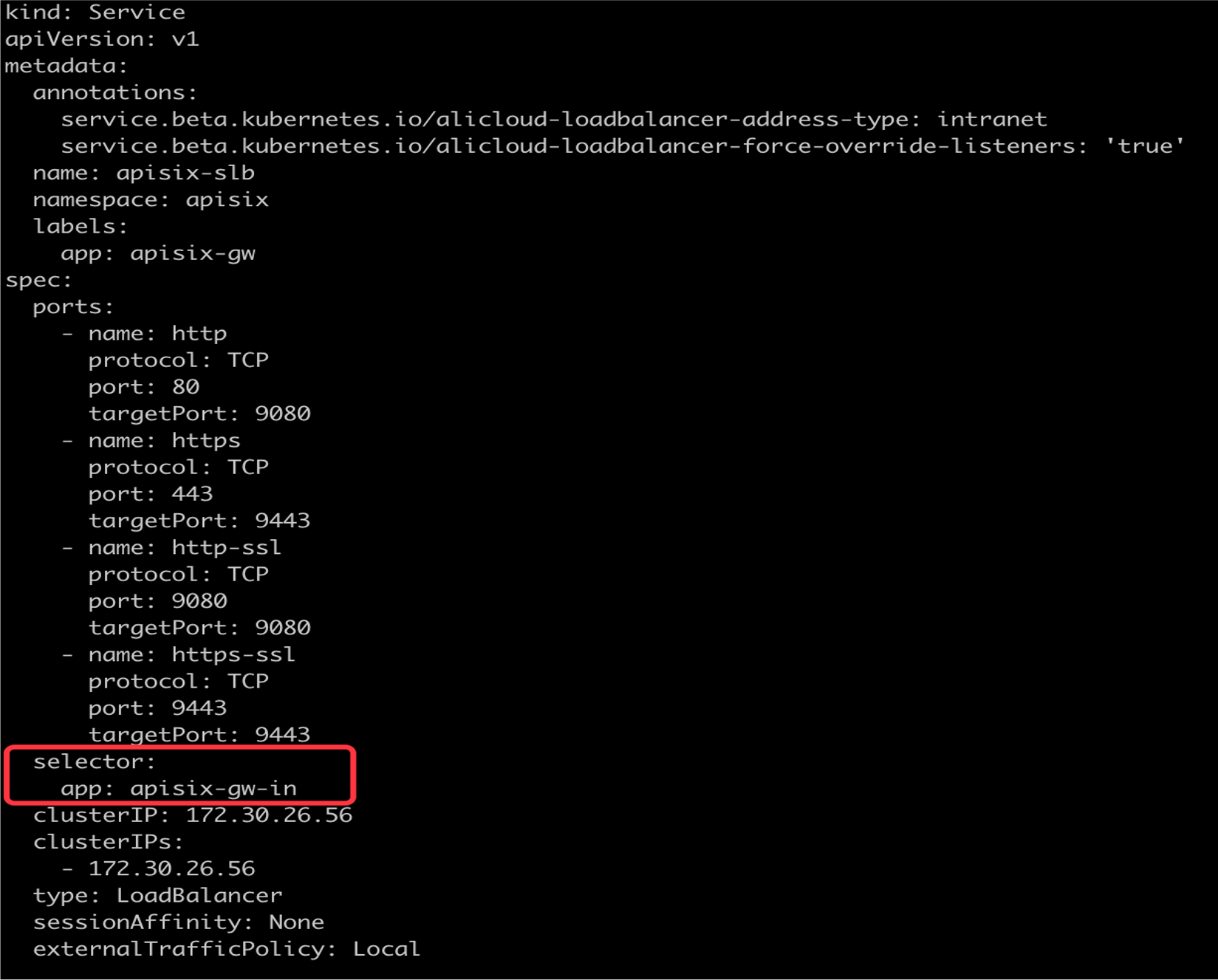

-基于此,我们采取了版本切流的方案,新版本启用并创建新的 Service 以及暴露相关 SLB 信息。通过 Service 的 Selector 属性,将

Gateway 切换到新版本的 Apache APISIX 中。另一方面我们也会将流量进行切分处理,将部分流量通过 DNS 解析到新版本 Apache

APISIX SLB 地址,来实现版本更迭的平滑处理。

+To handle this, we switch traffic between versions. The new version is deployed with a new Service that exposes the relevant SLB information, and we use the Service's Selector property to switch the Gateway to the new version of Apache APISIX. We also split traffic by resolving part of it via DNS to the SLB address of the new Apache APISIX version, which makes the version transition smooth.
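The Selector-based switch could look like the sketch below. The Service name, namespace and the `app`/`release` labels are assumptions about how the two APISIX releases are labeled. The DNS-weighted split is configured outside Kubernetes and is not shown.

```sh
# Hypothetical cut-over: point the gateway Service at the Pods of the new
# APISIX release by replacing its selector. Names/labels are assumptions.
switch_gateway() {
  local namespace=$1 service=$2 release=$3
  # A JSON patch "replace" swaps the whole selector, so no key from the
  # old selector lingers and keeps matching the previous release.
  "${KUBECTL:-kubectl}" patch service "$service" --namespace "$namespace" \
    --type json \
    --patch "[{\"op\":\"replace\",\"path\":\"/spec/selector\",\"value\":{\"app\":\"apisix\",\"release\":\"$release\"}}]"
}
```

We use a JSON patch `replace` rather than the default strategic merge because merging keeps any old selector keys. The Service could then end up matching the previous release, or no Pods at all.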

-### 解决 etcd 压缩问题

+### Solving the etcd Compaction Problem

-在使用期间我们也观察到 Load 一直突高不下的情况,经检查发现 etcd 内的数据量已达到 600 多兆,所以我们采取了定期压缩 etcd

的措施,将历史事物数据全部清除。具体代码可参考:

+During operation we also observed that the load stayed persistently high. On inspection, we found that the data in etcd had grown to more than 600 MB. We therefore began compacting etcd regularly to clear out all historical transaction data. The commands are as follows:

```sh

$ ETCDCTL_API=3 etcdctl --endpoints=http://etcd-1:2379 compact 1693729

$ ETCDCTL_API=3 etcdctl --endpoints=http://etcd-1:2379 defrag

```
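The commands above hard-code the revision `1693729`. Below is a minimal sketch that reads the current revision first. It assumes `etcdctl` v3, whose JSON `endpoint status` output carries a `revision` field in its header. The helper names are hypothetical.

```sh
# Read the current revision from `etcdctl endpoint status -w json` output
# on stdin (the revision sits in Status.header.revision).
extract_revision() {
  grep -o '"revision":[0-9]*' | head -n 1 | cut -d: -f2
}

# Compact away history older than the current revision, then defragment
# so the freed space is actually returned to the filesystem.
compact_etcd() {
  local endpoint=$1 rev
  rev=$(ETCDCTL_API=3 etcdctl --endpoints="$endpoint" endpoint status --write-out=json \
    | extract_revision)
  [ -n "$rev" ] || { echo "could not read revision from $endpoint" >&2; return 1; }
  ETCDCTL_API=3 etcdctl --endpoints="$endpoint" compact "$rev"
  ETCDCTL_API=3 etcdctl --endpoints="$endpoint" defrag
}
```

For example, run `compact_etcd http://etcd-1:2379`, then repeat `defrag` against every member. `defrag` blocks the member it runs against while it works, so it is best run off-peak. etcd can also compact on its own via the server flags `--auto-compaction-mode` and `--auto-compaction-retention`.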

-### 获取 Client-IP

+### Get Client-IP

-在线上业务场景中,我们需要获取到源 IP 去做相关的业务处理。刚好 Apache APISIX 提供了「X-Real-IP」的功能,通过配置

real_ip_header 和开启 externalTrafficPolicy 的 Local 模式进行相关获取操作。

+In our online business scenarios, we need the source IP for business processing. Apache APISIX provides "X-Real-IP" support, so we obtain the source IP by configuring `real_ip_header` and setting `externalTrafficPolicy` to `Local` mode.
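On the Kubernetes side, you can set `Local` mode with a patch like the one below; the Service and namespace names are placeholders. With `Local`, the load balancer only sends traffic to nodes running an APISIX Pod, and the client source IP is preserved instead of being SNATed. On the APISIX side, we believe the relevant keys are `nginx_config.http.real_ip_header` and `nginx_config.http.real_ip_from` in `conf/config.yaml`. Verify them against your version's `config-default.yaml`.

```sh
# Hypothetical names: keep the original client source IP on traffic that
# reaches the APISIX LoadBalancer Service.
preserve_client_ip() {
  local namespace=$1 service=$2
  "${KUBECTL:-kubectl}" patch service "$service" --namespace "$namespace" \
    --patch '{"spec":{"externalTrafficPolicy":"Local"}}'
}
```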

-## 未来期望

+## Future Expectations

-众所周知,小电现在是主做共享充电宝业务场景,所以在属性上也是偏物联网方向。

+DIAN's main business is power bank sharing, so by nature it leans toward the Internet of Things.

-从业务层面出发,我们也还有一些重要业务比如 MQTT 类型的应用。目前它们在容器内还是以 SLB 模式去暴露的,希望后续也可以接入到整个 Apache

APISIX 集群里。

+At the business level, we also run some important services, such as MQTT applications. They are currently exposed from containers in SLB mode, and we hope to bring them into the Apache APISIX cluster in the future.

-从前端层面来讲,目前的前端应用还是部署在容器里,后续我们也打算将前端应用直接接入 Apache APISIX,通过 Proxy Rewrite

插件功能直接指向我们的阿里云 OSS 域名。这样可以节省容器部署的资源,进行更方便地管理。

+At the front-end level, our front-end applications are still deployed in containers. We plan to connect them directly to Apache APISIX and use the Proxy Rewrite plugin to point them at our Alibaba Cloud OSS domain. This saves container deployment resources and makes management easier.
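Here is a hedged sketch of the planned OSS setup. It defines a route whose `proxy-rewrite` plugin sets the `Host` header to the bucket domain, with an HTTPS upstream pointing at that domain. The site host and bucket name are placeholders. The upstream `scheme` field assumes an APISIX version that supports it.

```sh
# Hypothetical: serve a front-end site straight from an OSS bucket by
# rewriting Host to the bucket domain and proxying over HTTPS.
oss_route_json() {
  local site=$1 bucket_host=$2
  printf '{"uri":"/*","host":"%s","plugins":{"proxy-rewrite":{"host":"%s"}},"upstream":{"type":"roundrobin","scheme":"https","nodes":{"%s:443":1}}}\n' \
    "$site" "$bucket_host" "$bucket_host"
}
```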

-在对 Apache APISIX 项目上,我们经过实践部署也产生了一些改进需求,希望后续 Apache APISIX

的版本更迭中可以进行相关功能的支持或完善。比如:

+Deploying Apache APISIX in practice has also shown us some improvements we would like to see, and we hope future Apache APISIX releases will support or refine them. For example:

-1. 技术管理层面进行多集群功能的添加

-2. 开发层面进行更细粒度的用户权限管理

-3. 功能层面支持 SSL 证书滚动更新

-4. Apache APISIX-Ingress-Controller 相关业务接入

+1. Multi-cluster support at the technical management level
+2. Finer-grained user permission management at the development level
+3. Rolling updates of SSL certificates at the feature level
+4. Business onboarding through Apache APISIX Ingress Controller

diff --git a/website/blog/2021/09/24/youpaicloud-usercase.md

b/website/blog/2021/09/24/youpaicloud-usercase.md

index 78d746a..1ee4c6c 100644

--- a/website/blog/2021/09/24/youpaicloud-usercase.md

+++ b/website/blog/2021/09/24/youpaicloud-usercase.md

@@ -1,160 +1,159 @@

---

-title: "Apache APISIX Ingress 为何成为又拍云打造容器网关的新选择?"

-author: "陈卓"

+title: "Why Is Apache APISIX Ingress UPYUN's New Choice for Building Its Container Gateway?"

+author: "Zhuo Chen"

keywords:

- Apache APISIX

- Apache APISIX Ingress

-- 又拍云

-- 容器网关

-description: 本文介绍了又拍云选择 Apache APISIX Ingress

后所带来公司内部网关架构的更新与调整,同时分享了在使用过程中的一些实践场景介绍。

+- UPYUN

+- Container gateway

+description: This article describes how UPYUN updated and adjusted its internal gateway architecture after choosing Apache APISIX Ingress, and shares some practical scenarios from its use.

tags: [User Case]

---

-> 本文介绍了又拍云选择 Apache APISIX Ingress 后所带来公司内部网关架构的更新与调整,同时分享了在使用过程中的一些实践场景介绍。

-> 作者陈卓,又拍云开发工程师,负责云存储、云处理和网关应用开发。

+> This article describes how UPYUN updated and adjusted its internal gateway architecture after choosing Apache APISIX Ingress, and shares some practical scenarios from its use. The author, Zhuo Chen, is a development engineer at UPYUN responsible for cloud storage, cloud processing, and gateway application development.

<!--truncate-->

-## 项目背景介绍

+## Background

-目前市面上可执行 Ingress 的产品项目逐渐丰富了起来,可选择的范围也扩大了很多。这些产品按照架构大概可分为两类,一类像 k8s

Ingress、Apache APISIX Ingress,他们是基于 Nginx、OpenResty 等传统代理器,使用 k8s-Client 和

Golang 去做 Controller。还有一类新兴的用 Golang 语言去实现代理和控制器功能,比如 Traefik。

+The range of products that can serve as an Ingress has grown steadily, and the options have expanded considerably. By architecture, these products fall roughly into two categories. The first, such as K8s Ingress and Apache APISIX Ingress, builds on traditional proxies such as Nginx and OpenResty and implements the Controller in Golang with k8s-Client. The second is an emerging class that implements both the proxy and the controller in Golang, such as Traefik.

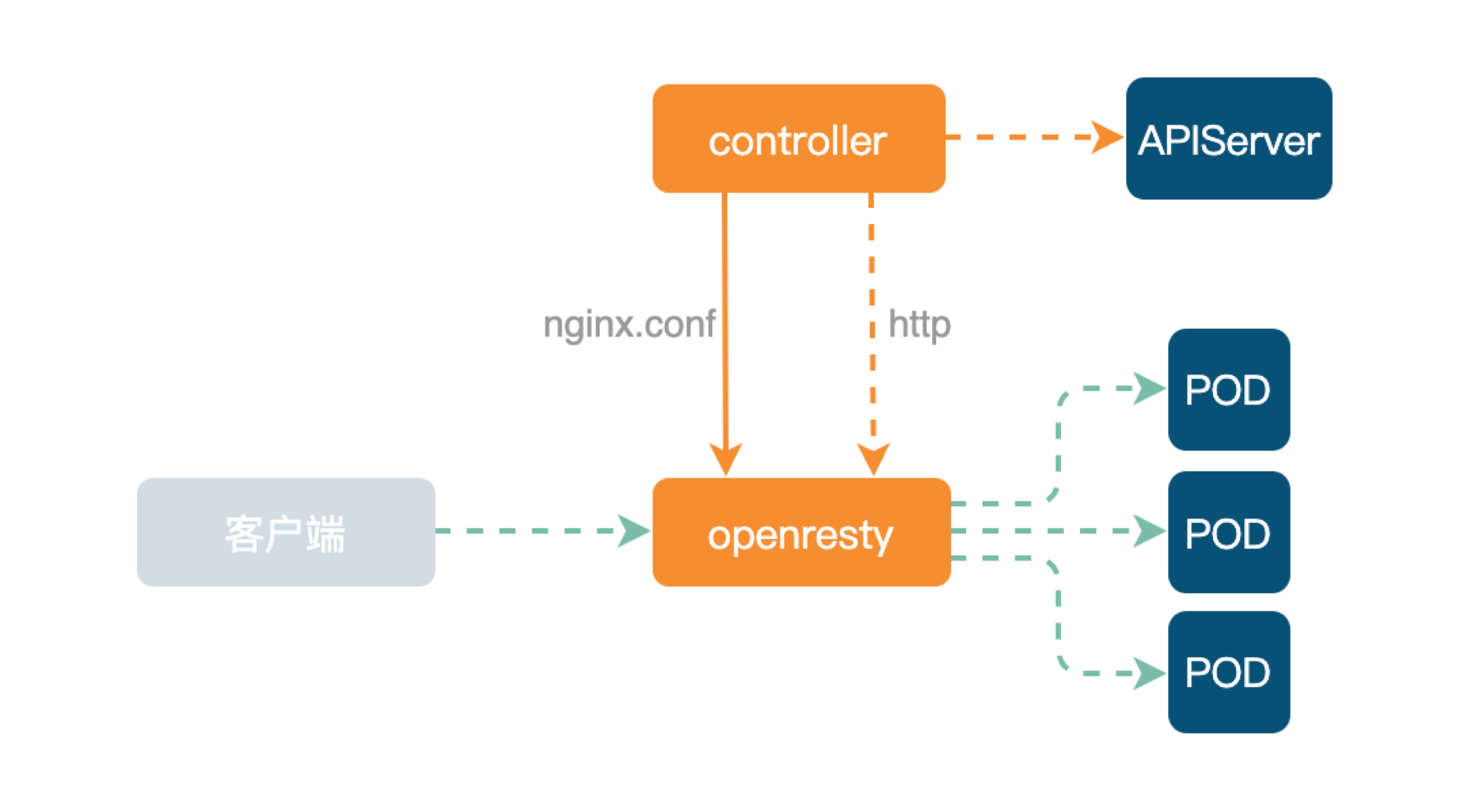

-又拍云最开始包括现在的大部分业务仍在使用 Ingress-Nginx,整体架构如下。

+From the start, most of UPYUN's business has used Ingress-Nginx, and most of it still does. The overall architecture is as follows.

-

+

-下层为数据面 OpenResty。上层 Controller 主要是监听 APIServer 的资源变化,并生成 nginx.conf 配置文件,然后

Reload OpenResty。如果 POD IP 发生变化,可直接通过 HTTP 接口传输给 OpenResty Lua 代码去实现上游 Upstream

node 替换。

+The lower layer is the OpenResty data plane. The upper-layer Controller mainly watches APIServer for resource changes, generates the `nginx.conf` configuration file, and then reloads OpenResty. If a POD IP changes, the change can be sent directly to OpenResty's Lua code through an HTTP interface, which replaces the upstream nodes.

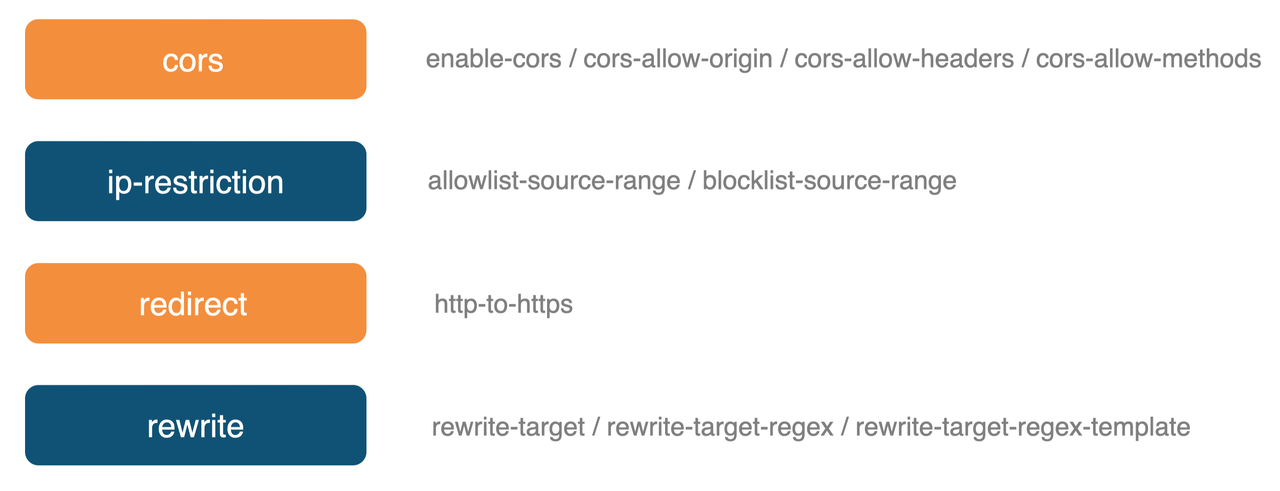

-Ingress-Nginx 的扩展能力主要通过 Annotations

去实现,配置文件本身的语法和路由规则都比较简单。可以按照需求进行相关指令配置,同时也支持可拓展 Lua 插件,提高了定制化功能的可操作性。

+Ingress-Nginx is extended mainly through Annotations, and both the configuration syntax and the routing rules are fairly simple. Directives can be configured as needed, and extensible Lua plugins are supported, which makes customization easier.

-

+

-但 Ingress-Nginx 它也有一些缺点,比如:

+But Ingress-Nginx has some drawbacks, such as:

-- 开发插件时组件依赖复杂,移植性差

-- 语义能力弱

-- 任何一条 Ingress 的修改都需要 Reload,对长链接不友好

+- Plugin development depends on complex components and has poor portability
+- Weak semantic expressiveness
+- Any change to an Ingress requires a Reload, which is unfriendly to long-lived connections

-在维护现有逻辑时,上述问题造成了一定程度的困扰,所以我们开始寻找相关容器网关替代品。

+These issues made maintaining the existing logic troublesome, so we started looking for an alternative container gateway.

-## 调研阶段

+## Research Phase

-在替代 Ingress-Nginx 的选择中,我们主要考量了 Traefik 和 Apache APISIX Ingress。

+In choosing an alternative to Ingress-Nginx, we focused on Traefik and Apache

APISIX Ingress.

-Traefik 是云原生模式,配置文件极其简单,采用分布式配置,同时支持多种自动配置发现。不仅支持 k8s、etcd,Golang

的生态语言支持比较好,且开发成本较低,迭代和测试能力较好。但是在其他层面略显不足,比如:

+Traefik is cloud native, with extremely simple configuration files, distributed configuration, and support for a variety of automatic configuration discovery mechanisms. Beyond supporting K8s and etcd, it benefits from good Golang ecosystem support, low development costs, and strong iteration and testing capabilities. But it falls short in other respects, such as:

-- 扩展能力弱

-- 不支持 Reload

-- 性能和功能上不如 Nginx(虽然消耗也较低)

+- Weak extensibility
+- No Reload support
+- Inferior to Nginx in performance and functionality (although it also consumes fewer resources)

-它不像 Nginx 有丰富的插件和指令,通过增加一条指令即可解决一个问题,在线上部署时,可能还需在 Traefik 前搭配一个 Nginx。

+Unlike Nginx, Traefik lacks a rich set of plugins and directives where adding a single directive can solve a problem. In production you may even need to put an Nginx in front of Traefik.

-综上所述,虽然在操作性上 Traefik 优势尽显,但在扩展能力和运维效率上有所顾虑,最终没有选择 Traefik。

+In summary, although Traefik has clear advantages in operability, we had concerns about its extensibility and operational efficiency, so we did not choose it.

-## 为什么选择 Apache APISIX Ingress

+## Why Apache APISIX Ingress

-### 内部原因

+### Internal Reasons

-在公司内部的多个数据中心上目前都存有 Apache APISIX 的网关集群,这些是之前从 Kong 上替换过来的。后来基于 Apache APISIX

的插件系统我们开发了一些内部插件,比如内部权限系统校验、精准速率限制等。

+We already run Apache APISIX gateway clusters in multiple data centers within the company; they previously replaced Kong. Later, we developed some internal plugins on top of the Apache APISIX plugin system, such as internal permission-system checks and precise rate limiting.

-### 效率维护层面

+### Efficiency of Maintenance

-同时我们也有一些 k8s 集群,这些容器内的集群网关使用的是 Ingress Nginx。在之前不支持插件系统时,我们就在 Ingress Nginx

上定制了一些插件。所以在内部数据中心和容器的网关上,Apache APISIX 和 Nginx 的功能其实有一大部分都是重合的。

+We also have some K8s clusters whose in-container cluster gateways use Ingress Nginx. Before Ingress Nginx supported a plugin system, we had already customized some plugins on it. As a result, much of the functionality of our Apache APISIX data center gateways and our Nginx container gateways overlaps.

-为了避免后续的重复开发和运维,我们想把之前使用的 Ingress Nginx 容器内网关全部替换为 Apache APISIX

Ingress,实现网关层面的组件统一。

+To avoid duplicated development and maintenance in the future, we want to replace all of our in-container Ingress Nginx gateways with Apache APISIX Ingress and unify components at the gateway level.

-## 基于 Apache APISIX Ingress 的调整更新

+## Updates and Adjustments Based on Apache APISIX Ingress

-目前 Apache APISIX Ingress 在又拍云是处于初期(线上测试)阶段。因为 Apache APISIX Ingress

的快速迭代,我们目前还没有将其运用到一些重要业务上,只是在新集群中尝试使用。

+Apache APISIX Ingress is currently at an early (online testing) stage at UPYUN. Because Apache APISIX Ingress is iterating rapidly, we have not yet applied it to important business and are only trying it out in new clusters.

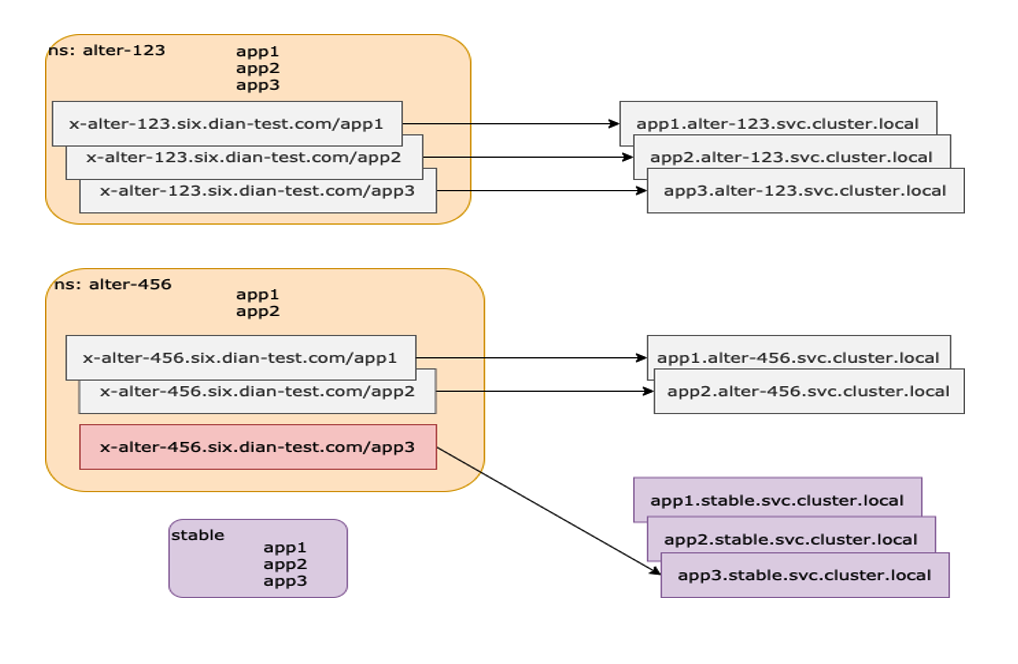

-### 架构调整

+### Restructuring

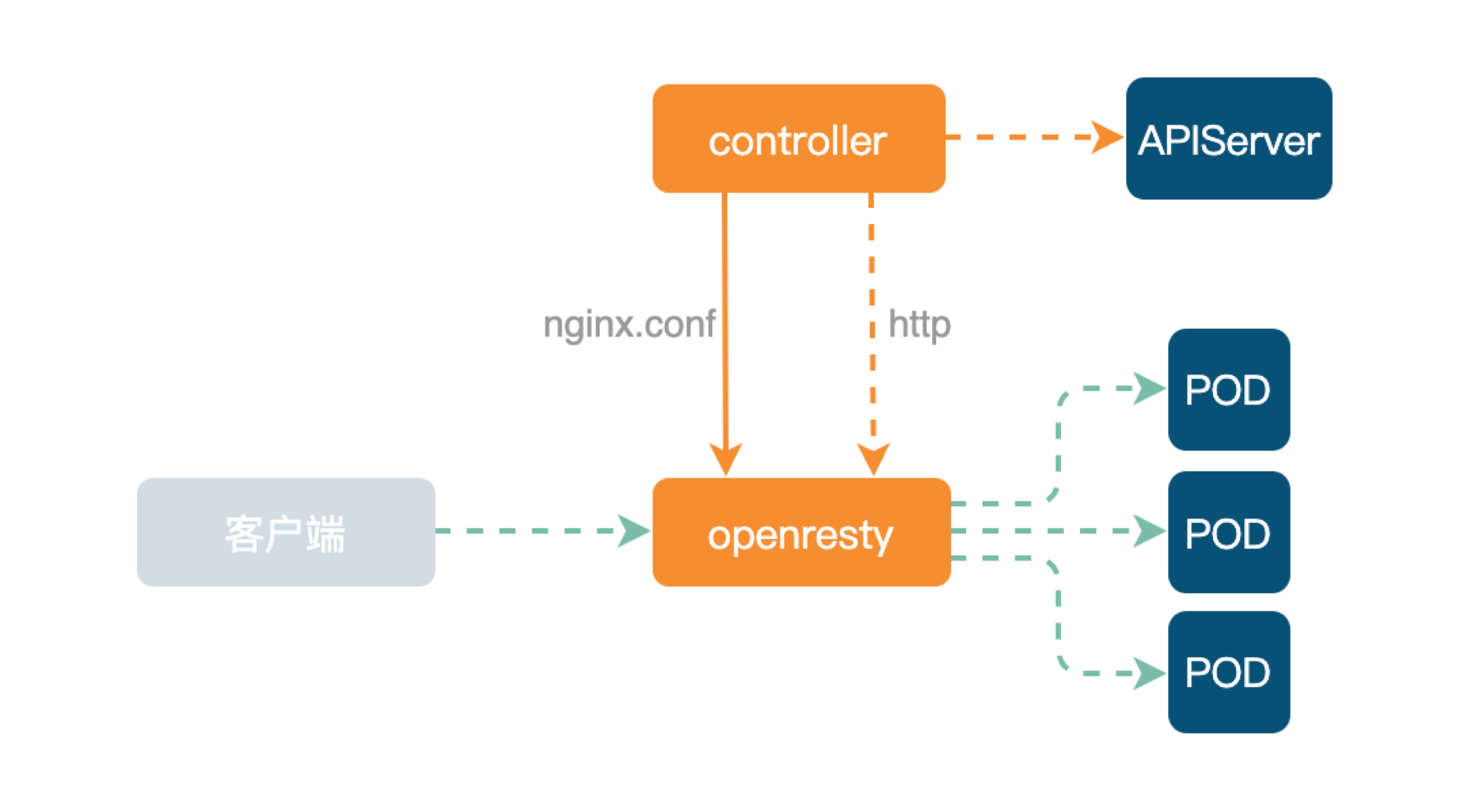

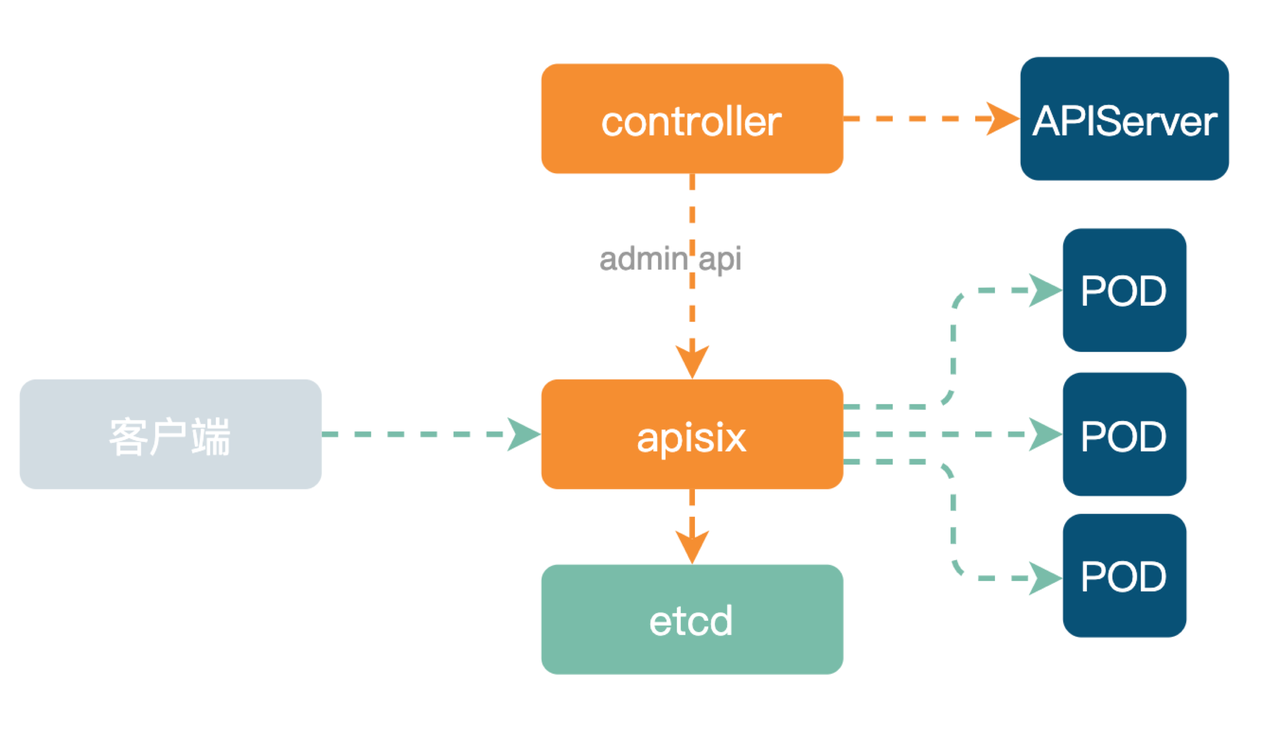

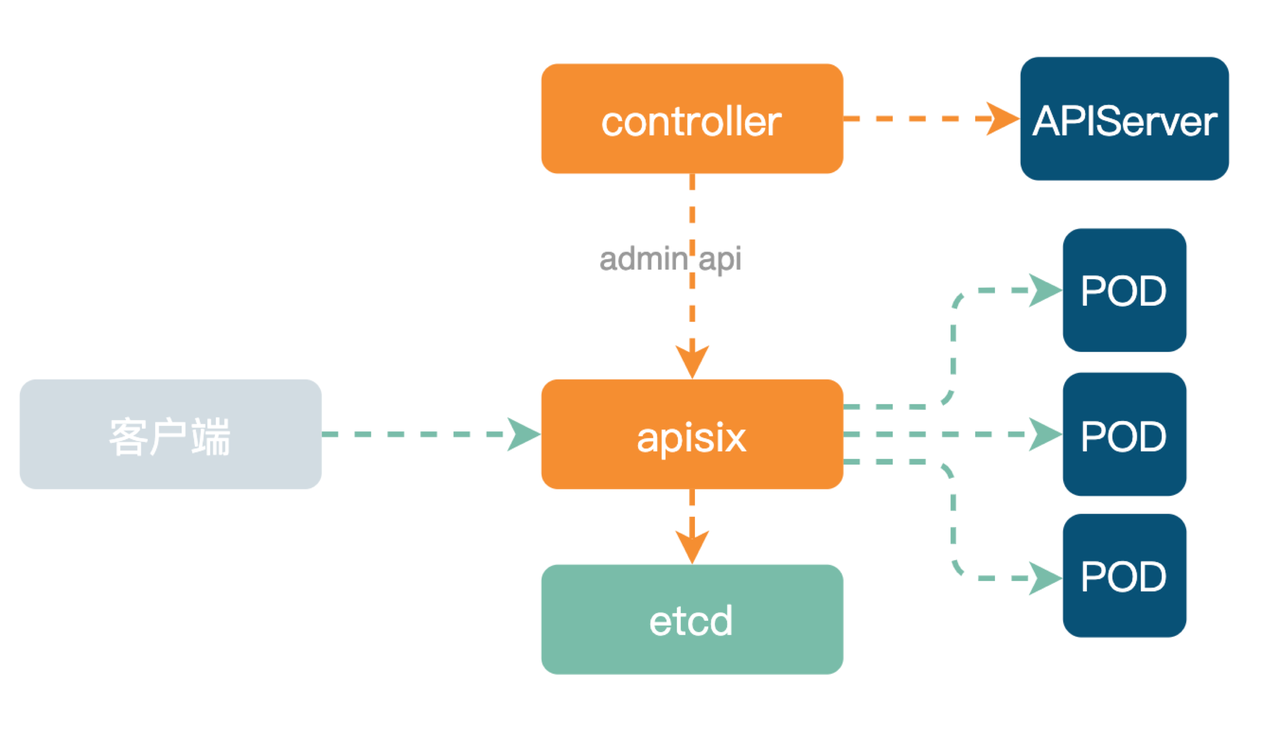

-使用 Apache APISIX Ingress 之后,内部架构如下图所示。

+With Apache APISIX Ingress, the internal architecture looks like this.

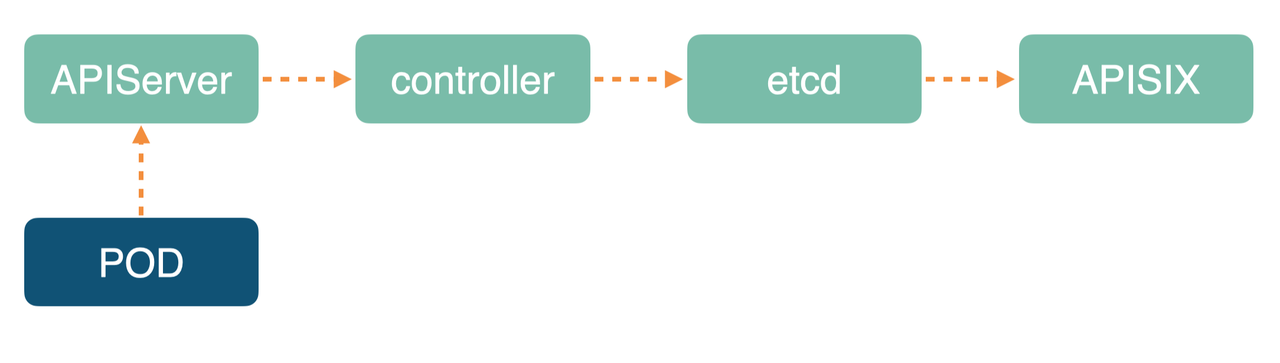

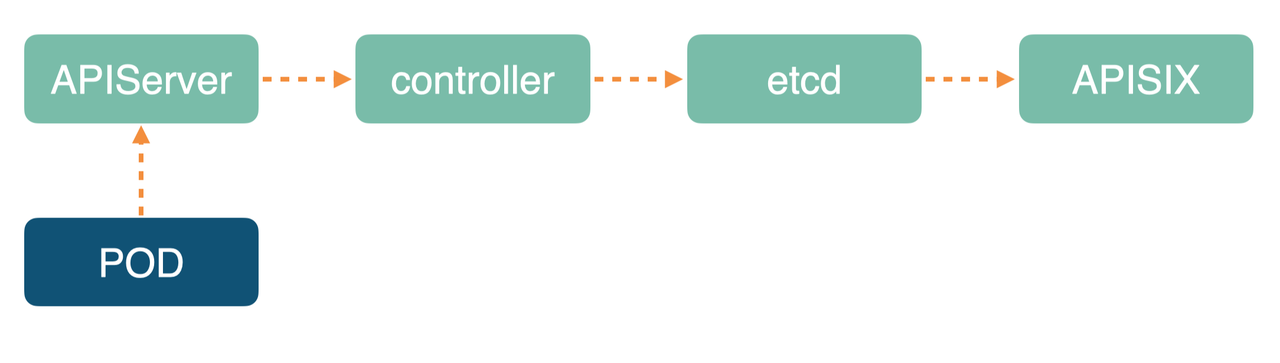

-

+

-跟前面提到的 Ingress-Nginx 架构不一样,底层数据面替换成了 Apache APISIX 集群。上层 Controller 监听

APIServer 变化,然后再通过 etcd 将配置资源分发到整个 Apache APISIX 集群的所有节点。

+Unlike the Ingress-Nginx architecture described earlier, this one replaces the underlying data plane with an Apache APISIX cluster. The upper-layer Controller watches APIServer for changes and then distributes configuration resources through etcd to every node in the Apache APISIX cluster.

-

+

-由于 Apache APISIX 是支持动态路由修改,与右边的 Ingress-Nginx 不同。在 Apache APISIX

中,当有业务流量进入时走的都是同一个 Location,然后在 Lua 代码中实现路由选择,代码部署简洁易操作。而右侧 Ingress-Nginx 相比,其

nginx.conf 配置文件复杂,每次 Ingress 变更都需要 Reload。

+Apache APISIX supports dynamic route changes, which sets it apart from Ingress-Nginx (right). In Apache APISIX, all incoming business traffic goes through the same Location, and route selection happens in Lua code, which keeps deployment simple. Ingress-Nginx, by contrast, has a complex `nginx.conf` and requires a Reload for every Ingress change.

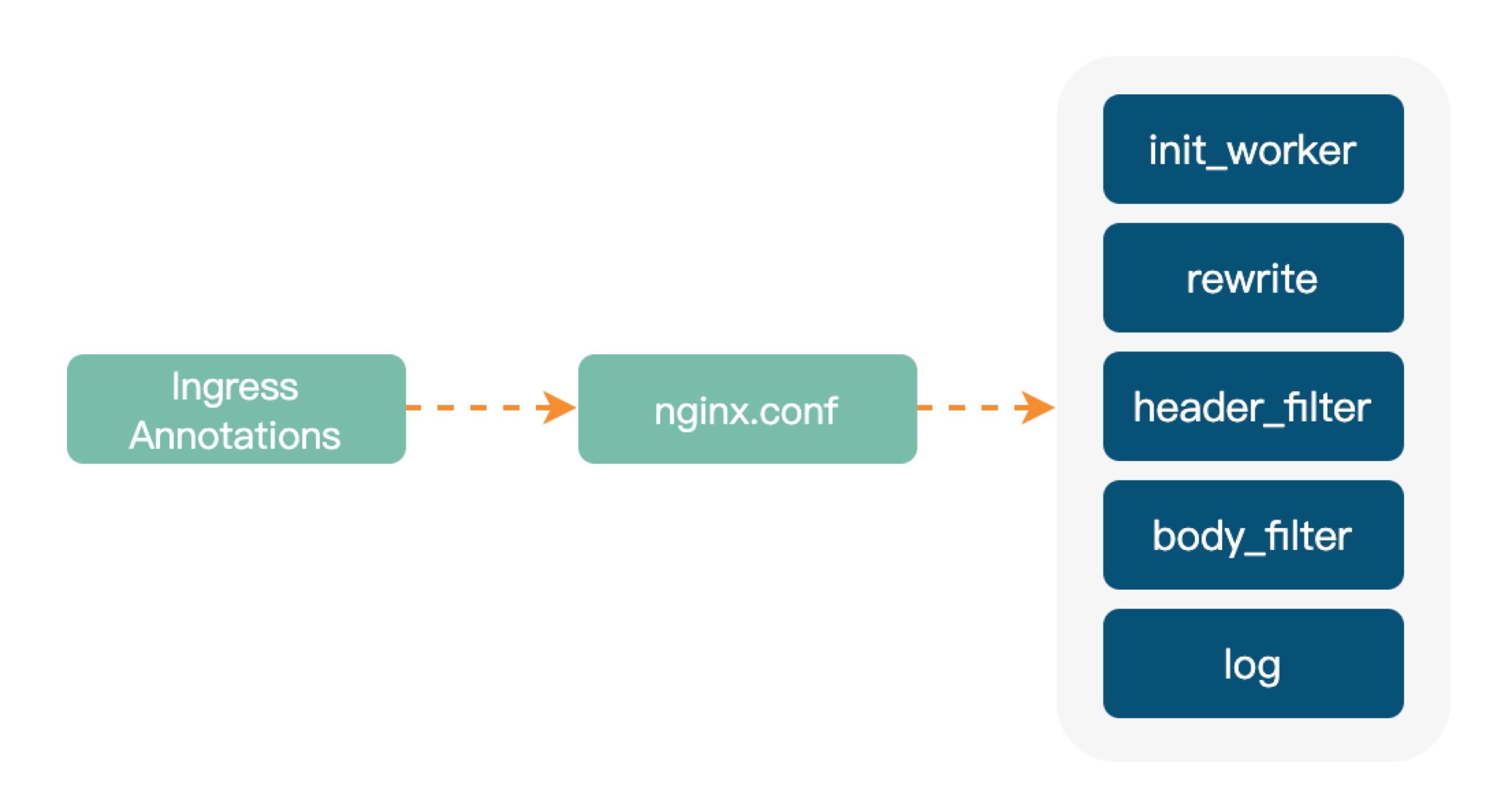

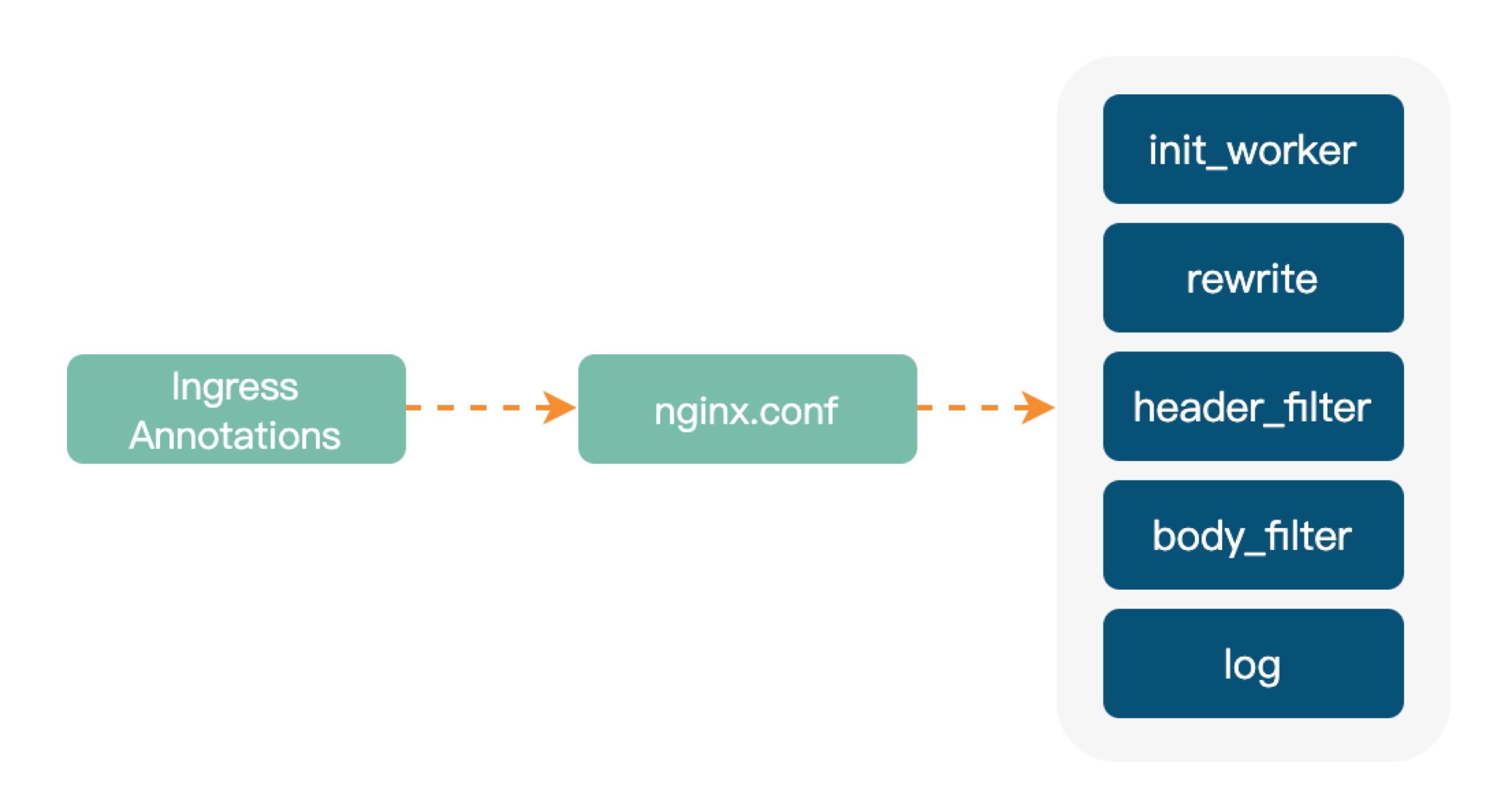

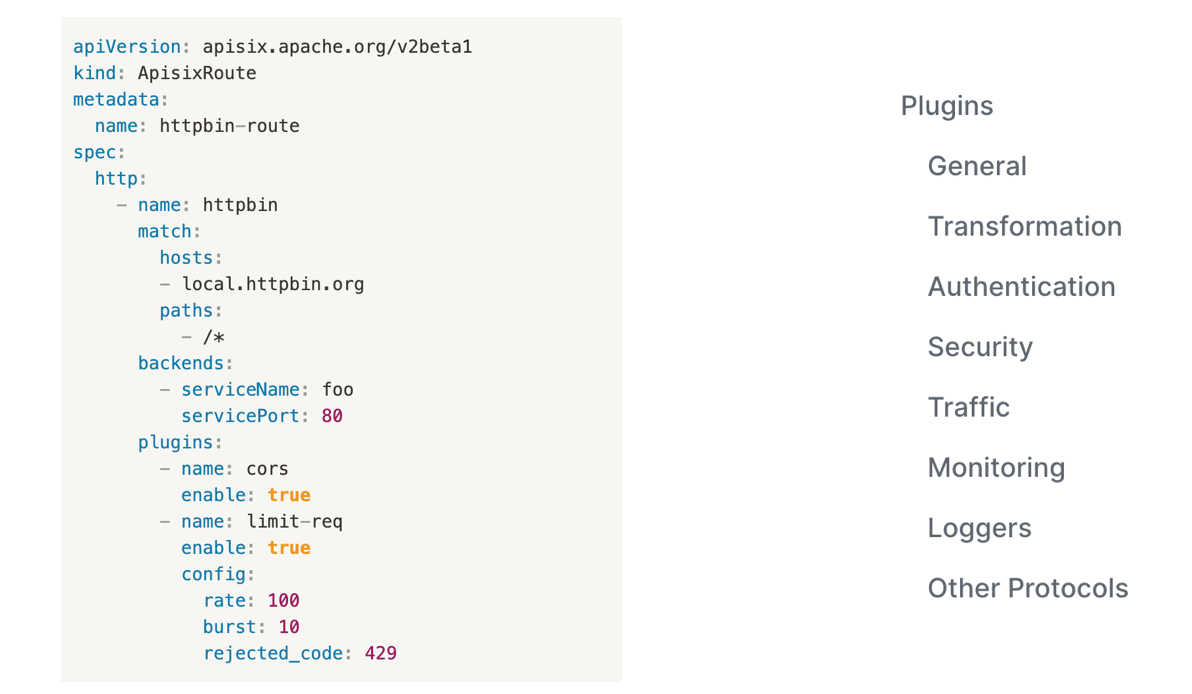

### Apache APISIX Ingress Controller

-Apache APISIX Ingress Controller 依赖于 Apache APISIX 的动态路由能力进行功能实现。它主要监控

APIServer 的资源变化,进行数据结构转换、数据校验和计算 DIFF,最后应用到 Apache APISIX Admin API 中。

+Apache APISIX Ingress Controller is built on Apache APISIX's dynamic routing capabilities. It watches APIServer for resource changes, converts data structures, validates data, computes the diff, and finally applies the result through the Apache APISIX Admin API.

-同时 Apache APISIX Ingress Controller 也有高可用方案,直接通过 k8s 的 leader-election

机制实现,不需要再引入外部其他组件。

+Apache APISIX Ingress Controller also has a high availability scheme, implemented directly through the K8s leader-election mechanism without introducing any additional external components.

-#### 声明式配置

+#### Declarative Configuration

-目前 Apache APISIX Ingress Controller 支持两种声明式配置,Ingress Resource 和 CRD

Resource。前者比较适合从 Ingress-Nginx

替换过来的网关控件,在转换成本上是最具性价比的。但是它的缺点也比较明显,比如语义化能力太弱、没有特别细致的规范等,同时能力拓展也只能通过 Annotation

去实现。

+Apache APISIX Ingress Controller currently supports two kinds of declarative configuration: Ingress resources and CRD resources. The former suits gateways migrating from Ingress-Nginx and is the most cost-effective in terms of conversion. Its drawbacks are obvious, though: weak semantic expressiveness, no detailed specification, and extensions possible only through Annotations.

-又拍云内部选择的是第二种声明配置——语义化更强的 CRD Resource。结构化数据通过这种方式配置的话,只要 Apache APISIX

支持的能力,都可以进行实现。

+UPYUN chose the second option, CRD resources, which are more semantically expressive. With structured data configured this way, anything Apache APISIX supports can be implemented.
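For reference, here is a minimal ApisixRoute of the kind a CRD-based setup relies on. The names, host and port are placeholders. `apisix.apache.org/v2beta3` is an assumption, because the served API version depends on your Ingress Controller release.

```sh
# Hypothetical route: print an ApisixRoute manifest that sends all paths
# on one host to a Kubernetes Service.
apisix_route_manifest() {
  local name=$1 host=$2 service=$3 port=$4
  cat <<EOF
apiVersion: apisix.apache.org/v2beta3
kind: ApisixRoute
metadata:
  name: $name
spec:
  http:
    - name: $name-rule
      match:
        hosts:
          - $host
        paths:
          - /*
      backends:
        - serviceName: $service
          servicePort: $port
EOF
}
```

Pipe the output into `kubectl apply -f -` to create the route.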

-如果你想了解更多关于 Apache APISIX Ingress Controller 的细节干货,可以参考 Apache APISIX PMC 张超在

Meetup

上的[分享视频](https://www.bilibili.com/video/BV1eB4y1u7i1?spm_id_from=333.999.0.0)。

+To learn more about Apache APISIX Ingress Controller in depth, see the [talk video](https://www.bilibili.com/video/BV1eB4y1u7i1?spm_id_from=333.999.0.0) by Chao Zhang, Apache APISIX PMC member, at the Meetup.

-### 功能提升

+### Functional Enhancement

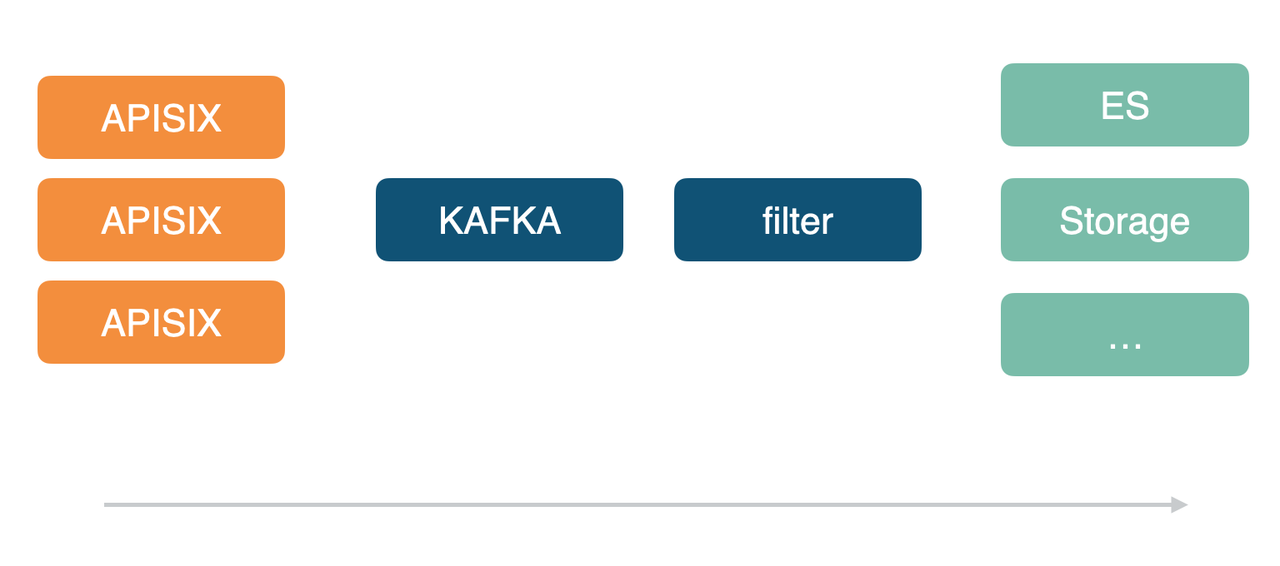

-#### 效果一:日志层面效率提高

+#### Effect 1: More Efficient Log Handling

-目前我们内部有多个 Apache APISIX 集群,包括数据中心网关和容器网关都统一开始使用了 Apache

APISIX,这样在后续相关日志的处理/消费程序时可以统一到一套逻辑。

+We currently run multiple Apache APISIX clusters internally, and both our data center gateways and our container gateways now use Apache APISIX. As a result, downstream log processing and consumption programs can share one set of logic.

-

+

-当然 Apache APISIX 的日志插件支持功能也非常丰富。我们内部使用的是

Kafka-Logger,它可以进行自定义日志格式。像下图中其他的日志插件可能有些因为使用人数的原因,还尚未支持自定义化格式,欢迎有相关需求的小伙伴进行使用并提交

PR 来扩展当前的日志插件功能。

+Apache APISIX's logging plugins are also very feature-rich. Internally we use Kafka-Logger, which supports a custom log format. Some of the other logging plugins shown below do not yet support custom formats, possibly because fewer people use them. Anyone with related needs is welcome to use them and submit PRs to extend the current logging plugins.
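Kafka-Logger's custom format is set through plugin metadata. The sketch below assumes the 2.x Admin API address and an admin key in `APISIX_KEY`. The variables in `log_format` are common Nginx/APISIX variables, and the helper names are hypothetical. Plugin metadata applies to every Kafka-Logger instance, not to a single route.

```sh
# Hypothetical: the log format body. Single quotes keep "$host" etc.
# literal so APISIX, not the shell, resolves them per request.
kafka_log_format_json() {
  printf '%s\n' '{"log_format":{"host":"$host","@timestamp":"$time_iso8601","client_ip":"$remote_addr"}}'
}

# Push the format to the kafka-logger plugin metadata via the Admin API.
set_kafka_log_format() {
  "${CURL:-curl}" -sS -X PUT \
    "${APISIX_ADMIN:-http://127.0.0.1:9080/apisix/admin}/plugin_metadata/kafka-logger" \
    -H "X-API-KEY: ${APISIX_KEY:?set APISIX_KEY to your admin key}" \
    -H 'Content-Type: application/json' \
    -d "$(kafka_log_format_json)"
}
```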

-

+

-#### 效果二:完善监控与健康检查

+#### Effect 2: Better Monitoring and Health Checks

-在监控层面,Apache APISIX 也支持 Prometheus、SkyWalking 等,我们内部使用的是 Prometheus。

+At the monitoring level, Apache APISIX also supports Prometheus, SkyWalking,

and so on, and we use Prometheus internally.

-Apache APISIX 作为一个基本代理器,可以实现 APP 状态码的监控和请求等需求。但 Apache APISIX

的自身健康检查力度不是很好控制。目前我们采用的是在 k8s 里面部署一个服务并生成多个 POD,将这个服务同时应用于 Apache APISIX

Ingress,然后通过检查整个链路来确定 Apache APISIX 是否健康。

+As a basic proxy, Apache APISIX can monitor APP status codes and requests. However, the granularity of Apache APISIX's own health checks is hard to control. Our current approach is to deploy a service with multiple PODs in K8s and expose it through Apache APISIX Ingress. We then check the whole link end to end to determine whether Apache APISIX is healthy.
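The end-to-end check might be scripted roughly as follows. The `/healthz` path, the 3-second timeout and the canary host are assumptions. A 200 through APISIX Ingress proves the whole link is working. Any other code, including `000` when the connection fails, marks the gateway as unhealthy.

```sh
# Hypothetical end-to-end probe: request a canary Service through APISIX
# Ingress and treat anything other than HTTP 200 as "gateway unhealthy".
probe_gateway() {
  local gateway=$1 host=$2 code
  code=$("${CURL:-curl}" -s -o /dev/null -w '%{http_code}' \
    --max-time 3 -H "Host: $host" "http://$gateway/healthz")
  [ "$code" = "200" ]
}
```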

-

+

-## 使用 Apache APISIX Ingress 实践解决方案

+## Practical Solutions with Apache APISIX Ingress

-### 场景一:k8s 应用变更

+### Scenario 1: K8s Application Changes

-在使用 k8s 搭配 Apache APISIX Ingress 的过程中,需要做到以下几点:

+In using K8s with Apache APISIX Ingress, you need to do the following:

-- 更新策略的选用建议使用滚动更新,保证大部分 POD 可用,同时还需要考虑稳定负载的问题

-- 应对 POD 启动 k8s 内部健康检查,保证 POD 的业务可用性,避免请求过来后 POD 仍处于不能提供正常服务的状态

-- 保证 Apache APISIX Upstream 里的大部分 Endpoint 可用

+- Use rolling updates as the update strategy so that most PODs stay available, while also keeping the load stable
+- Enable K8s health checks for PODs at startup to guarantee their business availability, so that requests never reach a POD before it can serve them normally
+- Ensure that most Endpoints in the Apache APISIX Upstream are available

-在实践过程中,我们也遇到了传输延时的问题。POD 更新路径如下所示,POD Ready

后通过层层步骤依次进行信息传递,中间某些链路就可能会出现延时问题。正常情况下一般是 1 秒内同步完成,某些极端情况下部分链路时间可能会增加几秒进而出现

Endpoint 更新不及时的问题。

+In practice, we also ran into propagation delays. The POD update path is shown below. Once a POD becomes Ready, the information is passed on step by step, and some links along the way can introduce delays. Normally synchronization completes within 1 second, but in some extreme cases certain links can take a few seconds longer, so Endpoints are not updated in time.

-

+

-这种情况下的解决方案是,当更新时前一批 POD 变成 Ready 状态后,等待几秒钟再继续更新下一批。这样做的目的是保证 Apache APISIX

Upstream 里的大部分 Endpoint 是可用的。

+Our solution is to wait a few seconds after each batch of PODs becomes Ready before updating the next batch. This ensures that most Endpoints in the Apache APISIX Upstream remain available.
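Instead of pausing the rollout by hand, Kubernetes can pace it with `minReadySeconds`. A new Pod only counts as available after it has stayed Ready for that long, so the next batch waits. This is a swapped-in Kubernetes mechanism, not the procedure the article describes. The Deployment name, namespace and 5-second default are assumptions.

```sh
# Swapped-in Kubernetes knob: each new Pod must stay Ready for N seconds
# before the rollout counts it as available and starts the next batch.
# maxUnavailable=0 keeps old Pods serving until replacements are counted.
pace_rollout() {
  local namespace=$1 deployment=$2 seconds=${3:-5}
  "${KUBECTL:-kubectl}" patch deployment "$deployment" --namespace "$namespace" \
    --patch "{\"spec\":{\"minReadySeconds\":$seconds,\"strategy\":{\"type\":\"RollingUpdate\",\"rollingUpdate\":{\"maxUnavailable\":0,\"maxSurge\":\"25%\"}}}}"
}
```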

-### 场景二:上游健康检查问题

+### Scenario 2: Upstream Health Check Issues

-由于 APIServer 面向状态的设计,在与 Apache APISIX 进行同步时也会出现延时问题,可能会遇到更新过程中「502

错误状态警告」。像这类问题,就需要在网关层面对 Upstream Endpoint 进行健康检查来解决。

+Because of APIServer's state-oriented design, synchronization with Apache APISIX can also lag, which may produce "502 error" warnings during updates. Problems like this call for health checks on the Upstream Endpoints at the gateway level.

-首先 Upstream Endpoint 主动健康检查不太现实,因为 Endpoint 太耗时费力。而 HTTP

层的监控检查由于不能确定状态码所以也不适合进行相关操作。

+First, active health checks on Upstream Endpoints are not practical, because probing Endpoints is too time-consuming and laborious. HTTP-level checks are not suitable either, because the status codes cannot be interpreted reliably.

-最合适的方法就是在网关层面基于 TCP 做被动健康检查,比如你的网络连接超时不可达,就可以认为 POD 出现了问题,需要做降级处理。这样只需在 TCP

层面进行检查,不需要触及其他业务部分,可达到独立操控。

+The most suitable approach is a TCP-based passive health check at the gateway level. If a connection times out or the POD is unreachable, the POD can be considered faulty and should be degraded. Because the check happens only at the TCP level, it doesn't touch other parts of the business and can be controlled independently.
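Here is a sketch of an upstream with TCP-level checks, written as an Admin API JSON body; the thresholds are illustrative. A sparse TCP `active` block is included because, as far as we know, APISIX's upstream schema expects `active` whenever `passive` is configured. Check the health-check docs for your version.

```sh
# Hypothetical upstream body: TCP passive checks eject a node after
# repeated connection failures or timeouts; a sparse TCP active probe
# (every 30s) lets an ejected node recover once it accepts connections.
upstream_with_tcp_checks_json() {
  local node=$1
  cat <<EOF
{
  "type": "roundrobin",
  "nodes": {"$node": 1},
  "checks": {
    "active": {
      "type": "tcp",
      "healthy": {"interval": 30, "successes": 1},
      "unhealthy": {"interval": 30, "tcp_failures": 2, "timeouts": 3}
    },
    "passive": {
      "type": "tcp",
      "healthy": {"successes": 3},
      "unhealthy": {"tcp_failures": 3, "timeouts": 3}
    }
  }
}
EOF
}
```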

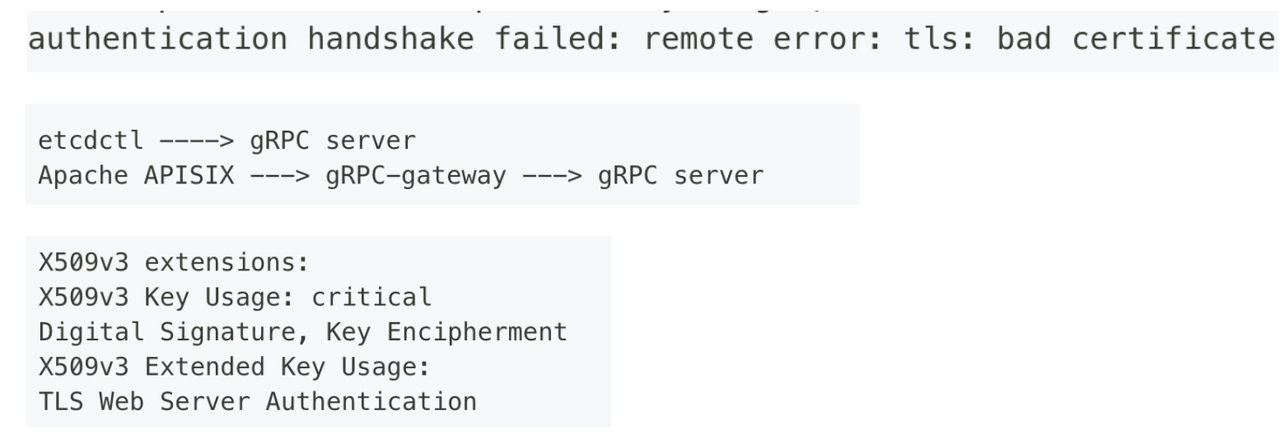

-### 场景三:mTLS 连接 etcd

+### Scenario 3: Connecting to etcd with mTLS

-由于 Apache APISIX 集群默认使用单向验证的机制,作为容器网关使用 Apache APISIX 时,可能会在与 k8s 连接同一个 etcd

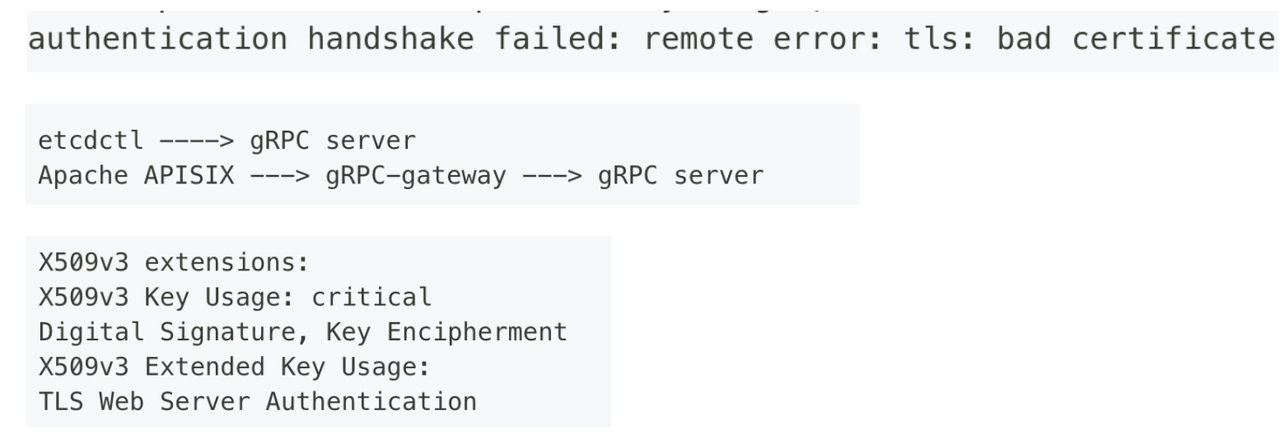

集群(k8s etcd 中使用双向验证)时默认开启双向认证,进而导致出现如下证书问题:

+An Apache APISIX cluster uses one-way authentication by default. When Apache APISIX serves as a container gateway and connects to the same etcd cluster as K8s, two-way authentication may be enabled by default, because K8s's etcd uses two-way authentication. This leads to the following certificate problem:

-

+

-Apache APISIX 不是通过 gRPC 直接连接 etcd,而是通过 HTTP 协议先连接到 etcd 内部的

gRPC-gateway,再去连接真正的 gRPC Server。这中间多了一个组件,所以就会多一次双向验证。

+Apache APISIX does not connect to etcd directly over gRPC. Instead, it connects over HTTP to etcd's internal gRPC-gateway, which then connects to the actual gRPC Server. This extra component in the middle means an extra round of two-way authentication.

-gRPC-gateway 去连接 gRPC Server 的时候需要一个客户端证书,etcd 没有提供这个证书的配置项,而是直接使用 gRPC server

的服务端证书。相当于一个证书同时作为客户端和服务端的校验。如果你的 gRPC server

服务端证书开启了扩展(表明这个证书只能用于服务端校验),那么需要去掉这个扩展,或者再加上也可用于客户端校验的扩展。

+The gRPC-gateway needs a client certificate when it connects to the gRPC Server. etcd provides no configuration option for this certificate and uses the gRPC Server's server certificate directly, so one certificate is validated as both client and server. If your gRPC server certificate has the extended key usage extension enabled, restricting it to server-side validation, remove that extension or add one that also permits client-side validation.
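To see whether a certificate's extended key usage already covers client authentication, inspect it with `openssl x509 -in server.crt -noout -text`; the file name is a placeholder. The hypothetical helper below reads that text on stdin and treats a certificate without the extension as unrestricted. When reissuing, an OpenSSL extension such as `extendedKeyUsage = serverAuth, clientAuth` allows both uses.

```sh
# Hypothetical helper: reads `openssl x509 -noout -text` output on stdin
# and succeeds if the certificate may also be used for client auth.
allows_client_auth() {
  local text
  text=$(cat)
  # No Extended Key Usage extension at all: usage is not restricted.
  if ! printf '%s\n' "$text" | grep -q 'X509v3 Extended Key Usage'; then
    return 0
  fi
  printf '%s\n' "$text" | grep -q 'TLS Web Client Authentication'
}
```

For example: `openssl x509 -in server.crt -noout -text | allows_client_auth && echo "usable as client cert"`.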

-同时 OpenResty 底层是不支持 mTLS 的,当你需要通过 mTLS 连接上游服务或 etcd 时,需要使用基于

apisix-nginx-module 去构建打过 patch 的 Openresty。apisix-build-tools 可以找到相关构建脚本。

+In addition, OpenResty itself does not support mTLS. To connect to upstream services or etcd via mTLS, you need to build a patched OpenResty with apisix-nginx-module. The relevant build scripts can be found in apisix-build-tools.

-## 未来期望

+## Future Expectations

-虽然目前我们还只是在测试阶段应用 Apache APISIX Ingress,但相信在不久之后,经过应用的迭代功能更新和内部架构迁移调整,Apache

APISIX Ingress 会统一应用到又拍云的所有容器网关内。

+Although we currently use Apache APISIX Ingress only in testing, we believe it will soon run across all of UPYUN's container gateways. Feature iterations and our internal architecture migration will get it there.

diff --git a/website/blog/2021/09/28/WPS-usercase.md

b/website/blog/2021/09/28/WPS-usercase.md

index 93ef56b..b390f66 100644

--- a/website/blog/2021/09/28/WPS-usercase.md

+++ b/website/blog/2021/09/28/WPS-usercase.md

@@ -1,122 +1,122 @@

---

-title: "百万级 QPS 业务新宠,金山办公携手 Apache APISIX 打造网关实践新体验"

-author: 张强

+title: "The New Favorite for Million-QPS Workloads: WPS Teams Up with Apache APISIX for a New Gateway Experience"

+author: Qiang Zhang

keywords:

- Apache APISIX

-- API 网关

-- 金山办公

+- API Gateway

- WPS

-description: 本文介绍了金山办公如何使用 Apache APISIX 应对百万级 QPS 业务,同时基于 Apache APISIX

更新与改进网关实践层面的内容。

+description: In this article, Qiang Zhang, head of SRE networking in WPS's middle platform department, explains how WPS uses Apache APISIX to handle workloads at millions of QPS, and how it updated and improved its gateway practices based on Apache APISIX.

tags: [User Case]

---

-> 本文由金山办公中台部门 SRE 网络负责人张强介绍了金山办公如何使用 Apache APISIX 应对百万级 QPS 业务,同时基于 Apache

APISIX 更新与改进网关实践层面的内容。

+> In this article, Qiang Zhang, head of SRE networking in WPS's middle platform department, explains how WPS uses Apache APISIX to handle workloads at millions of QPS, and how it updated and improved its gateway practices based on Apache APISIX.

<!--truncate-->

-## 背景介绍

+## Background

-金山办公是目前国内最大的办公软件厂商,旗下产品涉及 WPS、金山文档、稻壳等。在业务层面上由数千个业务以容器化部署在内部云原生平台,目前 [Apache

APISIX](https://apisix.apache.org/) 在金山办公主要负责为中台部门业务(百万级 QPS )提供相关网关服务。

+WPS is currently the largest office software vendor in China, with products including WPS Office, Kdocs, and Docer. Thousands of its services run as containers on an internal cloud native platform. At WPS, [Apache APISIX](https://apisix.apache.org/) currently provides gateway services for the middle platform department's business, which handles millions of QPS.

-## 金山办公的网关演进

+## Gateway Evolution in WPS

-In phase 1.0, we had no strong requirements for API Gateway features and only wanted to solve operations problems, so we built our own gateway on OpenResty and Lua, implementing dynamic Upstream, blacklists, WAF, and other features.

-Although the in-house development succeeded, it left some functional problems, such as:

+In phase 1.0, we didn’t have strong requirements for API Gateway features; we just wanted to solve operations problems, so we developed our own gateway based on OpenResty and Lua, implementing dynamic Upstream, blacklists, WAF, and so on. Although the self-developed gateway worked, it left some functional problems, such as:

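-- Dynamic behavior only went as far as the Upstream level

-- A Reload was required to bring new domain names online

-- The underlying design was simple, with weak feature extensibility

+- Dynamic behavior only went as far as the Upstream level

+- A Reload was required to bring new domain names online

+- The underlying design was simple, with weak feature extensibility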

-Later, once we had strong requirements for API Gateway features, we began investigating related open source gateway products.

+Later, once we had strong requirements for API Gateway functionality, we began investigating related open source gateway products.

-## Why We Chose Apache APISIX

+## Why Apache APISIX?

-In fact, when we started researching gateway products at the end of 2019, Kong was a fairly popular choice.

+In fact, when we began researching gateway products in late 2019, Kong was one of the more popular choices.

-However, subsequent testing showed that Kong's performance could not quite meet our needs, and we also felt that Kong's architecture was not very good: because it uses PostgreSQL as its configuration center, Kong can only update routes in a non-event-driven way, relying on each node to refresh its routes.

+However, subsequent tests showed that Kong’s performance did not quite meet our expectations, and we didn’t think Kong’s architecture was very good: because its configuration center uses PostgreSQL, Kong can only update routes in a non-event-driven way, relying on each node to refresh its own routes.

-On further investigation, we discovered [Apache APISIX](https://github.com/apache/apisix). First of all, Apache APISIX performs better than Kong. The Apache APISIX GitHub Readme has a very detailed comparison chart listing the [performance test gap](https://gist.github.com/membphis/137db97a4bf64d3653aa42f3e016bd01) between the two, which is basically consistent with the data from our own tests.

+On further investigation, we discovered [Apache APISIX](https://github.com/apache/apisix). First of all, Apache APISIX performs better than Kong, and there’s a very detailed chart in the Apache APISIX GitHub Readme that shows the [performance of Apache APISIX compared to Kong](https://gist.github.com/membphis/137db97a4bf64d3653aa42f3e016bd01), which is basically consistent with the data we’ve tested ourselves.

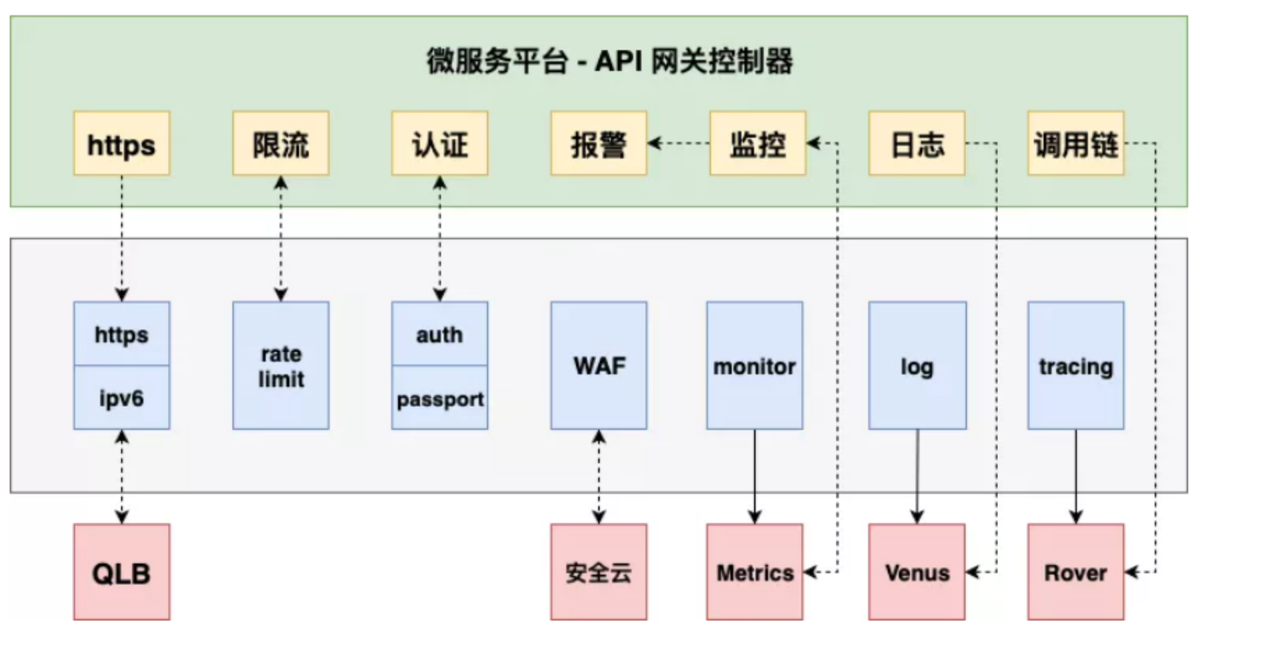

-

+

-In terms of architecture, Apache APISIX's etcd-based configuration is a better choice for us.

+In terms of architecture, Apache APISIX’s etcd-based configuration is a better choice for us.

-

+

-Of course, the main reason is that we believe the community matters too. An active community iterates on releases, fixes problems, and optimizes features quickly. From GitHub and regular mailing-list feedback, we could see how active the Apache APISIX community is, which provides a strong guarantee of product functionality and stability.

+The main reason, of course, is that we believe community matters as well. An active community can iterate on releases, fix problems, and optimize features quickly. From GitHub and regular mailing-list feedback, we can see that the Apache APISIX community is active, which provides a strong guarantee of product functionality and stability.

-## Sharing Our Experience with Smooth Gateway Migration

+## Our Experience with Smooth Gateway Migration

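-When most people first start working with Apache APISIX, they use the CLI to generate the configuration and start instances. However, during our smooth migration, we did not use the CLI to generate the configuration.

+When most people first start working with Apache APISIX, they use the CLI to generate the configuration and start instances. However, during our smooth migration, we did not use the CLI to generate the configuration.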

-The main reason is that Apache APISIX takes effect in several OpenResty phases, such as init, init_worker, and the HTTP- and Upstream-related phases. After mapping these to the Apache APISIX configuration, we found that all of them can exist independently of the CLI.

+The main reason is that Apache APISIX hooks into a number of OpenResty phases, such as init, init_worker, and the HTTP- and Upstream-related phases.

-So, for the reasons above, we ultimately took the following steps to migrate smoothly:

+Mapping these phases onto the Apache APISIX configuration, we found that all of them can work without the CLI.

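-- Do not use the Apache APISIX CLI to generate the configuration

-- Introduce the Apache APISIX Package Path and make Apache APISIX the Default Server

-- Keep the domain names in the other static configurations; since new domain names are not in the static configuration, they fall back to Apache APISIX

-- Finally, gradually migrate the static configuration into Apache APISIX

+For these reasons, we took the following steps to migrate smoothly:

-Of course, besides the approach above, we also recommend a "light-mixing mode": use static configuration together with Apache APISIX as a Location, and bring in the phases or Lua code mentioned earlier for configuration. This lets you introduce special configurations into the static configuration to achieve dynamic behavior and similar effects.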

+- Do not use the Apache APISIX CLI to generate the configuration

+- Introduce the Apache APISIX Package Path and make Apache APISIX the Default Server

+- Keep the domain names in the other static configurations; because new domain names are not in the static configuration, they fall back to Apache APISIX

+- Gradually migrate the static configuration to Apache APISIX
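The migration order above comes down to a lookup with a fallback: a host that is still listed in the static configuration is served as before, and any other host reaches the default server. The Python sketch below models only that decision. The handler functions and example hostnames are hypothetical stand-ins, not NGINX directives or Apache APISIX APIs.

```python
# Hypothetical sketch of the migration routing order: hosts still defined in the
# legacy static configuration keep their existing handler, and every other host
# falls through to the default server (Apache APISIX in the article's setup).
from typing import Callable, Dict

Handler = Callable[[str, str], str]


def make_router(static_hosts: Dict[str, Handler], default: Handler) -> Handler:
    """Return a dispatcher that prefers static hosts and falls back to `default`."""
    def route(host: str, path: str) -> str:
        # static_hosts is read on every call, so removing an entry later
        # immediately sends that host to the default handler
        handler = static_hosts.get(host.lower(), default)
        return handler(host, path)
    return route


def legacy_static(host: str, path: str) -> str:
    return f"static:{host}{path}"


def apisix_default(host: str, path: str) -> str:
    return f"apisix:{host}{path}"


static = {"old.example.com": legacy_static}
router = make_router(static, apisix_default)
print(router("old.example.com", "/a"))  # static:old.example.com/a
print(router("new.example.com", "/b"))  # apisix:new.example.com/b

# "Migrating" a domain = deleting it from the static table; it then falls through.
del static["old.example.com"]
print(router("old.example.com", "/a"))  # apisix:old.example.com/a
```

Because the static table is consulted first, each domain can be moved independently and rolled back by restoring its entry.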

-## Shared State Improvements Based on Apache APISIX

+Of course, in addition to the approach above, we also recommend a “light-mixing mode”: use static configuration with Apache APISIX as a Location, and bring in the phases or Lua code mentioned earlier. Doing so lets you introduce special configurations into your static configuration and make it dynamic, among other effects.

-First of all, in my personal view, "forwarding efficiency is definitely not a problem; Shared State is the biggest factor affecting stability." Why do I say that?

+## Shared State Improvements Based on Apache APISIX

-Forwarding efficiency can be addressed by horizontal scaling, but Shared State is shared by all nodes, which makes it a critical module.

+First of all, in my opinion, “forwarding efficiency is definitely not an issue; the Shared State is the biggest factor affecting stability.” Why?

-So after adopting Apache APISIX, we mainly made adjustments and optimizations at the Shared State level.

+Forwarding efficiency can be addressed by scaling horizontally, but the Shared State is shared by all nodes, which makes it a critical module.

-### Optimization 1: Optimizing the etcd Architecture When Multiple Machines Watch

+So after adopting Apache APISIX, we made a few tweaks and optimizations focused on the Shared State layer.

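-A typical company gateway architecture involves multiple machines, sometimes as many as several hundred, and each machine also has to account for its number of workers. So when many machines watch the same Key, the pressure on etcd becomes significant: to guarantee data consistency, one of etcd's mechanisms requires all events to be returned to a watch request before new requests can be processed, and requests are discarded once the send buffer is full. So when many machines watch at the same time, etcd times out and reports errors such as Overload.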

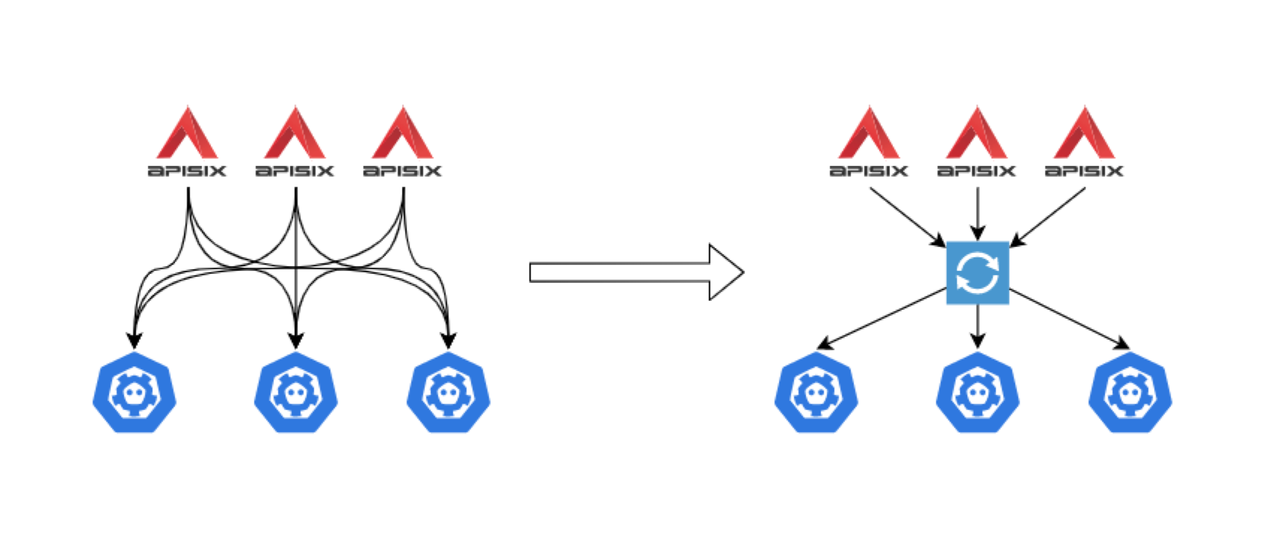

+### Improvement 1: Optimize the etcd Architecture for Multiple Machines Watching

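-To solve the problem above, we used a self-developed etcd Proxy. Previously, the connections between Apache APISIX and etcd were as shown on the left side of the figure below, with every node connected to etcd. For a large-scale entry point, the number of connections becomes very large and puts pressure on etcd.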

+In a typical corporate gateway architecture, multiple machines are involved, sometimes as many as a few hundred, and each machine also has to account for its number of workers. So when multiple machines watch the same Key, the pressure on etcd increases: to ensure data consistency, one of etcd’s mechanisms requires all events to be returned to the watch request before new requests can be processed, and requests are discarded when the send buffer is full. So when multip [...]

+

+To solve the above problem, we use our own etcd Proxy. The previous connections between Apache APISIX and etcd are shown on the left side of the figure below, with each node connected directly to etcd. For a large-scale entry point, the number of connections can be particularly large, putting pressure on etcd.

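-Since all nodes watch the same Key, we built a proxy to watch it on their behalf and return the results to Apache APISIX when there is feedback. The architecture is shown on the right side of the figure above: an etcd Proxy component sits between Apache APISIX and etcd to monitor changes in Key values.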

+Since all nodes watch the same Key, we built a proxy that watches it once on their behalf and returns the results to Apache APISIX when changes arrive. As shown on the right side of the image above, the etcd Proxy component sits between Apache APISIX and etcd to monitor changes in Key values.
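The etcd Proxy works because identical watches can be deduplicated. The proxy holds one upstream watch per key prefix and fans each event out to every local subscriber, so etcd sees a single watcher instead of one per worker. The Python class below is a minimal in-process sketch of that fan-out. Its names (`WatchFanOut`, `subscribe`, `on_upstream_event`) are hypothetical. It does not talk to etcd, does not handle reconnects or revision tracking, and is not the WPS implementation.

```python
# Hypothetical sketch of watch aggregation: one upstream watch per key prefix,
# fanned out to any number of local subscribers (gateway workers).
from collections import defaultdict
from queue import SimpleQueue
from typing import DefaultDict, List


class WatchFanOut:
    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[SimpleQueue]] = defaultdict(list)
        self.upstream_watches = 0  # watches that would be opened against etcd

    def subscribe(self, prefix: str) -> SimpleQueue:
        """Register a subscriber; only the first one per prefix opens an upstream watch."""
        if prefix not in self._subscribers:
            self.upstream_watches += 1  # a real proxy would start an etcd watch here
        q: SimpleQueue = SimpleQueue()
        self._subscribers[prefix].append(q)
        return q

    def on_upstream_event(self, key: str, value: str) -> None:
        """Deliver one event from etcd to every subscriber whose prefix matches the key."""
        for prefix, queues in self._subscribers.items():
            if key.startswith(prefix):
                for q in queues:
                    q.put((key, value))


proxy = WatchFanOut()
workers = [proxy.subscribe("/apisix/routes") for _ in range(500)]  # example prefix
proxy.on_upstream_event("/apisix/routes/1", '{"uri": "/hello"}')
print(proxy.upstream_watches)    # 1
print(workers[-1].get_nowait())  # ('/apisix/routes/1', '{"uri": "/hello"}')
```

Five hundred subscribers share one upstream watch here. Keeping the dedup key at the prefix level is what keeps etcd's watcher count independent of the number of gateway workers.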

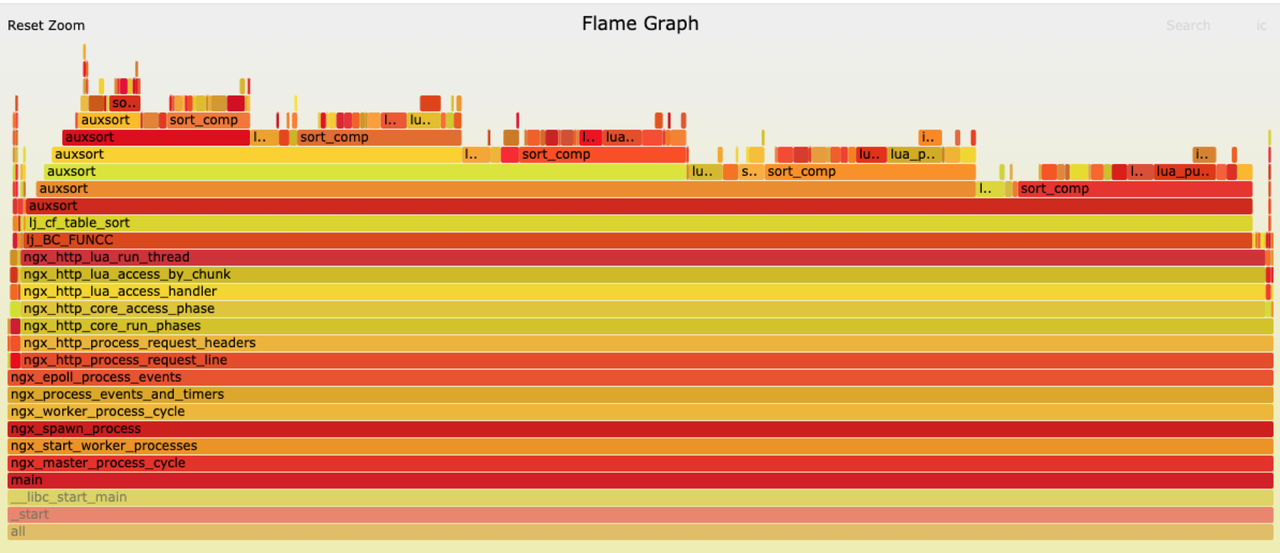

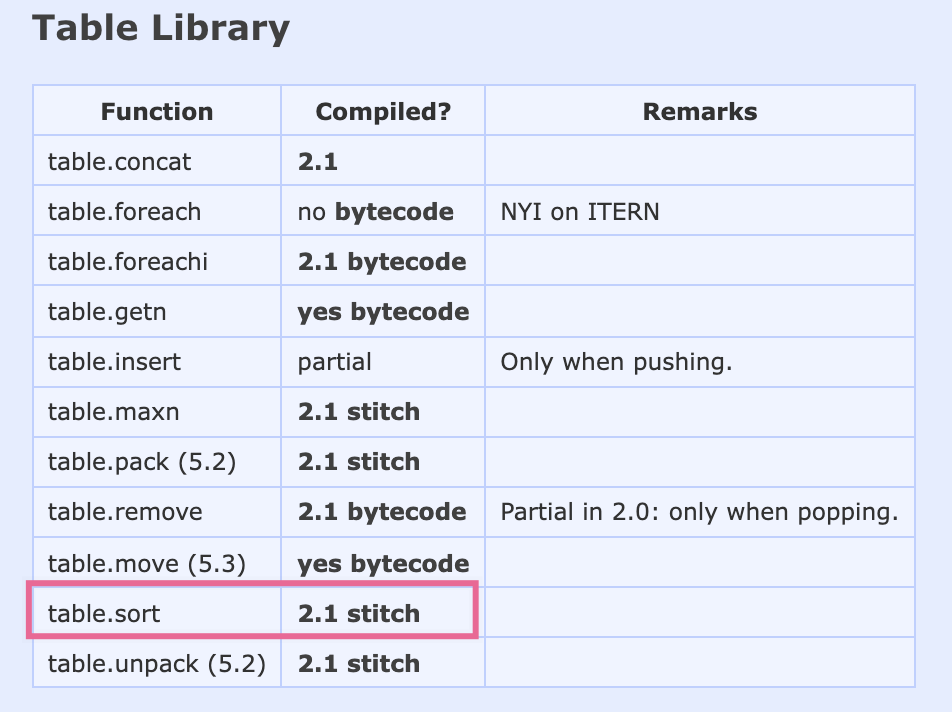

-### Optimization 2: Solving Performance Problems When Routes Take Effect

+### Improvement 2: Solve the Performance Problem When Routes Take Effect

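-As a company grows, the number of routes grows with it. In practice, we found that every time a route is updated, Apache APISIX rebuilds the prefix tree used to match routes. The resulting slowdown is mainly caused by the poor performance of `table.sort`.

+As a company grows, so does the number of routes. In practice, we found that Apache APISIX rebuilds the prefix tree used to match routes every time a route is updated, and the cost of this rebuild comes mainly from the poor performance of `table.sort`.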

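-In practice, we observed that when routes were updated frequently, gateway CPU usage and the packet loss rate both rose. Further investigation showed that the increase in packet loss was mainly caused by Listen overflow.

+In practice, we observed that the gateway’s CPU usage and packet loss rate both increased when routes were updated frequently. Further investigation showed that the increased packet loss was mainly caused by Listen overflow.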

-

+

-As for the CPU increase, the flame graph clearly shows that most of the CPU time is spent in `auxsort`, which is triggered by FUNCC. The presence of FUNCC also points to a problem: the related code path did not go through LuaJIT's JIT compiler, and only the small portion on the far right of the graph handled normal requests.

+As for the CPU increase, the flame graph clearly shows that most CPU time is spent in `auxsort`, which is triggered by FUNCC. The FUNCC frames also point to the problem: the related code path was not JIT-compiled by LuaJIT, and only the small rightmost part of the graph handled normal requests.

-The main reason for this is that LuaJIT's `table.sort` is not fully supported in JIT mode, as explained on the [LuaJIT wiki](http://wiki.luajit.org/NYI), so using `table.sort` in Lua code is relatively inefficient.

+The main reason for this is that LuaJIT’s `table.sort` is not fully supported in JIT mode, as the [LuaJIT wiki](http://wiki.luajit.org/NYI) explains, so using `table.sort` in Lua code is relatively inefficient.

-To address this, we implemented the sort for the scenario above ourselves in pure Lua code. In fact, Apache APISIX has fixed this issue in a later release, with an approach similar to ours.

+We solved this problem ourselves by implementing the sort for the above scenario in pure Lua code, but Apache APISIX has since fixed the problem in a later release, with an approach similar to ours.
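The fix described above replaces a built-in sort with a hand-written one. In LuaJIT this matters because plain Lua code can be traced and compiled, while `table.sort` cannot. The Python sketch below only shows the shape of such a hand-written sort: a stable merge sort that orders routes by descending priority. Python gains no JIT benefit from it. The `(uri, priority)` route tuples and the priority ordering are assumptions for illustration, and the article does not say which sorting algorithm WPS or Apache APISIX actually used.

```python
# Hypothetical sketch: a hand-written, stable merge sort that orders routes by
# descending priority, standing in for a call to a built-in sort.
from typing import Callable, List, TypeVar

T = TypeVar("T")


def merge_sort(items: List[T], before: Callable[[T, T], bool]) -> List[T]:
    """Return a new list in which before(a, b) == True means a comes first.

    Equal elements keep their input order: the merge takes from the left run
    unless the right element must strictly come first.
    """
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid], before)
    right = merge_sort(items[mid:], before)
    merged: List[T] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if before(right[j], left[i]):
            merged.append(right[j])
            j += 1
        else:
            merged.append(left[i])
            i += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


routes = [("/a", 0), ("/b", 10), ("/c", 0), ("/d", 5)]  # (uri, priority), hypothetical
ordered = merge_sort(routes, lambda x, y: x[1] > y[1])
print([uri for uri, _ in ordered])  # ['/b', '/d', '/a', '/c']
```

Stability keeps routes with equal priority in insertion order, so the matcher sees a deterministic order after every rebuild.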

-### More Shared State Experience

+### More Experience with Shared State

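-1. When modifying Apache APISIX or developing your own plugins, make sure to perform Schema validation, including null checks, especially in the matching part. A problem in the matching part can affect the system as a whole.

-2. Plan your business split well. Plan the relevant etcd Prefixes and number of IPs according to business volume, and deploy more robust clusters to minimize systemic risk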

+1. When you modify Apache APISIX or develop your own plugins, make sure you perform Schema validation, including null checks, especially in the matching section, because a problem in the matching section can affect the whole system.

+2. Plan your business split carefully. Plan your etcd Prefixes and number of IPs according to your traffic, and deploy more robust clusters to minimize systemic risk.
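Item 1 can be made concrete with a small validator for a route's match fields. It treats a missing or explicit null value as an error instead of letting it reach the matcher. The field names (`uri`, `methods`, `host`) mirror common route attributes, but the rules below are assumptions for illustration, not the Apache APISIX route schema.

```python
# Hypothetical sketch: validate the "match" part of a route before it is used,
# rejecting missing, null, or wrongly typed fields instead of failing later.
from typing import Any, List

ALLOWED_METHODS = {"GET", "POST", "PUT", "DELETE", "PATCH", "HEAD", "OPTIONS"}


def validate_match(conf: Any) -> List[str]:
    """Return a list of problems; an empty list means the match fields look usable."""
    if not isinstance(conf, dict):
        return ["match section must be an object"]
    errors: List[str] = []

    # Required field: a missing key and an explicit null are both rejected.
    uri = conf.get("uri")
    if uri is None:
        errors.append("uri is required")
    elif not isinstance(uri, str) or not uri.startswith("/"):
        errors.append("uri must be a string starting with '/'")

    # Optional fields: absent is fine, but present-and-null (or wrong type) is not.
    if "methods" in conf:
        methods = conf["methods"]
        if not isinstance(methods, list) or not methods:
            errors.append("methods must be a non-empty list when present")
        else:
            # isinstance check first so unhashable values never reach the set lookup
            bad = [m for m in methods if not isinstance(m, str) or m not in ALLOWED_METHODS]
            if bad:
                errors.append(f"unknown methods: {bad}")
    if "host" in conf:
        host = conf["host"]
        if not isinstance(host, str) or not host:
            errors.append("host must be a non-empty string when present")
    return errors


print(validate_match({"uri": "/hello", "methods": ["GET"]}))  # []
print(validate_match({"uri": None, "host": ""}))
# ['uri is required', 'host must be a non-empty string when present']
```

Collecting all problems instead of stopping at the first one makes a rejected route easier to fix in a single round trip.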

-## Open Source Discussion

+## Open Source Discussion

-### Trade-offs Between Stability and Features

+### The Trade-off Between Stability and Features

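-Kingsoft Office has been using Apache APISIX for almost two years. As a product user, I think Apache APISIX is indeed a stable and trustworthy open source product, and in the vast majority of cases we keep up to date with the latest community release.

+WPS has been using Apache APISIX for almost two years now, and as a user of the product, I think Apache APISIX is a truly stable and reliable open source product. In the vast majority of cases, we keep up to date with the latest community releases.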

-However, any company that has worked with open source products will know that upgrading brings new features but can also bring stability issues, so how should we choose between upgrading and stability?

+But as anyone who has ever used an open source product will know, upgrades bring new features but can also introduce stability issues, so how should we choose between upgrading and stability?

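-There is certainly no single answer to this question, but for Apache APISIX specifically, I personally think it is best to stay as consistent as possible with the official release.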