tzssangglass opened a new pull request #6315: URL: https://github.com/apache/apisix/pull/6315

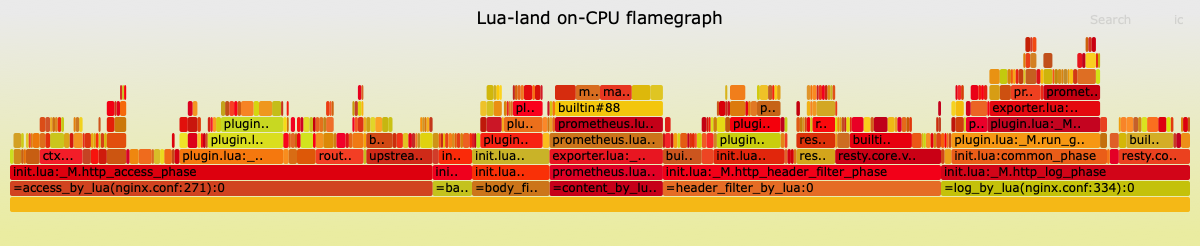

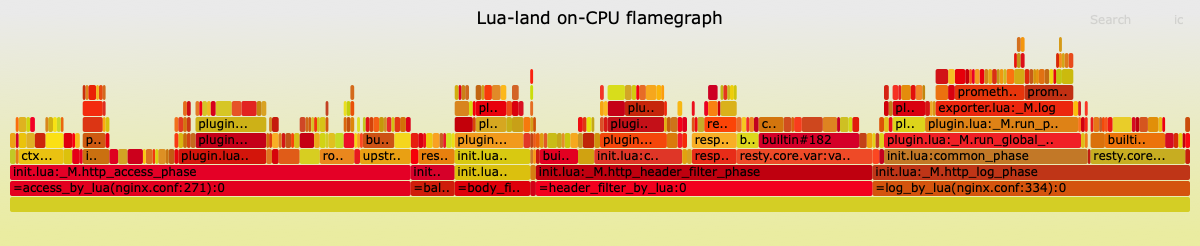

Closes #5755 ### What this PR does / why we need it: <!--- Why is this change required? What problem does it solve? --> <!--- If it fixes an open issue, please link to the issue here. --> ### Pre-submission checklist: <!-- Please follow the PR manners: 1. Use Draft if the PR is not ready to be reviewed 2. Test is required for the feat/fix PR, unless you have a good reason 3. Doc is required for the feat PR 4. Use a new commit to resolve review instead of `push -f` 5. If you need to resolve merge conflicts after the PR is reviewed, please merge master but do not rebase 6. Use "request review" to notify the reviewer once you have resolved the review 7. Only reviewer can click "Resolve conversation" to mark the reviewer's review resolved --> * [x] Did you explain what problem does this PR solve? Or what new features have been added? * [ ] Have you added corresponding test cases? * [ ] Have you modified the corresponding document? * [x] Is this PR backward compatible? **If it is not backward compatible, please discuss on the [mailing list](https://github.com/apache/apisix/tree/master#community) first** The following is a simple test prepare 1. add prometheus plugin ```shell curl -i http://127.0.0.1:9080/apisix/adminglobal_rules/1 -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PUT -d ' { "plugins": { "prometheus": {} } }' ``` 2. add rouet ```shell curl -i http://127.0.0.1:9080/apisix/adminroutes/1 -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PUT -d ' { "upstream": { "nodes": { "127.0.0.1:1980": 1 }, "type": "roundrobin" }, "uri": "/hello" }' ``` 3. wrk2 ```shell # mock client request wrk -t4 -c100 -d90s -R5000 --u_latency http://127.0.0.1:9080/hello # mock prometheus collection wrk -t4 -c100 -d100s -R10 --u_latency http://127.0.0.1:9091/apisix/prometheus/metrics ``` #### before update prometheus version: *request latency* ``` Latency Distribution (HdrHistogram - Uncorrected Latency (measured without taking delayed starts into account)) 50.000% 415.00us 75.000% 594.00us 90.000% 0.87ms 99.000% 4.95ms 99.900% 17.26ms 99.990% 33.53ms 99.999% 40.96ms 100.000% 46.69ms ``` *prometheus latency* ``` Latency Distribution (HdrHistogram - Uncorrected Latency (measured without taking delayed starts into account)) 50.000% 9.73ms 75.000% 17.36ms 90.000% 27.34ms 99.000% 38.85ms 99.900% 41.50ms 99.990% 43.49ms 99.999% 43.49ms 100.000% 43.49ms ``` flamegraph  #### after update prometheus version: *request latency* ``` Latency Distribution (HdrHistogram - Uncorrected Latency (measured without taking delayed starts into account)) 50.000% 605.00us 75.000% 0.89ms 90.000% 1.26ms 99.000% 2.58ms 99.900% 9.80ms 99.990% 14.20ms 99.999% 18.74ms 100.000% 19.65ms ``` *prometheus latency* ``` Latency Distribution (HdrHistogram - Uncorrected Latency (measured without taking delayed starts into account)) 50.000% 1.67ms 75.000% 2.10ms 90.000% 2.86ms 99.000% 6.89ms 99.900% 10.61ms 99.990% 10.67ms 99.999% 10.67ms 100.000% 10.67ms ``` flamegraph  We can assume that the impact on request latency when prometheus collects information is significantly reduced after the update. #### why not use `ngx.timer(0)` From the flamegraph, the code in the prometheus plugin does not significantly increase latency, which is most noticeable in the `fix_histogram_bucket_labels` function of nginx-lua-prometheus, even if this function is optimized. The main delay is due to the regular matching in this function. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: [email protected] For queries about this service, please contact Infrastructure at: [email protected]