super-small-rookie opened a new issue, #1034:

URL: https://github.com/apache/apisix-ingress-controller/issues/1034

I installed apisix-ingress-controller in "the hard way" mode, following the
official documentation.

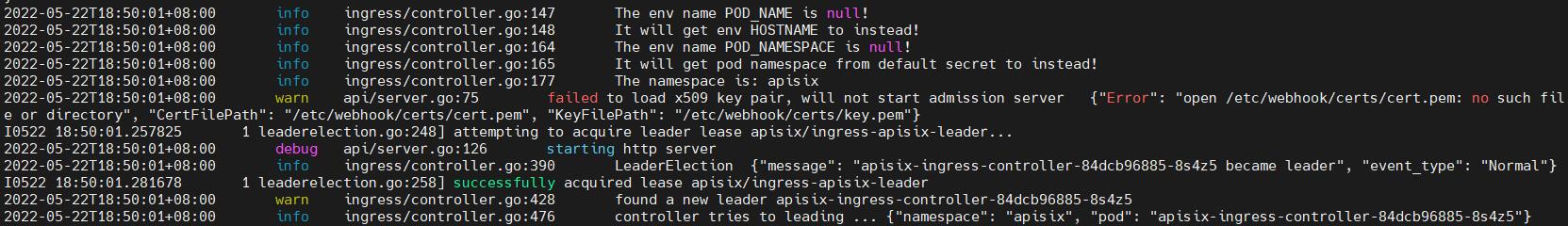

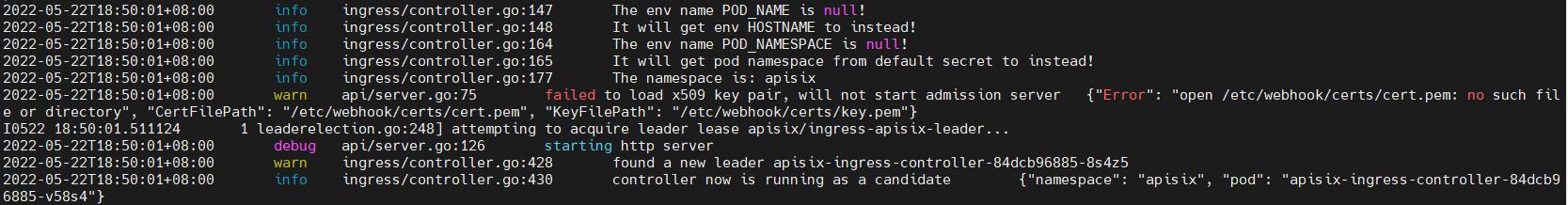

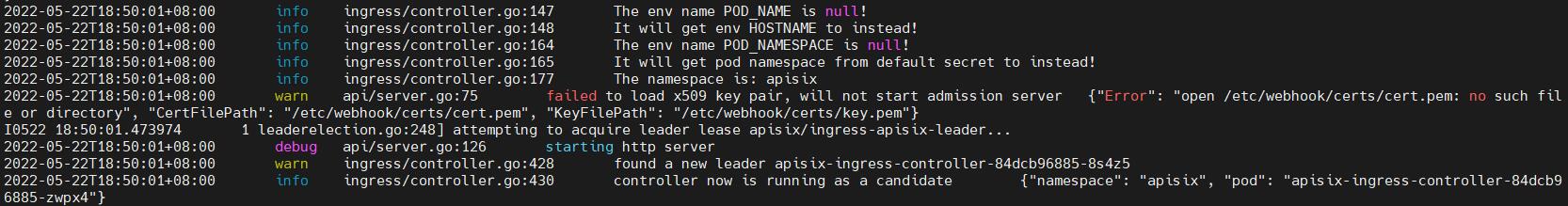

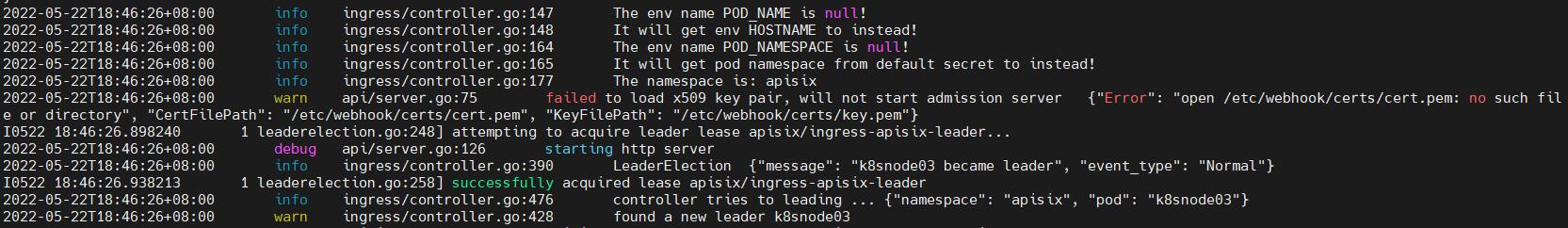

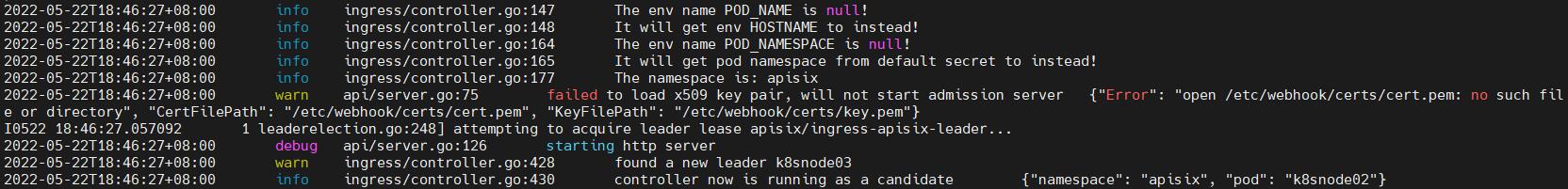

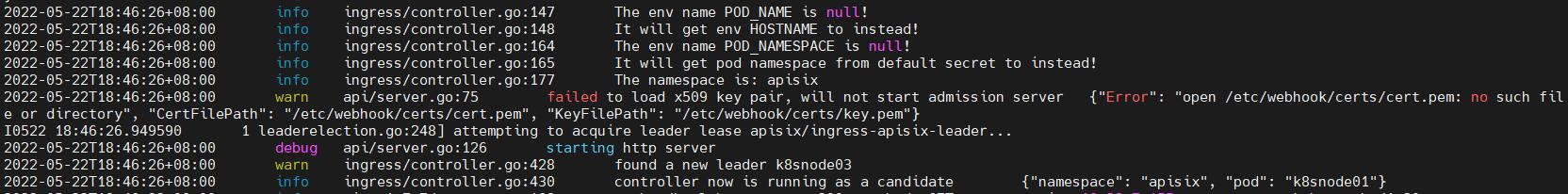

When I deploy more replicas, for example three, I find that each
apisix-ingress-controller instance becomes a leader. The instances do not form
a leader/candidate relationship, so each leader watches Kubernetes and accesses
the APISIX admin API, which puts more pressure on Kubernetes and etcd. The log
of each instance shows that all of them are doing this work.
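One way to picture this behavior is as an identity collision: if every replica presents the same identity (for example an empty string, because POD_NAME is unset), each of them sees "its own" identity recorded as the lease holder. The sketch below is a deliberately simplified toy model of that idea, not the client-go election algorithm; `lease`, `tryAcquire` and `countLeaders` are hypothetical names used only for illustration.

```go
package main

import "fmt"

// lease is a toy stand-in for a coordination.k8s.io Lease object.
// It only records whether it is held and by which identity.
type lease struct {
	holder string
	held   bool
}

// tryAcquire models one election round for a single replica.
// The replica takes the lease if it is free, and considers itself
// leader whenever the recorded holder equals its own identity.
func (l *lease) tryAcquire(identity string) bool {
	if !l.held {
		l.holder, l.held = identity, true
	}
	return l.holder == identity
}

// countLeaders runs one round per identity against a shared lease
// and returns how many replicas believe they are the leader.
func countLeaders(identities []string) int {
	l := &lease{}
	leaders := 0
	for _, id := range identities {
		if l.tryAcquire(id) {
			leaders++
		}
	}
	return leaders
}

func main() {
	// Three replicas without POD_NAME: all share the empty identity.
	fmt.Println(countLeaders([]string{"", "", ""})) // prints 3
	// Three replicas with unique pod names.
	fmt.Println(countLeaders([]string{"pod-a", "pod-b", "pod-c"})) // prints 1
}
```

In this model a unique identity per replica is what keeps the leader count at one, which matches the role POD_NAME plays as the lock identity in the controller code.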

I read the code and found that apisix-ingress-controller uses two environment variables for the lease:

apisix-ingress-controller/pkg/ingress/controller.go

    podName := os.Getenv("POD_NAME")
    podNamespace := os.Getenv("POD_NAMESPACE")
    if podNamespace == "" {
        podNamespace = "default"
    }
    ......
    c := &Controller{
        name:               podName,
        namespace:          podNamespace,
        cfg:                cfg,
        apiServer:          apiSrv,
        apisix:             client,
        MetricsCollector:   metrics.NewPrometheusCollector(),
        kubeClient:         kubeClient,
        watchingNamespaces: watchingNamespace,
        watchingLabels:     watchingLabels,
        secretSSLMap:       new(sync.Map),
        recorder:           eventBroadcaster.NewRecorder(scheme.Scheme, v1.EventSource{Component: _component}),
        podCache:           types.NewPodCache(),
    }
    ......
    lock := &resourcelock.LeaseLock{
        LeaseMeta: metav1.ObjectMeta{
            Namespace: c.namespace,
            Name:      c.cfg.Kubernetes.ElectionID,
        },
        Client: c.kubeClient.Client.CoordinationV1(),
        LockConfig: resourcelock.ResourceLockConfig{
            Identity:      c.name,
            EventRecorder: c,
        },
    }

In the deployment YAML file, I tried using the Downward API to fix this:

    env:
      - name: POD_NAMESPACE
        valueFrom:
          fieldRef:
            fieldPath: metadata.namespace
      - name: POD_NAME
        valueFrom:
          fieldRef:
            fieldPath: metadata.name

Later, I happened to read the sample deployment in the Git repository and
found that its YAML file also uses the Downward API, which confirms what I
found above.

Is there a way for an apisix-ingress-controller cluster to work well without
the Downward API?

With that question in mind, I looked for another env or method that could
replace the two envs above.

When the env POD_NAME is empty, the controller could fall back to HOSTNAME. If
the deployment sets hostNetwork to true, HOSTNAME is the hostname of the
Kubernetes node. If hostNetwork is false or not configured, HOSTNAME is the
same as the Downward API field metadata.name.

When the env POD_NAMESPACE is empty, the controller could read the namespace
from "/run/secrets/kubernetes.io/serviceaccount/namespace" inside the pod.

So I wrote the code below and tested it:

apisix-ingress-controller/pkg/ingress/controller.go

    // This fragment needs the "io/ioutil", "os" and "strings" imports.
    var controllerName string
    podName := os.Getenv("POD_NAME")
    if podName == "" {
        log.Info("The env POD_NAME is empty!")
        log.Info("The env HOSTNAME will be used instead!")
        // If the deployment sets hostNetwork to true, HOSTNAME is the hostname
        // of the Kubernetes node. If hostNetwork is false or not configured,
        // HOSTNAME is the same as the Downward API field metadata.name.
        hostName := os.Getenv("HOSTNAME")
        if hostName == "" {
            log.Info("The env HOSTNAME is empty!")
            log.Warn("Both the env POD_NAME and HOSTNAME are empty. If the replica count " +
                "is greater than 1, this affects the election: each ingress-controller " +
                "becomes a leader, so more leaders watch Kubernetes and access the APISIX " +
                "admin API, and Kubernetes and etcd bear more pressure. Please note!")
        } else {
            controllerName = hostName
        }
    } else {
        controllerName = podName
    }

    podNamespace := os.Getenv("POD_NAMESPACE")
    if podNamespace == "" {
        log.Info("The env POD_NAMESPACE is empty!")
        log.Info("The pod namespace will be read from the default service account secret instead!")
        // When the env POD_NAMESPACE is empty, read the namespace from the secret file:
        // /run/secrets/kubernetes.io/serviceaccount/namespace
        namespace, err := ioutil.ReadFile("/run/secrets/kubernetes.io/serviceaccount/namespace")
        if err != nil {
            log.Info("Reading the namespace file failed!")
            log.Warn("Could not get the namespace from the secret. If the replica count is " +
                "greater than 1 and the ingress controller namespace is not default, this " +
                "affects the election: each ingress-controller becomes a leader, so more " +
                "leaders watch Kubernetes and access the APISIX admin API, and Kubernetes " +
                "and etcd bear more pressure. Please note!")
            // The namespace could not be read, so fall back to "default". If the
            // ingress controller is deployed in another namespace, the lease will
            // fail: the leases.coordination.k8s.io resource is created in the
            // default namespace while the controller runs in its own namespace.
            log.Info("Use the default namespace!")
            podNamespace = "default"
            log.Info("The namespace is: ", podNamespace)
        } else {
            // Trim defensively in case the file ends with whitespace.
            podNamespace = strings.TrimSpace(string(namespace))
            log.Info("The namespace is: ", podNamespace)
        }
    }

    ......
    c := &Controller{
        name:               controllerName,
        namespace:          podNamespace,
        cfg:                cfg,
        apiServer:          apiSrv,
        apisix:             client,
        MetricsCollector:   metrics.NewPrometheusCollector(),
        kubeClient:         kubeClient,
        watchingNamespaces: watchingNamespace,
        watchingLabels:     watchingLabels,
        secretSSLMap:       new(sync.Map),
        recorder:           eventBroadcaster.NewRecorder(scheme.Scheme, v1.EventSource{Component: _component}),
        podCache:           types.NewPodCache(),
    }
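The same fallback order could also be factored into a small helper whose lookups are injected, so that it can be unit-tested outside a pod. This is only a sketch under assumptions: `resolveIdentity`, `errNoFile` and the injected `getenv` / `readFile` parameters are hypothetical names that do not exist in apisix-ingress-controller; in the controller they would presumably be backed by os.Getenv and os.ReadFile.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// errNoFile simulates a missing service account namespace file (hypothetical).
var errNoFile = errors.New("namespace file not found")

// resolveIdentity returns the election identity and the lease namespace.
// Name:      POD_NAME, then HOSTNAME, then "" (caller should warn).
// Namespace: POD_NAMESPACE, then the service account file, then "default".
func resolveIdentity(
	getenv func(string) string,
	readFile func(string) ([]byte, error),
) (name, namespace string) {
	const nsFile = "/run/secrets/kubernetes.io/serviceaccount/namespace"

	name = getenv("POD_NAME")
	if name == "" {
		name = getenv("HOSTNAME")
	}

	namespace = getenv("POD_NAMESPACE")
	if namespace == "" {
		if data, err := readFile(nsFile); err == nil {
			// Trim defensively in case the file ends with whitespace.
			namespace = strings.TrimSpace(string(data))
		}
	}
	if namespace == "" {
		namespace = "default"
	}
	return name, namespace
}

func main() {
	// No Downward API envs; HOSTNAME and the secret file are available.
	env := map[string]string{"HOSTNAME": "apisix-ingress-controller-abc"}
	getenv := func(k string) string { return env[k] }
	readFile := func(string) ([]byte, error) { return []byte("apisix\n"), nil }
	fmt.Println(resolveIdentity(getenv, readFile))

	// Nothing available: empty identity and the "default" namespace.
	noEnv := func(string) string { return "" }
	noFile := func(string) ([]byte, error) { return nil, errNoFile }
	fmt.Println(resolveIdentity(noEnv, noFile))
}
```

Keeping the lookups as parameters means the three cases (Downward API set, fallback available, nothing available) can each be exercised without touching the real environment or file system.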

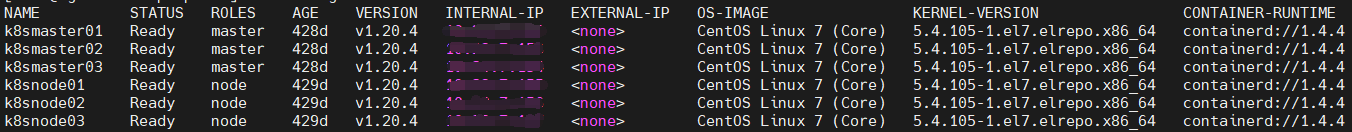

The test kubernetes cluster info:

The deployment yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: apisix-ingress-controller
      namespace: apisix
      labels:
        app.kubernetes.io/name: ingress-controller
    spec:
      replicas: 3
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-controller
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-controller
        spec:
          serviceAccountName: apisix-ingress-controller
          volumes:
            - name: configuration
              configMap:
                name: apisix-configmap
                items:
                  - key: config.yaml
                    path: config.yaml
          containers:
            - name: ingress-controller
              command:
                - /ingress-apisix/apisix-ingress-controller
                - ingress
                - --config-path
                - /ingress-apisix/conf/config.yaml
              image: "xxx.xxx.xxx.xxx/apisix/apisix-ingress-controller:test-bugfix-1"
              imagePullPolicy: IfNotPresent
              ports:
                - name: http
                  containerPort: 8080
                  protocol: TCP
              livenessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
              readinessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
              resources:
                {}
              volumeMounts:
                - mountPath: /ingress-apisix/conf
                  name: configuration

With hostNetwork not configured:

With hostNetwork set to true:

    ......
    spec:
      hostNetwork: true
    ......

With this change, the election works well without using the Downward API to
bind the envs POD_NAME and POD_NAMESPACE.

The Downward API settings are easy to forget, as the official documents do.
Is it necessary to add a fallback like this so that the controller still runs
normally and can carry on in that case?
