[GitHub] [incubator-hudi] anchalkataria commented on issue #796: Error hive sync via delta streamer

anchalkataria commented on issue #796: Error hive sync via delta streamer URL: https://github.com/apache/incubator-hudi/issues/796#issuecomment-517127576 > @anchalkataria we have some leads on the null issue. we expect it to be fixed on master soon.. > > on your original registration issue, I actually was able to register through delta streamer in the demo setup on master branch... Would you be able to give it a shot? I can give you commands.. @vinothchandar So now I am not trying this on local anymore . I am directly running the tool on AWS Emr cluster and able to sync data in hive through DeltaStreamer. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [incubator-hudi] vinothchandar commented on issue #714: Performance Comparison of HoodieDeltaStreamer and DataSourceAPI

vinothchandar commented on issue #714: Performance Comparison of HoodieDeltaStreamer and DataSourceAPI URL: https://github.com/apache/incubator-hudi/issues/714#issuecomment-517125538 @NetsanetGeb 2 comes from the configs you are setting? hoodie.upsert.shuffle.parallelism & hoodie.insert.shuffle.parallelism?

[GitHub] [incubator-hudi] vinothchandar commented on issue #774: Matching question of the version in Spark and Hive2

vinothchandar commented on issue #774: Matching question of the version in Spark and Hive2 URL: https://github.com/apache/incubator-hudi/issues/774#issuecomment-517124662 @cdmikechen can we have a call, or can you write up how we can take a fresh look at the hive sync aspects? It definitely works in certain versions, but runs into snags like this with others. It's a pretty hairy issue IMO.

[GitHub] [incubator-hudi] vinothchandar commented on issue #789: Demo : Unexpected result in some queries

vinothchandar commented on issue #789: Demo : Unexpected result in some queries URL: https://github.com/apache/incubator-hudi/issues/789#issuecomment-517124343 @n3nash is debugging the join issue, which seems different?

[GitHub] [incubator-hudi] vinothchandar commented on issue #796: Error hive sync via delta streamer

vinothchandar commented on issue #796: Error hive sync via delta streamer URL: https://github.com/apache/incubator-hudi/issues/796#issuecomment-517124229 @n3nash can you paste the error you got, if any, when hive syncing on the Apache Hive 2.x servers?

[GitHub] [incubator-hudi] vinothchandar commented on issue #796: Not able to use S3 as storage for Hudi dataset

vinothchandar commented on issue #796: Not able to use S3 as storage for Hudi dataset URL: https://github.com/apache/incubator-hudi/issues/796#issuecomment-517123959 @anchalkataria we have some leads on the null issue. we expect it to be fixed on master soon.. on your original registration issue, I actually was able to register through delta streamer in the demo setup on master branch... Would you be able to give it a shot? I can give you commands..

[GitHub] [incubator-hudi] vinothchandar commented on issue #800: Performance tuning

vinothchandar commented on issue #800: Performance tuning URL: https://github.com/apache/incubator-hudi/issues/800#issuecomment-517123657 hi.. any updates?

[GitHub] [incubator-hudi] vinothchandar commented on issue #801: How to customize schema

vinothchandar commented on issue #801: How to customize schema URL: https://github.com/apache/incubator-hudi/issues/801#issuecomment-517123554 Closing. Reopen new issues on JIRA or mailing list as needed

[GitHub] [incubator-hudi] vinothchandar closed issue #801: How to customize schema

vinothchandar closed issue #801: How to customize schema URL: https://github.com/apache/incubator-hudi/issues/801

[GitHub] [incubator-hudi] cdmikechen opened a new issue #817: spark-submit with userClassPathFirst config error

cdmikechen opened a new issue #817: spark-submit with userClassPathFirst config error URL: https://github.com/apache/incubator-hudi/issues/817 When I used spark-submit to run some code like this (Spark 2.4.3 and Scala 2.11.12):
```
../bin/spark-submit --master yarn --class xxx.xxx.Main --conf spark.driver.userClassPathFirst=true --conf spark.executor.userClassPathFirst=true --jars xxx/hoodie/hoodie-spark-bundle-0.4.8-SNAPSHOT.jar,xxx.jar xxx/sparkserver.jar
```
Spark reported the following exception when creating the Spark session on YARN:
```
19/08/01 08:49:29 ERROR org.apache.spark.network.server.TransportRequestHandler - Error while invoking RpcHandler#receive() for one-way message.
java.lang.ClassCastException: cannot assign instance of scala.collection.immutable.Map$Map2 to field org.apache.spark.scheduler.cluster.CoarseGrainedClusterMessages$AddWebUIFilter.filterParams of type scala.collection.immutable.Map in instance of org.apache.spark.scheduler.cluster.CoarseGrainedClusterMessages$AddWebUIFilter
	at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2287)
	at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1417)
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2293)
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211)
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069)
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573)
	at java.io.ObjectInputStream.readObject(ObjectInputStream.java:431)
	at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
	at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:108)
	at org.apache.spark.rpc.netty.NettyRpcEnv$$anonfun$deserialize$1$$anonfun$apply$1.apply(NettyRpcEnv.scala:271)
	at scala.util.DynamicVariable.withValue(DynamicVariable.scala:58)
	at org.apache.spark.rpc.netty.NettyRpcEnv.deserialize(NettyRpcEnv.scala:320)
	at org.apache.spark.rpc.netty.NettyRpcEnv$$anonfun$deserialize$1.apply(NettyRpcEnv.scala:270)
	at scala.util.DynamicVariable.withValue(DynamicVariable.scala:58)
	at org.apache.spark.rpc.netty.NettyRpcEnv.deserialize(NettyRpcEnv.scala:269)
	at org.apache.spark.rpc.netty.RequestMessage$.apply(NettyRpcEnv.scala:611)
	at org.apache.spark.rpc.netty.NettyRpcHandler.internalReceive(NettyRpcEnv.scala:662)
	at org.apache.spark.rpc.netty.NettyRpcHandler.receive(NettyRpcEnv.scala:654)
	at org.apache.spark.network.server.TransportRequestHandler.processOneWayMessage(TransportRequestHandler.java:274)
	at org.apache.spark.network.server.TransportRequestHandler.handle(TransportRequestHandler.java:105)
	at org.apache.spark.network.server.TransportChannelHandler.channelRead(TransportChannelHandler.java:118)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
	at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
	at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:102)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
	at org.apache.spark.network.util.TransportFrameDecoder.channelRead(TransportFrameDecoder.java:85)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
	at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1359)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
	at
```

[GitHub] [incubator-hudi] jackwang2 commented on issue #764: Hoodie 0.4.7: Error upserting bucketType UPDATE for partition #, No value present

jackwang2 commented on issue #764: Hoodie 0.4.7: Error upserting bucketType UPDATE for partition #, No value present
URL: https://github.com/apache/incubator-hudi/issues/764#issuecomment-517089256

@n3nash No, I didn't. The main logic is just global deduplication, and the code is pasted below:

df.dropDuplicates(recordKey)
  .write
  .format("com.uber.hoodie")
  .mode(SaveMode.Append)
  .option(HoodieWriteConfig.TABLE_NAME, tableName)
  .option(HoodieIndexConfig.INDEX_TYPE_PROP, HoodieIndex.IndexType.GLOBAL_BLOOM.name)
  .option(DataSourceWriteOptions.RECORDKEY_FIELD_OPT_KEY, recordKey)
  .option(DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY, partitionCol)
  .option(DataSourceWriteOptions.OPERATION_OPT_KEY, DataSourceWriteOptions.INSERT_OPERATION_OPT_VAL)
  .option(DataSourceWriteOptions.STORAGE_TYPE_OPT_KEY, storageType)
  .option(DataSourceWriteOptions.PRECOMBINE_FIELD_OPT_KEY, preCombineCol)
  .option("hoodie.consistency.check.enabled", "true")
  .option("hoodie.parquet.small.file.limit", 1024 * 1024 * 128)
  .save(tgtFilePath)

Thanks,

Jack

On Thu, Aug 1, 2019 at 9:01 AM n3nash wrote:

> It looks like the "Not an Avro data file" exception is thrown when there
> is a 0 byte stream read into the datafilereader as can be seen here :
> https://github.com/apache/avro/blob/master/lang/java/avro/src/main/java/org/apache/avro/file/DataFileReader.java#L55
> and here :
> https://github.com/apache/avro/blob/master/lang/java/avro/src/main/java/org/apache/avro/file/DataFileConstants.java#L29
>
> From the stack trace (by tracing the line numbers), it looks like the
> CLEAN file is failing to be archived. I looked at the clean logic and we do
> create clean files even when we don't have anything to clean but that does
> not result in a 0 bytes file, it still has some valid avro data. I'm
> wondering if this has anything to do with any sort of race condition
> leading to archiving running when clean is a 0 sized file.
>
> @jackwang2 How are you running the cleaner
> and the archival process ? Are you explicitly doing anything there ?



[GitHub] [incubator-hudi] n3nash commented on issue #764: Hoodie 0.4.7: Error upserting bucketType UPDATE for partition #, No value present

n3nash commented on issue #764: Hoodie 0.4.7: Error upserting bucketType UPDATE for partition #, No value present URL: https://github.com/apache/incubator-hudi/issues/764#issuecomment-517076737 It looks like the "Not an Avro data file" exception is thrown when there is a 0 byte stream read into the datafilereader as can be seen here : https://github.com/apache/avro/blob/master/lang/java/avro/src/main/java/org/apache/avro/file/DataFileReader.java#L55 and here : https://github.com/apache/avro/blob/master/lang/java/avro/src/main/java/org/apache/avro/file/DataFileConstants.java#L29 From the stack trace (by tracing the line numbers), it looks like the CLEAN file is failing to be archived. I looked at the clean logic and we do create clean files even when we don't have anything to clean but that does not result in a 0 bytes file, it still has some valid avro data. I'm wondering if this has anything to do with any sort of race condition leading to archiving running when clean is a 0 sized file. @jackwang2 How are you running the cleaner and the archival process ? Are you explicitly doing anything there ?

[GitHub] [incubator-hudi] n3nash edited a comment on issue #764: Hoodie 0.4.7: Error upserting bucketType UPDATE for partition #, No value present

n3nash edited a comment on issue #764: Hoodie 0.4.7: Error upserting bucketType UPDATE for partition #, No value present URL: https://github.com/apache/incubator-hudi/issues/764#issuecomment-517076737 It looks like the "Not an Avro data file" exception is thrown when there is a 0 byte stream read into the datafilereader as can be seen here : https://github.com/apache/avro/blob/master/lang/java/avro/src/main/java/org/apache/avro/file/DataFileReader.java#L55 and here : https://github.com/apache/avro/blob/master/lang/java/avro/src/main/java/org/apache/avro/file/DataFileConstants.java#L29 From the stack trace (by tracing the line numbers), it looks like the CLEAN file is failing to be archived. I looked at the clean logic and we do create clean files even when we don't have anything to clean, but that does not result in a 0 bytes file; it still has some valid avro data. Although we need to stop creating a clean file when there is nothing to clean, that by itself still doesn't result in the error. I'm wondering if this has anything to do with any sort of race condition leading to archiving running when clean is a 0 sized file. @jackwang2 How are you running the cleaner and the archival process ? Are you explicitly doing anything there ?
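The zero-byte case described in the comment above can be illustrated with a minimal, standalone sketch. It does not call the Avro library; it only mirrors the idea that an Avro container file must begin with the four magic bytes `O`, `b`, `j`, `1`, so an empty stream can never pass the check. The class and method names (`AvroMagicCheck`, `startsWithAvroMagic`) are hypothetical and chosen for illustration only.

```java
import java.util.Arrays;

public class AvroMagicCheck {
    // Avro object container files start with these four bytes: 'O', 'b', 'j', 1.
    static final byte[] MAGIC = {'O', 'b', 'j', 1};

    /** Returns true only if the content begins with the full magic header. */
    public static boolean startsWithAvroMagic(byte[] content) {
        // A 0 byte (or otherwise too short) file cannot contain the header.
        if (content == null || content.length < MAGIC.length) {
            return false;
        }
        byte[] header = Arrays.copyOfRange(content, 0, MAGIC.length);
        return Arrays.equals(header, MAGIC);
    }

    public static void main(String[] args) {
        byte[] emptyCleanFile = new byte[0];           // stands in for a 0 sized clean file
        byte[] validPrefix = {'O', 'b', 'j', 1, 0};    // header followed by one more byte
        System.out.println(startsWithAvroMagic(emptyCleanFile)); // prints "false"
        System.out.println(startsWithAvroMagic(validPrefix));    // prints "true"
    }
}
```

A check along these lines, run before archiving, would be one way to tell a half-written or empty clean file apart from a valid one; whether that fits the archival code path is not something the thread confirms.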

[GitHub] [incubator-hudi] tweise commented on issue #816: [HUDI-121] Add lresende signing key to KEYS file

tweise commented on issue #816: [HUDI-121] Add lresende signing key to KEYS file URL: https://github.com/apache/incubator-hudi/pull/816#issuecomment-517066426 The KEYS file needs to be added to the dist area, not here.

[GitHub] [incubator-hudi] bvaradar commented on a change in pull request #816: [HUDI-121] Add lresende signing key to KEYS file

bvaradar commented on a change in pull request #816: [HUDI-121] Add lresende signing key to KEYS file URL: https://github.com/apache/incubator-hudi/pull/816#discussion_r309469510
## File path: KEYS ##
@@ -126,3 +126,286 @@ txTq7YpleWQhcz9+9Fruu7jA+l1pSUJSR0+DZegBOq+zWIHcZSTbAnfOX+jYySYd
lsw/
=GJFW
-END PGP PUBLIC KEY BLOCK-
+pub dsa1024 2007-06-30 [SC]
+ 50D7C82AC19334EA3C75699AF39F187DEFB55DF1
+uid [ unknown] Luciano Resende (Code Signing Key)
+sig 3F39F187DEFB55DF1 2007-06-30 Luciano Resende (Code Signing Key)
+sig 2F4268EE7F2EFD0F0 2010-11-05 Christopher David Schultz (Christopher David Schultz)
Review comment: @lresende : Not sure if sig entries for other users must be present. Can you remove them if not needed ?

[GitHub] [incubator-hudi] lresende opened a new pull request #816: [HUDI-121] Add lresende signing key to KEYS file

lresende opened a new pull request #816: [HUDI-121] Add lresende signing key to KEYS file URL: https://github.com/apache/incubator-hudi/pull/816

svn commit: r35085 - /dev/incubator/hudi/

Author: lresende Date: Wed Jul 31 22:45:37 2019 New Revision: 35085 Log: Adding release staging directory for Hudi Added: dev/incubator/hudi/

svn commit: r35084 - /release/incubator/hudi/

Author: lresende Date: Wed Jul 31 22:44:48 2019 New Revision: 35084 Log: Adding release directory for Hudi Added: release/incubator/hudi/

[GitHub] [incubator-hudi] vinothchandar closed pull request #803: [WIP] [Not For Merging] Demo automation with pom dep order fixes from PR-780

vinothchandar closed pull request #803: [WIP] [Not For Merging] Demo automation with pom dep order fixes from PR-780 URL: https://github.com/apache/incubator-hudi/pull/803

[GitHub] [incubator-hudi] vinothchandar commented on issue #803: [WIP] [Not For Merging] Demo automation with pom dep order fixes from PR-780

vinothchandar commented on issue #803: [WIP] [Not For Merging] Demo automation with pom dep order fixes from PR-780 URL: https://github.com/apache/incubator-hudi/pull/803#issuecomment-517043325 have this code and #780 both testing in `pom-bundle-cleanup` branch.. Closing this. Will open a new one when ready.

[GitHub] [incubator-hudi] vinothchandar commented on issue #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links

vinothchandar commented on issue #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links URL: https://github.com/apache/incubator-hudi/pull/815#issuecomment-517042646 No, I have not seen them. It's auto-generated content, so maybe it reflects the localhost name or IP (0.0.0.0).

[GitHub] [incubator-hudi] vinothchandar merged pull request #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links

vinothchandar merged pull request #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links URL: https://github.com/apache/incubator-hudi/pull/815

[incubator-hudi] branch asf-site updated: Fix formatting for new content in Writing Data page. Update hudi.incubator.apache.org website (#815)

This is an automated email from the ASF dual-hosted git repository.
vinoth pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-hudi.git
The following commit(s) were added to refs/heads/asf-site by this push:
     new 3190d6d Fix formatting for new content in Writing Data page. Update hudi.incubator.apache.org website (#815)
3190d6d is described below
commit 3190d6d59f2292f265e5bdbf6cffb40264c5252d
Author: Balaji Varadarajan
AuthorDate: Wed Jul 31 15:15:00 2019 -0700
    Fix formatting for new content in Writing Data page. Update hudi.incubator.apache.org website (#815)
---
 content/404.html             | 13 +-
 content/admin_guide.html     | 13 +-
 content/community.html       | 13 +-
 content/comparison.html      | 13 +-
 content/concepts.html        | 13 +-
 content/configurations.html  | 13 +-
 content/contributing.html    | 13 +-
 content/docker_demo.html     | 13 +-
 content/feed.xml             | 20
 content/gcs_hoodie.html      | 13 +-
 content/index.html           | 13 +-
 content/js/mydoc_scroll.html | 13 +-
 content/migration_guide.html | 13 +-
 content/news.html            | 13 +-
 content/news_archive.html    | 13 +-
 content/performance.html     | 13 +-
 content/powered_by.html      | 13 +-
 content/privacy.html         | 13 +-
 content/querying_data.html   | 13 +-
 content/quickstart.html      | 13 +-
 content/s3_hoodie.html       | 13 +-
 content/sitemap.xml          | 48 ++---
 content/strata-talk.html     | 15 ++--
 content/use_cases.html       | 13 +-
 content/writing_data.html    | 56 ++--
 docs/writing_data.md         | 14 ++-
 26 files changed, 356 insertions(+), 70 deletions(-)
diff --git a/content/404.html b/content/404.html
index 07dc2e8..dd7c740 100644
--- a/content/404.html
+++ b/content/404.html
@@ -46,7 +46,7 @@
https://oss.maxcdn.com/libs/respond.js/1.4.2/respond.min.js";>

[GitHub] [incubator-hudi] n3nash commented on issue #814: Fix for realtime queries

n3nash commented on issue #814: Fix for realtime queries URL: https://github.com/apache/incubator-hudi/pull/814#issuecomment-517026353 On another note, I debugged the issue with join queries reported here : https://github.com/apache/incubator-hudi/issues/789 and found weird results (nothing to do with the change in this PR or the Hive on Spark fix). Essentially, it looks like due to some Hive join optimizations, only one table format gets picked up (either hoodierealtimeinputformat or hoodieinputformat) when joining 2 tables with different table formats. It may be a bug on our end; I have to dig deeper and will open a different ticket for it. This is just FYI.

[GitHub] [incubator-hudi] n3nash commented on issue #814: Fix for realtime queries

n3nash commented on issue #814: Fix for realtime queries URL: https://github.com/apache/incubator-hudi/pull/814#issuecomment-517025307

The code being removed was added to make Hive on Spark work. Due to a bug in Hive, Hive on Spark does not work seamlessly with RT tables.

> Issue with Hive on Spark (that was fixed by caching some information) :

Hive on Spark allows multiple tasks to run in the same executor. Since the executor/JVM's lifetime is longer than one task, the job conf variable is shared across different file splits. Due to a bug in Hive (see the comments in the HoodieRealtimeInputFormat class), the column ids and column names get messed up: the same column ids and names are added multiple times to the same key in the job conf. As a workaround, https://issues.apache.org/jira/browse/HUDI-151 was added. But this leads to other issues that break the RT queries.

> Current issue :

Ideally, a single query in Hive starts either a MapReduce job or a Spark job. Once the query finishes, the mappers/reducers or the Spark tasks die. As a result, this caching of column_ids and column_names does not carry across different queries. Hence, this works fine in production environments at the moment. In the case of the demo, it seems like this cache is somehow kept across different queries.

> Steps to reproduce the issue :

_Execute the following sequence in step 4(a)_

`0: jdbc:hive2://hiveserver:1> select symbol, max(ts) from stock_ticks_mor_rt group by symbol HAVING symbol = 'GOOG';`

When this is done, the COLUMN_NAMES and COLUMN_IDS for this query are cached as follows :

`COLUMN_NAMES ==> ts,symbol,_hoodie_record_key,_hoodie_commit_time,_hoodie_partition_path`
`COLUMN_IDS ==> 6,7,2,0,3`

_Now run the second query :_

`0: jdbc:hive2://hiveserver:1> select `_hoodie_commit_time`, symbol, ts, volume, open, close from stock_ticks_mor_rt where symbol = 'GOOG';`

At this time, although the projection cols are as follows :

`Projection Column Names => _hoodie_commit_time,volume,ts,symbol,close,open,_hoodie_record_key,_hoodie_partition_path`
`Projection Column Ids => 0,5,6,7,14,15,2,3`

because we cache these values (to fix Hive on Spark), they are replaced with the earlier values (see [here](https://github.com/apache/incubator-hudi/blob/master/hoodie-hadoop-mr/src/main/java/com/uber/hoodie/hadoop/realtime/HoodieRealtimeInputFormat.java#L243)) :

`COLUMN_NAMES ==> ts,symbol,_hoodie_record_key,_hoodie_commit_time,_hoodie_partition_path`
`COLUMN_IDS ==> 6,7,2,0,3`

Notice that the column names and ids for the columns volume, open & close are omitted (they were not part of the first query, so the cached values don't have them). Hence these columns are never read/projected and return NULL.

This happens intermittently and cannot be reproduced every time. My suspicion is that it has to do with which datanode the query runs on and whether that datanode caches the job conf, but I'm not very sure about this. In any case, I'm reverting the change made to have Hive on Spark work. This means Hive on Spark queries will be broken in RT (for some specific types of queries, not all).

Since the docker image does not have a way to debug Hive on Spark queries, I'm figuring out an environment where I can do this (the internal environment that I was using earlier is broken), after which I will find a permanent fix that does not break RT queries and also makes Hive on Spark run. @vinothchandar @bvaradar @bhasudha FYI
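The failure mode in the reproduction steps above can be sketched in isolation. The following is a simplified model, not the actual HoodieRealtimeInputFormat code: it assumes a cache that outlives a single query and always hands back the first projection it saw. The class and method names (`StaleProjectionCache`, `projectWithCache`, `missing`, `reset`) are hypothetical.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

public class StaleProjectionCache {
    // Hypothetical stand-in for the cached COLUMN_NAMES; it outlives one query.
    private static List<String> cachedColumns = null;

    /** Clears the cache, as if the executor/JVM had been restarted. */
    public static void reset() {
        cachedColumns = null;
    }

    /** Modeled bug: the first projection is cached and reused for later queries. */
    public static List<String> projectWithCache(List<String> requested) {
        if (cachedColumns == null) {
            cachedColumns = requested;
        }
        return cachedColumns;
    }

    /** Columns the query asked for that the returned projection lacks. */
    public static Set<String> missing(List<String> requested, List<String> projected) {
        Set<String> result = new LinkedHashSet<>(requested);
        result.removeAll(projected);
        return result;
    }

    public static void main(String[] args) {
        reset();
        List<String> firstQuery = Arrays.asList(
            "ts", "symbol", "_hoodie_record_key", "_hoodie_commit_time", "_hoodie_partition_path");
        List<String> secondQuery = Arrays.asList(
            "_hoodie_commit_time", "volume", "ts", "symbol", "close", "open",
            "_hoodie_record_key", "_hoodie_partition_path");

        projectWithCache(firstQuery);                           // first query fills the cache
        List<String> projected = projectWithCache(secondQuery); // second query gets stale columns
        System.out.println(missing(secondQuery, projected));    // prints "[volume, close, open]"
    }
}
```

In this model the three columns reported as missing are exactly the ones that come back NULL in the second query, which matches the behaviour described in the comment; the real cause of the cache surviving across queries in the demo is still an open question in the thread.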

[GitHub] [incubator-hudi] bvaradar commented on issue #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links

bvaradar commented on issue #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links URL: https://github.com/apache/incubator-hudi/pull/815#issuecomment-517022364 @vinothchandar @n3nash : Fixed some doc formatting in the Writing Data page and updated the website to make the Whimsy website check green. I see some additional changes replacing 0.0.0.0 with localhost. Have you seen this before? I guess this is due to the version change in the tool (bundle).

[GitHub] [incubator-hudi] bvaradar opened a new pull request #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links

bvaradar opened a new pull request #815: HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links URL: https://github.com/apache/incubator-hudi/pull/815 HUDI-186 Fix formatting for new content in Writing Data page. Update website to reflect new apache links

[GitHub] [incubator-hudi] n3nash opened a new pull request #814: Fix for realtime queries

n3nash opened a new pull request #814: Fix for realtime queries URL: https://github.com/apache/incubator-hudi/pull/814 - Fix realtime queries by removing COLUMN_ID and COLUMN_NAME cache in inputformat - These variables were cached to make Hive on Spark work

[incubator-hudi] branch asf-site updated: HUDI-186 : Add missing Apache Links in hudi site

This is an automated email from the ASF dual-hosted git repository.
nagarwal pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/incubator-hudi.git
The following commit(s) were added to refs/heads/asf-site by this push:
     new 018802c HUDI-186 : Add missing Apache Links in hudi site
018802c is described below
commit 018802ca7a2f97a267a4b8a99b0e97bfa6444362
Author: Balaji Varadarajan
AuthorDate: Wed Jul 31 10:50:30 2019 -0700
    HUDI-186 : Add missing Apache Links in hudi site
---
 docs/Gemfile.lock          |  2 +-
 docs/_includes/footer.html | 11 +++
 2 files changed, 12 insertions(+), 1 deletion(-)
diff --git a/docs/Gemfile.lock b/docs/Gemfile.lock
index b72b9b1..acdd9db 100644
--- a/docs/Gemfile.lock
+++ b/docs/Gemfile.lock
@@ -153,4 +153,4 @@ DEPENDENCIES
 jekyll-feed (~> 0.6)
 BUNDLED WITH
- 1.14.3
+ 2.0.1
diff --git a/docs/_includes/footer.html b/docs/_includes/footer.html
index ed02cf6..d1a77c0 100755
--- a/docs/_includes/footer.html
+++ b/docs/_includes/footer.html
@@ -15,5 +15,16 @@
 reflection of the completeness or stability of the code, it does indicate that the project has yet to be fully endorsed by the ASF.
+
+
+https://incubator.apache.org/;> Apache Incubator
+https://www.apache.org/;> About the ASF
+https://www.apache.org/events/current-event;> Events
+https://www.apache.org/foundation/thanks.html;> Thanks
+https://www.apache.org/foundation/sponsorship.html;> Become a Sponsor
+https://www.apache.org/security/;> Security
+https://www.apache.org/licenses/;> License
+
+

[GitHub] [incubator-hudi] n3nash merged pull request #813: HUDI-186 : Add missing Apache Links in hudi site

n3nash merged pull request #813: HUDI-186 : Add missing Apache Links in hudi site URL: https://github.com/apache/incubator-hudi/pull/813

[GitHub] [incubator-hudi] bvaradar commented on issue #813: HUDI-186 : Add missing Apache Links in hudi site

bvaradar commented on issue #813: HUDI-186 : Add missing Apache Links in hudi site URL: https://github.com/apache/incubator-hudi/pull/813#issuecomment-517005269 @vinothchandar @n3nash : Checked by running the website locally. Needed for making website checks all green.

[GitHub] [incubator-hudi] bvaradar opened a new pull request #813: HUDI-186 : Add missing Apache Links in hudi site

bvaradar opened a new pull request #813: HUDI-186 : Add missing Apache Links in hudi site URL: https://github.com/apache/incubator-hudi/pull/813

[GitHub] [incubator-hudi] n3nash commented on issue #812: KryoException: Unable to find class

n3nash commented on issue #812: KryoException: Unable to find class URL: https://github.com/apache/incubator-hudi/issues/812#issuecomment-516965553 This looks more like a Spark issue. Whenever Spark shuffles data and Kryo is chosen for serialization, Java objects have to be registered with Kryo under a name. It looks like Kryo is unable to find that object under the name and hence throws class not found. Does your application change over time in any way?

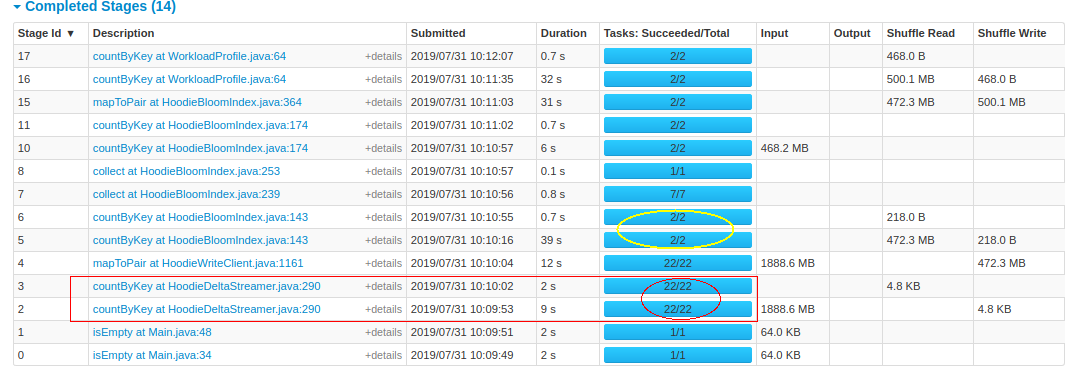

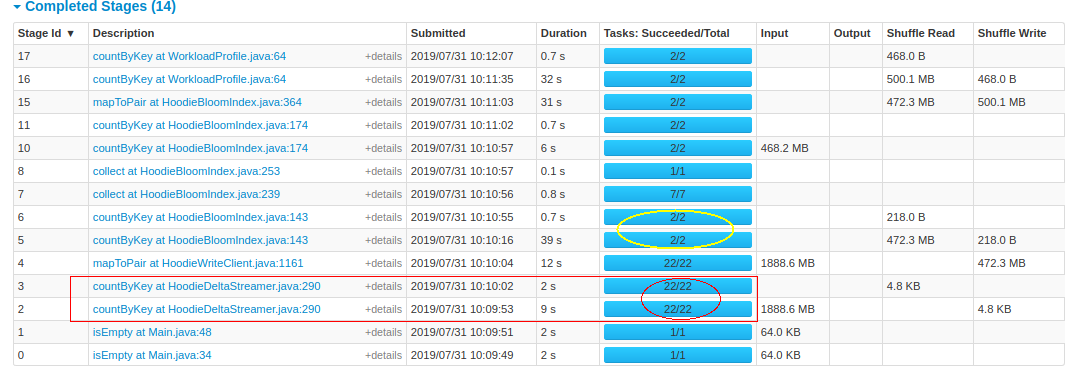

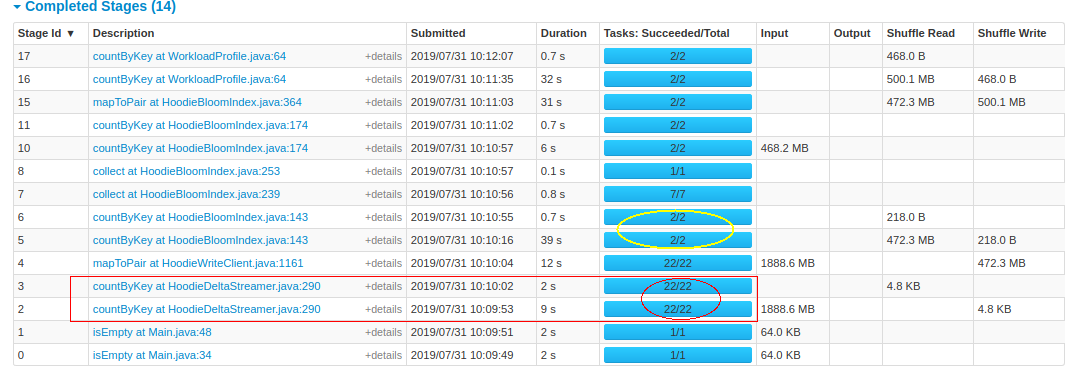

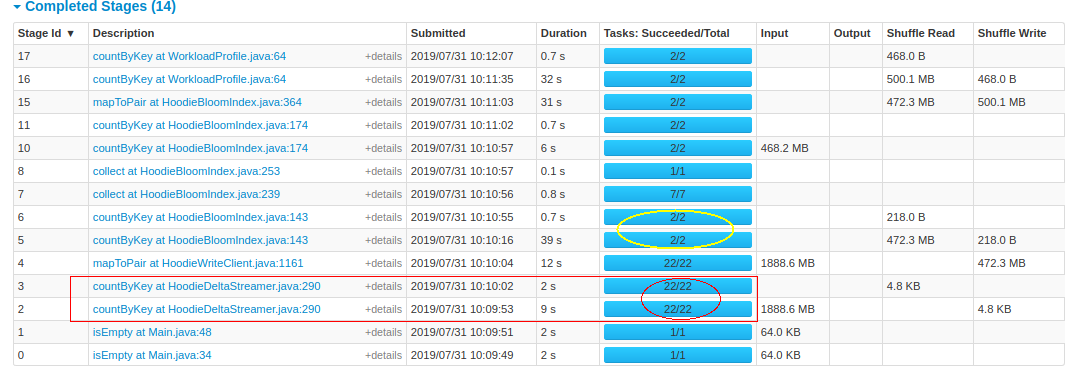

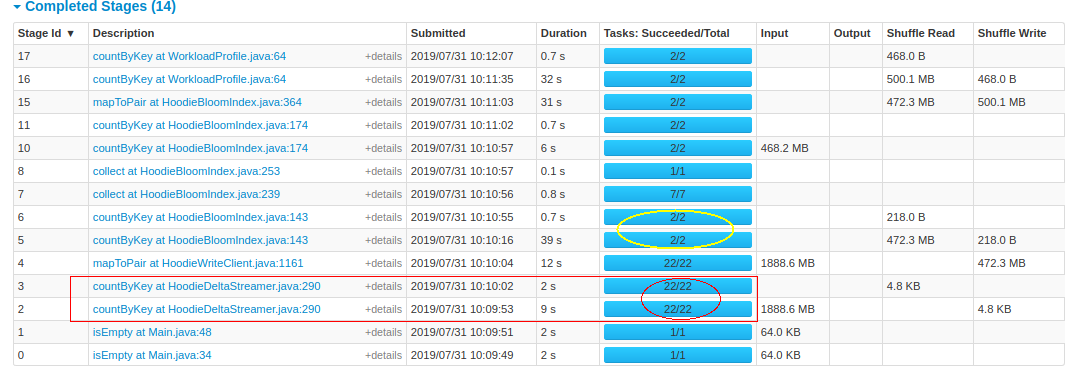

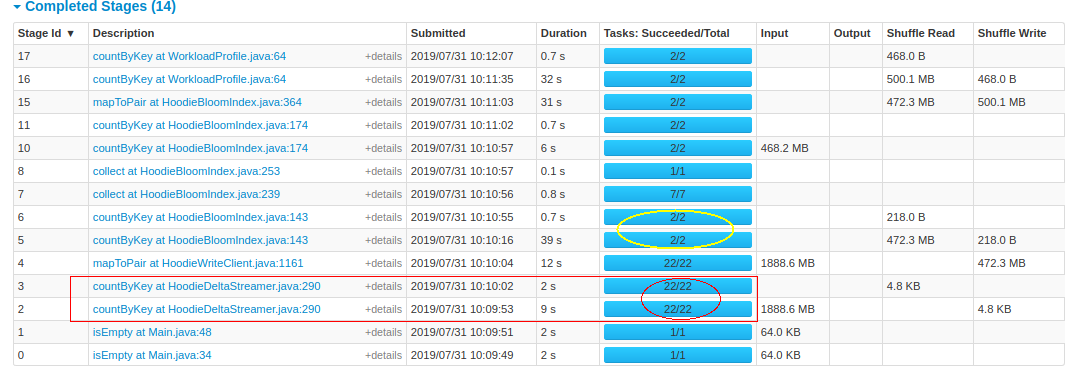

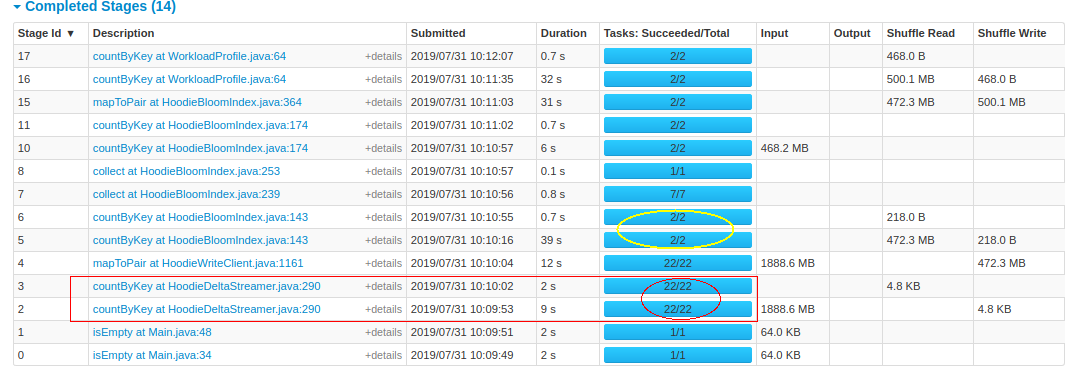

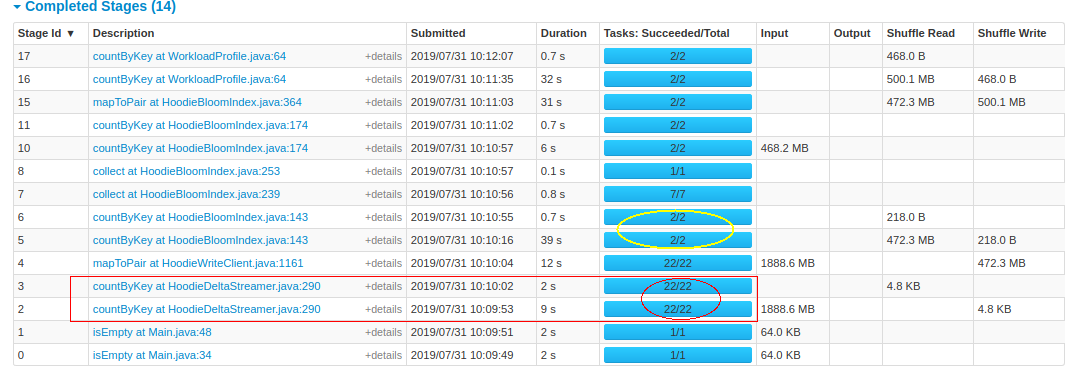

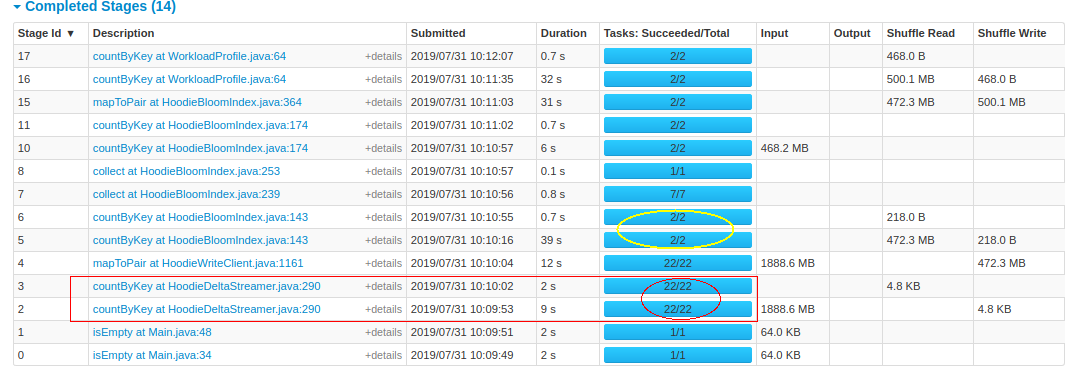

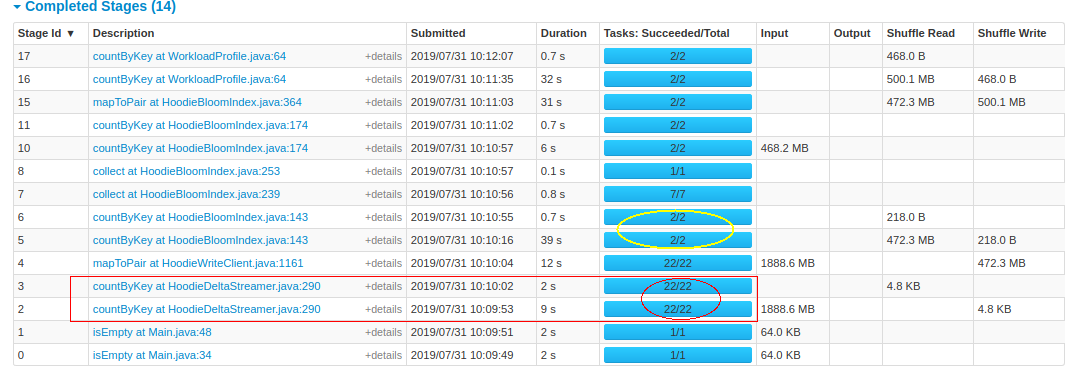

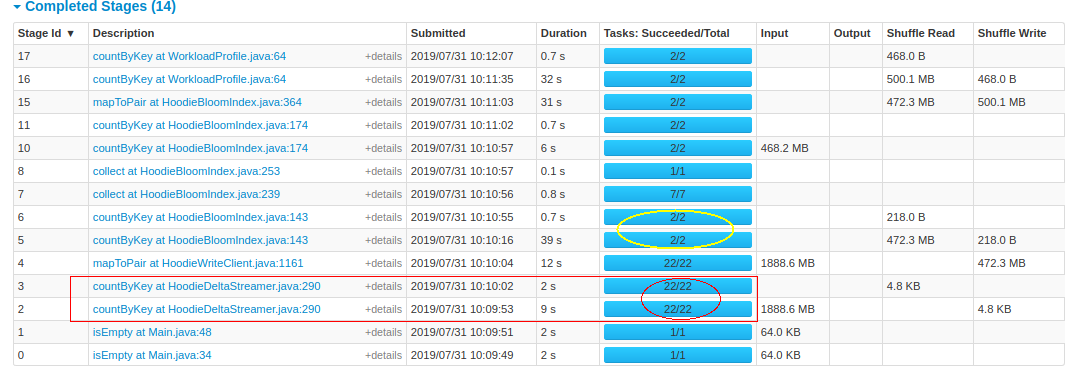

[GitHub] [incubator-hudi] NetsanetGeb edited a comment on issue #714: Performance Comparison of HoodieDeltaStreamer and DataSourceAPI

NetsanetGeb edited a comment on issue #714: Performance Comparison of HoodieDeltaStreamer and DataSourceAPI URL: https://github.com/apache/incubator-hudi/issues/714#issuecomment-516753477 After I switched to hoodie version 0.4.6, the performance improved and the job now takes 4 minutes. I also added code similar to the countByKey to the HoodieDeltaStreamer class, to count the records and check why it takes so long in HoodieBloomIndex; that count took about 9 seconds, while the countByKey in HoodieBloomIndex still takes 39 seconds. The difference seems to come from parallelism: the first countByKey runs with a parallelism of 22 and the one in HoodieBloomIndex with 2, as observed from the Spark UI below. The effect is clearly seen as we increase the size of the input data from 2 GB to 27 GB. Stages 2, 3, and 4 used the 90 executors as provided and then decreased the number accordingly, while for stage 5 only 2 executors were running from the start. How do we increase the parallelism of the bloom index, given that hoodie calculates it internally without it needing to be set as a configuration? In general, are there specific ways to improve the performance of bloom indexing?

[GitHub] [incubator-hudi] vinothchandar commented on a change in pull request #805: fix DeltaStreamer writeConfig

vinothchandar commented on a change in pull request #805: fix DeltaStreamer writeConfig

URL: https://github.com/apache/incubator-hudi/pull/805#discussion_r309183898

##
File path: hoodie-utilities/src/main/java/com/uber/hoodie/utilities/deltastreamer/DeltaSync.java
##
@@ -453,14 +453,16 @@ private HoodieWriteConfig getHoodieClientConfig(SchemaProvider schemaProvider) {
 HoodieWriteConfig.Builder builder = HoodieWriteConfig.newBuilder()
 .withProps(props)
-.withPath(cfg.targetBasePath)
-.combineInput(cfg.filterDupes, true)
 .withCompactionConfig(HoodieCompactionConfig.newBuilder()
+.fromProperties(HoodieIndexConfig.newBuilder()

Review comment:

Yeah, that's probably the confusion I had before as well :) .. It's at least simpler to explain than saying "oh, this prop is overridden, this one is not". As long as we document it, it should be fine?

https://github.com/apache/incubator-hudi/tree/asf-site


[GitHub] [incubator-hudi] bhasudha commented on issue #789: Demo : Unexpected result in some queries

bhasudha commented on issue #789: Demo : Unexpected result in some queries URL: https://github.com/apache/incubator-hudi/issues/789#issuecomment-516820770 Oh this could also be causing the join issue then?

[GitHub] [incubator-hudi] vinothchandar commented on issue #789: Demo : Unexpected result in some queries

vinothchandar commented on issue #789: Demo : Unexpected result in some queries

URL: https://github.com/apache/incubator-hudi/issues/789#issuecomment-516813828

With the static variables that were introduced disabled, I can get the query to work now..

```
diff --git a/hoodie-hadoop-mr/src/main/java/com/uber/hoodie/hadoop/realtime/HoodieRealtimeInputFormat.java b/hoodie-hadoop-mr/src/main/java/com/uber/hoodie/hadoop/realtime/HoodieRealtimeInputFormat.java
index 14263738..00c36e26 100644
--- a/hoodie-hadoop-mr/src/main/java/com/uber/hoodie/hadoop/realtime/HoodieRealtimeInputFormat.java
+++ b/hoodie-hadoop-mr/src/main/java/com/uber/hoodie/hadoop/realtime/HoodieRealtimeInputFormat.java
@@ -208,11 +208,13 @@ public class HoodieRealtimeInputFormat extends HoodieInputFormat implements Conf
         HOODIE_COMMIT_TIME_COL_POS);
     configuration = addProjectionField(configuration, HoodieRecord.PARTITION_PATH_METADATA_FIELD,
         HOODIE_PARTITION_PATH_COL_POS);
+/*
     if (!isReadColumnsSet) {
       READ_COLUMN_IDS = configuration.get(ColumnProjectionUtils.READ_COLUMN_IDS_CONF_STR);
       READ_COLUMN_NAMES = configuration.get(ColumnProjectionUtils.READ_COLUMN_NAMES_CONF_STR);
       isReadColumnsSet = true;
     }
+*/
     return configuration;
   }
@@ -241,8 +243,8 @@ public class HoodieRealtimeInputFormat extends HoodieInputFormat implements Conf
         + split);
     // Reset the original column ids and names
-    job.set(ColumnProjectionUtils.READ_COLUMN_IDS_CONF_STR, READ_COLUMN_IDS);
-    job.set(ColumnProjectionUtils.READ_COLUMN_NAMES_CONF_STR, READ_COLUMN_NAMES);
+    /*job.set(ColumnProjectionUtils.READ_COLUMN_IDS_CONF_STR, READ_COLUMN_IDS);
+    job.set(ColumnProjectionUtils.READ_COLUMN_NAMES_CONF_STR, READ_COLUMN_NAMES);*/
     return new HoodieRealtimeRecordReader((HoodieRealtimeFileSplit) split, job,
         super.getRecordReader(split, job, reporter));
```

Those static variables seem to fix things for some Hive version while breaking Hive 2.x.. I don't recall the reasoning behind it. @n3nash ? But at a high level, caching these values across queries in static variables does not make a ton of sense to me.. @bvaradar as well for input
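The concern about caching projection values in static variables across queries can be illustrated with a small, self-contained sketch. This is not the Hudi code: the class, the `read.column.names` key, and the `Map` standing in for the job configuration are all hypothetical, and the sketch only models one plausible way a JVM-wide cache could hand a later query the projection of an earlier one.

```java
import java.util.HashMap;
import java.util.Map;

public class StaticProjectionCacheSketch {
    // Hypothetical key, standing in for ColumnProjectionUtils.READ_COLUMN_NAMES_CONF_STR.
    static final String READ_COLUMN_NAMES_KEY = "read.column.names";

    // JVM-wide state, modelled on the static READ_COLUMN_NAMES / isReadColumnsSet pair in the diff.
    static String cachedReadColumnNames;
    static boolean isReadColumnsSet = false;

    /** Caches the projection on the first call, then always writes the cached value back. */
    static String readerColumnsWithStaticCache(Map<String, String> job) {
        if (!isReadColumnsSet) {
            cachedReadColumnNames = job.get(READ_COLUMN_NAMES_KEY);
            isReadColumnsSet = true;
        }
        // "Reset the original column ids and names": overwrites what this query asked for.
        job.put(READ_COLUMN_NAMES_KEY, cachedReadColumnNames);
        return job.get(READ_COLUMN_NAMES_KEY);
    }

    /** Without the cache, each query keeps its own projection. */
    static String readerColumnsWithoutCache(Map<String, String> job) {
        return job.get(READ_COLUMN_NAMES_KEY);
    }

    /** Builds a fresh per-query configuration with the given projection. */
    static Map<String, String> jobWithColumns(String columns) {
        Map<String, String> job = new HashMap<>();
        job.put(READ_COLUMN_NAMES_KEY, columns);
        return job;
    }

    public static void main(String[] args) {
        // First query in this JVM asks for two columns.
        System.out.println(readerColumnsWithStaticCache(jobWithColumns("ts,symbol")));
        // A later query asks for more columns but gets the first query's projection back.
        System.out.println(readerColumnsWithStaticCache(jobWithColumns("volume,ts,symbol,close,open")));
        // Without the static cache the second query sees its own columns.
        System.out.println(readerColumnsWithoutCache(jobWithColumns("volume,ts,symbol,close,open")));
    }
}
```

In a long-lived process such as HiveServer2, static fields outlive a single query, which is why per-query values held there can go stale.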


[GitHub] [incubator-hudi] vinothchandar commented on issue #789: Demo : Unexpected result in some queries

vinothchandar commented on issue #789: Demo : Unexpected result in some queries URL: https://github.com/apache/incubator-hudi/issues/789#issuecomment-516807719

I can reproduce this actually, and what I see is that the user columns are removed from the column projection list before being passed on to AbstractRealtimeRecordReader?

```
2019-07-31T10:37:19,108 INFO [d71b59a8-15fd-4339-a60b-158fa67a3901 HiveServer2-Handler-Pool: Thread-45]: realtime.HoodieRealtimeInputFormat (HoodieRealtimeInputFormat.java:getRecordReader(223)) - Before adding Hoodie columns, Projections :_hoodie_commit_time,volume,ts,symbol,close,open, Ids :0,5,6,7,14,15
2019-07-31T10:37:19,108 INFO [d71b59a8-15fd-4339-a60b-158fa67a3901 HiveServer2-Handler-Pool: Thread-45]: realtime.HoodieRealtimeInputFormat (HoodieRealtimeInputFormat.java:getRecordReader(235)) - Creating record reader with readCols :_hoodie_commit_time,volume,ts,symbol,close,open,_hoodie_record_key,_hoodie_partition_path, Ids :0,5,6,7,14,15,2,3
2019-07-31T10:37:19,200 INFO [d71b59a8-15fd-4339-a60b-158fa67a3901 HiveServer2-Handler-Pool: Thread-45]: realtime.AbstractRealtimeRecordReader (AbstractRealtimeRecordReader.java:(97)) - cfg ==> ts,symbol,_hoodie_record_key,_hoodie_commit_time,_hoodie_partition_path
2019-07-31T10:37:19,200 INFO [d71b59a8-15fd-4339-a60b-158fa67a3901 HiveServer2-Handler-Pool: Thread-45]: realtime.AbstractRealtimeRecordReader (AbstractRealtimeRecordReader.java:(98)) - columnIds ==> 6,7,2,0,3
2019-07-31T10:37:19,200 INFO [d71b59a8-15fd-4339-a60b-158fa67a3901 HiveServer2-Handler-Pool: Thread-45]: realtime.AbstractRealtimeRecordReader (AbstractRealtimeRecordReader.java:(99)) - partitioningColumns ==> dt
```

Seems like a regression to me.. is HUDI-151 related?
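To make the dropped columns in the log above explicit, the two projection lists can be compared as sets. The sketch below is only an illustration: the class and method names are hypothetical, and the two strings are copied from the `readCols` and `cfg ==>` log lines.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.Set;

public class ProjectionDiffSketch {
    /** Returns the columns present in requestedCsv but absent from receivedCsv, in request order. */
    static Set<String> missingColumns(String requestedCsv, String receivedCsv) {
        Set<String> missing = new LinkedHashSet<>(Arrays.asList(requestedCsv.split(",")));
        missing.removeAll(Arrays.asList(receivedCsv.split(",")));
        return missing;
    }

    public static void main(String[] args) {
        // "Creating record reader with readCols" line from HoodieRealtimeInputFormat.
        String requested =
            "_hoodie_commit_time,volume,ts,symbol,close,open,_hoodie_record_key,_hoodie_partition_path";
        // "cfg ==>" line from AbstractRealtimeRecordReader.
        String received = "ts,symbol,_hoodie_record_key,_hoodie_commit_time,_hoodie_partition_path";
        System.out.println(missingColumns(requested, received)); // prints [volume, close, open]
    }
}
```

The difference is exactly the user columns `volume`, `close`, and `open`, which matches the observation that user columns are lost before the record reader is constructed.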

[GitHub] [incubator-hudi] vinothchandar commented on issue #811: HUDI-182 : Adding HoodieCombineHiveInputFormat for COW tables

vinothchandar commented on issue #811: HUDI-182 : Adding HoodieCombineHiveInputFormat for COW tables URL: https://github.com/apache/incubator-hudi/pull/811#issuecomment-516801178 @n3nash I think checkstyle is failing? What Hive version does this correspond to? Can we also document that in the class comments?

[GitHub] [incubator-hudi] arw357 opened a new issue #812: KryoException: Unable to find class

arw357 opened a new issue #812: KryoException: Unable to find class URL: https://github.com/apache/incubator-hudi/issues/812

I get the exception at the end while trying to upsert the same file more than 12 times (not sure why 12). The error is given for every partition (6 of them, I just pasted the 4th one). I am using the same SparkSession to do the upserts. It does not happen if I increase `hoodie.cleaner.commits.retained`. The place where it crashes is the collect part of this piece of code from HoodieCopyOnWriteTable:

```
List> partitionCleanStats = jsc
    .parallelize(partitionsToClean, cleanerParallelism)
    .flatMapToPair(getFilesToDeleteFunc(this, config))
    .repartition(cleanerParallelism) // repartition to remove skews
    .mapPartitionsToPair(deleteFilesFunc(this)).reduceByKey(
        // merge partition level clean stats below
        (Function2) (e1, e2) -> e1
            .merge(e2)).collect();
```

```
09:26:09.181 [task-result-getter-2] ERROR org.apache.spark.scheduler.TaskResultGetter - Exception while getting task result
com.esotericsoftware.kryo.KryoException: Unable to find class: hdfs://namenode:8020/test/20190731-091411-373/1564557251826_551/converted/A/4/2c5790b6-eb12-4c15-a84a-f287d9cd9984_1_20190731091435.parquetA/4
Serialization trace:
deletePathPatterns (com.uber.hoodie.table.HoodieCopyOnWriteTable$PartitionCleanStat)
    at com.esotericsoftware.kryo.util.DefaultClassResolver.readName(DefaultClassResolver.java:160)
    at com.esotericsoftware.kryo.util.DefaultClassResolver.readClass(DefaultClassResolver.java:133)
    at com.esotericsoftware.kryo.Kryo.readClass(Kryo.java:693)
    at com.esotericsoftware.kryo.Kryo.readClassAndObject(Kryo.java:804)
    at com.esotericsoftware.kryo.serializers.CollectionSerializer.read(CollectionSerializer.java:134)
    at com.esotericsoftware.kryo.serializers.CollectionSerializer.read(CollectionSerializer.java:40)
    at com.esotericsoftware.kryo.Kryo.readObject(Kryo.java:731)
    at com.esotericsoftware.kryo.serializers.ObjectField.read(ObjectField.java:125)
    at com.esotericsoftware.kryo.serializers.FieldSerializer.read(FieldSerializer.java:543)
    at com.esotericsoftware.kryo.Kryo.readClassAndObject(Kryo.java:813)
    at com.twitter.chill.Tuple2Serializer.read(TupleSerializers.scala:42)
    at com.twitter.chill.Tuple2Serializer.read(TupleSerializers.scala:33)
    at com.esotericsoftware.kryo.Kryo.readObject(Kryo.java:731)
    at com.esotericsoftware.kryo.serializers.DefaultArraySerializers$ObjectArraySerializer.read(DefaultArraySerializers.java:391)
    at com.esotericsoftware.kryo.serializers.DefaultArraySerializers$ObjectArraySerializer.read(DefaultArraySerializers.java:302)
    at com.esotericsoftware.kryo.Kryo.readClassAndObject(Kryo.java:813)
    at org.apache.spark.serializer.KryoSerializerInstance.deserialize(KryoSerializer.scala:362)
    at org.apache.spark.scheduler.DirectTaskResult.value(TaskResult.scala:88)
    at org.apache.spark.scheduler.TaskResultGetter$$anon$3$$anonfun$run$1.apply$mcV$sp(TaskResultGetter.scala:72)
    at org.apache.spark.scheduler.TaskResultGetter$$anon$3$$anonfun$run$1.apply(TaskResultGetter.scala:63)
    at org.apache.spark.scheduler.TaskResultGetter$$anon$3$$anonfun$run$1.apply(TaskResultGetter.scala:63)
    at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1991)
    at org.apache.spark.scheduler.TaskResultGetter$$anon$3.run(TaskResultGetter.scala:62)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.ClassNotFoundException: hdfs://namenode:8020/test/20190731-091411-373/1564557251826_551/converted/A/4/2c5790b6-eb12-4c15-a84a-f287d9cd9984_1_20190731091435.parquetA/4
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at com.esotericsoftware.kryo.util.DefaultClassResolver.readName(DefaultClassResolver.java:154)
    ... 25 more
[error]org.apache.spark.SparkException: Job aborted due to stage failure: Exception while getting task result: com.esotericsoftware.kryo.KryoException: Unable to find class: hdfs://namenode:8020/test/20190731-091411-373/1564557251826_551/converted/A/4/2c5790b6-eb12-4c15-a84a-f287d9cd9984_1_20190731091435.parquetA/4
[error]Serialization trace:
[error]deletePathPatterns (com.uber.hoodie.table.HoodieCopyOnWriteTable$PartitionCleanStat) (DAGScheduler.scala:1602)
[error] org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1602)
```
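The last frames of the trace show `DefaultClassResolver.readName` calling `Class.forName` with an HDFS file path where a class name was expected. A minimal sketch of just that final step, using only the JDK, is below. The class and method names are hypothetical and the path is shortened for illustration; this is not Kryo's code, and it does not explain why a path ended up in that position in the serialized stream.

```java
public class ClassNameLookupSketch {
    /** Looks a class up by name, as a name-based resolver would, and reports the outcome. */
    static String resolve(String name) {
        try {
            return "found " + Class.forName(name).getName();
        } catch (ClassNotFoundException e) {
            // Mirrors the wording of the KryoException message in the report.
            return "Unable to find class: " + name;
        }
    }

    public static void main(String[] args) {
        // A real class name resolves normally.
        System.out.println(resolve("java.lang.String"));
        // A file path (shortened, illustrative) is not a loadable class name.
        System.out.println(resolve("hdfs://namenode:8020/test/some-file.parquet"));
    }
}
```

The second call fails in the same way as the `Caused by: java.lang.ClassNotFoundException` entry in the trace, which suggests the deserializer read string data at a point where it expected a class name.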

[GitHub] [incubator-hudi] cdmikechen commented on issue #774: Matching question of the version in Spark and Hive2

cdmikechen commented on issue #774: Matching question of the version in Spark

and Hive2

URL: https://github.com/apache/incubator-hudi/issues/774#issuecomment-516722052

Another things. I found that Hive2 use log4j2 and spark use log4j. If I

commit a spark task like

```bash

spark-submit --class xx.Main --jars

xxx.jar,hoodie-spark-bundle-0.4.8-SNAPSHOT.jar xxx/sparkserver.jar

```

It will report error:

```log
ERROR StatusLogger Unrecognized format specifier [d]
ERROR StatusLogger Unrecognized conversion specifier [d] starting at position 16 in conversion pattern.
ERROR StatusLogger Unrecognized format specifier [thread]
ERROR StatusLogger Unrecognized conversion specifier [thread] starting at position 25 in conversion pattern.
ERROR StatusLogger Unrecognized format specifier [level]
ERROR StatusLogger Unrecognized conversion specifier [level] starting at position 35 in conversion pattern.
ERROR StatusLogger Unrecognized format specifier [logger]
ERROR StatusLogger Unrecognized conversion specifier [logger] starting at position 47 in conversion pattern.
ERROR StatusLogger Unrecognized format specifier [msg]
ERROR StatusLogger Unrecognized conversion specifier [msg] starting at position 54 in conversion pattern.
ERROR StatusLogger Unrecognized format specifier [n]
ERROR StatusLogger Unrecognized conversion specifier [n] starting at position 56 in conversion pattern.
Exception in thread "main" java.lang.AbstractMethodError: org.apache.logging.log4j.core.config.ConfigurationFactory.getConfiguration(Lorg/apache/logging/log4j/core/config/ConfigurationSource;)Lorg/apache/logging/log4j/core/config/Configuration;
	at org.apache.logging.log4j.core.config.ConfigurationFactory$Factory.getConfiguration(ConfigurationFactory.java:509)
	at org.apache.logging.log4j.core.config.ConfigurationFactory$Factory.getConfiguration(ConfigurationFactory.java:449)
...
...
```

So I need to exclude some Hive dependencies in the pom, like

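```xml
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-jdbc</artifactId>
  <version>${hive.version}</version>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty.aggregate</groupId>
      <artifactId>jetty-all</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.apache.logging.log4j</groupId>
      <artifactId>log4j-1.2-api</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.apache.logging.log4j</groupId>
      <artifactId>log4j-web</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.apache.logging.log4j</groupId>
      <artifactId>log4j-slf4j-impl</artifactId>
    </exclusion>
    <exclusion>
      <groupId>${hive.groupid}</groupId>
      <artifactId>hive-exec</artifactId>
    </exclusion>
  </exclusions>
</dependency>
```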

After that, Spark works.
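An `AbstractMethodError` in `ConfigurationFactory` usually points to classes from mismatched log4j2 jars being mixed on the classpath. One way to check which jar supplies each logging class is a small probe like the sketch below. `ClasspathProbe` and `locate` are hypothetical names introduced here for illustration, not part of Hudi, Hive, or Spark; only the class names being looked up come from the trace above or from the log4j libraries themselves.

```java
import java.security.CodeSource;

// Hypothetical diagnostic helper: reports which jar (if any) provides a class.
class ClasspathProbe {

    /** Returns the location a class is loaded from, or a marker string. */
    static String locate(String className) {
        try {
            // initialize=false: look the class up without running its static initializers
            Class<?> cls = Class.forName(className, false, ClasspathProbe.class.getClassLoader());
            CodeSource src = cls.getProtectionDomain().getCodeSource();
            if (src == null || src.getLocation() == null) {
                return "<bootstrap or unknown>";
            }
            return src.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "<not on classpath>";
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "org.apache.logging.log4j.core.config.ConfigurationFactory", // log4j2 core, from the trace
            "org.apache.logging.log4j.LogManager",                       // log4j2 API
            "org.apache.log4j.Logger"                                    // log4j 1.x
        };
        for (String name : names) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

Running it with the same classpath as the failing job (for example, by adding the same `--jars`) should show whether the log4j2 classes come from the Hive jars or from somewhere else, which helps decide what to exclude.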


[GitHub] [incubator-hudi] cdmikechen edited a comment on issue #774: Matching question of the version in Spark and Hive2

cdmikechen edited a comment on issue #774: Matching question of the version in Spark and Hive2

URL: https://github.com/apache/incubator-hudi/issues/774#issuecomment-516719772

@vinothchandar I think we should use Spark's built-in functionality as the standard. That means we use the spark-hive libs when building but exclude them when packaging. If we need Spark to connect to Hive to sync some tables, we can use `SparkSession.builder().enableHiveSupport()`, which lets Spark open a Hive client connection itself, so we don't need to import `hoodie-hive` in Spark.

[GitHub] [incubator-hudi] cdmikechen commented on issue #774: Matching question of the version in Spark and Hive2

cdmikechen commented on issue #774: Matching question of the version in Spark and Hive2

URL: https://github.com/apache/incubator-hudi/issues/774#issuecomment-516719772

I think we should use Spark's built-in functionality as the standard. That means we use the spark-hive libs when building but exclude them when packaging. If we need Spark to connect to Hive to sync some tables, we can use `SparkSession.builder().enableHiveSupport()`, which lets Spark open a Hive client connection itself, so we don't need to import `hoodie-hive` in Spark.

[GitHub] [incubator-hudi] arw357 commented on issue #590: Failed to APPEND_FILE /HUDI_MOR/2019/03/01/.abca886a-e213-4199-b571-475919d34fe5_20190301123358.log.1 for DFSClient_NONMAPREDUCE_-263902482_1

arw357 commented on issue #590: Failed to APPEND_FILE /HUDI_MOR/2019/03/01/.abca886a-e213-4199-b571-475919d34fe5_20190301123358.log.1 for DFSClient_NONMAPREDUCE_-263902482_1 on 172.29.0.10 because lease recovery is in progress

URL: https://github.com/apache/incubator-hudi/issues/590#issuecomment-516717346

Hey @n3nash, the datanode (only one) is alive and kicking; I can use it for other ingestions with no issue.

```
Configured Capacity: 749.96 GB
Configured Remote Capacity: 0 B
DFS Used: 748.36 MB (0.1%)
Non DFS Used: 105.37 GB
DFS Remaining: 605.7 GB (80.76%)
Block Pool Used: 748.36 MB (0.1%)
DataNodes usages% (Min/Median/Max/stdDev): 0.10% / 0.10% / 0.10% / 0.00%
Live Nodes 1 (Decommissioned: 0, In Maintenance: 0)
Dead Nodes 0 (Decommissioned: 0, In Maintenance: 0)
```