[jira] [Created] (HUDI-3132) Minor fixes for HoodieCatalog

Danny Chen created HUDI-3132: Summary: Minor fixes for HoodieCatalog Key: HUDI-3132 URL: https://issues.apache.org/jira/browse/HUDI-3132 Project: Apache Hudi Issue Type: Bug Components: Flink Integration Reporter: Danny Chen Fix For: 0.11.0, 0.10.1 -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Assigned] (HUDI-2661) java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.catalog.CatalogTable.copy

[

https://issues.apache.org/jira/browse/HUDI-2661?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yann Byron reassigned HUDI-2661:

Assignee: Forward Xu (was: Yann Byron)

> java.lang.NoSuchMethodError:

> org.apache.spark.sql.catalyst.catalog.CatalogTable.copy

>

>

> Key: HUDI-2661

> URL: https://issues.apache.org/jira/browse/HUDI-2661

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Affects Versions: 0.10.0

>Reporter: Changjun Zhang

>Assignee: Forward Xu

>Priority: Critical

> Fix For: 0.11.0, 0.10.1

>

> Attachments: image-2021-11-01-21-47-44-538.png,

> image-2021-11-01-21-48-22-765.png

>

>

> Hudi Integrate with Spark SQL :

> when I add :

> {code:sh}

> // Some comments here

> spark-sql --conf

> 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \

> --conf

> 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'

> {code}

> to create a table on an existing hudi table:

> {code:sql}

> create table testdb.tb_hudi_operation_test using hudi

> location '/tmp/flinkdb/datas/tb_hudi_operation';

> {code}

> then throw Exception :

> !image-2021-11-01-21-47-44-538.png|thumbnail!

> !image-2021-11-01-21-48-22-765.png|thumbnail!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Assigned] (HUDI-1850) Read on table fails if the first write to table failed

[

https://issues.apache.org/jira/browse/HUDI-1850?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yann Byron reassigned HUDI-1850:

Assignee: sivabalan narayanan (was: Yann Byron)

> Read on table fails if the first write to table failed

> --

>

> Key: HUDI-1850

> URL: https://issues.apache.org/jira/browse/HUDI-1850

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Affects Versions: 0.8.0

>Reporter: Vaibhav Sinha

>Assignee: sivabalan narayanan

>Priority: Major

> Labels: core-flow-ds, pull-request-available, release-blocker,

> sev:high, spark

> Fix For: 0.11.0, 0.10.1

>

> Attachments: Screenshot 2021-04-24 at 7.53.22 PM.png

>

>

> {code:java}

> ava.util.NoSuchElementException: No value present in Option

> at org.apache.hudi.common.util.Option.get(Option.java:88)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.common.table.TableSchemaResolver.getTableSchemaFromCommitMetadata(TableSchemaResolver.java:215)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.common.table.TableSchemaResolver.getTableAvroSchema(TableSchemaResolver.java:166)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.common.table.TableSchemaResolver.getTableAvroSchema(TableSchemaResolver.java:155)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.MergeOnReadSnapshotRelation.(MergeOnReadSnapshotRelation.scala:65)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:99)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:63)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:354)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at

> org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:326)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at

> org.apache.spark.sql.DataFrameReader.$anonfun$load$3(DataFrameReader.scala:308)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at scala.Option.getOrElse(Option.scala:189)

> ~[scala-library-2.12.10.jar:?]

> at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:308)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:240)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> {code}

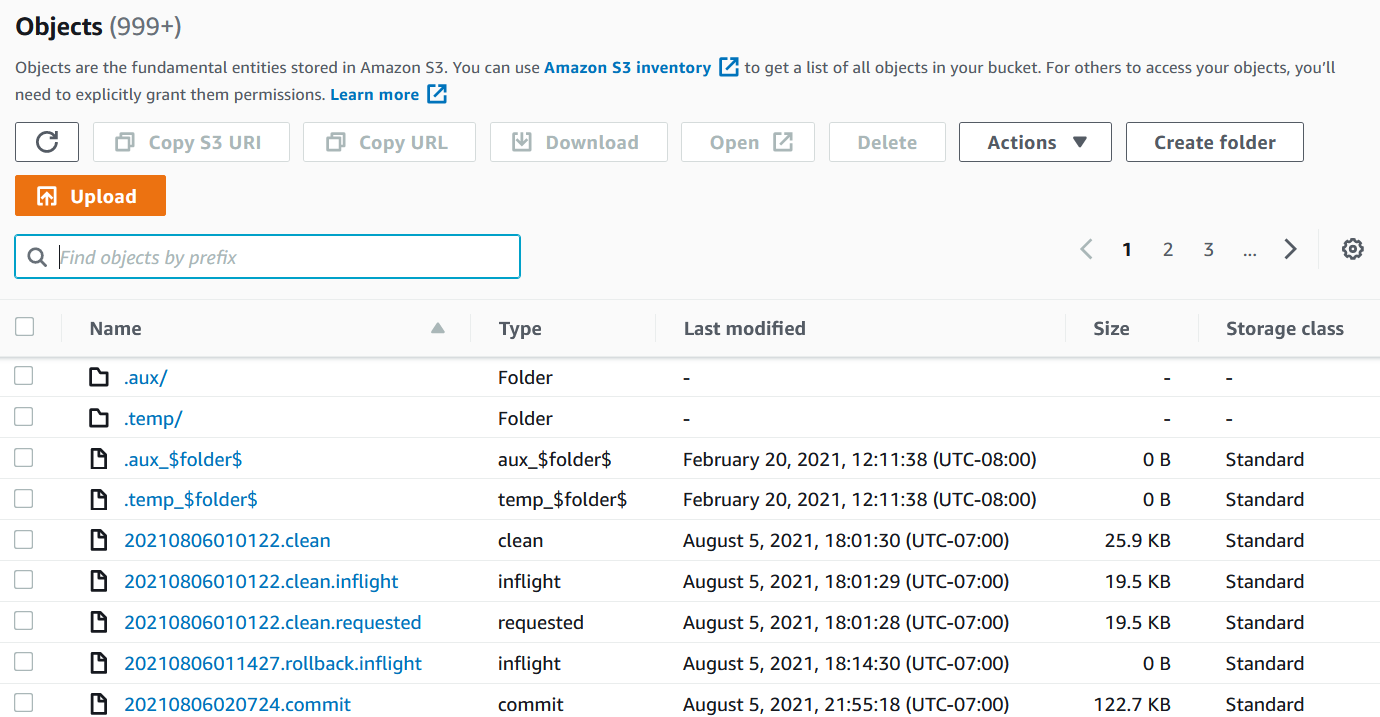

> The screenshot shows the files that got created before the write had failed.

>

> !Screenshot 2021-04-24 at 7.53.22 PM.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [hudi] vinothchandar commented on pull request #3173: [HUDI-1951] Add bucket hash index, compatible with the hive bucket

vinothchandar commented on pull request #3173: URL: https://github.com/apache/hudi/pull/3173#issuecomment-1002904375 ``` [INFO] Tests run: 1, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 125.714 s - in org.apache.hudi.integ.command.ITTestHoodieSyncCommand [ERROR] Tests run: 2, Failures: 1, Errors: 0, Skipped: 1, Time elapsed: 29.812 s <<< FAILURE! - in org.apache.hudi.integ.ITTestHoodieDemo [ERROR] org.apache.hudi.integ.ITTestHoodieDemo.testParquetDemo Time elapsed: 29.622 s <<< FAILURE! org.opentest4j.AssertionFailedError: Command ([hdfs, dfsadmin, -safemode, wait]) expected to succeed. Exit (255) ==> expected: <0> but was: <255> at org.apache.hudi.integ.ITTestHoodieDemo.setupDemo(ITTestHoodieDemo.java:167) at org.apache.hudi.integ.ITTestHoodieDemo.testParquetDemo(ITTestHoodieDemo.java:107) ``` This keeps failing. Could you rebase again with latest master? want to try running the tests again -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-2901) Fixed the bug clustering jobs are not running in parallel

[ https://issues.apache.org/jira/browse/HUDI-2901?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2901: - Sprint: Hudi-Sprint-0.10.1 > Fixed the bug clustering jobs are not running in parallel > - > > Key: HUDI-2901 > URL: https://issues.apache.org/jira/browse/HUDI-2901 > Project: Apache Hudi > Issue Type: Bug > Components: Performance >Affects Versions: 0.9.0 > Environment: spark2.4.5 >Reporter: tao meng >Assignee: tao meng >Priority: Critical > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > > Fixed the bug clustering jobs are not running in parasllel。 > [https://github.com/apache/hudi/issues/4135] > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2901) Fixed the bug clustering jobs are not running in parallel

[ https://issues.apache.org/jira/browse/HUDI-2901?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2901: - Story Points: 1 > Fixed the bug clustering jobs are not running in parallel > - > > Key: HUDI-2901 > URL: https://issues.apache.org/jira/browse/HUDI-2901 > Project: Apache Hudi > Issue Type: Bug > Components: Performance >Affects Versions: 0.9.0 > Environment: spark2.4.5 >Reporter: tao meng >Assignee: tao meng >Priority: Critical > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > > Fixed the bug clustering jobs are not running in parasllel。 > [https://github.com/apache/hudi/issues/4135] > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2938) Code Refactor: Metadata util to get latest file slices for readers and writers

[ https://issues.apache.org/jira/browse/HUDI-2938?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2938: - Issue Type: Improvement (was: Task) > Code Refactor: Metadata util to get latest file slices for readers and writers > -- > > Key: HUDI-2938 > URL: https://issues.apache.org/jira/browse/HUDI-2938 > Project: Apache Hudi > Issue Type: Improvement >Reporter: Manoj Govindassamy >Assignee: Manoj Govindassamy >Priority: Major > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > > Need to address review comments for > https://issues.apache.org/jira/browse/HUDI-2923 > https://github.com/apache/hudi/pull/4206/files -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Assigned] (HUDI-281) HiveSync failure through Spark when useJdbc is set to false

[

https://issues.apache.org/jira/browse/HUDI-281?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu reassigned HUDI-281:

---

Assignee: (was: Raymond Xu)

> HiveSync failure through Spark when useJdbc is set to false

> ---

>

> Key: HUDI-281

> URL: https://issues.apache.org/jira/browse/HUDI-281

> Project: Apache Hudi

> Issue Type: Improvement

> Components: Hive Integration, Spark Integration, Usability

>Reporter: Udit Mehrotra

>Priority: Major

> Labels: query-eng, user-support-issues

> Fix For: 0.11.0, 0.10.1

>

>

> Table creation with Hive sync through Spark fails, when I set *useJdbc* to

> *false*. Currently I had to modify the code to set *useJdbc* to *false* as

> there is not *DataSourceOption* through which I can specify this field when

> running Hudi code.

> Here is the failure:

> {noformat}

> java.lang.NoSuchMethodError:

> org.apache.hadoop.hive.ql.session.SessionState.start(Lorg/apache/hudi/org/apache/hadoop_hive/conf/HiveConf;)Lorg/apache/hadoop/hive/ql/session/SessionState;

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQLs(HoodieHiveClient.java:527)

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQLUsingHiveDriver(HoodieHiveClient.java:517)

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQL(HoodieHiveClient.java:507)

> at

> org.apache.hudi.hive.HoodieHiveClient.createTable(HoodieHiveClient.java:272)

> at org.apache.hudi.hive.HiveSyncTool.syncSchema(HiveSyncTool.java:132)

> at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:96)

> at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:68)

> at

> org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)

> at

> org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)

> at

> org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:156)

> at

> org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

> at

> org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

> at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

> at

> org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

> at

> org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

> at

> org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

> at

> org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

> at

> org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

> at

> org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

> at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

> at

> org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229){noformat}

> I was expecting this to fail through Spark, becuase *hive-exec* is not shaded

> inside *hudi-spark-bundle*, while *HiveConf* is shaded and relocated. This

> *SessionState* is coming from the spark-hive jar and obviously it does not

> accept the relocated *HiveConf*.

> We in *EMR* are running into same problem when trying to integrate with Glue

> Catalog. For this we have to create Hive metastore client through

> *Hive.get(conf).getMsc()* instead of how it is being down now, so that

> alternate implementations of metastore can get created. However, because

> hive-exec is not shaded but HiveConf is relocated we run into same issues

> there.

> It would not be recommended to shade *hive-exec* either because it itself is

> an Uber jar that shades a lot of things, and all of them would end up in

> *hudi-spark-bundle* jar. We would not want to head that route.

[GitHub] [hudi] harsh1231 commented on a change in pull request #4404: [HUDI-2558] Fixing Clustering w/ sort columns with null values fails

harsh1231 commented on a change in pull request #4404:

URL: https://github.com/apache/hudi/pull/4404#discussion_r776596935

##

File path:

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RDDCustomColumnsSortPartitioner.java

##

@@ -55,8 +55,17 @@ public RDDCustomColumnsSortPartitioner(String[] columnNames,

Schema schema) {

final String[] sortColumns = this.sortColumnNames;

final SerializableSchema schema = this.serializableSchema;

return records.sortBy(

-record -> HoodieAvroUtils.getRecordColumnValues(record, sortColumns,

schema),

+record -> {

+ Object recordValue = HoodieAvroUtils.getRecordColumnValues(record,

sortColumns, schema);

+ // null values are replaced with empty string for null_first order

+ if (recordValue == null) {

+return "";

Review comment:

Will update using `StringUtils.EMPTY_STRING`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] harsh1231 commented on a change in pull request #4404: [HUDI-2558] Fixing Clustering w/ sort columns with null values fails

harsh1231 commented on a change in pull request #4404:

URL: https://github.com/apache/hudi/pull/4404#discussion_r776596753

##

File path:

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RDDCustomColumnsSortPartitioner.java

##

@@ -55,8 +55,17 @@ public RDDCustomColumnsSortPartitioner(String[] columnNames,

Schema schema) {

final String[] sortColumns = this.sortColumnNames;

final SerializableSchema schema = this.serializableSchema;

return records.sortBy(

-record -> HoodieAvroUtils.getRecordColumnValues(record, sortColumns,

schema),

+record -> {

+ Object recordValue = HoodieAvroUtils.getRecordColumnValues(record,

sortColumns, schema);

+ // null values are replaced with empty string for null_first order

+ if (recordValue == null) {

+return "";

Review comment:

`if (columns.length == 1) {

return HoodieAvroUtils.getNestedFieldVal(genericRecord, columns[0],

true);

}` this nested trace can return data type other than string from

`convertValueForAvroLogicalTypes`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Updated] (HUDI-2946) Upgrade maven plugin to make Hudi be compatible with higher Java versions

[

https://issues.apache.org/jira/browse/HUDI-2946?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-2946:

-

Fix Version/s: (was: 0.10.1)

> Upgrade maven plugin to make Hudi be compatible with higher Java versions

> -

>

> Key: HUDI-2946

> URL: https://issues.apache.org/jira/browse/HUDI-2946

> Project: Apache Hudi

> Issue Type: Improvement

>Reporter: Wenning Ding

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.11.0

>

>

> I saw several issues while building Hudi w/ Java 11:

>

> {{[ERROR] Failed to execute goal

> org.apache.maven.plugins:maven-jar-plugin:2.6:test-jar (default) on project

> hudi-common: Execution default of goal

> org.apache.maven.plugins:maven-jar-plugin:2.6:test-jar failed: An API

> incompatibility was encountered while executing

> org.apache.maven.plugins:maven-jar-plugin:2.6:test-jar:

> java.lang.ExceptionInInitializerError: null[ERROR] Failed to execute goal

> org.apache.maven.plugins:maven-shade-plugin:3.1.1:shade (default) on project

> hudi-hadoop-mr-bundle: Error creating shaded jar: Problem shading JAR

> /workspace/workspace/rchertar.bigtop.hudi-rpm-mainline-6.x-0.9.0/build/hudi/rpm/BUILD/hudi-0.9.0-amzn-1-SNAPSHOT/packaging/hudi-hadoop-mr-bundle/target/hudi-hadoop-mr-bundle-0.9.0-amzn-1-SNAPSHOT.jar

> entry org/apache/hudi/hadoop/bundle/Main.class:

> java.lang.IllegalArgumentException -> [Help 1]}}

>

> We need to upgrade maven plugin versions to make it be compatible with Java

> 11.

> Also upgrade dockerfile-maven-plugin to latest versions to support Java 11

> [https://github.com/spotify/dockerfile-maven/pull/230]

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-2426) spark sql extensions breaks read.table from metastore

[

https://issues.apache.org/jira/browse/HUDI-2426?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-2426:

-

Story Points: 1

> spark sql extensions breaks read.table from metastore

> -

>

> Key: HUDI-2426

> URL: https://issues.apache.org/jira/browse/HUDI-2426

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Reporter: nicolas paris

>Assignee: Yann Byron

>Priority: Critical

> Labels: sev:critical, user-support-issues

> Fix For: 0.11.0, 0.10.1

>

>

> when adding the hudi spark sql support, this breaks the ability to read a

> hudi metastore from spark:

> bash-4.2$ ./spark3.0.2/bin/spark-shell --packages

> org.apache.hudi:hudi-spark3-bundle_2.12:0.9.0,org.apache.spark:spark-avro_2.12:3.1.2

> --conf "spark.serializer=org.apache.spark.serializer.KryoSerializer" --conf

> 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'

>

> scala> spark.table("default.test_hudi_table").show

> java.lang.UnsupportedOperationException: Unsupported parseMultipartIdentifier

> method

> at

> org.apache.spark.sql.parser.HoodieCommonSqlParser.parseMultipartIdentifier(HoodieCommonSqlParser.scala:65)

> at org.apache.spark.sql.SparkSession.table(SparkSession.scala:581)

> ... 47 elided

>

> removing the config makes the hive table readable again from spark

> this affect at least spark 3.0.x and 3.1.x

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-2611) `create table if not exists` should print message instead of throwing error

[ https://issues.apache.org/jira/browse/HUDI-2611?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2611: - Story Points: 1 > `create table if not exists` should print message instead of throwing error > --- > > Key: HUDI-2611 > URL: https://issues.apache.org/jira/browse/HUDI-2611 > Project: Apache Hudi > Issue Type: Bug > Components: Spark Integration >Reporter: Raymond Xu >Assignee: Yann Byron >Priority: Critical > Labels: user-support-issues > Fix For: 0.11.0, 0.10.1 > > > See details in > https://github.com/apache/hudi/issues/3845#issue-1033218877 -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2661) java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.catalog.CatalogTable.copy

[

https://issues.apache.org/jira/browse/HUDI-2661?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-2661:

-

Story Points: 1

> java.lang.NoSuchMethodError:

> org.apache.spark.sql.catalyst.catalog.CatalogTable.copy

>

>

> Key: HUDI-2661

> URL: https://issues.apache.org/jira/browse/HUDI-2661

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Affects Versions: 0.10.0

>Reporter: Changjun Zhang

>Assignee: Yann Byron

>Priority: Critical

> Fix For: 0.11.0, 0.10.1

>

> Attachments: image-2021-11-01-21-47-44-538.png,

> image-2021-11-01-21-48-22-765.png

>

>

> Hudi Integrate with Spark SQL :

> when I add :

> {code:sh}

> // Some comments here

> spark-sql --conf

> 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \

> --conf

> 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'

> {code}

> to create a table on an existing hudi table:

> {code:sql}

> create table testdb.tb_hudi_operation_test using hudi

> location '/tmp/flinkdb/datas/tb_hudi_operation';

> {code}

> then throw Exception :

> !image-2021-11-01-21-47-44-538.png|thumbnail!

> !image-2021-11-01-21-48-22-765.png|thumbnail!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-2915) Fix field not found in record error for spark-sql

[ https://issues.apache.org/jira/browse/HUDI-2915?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2915: - Story Points: 1 (was: 2) > Fix field not found in record error for spark-sql > - > > Key: HUDI-2915 > URL: https://issues.apache.org/jira/browse/HUDI-2915 > Project: Apache Hudi > Issue Type: Bug > Components: Spark Integration >Reporter: Raymond Xu >Assignee: Forward Xu >Priority: Critical > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > Attachments: image-2021-12-02-19-37-10-346.png > > > !image-2021-12-02-19-37-10-346.png! -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-1850) Read on table fails if the first write to table failed

[

https://issues.apache.org/jira/browse/HUDI-1850?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-1850:

-

Story Points: 1

> Read on table fails if the first write to table failed

> --

>

> Key: HUDI-1850

> URL: https://issues.apache.org/jira/browse/HUDI-1850

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Affects Versions: 0.8.0

>Reporter: Vaibhav Sinha

>Assignee: Yann Byron

>Priority: Major

> Labels: core-flow-ds, pull-request-available, release-blocker,

> sev:high, spark

> Fix For: 0.11.0, 0.10.1

>

> Attachments: Screenshot 2021-04-24 at 7.53.22 PM.png

>

>

> {code:java}

> ava.util.NoSuchElementException: No value present in Option

> at org.apache.hudi.common.util.Option.get(Option.java:88)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.common.table.TableSchemaResolver.getTableSchemaFromCommitMetadata(TableSchemaResolver.java:215)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.common.table.TableSchemaResolver.getTableAvroSchema(TableSchemaResolver.java:166)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.common.table.TableSchemaResolver.getTableAvroSchema(TableSchemaResolver.java:155)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.hudi.MergeOnReadSnapshotRelation.(MergeOnReadSnapshotRelation.scala:65)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:99)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:63)

> ~[hudi-spark3-bundle_2.12-0.8.0.jar:0.8.0]

> at

> org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:354)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at

> org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:326)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at

> org.apache.spark.sql.DataFrameReader.$anonfun$load$3(DataFrameReader.scala:308)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at scala.Option.getOrElse(Option.scala:189)

> ~[scala-library-2.12.10.jar:?]

> at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:308)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:240)

> ~[spark-sql_2.12-3.1.1.jar:3.1.1]

> {code}

> The screenshot shows the files that got created before the write had failed.

>

> !Screenshot 2021-04-24 at 7.53.22 PM.png!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-3100) Hive Conditional sync cannot be set from deltastreamer

[ https://issues.apache.org/jira/browse/HUDI-3100?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-3100: - Story Points: 1 > Hive Conditional sync cannot be set from deltastreamer > -- > > Key: HUDI-3100 > URL: https://issues.apache.org/jira/browse/HUDI-3100 > Project: Apache Hudi > Issue Type: Bug > Components: DeltaStreamer, Hive Integration >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2915) Fix field not found in record error for spark-sql

[ https://issues.apache.org/jira/browse/HUDI-2915?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2915: - Story Points: 2 > Fix field not found in record error for spark-sql > - > > Key: HUDI-2915 > URL: https://issues.apache.org/jira/browse/HUDI-2915 > Project: Apache Hudi > Issue Type: Bug > Components: Spark Integration >Reporter: Raymond Xu >Assignee: Forward Xu >Priority: Critical > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > Attachments: image-2021-12-02-19-37-10-346.png > > > !image-2021-12-02-19-37-10-346.png! -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2966) Add TaskCompletionListener for HoodieMergeOnReadRDD to close logScanner

[ https://issues.apache.org/jira/browse/HUDI-2966?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2966: - Story Points: 1 > Add TaskCompletionListener for HoodieMergeOnReadRDD to close logScanner > --- > > Key: HUDI-2966 > URL: https://issues.apache.org/jira/browse/HUDI-2966 > Project: Apache Hudi > Issue Type: Improvement > Components: Spark Integration >Reporter: tao meng >Assignee: tao meng >Priority: Major > Labels: core-flow-ds, pull-request-available, sev:high > Fix For: 0.11.0, 0.10.1 > > > Add TaskCompletionListener for HoodieMergeOnReadRDD to close logScanner When > the query is completed。 -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-3107) Fix HiveSyncTool drop partitions using JDBC

[ https://issues.apache.org/jira/browse/HUDI-3107?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-3107: - Story Points: 1 > Fix HiveSyncTool drop partitions using JDBC > --- > > Key: HUDI-3107 > URL: https://issues.apache.org/jira/browse/HUDI-3107 > Project: Apache Hudi > Issue Type: Bug > Components: Hive Integration >Reporter: Yue Zhang >Assignee: Yue Zhang >Priority: Major > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > > ``` > org.apache.hudi.exception.HoodieException: Unable to delete table partitions > in /Users/yuezhang/tmp/hudiAfTable/forecast_agg > at > org.apache.hudi.utilities.HoodieDropPartitionsTool.run(HoodieDropPartitionsTool.java:240) > at > org.apache.hudi.utilities.HoodieDropPartitionsTool.main(HoodieDropPartitionsTool.java:212) > at HoodieDropPartitionsToolTest.main(HoodieDropPartitionsToolTest.java:31) > Caused by: org.apache.hudi.exception.HoodieException: Got runtime exception > when hive syncing forecast_agg > at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:119) > at > org.apache.hudi.utilities.HoodieDropPartitionsTool.syncHive(HoodieDropPartitionsTool.java:404) > at > org.apache.hudi.utilities.HoodieDropPartitionsTool.syncToHiveIfNecessary(HoodieDropPartitionsTool.java:270) > at > org.apache.hudi.utilities.HoodieDropPartitionsTool.doDeleteTablePartitionsEager(HoodieDropPartitionsTool.java:252) > at > org.apache.hudi.utilities.HoodieDropPartitionsTool.run(HoodieDropPartitionsTool.java:230) > ... 2 more > Caused by: org.apache.hudi.hive.HoodieHiveSyncException: Failed to sync > partitions for table forecast_agg > at org.apache.hudi.hive.HiveSyncTool.syncPartitions(HiveSyncTool.java:368) > at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:202) > at org.apache.hudi.hive.HiveSyncTool.doSync(HiveSyncTool.java:130) > at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:116) > ... 6 more > Caused by: org.apache.hudi.hive.HoodieHiveSyncException: Failed in executing > SQL ALTER TABLE `forecast_agg` DROP PARTITION (20210623/0/20210623) > at org.apache.hudi.hive.ddl.JDBCExecutor.runSQL(JDBCExecutor.java:64) > at java.util.stream.ForEachOps$ForEachOp$OfRef.accept(ForEachOps.java:184) > at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193) > at > java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1382) > at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482) > at > java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472) > at > java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:151) > at > java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:174) > at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234) > at java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:418) > at > org.apache.hudi.hive.ddl.JDBCExecutor.dropPartitionsToTable(JDBCExecutor.java:149) > at > org.apache.hudi.hive.HoodieHiveClient.dropPartitionsToTable(HoodieHiveClient.java:130) > at org.apache.hudi.hive.HiveSyncTool.syncPartitions(HiveSyncTool.java:363) > ... 9 more > Caused by: org.apache.hive.service.cli.HiveSQLException: Error while > compiling statement: FAILED: ParseException line 1:43 cannot recognize input > near '20210623' '/' '0' in drop partition statement > at org.apache.hive.jdbc.Utils.verifySuccess(Utils.java:256) > at org.apache.hive.jdbc.Utils.verifySuccessWithInfo(Utils.java:242) > at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:254) > at org.apache.hudi.hive.ddl.JDBCExecutor.runSQL(JDBCExecutor.java:62) > ... 21 more > Caused by: org.apache.hive.service.cli.HiveSQLException: Error while > compiling statement: FAILED: ParseException line 1:43 cannot recognize input > near '20210623' '/' '0' in drop partition statement > at > org.apache.hive.service.cli.operation.Operation.toSQLException(Operation.java:380) > at > org.apache.hive.service.cli.operation.SQLOperation.prepare(SQLOperation.java:206) > at > org.apache.hive.service.cli.operation.SQLOperation.runInternal(SQLOperation.java:290) > at org.apache.hive.service.cli.operation.Operation.run(Operation.java:320) > at > org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementInternal(HiveSessionImpl.java:530) > at > org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementAsync(HiveSessionImpl.java:517) > at > org.apache.hive.service.cli.CLIService.executeStatementAsync(CLIService.java:310) > at > org.apache.hive.service.cli.thrift.ThriftCLIService.ExecuteStatement(ThriftCLIService.java:530) > at >

[jira] [Updated] (HUDI-281) HiveSync failure through Spark when useJdbc is set to false

[

https://issues.apache.org/jira/browse/HUDI-281?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-281:

Story Points: 1

> HiveSync failure through Spark when useJdbc is set to false

> ---

>

> Key: HUDI-281

> URL: https://issues.apache.org/jira/browse/HUDI-281

> Project: Apache Hudi

> Issue Type: Improvement

> Components: Hive Integration, Spark Integration, Usability

>Reporter: Udit Mehrotra

>Assignee: Raymond Xu

>Priority: Major

> Labels: query-eng, user-support-issues

> Fix For: 0.11.0, 0.10.1

>

>

> Table creation with Hive sync through Spark fails, when I set *useJdbc* to

> *false*. Currently I had to modify the code to set *useJdbc* to *false* as

> there is not *DataSourceOption* through which I can specify this field when

> running Hudi code.

> Here is the failure:

> {noformat}

> java.lang.NoSuchMethodError:

> org.apache.hadoop.hive.ql.session.SessionState.start(Lorg/apache/hudi/org/apache/hadoop_hive/conf/HiveConf;)Lorg/apache/hadoop/hive/ql/session/SessionState;

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQLs(HoodieHiveClient.java:527)

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQLUsingHiveDriver(HoodieHiveClient.java:517)

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQL(HoodieHiveClient.java:507)

> at

> org.apache.hudi.hive.HoodieHiveClient.createTable(HoodieHiveClient.java:272)

> at org.apache.hudi.hive.HiveSyncTool.syncSchema(HiveSyncTool.java:132)

> at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:96)

> at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:68)

> at

> org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)

> at

> org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)

> at

> org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:156)

> at

> org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

> at

> org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

> at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

> at

> org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

> at

> org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

> at

> org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

> at

> org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

> at

> org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

> at

> org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

> at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

> at

> org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229){noformat}

> I was expecting this to fail through Spark, becuase *hive-exec* is not shaded

> inside *hudi-spark-bundle*, while *HiveConf* is shaded and relocated. This

> *SessionState* is coming from the spark-hive jar and obviously it does not

> accept the relocated *HiveConf*.

> We in *EMR* are running into same problem when trying to integrate with Glue

> Catalog. For this we have to create Hive metastore client through

> *Hive.get(conf).getMsc()* instead of how it is being down now, so that

> alternate implementations of metastore can get created. However, because

> hive-exec is not shaded but HiveConf is relocated we run into same issues

> there.

> It would not be recommended to shade *hive-exec* either because it itself is

> an Uber jar that shades a lot of things, and all of them would end up in

> *hudi-spark-bundle* jar. We would not want to head

[jira] [Assigned] (HUDI-281) HiveSync failure through Spark when useJdbc is set to false

[

https://issues.apache.org/jira/browse/HUDI-281?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu reassigned HUDI-281:

---

Assignee: Raymond Xu

> HiveSync failure through Spark when useJdbc is set to false

> ---

>

> Key: HUDI-281

> URL: https://issues.apache.org/jira/browse/HUDI-281

> Project: Apache Hudi

> Issue Type: Improvement

> Components: Hive Integration, Spark Integration, Usability

>Reporter: Udit Mehrotra

>Assignee: Raymond Xu

>Priority: Major

> Labels: query-eng, user-support-issues

> Fix For: 0.11.0, 0.10.1

>

>

> Table creation with Hive sync through Spark fails, when I set *useJdbc* to

> *false*. Currently I had to modify the code to set *useJdbc* to *false* as

> there is not *DataSourceOption* through which I can specify this field when

> running Hudi code.

> Here is the failure:

> {noformat}

> java.lang.NoSuchMethodError:

> org.apache.hadoop.hive.ql.session.SessionState.start(Lorg/apache/hudi/org/apache/hadoop_hive/conf/HiveConf;)Lorg/apache/hadoop/hive/ql/session/SessionState;

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQLs(HoodieHiveClient.java:527)

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQLUsingHiveDriver(HoodieHiveClient.java:517)

> at

> org.apache.hudi.hive.HoodieHiveClient.updateHiveSQL(HoodieHiveClient.java:507)

> at

> org.apache.hudi.hive.HoodieHiveClient.createTable(HoodieHiveClient.java:272)

> at org.apache.hudi.hive.HiveSyncTool.syncSchema(HiveSyncTool.java:132)

> at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:96)

> at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:68)

> at

> org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)

> at

> org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)

> at

> org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

> at

> org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

> at

> org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:156)

> at

> org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

> at

> org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

> at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

> at

> org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

> at

> org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

> at

> org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

> at

> org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

> at

> org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

> at

> org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

> at

> org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

> at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

> at

> org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229){noformat}

> I was expecting this to fail through Spark, becuase *hive-exec* is not shaded

> inside *hudi-spark-bundle*, while *HiveConf* is shaded and relocated. This

> *SessionState* is coming from the spark-hive jar and obviously it does not

> accept the relocated *HiveConf*.

> We in *EMR* are running into same problem when trying to integrate with Glue

> Catalog. For this we have to create Hive metastore client through

> *Hive.get(conf).getMsc()* instead of how it is being down now, so that

> alternate implementations of metastore can get created. However, because

> hive-exec is not shaded but HiveConf is relocated we run into same issues

> there.

> It would not be recommended to shade *hive-exec* either because it itself is

> an Uber jar that shades a lot of things, and all of them would end up in

> *hudi-spark-bundle* jar. We would not

[jira] [Updated] (HUDI-3104) Hudi-kafka-connect can not scan hadoop config files by HADOOP_CONF_DIR

[

https://issues.apache.org/jira/browse/HUDI-3104?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-3104:

-

Status: In Progress (was: Open)

> Hudi-kafka-connect can not scan hadoop config files by HADOOP_CONF_DIR

> --

>

> Key: HUDI-3104

> URL: https://issues.apache.org/jira/browse/HUDI-3104

> Project: Apache Hudi

> Issue Type: Bug

> Components: configs

>Reporter: cdmikechen

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.11.0, 0.10.1

>

>

> I used hudi-kafka-connect to test pull kafka topic datas to hudi. I've build

> a kafka connect docker by this dockerfile:

> {code}

> FROM confluentinc/cp-kafka-connect:6.1.1

> RUN confluent-hub install --no-prompt confluentinc/kafka-connect-hdfs:10.1.3

> COPY hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar

> /usr/share/confluent-hub-components/confluentinc-kafka-connect-hdfs/lib

> {code}

> When I started this docker container and submit a task, hudi report this

> error:

> {code}

> [2021-12-27 15:04:55,214] INFO Setting record key volume and partition fields

> date for table

> hdfs://hdp-syzh-cluster/hive/warehouse/default.db/hudi-test-topichudi-test-topic

> (org.apache.hudi.connect.writers.KafkaConnectTransactionServices)

> [2021-12-27 15:04:55,224] INFO Initializing

> hdfs://hdp-syzh-cluster/hive/warehouse/default.db/hudi-test-topic as hoodie

> table hdfs://hdp-syzh-cluster/hive/warehouse/default.db/hudi-test-topic

> (org.apache.hudi.common.table.HoodieTableMetaClient)

> WARNING: An illegal reflective access operation has occurred

> WARNING: Illegal reflective access by

> org.apache.hadoop.security.authentication.util.KerberosUtil

> (file:/usr/share/confluent-hub-components/confluentinc-kafka-connect-hdfs/lib/hadoop-auth-2.10.1.jar)

> to method sun.security.krb5.Config.getInstance()

> WARNING: Please consider reporting this to the maintainers of

> org.apache.hadoop.security.authentication.util.KerberosUtil

> WARNING: Use --illegal-access=warn to enable warnings of further illegal

> reflective access operations

> WARNING: All illegal access operations will be denied in a future release

> [2021-12-27 15:04:55,571] WARN Unable to load native-hadoop library for your

> platform... using builtin-java classes where applicable

> (org.apache.hadoop.util.NativeCodeLoader)

> [2021-12-27 15:04:56,154] ERROR Fatal error initializing task null for

> partition 0 (org.apache.hudi.connect.HoodieSinkTask)

> org.apache.hudi.exception.HoodieException: Fatal error instantiating Hudi

> Transaction Services

> at

> org.apache.hudi.connect.writers.KafkaConnectTransactionServices.(KafkaConnectTransactionServices.java:113)

> ~[hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at

> org.apache.hudi.connect.transaction.ConnectTransactionCoordinator.(ConnectTransactionCoordinator.java:88)

> ~[hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at

> org.apache.hudi.connect.HoodieSinkTask.bootstrap(HoodieSinkTask.java:191)

> [hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at org.apache.hudi.connect.HoodieSinkTask.open(HoodieSinkTask.java:151)

> [hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at

> org.apache.kafka.connect.runtime.WorkerSinkTask.openPartitions(WorkerSinkTask.java:640)

> [connect-runtime-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.connect.runtime.WorkerSinkTask.access$1100(WorkerSinkTask.java:71)

> [connect-runtime-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.connect.runtime.WorkerSinkTask$HandleRebalance.onPartitionsAssigned(WorkerSinkTask.java:705)

> [connect-runtime-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.invokePartitionsAssigned(ConsumerCoordinator.java:293)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.onJoinComplete(ConsumerCoordinator.java:430)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.AbstractCoordinator.joinGroupIfNeeded(AbstractCoordinator.java:449)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureActiveGroup(AbstractCoordinator.java:365)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.poll(ConsumerCoordinator.java:508)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.updateAssignmentMetadataIfNeeded(KafkaConsumer.java:1257)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1226)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

>

[jira] [Assigned] (HUDI-3104) Hudi-kafka-connect can not scan hadoop config files by HADOOP_CONF_DIR

[

https://issues.apache.org/jira/browse/HUDI-3104?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu reassigned HUDI-3104:

Assignee: cdmikechen

> Hudi-kafka-connect can not scan hadoop config files by HADOOP_CONF_DIR

> --

>

> Key: HUDI-3104

> URL: https://issues.apache.org/jira/browse/HUDI-3104

> Project: Apache Hudi

> Issue Type: Bug

> Components: configs

>Reporter: cdmikechen

>Assignee: cdmikechen

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.11.0, 0.10.1

>

>

> I used hudi-kafka-connect to test pull kafka topic datas to hudi. I've build

> a kafka connect docker by this dockerfile:

> {code}

> FROM confluentinc/cp-kafka-connect:6.1.1

> RUN confluent-hub install --no-prompt confluentinc/kafka-connect-hdfs:10.1.3

> COPY hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar

> /usr/share/confluent-hub-components/confluentinc-kafka-connect-hdfs/lib

> {code}

> When I started this docker container and submit a task, hudi report this

> error:

> {code}

> [2021-12-27 15:04:55,214] INFO Setting record key volume and partition fields

> date for table

> hdfs://hdp-syzh-cluster/hive/warehouse/default.db/hudi-test-topichudi-test-topic

> (org.apache.hudi.connect.writers.KafkaConnectTransactionServices)

> [2021-12-27 15:04:55,224] INFO Initializing

> hdfs://hdp-syzh-cluster/hive/warehouse/default.db/hudi-test-topic as hoodie

> table hdfs://hdp-syzh-cluster/hive/warehouse/default.db/hudi-test-topic

> (org.apache.hudi.common.table.HoodieTableMetaClient)

> WARNING: An illegal reflective access operation has occurred

> WARNING: Illegal reflective access by

> org.apache.hadoop.security.authentication.util.KerberosUtil

> (file:/usr/share/confluent-hub-components/confluentinc-kafka-connect-hdfs/lib/hadoop-auth-2.10.1.jar)

> to method sun.security.krb5.Config.getInstance()

> WARNING: Please consider reporting this to the maintainers of

> org.apache.hadoop.security.authentication.util.KerberosUtil

> WARNING: Use --illegal-access=warn to enable warnings of further illegal

> reflective access operations

> WARNING: All illegal access operations will be denied in a future release

> [2021-12-27 15:04:55,571] WARN Unable to load native-hadoop library for your

> platform... using builtin-java classes where applicable

> (org.apache.hadoop.util.NativeCodeLoader)

> [2021-12-27 15:04:56,154] ERROR Fatal error initializing task null for

> partition 0 (org.apache.hudi.connect.HoodieSinkTask)

> org.apache.hudi.exception.HoodieException: Fatal error instantiating Hudi

> Transaction Services

> at

> org.apache.hudi.connect.writers.KafkaConnectTransactionServices.(KafkaConnectTransactionServices.java:113)

> ~[hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at

> org.apache.hudi.connect.transaction.ConnectTransactionCoordinator.(ConnectTransactionCoordinator.java:88)

> ~[hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at

> org.apache.hudi.connect.HoodieSinkTask.bootstrap(HoodieSinkTask.java:191)

> [hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at org.apache.hudi.connect.HoodieSinkTask.open(HoodieSinkTask.java:151)

> [hudi-kafka-connect-bundle-0.11.0-SNAPSHOT.jar:0.11.0-SNAPSHOT]

> at

> org.apache.kafka.connect.runtime.WorkerSinkTask.openPartitions(WorkerSinkTask.java:640)

> [connect-runtime-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.connect.runtime.WorkerSinkTask.access$1100(WorkerSinkTask.java:71)

> [connect-runtime-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.connect.runtime.WorkerSinkTask$HandleRebalance.onPartitionsAssigned(WorkerSinkTask.java:705)

> [connect-runtime-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.invokePartitionsAssigned(ConsumerCoordinator.java:293)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.onJoinComplete(ConsumerCoordinator.java:430)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.AbstractCoordinator.joinGroupIfNeeded(AbstractCoordinator.java:449)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureActiveGroup(AbstractCoordinator.java:365)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.poll(ConsumerCoordinator.java:508)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.updateAssignmentMetadataIfNeeded(KafkaConsumer.java:1257)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1226)

> [kafka-clients-6.1.1-ccs.jar:?]

> at

>

[jira] [Updated] (HUDI-3125) Spark SQL writing timestamp type don't need to disable `spark.sql.datetime.java8API.enabled` manually

[

https://issues.apache.org/jira/browse/HUDI-3125?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-3125:

-

Story Points: 1

> Spark SQL writing timestamp type don't need to disable

> `spark.sql.datetime.java8API.enabled` manually

> -

>

> Key: HUDI-3125

> URL: https://issues.apache.org/jira/browse/HUDI-3125

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Reporter: Yann Byron

>Assignee: Yann Byron

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.11.0, 0.10.1

>

>

> {code:java}

> create table h0_p(id int, name string, price double, dt timestamp) using hudi

> partitioned by(dt) options(type = 'cow', primaryKey = 'id');

> insert into h0_p values (3, 'a1', 10, cast('2021-05-08 00:00:00' as

> timestamp)); {code}

> By default, that run the sql above will throw exception:

> {code:java}

> Caused by: java.lang.ClassCastException: java.time.Instant cannot be cast to

> java.sql.Timestamp

> at

> org.apache.hudi.AvroConversionHelper$.$anonfun$createConverterToAvro$8(AvroConversionHelper.scala:306)

> at

> org.apache.hudi.AvroConversionHelper$.$anonfun$createConverterToAvro$8$adapted(AvroConversionHelper.scala:306)

> at scala.Option.map(Option.scala:230) {code}

> We need disable `spark.sql.datetime.java8API.enabled` manually to make it

> work:

> {code:java}

> set spark.sql.datetime.java8API.enabled=false; {code}

> And the command must be executed in the runtime. It can't work if provide

> this by spark-sql command: `spark-sql --conf

> spark.sql.datetime.java8API.enabled=false`. That's because this config is

> forced to enable when launch spark-sql.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-3131) Spark3.1.1 CTAS error

[

https://issues.apache.org/jira/browse/HUDI-3131?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-3131:

-

Story Points: 1

> Spark3.1.1 CTAS error

> -

>

> Key: HUDI-3131

> URL: https://issues.apache.org/jira/browse/HUDI-3131

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Reporter: Yann Byron

>Assignee: Yann Byron

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.11.0, 0.10.1

>

>

> Fail to run CTAS with Hudi0.10.0 and Spark3.1.1.

>

> Sql:

> {code:java}

> create table h1_p using hudi partitioned by(dt) options(type = 'cow',

> primaryKey = 'id') as select '2021-05-07' as dt, 1 as id, 'a1' as name, 10 as

> price; {code}

> Error:

> {code:java}

> java.lang.NoSuchMethodError:

> org.apache.spark.sql.catalyst.plans.logical.Command.producedAttributes$(Lorg/apache/spark/sql/catalyst/plans/logical/Command;)Lorg/apache/spark/sql/catalyst/expressions/AttributeSet;

> at

> org.apache.spark.sql.hudi.command.CreateHoodieTableAsSelectCommand.producedAttributes(CreateHoodieTableAsSelectCommand.scala:39)

> {code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-3125) Spark SQL writing timestamp type don't need to disable `spark.sql.datetime.java8API.enabled` manually

[

https://issues.apache.org/jira/browse/HUDI-3125?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Raymond Xu updated HUDI-3125:

-

Issue Type: Bug (was: Improvement)

> Spark SQL writing timestamp type don't need to disable

> `spark.sql.datetime.java8API.enabled` manually

> -

>

> Key: HUDI-3125

> URL: https://issues.apache.org/jira/browse/HUDI-3125

> Project: Apache Hudi

> Issue Type: Bug

> Components: Spark Integration

>Reporter: Yann Byron

>Assignee: Yann Byron

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.11.0, 0.10.1

>

>

> {code:java}

> create table h0_p(id int, name string, price double, dt timestamp) using hudi

> partitioned by(dt) options(type = 'cow', primaryKey = 'id');

> insert into h0_p values (3, 'a1', 10, cast('2021-05-08 00:00:00' as

> timestamp)); {code}

> By default, that run the sql above will throw exception:

> {code:java}

> Caused by: java.lang.ClassCastException: java.time.Instant cannot be cast to

> java.sql.Timestamp

> at

> org.apache.hudi.AvroConversionHelper$.$anonfun$createConverterToAvro$8(AvroConversionHelper.scala:306)

> at

> org.apache.hudi.AvroConversionHelper$.$anonfun$createConverterToAvro$8$adapted(AvroConversionHelper.scala:306)

> at scala.Option.map(Option.scala:230) {code}

> We need disable `spark.sql.datetime.java8API.enabled` manually to make it

> work:

> {code:java}

> set spark.sql.datetime.java8API.enabled=false; {code}

> And the command must be executed in the runtime. It can't work if provide

> this by spark-sql command: `spark-sql --conf

> spark.sql.datetime.java8API.enabled=false`. That's because this config is

> forced to enable when launch spark-sql.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (HUDI-3100) Hive Conditional sync cannot be set from deltastreamer

[ https://issues.apache.org/jira/browse/HUDI-3100?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-3100: - Status: In Progress (was: Open) > Hive Conditional sync cannot be set from deltastreamer > -- > > Key: HUDI-3100 > URL: https://issues.apache.org/jira/browse/HUDI-3100 > Project: Apache Hudi > Issue Type: Bug > Components: DeltaStreamer, Hive Integration >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (HUDI-2987) event time not recorded in commit metadata when insert or bulk insert

[ https://issues.apache.org/jira/browse/HUDI-2987?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-2987: - Fix Version/s: (was: 0.10.1) > event time not recorded in commit metadata when insert or bulk insert > - > > Key: HUDI-2987 > URL: https://issues.apache.org/jira/browse/HUDI-2987 > Project: Apache Hudi > Issue Type: Bug > Components: Writer Core >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Critical > Labels: pull-request-available, sev:high > Fix For: 0.11.0 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [hudi] LuPan2015 commented on issue #4475: [SUPPORT] Hudi and aws S3 integration exception

LuPan2015 commented on issue #4475: URL: https://github.com/apache/hudi/issues/4475#issuecomment-1002895931 solved #4474 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] LuPan2015 closed issue #4475: [SUPPORT] Hudi and aws S3 integration exception

LuPan2015 closed issue #4475: URL: https://github.com/apache/hudi/issues/4475 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #4065: [HUDI-2817] Sync the configuration inference for HoodieFlinkStreamer

danny0405 commented on pull request #4065: URL: https://github.com/apache/hudi/pull/4065#issuecomment-1002894948 Can we sync up all the inference logic ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #4189: [HUDI-2913] Disable auto clean in writer task

danny0405 commented on pull request #4189: URL: https://github.com/apache/hudi/pull/4189#issuecomment-1002894548 Close because it is not necessary ~ -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 closed pull request #4189: [HUDI-2913] Disable auto clean in writer task

danny0405 closed pull request #4189: URL: https://github.com/apache/hudi/pull/4189 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 closed pull request #3386: [HUDI-2270] Remove corrupted clean action

danny0405 closed pull request #3386: URL: https://github.com/apache/hudi/pull/3386 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #3386: [HUDI-2270] Remove corrupted clean action

danny0405 commented on pull request #3386: URL: https://github.com/apache/hudi/pull/3386#issuecomment-1002893737 Close because #4016 solves the problem. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] LuPan2015 commented on issue #4474: [SUPPORT] Should we shade all aws dependencies to avoid class conflicts?

LuPan2015 commented on issue #4474: URL: https://github.com/apache/hudi/issues/4474#issuecomment-1002893633 yes. But it works fine。 Next I need to store the metadata in glue。 Thanks. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] boneanxs commented on issue #4474: [SUPPORT] Should we shade all aws dependencies to avoid class conflicts?

boneanxs commented on issue #4474: URL: https://github.com/apache/hudi/issues/4474#issuecomment-1002890635 > Error in query: Specified schema in create table statement is not equal to the table schema.You should not specify the schema for an exist table: `default`.`hudi_mor_s32` Not the same exception? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] YannByron commented on issue #4429: [SUPPORT] Spark SQL CTAS command doesn't work with 0.10.0 version and Spark 3.1.1

YannByron commented on issue #4429: URL: https://github.com/apache/hudi/issues/4429#issuecomment-1002887429 @vingov as the picture I mentioned above, need to `set spark.sql.datetime.java8API.enabled=false;` manually at this time. And i also try to improve it that don't need set by user, #4471. In this, just work for `insert`, not for `CTAS`, but i'm still working on it.. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] LuPan2015 edited a comment on issue #4474: [SUPPORT] Should we shade all aws dependencies to avoid class conflicts?

LuPan2015 edited a comment on issue #4474: URL: https://github.com/apache/hudi/issues/4474#issuecomment-1002882980 I tried it, but the following exception was still thrown。 ``` spark/bin/spark-sql --packages org.apache.hadoop:hadoop-aws:3.2.0,com.amazonaws:aws-java-sdk:1.12.22 --jars hudi-spark3-bundle_2.12-0.11.0-SNAPSHOT.jar \ --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \ --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension' ``` ``` Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 21/12/30 13:46:32 WARN HiveConf: HiveConf of name hive.stats.jdbc.timeout does not exist 21/12/30 13:46:32 WARN HiveConf: HiveConf of name hive.stats.retries.wait does not exist 21/12/30 13:46:34 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 2.3.0 21/12/30 13:46:34 WARN ObjectStore: setMetaStoreSchemaVersion called but recording version is disabled: version = 2.3.0, comment = Set by MetaStore lupan@127.0.1.1 Spark master: local[*], Application Id: local-1640843189215 spark-sql> create table default.hudi_mor_s32 ( > id bigint, > name string, > dt string > ) using hudi > tblproperties ( > type = 'mor', > primaryKey = 'id' > ) > partitioned by (dt) > location 's3a://iceberg-bucket/hudi-warehouse/'; ANTLR Tool version 4.7 used for code generation does not match the current runtime version 4.8ANTLR Tool version 4.7 used for code generation does not match the current runtime version 4.821/12/30 13:46:45 WARN MetricsConfig: Cannot locate configuration: tried hadoop-metrics2-s3a-file-system.properties,hadoop-metrics2.properties Error in query: Specified schema in create table statement is not equal to the table schema.You should not specify the schema for an exist table: `default`.`hudi_mor_s32` ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] LuPan2015 commented on issue #4474: [SUPPORT] Should we shade all aws dependencies to avoid class conflicts?

LuPan2015 commented on issue #4474: URL: https://github.com/apache/hudi/issues/4474#issuecomment-1002882980 I tried it, but the following exception was still thrown。 ``` spark/bin/spark-sql --packages org.apache.hadoop:hadoop-aws:3.2.0,com.amazonaws:aws-java-sdk:1.12.22 --jars hudi-spark3-bundle_2.12-0.11.0-SNAPSHOT.jar \ --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \ --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension' ``` ``` spark-sql> create table default.hudi_mor_s32 ( > id bigint, > name string, > dt string > ) using hudi > tblproperties ( > type = 'mor', > primaryKey = 'id' > ) > partitioned by (dt) > location 's3a://iceberg-bucket/hudi-warehouse/'; ANTLR Tool version 4.7 used for code generation does not match the current runtime version 4.8ANTLR Tool version 4.7 used for code generation does not match the current runtime version 4.821/12/30 13:46:45 WARN MetricsConfig: Cannot locate configuration: tried hadoop-metrics2-s3a-file-system.properties,hadoop-metrics2.properties Error in query: Specified schema in create table statement is not equal to the table schema.You should not specify the schema for an exist table: `default`.`hudi_mor_s32` ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] vingov edited a comment on issue #4429: [SUPPORT] Spark SQL CTAS command doesn't work with 0.10.0 version and Spark 3.1.1

vingov edited a comment on issue #4429:

URL: https://github.com/apache/hudi/issues/4429#issuecomment-1002881175

@YannByron - Thanks for the quick turnaround, I appreciate it!

@xushiyan - There are more errors with Spark 3.1.2 as well, see below:

```

spark-sql> create table h0_p using hudi partitioned by(dt)

> tblproperties(type = 'cow', primaryKey = 'id')

> as select cast('2021-05-07 00:00:00' as timestamp) as dt,

> 1 as id, 'a1' as name, 10 as price;

21/12/30 05:28:02 WARN DFSPropertiesConfiguration: Cannot find

HUDI_CONF_DIR, please set it as the dir of hudi-defaults.conf

21/12/30 05:28:02 WARN DFSPropertiesConfiguration: Properties file

file:/etc/hudi/conf/hudi-defaults.conf not found. Ignoring to load props file

21/12/30 05:28:07 WARN package: Truncated the string representation of a

plan since it was too large. This behavior can be adjusted by setting

'spark.sql.debug.maxToStringFields'.

21/12/30 05:28:14 ERROR Executor: Exception in task 0.0 in stage 3.0 (TID 3)

org.apache.spark.SparkException: Failed to execute user defined

function(UDFRegistration$$Lambda$3034/1190042877:

(struct) => string)

at

org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.project_doConsume_0$(Unknown

Source)

at

org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown

Source)

at

org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at

org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:755)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at

org.apache.spark.util.random.SamplingUtils$.reservoirSampleAndCount(SamplingUtils.scala:41)

at

org.apache.spark.RangePartitioner$.$anonfun$sketch$1(Partitioner.scala:306)

at

org.apache.spark.RangePartitioner$.$anonfun$sketch$1$adapted(Partitioner.scala:304)

at

org.apache.spark.rdd.RDD.$anonfun$mapPartitionsWithIndex$2(RDD.scala:915)

at

org.apache.spark.rdd.RDD.$anonfun$mapPartitionsWithIndex$2$adapted(RDD.scala:915)

at

org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at

org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:497)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1439)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:500)

at

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.IllegalArgumentException: Invalid format:

"2021-05-07T00:00:00Z" is malformed at "T00:00:00Z"

at

org.joda.time.format.DateTimeParserBucket.doParseMillis(DateTimeParserBucket.java:187)

at

org.joda.time.format.DateTimeFormatter.parseMillis(DateTimeFormatter.java:826)

at

org.apache.spark.sql.hudi.command.SqlKeyGenerator.$anonfun$convertPartitionPathToSqlType$1(SqlKeyGenerator.scala:97)

at

scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

at

scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36)

at

scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:198)

at scala.collection.TraversableLike.map(TraversableLike.scala:238)

at scala.collection.TraversableLike.map$(TraversableLike.scala:231)

at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:198)

at

org.apache.spark.sql.hudi.command.SqlKeyGenerator.convertPartitionPathToSqlType(SqlKeyGenerator.scala:88)

at

org.apache.spark.sql.hudi.command.SqlKeyGenerator.getPartitionPath(SqlKeyGenerator.scala:118)

at

org.apache.spark.sql.UDFRegistration.$anonfun$register$352(UDFRegistration.scala:777)

... 22 more

```

another error with 0.10.0, but these statements are working with 0.9.0

version:

```

spark-sql> use analytics;

Time taken: 0.103 seconds

spark-sql> desc insert_overwrite_table;

_hoodie_commit_time string NULL

_hoodie_commit_seqno string NULL

_hoodie_record_key string NULL

_hoodie_partition_path string NULL

_hoodie_file_namestring NULL

id string NULL

name string NULL

ts timestamp NULL

Time taken: 0.391

[jira] [Updated] (HUDI-3120) Cache compactionPlan in buffer

[ https://issues.apache.org/jira/browse/HUDI-3120?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen updated HUDI-3120: - Fix Version/s: 0.11.0 0.10.1 > Cache compactionPlan in buffer > -- > > Key: HUDI-3120 > URL: https://issues.apache.org/jira/browse/HUDI-3120 > Project: Apache Hudi > Issue Type: Improvement > Components: Flink Integration >Reporter: yuzhaojing >Assignee: yuzhaojing >Priority: Major > Labels: pull-request-available > Fix For: 0.11.0, 0.10.1 > > -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [hudi] danny0405 commented on a change in pull request #4463: [HUDI-3120] Cache compactionPlan in buffer

danny0405 commented on a change in pull request #4463:

URL: https://github.com/apache/hudi/pull/4463#discussion_r776575452

##

File path:

hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

##

@@ -108,8 +124,15 @@ public void invoke(CompactionCommitEvent event, Context

context) throws Exceptio

* @param events Commit events ever received for the instant

*/

private void commitIfNecessary(String instant,

Collection events) throws IOException {

-HoodieCompactionPlan compactionPlan = CompactionUtils.getCompactionPlan(

-this.writeClient.getHoodieTable().getMetaClient(), instant);

+HoodieCompactionPlan compactionPlan;

+if (compactionPlanCache.containsKey(instant)) {

+ compactionPlan = compactionPlanCache.get(instant);

+} else {

+ compactionPlan = CompactionUtils.getCompactionPlan(

+ this.table.getMetaClient(), instant);

+ compactionPlanCache.put(instant, compactionPlan);

Review comment: