[GitHub] [incubator-mxnet] apeforest commented on issue #15499: Improve diagnose.py, adding build features info and binary library path.

apeforest commented on issue #15499: Improve diagnose.py, adding build features info and binary library path. URL: https://github.com/apache/incubator-mxnet/pull/15499#issuecomment-511091560 One minor comment: the ✖ and similar symbols were not recognized when I imported the .txt file into MS Office or Google Docs. Can we replace these Unicode symbols with simple ASCII, like Y/N? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services
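A minimal sketch of the ASCII output suggested above, assuming the report is built from (name, enabled) pairs. `features_report` is a hypothetical helper, not part of diagnose.py. With MXNet 1.5 or later the pairs could presumably come from `mxnet.runtime.feature_list()`, whose entries expose `name` and `enabled`; treat that as an assumption to check against your installed version.

```python
def features_report(features):
    """Render (name, enabled) pairs as ASCII lines such as 'Y CUDA' / 'N OPENCV'.

    Plain 'Y'/'N' markers replace the Unicode check/cross symbols so the
    report stays readable when pasted into tools that lack those glyphs.
    """
    lines = []
    for name, enabled in features:
        lines.append("{} {}".format("Y" if enabled else "N", name))
    return "\n".join(lines)


# Hand-written example pairs (hypothetical values, for illustration only).
print(features_report([("CUDA", True), ("OPENCV", False)]))
# prints:
# Y CUDA
# N OPENCV
```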

[GitHub] [incubator-mxnet] apeforest commented on a change in pull request #15499: Improve diagnose.py, adding build features info and binary library path.

apeforest commented on a change in pull request #15499: Improve diagnose.py, adding build features info and binary library path.

URL: https://github.com/apache/incubator-mxnet/pull/15499#discussion_r303192850

##

File path: tools/diagnose.py

##

@@ -105,13 +110,20 @@ def check_mxnet():

         mx_dir = os.path.dirname(mxnet.__file__)

         print('Directory:', mx_dir)

         commit_hash = os.path.join(mx_dir, 'COMMIT_HASH')

-        with open(commit_hash, 'r') as f:

-            ch = f.read().strip()

-            print('Commit Hash :', ch)

+        if os.path.exists(commit_hash):

+            with open(commit_hash, 'r') as f:

+                ch = f.read().strip()

+                print('Commit Hash :', ch)

+        else:

+            print('Commit hash file "{}" not found. Not installed from pre-built package or built from source.'.format(commit_hash))

+        print('Library :', mxnet.libinfo.find_lib_path())

+        try:

+            print('Build features:')

+            print(get_build_features_str())

Review comment:

This will fail if a user runs this script to diagnose an MXNet installation older than the 1.5.0 release.
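One way the review point could be handled, sketched under assumptions: the feature lookup raises `ImportError` or `AttributeError` on releases that predate `mxnet.runtime`, and the callable is injected so the sketch runs without MXNet. `print_build_features` is a hypothetical name; `get_build_features_str` is the PR's helper.

```python
def print_build_features(get_features_str):
    """Print the build-feature section, degrading gracefully on MXNet < 1.5.0.

    get_features_str stands in for the PR's get_build_features_str();
    it is passed in so this sketch can run without MXNet installed.
    Returns True if the feature list was printed, False otherwise.
    """
    print('Build features:')
    try:
        print(get_features_str())
        return True
    except (ImportError, AttributeError):
        # Assumption: releases before 1.5.0 lack mxnet.runtime, so the
        # lookup fails with one of these two exceptions.
        print('Not available in this MXNet version (requires 1.5.0 or later)')
        return False
```

Catching only these two exception types keeps unrelated bugs in the feature code visible instead of silently hiding them.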



[GitHub] [incubator-mxnet] intgogo opened a new issue #15529: MXNET_CUDNN_AUTOTUNE_DEFAULT problems

intgogo opened a new issue #15529: MXNET_CUDNN_AUTOTUNE_DEFAULT problems URL: https://github.com/apache/incubator-mxnet/issues/15529 When I set MXNET_CUDNN_AUTOTUNE_DEFAULT=1, the autotuning never stops:
```
[12:39:44] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:03] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:18] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:23] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:33] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:39] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:45] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:52] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:40:59] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:05] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:14] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:19] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:26] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:31] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:38] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:45] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:53] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:41:59] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
[12:42:07] /root/lilw/mxnet/src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to
```
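The log line itself names the workaround. A plausible reason the message keeps reappearing (an assumption, not confirmed in the issue) is that autotuning runs once per new convolution configuration, so inputs whose shapes keep changing trigger a new round each time. A minimal sketch of disabling autotuning for a whole process from Python; the value meanings in the comment are taken from the MXNet 1.x environment-variable docs as I recall them and should be verified for your version.

```python
import os

# Assumed value meanings (MXNet 1.x docs; verify for your version):
#   "0" disables autotuning,
#   "1" (the default) autotunes within a limited workspace,
#   "2" picks the fastest algorithm regardless of workspace size.
os.environ["MXNET_CUDNN_AUTOTUNE_DEFAULT"] = "0"

# Import MXNet only after this point so every convolution it creates
# sees the setting, e.g.:
#     import mxnet as mx
```

To keep autotuning in general but skip it for particular layers, the Convolution operator's `cudnn_tune` parameter (for example `cudnn_tune='off'`) is the per-layer counterpart.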

[GitHub] [incubator-mxnet] mxnet-label-bot commented on issue #15529: MXNET_CUDNN_AUTOTUNE_DEFAULT problems

mxnet-label-bot commented on issue #15529: MXNET_CUDNN_AUTOTUNE_DEFAULT problems URL: https://github.com/apache/incubator-mxnet/issues/15529#issuecomment-511088398 Hey, this is the MXNet Label Bot. Thank you for submitting the issue! I will try and suggest some labels so that the appropriate MXNet community members can help resolve it. Here are my recommended labels: Cuda, Bug

[GitHub] [incubator-mxnet] Zha0q1 commented on a change in pull request #15490: Utility to help developers debug operators: Tensor Inspector

Zha0q1 commented on a change in pull request #15490: Utility to help developers debug operators: Tensor Inspector

URL: https://github.com/apache/incubator-mxnet/pull/15490#discussion_r303189996

##

File path: src/common/tensor_inspector.h

##

@@ -0,0 +1,763 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+/*!

+ * Copyright (c) 2019 by Contributors

+ * \file tensor_inspector.h

+ * \brief utility to inspect tensor objects

+ * \author Zhaoqi Zhu

+ */

+

+#ifndef MXNET_COMMON_TENSOR_INSPECTOR_H_

+#define MXNET_COMMON_TENSOR_INSPECTOR_H_

+

+#include

+#include

+#include

+#include

+#include

+#include "../../3rdparty/mshadow/mshadow/base.h"

+#include "../../tests/cpp/include/test_util.h"

+

+namespace mxnet {

+

+/*!

+ * \brief this singleton struct mediates individual TensorInspector objects

+ * so that we can control the global behavior from each of them

+ */

+struct InspectorManager {

+  static InspectorManager* get() {

+    static std::mutex mtx;

+    static std::unique_ptr im = nullptr;

+    if (!im) {

+      std::unique_lock lk(mtx);

+      if (!im)

+        im = std::make_unique();

+    }

+    return im.get();

+  }

+ /* !\brief mutex used to lock interactive_print() and check_value() */

+ std::mutex mutex_;

+ /* !\brief skip all interactive prints */

+ bool interactive_print_skip_all_ = false;

+ /* !\brief skip all value checks */

+ bool check_value_skip_all_ = false;

+ /* !\brief visit count for interactive print tags */

+ std::unordered_map interactive_print_tag_counter_;

+ /* !\brief visit count for check value tags */

+ std::unordered_map check_value_tag_counter_;

+ /* !\brief visit count for dump value tags */

+ std::unordered_map dump_to_file_tag_counter_;

+};

+

+/*!

+ * \brief Enum for building value checkers for TensorInspector::check_value()

+ */

+enum CheckerType {

+  NegativeChecker,  // check if is negative

+  PositiveChecker,  // check if is positive

+  ZeroChecker,  // check if is zero

+  NaNChecker,  // check if is NaN, will always return false if DType is not a float type

+  InfChecker,  // check if is infinity, will always return false if DType is not a float type

+  PositiveInfChecker,  // check if is positive infinity,

+                       // will always return false if DType is not a float type

+  NegativeInfChecker,  // check if is negative infinity,

+                       // will always return false if DType is not a float type

+  FiniteChecker,  // check if is finite, will always return false if DType is not a float type

+  NormalChecker,  // check if is neither infinity nor NaN

+  AbnormalChecker,  // check if is infinity or NaN

+};

+

+/**

+ * ___ _ _

+ * |__ __||_ _| | |

+ *| | ___ _ __ ___ ___ _ __| | _ __ ___ _ __ ___ ___| |_ ___ _ __

+ *| |/ _ \ '_ \/ __|/ _ \| '__| | | '_ \/ __| '_ \ / _ \/ __| __/ _ \| '__|

+ *| | __/ | | \__ \ (_) | | _| |_| | | \__ \ |_) | __/ (__| || (_) | |

+ *|_|\___|_| |_|___/\___/|_||_|_| |_|___/ .__/ \___|\___|\__\___/|_|

+ * | |

+ * |_|

+ */

+

+/*!

+ * \brief This class provides a unified interface to inspect the value of all data types

+ * including Tensor, TBlob, and NDArray. If the tensor resides on GPU, then it will be

+ * copied from GPU memory back to CPU memory to be operated on. Internally, all data types

+ * are stored as a TBlob object tb_.

+ */

+class TensorInspector {

+ private:

+ /*!

+ * \brief generate the tensor info, including data type and shape

+ * \tparam DType the data type

+ * \tparam StreamType the type of the stream object

+ * \param os stream object to output to

+ */

+  template

+  void tensor_info_to_string(StreamType* os) {

+    const int dimension = tb_.ndim();

+    *os << "<" << typeid(tb_.dptr()[0]).name() << " Tensor ";

+    *os << tb_.shape_[0];

+    for (int i = 1; i < dimension; ++i) {

+      *os << 'x' << tb_.shape_[i];

+    }

+    *os << ">" << std::endl;

+  }

+

+ /*!

+ * \brief output the tensor info,
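To make the `CheckerType` comments concrete, here is a Python sketch of what each checker tests for a single value. It mirrors the comments in the quoted header only, not the PR's C++ implementation; `check` and the `is_float` flag (standing in for "DType is a float type") are hypothetical.

```python
import math
from enum import Enum, auto


class CheckerType(Enum):
    NEGATIVE = auto()
    POSITIVE = auto()
    ZERO = auto()
    NAN = auto()
    INF = auto()
    POSITIVE_INF = auto()
    NEGATIVE_INF = auto()
    FINITE = auto()
    NORMAL = auto()
    ABNORMAL = auto()


# Checkers documented as "always false if DType is not a float type".
FLOAT_ONLY = {CheckerType.NAN, CheckerType.INF, CheckerType.POSITIVE_INF,
              CheckerType.NEGATIVE_INF, CheckerType.FINITE}


def check(value, checker, is_float=True):
    """Return True if `value` matches `checker`, following the enum comments."""
    if checker in FLOAT_ONLY and not is_float:
        return False
    if checker is CheckerType.NEGATIVE:
        return value < 0
    if checker is CheckerType.POSITIVE:
        return value > 0
    if checker is CheckerType.ZERO:
        return value == 0
    if checker is CheckerType.NAN:
        return math.isnan(value)
    if checker is CheckerType.INF:
        return math.isinf(value)
    if checker is CheckerType.POSITIVE_INF:
        return value == math.inf
    if checker is CheckerType.NEGATIVE_INF:
        return value == -math.inf
    if checker is CheckerType.FINITE:
        return math.isfinite(value)
    if checker is CheckerType.NORMAL:
        return not (math.isinf(value) or math.isnan(value))
    if checker is CheckerType.ABNORMAL:
        return math.isinf(value) or math.isnan(value)
    raise ValueError("unknown checker: {}".format(checker))
```

Taken literally, the comments make `FiniteChecker` and `NormalChecker` identical for float types but different for integer types, where `FiniteChecker` always returns false while `NormalChecker` returns true.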

[GitHub] [incubator-mxnet] Zha0q1 commented on a change in pull request #15490: Utility to help developers debug operators: Tensor Inspector

Zha0q1 commented on a change in pull request #15490: Utility to help developers debug operators: Tensor Inspector

URL: https://github.com/apache/incubator-mxnet/pull/15490#discussion_r303189612

##

File path: src/common/tensor_inspector.h

##


[GitHub] [incubator-mxnet] anirudh2290 opened a new pull request #15528: [WIP] Add AMP Conversion support for BucketingModule

anirudh2290 opened a new pull request #15528: [WIP] Add AMP Conversion support for BucketingModule URL: https://github.com/apache/incubator-mxnet/pull/15528

## Description ##
- Add conversion APIs for bucketing module
- Add support for saving and loading BucketingModule from a checkpoint
- Add support for loading BucketingModule from a dict of bucket keys to symbols
- Add tests

## Checklist ##
### Essentials ###
Please feel free to remove inapplicable items for your PR.
- [ ] The PR title starts with [MXNET-$JIRA_ID], where $JIRA_ID refers to the relevant [JIRA issue](https://issues.apache.org/jira/projects/MXNET/issues) created (except PRs with tiny changes)
- [ ] Changes are complete (i.e. I finished coding on this PR)
- [ ] All changes have test coverage:
  - Unit tests are added for small changes to verify correctness (e.g. adding a new operator)
  - Nightly tests are added for complicated/long-running ones (e.g. changing distributed kvstore)
  - Build tests will be added for build configuration changes (e.g. adding a new build option with NCCL)
- [ ] Code is well-documented:
  - For user-facing API changes, API doc string has been updated.
  - For new C++ functions in header files, their functionalities and arguments are documented.
  - For new examples, README.md is added to explain what the example does, the source of the dataset, expected performance on test set and reference to the original paper if applicable
  - Check the API doc at http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-$PR_ID/$BUILD_ID/index.html
- [ ] To the best of my knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

### Changes ###
- [ ] Feature1, tests, (and when applicable, API doc)
- [ ] Feature2, tests, (and when applicable, API doc)

## Comments ##
- If this change is a backward incompatible change, why must this change be made.
- Interesting edge cases to note here

[GitHub] [incubator-mxnet] anirudh2290 commented on issue #13335: MXPredCreate lost dtype

anirudh2290 commented on issue #13335: MXPredCreate lost dtype URL: https://github.com/apache/incubator-mxnet/issues/13335#issuecomment-511080169 Resolved by #15245

[GitHub] [incubator-mxnet] anirudh2290 closed issue #13335: MXPredCreate lost dtype

anirudh2290 closed issue #13335: MXPredCreate lost dtype URL: https://github.com/apache/incubator-mxnet/issues/13335

[incubator-mxnet] branch master updated (cbb6f7f -> d677d1a)

This is an automated email from the ASF dual-hosted git repository.

anirudh2290 pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from cbb6f7f Docs: Fix misprints (#15505)
add d677d1a FP16 Support for C Predict API (#15245)

No new revisions were added by this update.

Summary of changes:
amalgamation/python/mxnet_predict.py | 131 ++--
include/mxnet/c_predict_api.h        |  65
src/c_api/c_predict_api.cc           | 140 +--
tests/python/gpu/test_predictor.py   | 128
4 files changed, 454 insertions(+), 10 deletions(-)
create mode 100644 tests/python/gpu/test_predictor.py

[GitHub] [incubator-mxnet] anirudh2290 merged pull request #15245: FP16 Support for C Predict API

anirudh2290 merged pull request #15245: FP16 Support for C Predict API URL: https://github.com/apache/incubator-mxnet/pull/15245

[GitHub] [incubator-mxnet] anirudh2290 closed issue #14159: [Feature Request] Support fp16 for C Predict API

anirudh2290 closed issue #14159: [Feature Request] Support fp16 for C Predict API URL: https://github.com/apache/incubator-mxnet/issues/14159

[GitHub] [incubator-mxnet] braindotai removed a comment on issue #12185: from_logits definition seems different from what is expected?

braindotai removed a comment on issue #12185: from_logits definition seems different from what is expected? URL: https://github.com/apache/incubator-mxnet/issues/12185#issuecomment-510939653 [Check this out](https://www.tensorflow.org/api_docs/python/tf/nn/softmax_cross_entropy_with_logits) Here it says "logits: Per-label activations, typically a linear output", which means `nd.dot(x, w) + b` in terms of MXNet. There are actually two versions of logits: the first is simply the output of a linear layer (as mentioned in the link above), and the second is unscaled log probabilities. That is the reason TensorFlow provided two versions of "softmax_cross_entropy_with_logits". In MXNet, mx.gluon.loss.SoftmaxCrossEntropyLoss accepts the linear output (`nd.dot(x, w) + b`) as its prediction argument. You can check [here](https://gluon.mxnet.io/chapter02_supervised-learning/softmax-regression-gluon.html): the layer is defined as `net = gluon.nn.Dense(num_outputs)`, the loss as `gluon.loss.SoftmaxCrossEntropyLoss()`, and the loss is then calculated as

```python
output = net(data)
loss = softmax_cross_entropy(output, label)
```
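A pure-Python sketch of the distinction, assuming Gluon's `SoftmaxCrossEntropyLoss` applies log-softmax to its input when `from_logits=False` (the default) and otherwise treats the input as log-probabilities. The function names below are illustrative, not MXNet APIs.

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax of a list of raw scores."""
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]


def softmax_cross_entropy(pred, label, from_logits=False):
    """Loss for one sample with an integer (sparse) label.

    from_logits=False: pred holds raw scores (e.g. a Dense layer's output),
    so log-softmax is applied first.
    from_logits=True: pred is assumed to already be log-probabilities.
    """
    log_probs = pred if from_logits else log_softmax(pred)
    return -log_probs[label]


raw = [2.0, 1.0, 0.1]                    # e.g. nd.dot(x, w) + b for one sample
loss_a = softmax_cross_entropy(raw, 0)   # raw scores, default from_logits=False
loss_b = softmax_cross_entropy(log_softmax(raw), 0, from_logits=True)
print(abs(loss_a - loss_b) < 1e-12)      # True: both paths give the same loss
```

Under this assumption, feeding raw Dense outputs with `from_logits=True` would skip the normalization and produce a wrong loss, which is presumably why the flag's name reads differently from TensorFlow's use of "logits".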

[incubator-mxnet] branch master updated: Docs: Fix misprints (#15505)


wkcn pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new cbb6f7f Docs: Fix misprints (#15505)

cbb6f7f is described below

commit cbb6f7fd6e297c17fd267b29174a4ed29100c757

Author: Ruslan Baratov

AuthorDate: Sat Jul 13 03:26:01 2019 +0300

Docs: Fix misprints (#15505)

* Docs: Fix 'bahavior' -> 'behavior'

* Docs: Fix 'the the' -> 'the'

* retrigger CI

* retrigger CI

---

NEWS.md | 4 ++--

R-package/R/viz.graph.R | 4 ++--

contrib/clojure-package/README.md | 2 +-

docs/api/python/gluon/gluon.md| 2 +-

docs/install/windows_setup.md | 2 +-

docs/tutorials/mkldnn/MKLDNN_README.md| 2 +-

example/gan/CGAN_mnist_R/README.md| 2 +-

include/mxnet/ndarray.h | 2 +-

perl-package/AI-MXNet/lib/AI/MXNet/Gluon.pm | 2 +-

perl-package/AI-MXNet/lib/AI/MXNet/Module/Base.pm | 2 +-

python/mxnet/contrib/onnx/onnx2mx/_op_translations.py | 2 +-

python/mxnet/gluon/data/dataloader.py | 2 +-

python/mxnet/module/base_module.py| 2 +-

python/mxnet/module/python_module.py | 2 +-

.../core/src/main/scala/org/apache/mxnet/module/BaseModule.scala | 2 +-

.../org/apache/mxnetexamples/javaapi/infer/objectdetector/README.md | 2 +-

.../java/org/apache/mxnetexamples/javaapi/infer/predictor/README.md | 2 +-

.../scala/org/apache/mxnetexamples/infer/objectdetector/README.md | 2 +-

src/operator/tensor/diag_op-inl.h | 2 +-

src/operator/tensor/matrix_op.cc | 2 +-

tools/staticbuild/README.md | 4 ++--

21 files changed, 24 insertions(+), 24 deletions(-)

diff --git a/NEWS.md b/NEWS.md

index 59f8de8..ee8a73c 100644

--- a/NEWS.md

+++ b/NEWS.md

@@ -678,8 +678,8 @@ This fixes an buffer overflow detected by ASAN.

This PR adds or updates the docs for the infer_range feature.

Clarifies the param in the C op docs

- Clarifies the param in the the Scala symbol docs

- Adds the param for the the Scala ndarray docs

+ Clarifies the param in the Scala symbol docs

+ Adds the param for the Scala ndarray docs

Adds the param for the Python symbol docs

Adds the param for the Python ndarray docs

diff --git a/R-package/R/viz.graph.R b/R-package/R/viz.graph.R

index 5804372..ab876af 100644

--- a/R-package/R/viz.graph.R

+++ b/R-package/R/viz.graph.R

@@ -34,7 +34,7 @@

#' @param symbol a \code{string} representing the symbol of a model.

#' @param shape a \code{numeric} representing the input dimensions to the symbol.

#' @param direction a \code{string} representing the direction of the graph, either TD or LR.

-#' @param type a \code{string} representing the rendering engine of the the graph, either graph or vis.

+#' @param type a \code{string} representing the rendering engine of the graph, either graph or vis.

#' @param graph.width.px a \code{numeric} representing the size (width) of the graph. In pixels

#' @param graph.height.px a \code{numeric} representing the size (height) of the graph. In pixels

#'

@@ -169,4 +169,4 @@ graph.viz <- function(symbol, shape=NULL, direction="TD", type="graph", graph.wi

return(graph_render)

}

-globalVariables(c("color", "shape", "label", "id", ".", "op"))

\ No newline at end of file

+globalVariables(c("color", "shape", "label", "id", ".", "op"))

diff --git a/contrib/clojure-package/README.md b/contrib/clojure-package/README.md

index 7566ade..7bb417e 100644

--- a/contrib/clojure-package/README.md

+++ b/contrib/clojure-package/README.md

@@ -237,7 +237,7 @@ If you are having trouble getting started or have a question, feel free to reach

There are quite a few examples in the examples directory. To use.

`lein install` in the main project

-`cd` in the the example project of interest

+`cd` in the example project of interest

There are README is every directory outlining instructions.

diff --git a/docs/api/python/gluon/gluon.md b/docs/api/python/gluon/gluon.md

index c063a71..19e462e 100644

--- a/docs/api/python/gluon/gluon.md

+++ b/docs/api/python/gluon/gluon.md

@@ -28,7 +28,7 @@

The Gluon package is a high-level interface for MXNet designed to be easy to use, while keeping most of the flexibility of a low level API. Gluon supports both imperative and symbolic programming, making it easy to train complex models imperatively in Python and

[GitHub] [incubator-mxnet] wkcn merged pull request #15505: Docs: Fix misprints

wkcn merged pull request #15505: Docs: Fix misprints URL: https://github.com/apache/incubator-mxnet/pull/15505

[GitHub] [incubator-mxnet] wkcn commented on issue #14894: Accelerate ROIPooling layer

wkcn commented on issue #14894: Accelerate ROIPooling layer URL: https://github.com/apache/incubator-mxnet/pull/14894#issuecomment-511070139 @larroy I think the main reason is that this PR saves the indices of the maximum values.
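To illustrate why saving the argmax indices can help, here is a 1-D simplification. It is an assumption-laden sketch: the PR's ROIPooling works on 2-D bins per region of interest, and its actual kernels are not shown here. The forward pass records where each maximum came from, so the backward pass only scatters gradients to those positions instead of re-scanning every bin. Both function names are hypothetical.

```python
def max_pool_1d_with_argmax(x, bins):
    """Forward pass: for each (start, end) bin return the max and its index in x."""
    out, argmax = [], []
    for start, end in bins:
        idx = max(range(start, end), key=lambda i: x[i])
        out.append(x[idx])
        argmax.append(idx)
    return out, argmax


def max_pool_1d_backward(grad_out, argmax, n):
    """Backward pass: route each output gradient to the saved argmax position."""
    grad_in = [0.0] * n
    for g, idx in zip(grad_out, argmax):
        grad_in[idx] += g  # += because bins may overlap and share a maximum
    return grad_in


x = [1.0, 5.0, 2.0, 7.0, 3.0]
out, argmax = max_pool_1d_with_argmax(x, [(0, 2), (2, 5)])
print(out, argmax)                                       # [5.0, 7.0] [1, 3]
print(max_pool_1d_backward([1.0, 2.0], argmax, len(x)))  # [0.0, 1.0, 0.0, 2.0, 0.0]
```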

[GitHub] [incubator-mxnet] ChaiBapchya commented on issue #15516: Fix memory leak reported by ASAN in NNVM to ONNX conversion

ChaiBapchya commented on issue #15516: Fix memory leak reported by ASAN in NNVM to ONNX conversion URL: https://github.com/apache/incubator-mxnet/pull/15516#issuecomment-511069536 Gotcha! Makes sense!

[GitHub] [incubator-mxnet] larroy edited a comment on issue #14894: Accelerate ROIPooling layer

larroy edited a comment on issue #14894: Accelerate ROIPooling layer URL: https://github.com/apache/incubator-mxnet/pull/14894#issuecomment-511066311 This is great! Is the performance increase only due to the type changes mentioned in the description?

[GitHub] [incubator-mxnet] larroy commented on issue #14894: Accelerate ROIPooling layer

larroy commented on issue #14894: Accelerate ROIPooling layer URL: https://github.com/apache/incubator-mxnet/pull/14894#issuecomment-511066311 This is great, is the performance increase only due to type changes as in the description?

[GitHub] [incubator-mxnet] KellenSunderland opened a new pull request #15527: Small typo fixes in batch_norm-inl.h

KellenSunderland opened a new pull request #15527: Small typo fixes in batch_norm-inl.h URL: https://github.com/apache/incubator-mxnet/pull/15527 ## Description ## Small typo fixes in batch_norm-inl.h

[incubator-mxnet] branch KellenSunderland-patch-2 created (now 6c38f3e)

This is an automated email from the ASF dual-hosted git repository. kellen pushed a change to branch KellenSunderland-patch-2 in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git. at 6c38f3e Small typo fixes in batch_norm-inl.h No new revisions were added by this update.

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15490: Utility to help developers debug operators: Tensor Inspector

ChaiBapchya commented on a change in pull request #15490: Utility to help developers debug operators: Tensor Inspector

URL: https://github.com/apache/incubator-mxnet/pull/15490#discussion_r303175784

##

File path: src/common/tensor_inspector.h

##

@@ -0,0 +1,763 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+/*!

+ * Copyright (c) 2019 by Contributors

+ * \file tensor_inspector.h

+ * \brief utility to inspect tensor objects

+ * \author Zhaoqi Zhu

+ */

+

+#ifndef MXNET_COMMON_TENSOR_INSPECTOR_H_

+#define MXNET_COMMON_TENSOR_INSPECTOR_H_

+

+#include

+#include

+#include

+#include

+#include

+#include "../../3rdparty/mshadow/mshadow/base.h"

+#include "../../tests/cpp/include/test_util.h"

+

+namespace mxnet {

+

+/*!

+ * \brief this singleton struct mediates individual TensorInspector objects

+ * so that we can control the global behavior from each of them

+ */

+struct InspectorManager {

+ static InspectorManager* get() {

+static std::mutex mtx;

+static std::unique_ptr<InspectorManager> im = nullptr;

+if (!im) {

+ std::unique_lock<std::mutex> lk(mtx);

+ if (!im)

+im = std::make_unique<InspectorManager>();

+}

+return im.get();

+ }

+ /* !\brief mutex used to lock interactive_print() and check_value() */

+ std::mutex mutex_;

+ /* !\brief skip all interactive prints */

+ bool interactive_print_skip_all_ = false;

+ /* !\brief skip all value checks */

+ bool check_value_skip_all_ = false;

+ /* !\brief visit count for interactive print tags */

+ std::unordered_map interactive_print_tag_counter_;

+ /* !\brief visit count for check value tags */

+ std::unordered_map check_value_tag_counter_;

+ /* !\brief visit count for dump value tags */

+ std::unordered_map dump_to_file_tag_counter_;

+};

+

+/*!

+ * \brief Enum for building value checkers for TensorInspector::check_value()

+ */

+enum CheckerType {

+ NegativeChecker, // check if is negative

+ PositiveChecker, // check if is positive

+ ZeroChecker, // check if is zero

+ NaNChecker, // check if is NaN, will always return false if DType is not a float type

+ InfChecker, // check if is infinity, will always return false if DType is not a float type

+ PositiveInfChecker, // check if is positive infinity,

+ // will always return false if DType is not a float type

+ NegativeInfChecker, // check if is negative infinity,

+ // will always return false if DType is not a float type

+ FiniteChecker, // check if is finite, will always return false if DType is not a float type

+ NormalChecker, // check if is neither infinity nor NaN

+ AbnormalChecker, // check if is infinity or nan

+};

+

+/**

+ * ___ _ _

+ * |__ __||_ _| | |

+ *| | ___ _ __ ___ ___ _ __| | _ __ ___ _ __ ___ ___| |_ ___ _ __

+ *| |/ _ \ '_ \/ __|/ _ \| '__| | | '_ \/ __| '_ \ / _ \/ __| __/ _ \| '__|

+ *| | __/ | | \__ \ (_) | | _| |_| | | \__ \ |_) | __/ (__| || (_) | |

+ *|_|\___|_| |_|___/\___/|_||_|_| |_|___/ .__/ \___|\___|\__\___/|_|

+ * | |

+ * |_|

+ */

+

+/*!

+ * \brief This class provides a unified interface to inspect the value of all data types

+ * including Tensor, TBlob, and NDArray. If the tensor resides on GPU, then it will be

+ * copied from GPU memory back to CPU memory to be operated on. Internally, all data types

+ * are stored as a TBlob object tb_.

+ */

+class TensorInspector {

+ private:

+ /*!

+ * \brief generate the tensor info, including data type and shape

+ * \tparam DType the data type

+ * \tparam StreamType the type of the stream object

+ * \param os stream object to output to

+ */

+ template

+ void tensor_info_to_string(StreamType* os) {

+const int dimension = tb_.ndim();

+*os << "<" << typeid(tb_.dptr()[0]).name() << " Tensor ";

+*os << tb_.shape_[0];

+for (int i = 1; i < dimension; ++i) {

+ *os << 'x' << tb_.shape_[i];

+}

+*os << ">" << std::endl;

+ }

+

+ /*!

+ * \brief output the tensor
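The `InspectorManager::get()` quoted above creates one shared object lazily behind a mutex. The sketch below shows the same idea in self-contained form: a single process-wide object that keeps a visit count for each tag and guards it with a mutex. It is not the code under review. `TagCounterRegistry` and `visit()` are hypothetical names. The sketch also replaces the lazily created `std::unique_ptr` with a function-local static. Since C++11, such a static is guaranteed to be initialized exactly once, even when several threads call `get()` at the same time. This also means no pointer is read outside the lock.

```c++
#include <mutex>
#include <string>
#include <unordered_map>

// Hypothetical stand-in for InspectorManager: one process-wide object that
// keeps a visit count per tag, shared by every caller.
class TagCounterRegistry {
 public:
  static TagCounterRegistry& get() {
    // Since C++11, initialization of a function-local static is thread-safe:
    // concurrent first calls wait until exactly one construction finishes.
    static TagCounterRegistry instance;
    return instance;
  }

  // Increment and return the visit count for `tag` (1 on the first visit).
  int visit(const std::string& tag) {
    std::lock_guard<std::mutex> lock(mutex_);
    return ++counter_[tag];
  }

  TagCounterRegistry(const TagCounterRegistry&) = delete;
  TagCounterRegistry& operator=(const TagCounterRegistry&) = delete;

 private:
  TagCounterRegistry() = default;
  std::mutex mutex_;                               // guards counter_
  std::unordered_map<std::string, int> counter_;  // tag -> visits so far
};
```

Every caller that goes through `get()` sees the same counters. A tag visited from two different call sites therefore keeps one running count.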

[GitHub] [incubator-mxnet] zhreshold commented on issue #14894: Accelerate ROIPooling layer

zhreshold commented on issue #14894: Accelerate ROIPooling layer URL: https://github.com/apache/incubator-mxnet/pull/14894#issuecomment-511060877 Would be nice to apply to ROIAlign similarly since GluonCV have transitioned to use ROIAlign in recent networks.

[incubator-mxnet] branch master updated (2565fa2 -> 9c5acb4)

This is an automated email from the ASF dual-hosted git repository. sxjscience pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git. from 2565fa2 fix nightly CI failure (#15452) add 9c5acb4 Accelerate ROIPooling layer (#14894) No new revisions were added by this update. Summary of changes:

 src/operator/roi_pooling-inl.h |  11 +++-
 src/operator/roi_pooling.cc    | 128 ++---
 src/operator/roi_pooling.cu    | 127 ++--
 3 files changed, 66 insertions(+), 200 deletions(-)

[GitHub] [incubator-mxnet] sxjscience merged pull request #14894: Accelerate ROIPooling layer

sxjscience merged pull request #14894: Accelerate ROIPooling layer URL: https://github.com/apache/incubator-mxnet/pull/14894

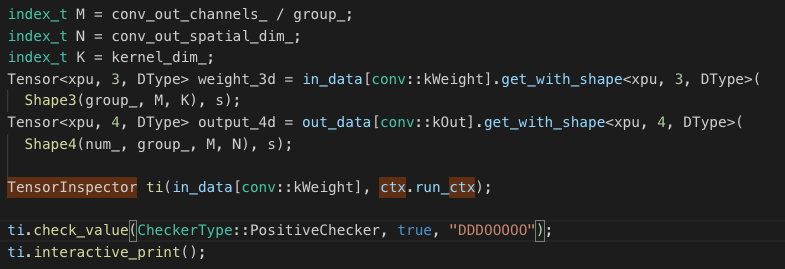

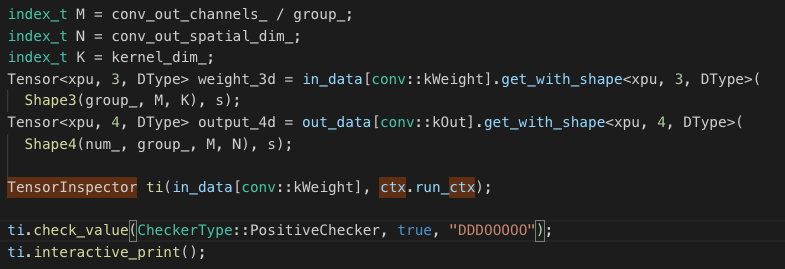

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303174181 ## File path: docs/faq/tensor_inspector_tutorial.md ## @@ -0,0 +1,164 @@ + + + + + + + + + + + + + + + +# Use TensorInspector to Help Debug Operators + +## Introduction + +When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors. + + +## Usage + +This utility locates in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then. + +The screenshot below shows a sample usage in `src/operator/nn/convolution-inl.h`. + + Review comment: Would be great if you could give the image a recognizable name

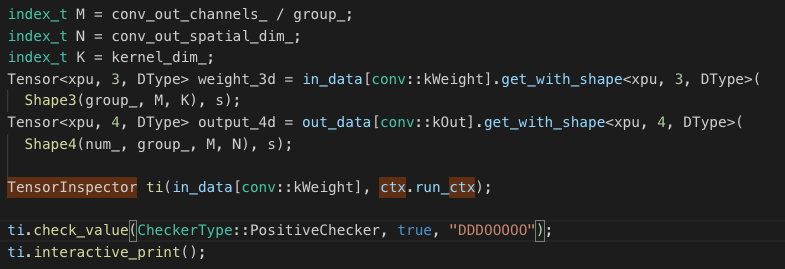

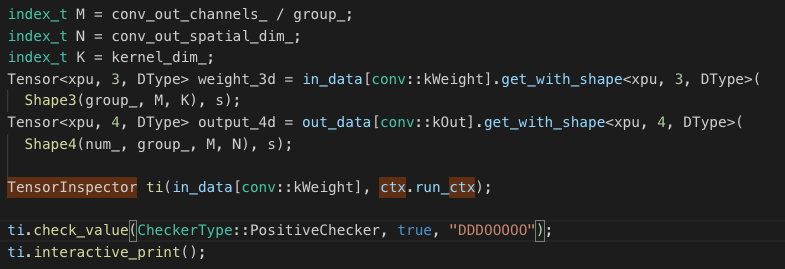

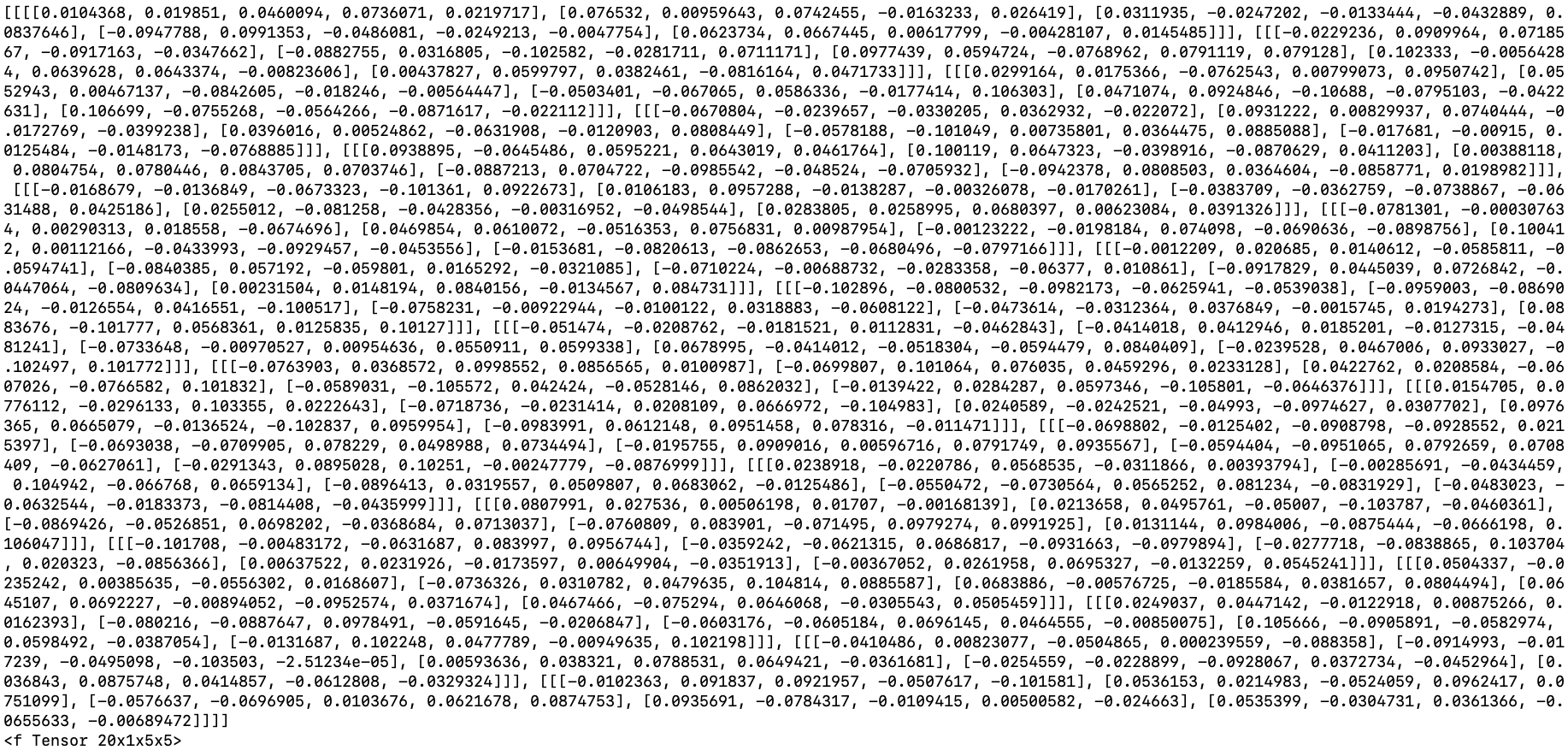

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303174854

##

File path: docs/faq/tensor_inspector_tutorial.md

##

@@ -0,0 +1,164 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+# Use TensorInspector to Help Debug Operators

+

+## Introduction

+

+When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors.

+

+

+## Usage

+

+This utility locates in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then.

+

+The screenshot below shows a sample usage in `src/operator/nn/convolution-inl.h`.

+

+

+

+

+## Functionalities/APIs

+

+### Create a TensorInspector Object from Tensor, TBlob, and NDArray Objects

+

+You can create a `TensorInspector` object by passing in two things: 1) an object of type `Tensor`, `TBlob`, or `NDArray`, and 2) an `RunContext` object.

+

+Essentially, `TensorInspector` can be understood as a wrapper class around `TBlob`. Internally, the `Tensor`, `TBlob`, or `NDArray` object that you passed in will all be converted to a `TBlob` object. The `RunContext` object is used when the tensor is a GPU tensor; in such case, we need to use the context information to copy the data from GPU memory to CPU/main memory.

+

+Below are the three constructors:

+

+```c++

+// Construct from Tensor object

+template

+TensorInspector(const mshadow::Tensor& ts, const RunContext& ctx);

+

+// Construct from TBlob object

+TensorInspector(const TBlob& tb, const RunContext& ctx);

+

+// Construct from NDArray object

+TensorInspector(const NDArray& arr, const RunContext& ctx);

+```

+

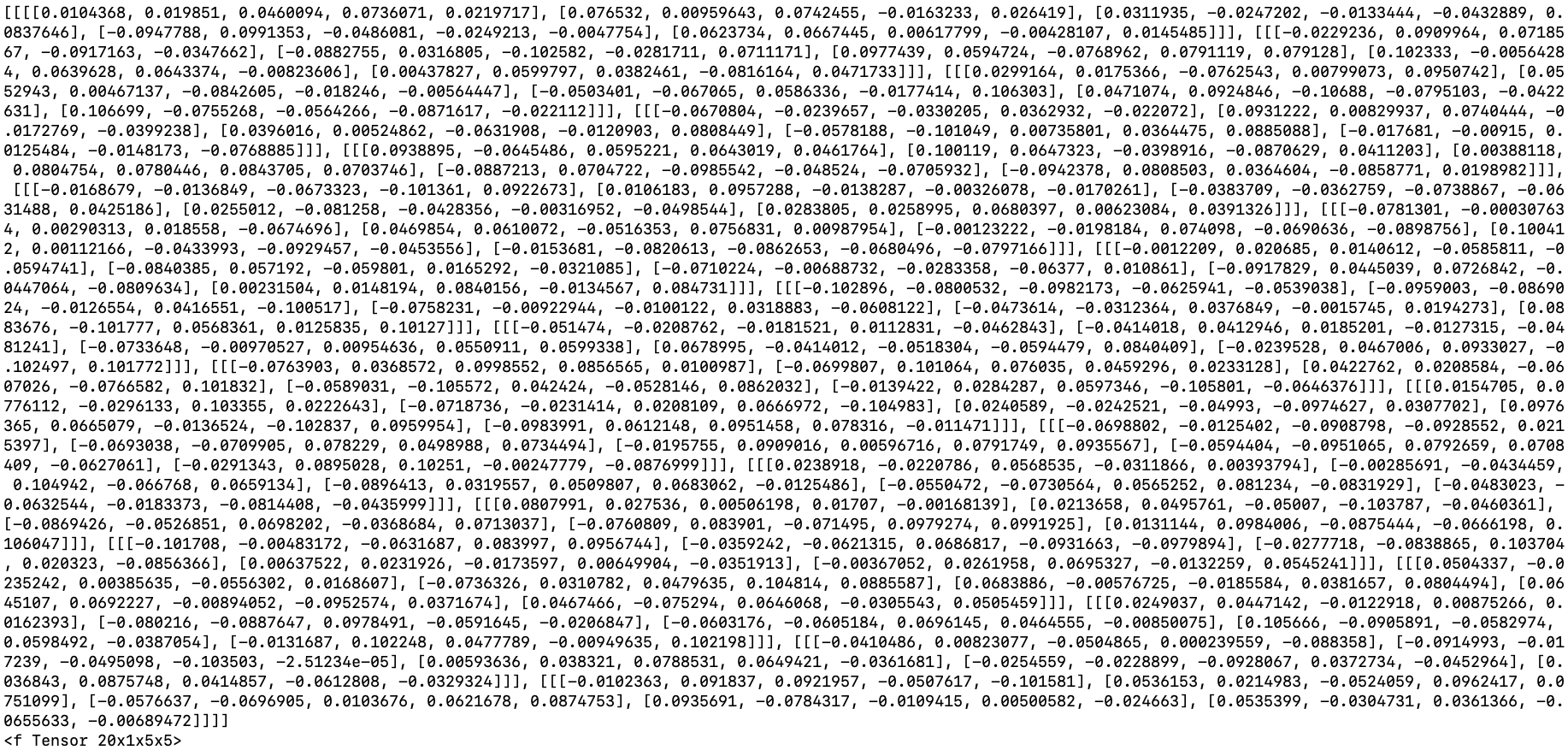

+### Print Tensor Value (Static)

+

+To print out the tensor value in a nicely structured way, you can use this API:

+

+```c++

+void print_string();

+```

+

+This API will print the entire tensor to `std::cout` and preserve the shape (it supports all dimensions from 1 and up). You can copy the output and interpret it with any `JSON` loader. Also, on the last line of the output you can find some useful information about the tensor. Refer to the case below, we are able to know that this is a float-typed tensor with shape 20x1x5x5.

+

+

+

+If instead of printing the tensor to `std::cout`, you just need a `string`, you can use this API:

+```c++

+std::string to_string();

+```

+

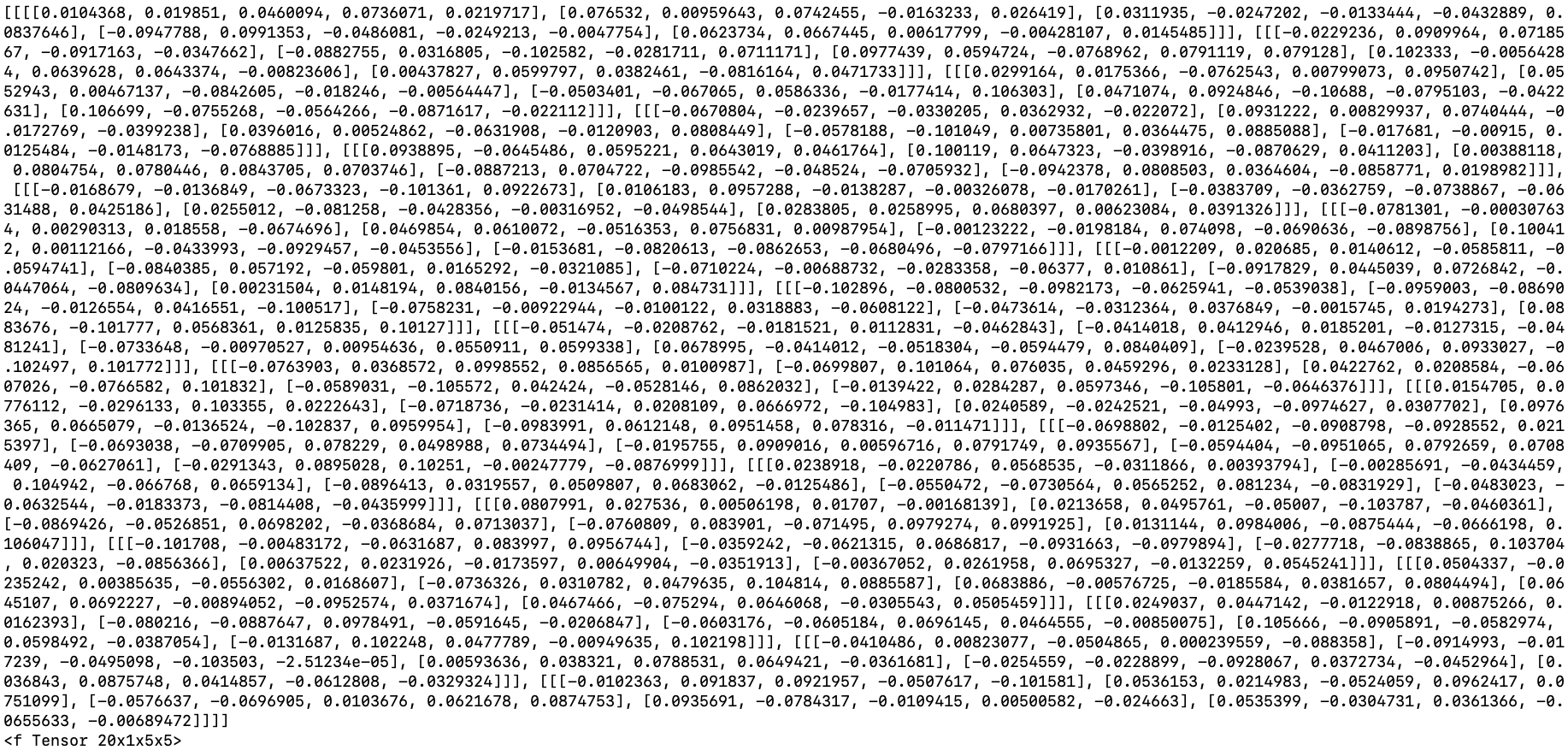

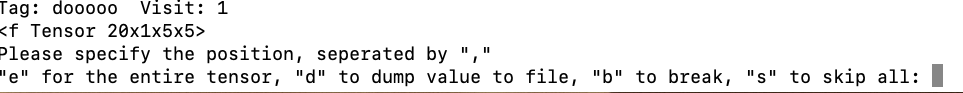

+### Interactively Print Tensor Value (Dynamic)

+

+When debugging, situations might occur that at compilation time, you do not know which part of a tensor to inspect. Also, sometimes, it would be nice to pause the operator control flow to “zoom into” a specific, erroneous part of a tensor multiple times until you are satisfied. In this regard, you can use this API to interactively inspect the tensor:

+

+```c++

+void interactive_print(std::string tag = "");

+```

+

+This API will set a "break point" in your code, so that you will enter a loop that will keep asking you for further command. In the API call, `tag` is an optional parameter to give the call a name, so that you can identify it when you have multiple `interactive_print()` calls in different parts of your code. A visit count will tell you for how many times have you stepped into this particular "break point", should this operator be called more than once. Note that all `interactive_print()` calls are properly locked, so you can use it in many different places without issues.

+

+

+

+Refer the screenshot above, there are many useful commands available: you can type "e" to print out the entire tensor, "d" to dump the tensor to file (see below), "b" to break from this command loop, and "s" to skip all future `interactive_print()`. Most importantly, in this screen, you can specify a part of the tensor that you are particularly interested in and want to print out. For example, for this 20x1x5x5 tensor, you can type in "0, 0" and press enter to check the sub-tensor with shape 5x5 at coordinate (0, 0).

+

+### Check Tensor Value

+

+Sometimes, developers might want to check if the tensor contains unexpected values which could be negative
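The quoted `print_string()` paragraph says the output keeps the tensor's shape as nested brackets that a JSON loader can read. It also ends with a line giving the element type and shape. In the `tensor_inspector.h` diff from PR #15490, `tensor_info_to_string()` writes that line as `<`, the type name, ` Tensor `, the dimensions joined by `x`, and `>`. Below is a minimal sketch of that formatting for a dense row-major `float` buffer that is already in CPU memory. `to_nested_string()` is a hypothetical helper, not the utility's implementation, and the type name is passed in rather than taken from `typeid`.

```c++
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Recursively print dimension `dim` of a row-major buffer, starting at `offset`.
void print_nested(std::ostringstream& os, const std::vector<float>& data,
                  const std::vector<std::size_t>& shape, std::size_t dim,
                  std::size_t offset) {
  // Number of flat elements covered by one step along `dim`.
  std::size_t stride = 1;
  for (std::size_t k = dim + 1; k < shape.size(); ++k) stride *= shape[k];
  os << '[';
  for (std::size_t i = 0; i < shape[dim]; ++i) {
    if (i > 0) os << ", ";
    if (dim + 1 == shape.size()) {
      os << data[offset + i];  // innermost dimension: print the values
    } else {
      print_nested(os, data, shape, dim + 1, offset + i * stride);
    }
  }
  os << ']';
}

// Hypothetical helper: nested brackets, then an info line such as
// "<float Tensor 20x1x5x5>". `shape` must have at least one dimension and
// its product must equal data.size().
std::string to_nested_string(const std::vector<float>& data,
                             const std::vector<std::size_t>& shape,
                             const std::string& type_name) {
  std::ostringstream os;
  print_nested(os, data, shape, 0, 0);
  os << '\n' << '<' << type_name << " Tensor ";
  for (std::size_t d = 0; d < shape.size(); ++d) {
    if (d > 0) os << 'x';
    os << shape[d];
  }
  os << '>';
  return os.str();
}
```

For a 2x3 tensor holding 0 to 5, this sketch produces `[[0, 1, 2], [3, 4, 5]]` followed by `<float Tensor 2x3>` on the next line.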

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303174924

##

File path: docs/faq/tensor_inspector_tutorial.md

##

@@ -0,0 +1,164 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+# Use TensorInspector to Help Debug Operators

+

+## Introduction

+

+When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors.

+

+

+## Usage

+

+This utility locates in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then.

+

+The screenshot below shows a sample usage in `src/operator/nn/convolution-inl.h`.

+

+

+

+

+## Functionalities/APIs

+

+### Create a TensorInspector Object from Tensor, TBlob, and NDArray Objects

+

+You can create a `TensorInspector` object by passing in two things: 1) an object of type `Tensor`, `TBlob`, or `NDArray`, and 2) an `RunContext` object.

+

+Essentially, `TensorInspector` can be understood as a wrapper class around `TBlob`. Internally, the `Tensor`, `TBlob`, or `NDArray` object that you passed in will all be converted to a `TBlob` object. The `RunContext` object is used when the tensor is a GPU tensor; in such case, we need to use the context information to copy the data from GPU memory to CPU/main memory.

+

+Below are the three constructors:

+

+```c++

+// Construct from Tensor object

+template

+TensorInspector(const mshadow::Tensor& ts, const RunContext& ctx);

+

+// Construct from TBlob object

+TensorInspector(const TBlob& tb, const RunContext& ctx);

+

+// Construct from NDArray object

+TensorInspector(const NDArray& arr, const RunContext& ctx);

+```

+

+### Print Tensor Value (Static)

+

+To print out the tensor value in a nicely structured way, you can use this API:

+

+```c++

+void print_string();

+```

+

+This API will print the entire tensor to `std::cout` and preserve the shape (it supports all dimensions from 1 and up). You can copy the output and interpret it with any `JSON` loader. Also, on the last line of the output you can find some useful information about the tensor. Refer to the case below, we are able to know that this is a float-typed tensor with shape 20x1x5x5.

+

+

+

+If instead of printing the tensor to `std::cout`, you just need a `string`, you can use this API:

+```c++

+std::string to_string();

+```

+

+### Interactively Print Tensor Value (Dynamic)

+

+When debugging, situations might occur that at compilation time, you do not know which part of a tensor to inspect. Also, sometimes, it would be nice to pause the operator control flow to “zoom into” a specific, erroneous part of a tensor multiple times until you are satisfied. In this regard, you can use this API to interactively inspect the tensor:

+

+```c++

+void interactive_print(std::string tag = "");

+```

+

+This API will set a "break point" in your code, so that you will enter a loop that will keep asking you for further command. In the API call, `tag` is an optional parameter to give the call a name, so that you can identify it when you have multiple `interactive_print()` calls in different parts of your code. A visit count will tell you for how many times have you stepped into this particular "break point", should this operator be called more than once. Note that all `interactive_print()` calls are properly locked, so you can use it in many different places without issues.

+

+

+

+Refer the screenshot above, there are many useful commands available: you can type "e" to print out the entire tensor, "d" to dump the tensor to file (see below), "b" to break from this command loop, and "s" to skip all future `interactive_print()`. Most importantly, in this screen, you can specify a part of the tensor that you are particularly interested in and want to print out. For example, for this 20x1x5x5 tensor, you can type in "0, 0" and press enter to check the sub-tensor with shape 5x5 at coordinate (0, 0).

+

+### Check Tensor Value

+

+Sometimes, developers might want to check if the tensor contains unexpected values which could be negative
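The interactive mode quoted above lets you type a coordinate prefix such as "0, 0" to view the 5x5 sub-tensor of a 20x1x5x5 tensor. Assuming a dense row-major layout, this takes two steps:

1. Compute the flat offset of the prefix from the row-major strides.
2. Keep the remaining trailing dimensions as the sub-tensor's shape.

Below is a minimal sketch. `select_sub_tensor()` and `SubTensor` are hypothetical names, and the real utility's input parsing may differ.

```c++
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical result type: where the selected sub-tensor starts in the flat
// buffer, and which (trailing) dimensions it still has.
struct SubTensor {
  std::size_t offset;
  std::vector<std::size_t> shape;
};

// Hypothetical helper: parse a prefix like "0, 0" and locate that sub-tensor
// inside a dense row-major tensor of the given shape.
SubTensor select_sub_tensor(const std::vector<std::size_t>& shape,
                            const std::string& coords_text) {
  std::vector<std::size_t> coords;
  std::stringstream ss(coords_text);
  std::string item;
  while (std::getline(ss, item, ',')) {
    // std::stoul skips leading whitespace, so " 0" parses as 0.
    coords.push_back(static_cast<std::size_t>(std::stoul(item)));
  }
  if (coords.size() > shape.size()) {
    throw std::out_of_range("more coordinates than dimensions");
  }
  std::size_t offset = 0;
  for (std::size_t d = 0; d < coords.size(); ++d) {
    if (coords[d] >= shape[d]) {
      throw std::out_of_range("coordinate out of bounds");
    }
    // Row-major stride of dimension d = product of all later dimensions.
    std::size_t stride = 1;
    for (std::size_t k = d + 1; k < shape.size(); ++k) stride *= shape[k];
    offset += coords[d] * stride;
  }
  std::vector<std::size_t> rest(
      shape.begin() + static_cast<std::ptrdiff_t>(coords.size()), shape.end());
  return {offset, rest};
}
```

For shape 20x1x5x5, "0, 0" gives offset 0 and shape 5x5. "3, 0" gives offset 75, because each step along the first dimension spans 1 * 5 * 5 = 25 elements.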

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303174371 ## File path: docs/faq/tensor_inspector_tutorial.md ## @@ -0,0 +1,164 @@ + + + + + + + + + + + + + + + +# Use TensorInspector to Help Debug Operators + +## Introduction + +When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors. + + +## Usage + +This utility locates in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then. + +The screenshot below shows a sample usage in `src/operator/nn/convolution-inl.h`. + + + + +## Functionalities/APIs + +### Create a TensorInspector Object from Tensor, TBlob, and NDArray Objects + +You can create a `TensorInspector` object by passing in two things: 1) an object of type `Tensor`, `TBlob`, or `NDArray`, and 2) an `RunContext` object. + +Essentially, `TensorInspector` can be understood as a wrapper class around `TBlob`. Internally, the `Tensor`, `TBlob`, or `NDArray` object that you passed in will all be converted to a `TBlob` object. The `RunContext` object is used when the tensor is a GPU tensor; in such case, we need to use the context information to copy the data from GPU memory to CPU/main memory. Review comment: ```suggestion Essentially, `TensorInspector` can be understood as a wrapper class around `TBlob`. Internally, the `Tensor`, `TBlob`, or `NDArray` object that you passed in will be converted to a `TBlob` object. 
The `RunContext` object is used when the tensor is a GPU tensor; in such a case, we need to use the context information to copy the data from GPU memory to CPU/main memory. ```

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303173725 ## File path: docs/faq/tensor_inspector_tutorial.md ## @@ -0,0 +1,164 @@ + + + + + + + + + + + + + + + +# Use TensorInspector to Help Debug Operators + +## Introduction + +When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors. + + +## Usage + +This utility locates in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then. Review comment: ```suggestion This utility is located in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then. ```

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303173914 ## File path: docs/faq/tensor_inspector_tutorial.md ## @@ -0,0 +1,164 @@ + + + + + + + + + + + + + + + +# Use TensorInspector to Help Debug Operators + +## Introduction + +When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors. Review comment: ```suggestion When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, this utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors. ```

[GitHub] [incubator-mxnet] ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial

ChaiBapchya commented on a change in pull request #15517: [WIP]Tensor Inspector Tutorial URL: https://github.com/apache/incubator-mxnet/pull/15517#discussion_r303174576 ## File path: docs/faq/tensor_inspector_tutorial.md ## @@ -0,0 +1,164 @@ + + + + + + + + + + + + + + + +# Use TensorInspector to Help Debug Operators + +## Introduction + +When developing new operators, developers need to deal with tensor objects extensively. This new utility, Tensor Inspector, mainly aims to help developers debug by providing unified interfaces to print, check, and dump the tensor value. To developers' convenience, This utility works for all the three data types: Tensors, TBlobs, and NDArrays. Also, it supports both CPU and GPU tensors. + + +## Usage + +This utility locates in `src/common/tensor_inspector.h`. To use it in any operator code, just include `tensor_inspector`, construct an `TensorInspector` object, and call the APIs on that object. You can run any script that uses the operator you just modified then. + +The screenshot below shows a sample usage in `src/operator/nn/convolution-inl.h`. + + + + +## Functionalities/APIs + +### Create a TensorInspector Object from Tensor, TBlob, and NDArray Objects + +You can create a `TensorInspector` object by passing in two things: 1) an object of type `Tensor`, `TBlob`, or `NDArray`, and 2) an `RunContext` object. + +Essentially, `TensorInspector` can be understood as a wrapper class around `TBlob`. Internally, the `Tensor`, `TBlob`, or `NDArray` object that you passed in will all be converted to a `TBlob` object. The `RunContext` object is used when the tensor is a GPU tensor; in such case, we need to use the context information to copy the data from GPU memory to CPU/main memory. 
+ +Below are the three constructors: + +```c++ +// Construct from Tensor object +template +TensorInspector(const mshadow::Tensor& ts, const RunContext& ctx); + +// Construct from TBlob object +TensorInspector(const TBlob& tb, const RunContext& ctx); + +// Construct from NDArray object +TensorInspector(const NDArray& arr, const RunContext& ctx); +``` + +### Print Tensor Value (Static) + +To print out the tensor value in a nicely structured way, you can use this API: + +```c++ +void print_string(); +``` + +This API will print the entire tensor to `std::cout` and preserve the shape (it supports all dimensions from 1 and up). You can copy the output and interpret it with any `JSON` loader. Also, on the last line of the output you can find some useful information about the tensor. Refer to the case below, we are able to know that this is a float-typed tensor with shape 20x1x5x5. + + Review comment: likewise

[GitHub] [incubator-mxnet] sxjscience commented on issue #14894: Accelerate ROIPooling layer

sxjscience commented on issue #14894: Accelerate ROIPooling layer URL: https://github.com/apache/incubator-mxnet/pull/14894#issuecomment-511059573 I think it should be good. One concern is it may be backward incompatible due to the `atomicAdd`. Although I feel it's reasonable to use the faster version, I need to confirm with the GluonCV team whether it will break the current training scripts.
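One plausible reading of the `atomicAdd` concern is this. When several GPU threads add gradients into the same `in_grad` element, the additions happen in whatever order the threads are scheduled. Floating-point addition is not associative, so the accumulated value can differ in the last bits from the earlier sequential loop, and possibly from run to run. The sketch below only illustrates that order dependence on the CPU. `accumulate_in_order()` is a hypothetical helper, and the sketch assumes IEEE 754 single precision with no excess intermediate precision, which is typical of x86-64 (SSE) and ARM builds.

```c++
#include <vector>

// Add contributions one at a time into a single float slot, rounding after
// every addition, in the order given. A series of atomicAdd calls into one
// address behaves like this loop, except that thread scheduling, not the
// vector, decides the order.
float accumulate_in_order(const std::vector<float>& contributions) {
  float acc = 0.0f;
  for (float v : contributions) {
    acc += v;
  }
  return acc;
}
```

Near 1e8, adjacent `float` values are 8 apart. So in the order {1e8f, -1e8f, 1.0f} the result is 1, while in the order {1.0f, 1e8f, -1e8f} the 1.0f is absorbed by rounding and the result is 0.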

[GitHub] [incubator-mxnet] sxjscience commented on a change in pull request #14894: Accelerate ROIPooling layer

sxjscience commented on a change in pull request #14894: Accelerate ROIPooling layer

URL: https://github.com/apache/incubator-mxnet/pull/14894#discussion_r303173596

##

File path: src/operator/roi_pooling.cc

##

@@ -137,91 +140,18 @@ template

inline void ROIPoolBackwardAcc(const Tensor _grad,

const Tensor _grad,

const Tensor ,

- const Tensor _idx,

+ const Tensor _idx,

const float spatial_scale_) {

const Dtype *top_diff = out_grad.dptr_;

- const Dtype *bottom_rois = bbox.dptr_;

Dtype *bottom_diff = in_grad.dptr_;

- Dtype *argmax_data = max_idx.dptr_;

-

- const int batch_size_ = in_grad.size(0);

- const int channels_ = in_grad.size(1);

- const int height_ = in_grad.size(2);

- const int width_ = in_grad.size(3);

- const int pooled_height_ = out_grad.size(2);

- const int pooled_width_ = out_grad.size(3);

-

- const int num_rois = bbox.size(0);

-

- for (int b = 0; b < batch_size_; ++b) {

-for (int c = 0; c < channels_; ++c) {

- for (int h = 0; h < height_; ++h) {

-for (int w = 0; w < width_; ++w) {

- int offset_bottom_diff = (b * channels_ + c) * height_ * width_;

- offset_bottom_diff += h * width_ + w;

-

- Dtype gradient = 0;

- // Accumulate gradient over all ROIs that pooled this element

- for (int roi_n = 0; roi_n < num_rois; ++roi_n) {

-const Dtype* offset_bottom_rois = bottom_rois + roi_n * 5;

-int roi_batch_ind = offset_bottom_rois[0];

-assert(roi_batch_ind >= 0);

-assert(roi_batch_ind < batch_size_);

-if (b != roi_batch_ind) {

- continue;

-}

+ index_t *argmax_data = max_idx.dptr_;

-int roi_start_w = std::round(offset_bottom_rois[1] * spatial_scale_);

-int roi_start_h = std::round(offset_bottom_rois[2] * spatial_scale_);

-int roi_end_w = std::round(offset_bottom_rois[3] * spatial_scale_);

-int roi_end_h = std::round(offset_bottom_rois[4] * spatial_scale_);

+ const index_t count = out_grad.shape_.Size();

-bool in_roi = (w >= roi_start_w && w <= roi_end_w &&

- h >= roi_start_h && h <= roi_end_h);

-if (!in_roi) {

- continue;

-}

-

-// force malformed ROIs to be 1 * 1

-int roi_height = max(roi_end_h - roi_start_h + 1, 1);

-int roi_width = max(roi_end_w - roi_start_w + 1, 1);

-const Dtype bin_size_h = static_cast(roi_height)

- / static_cast(pooled_height_);

-const Dtype bin_size_w = static_cast(roi_width)

- / static_cast(pooled_width_);

-

-// compute pooled regions correspond to original (h, w) point

-int phstart = static_cast(floor(static_cast(h - roi_start_h)

- / bin_size_h));

-int pwstart = static_cast(floor(static_cast(w - roi_start_w)

- / bin_size_w));

-int phend = static_cast(ceil(static_cast(h - roi_start_h + 1)

- / bin_size_h));

-int pwend = static_cast(ceil(static_cast(w - roi_start_w + 1)

- / bin_size_w));

-

-// clip to boundaries of pooled region

-phstart = min(max(phstart, 0), pooled_height_);

-phend = min(max(phend, 0), pooled_height_);

-pwstart = min(max(pwstart, 0), pooled_width_);

-pwend = min(max(pwend, 0), pooled_width_);

-

-// accumulate over gradients in pooled regions

-int offset = (roi_n * channels_ + c) * pooled_height_ * pooled_width_;

-const Dtype* offset_top_diff = top_diff + offset;

-const Dtype* offset_argmax_data = argmax_data + offset;

-for (int ph = phstart; ph < phend; ++ph) {

- for (int pw = pwstart; pw < pwend; ++pw) {

-const int pooled_index = ph * pooled_width_ + pw;

-if (static_cast<int>(offset_argmax_data[pooled_index]) == h * width_ + w) {

- gradient += offset_top_diff[pooled_index];

-}

- }

-}

- }

- bottom_diff[offset_bottom_diff] += gradient;

-}

- }

+ for (int index = 0; index < count; ++index) {

+index_t max_idx = argmax_data[index];

+if (max_idx >= 0) {

+ bottom_diff[max_idx] += top_diff[index];

Review comment:

It's correct, sorry for the misreading.
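For readers following this thread: the new loop turns the backward pass into a scatter over the argmax indices saved by the forward pass, instead of the removed per-input gather over every ROI. Below is a minimal sketch of both forms. It assumes, as the new `bottom_diff[max_idx]` indexing implies, that each argmax entry is a flat index into the whole input-gradient buffer and that a negative value marks an empty bin. `backward_scatter`, `backward_gather` and the `index_t` alias are hypothetical names for illustration, not MXNet code. The gather version keeps only the argmax-matching step of the old loop and drops the ROI geometry it used to prune the search.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-in for the index_t type used in the diff; signed 64-bit is an assumption.
using index_t = std::int64_t;

// Scatter form (like the new loop): each pooled output cell routes its
// gradient to the single input element that won the max in the forward pass.
// argmax[i] is assumed to be a flat index into bottom_diff, or -1 for an empty bin.
void backward_scatter(const std::vector<float>& top_diff,
                      const std::vector<index_t>& argmax,
                      std::vector<float>& bottom_diff) {
  for (std::size_t i = 0; i < top_diff.size(); ++i) {
    const index_t src = argmax[i];
    if (src >= 0) {
      assert(static_cast<std::size_t>(src) < bottom_diff.size());
      bottom_diff[static_cast<std::size_t>(src)] += top_diff[i];
    }
  }
}

// Gather form (the idea behind the removed loop, minus the ROI geometry):
// each input element sums the gradients of every pooled cell that selected it.
void backward_gather(const std::vector<float>& top_diff,
                     const std::vector<index_t>& argmax,
                     std::vector<float>& bottom_diff) {
  for (std::size_t j = 0; j < bottom_diff.size(); ++j) {
    float g = 0.0f;
    for (std::size_t i = 0; i < top_diff.size(); ++i) {
      if (argmax[i] == static_cast<index_t>(j)) g += top_diff[i];
    }
    bottom_diff[j] += g;
  }
}
```

On the same inputs both functions should yield the same gradients. The scatter makes one pass over the pooled output, while the gather compares every input element against every pooled cell, which is why the rewrite is so much cheaper.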

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the

[GitHub] [incubator-mxnet] sxjscience commented on a change in pull request #14894: Accelerate ROIPooling layer

sxjscience commented on a change in pull request #14894: Accelerate ROIPooling layer

URL: https://github.com/apache/incubator-mxnet/pull/14894#discussion_r303173424

##

File path: src/operator/roi_pooling.cc

##

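@@ -137,91 +140,18 @@ template<typename Dtype>

inline void ROIPoolBackwardAcc(const Tensor<cpu, 4, Dtype> &in_grad,

const Tensor<cpu, 4, Dtype> &out_grad,

const Tensor<cpu, 2, Dtype> &bbox,

- const Tensor<cpu, 4, Dtype> &max_idx,

+ const Tensor<cpu, 4, index_t> &max_idx,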

const float spatial_scale_) {

const Dtype *top_diff = out_grad.dptr_;

- const Dtype *bottom_rois = bbox.dptr_;

Dtype *bottom_diff = in_grad.dptr_;

- Dtype *argmax_data = max_idx.dptr_;

-

- const int batch_size_ = in_grad.size(0);

- const int channels_ = in_grad.size(1);

- const int height_ = in_grad.size(2);

- const int width_ = in_grad.size(3);

- const int pooled_height_ = out_grad.size(2);

- const int pooled_width_ = out_grad.size(3);

-

- const int num_rois = bbox.size(0);

-

- for (int b = 0; b < batch_size_; ++b) {

-for (int c = 0; c < channels_; ++c) {

- for (int h = 0; h < height_; ++h) {

-for (int w = 0; w < width_; ++w) {

- int offset_bottom_diff = (b * channels_ + c) * height_ * width_;

- offset_bottom_diff += h * width_ + w;

-

- Dtype gradient = 0;

- // Accumulate gradient over all ROIs that pooled this element

- for (int roi_n = 0; roi_n < num_rois; ++roi_n) {

-const Dtype* offset_bottom_rois = bottom_rois + roi_n * 5;

-int roi_batch_ind = offset_bottom_rois[0];

-assert(roi_batch_ind >= 0);

-assert(roi_batch_ind < batch_size_);

-if (b != roi_batch_ind) {

- continue;

-}

+ index_t *argmax_data = max_idx.dptr_;

-int roi_start_w = std::round(offset_bottom_rois[1] * spatial_scale_);

-int roi_start_h = std::round(offset_bottom_rois[2] * spatial_scale_);

-int roi_end_w = std::round(offset_bottom_rois[3] * spatial_scale_);

-int roi_end_h = std::round(offset_bottom_rois[4] * spatial_scale_);

+ const index_t count = out_grad.shape_.Size();

-bool in_roi = (w >= roi_start_w && w <= roi_end_w &&

- h >= roi_start_h && h <= roi_end_h);

-if (!in_roi) {

- continue;

-}

-

-// force malformed ROIs to be 1 * 1

-int roi_height = max(roi_end_h - roi_start_h + 1, 1);

-int roi_width = max(roi_end_w - roi_start_w + 1, 1);

-const Dtype bin_size_h = static_cast<Dtype>(roi_height)

- / static_cast<Dtype>(pooled_height_);

-const Dtype bin_size_w = static_cast<Dtype>(roi_width)

- / static_cast<Dtype>(pooled_width_);

-

-// compute pooled regions correspond to original (h, w) point

-int phstart = static_cast<int>(floor(static_cast<Dtype>(h - roi_start_h)

- / bin_size_h));

-int pwstart = static_cast<int>(floor(static_cast<Dtype>(w - roi_start_w)

- / bin_size_w));

-int phend = static_cast<int>(ceil(static_cast<Dtype>(h - roi_start_h + 1)

- / bin_size_h));

-int pwend = static_cast<int>(ceil(static_cast<Dtype>(w - roi_start_w + 1)

- / bin_size_w));

-

-// clip to boundaries of pooled region

-phstart = min(max(phstart, 0), pooled_height_);

-phend = min(max(phend, 0), pooled_height_);

-pwstart = min(max(pwstart, 0), pooled_width_);

-pwend = min(max(pwend, 0), pooled_width_);

-

-// accumulate over gradients in pooled regions

-int offset = (roi_n * channels_ + c) * pooled_height_ * pooled_width_;

-const Dtype* offset_top_diff = top_diff + offset;

-const Dtype* offset_argmax_data = argmax_data + offset;

-for (int ph = phstart; ph < phend; ++ph) {

- for (int pw = pwstart; pw < pwend; ++pw) {

-const int pooled_index = ph * pooled_width_ + pw;

-if (static_cast<int>(offset_argmax_data[pooled_index]) == h * width_ + w) {

- gradient += offset_top_diff[pooled_index];

-}

- }

-}

- }

- bottom_diff[offset_bottom_diff] += gradient;

-}

- }

+ for (int index = 0; index < count; ++index) {

+index_t max_idx = argmax_data[index];

+if (max_idx >= 0) {

+ bottom_diff[max_idx] += top_diff[index];

Review comment:

~~Is it possible that two threads are writing to the same max_idx?~~


[GitHub] [incubator-mxnet] sxjscience commented on a change in pull request #14894: Accelerate ROIPooling layer

sxjscience commented on a change in pull request #14894: Accelerate ROIPooling layer

URL: https://github.com/apache/incubator-mxnet/pull/14894#discussion_r303173424

##

File path: src/operator/roi_pooling.cc

##

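@@ -137,91 +140,18 @@ template<typename Dtype>

inline void ROIPoolBackwardAcc(const Tensor<cpu, 4, Dtype> &in_grad,

const Tensor<cpu, 4, Dtype> &out_grad,

const Tensor<cpu, 2, Dtype> &bbox,

- const Tensor<cpu, 4, Dtype> &max_idx,

+ const Tensor<cpu, 4, index_t> &max_idx,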

const float spatial_scale_) {

const Dtype *top_diff = out_grad.dptr_;

- const Dtype *bottom_rois = bbox.dptr_;

Dtype *bottom_diff = in_grad.dptr_;

- Dtype *argmax_data = max_idx.dptr_;

-

- const int batch_size_ = in_grad.size(0);

- const int channels_ = in_grad.size(1);

- const int height_ = in_grad.size(2);

- const int width_ = in_grad.size(3);

- const int pooled_height_ = out_grad.size(2);

- const int pooled_width_ = out_grad.size(3);

-

- const int num_rois = bbox.size(0);

-

- for (int b = 0; b < batch_size_; ++b) {

-for (int c = 0; c < channels_; ++c) {

- for (int h = 0; h < height_; ++h) {

-for (int w = 0; w < width_; ++w) {

- int offset_bottom_diff = (b * channels_ + c) * height_ * width_;

- offset_bottom_diff += h * width_ + w;

-

- Dtype gradient = 0;

- // Accumulate gradient over all ROIs that pooled this element

- for (int roi_n = 0; roi_n < num_rois; ++roi_n) {

-const Dtype* offset_bottom_rois = bottom_rois + roi_n * 5;

-int roi_batch_ind = offset_bottom_rois[0];

-assert(roi_batch_ind >= 0);

-assert(roi_batch_ind < batch_size_);

-if (b != roi_batch_ind) {

- continue;

-}

+ index_t *argmax_data = max_idx.dptr_;

-int roi_start_w = std::round(offset_bottom_rois[1] * spatial_scale_);

-int roi_start_h = std::round(offset_bottom_rois[2] * spatial_scale_);

-int roi_end_w = std::round(offset_bottom_rois[3] * spatial_scale_);

-int roi_end_h = std::round(offset_bottom_rois[4] * spatial_scale_);

+ const index_t count = out_grad.shape_.Size();

-bool in_roi = (w >= roi_start_w && w <= roi_end_w &&

- h >= roi_start_h && h <= roi_end_h);

-if (!in_roi) {

- continue;

-}

-

-// force malformed ROIs to be 1 * 1

-int roi_height = max(roi_end_h - roi_start_h + 1, 1);

-int roi_width = max(roi_end_w - roi_start_w + 1, 1);

-const Dtype bin_size_h = static_cast<Dtype>(roi_height)

- / static_cast<Dtype>(pooled_height_);

-const Dtype bin_size_w = static_cast<Dtype>(roi_width)

- / static_cast<Dtype>(pooled_width_);

-

-// compute pooled regions correspond to original (h, w) point

-int phstart = static_cast<int>(floor(static_cast<Dtype>(h - roi_start_h)

- / bin_size_h));

-int pwstart = static_cast<int>(floor(static_cast<Dtype>(w - roi_start_w)

- / bin_size_w));

-int phend = static_cast<int>(ceil(static_cast<Dtype>(h - roi_start_h + 1)

- / bin_size_h));

-int pwend = static_cast<int>(ceil(static_cast<Dtype>(w - roi_start_w + 1)

- / bin_size_w));

-

-// clip to boundaries of pooled region

-phstart = min(max(phstart, 0), pooled_height_);

-phend = min(max(phend, 0), pooled_height_);

-pwstart = min(max(pwstart, 0), pooled_width_);

-pwend = min(max(pwend, 0), pooled_width_);

-

-// accumulate over gradients in pooled regions

-int offset = (roi_n * channels_ + c) * pooled_height_ * pooled_width_;

-const Dtype* offset_top_diff = top_diff + offset;

-const Dtype* offset_argmax_data = argmax_data + offset;

-for (int ph = phstart; ph < phend; ++ph) {

- for (int pw = pwstart; pw < pwend; ++pw) {

-const int pooled_index = ph * pooled_width_ + pw;

-if (static_cast<int>(offset_argmax_data[pooled_index]) == h * width_ + w) {

- gradient += offset_top_diff[pooled_index];

-}

- }

-}

- }

- bottom_diff[offset_bottom_diff] += gradient;

-}

- }

+ for (int index = 0; index < count; ++index) {

+index_t max_idx = argmax_data[index];

+if (max_idx >= 0) {

+ bottom_diff[max_idx] += top_diff[index];

Review comment:

Is it possible that two threads are writing to the same max_idx?
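As shown in this hunk the loop is serial, so the question matters once it is parallelized (for example with OpenMP) or ported to a GPU kernel. Two pooled cells can select the same input element: overlapping ROIs can share a maximum, and adjacent bins of one ROI can overlap because their bounds use floor/ceil. Concurrent `+=` on the same `bottom_diff[max_idx]` would then be a data race. Below is a minimal sketch assuming OpenMP. `backward_scatter_parallel` and the `index_t` alias are hypothetical, not part of this PR.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for the index_t type used in the diff; signed 64-bit is an assumption.
using index_t = std::int64_t;

// Hypothetical parallel variant of the scatter loop. Several iterations may
// target the same bottom_diff element, so each update is made atomic.
// Without -fopenmp the pragmas are ignored and the loop simply runs serially.
void backward_scatter_parallel(const float* top_diff, const index_t* argmax,
                               float* bottom_diff, std::int64_t count) {
  assert(count >= 0);
#pragma omp parallel for
  for (std::int64_t i = 0; i < count; ++i) {
    const index_t src = argmax[i];
    if (src >= 0) {  // negative marks an empty bin: nothing to route
#pragma omp atomic
      bottom_diff[src] += top_diff[i];
    }
  }
}
```

Atomic float additions still leave the summation order unspecified, so with several threads the result can differ in the last bits from run to run. A GPU port would face the same choice between atomic adds and a gather-style kernel.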


[GitHub] [incubator-mxnet] anirudh2290 opened a new pull request #15526: Fix AMP Tutorial warnings and failures