[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating

profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r299308343

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,81 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed

and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs,

MXNet supports [Custom

Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html)

that you can define in Python. In `forward()` and `backward()` of a custom

operator, there are two kinds of code: "pure Python" code (NumPy operators

included) and "sub-operators" (NDArray operators called within `forward()` and

`backward()`). With that said, MXNet can profile the execution time of both

kinds without additional setup. Specifically, the MXNet profiler will break a

single custom operator call into a pure Python event and several sub-operator

events if there are any. Furthermore, all of those events will have a prefix in

their names, which is, conveniently, the name of the custom operator you called.

+

+Let's try profiling custom operators with the following code example:

+

+```python

+

+import mxnet as mx

+from mxnet import nd

+from mxnet import profiler

+

+class MyAddOne(mx.operator.CustomOp):

+def forward(self, is_train, req, in_data, out_data, aux):

+self.assign(out_data[0], req[0], in_data[0]+1)

+

+def backward(self, req, out_grad, in_data, out_data, in_grad, aux):

+self.assign(in_grad[0], req[0], out_grad[0])

+

+@mx.operator.register('MyAddOne')

+class CustomAddOneProp(mx.operator.CustomOpProp):

+def __init__(self):

+super(CustomAddOneProp, self).__init__(need_top_grad=True)

+

+def list_arguments(self):

+return ['data']

+

+def list_outputs(self):

+return ['output']

+

+def infer_shape(self, in_shape):

+return [in_shape[0]], [in_shape[0]], []

+

+def create_operator(self, ctx, shapes, dtypes):

+return MyAddOne()

+

+

+inp = mx.nd.zeros(shape=(500, 500))

+

+profiler.set_config(profile_all=True, continuous_dump = True)

+profiler.set_state('run')

+

+w = nd.Custom(inp, op_type="MyAddOne")

+

+mx.nd.waitall()

+

+profiler.set_state('stop')

+profiler.dump()

+```

+

+Here, we have created a custom operator called `MyAddOne`, and within its

`forward()` function, we simply add one to the input. We can visualize the dump

file in `chrome://tracing/`:

+

+

+

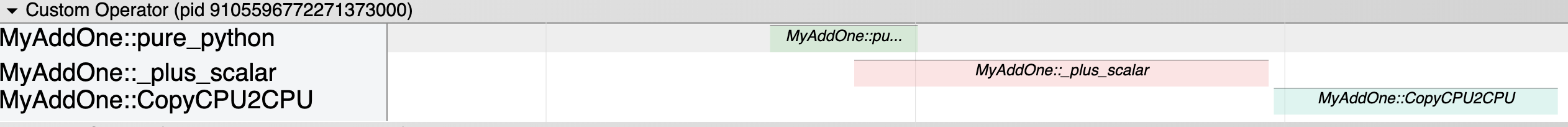

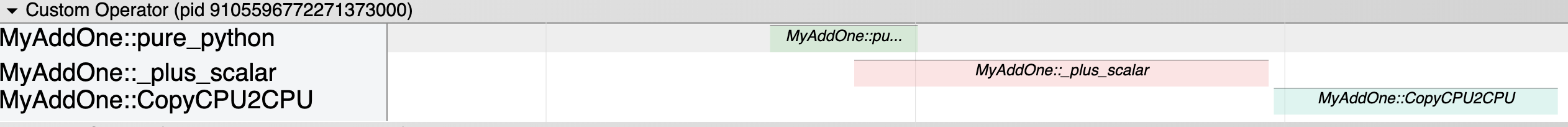

+As shown by the screenshot, in the **Custom Operator** domain where all the

custom operator-related events fall into, we can easily visualize the execution

time of each segment of `MyAddOne`. We can tell that `MyAddOne::pure_python` is

executed first. We also know that `CopyCPU2CPU` and `_plus_scalar` are two

"sub-operators" of `MyAddOne` and the sequence in which they are executed.

+

+Please note that: to be able to see the previously described information, you

need to set `profile_imperative` to `True` even when you are using custom

operators in [symbolic

mode](https://mxnet.incubator.apache.org/versions/master/tutorials/basic/symbol.html)

(refer to the code snippet below, which is the symbolic-mode equivalent of the

code example above). The reason is that within custom operators, pure Python

code and sub-operators are still called imperatively.

+

+```python

+# Set profile_all to True

+profiler.set_config(profile_all=True, aggregate_stats=True, continuous_dump =

True)

+# OR, Explicitly Set profile_symbolic and profile_imperative to True

+profiler.set_config(profile_symbolic = False, profile_imperative = False, \

Review comment:

True right?
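
A minimal sketch of the two configurations this hunk appears to be aiming for, with the explicit variant set to `True` as the comment suggests. The helper names `custom_op_profiler_kwargs` and `configure_profiler` are hypothetical; only `profiler.set_config` and its keyword arguments come from MXNet, and the import is deferred so the sketch can be read without MXNet installed.

```python
def custom_op_profiler_kwargs(explicit=True):
    """Build keyword arguments for mxnet.profiler.set_config.

    Hypothetical helper: it only assembles a dict and does not touch MXNet.
    """
    common = {"aggregate_stats": True, "continuous_dump": True}
    if explicit:
        # Both flags are True: profile_imperative is what exposes the
        # pure Python and sub-operator events inside a custom operator.
        return dict(profile_symbolic=True, profile_imperative=True, **common)
    # profile_all=True switches every profiling category on at once.
    return dict(profile_all=True, **common)


def configure_profiler(explicit=True):
    """Apply the configuration (assumes MXNet 1.x is installed)."""
    from mxnet import profiler  # deferred import; requires MXNet

    profiler.set_config(**custom_op_profiler_kwargs(explicit))
```

Calling `configure_profiler()` before `profiler.set_state('run')` would apply the explicit variant; `configure_profiler(explicit=False)` falls back to `profile_all=True`.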

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r299256224

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,15 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs, MXNet supports [Custom Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html) that you can define in Python. In `forward()` and `backward()` of a custom operator, there are two kinds of code: "pure Python" code (NumPy operators included) and "sub-operators" (NDArray operators called within `forward()` and `backward()`). With that said, MXNet can profile the execution time of both kinds without additional setup. Specifically, the MXNet profiler will break a single custom operator call into a pure Python event and several sub-operator events if there are any. Furthermore, all of those events will have a prefix in their names, which is, conveniently, the name of the custom operator you called.

+

+

+

+As shown by the screenshot, in the **Custom Operator** domain where all the custom operator-related events fall into, you can easily visualize the execution time of each segment of your custom operator. For example, we know that `CustomAddTwo::sqrt` is a sub-operator of custom operator `CustomAddTwo`, and we also know when it is executed accurately.

+

+Please note that: to be able to see the previously described information, you need to set `profile_imperative` to `True` even when you are using custom operators in [symbolic mode](https://mxnet.incubator.apache.org/versions/master/tutorials/basic/symbol.html). The reason is that within custom operators, pure python code and sub-operators are still called imperatively.

Review comment:

Can we please have a code example for showing 'profile_imperative' option when using in Symbolic Mode. It might not be clear for users where to set 'profile_imperative' to True.
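
One possible shape for the requested example, sketched under the assumption of MXNet 1.x (`mx.sym.Custom` and `Symbol.bind`). The wrapper function `profile_custom_op_symbolically` and the class name `MyAddOneProp` are hypothetical and exist only to keep the sketch self-contained. The operator itself mirrors the `MyAddOne` example from the tutorial diff.

```python
def profile_custom_op_symbolically(filename="profile_output.json"):
    """Profile a custom operator that is invoked through the symbolic API.

    Assumes MXNet 1.x. Everything is defined inside the function so that
    MXNet is only imported when the function is actually called.
    """
    import mxnet as mx
    from mxnet import profiler

    class MyAddOne(mx.operator.CustomOp):
        def forward(self, is_train, req, in_data, out_data, aux):
            # The "+ 1" runs as an NDArray sub-operator, called imperatively.
            self.assign(out_data[0], req[0], in_data[0] + 1)

        def backward(self, req, out_grad, in_data, out_data, in_grad, aux):
            self.assign(in_grad[0], req[0], out_grad[0])

    @mx.operator.register("MyAddOne")
    class MyAddOneProp(mx.operator.CustomOpProp):
        def __init__(self):
            super(MyAddOneProp, self).__init__(need_top_grad=True)

        def list_arguments(self):
            return ["data"]

        def list_outputs(self):
            return ["output"]

        def infer_shape(self, in_shape):
            return [in_shape[0]], [in_shape[0]], []

        def create_operator(self, ctx, shapes, dtypes):
            return MyAddOne()

    # profile_imperative=True is set here, alongside profile_symbolic=True,
    # before the profiler is switched to the 'run' state.
    profiler.set_config(profile_symbolic=True, profile_imperative=True,
                        aggregate_stats=True, continuous_dump=True,
                        filename=filename)
    profiler.set_state("run")

    inp = mx.nd.zeros(shape=(500, 500))
    a = mx.sym.Variable("a")
    b = mx.sym.Custom(data=a, op_type="MyAddOne")  # symbolic-mode call
    executor = b.bind(mx.cpu(), {"a": inp})
    outputs = executor.forward()
    mx.nd.waitall()

    profiler.set_state("stop")
    profiler.dump()
    return outputs[0]
```

The only symbolic-specific part is the `Variable`/`Custom`/`bind`/`forward` sequence; the profiler calls are the same ones used in imperative mode.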

[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating

profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r299255870

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,63 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed

and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs,

MXNet supports [Custom

Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html)

that you can define in Python. In `forward()` and `backward()` of a custom

operator, there are two kinds of code: "pure Python" code (NumPy operators

included) and "sub-operators" (NDArray operators called within `forward()` and

`backward()`). With that said, MXNet can profile the execution time of both

kinds without additional setup. Specifically, the MXNet profiler will break a

single custom operator call into a pure Python event and several sub-operator

events if there are any. Furthermore, all of those events will have a prefix in

their names, which is, conveniently, the name of the custom operator you called.

+

+Let's try profiling custom operators with the following code example:

+

+```python

+

+import mxnet as mx

+from mxnet import nd

+from mxnet import profiler

+

+class MyAddOne(mx.operator.CustomOp):

+def forward(self, is_train, req, in_data, out_data, aux):

+self.assign(out_data[0], req[0], in_data[0]+1)

+

+def backward(self, req, out_grad, in_data, out_data, in_grad, aux):

+self.assign(in_grad[0], req[0], out_grad[0])

+

+@mx.operator.register('MyAddOne')

+class CustomAddOneProp(mx.operator.CustomOpProp):

+def __init__(self):

+super(CustomAddOneProp, self).__init__(need_top_grad=True)

+

+def list_arguments(self):

+return ['data']

+

+def list_outputs(self):

+return ['output']

+

+def infer_shape(self, in_shape):

+return [in_shape[0]], [in_shape[0]], []

+

+def create_operator(self, ctx, shapes, dtypes):

+return MyAddOne()

+

+

+inp = mx.nd.zeros(shape=(500, 500))

+

+profiler.set_config(profile_all=True, continuous_dump = True)

+profiler.set_state('run')

+

+w = nd.Custom(inp, op_type="MyAddOne")

+

+mx.nd.waitall()

+

+profiler.set_state('stop')

+profiler.dump()

+```

+

+Here, we have created a custom operator called `MyAddOne`, and within its

`foward()` function, we simply add one to the input. We can visualize the dump

file in `chrome://tracing/`:

+

+

+

+As shown by the screenshot, in the **Custom Operator** domain where all the

custom operator-related events fall into, we can easily visualize the execution

time of each segment of `MyAddOne`. We can tell that `MyAddOne::pure_python` is

executed first. We also know that `CopyCPU2CPU` and `_plus_scalar` are two

"sub-operators" of `MyAddOne` and the sequence in which they are exectued.

Review comment:

nit: spell check. 'exectued' -> 'executed'


[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating

profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r299255615

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,63 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed

and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs,

MXNet supports [Custom

Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html)

that you can define in Python. In `forward()` and `backward()` of a custom

operator, there are two kinds of code: "pure Python" code (NumPy operators

included) and "sub-operators" (NDArray operators called within `forward()` and

`backward()`). With that said, MXNet can profile the execution time of both

kinds without additional setup. Specifically, the MXNet profiler will break a

single custom operator call into a pure Python event and several sub-operator

events if there are any. Furthermore, all of those events will have a prefix in

their names, which is, conveniently, the name of the custom operator you called.

+

+Let's try profiling custom operators with the following code example:

+

+```python

+

+import mxnet as mx

+from mxnet import nd

+from mxnet import profiler

+

+class MyAddOne(mx.operator.CustomOp):

+def forward(self, is_train, req, in_data, out_data, aux):

+self.assign(out_data[0], req[0], in_data[0]+1)

+

+def backward(self, req, out_grad, in_data, out_data, in_grad, aux):

+self.assign(in_grad[0], req[0], out_grad[0])

+

+@mx.operator.register('MyAddOne')

+class CustomAddOneProp(mx.operator.CustomOpProp):

+def __init__(self):

+super(CustomAddOneProp, self).__init__(need_top_grad=True)

+

+def list_arguments(self):

+return ['data']

+

+def list_outputs(self):

+return ['output']

+

+def infer_shape(self, in_shape):

+return [in_shape[0]], [in_shape[0]], []

+

+def create_operator(self, ctx, shapes, dtypes):

+return MyAddOne()

+

+

+inp = mx.nd.zeros(shape=(500, 500))

+

+profiler.set_config(profile_all=True, continuous_dump = True)

+profiler.set_state('run')

+

+w = nd.Custom(inp, op_type="MyAddOne")

+

+mx.nd.waitall()

+

+profiler.set_state('stop')

+profiler.dump()

+```

+

+Here, we have created a custom operator called `MyAddOne`, and within its

`foward()` function, we simply add one to the input. We can visualize the dump

file in `chrome://tracing/`:

Review comment:

nit: spell check. 'foward()' -> 'forward()'


[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r298782963

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,15 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs, MXNet supports [Custom Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html) that you can define in python. In forward() and backward() of a custom operator, there are two kinds of code: `pure python` code (Numpy operators inclued) and `sub-operators` (NDArray operators called within foward() and backward()). With that said, MXNet can profile the execution time of both kinds without additional setup. More specifically, the MXNet profiler will break a single custom operator call into a `pure python` event and several `sub-operator` events if there is any. Furthermore, all those events will have a prefix in their names, which is conviniently the name of the custom operator you called.

+

+

+

+As shown by the sreenshot, in the `Custom Operator` domain where all the custom-operator-related events fall into, you can easily visualize the execution time of each segment of your custom operator. For example, we know that "CustomAddTwo::sqrt" is a `sub-operator` of custom operator "CustomAddTwo", and we also know when it is exectued accurately.

Review comment:

Spell check. Sreenshot - Screenshot. Even better wording would be -As shown in the below image.

[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r298783010

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,15 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs, MXNet supports [Custom Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html) that you can define in Python. In `forward()` and `backward()` of a custom operator, there are two kinds of code: "pure Python" code (NumPy operators included) and "sub-operators" (NDArray operators called within `forward()` and `backward()`). With that said, MXNet can profile the execution time of both kinds without additional setup. Specifically, the MXNet profiler will break a single custom operator call into a pure Python event and several sub-operator events if there are any. Furthermore, all of those events will have a prefix in their names, which is, conveniently, the name of the custom operator you called.

+

+

Review comment:

All MXNet images are served from dmlc/web-data repository. Let us create a PR in that repo to upload this image.

[GitHub] [incubator-mxnet] sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

sandeep-krishnamurthy commented on a change in pull request #15403: Updating profiler tutorial to include new custom operator profiling

URL: https://github.com/apache/incubator-mxnet/pull/15403#discussion_r298783062

##

File path: docs/tutorials/python/profiler.md

##

@@ -206,6 +206,15 @@ Let's zoom in to check the time taken by operators

The above picture visualizes the sequence in which the operators were executed and the time taken by each operator.

+### Profiling Custom Operators

+Should the existing NDArray operators fail to meet all your model's needs, MXNet supports [Custom Operators](https://mxnet.incubator.apache.org/versions/master/tutorials/gluon/customop.html) that you can define in Python. In `forward()` and `backward()` of a custom operator, there are two kinds of code: "pure Python" code (NumPy operators included) and "sub-operators" (NDArray operators called within `forward()` and `backward()`). With that said, MXNet can profile the execution time of both kinds without additional setup. Specifically, the MXNet profiler will break a single custom operator call into a pure Python event and several sub-operator events if there are any. Furthermore, all of those events will have a prefix in their names, which is, conveniently, the name of the custom operator you called.

+

+

+

+As shown by the screenshot, in the **Custom Operator** domain where all the custom operator-related events fall into, you can easily visualize the execution time of each segment of your custom operator. For example, we know that `CustomAddTwo::sqrt` is a sub-operator of custom operator `CustomAddTwo`, and we also know when it is executed accurately.

+

+Please note that: to be able to see the previously described information, you need to set `profile_imperative` to `True` even when you are using custom operators in [symbolic mode](https://mxnet.incubator.apache.org/versions/master/tutorials/basic/symbol.html). The reason is that within custom operators, pure python code and sub-operators are still called imperatively.

Review comment:

Please add a code example as well. Makes it very clear for readers on how to use.
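
As a complement to the screenshot, the name prefix described in the quoted paragraph can also be used to pick custom-operator events out of the dump programmatically. The sketch below assumes the dump follows the Chrome Trace Event Format (a top-level `traceEvents` list with `B`/`E` begin/end pairs or `X` complete events) and that event names look like `MyAddOne::pure_python`. The function name and the sample trace are hypothetical and hand-written for illustration; they are not real profiler output.

```python
import json
from collections import defaultdict


def load_trace(path):
    """Load a profiler dump, assumed to be Chrome Trace Event Format JSON."""
    with open(path) as handle:
        return json.load(handle)


def custom_op_durations(trace, op_name):
    """Sum the duration of every '<op_name>::<segment>' event per segment.

    Durations are in the trace's own timestamp unit (microseconds in the
    Chrome Trace Event Format).
    """
    prefix = op_name + "::"
    begin_stacks = {}          # (name, pid, tid) -> stack of begin timestamps
    totals = defaultdict(int)  # segment name -> accumulated duration
    for event in trace.get("traceEvents", []):
        name = event.get("name", "")
        if not name.startswith(prefix):
            continue  # not part of this custom operator
        segment = name[len(prefix):]
        key = (name, event.get("pid"), event.get("tid"))
        phase = event.get("ph")
        if phase == "X":  # complete event carries its own duration
            totals[segment] += event.get("dur", 0)
        elif phase == "B":  # begin event: remember when it started
            begin_stacks.setdefault(key, []).append(event["ts"])
        elif phase == "E" and begin_stacks.get(key):  # matching end event
            totals[segment] += event["ts"] - begin_stacks[key].pop()
    return dict(totals)


# Hypothetical trace, written by hand to exercise the function.
sample_trace = {
    "traceEvents": [
        {"name": "_zeros", "ph": "B", "ts": 10, "pid": 0, "tid": 1},
        {"name": "_zeros", "ph": "E", "ts": 20, "pid": 0, "tid": 1},
        {"name": "MyAddOne::pure_python", "ph": "B", "ts": 100, "pid": 0, "tid": 1},
        {"name": "MyAddOne::pure_python", "ph": "E", "ts": 160, "pid": 0, "tid": 1},
        {"name": "MyAddOne::_plus_scalar", "ph": "B", "ts": 170, "pid": 0, "tid": 2},
        {"name": "MyAddOne::_plus_scalar", "ph": "E", "ts": 200, "pid": 0, "tid": 2},
        {"name": "MyAddOne::CopyCPU2CPU", "ph": "X", "ts": 210, "dur": 15, "pid": 0, "tid": 2},
    ]
}

durations = custom_op_durations(sample_trace, "MyAddOne")
```

With a real dump, `custom_op_durations(load_trace(path), "MyAddOne")` would be the equivalent call, where `path` is whatever filename was passed to `profiler.set_config`.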