[spark] branch master updated (e887c63 -> 6c80547)

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/spark.git.

from e887c63 [SPARK-32931][SQL] Unevaluable Expressions are not Foldable
add 6c80547 [SPARK-32997][K8S] Support dynamic PVC creation and deletion in K8s driver

No new revisions were added by this update.

Summary of changes:
 .../k8s/features/MountVolumesFeatureStep.scala      | 38 ++
 .../features/MountVolumesFeatureStepSuite.scala     | 17 ++
 2 files changed, 35 insertions(+), 20 deletions(-)

[spark] branch master updated: [SPARK-32997][K8S] Support dynamic PVC creation and deletion in K8s driver

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 6c80547 [SPARK-32997][K8S] Support dynamic PVC creation and deletion in K8s driver

6c80547 is described below

commit 6c805470a7e8d1f44747dc64c2e49ebd302f9ba4

Author: Dongjoon Hyun

AuthorDate: Fri Sep 25 16:36:15 2020 -0700

[SPARK-32997][K8S] Support dynamic PVC creation and deletion in K8s driver

### What changes were proposed in this pull request?

This PR aims to support dynamic PVC creation and deletion in K8s driver.

**Configuration**

This PR reuses the existing PVC volume configs.

```
spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-local-dir-1.options.claimName=OnDemand
spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-local-dir-1.options.storageClass=gp2
spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-local-dir-1.options.sizeLimit=200Gi
spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-local-dir-1.mount.path=/data
spark.kubernetes.driver.volumes.persistentVolumeClaim.spark-local-dir-1.mount.readOnly=false
```
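As a rough illustration of how these keys fit together, the sketch below assembles the same five settings in plain Scala. The object `DriverPvcConfSketch` and the helper `driverPvcConf` are hypothetical and not part of Spark; only the key layout and the example values come from the configuration above.

```scala
object DriverPvcConfSketch {
  // Key prefix copied from the configuration listed above.
  private val Prefix = "spark.kubernetes.driver.volumes.persistentVolumeClaim"

  // Hypothetical helper (not a Spark API): builds the settings for one
  // on-demand driver PVC as a plain key/value map.
  def driverPvcConf(
      volumeName: String,
      storageClass: String,
      sizeLimit: String,
      mountPath: String,
      readOnly: Boolean = false): Map[String, String] = Map(
    s"$Prefix.$volumeName.options.claimName" -> "OnDemand",
    s"$Prefix.$volumeName.options.storageClass" -> storageClass,
    s"$Prefix.$volumeName.options.sizeLimit" -> sizeLimit,
    s"$Prefix.$volumeName.mount.path" -> mountPath,
    s"$Prefix.$volumeName.mount.readOnly" -> readOnly.toString
  )

  def main(args: Array[String]): Unit = {
    val conf = driverPvcConf("spark-local-dir-1", "gp2", "200Gi", "/data")
    // Print each entry in a form that could be passed to spark-submit.
    conf.toSeq.sortBy(_._1).foreach { case (k, v) => println(s"--conf $k=$v") }
  }
}
```

Keeping the volume name as a parameter makes it easier to declare several volumes without retyping the long prefix.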

**PVC**

```
$ kubectl get pvc | grep driver
tpcds-d6087874c6705564-driver-pvc-0   Bound   pvc-fae914a2-ca5c-4e1e-8aba-54a35357d072   200Gi   RWO   gp2   12m
```

**Disk**

```
$ k exec -it tpcds-d6087874c6705564-driver -- df -h | grep data
/dev/nvme5n1    197G   61M  197G   1% /data
```

```
$ k exec -it tpcds-d6087874c6705564-driver -- ls -al /data
total 28
drwxr-xr-x  5 root root  4096 Sep 25 18:06 .
drwxr-xr-x  1 root root    63 Sep 25 18:06 ..
drwxr-xr-x 66 root root  4096 Sep 25 18:09 blockmgr-2c9a8cc5-a05c-45fe-a58e-b8f42da88a57
drwx------  2 root root 16384 Sep 25 18:06 lost+found
drwx------  4 root root  4096 Sep 25 18:07 spark-0448efe7-da2c-4f3a-bd3c-769aadb11dd6
```

**NOTE**

This should be used carefully because Apache Spark doesn't delete the driver pod automatically. Since the driver PVC shares the lifecycle of the driver pod, it will exist after job completion until the pod is deleted. However, if users are already using pre-populated PVCs, this isn't a regression at all in terms of cost.

```
$ k get pod -l spark-role=driver
NAME                            READY   STATUS      RESTARTS   AGE
tpcds-d6087874c6705564-driver   0/1     Completed   0          35m
```

### Why are the changes needed?

Like executors, the driver also needs a larger PVC.

### Does this PR introduce _any_ user-facing change?

Yes. This is a new feature.

### How was this patch tested?

Pass the newly added test case.

Closes #29873 from dongjoon-hyun/SPARK-32997.

Authored-by: Dongjoon Hyun

Signed-off-by: Dongjoon Hyun

---

 .../k8s/features/MountVolumesFeatureStep.scala      | 38 ++
 .../features/MountVolumesFeatureStepSuite.scala     | 17 ++
 2 files changed, 35 insertions(+), 20 deletions(-)

diff --git a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/MountVolumesFeatureStep.scala b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/MountVolumesFeatureStep.scala
index 788ddea..e297656 100644
--- a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/MountVolumesFeatureStep.scala
+++ b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/MountVolumesFeatureStep.scala
@@ -66,32 +66,30 @@ private[spark] class MountVolumesFeatureStep(conf: KubernetesConf)
         case KubernetesPVCVolumeConf(claimNameTemplate, storageClass, size) =>
           val claimName = conf match {
             case c: KubernetesExecutorConf =>
-              val claimName = claimNameTemplate
+              claimNameTemplate
                 .replaceAll(PVC_ON_DEMAND,
                   s"${conf.resourceNamePrefix}-exec-${c.executorId}$PVC_POSTFIX-$i")
                 .replaceAll(ENV_EXECUTOR_ID, c.executorId)
-
-              if (storageClass.isDefined && size.isDefined) {
-                additionalResources.append(new PersistentVolumeClaimBuilder()
-                  .withKind(PVC)
-                  .withApiVersion("v1")
-                  .withNewMetadata()
-                    .withName(claimName)
-                    .endMetadata()
-                  .withNewSpec()
-                    .withStorageClassName(storageClass.get)
-                    .withAccessModes(PVC_ACCESS_MODE)
-                    .withResources(new ResourceRequirementsBuilder()
-                      .withRequests(Map("storage" -> new Quantity(size.get)).asJava).build())
-                    .endSpec()
-                  .build())
-
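
The executor branch of the hunk above derives the claim name by substituting two placeholders in the configured template. The following is a minimal standalone sketch of that substitution. The constant values are assumptions inferred from the `claimName=OnDemand` setting and the `-driver-pvc-0` name in the commit message (their real definitions are not shown in this diff), and `executorClaimName` with its `volumeIndex` parameter (standing in for `i`) is a hypothetical helper.

```scala
object PvcClaimNameSketch {
  // Assumed values: the diff references these constants but does not define them.
  val PVC_ON_DEMAND = "OnDemand"
  val PVC_POSTFIX = "-pvc"
  val ENV_EXECUTOR_ID = "SPARK_EXECUTOR_ID"

  // Hypothetical helper mirroring the two replaceAll calls in the hunk.
  // volumeIndex stands in for the `i` used in the original expression.
  def executorClaimName(
      claimNameTemplate: String,
      resourceNamePrefix: String,
      executorId: String,
      volumeIndex: Int): String =
    claimNameTemplate
      .replaceAll(PVC_ON_DEMAND,
        s"$resourceNamePrefix-exec-$executorId$PVC_POSTFIX-$volumeIndex")
      .replaceAll(ENV_EXECUTOR_ID, executorId)

  def main(args: Array[String]): Unit = {
    // An on-demand template expands to a generated, per-executor name.
    println(executorClaimName("OnDemand", "tpcds-d6087874c6705564", "1", 0))
    // A pre-populated template only has the executor ID filled in.
    println(executorClaimName("data-SPARK_EXECUTOR_ID", "tpcds-d6087874c6705564", "7", 0))
  }
}
```

Note that `replaceAll` treats its first argument as a regular expression and interprets `$` and `\` in the replacement, so this sketch assumes the prefix and executor ID contain neither character.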

[spark] branch branch-2.4 updated: Revert "[SPARK-27872][K8S][2.4] Fix executor service account inconsistency"

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch branch-2.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-2.4 by this push:

new 32a28ff Revert "[SPARK-27872][K8S][2.4] Fix executor service account inconsistency"

32a28ff is described below

commit 32a28ff8cee534cd5fa0da1bdcf5efdc46d8c830

Author: Dongjoon Hyun

AuthorDate: Fri Sep 25 15:13:35 2020 -0700

Revert "[SPARK-27872][K8S][2.4] Fix executor service account inconsistency"

This reverts commit bf32ac8efa9818be551fe720a71eaba50d5d41ad.

---

 .../scala/org/apache/spark/deploy/k8s/Config.scala  | 16 +++-
 .../apache/spark/deploy/k8s/KubernetesUtils.scala   | 15
 .../DriverKubernetesCredentialsFeatureStep.scala    | 13 +--
 .../ExecutorKubernetesCredentialsFeatureStep.scala  | 45 --
 .../cluster/k8s/KubernetesExecutorBuilder.scala     | 11 +-
 .../k8s/KubernetesExecutorBuilderSuite.scala        | 9 +
 .../k8s/integrationtest/BasicTestsSuite.scala       | 7
 .../k8s/integrationtest/KubernetesSuite.scala       | 4 --
 8 files changed, 18 insertions(+), 102 deletions(-)

diff --git a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala
index cfff6b9..c7338a7 100644
--- a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala
+++ b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala
@@ -61,9 +61,10 @@ private[spark] object Config extends Logging {
       .stringConf
       .createOptional

-  val KUBERNETES_AUTH_DRIVER_CONF_PREFIX = "spark.kubernetes.authenticate.driver"
-  val KUBERNETES_AUTH_EXECUTOR_CONF_PREFIX = "spark.kubernetes.authenticate.executor"
-  val KUBERNETES_AUTH_DRIVER_MOUNTED_CONF_PREFIX = "spark.kubernetes.authenticate.driver.mounted"
+  val KUBERNETES_AUTH_DRIVER_CONF_PREFIX =
+      "spark.kubernetes.authenticate.driver"
+  val KUBERNETES_AUTH_DRIVER_MOUNTED_CONF_PREFIX =
+      "spark.kubernetes.authenticate.driver.mounted"
   val KUBERNETES_AUTH_CLIENT_MODE_PREFIX = "spark.kubernetes.authenticate"
   val OAUTH_TOKEN_CONF_SUFFIX = "oauthToken"
   val OAUTH_TOKEN_FILE_CONF_SUFFIX = "oauthTokenFile"
@@ -71,7 +72,7 @@ private[spark] object Config extends Logging {
   val CLIENT_CERT_FILE_CONF_SUFFIX = "clientCertFile"
   val CA_CERT_FILE_CONF_SUFFIX = "caCertFile"

-  val KUBERNETES_DRIVER_SERVICE_ACCOUNT_NAME =
+  val KUBERNETES_SERVICE_ACCOUNT_NAME =
     ConfigBuilder(s"$KUBERNETES_AUTH_DRIVER_CONF_PREFIX.serviceAccountName")
       .doc("Service account that is used when running the driver pod. The driver pod uses " +
         "this service account when requesting executor pods from the API server. If specific " +
@@ -80,13 +81,6 @@ private[spark] object Config extends Logging {
       .stringConf
       .createOptional

-  val KUBERNETES_EXECUTOR_SERVICE_ACCOUNT_NAME =
-    ConfigBuilder(s"$KUBERNETES_AUTH_EXECUTOR_CONF_PREFIX.serviceAccountName")
-      .doc("Service account that is used when running the executor pod." +
-        "If this parameter is not setup, the service account defaults to none.")
-      .stringConf
-      .createWithDefault("none")
-
   val KUBERNETES_DRIVER_LIMIT_CORES =
     ConfigBuilder("spark.kubernetes.driver.limit.cores")
       .doc("Specify the hard cpu limit for the driver pod")
diff --git a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala
index aa3cf83..588cd9d 100644
--- a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala
+++ b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala
@@ -16,10 +16,6 @@
  */
 package org.apache.spark.deploy.k8s

-import io.fabric8.kubernetes.api.model.{Container, ContainerBuilder,
-  ContainerStateRunning, ContainerStateTerminated,
-  ContainerStateWaiting, ContainerStatus, Pod, PodBuilder}
-
 import org.apache.spark.SparkConf
 import org.apache.spark.util.Utils

@@ -64,15 +60,4 @@ private[spark] object KubernetesUtils {
   }

   def parseMasterUrl(url: String): String = url.substring("k8s://".length)
-
-  def buildPodWithServiceAccount(serviceAccount: Option[String], pod: SparkPod): Option[Pod] = {
-    serviceAccount.map { account =>
-      new PodBuilder(pod.pod)
-        .editOrNewSpec()
-          .withServiceAccount(account)
-          .withServiceAccountName(account)
-        .endSpec()
-        .build()
-    }
-  }
 }
diff --git a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/DriverKubernetesCredentialsFeatureStep.scala

[spark] branch branch-2.4 updated: [SPARK-27872][K8S][2.4] Fix executor service account inconsistency

This is an automated email from the ASF dual-hosted git repository.

eje pushed a commit to branch branch-2.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-2.4 by this push:

new bf32ac8 [SPARK-27872][K8S][2.4] Fix executor service account inconsistency

bf32ac8 is described below

commit bf32ac8efa9818be551fe720a71eaba50d5d41ad

Author: nssalian

AuthorDate: Fri Sep 25 12:05:40 2020 -0700

[SPARK-27872][K8S][2.4] Fix executor service account inconsistency

### What changes were proposed in this pull request?

Similar patch to https://github.com/apache/spark/pull/24748 but applied to

the branch-2.4.

Backporting the fix to releases 2.4.x.

Please let me know if I missed some step; I haven't contributed to spark in

a long time.

Closes #29844 from nssalian/patch-SPARK-27872.

Authored-by: nssalian

Signed-off-by: Erik Erlandson

---

.../scala/org/apache/spark/deploy/k8s/Config.scala | 16 +---

.../apache/spark/deploy/k8s/KubernetesUtils.scala | 15

.../DriverKubernetesCredentialsFeatureStep.scala | 13 ++-

.../ExecutorKubernetesCredentialsFeatureStep.scala | 45 ++

.../cluster/k8s/KubernetesExecutorBuilder.scala| 11 +-

.../k8s/KubernetesExecutorBuilderSuite.scala | 9 -

.../k8s/integrationtest/BasicTestsSuite.scala | 7

.../k8s/integrationtest/KubernetesSuite.scala | 4 ++

8 files changed, 102 insertions(+), 18 deletions(-)

diff --git

a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

index c7338a7..cfff6b9 100644

---

a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

+++

b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

@@ -61,10 +61,9 @@ private[spark] object Config extends Logging {

.stringConf

.createOptional

- val KUBERNETES_AUTH_DRIVER_CONF_PREFIX =

- "spark.kubernetes.authenticate.driver"

- val KUBERNETES_AUTH_DRIVER_MOUNTED_CONF_PREFIX =

- "spark.kubernetes.authenticate.driver.mounted"

+ val KUBERNETES_AUTH_DRIVER_CONF_PREFIX =

"spark.kubernetes.authenticate.driver"

+ val KUBERNETES_AUTH_EXECUTOR_CONF_PREFIX =

"spark.kubernetes.authenticate.executor"

+ val KUBERNETES_AUTH_DRIVER_MOUNTED_CONF_PREFIX =

"spark.kubernetes.authenticate.driver.mounted"

val KUBERNETES_AUTH_CLIENT_MODE_PREFIX = "spark.kubernetes.authenticate"

val OAUTH_TOKEN_CONF_SUFFIX = "oauthToken"

val OAUTH_TOKEN_FILE_CONF_SUFFIX = "oauthTokenFile"

@@ -72,7 +71,7 @@ private[spark] object Config extends Logging {

val CLIENT_CERT_FILE_CONF_SUFFIX = "clientCertFile"

val CA_CERT_FILE_CONF_SUFFIX = "caCertFile"

- val KUBERNETES_SERVICE_ACCOUNT_NAME =

+ val KUBERNETES_DRIVER_SERVICE_ACCOUNT_NAME =

ConfigBuilder(s"$KUBERNETES_AUTH_DRIVER_CONF_PREFIX.serviceAccountName")

.doc("Service account that is used when running the driver pod. The

driver pod uses " +

"this service account when requesting executor pods from the API

server. If specific " +

@@ -81,6 +80,13 @@ private[spark] object Config extends Logging {

.stringConf

.createOptional

+ val KUBERNETES_EXECUTOR_SERVICE_ACCOUNT_NAME =

+ConfigBuilder(s"$KUBERNETES_AUTH_EXECUTOR_CONF_PREFIX.serviceAccountName")

+ .doc("Service account that is used when running the executor pod." +

+"If this parameter is not setup, the service account defaults to

none.")

+ .stringConf

+ .createWithDefault("none")

+

val KUBERNETES_DRIVER_LIMIT_CORES =

ConfigBuilder("spark.kubernetes.driver.limit.cores")

.doc("Specify the hard cpu limit for the driver pod")

diff --git

a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala

b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala

index 588cd9d..aa3cf83 100644

---

a/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala

+++

b/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesUtils.scala

@@ -16,6 +16,10 @@

*/

package org.apache.spark.deploy.k8s

+import io.fabric8.kubernetes.api.model.{Container, ContainerBuilder,

+ ContainerStateRunning, ContainerStateTerminated,

+ ContainerStateWaiting, ContainerStatus, Pod, PodBuilder}

+

import org.apache.spark.SparkConf

import org.apache.spark.util.Utils

@@ -60,4 +64,15 @@ private[spark] object KubernetesUtils {

}

def parseMasterUrl(url: String): String = url.substring("k8s://".length)

+

+ def buildPodWithServiceAccount(serviceAccount: Option[String], pod:

SparkPod): Option[Pod] = {

+serviceAccount.map { account =>

+ new PodBuilder(pod.pod)

+.editOrNewSpec()

+

[spark] branch branch-2.4 updated (cd3caab -> bf32ac8)

This is an automated email from the ASF dual-hosted git repository.

eje pushed a change to branch branch-2.4

in repository https://gitbox.apache.org/repos/asf/spark.git.

from cd3caab [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event timeline view

add bf32ac8 [SPARK-27872][K8S][2.4] Fix executor service account inconsistency

No new revisions were added by this update.

Summary of changes:

.../scala/org/apache/spark/deploy/k8s/Config.scala | 16 +---

.../apache/spark/deploy/k8s/KubernetesUtils.scala | 15

.../DriverKubernetesCredentialsFeatureStep.scala | 13 ++-

.../ExecutorKubernetesCredentialsFeatureStep.scala | 45 ++

.../cluster/k8s/KubernetesExecutorBuilder.scala| 11 +-

.../k8s/KubernetesExecutorBuilderSuite.scala | 9 -

.../k8s/integrationtest/BasicTestsSuite.scala | 7

.../k8s/integrationtest/KubernetesSuite.scala | 4 ++

8 files changed, 102 insertions(+), 18 deletions(-)

create mode 100644 resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/ExecutorKubernetesCredentialsFeatureStep.scala

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-2.4 updated (cd3caab -> bf32ac8)

This is an automated email from the ASF dual-hosted git repository. eje pushed a change to branch branch-2.4 in repository https://gitbox.apache.org/repos/asf/spark.git. from cd3caab [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event timeline view add bf32ac8 [SPARK-27872][K8S][2.4] Fix executor service account inconsistency No new revisions were added by this update. Summary of changes: .../scala/org/apache/spark/deploy/k8s/Config.scala | 16 +--- .../apache/spark/deploy/k8s/KubernetesUtils.scala | 15 .../DriverKubernetesCredentialsFeatureStep.scala | 13 ++- .../ExecutorKubernetesCredentialsFeatureStep.scala | 45 ++ .../cluster/k8s/KubernetesExecutorBuilder.scala| 11 +- .../k8s/KubernetesExecutorBuilderSuite.scala | 9 - .../k8s/integrationtest/BasicTestsSuite.scala | 7 .../k8s/integrationtest/KubernetesSuite.scala | 4 ++ 8 files changed, 102 insertions(+), 18 deletions(-) create mode 100644 resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/ExecutorKubernetesCredentialsFeatureStep.scala - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-2.4 updated (cd3caab -> bf32ac8)

This is an automated email from the ASF dual-hosted git repository. eje pushed a change to branch branch-2.4 in repository https://gitbox.apache.org/repos/asf/spark.git. from cd3caab [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event timeline view add bf32ac8 [SPARK-27872][K8S][2.4] Fix executor service account inconsistency No new revisions were added by this update. Summary of changes: .../scala/org/apache/spark/deploy/k8s/Config.scala | 16 +--- .../apache/spark/deploy/k8s/KubernetesUtils.scala | 15 .../DriverKubernetesCredentialsFeatureStep.scala | 13 ++- .../ExecutorKubernetesCredentialsFeatureStep.scala | 45 ++ .../cluster/k8s/KubernetesExecutorBuilder.scala| 11 +- .../k8s/KubernetesExecutorBuilderSuite.scala | 9 - .../k8s/integrationtest/BasicTestsSuite.scala | 7 .../k8s/integrationtest/KubernetesSuite.scala | 4 ++ 8 files changed, 102 insertions(+), 18 deletions(-) create mode 100644 resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/ExecutorKubernetesCredentialsFeatureStep.scala - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-2.4 updated (cd3caab -> bf32ac8)

This is an automated email from the ASF dual-hosted git repository. eje pushed a change to branch branch-2.4 in repository https://gitbox.apache.org/repos/asf/spark.git. from cd3caab [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event timeline view add bf32ac8 [SPARK-27872][K8S][2.4] Fix executor service account inconsistency No new revisions were added by this update. Summary of changes: .../scala/org/apache/spark/deploy/k8s/Config.scala | 16 +--- .../apache/spark/deploy/k8s/KubernetesUtils.scala | 15 .../DriverKubernetesCredentialsFeatureStep.scala | 13 ++- .../ExecutorKubernetesCredentialsFeatureStep.scala | 45 ++ .../cluster/k8s/KubernetesExecutorBuilder.scala| 11 +- .../k8s/KubernetesExecutorBuilderSuite.scala | 9 - .../k8s/integrationtest/BasicTestsSuite.scala | 7 .../k8s/integrationtest/KubernetesSuite.scala | 4 ++ 8 files changed, 102 insertions(+), 18 deletions(-) create mode 100644 resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/ExecutorKubernetesCredentialsFeatureStep.scala - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

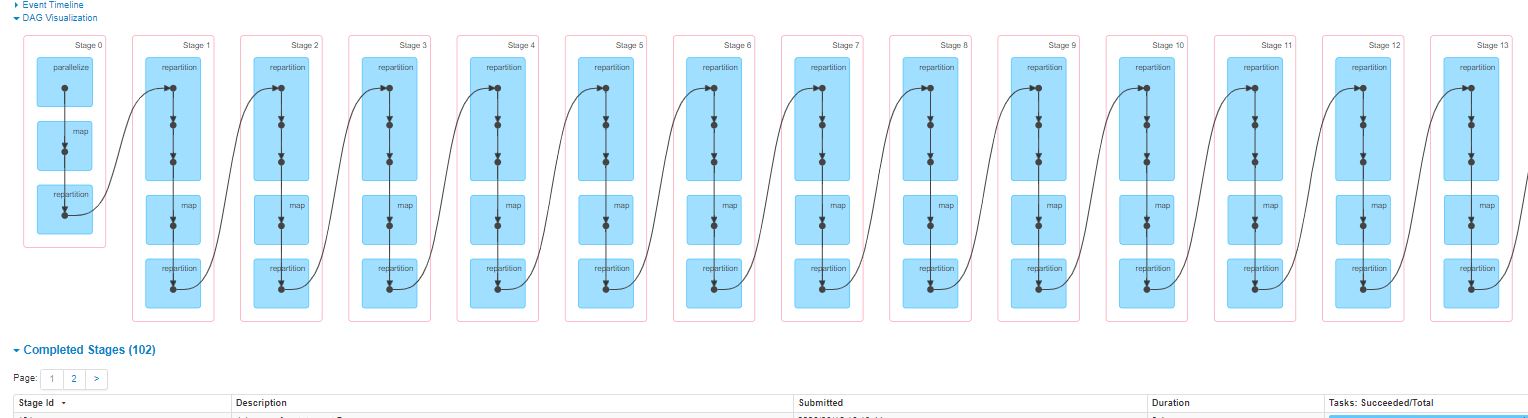

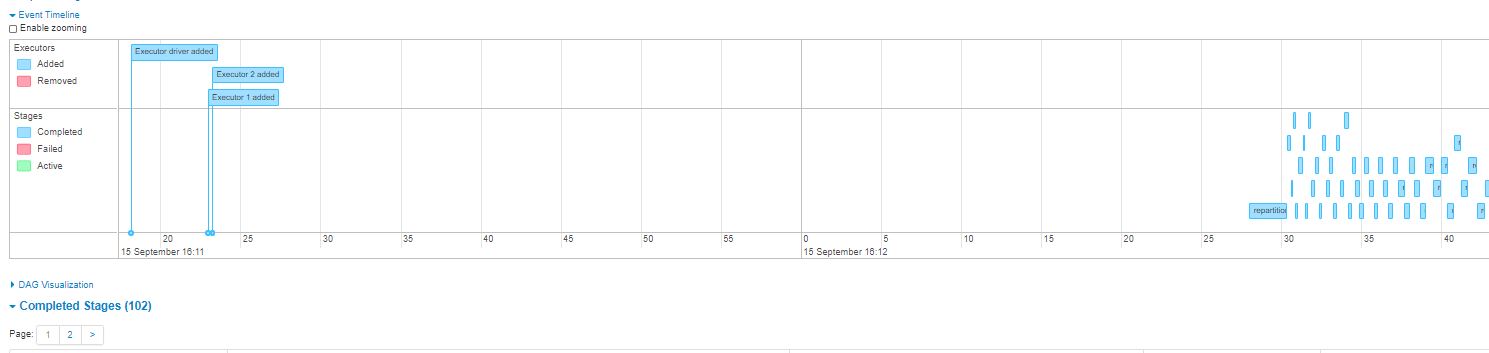

[spark] branch branch-2.4 updated (1366443 -> cd3caab)

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a change to branch branch-2.4

in repository https://gitbox.apache.org/repos/asf/spark.git.

from 1366443 [MINOR][SQL][2.4] Improve examples for `percentile_approx()`

add cd3caab [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event timeline view

No new revisions were added by this update.

Summary of changes:

.../org/apache/spark/ui/static/spark-dag-viz.js| 9 ++--

.../org/apache/spark/ui/static/timeline-view.js| 53 ++

.../resources/org/apache/spark/ui/static/webui.js | 7 ++-

.../main/scala/org/apache/spark/ui/UIUtils.scala | 1 +

4 files changed, 43 insertions(+), 27 deletions(-)

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

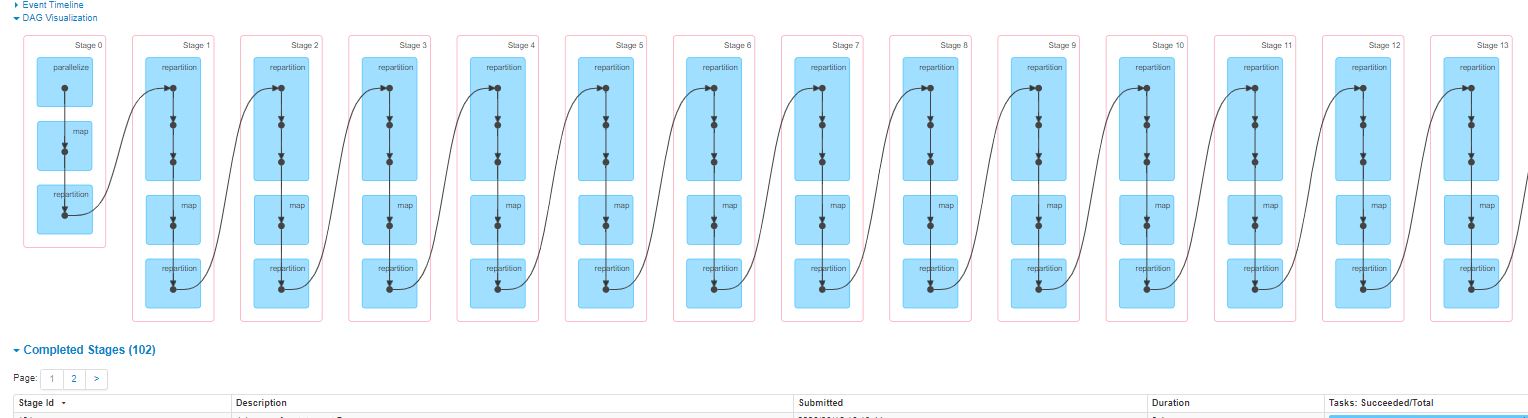

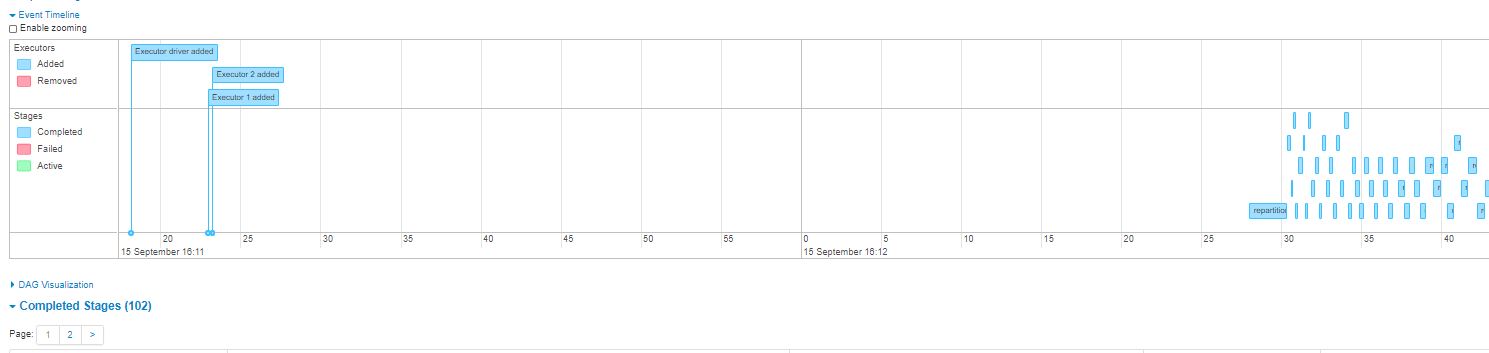

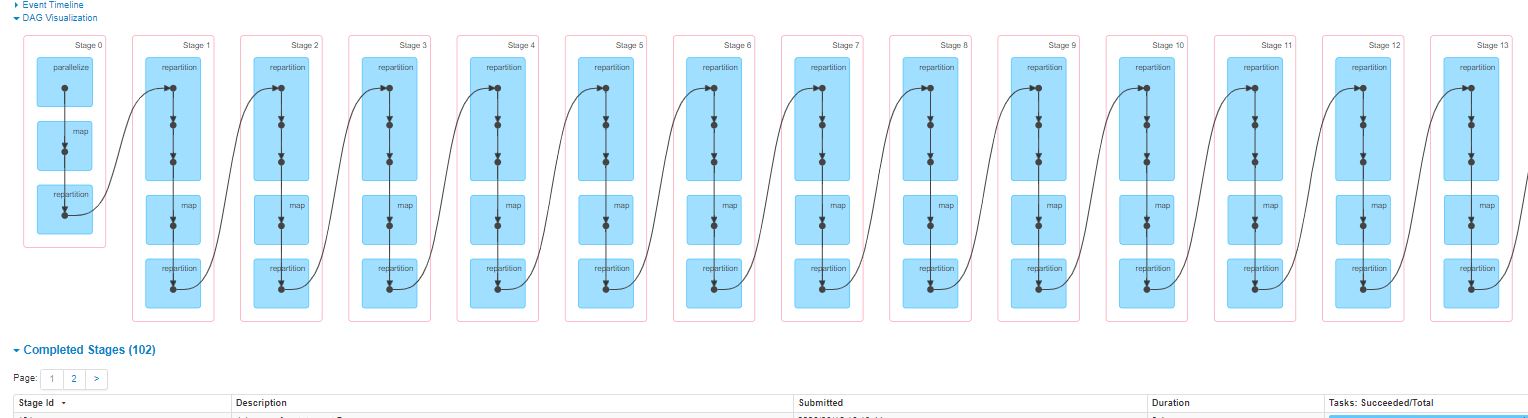

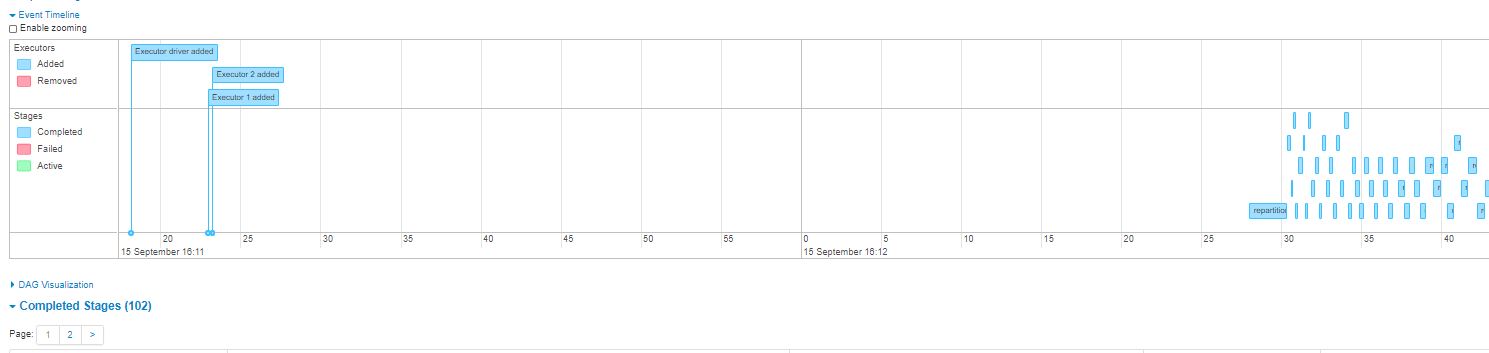

[spark] branch branch-2.4 updated: [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event timeline view

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch branch-2.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-2.4 by this push:

new cd3caab [SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link

in event timeline view

cd3caab is described below

commit cd3caabea60cdbf9f131c12f7225bd97581da659

Author: Zhen Li

AuthorDate: Fri Sep 25 08:34:19 2020 -0500

[SPARK-32886][SPARK-31882][WEBUI][2.4] fix 'undefined' link in event

timeline view

### What changes were proposed in this pull request?

Fix

[SPARK-32886](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-32886)

in branch-2.4. I cherry-picked

[29757](https://github.com/apache/spark/pull/29757) and part of

[28690](https://github.com/apache/spark/pull/28690) (the test part is omitted

because it conflicts), since PR `29757` depends on PR `28690`. This change fixes

the two issues below in branch-2.4.

[SPARK-31882: DAG-viz is not rendered correctly with

pagination.](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-31882)

[SPARK-32886: '.../jobs/undefined' link from "Event Timeline" in jobs

page](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-32886)

### Why are the changes needed?

sarutak found that `29757` depends on `28690`. Merging only `29757` into

branch-2.4 would break the UI. I verified that both issues,

[SPARK-32886](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-32886)

and

[SPARK-31882](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-31882),

exist in branch-2.4, so I cherry-picked both fixes to branch-2.4 in the same PR.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually tested.

Closes #29833 from zhli1142015/cherry-pick-fix-for-31882-32886.

Lead-authored-by: Zhen Li

Co-authored-by: Kousuke Saruta

Signed-off-by: Sean Owen

---

.../org/apache/spark/ui/static/spark-dag-viz.js| 9 ++--

.../org/apache/spark/ui/static/timeline-view.js| 53 ++

.../resources/org/apache/spark/ui/static/webui.js | 7 ++-

.../main/scala/org/apache/spark/ui/UIUtils.scala | 1 +

4 files changed, 43 insertions(+), 27 deletions(-)

diff --git

a/core/src/main/resources/org/apache/spark/ui/static/spark-dag-viz.js

b/core/src/main/resources/org/apache/spark/ui/static/spark-dag-viz.js

index 75b959f..990b2f8 100644

--- a/core/src/main/resources/org/apache/spark/ui/static/spark-dag-viz.js

+++ b/core/src/main/resources/org/apache/spark/ui/static/spark-dag-viz.js

@@ -210,7 +210,7 @@ function renderDagVizForJob(svgContainer) {

var dot = metadata.select(".dot-file").text();

var stageId = metadata.attr("stage-id");

var containerId = VizConstants.graphPrefix + stageId;

-var isSkipped = metadata.attr("skipped") == "true";

+var isSkipped = metadata.attr("skipped") === "true";

var container;

if (isSkipped) {

container = svgContainer

@@ -219,11 +219,8 @@ function renderDagVizForJob(svgContainer) {

.attr("skipped", "true");

} else {

// Link each graph to the corresponding stage page (TODO: handle stage

attempts)

- // Use the link from the stage table so it also works for the history

server

- var attemptId = 0

- var stageLink = d3.select("#stage-" + stageId + "-" + attemptId)

-.select("a.name-link")

-.attr("href");

+ var attemptId = 0;

+ var stageLink = uiRoot + appBasePath + "/stages/stage/?id=" + stageId +

"&attempt=" + attemptId;

container = svgContainer

.append("a")

.attr("xlink:href", stageLink)
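
The hunk above stops reading the stage link from the stage table and builds it directly from `uiRoot`, `appBasePath`, the stage ID and the attempt ID. A minimal standalone sketch of that construction follows. The helper name `buildStageLink`, the sample values, and the `attempt` query parameter name are assumptions for illustration; in the diff the pieces are concatenated inline.

```javascript
// Hypothetical helper: builds the stage page URL from its parts.
// The "attempt" query parameter name is an assumption, not taken from the hunk.
function buildStageLink(uiRoot, appBasePath, stageId, attemptId) {
  return uiRoot + appBasePath + "/stages/stage/?id=" +
    encodeURIComponent(stageId) + "&attempt=" + encodeURIComponent(attemptId);
}

// Sample values are made up; a history server would supply a non-empty base path.
console.log(buildStageLink("", "/history/app-123", "4", 0));
```

Because the link no longer depends on a row being present in the stage table, it can be built even when that row is on another page of a paginated table, which appears to be the situation described in SPARK-31882.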

diff --git

a/core/src/main/resources/org/apache/spark/ui/static/timeline-view.js

b/core/src/main/resources/org/apache/spark/ui/static/timeline-view.js

index 5be8cff..220b76a 100644

--- a/core/src/main/resources/org/apache/spark/ui/static/timeline-view.js

+++ b/core/src/main/resources/org/apache/spark/ui/static/timeline-view.js

@@ -42,26 +42,31 @@ function drawApplicationTimeline(groupArray, eventObjArray,

startTime, offset) {

setupZoomable("#application-timeline-zoom-lock", applicationTimeline);

setupExecutorEventAction();

+ function getIdForJobEntry(baseElem) {

+var jobIdText =

$($(baseElem).find(".application-timeline-content")[0]).text();

+var jobId = jobIdText.match("\\(Job (\\d+)\\)$")[1];

+return jobId;

+ }

+

+ function getSelectorForJobEntry(jobId) {

+return "#job-" + jobId;

+ }

+

function setupJobEventAction() {

$(".vis-item.vis-range.job.application-timeline-object").each(function() {

- var getSelectorForJobEntry =
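
The `getIdForJobEntry` helper added in the hunk above extracts the job ID from the text of a timeline entry with a regular expression, and `getSelectorForJobEntry` turns that ID into a row selector. A minimal sketch of the same idea without jQuery or a DOM is shown below. The function name `extractJobId`, the sample label text, and the `null` return for non-matching labels are assumptions for illustration, not part of the patch.

```javascript
// Standalone sketch of the job-ID extraction; no jQuery or DOM required.
function extractJobId(labelText) {
  // Same pattern as in the diff: the label is expected to end with "(Job <digits>)".
  var match = labelText.match("\\(Job (\\d+)\\)$");
  // Unlike the diff, return null instead of throwing when the label does not match.
  return match === null ? null : match[1];
}

// Mirrors getSelectorForJobEntry from the diff.
function getSelectorForJobEntry(jobId) {
  return "#job-" + jobId;
}

var jobId = extractJobId("count at Example.scala:10 (Job 12)");
console.log(getSelectorForJobEntry(jobId)); // prints "#job-12"
```

Deriving the ID from the visible label keeps the selector independent of element attributes that may be missing, which is presumably how the `.../jobs/undefined` link came about.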

[spark] branch master updated (9e6882f -> e887c63)

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a change to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git.

from 9e6882f [SPARK-32885][SS] Add DataStreamReader.table API

add e887c63 [SPARK-32931][SQL] Unevaluable Expressions are not Foldable

No new revisions were added by this update.

Summary of changes:

.../org/apache/spark/sql/catalyst/analysis/unresolved.scala | 5 -

.../apache/spark/sql/catalyst/expressions/Expression.scala | 4 +++-

.../apache/spark/sql/catalyst/expressions/SortOrder.scala| 3 ---

.../sql/catalyst/expressions/aggregate/interfaces.scala | 12

.../spark/sql/catalyst/expressions/complexTypeCreator.scala | 1 -

.../org/apache/spark/sql/catalyst/expressions/misc.scala | 2 --

.../apache/spark/sql/catalyst/expressions/predicates.scala | 1 -

.../spark/sql/catalyst/expressions/windowExpressions.scala | 10 --

.../apache/spark/sql/catalyst/plans/logical/v2Commands.scala | 2 --

9 files changed, 15 insertions(+), 25 deletions(-)

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (f2fc966 -> 9e6882f)

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a change to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git.

from f2fc966 [SPARK-32877][SQL][TEST] Add test for Hive UDF complex decimal type

add 9e6882f [SPARK-32885][SS] Add DataStreamReader.table API

No new revisions were added by this update.

Summary of changes:

.../spark/sql/catalyst/analysis/Analyzer.scala | 85 ++--

.../sql/catalyst/analysis/CTESubstitution.scala| 2 +-

.../spark/sql/catalyst/analysis/ResolveHints.scala | 4 +-

.../spark/sql/catalyst/analysis/unresolved.scala | 11 +-

.../spark/sql/catalyst/catalog/interface.scala | 3 +-

.../catalyst/streaming/StreamingRelationV2.scala | 4 +-

.../spark/sql/connector/catalog/V1Table.scala | 8 +

.../apache/spark/sql/execution/command/views.scala | 2 +-

.../execution/datasources/DataSourceStrategy.scala | 41 +++-

.../execution/streaming/MicroBatchExecution.scala | 2 +-

.../streaming/continuous/ContinuousExecution.scala | 2 +-

.../spark/sql/execution/streaming/memory.scala | 2 +

.../spark/sql/streaming/DataStreamReader.scala | 21 +-

.../sql-tests/results/explain-aqe.sql.out | 2 +-

.../resources/sql-tests/results/explain.sql.out| 2 +-

.../sql/connector/TableCapabilityCheckSuite.scala | 2 +

.../streaming/test/DataStreamTableAPISuite.scala | 234 +

.../org/apache/spark/sql/hive/test/TestHive.scala | 2 +-

18 files changed, 391 insertions(+), 38 deletions(-)

create mode 100644 sql/core/src/test/scala/org/apache/spark/sql/streaming/test/DataStreamTableAPISuite.scala

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org
