[spark] branch master updated: [SPARK-31259][CORE] Fix log message about fetch request size in ShuffleBlockFetcherIterator

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 33f532a [SPARK-31259][CORE] Fix log message about fetch request size in ShuffleBlockFetcherIterator

33f532a is described below

commit 33f532a9f201fb9c7895d685b3dce82cf042dc61

Author: yi.wu

AuthorDate: Thu Mar 26 09:11:13 2020 -0700

[SPARK-31259][CORE] Fix log message about fetch request size in ShuffleBlockFetcherIterator

### What changes were proposed in this pull request?

Fix the incorrect logging of `curRequestSize`.

### Why are the changes needed?

In batch mode, `curRequestSize` can be the total size of several block groups, but each group should be logged with its own request size rather than that total.
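The per-group sizing can be illustrated with a simplified Scala model of `createFetchRequests`. This is a sketch under stated assumptions, not Spark's code: `FetchBlockInfo` and `FetchRequest` are hypothetical stand-ins for Spark's private classes, the block-merging step (`mergeContinuousShuffleBlockIdsIfNeeded`) is omitted, and the hypothetical parameter `maxBlocksPerRequest` plays the role of `maxBlocksInFlightPerAddress`.

```scala
import scala.collection.mutable.ArrayBuffer

// Simplified model of createFetchRequests; not Spark's actual classes.
object FetchRequestSketch {
  // Hypothetical stand-ins for Spark's private FetchBlockInfo / FetchRequest.
  final case class FetchBlockInfo(blockId: String, size: Long)
  final case class FetchRequest(address: String, blocks: Seq[FetchBlockInfo]) {
    // Size of this request alone, computed the way the patched logDebug does.
    def size: Long = blocks.map(_.size).sum
  }

  // Turns accumulated blocks into requests of at most maxBlocksPerRequest blocks.
  // A trailing partial group is handed back to the caller unless isLast is set.
  def createFetchRequests(
      curBlocks: Seq[FetchBlockInfo],
      address: String,
      isLast: Boolean,
      maxBlocksPerRequest: Int,
      collected: ArrayBuffer[FetchRequest]): Seq[FetchBlockInfo] = {
    var retBlocks = Seq.empty[FetchBlockInfo]
    if (curBlocks.length <= maxBlocksPerRequest) {
      collected += FetchRequest(address, curBlocks)
    } else {
      curBlocks.grouped(maxBlocksPerRequest).foreach { blocks =>
        if (blocks.length == maxBlocksPerRequest || isLast) {
          collected += FetchRequest(address, blocks)
        } else {
          retBlocks = blocks // carried over into the next round
        }
      }
    }
    retBlocks
  }
}
```

With five blocks of 10 to 50 bytes and a limit of two blocks per request, the sketch builds requests of 30 and 70 bytes and carries the fifth block over. In this model, logging the accumulated size passed in as `curRequestSize` would have reported 150 for both requests.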

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

It only affects logging.

Closes #28028 from Ngone51/fix_curRequestSize.

Authored-by: yi.wu

Signed-off-by: Dongjoon Hyun

---

.../apache/spark/storage/ShuffleBlockFetcherIterator.scala | 14 ++

1 file changed, 6 insertions(+), 8 deletions(-)

diff --git a/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala b/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala

index f1a7d88..404e055 100644

--- a/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala

+++ b/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala

@@ -329,9 +329,8 @@ final class ShuffleBlockFetcherIterator(

private def createFetchRequest(

blocks: Seq[FetchBlockInfo],

- address: BlockManagerId,

- curRequestSize: Long): FetchRequest = {

-logDebug(s"Creating fetch request of $curRequestSize at $address "

+ address: BlockManagerId): FetchRequest = {

+logDebug(s"Creating fetch request of ${blocks.map(_.size).sum} at $address "

+ s"with ${blocks.size} blocks")

FetchRequest(address, blocks)

}

@@ -339,17 +338,16 @@ final class ShuffleBlockFetcherIterator(

private def createFetchRequests(

curBlocks: Seq[FetchBlockInfo],

address: BlockManagerId,

- curRequestSize: Long,

isLast: Boolean,

collectedRemoteRequests: ArrayBuffer[FetchRequest]): Seq[FetchBlockInfo] = {

val mergedBlocks = mergeContinuousShuffleBlockIdsIfNeeded(curBlocks)

var retBlocks = Seq.empty[FetchBlockInfo]

if (mergedBlocks.length <= maxBlocksInFlightPerAddress) {

- collectedRemoteRequests += createFetchRequest(mergedBlocks, address, curRequestSize)

+ collectedRemoteRequests += createFetchRequest(mergedBlocks, address)

} else {

mergedBlocks.grouped(maxBlocksInFlightPerAddress).foreach { blocks =>

if (blocks.length == maxBlocksInFlightPerAddress || isLast) {

- collectedRemoteRequests += createFetchRequest(blocks, address, curRequestSize)

+ collectedRemoteRequests += createFetchRequest(blocks, address)

} else {

// The last group does not exceed `maxBlocksInFlightPerAddress`. Put it back

// to `curBlocks`.

@@ -377,14 +375,14 @@ final class ShuffleBlockFetcherIterator(

// For batch fetch, the actual block in flight should count for merged block.

val mayExceedsMaxBlocks = !doBatchFetch && curBlocks.size >= maxBlocksInFlightPerAddress

if (curRequestSize >= targetRemoteRequestSize || mayExceedsMaxBlocks) {

-curBlocks = createFetchRequests(curBlocks, address, curRequestSize, isLast = false,

+curBlocks = createFetchRequests(curBlocks, address, isLast = false,

collectedRemoteRequests).to[ArrayBuffer]

curRequestSize = curBlocks.map(_.size).sum

}

}

// Add in the final request

if (curBlocks.nonEmpty) {

- curBlocks = createFetchRequests(curBlocks, address, curRequestSize, isLast = true,

+ curBlocks = createFetchRequests(curBlocks, address, isLast = true,

collectedRemoteRequests).to[ArrayBuffer]

curRequestSize = curBlocks.map(_.size).sum

}

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org


[spark] branch branch-3.0 updated: [SPARK-31259][CORE] Fix log message about fetch request size in ShuffleBlockFetcherIterator

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 8f93dc2 [SPARK-31259][CORE] Fix log message about fetch request size in ShuffleBlockFetcherIterator

8f93dc2 is described below

commit 8f93dc2f1dd8bd09d52fd3dc07a4c10e70bd237c

Author: yi.wu

AuthorDate: Thu Mar 26 09:11:13 2020 -0700

[SPARK-31259][CORE] Fix log message about fetch request size in ShuffleBlockFetcherIterator

### What changes were proposed in this pull request?

Fix the incorrect logging of `curRequestSize`.

### Why are the changes needed?

In batch mode, `curRequestSize` can be the total size of several block groups, but each group should be logged with its own request size rather than that total.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

It only affects logging.

Closes #28028 from Ngone51/fix_curRequestSize.

Authored-by: yi.wu

Signed-off-by: Dongjoon Hyun

(cherry picked from commit 33f532a9f201fb9c7895d685b3dce82cf042dc61)

Signed-off-by: Dongjoon Hyun

---

.../apache/spark/storage/ShuffleBlockFetcherIterator.scala | 14 ++

1 file changed, 6 insertions(+), 8 deletions(-)

diff --git a/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala b/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala

index f1a7d88..404e055 100644

--- a/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala

+++ b/core/src/main/scala/org/apache/spark/storage/ShuffleBlockFetcherIterator.scala

@@ -329,9 +329,8 @@ final class ShuffleBlockFetcherIterator(

private def createFetchRequest(

blocks: Seq[FetchBlockInfo],

- address: BlockManagerId,

- curRequestSize: Long): FetchRequest = {

-logDebug(s"Creating fetch request of $curRequestSize at $address "

+ address: BlockManagerId): FetchRequest = {

+logDebug(s"Creating fetch request of ${blocks.map(_.size).sum} at $address "

+ s"with ${blocks.size} blocks")

FetchRequest(address, blocks)

}

@@ -339,17 +338,16 @@ final class ShuffleBlockFetcherIterator(

private def createFetchRequests(

curBlocks: Seq[FetchBlockInfo],

address: BlockManagerId,

- curRequestSize: Long,

isLast: Boolean,

collectedRemoteRequests: ArrayBuffer[FetchRequest]): Seq[FetchBlockInfo] = {

val mergedBlocks = mergeContinuousShuffleBlockIdsIfNeeded(curBlocks)

var retBlocks = Seq.empty[FetchBlockInfo]

if (mergedBlocks.length <= maxBlocksInFlightPerAddress) {

- collectedRemoteRequests += createFetchRequest(mergedBlocks, address, curRequestSize)

+ collectedRemoteRequests += createFetchRequest(mergedBlocks, address)

} else {

mergedBlocks.grouped(maxBlocksInFlightPerAddress).foreach { blocks =>

if (blocks.length == maxBlocksInFlightPerAddress || isLast) {

- collectedRemoteRequests += createFetchRequest(blocks, address, curRequestSize)

+ collectedRemoteRequests += createFetchRequest(blocks, address)

} else {

// The last group does not exceed `maxBlocksInFlightPerAddress`. Put it back

// to `curBlocks`.

@@ -377,14 +375,14 @@ final class ShuffleBlockFetcherIterator(

// For batch fetch, the actual block in flight should count for merged block.

val mayExceedsMaxBlocks = !doBatchFetch && curBlocks.size >= maxBlocksInFlightPerAddress

if (curRequestSize >= targetRemoteRequestSize || mayExceedsMaxBlocks) {

-curBlocks = createFetchRequests(curBlocks, address, curRequestSize, isLast = false,

+curBlocks = createFetchRequests(curBlocks, address, isLast = false,

collectedRemoteRequests).to[ArrayBuffer]

curRequestSize = curBlocks.map(_.size).sum

}

}

// Add in the final request

if (curBlocks.nonEmpty) {

- curBlocks = createFetchRequests(curBlocks, address, curRequestSize, isLast = true,

+ curBlocks = createFetchRequests(curBlocks, address, isLast = true,

collectedRemoteRequests).to[ArrayBuffer]

curRequestSize = curBlocks.map(_.size).sum

}


[spark] branch branch-3.0 updated: [SPARK-31238][SQL] Rebase dates to/from Julian calendar in write/read for ORC datasource

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 78cc2ef [SPARK-31238][SQL] Rebase dates to/from Julian calendar in write/read for ORC datasource

78cc2ef is described below

commit 78cc2ef5b663d6d605e3d4febc6fb99e20b7f165

Author: Maxim Gekk

AuthorDate: Thu Mar 26 13:14:28 2020 -0700

[SPARK-31238][SQL] Rebase dates to/from Julian calendar in write/read for ORC datasource

### What changes were proposed in this pull request?

This PR (SPARK-31238) makes the following changes:

1. Modified the ORC vectorized reader, in particular `OrcColumnVector` v1.2 and v2.3. After the changes, it uses `DateTimeUtils.rebaseJulianToGregorianDays()`, added by https://github.com/apache/spark/pull/27915. The method rebases days from the hybrid calendar (Julian + Gregorian) to the Proleptic Gregorian calendar. It builds a local date in the original calendar, extracts the date fields `year`, `month` and `day` from the local date, and builds another local date in the target calend [...]

2. Introduced rebasing of dates while saving ORC files. In particular, I modified `OrcShimUtils.getDateWritable` v1.2 and v2.3 to return `DaysWritable` instead of Hive's `DateWritable`. The `DaysWritable` class was added by https://github.com/apache/spark/pull/27890 (and fixed by https://github.com/apache/spark/pull/27962). I moved `DaysWritable` from `sql/hive` to `sql/core` to reuse it in the ORC datasource.
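The day rebasing in item 1, plus the inverse step applied on write in item 2, can be sketched with plain JDK classes. This is a minimal sketch under stated assumptions, not Spark's `DateTimeUtils` code: it uses `java.util.GregorianCalendar` in UTC as the hybrid calendar (by default it switches from Julian to Gregorian on 1582-10-15) and `java.time.LocalDate` as the Proleptic Gregorian calendar. The object name `RebaseSketch` is hypothetical.

```scala
import java.time.LocalDate
import java.util.{Calendar, GregorianCalendar, TimeZone}

// Hypothetical helper object; not Spark's DateTimeUtils.
object RebaseSketch {
  private val MillisPerDay = 24L * 60 * 60 * 1000
  private val Utc = TimeZone.getTimeZone("UTC")

  // Read path: days since 1970-01-01 in the hybrid (Julian + Gregorian) calendar
  // -> days since 1970-01-01 in the Proleptic Gregorian calendar.
  def rebaseJulianToGregorianDays(hybridDays: Int): Int = {
    val cal = new GregorianCalendar(Utc) // hybrid calendar, default cutover 1582-10-15
    cal.setTimeInMillis(hybridDays * MillisPerDay)
    // Extract the local date fields in the original calendar...
    val yearOfEra = cal.get(Calendar.YEAR)
    val year = if (cal.get(Calendar.ERA) == GregorianCalendar.BC) 1 - yearOfEra else yearOfEra
    val month = cal.get(Calendar.MONTH) + 1 // Calendar months are 0-based
    val day = cal.get(Calendar.DAY_OF_MONTH)
    // ...and rebuild the same local date in the target calendar.
    LocalDate.of(year, month, day).toEpochDay.toInt
  }

  // Write path: the inverse mapping, so hybrid-calendar readers see the same local date.
  def rebaseGregorianToJulianDays(gregorianDays: Int): Int = {
    val date = LocalDate.ofEpochDay(gregorianDays.toLong)
    val cal = new GregorianCalendar(Utc)
    cal.clear()
    if (date.getYear < 1) {
      cal.set(Calendar.ERA, GregorianCalendar.BC)
      cal.set(Calendar.YEAR, 1 - date.getYear)
    } else {
      cal.set(Calendar.YEAR, date.getYear)
    }
    cal.set(Calendar.MONTH, date.getMonthValue - 1)
    cal.set(Calendar.DAY_OF_MONTH, date.getDayOfMonth)
    Math.floorDiv(cal.getTimeInMillis, MillisPerDay).toInt
  }
}
```

In this sketch, the hybrid day count for 1200-01-01 (Julian), read directly as a Proleptic Gregorian day, gives 1200-01-08, the value shown in the "before" output below; `rebaseJulianToGregorianDays` maps it back to 1200-01-01. Dates after the 1582 cutover are unchanged in both directions.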

### Why are the changes needed?

For backward compatibility with Spark 2.4 and earlier versions. The changes allow users to read dates/timestamps saved by previous versions and get the same results.

### Does this PR introduce any user-facing change?

Yes. Before the changes, loading the date `1200-01-01` saved by Spark 2.4.5 returns the following:

```scala
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-08|
+----------+
```

After the changes:

```scala
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-01|
+----------+
```

### How was this patch tested?

- By running `OrcSourceSuite` and `HiveOrcSourceSuite`.

- Added a new test `SPARK-31238: compatibility with Spark 2.4 in reading dates` to `OrcSuite`, which reads an ORC file saved by Spark 2.4.5 with the following commands:

```shell

$ export TZ="America/Los_Angeles"

```

```scala
scala> sql("select cast('1200-01-01' as date) dt").write.mode("overwrite").orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc")

scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-01|
+----------+
```

- Added the round-trip test `SPARK-31238: rebasing dates in write`. Since the test `SPARK-31238: compatibility with Spark 2.4 in reading dates` already confirms rebasing in read, the round trip checks rebasing in write.

Closes #28016 from MaxGekk/rebase-date-orc.

Authored-by: Maxim Gekk

Signed-off-by: Dongjoon Hyun

(cherry picked from commit d72ec8574113f9a7e87f3d7ec56c8447267b0506)

Signed-off-by: Dongjoon Hyun

---

.../sql/execution/datasources}/DaysWritable.scala | 10 ++--

.../test-data/before_1582_date_v2_4.snappy.orc | Bin 0 -> 201 bytes

.../execution/datasources/orc/OrcSourceSuite.scala | 28 -

.../sql/execution/datasources/orc/OrcTest.scala| 5

.../execution/datasources/orc/OrcColumnVector.java | 15 ++-

.../execution/datasources/orc}/DaysWritable.scala | 17 ++---

.../execution/datasources/orc/OrcShimUtils.scala | 4 +--

.../execution/datasources/orc/OrcColumnVector.java | 15 ++-

.../execution/datasources/orc/OrcShimUtils.scala | 5 ++--

.../org/apache/spark/sql/hive/HiveInspectors.scala | 1 +

10 files changed, 88 insertions(+), 12 deletions(-)

diff --git a/sql/hive/src/main/scala/org/apache/spark/sql/hive/DaysWritable.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DaysWritable.scala

similarity index 92%

copy from sql/hive/src/main/scala/org/apache/spark/sql/hive/DaysWritable.scala

copy to sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DaysWritable.scala

index 1eec8d7..00b710f 100644

--- a/sql/hive/src/main/scala/org/apache/spark/sql/hive/DaysWritable.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DaysWritable.scala

@@ -15,7 +15,7 @@

* limitations under the License.

*/

-package org.apache.spark.sql.hive

+package org.apache.spark.sql.execution.datasources

import java.io.{DataInput, DataOutput,

[spark] branch master updated: [SPARK-31238][SQL] Rebase dates to/from Julian calendar in write/read for ORC datasource

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new d72ec85 [SPARK-31238][SQL] Rebase dates to/from Julian calendar in write/read for ORC datasource

d72ec85 is described below

commit d72ec8574113f9a7e87f3d7ec56c8447267b0506

Author: Maxim Gekk

AuthorDate: Thu Mar 26 13:14:28 2020 -0700

[SPARK-31238][SQL] Rebase dates to/from Julian calendar in write/read for ORC datasource

### What changes were proposed in this pull request?

This PR (SPARK-31238) makes the following changes:

1. Modified the ORC vectorized reader, in particular `OrcColumnVector` v1.2 and v2.3. After the changes, it uses `DateTimeUtils.rebaseJulianToGregorianDays()`, added by https://github.com/apache/spark/pull/27915. The method rebases days from the hybrid calendar (Julian + Gregorian) to the Proleptic Gregorian calendar. It builds a local date in the original calendar, extracts the date fields `year`, `month` and `day` from the local date, and builds another local date in the target calend [...]

2. Introduced rebasing of dates while saving ORC files. In particular, I modified `OrcShimUtils.getDateWritable` v1.2 and v2.3 to return `DaysWritable` instead of Hive's `DateWritable`. The `DaysWritable` class was added by https://github.com/apache/spark/pull/27890 (and fixed by https://github.com/apache/spark/pull/27962). I moved `DaysWritable` from `sql/hive` to `sql/core` to reuse it in the ORC datasource.

### Why are the changes needed?

For backward compatibility with Spark 2.4 and earlier versions. The changes allow users to read dates/timestamps saved by previous versions and get the same results.

### Does this PR introduce any user-facing change?

Yes. Before the changes, loading the date `1200-01-01` saved by Spark 2.4.5 returns the following:

```scala
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-08|
+----------+
```

After the changes:

```scala
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-01|
+----------+
```

### How was this patch tested?

- By running `OrcSourceSuite` and `HiveOrcSourceSuite`.

- Added a new test `SPARK-31238: compatibility with Spark 2.4 in reading dates` to `OrcSuite`, which reads an ORC file saved by Spark 2.4.5 with the following commands:

```shell

$ export TZ="America/Los_Angeles"

```

```scala
scala> sql("select cast('1200-01-01' as date) dt").write.mode("overwrite").orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc")

scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-01|
+----------+
```

- Added the round-trip test `SPARK-31238: rebasing dates in write`. Since the test `SPARK-31238: compatibility with Spark 2.4 in reading dates` already confirms rebasing in read, the round trip checks rebasing in write.

Closes #28016 from MaxGekk/rebase-date-orc.

Authored-by: Maxim Gekk

Signed-off-by: Dongjoon Hyun

---

.../sql/execution/datasources}/DaysWritable.scala | 10 ++--

.../test-data/before_1582_date_v2_4.snappy.orc | Bin 0 -> 201 bytes

.../execution/datasources/orc/OrcSourceSuite.scala | 28 -

.../sql/execution/datasources/orc/OrcTest.scala| 5

.../execution/datasources/orc/OrcColumnVector.java | 15 ++-

.../execution/datasources/orc}/DaysWritable.scala | 17 ++---

.../execution/datasources/orc/OrcShimUtils.scala | 4 +--

.../execution/datasources/orc/OrcColumnVector.java | 15 ++-

.../execution/datasources/orc/OrcShimUtils.scala | 5 ++--

.../org/apache/spark/sql/hive/HiveInspectors.scala | 1 +

10 files changed, 88 insertions(+), 12 deletions(-)

diff --git a/sql/hive/src/main/scala/org/apache/spark/sql/hive/DaysWritable.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DaysWritable.scala

similarity index 92%

copy from sql/hive/src/main/scala/org/apache/spark/sql/hive/DaysWritable.scala

copy to sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DaysWritable.scala

index 1eec8d7..00b710f 100644

--- a/sql/hive/src/main/scala/org/apache/spark/sql/hive/DaysWritable.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DaysWritable.scala

@@ -15,7 +15,7 @@

* limitations under the License.

*/

-package org.apache.spark.sql.hive

+package org.apache.spark.sql.execution.datasources

import java.io.{DataInput, DataOutput, IOException}

import java.sql.Date

@@ -35,11 +35,12 @@ import


[spark] branch branch-3.0 updated: [SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

This is an automated email from the ASF dual-hosted git repository.

yamamuro pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 6f30ff4 [SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

6f30ff4 is described below

commit 6f30ff44cf2d3d347a516a0e0370d07e8de9352c

Author: beliefer

AuthorDate: Fri Mar 27 08:09:17 2020 +0900

[SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

### What changes were proposed in this pull request?

This PR is related to https://github.com/apache/spark/pull/27481.

If test case A uses `--IMPORT` to import test case B, and B contains bracketed comments, the bracketed comments are not rendered correctly in the golden files.

The content of `nested-comments.sql` is shown below:

```

-- This test case just used to test imported bracketed comments.

-- the first case of bracketed comment

--QUERY-DELIMITER-START

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first;

*/

SELECT 'selected content' AS first;

--QUERY-DELIMITER-END

```

The test case `comments.sql` imports `nested-comments.sql` as follows:

`--IMPORT nested-comments.sql`

Before this PR, the output was:

```

-- !query

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.catalyst.parser.ParseException

mismatched input '/' expecting {'(', 'ADD', 'ALTER', 'ANALYZE', 'CACHE', 'CLEAR', 'COMMENT', 'COMMIT', 'CREATE', 'DELETE', 'DESC', 'DESCRIBE', 'DFS', 'DROP', 'EXPLAIN', 'EXPORT', 'FROM', 'GRANT', 'IMPORT', 'INSERT', 'LIST', 'LOAD', 'LOCK', 'MAP', 'MERGE', 'MSCK', 'REDUCE', 'REFRESH', 'REPLACE', 'RESET', 'REVOKE', 'ROLLBACK', 'SELECT', 'SET', 'SHOW', 'START', 'TABLE', 'TRUNCATE', 'UNCACHE', 'UNLOCK', 'UPDATE', 'USE', 'VALUES', 'WITH'}(line 1, pos 0)

== SQL ==

/* This is the first example of bracketed comment.

^^^

SELECT 'ommented out content' AS first

-- !query

*/

SELECT 'selected content' AS first

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.catalyst.parser.ParseException

extraneous input '*/' expecting {'(', 'ADD', 'ALTER', 'ANALYZE', 'CACHE', 'CLEAR', 'COMMENT', 'COMMIT', 'CREATE', 'DELETE', 'DESC', 'DESCRIBE', 'DFS', 'DROP', 'EXPLAIN', 'EXPORT', 'FROM', 'GRANT', 'IMPORT', 'INSERT', 'LIST', 'LOAD', 'LOCK', 'MAP', 'MERGE', 'MSCK', 'REDUCE', 'REFRESH', 'REPLACE', 'RESET', 'REVOKE', 'ROLLBACK', 'SELECT', 'SET', 'SHOW', 'START', 'TABLE', 'TRUNCATE', 'UNCACHE', 'UNLOCK', 'UPDATE', 'USE', 'VALUES', 'WITH'}(line 1, pos 0)

== SQL ==

*/

^^^

SELECT 'selected content' AS first

```

After this PR, the output is:

```

-- !query

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first;

*/

SELECT 'selected content' AS first

-- !query schema

struct<first:string>

-- !query output

selected content

```
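The patch factors the comment/code partition into `splitCommentsAndCodes`, which keeps `--QUERY-DELIMITER` marker lines on the code side. The Scala sketch below reuses that partition rule and splits on semicolons not preceded by a backslash; `queries` is a hypothetical simplification of how a `--QUERY-DELIMITER-START`/`--QUERY-DELIMITER-END` block could be kept as one query, not Spark's actual `SQLQueryTestSuite` logic, and the object name `SqlTestSplitSketch` is likewise hypothetical.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical sketch of splitting a SQL test file into queries.
object SqlTestSplitSketch {
  // Partition rule from the patch: "--" lines are comments,
  // but "--QUERY-DELIMITER..." markers stay with the code.
  def splitCommentsAndCodes(input: String): (Array[String], Array[String]) =
    input.split("\n").partition { line =>
      val newLine = line.trim
      newLine.startsWith("--") && !newLine.startsWith("--QUERY-DELIMITER")
    }

  // Split on semicolons that are not preceded by a backslash.
  def splitWithSemicolon(seq: Seq[String]): Seq[String] =
    seq.mkString("\n").split("(?<=[^\\\\]);").toSeq

  // Simplification: everything between the START/END markers is one query
  // (only its trailing ';' is dropped); other code is split on ';'.
  def queries(code: Seq[String]): Seq[String] = {
    val out = ArrayBuffer.empty[String]
    val buf = ArrayBuffer.empty[String]
    def flushPlain(): Unit = {
      out ++= splitWithSemicolon(buf.toSeq).map(_.trim).filter(_.nonEmpty)
      buf.clear()
    }
    code.foreach { line =>
      line.trim match {
        case "--QUERY-DELIMITER-START" => flushPlain()
        case "--QUERY-DELIMITER-END" =>
          out += buf.mkString("\n").trim.stripSuffix(";")
          buf.clear()
        case _ => buf += line
      }
    }
    flushPlain()
    out.toList
  }
}
```

Run on the contents of `nested-comments.sql` above, the sketch yields two comment lines and a single query whose text matches the "after" output, with the `;` inside the bracketed comment left in place.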

### Why are the changes needed?

Golden files can't display the bracketed comments in imported test cases.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New UT.

Closes #28018 from beliefer/fix-bug-tests-imported-bracketed-comments.

Authored-by: beliefer

Signed-off-by: Takeshi Yamamuro

(cherry picked from commit 9e0fee933e62eb309d4aa32bb1e5126125d0bf9f)

Signed-off-by: Takeshi Yamamuro

---

.../src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala | 9 ++---

1 file changed, 6 insertions(+), 3 deletions(-)

diff --git a/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala b/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala

index 6c66166..848966a 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala

+++ b/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala

@@ -256,20 +256,23 @@ class SQLQueryTestSuite extends QueryTest with SharedSparkSession {

def splitWithSemicolon(seq: Seq[String]) = {

seq.mkString("\n").split("(?<=[^\\\\]);")

}

-val input = fileToString(new File(testCase.inputFile))

-val (comments, code) = input.split("\n").partition { line =>

+def splitCommentsAndCodes(input: String) = input.split("\n").partition { line =>

val newLine = line.trim

newLine.startsWith("--") && !newLine.startsWith("--QUERY-DELIMITER")

}

+val input = fileToString(new File(testCase.inputFile))

+

+val (comments, code) = splitCommentsAndCodes(input)

+

// If `--IMPORT` found, load code from another test case file, then insert them

// into the head in this test.

val importedTestCaseName =

[spark] branch master updated: [SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

This is an automated email from the ASF dual-hosted git repository.

yamamuro pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 9e0fee9 [SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

9e0fee9 is described below

commit 9e0fee933e62eb309d4aa32bb1e5126125d0bf9f

Author: beliefer

AuthorDate: Fri Mar 27 08:09:17 2020 +0900

[SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

### What changes were proposed in this pull request?

This PR is related to https://github.com/apache/spark/pull/27481.

If test case A uses `--IMPORT` to import test case B, which contains bracketed comments, the golden-file output does not display those bracketed comments correctly.

The content of `nested-comments.sql` is shown below:

```

-- This test case just used to test imported bracketed comments.

-- the first case of bracketed comment

--QUERY-DELIMITER-START

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first;

*/

SELECT 'selected content' AS first;

--QUERY-DELIMITER-END

```

The test case `comments.sql` imports `nested-comments.sql` as follows:

`--IMPORT nested-comments.sql`

Before this PR, the output was:

```

-- !query

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.catalyst.parser.ParseException

mismatched input '/' expecting {'(', 'ADD', 'ALTER', 'ANALYZE', 'CACHE', 'CLEAR', 'COMMENT', 'COMMIT', 'CREATE', 'DELETE', 'DESC', 'DESCRIBE', 'DFS', 'DROP', 'EXPLAIN', 'EXPORT', 'FROM', 'GRANT', 'IMPORT', 'INSERT', 'LIST', 'LOAD', 'LOCK', 'MAP', 'MERGE', 'MSCK', 'REDUCE', 'REFRESH', 'REPLACE', 'RESET', 'REVOKE', 'ROLLBACK', 'SELECT', 'SET', 'SHOW', 'START', 'TABLE', 'TRUNCATE', 'UNCACHE', 'UNLOCK', 'UPDATE', 'USE', 'VALUES', 'WITH'}(line 1, pos 0)

== SQL ==

/* This is the first example of bracketed comment.

^^^

SELECT 'ommented out content' AS first

-- !query

*/

SELECT 'selected content' AS first

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.catalyst.parser.ParseException

extraneous input '*/' expecting {'(', 'ADD', 'ALTER', 'ANALYZE', 'CACHE', 'CLEAR', 'COMMENT', 'COMMIT', 'CREATE', 'DELETE', 'DESC', 'DESCRIBE', 'DFS', 'DROP', 'EXPLAIN', 'EXPORT', 'FROM', 'GRANT', 'IMPORT', 'INSERT', 'LIST', 'LOAD', 'LOCK', 'MAP', 'MERGE', 'MSCK', 'REDUCE', 'REFRESH', 'REPLACE', 'RESET', 'REVOKE', 'ROLLBACK', 'SELECT', 'SET', 'SHOW', 'START', 'TABLE', 'TRUNCATE', 'UNCACHE', 'UNLOCK', 'UPDATE', 'USE', 'VALUES', 'WITH'}(line 1, pos 0)

== SQL ==

*/

^^^

SELECT 'selected content' AS first

```

After this PR, the output will be:

```

-- !query

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first;

*/

SELECT 'selected content' AS first

-- !query schema

struct<first:string>

-- !query output

selected content

```
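
A minimal Python sketch of the parsing rules visible in the Scala diff below: a trimmed line that starts with `--` is a comment unless it starts with `--QUERY-DELIMITER`, a `--IMPORT ` comment names the imported test case (hence `substring(9)`), and the code is split into queries on semicolons, which the sketch assumes means semicolons not preceded by a backslash. The function names are hypothetical, and the sketch does not model how the suite treats the lines between `--QUERY-DELIMITER-START` and `--QUERY-DELIMITER-END`. Because the delimiter markers stay on the code side, passing an imported file through the same partition helper presumably keeps its bracketed comments intact.

```python
import re


def split_comments_and_codes(text):
    """Partition lines into (comments, code).

    A trimmed line starting with "--" is a comment, except for
    "--QUERY-DELIMITER..." markers, which are kept with the code.
    """
    comments, code = [], []
    for line in text.split("\n"):
        stripped = line.strip()
        if stripped.startswith("--") and not stripped.startswith("--QUERY-DELIMITER"):
            comments.append(line)
        else:
            code.append(line)
    return comments, code


def imported_test_case_names(comments):
    # "--IMPORT " is 9 characters long, so the name starts at index 9.
    return [c[9:] for c in comments if c.startswith("--IMPORT ")]


def split_with_semicolon(lines):
    # Split on ';' only when the preceding character is not a backslash
    # (a fixed-width lookbehind, supported by Python's re module).
    return re.split(r"(?<=[^\\]);", "\n".join(lines))
```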

### Why are the changes needed?

Golden files can't display the bracketed comments in imported test cases.

### Does this PR introduce any user-facing change?

'No'.

### How was this patch tested?

New UT.

Closes #28018 from beliefer/fix-bug-tests-imported-bracketed-comments.

Authored-by: beliefer

Signed-off-by: Takeshi Yamamuro

---

.../src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala | 9 ++---

1 file changed, 6 insertions(+), 3 deletions(-)

diff --git a/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala b/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala

index 6c66166..848966a 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala

+++ b/sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala

@@ -256,20 +256,23 @@ class SQLQueryTestSuite extends QueryTest with SharedSparkSession {

def splitWithSemicolon(seq: Seq[String]) = {

seq.mkString("\n").split("(?<=[^\\\\]);")

}

-val input = fileToString(new File(testCase.inputFile))

-val (comments, code) = input.split("\n").partition { line =>

+def splitCommentsAndCodes(input: String) = input.split("\n").partition { line =>

val newLine = line.trim

newLine.startsWith("--") && !newLine.startsWith("--QUERY-DELIMITER")

}

+val input = fileToString(new File(testCase.inputFile))

+

+val (comments, code) = splitCommentsAndCodes(input)

+

// If `--IMPORT` found, load code from another test case file, then insert them

// into the head in this test.

val importedTestCaseName = comments.filter(_.startsWith("--IMPORT ")).map(_.substring(9))

val importedCode = importedTestCaseName.flatMap { testCaseName =>

[spark] branch master updated (d81df56 -> ee6f899)

This is an automated email from the ASF dual-hosted git repository.

ruifengz pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git.

from d81df56 [SPARK-31223][ML] Set seed in np.random to regenerate test data

add ee6f899 [SPARK-30934][ML][FOLLOW-UP] Update ml-guide to include MulticlassClassificationEvaluator weight support in highlights

No new revisions were added by this update.

Summary of changes:

docs/ml-guide.md | 8

1 file changed, 4 insertions(+), 4 deletions(-)

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.0 updated: [SPARK-31237][SQL][TESTS] Replace 3-letter time zones by zone offsets

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 6b568d7 [SPARK-31237][SQL][TESTS] Replace 3-letter time zones by zone offsets

6b568d7 is described below

commit 6b568d77fc0284b0b8a26b16fd105a7c5f54f874

Author: Maxim Gekk

AuthorDate: Thu Mar 26 13:36:00 2020 +0800

[SPARK-31237][SQL][TESTS] Replace 3-letter time zones by zone offsets

In this PR, I propose adding a few `ZoneId` constants to the `DateTimeTestUtils` object and reusing them in tests. The proposed constants are:

- PST = -08:00

- UTC = +00:00

- CEST = +02:00

- CET = +01:00

- JST = +09:00

- MIT = -09:30

- LA = America/Los_Angeles

All proposed constants (except `LA`) are initialized with zone offsets according to their definitions. This avoids:

- Using 3-letter time zone IDs, which are already deprecated in the JDK; see _Three-letter time zone IDs_ in https://docs.oracle.com/javase/8/docs/api/java/util/TimeZone.html

- Incorrect mapping of 3-letter time zones to zone offsets; see SPARK-31237. For example, `PST` is mapped to `America/Los_Angeles` instead of the `-08:00` zone offset.

This should also improve the stability and maintainability of the test suites.
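
To illustrate why fixed offsets are more predictable than 3-letter IDs, here is a small Python sketch using the standard `datetime.timezone` class. It is not Spark code; the constant names only mirror the list above, and the `offset` helper is hypothetical. A fixed offset such as `-08:00` never changes, whereas `America/Los_Angeles` observes daylight saving time and is at `-07:00` in summer, so mapping `PST` to the region ID gives different results for summer timestamps.

```python
from datetime import datetime, timedelta, timezone


def offset(hours, minutes=0):
    """Build a fixed-offset zone, e.g. offset(-9, -30) for -09:30."""
    return timezone(timedelta(hours=hours, minutes=minutes))


# Fixed offsets matching the constants proposed for DateTimeTestUtils.
PST = offset(-8)
UTC = offset(0)
CEST = offset(2)
CET = offset(1)
JST = offset(9)
MIT = offset(-9, -30)

# The same instant rendered in several fixed-offset zones.
instant = datetime(2020, 7, 1, 12, 0, tzinfo=timezone.utc)
local_times = {name: instant.astimezone(zone).isoformat()
               for name, zone in [("PST", PST), ("JST", JST), ("MIT", MIT)]}
```

Even on a July date, `PST` here stays at `-08:00`, which is exactly the property the tests rely on.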

No

By running affected test suites.

Closes #28001 from MaxGekk/replace-pst.

Authored-by: Maxim Gekk

Signed-off-by: Wenchen Fan

(cherry picked from commit cec9604eaec2e5ff17e705ed60565bd7506c6374)

Signed-off-by: Wenchen Fan

---

.../sql/catalyst/csv/CSVInferSchemaSuite.scala | 28 ++--

.../sql/catalyst/csv/UnivocityParserSuite.scala| 45 +++---

.../spark/sql/catalyst/expressions/CastSuite.scala | 27 ++--

.../catalyst/expressions/CodeGenerationSuite.scala | 5 +-

.../expressions/CollectionExpressionsSuite.scala | 3 +-

.../catalyst/expressions/CsvExpressionsSuite.scala | 47 +++---

.../expressions/DateExpressionsSuite.scala | 177 ++--

.../expressions/JsonExpressionsSuite.scala | 77 +

.../sql/catalyst/util/DateTimeTestUtils.scala | 17 +-

.../sql/catalyst/util/DateTimeUtilsSuite.scala | 178 ++---

.../apache/spark/sql/util/ArrowUtilsSuite.scala| 3 +-

.../spark/sql/util/TimestampFormatterSuite.scala | 11 +-

.../apache/spark/sql/DataFrameFunctionsSuite.scala | 3 +-

.../org/apache/spark/sql/DataFramePivotSuite.scala | 2 +-

.../org/apache/spark/sql/DataFrameSuite.scala | 4 +-

.../org/apache/spark/sql/DateFunctionsSuite.scala | 21 +--

.../sql/execution/datasources/csv/CSVSuite.scala | 4 +-

.../sql/execution/datasources/json/JsonSuite.scala | 10 +-

.../parquet/ParquetPartitionDiscoverySuite.scala | 10 +-

.../apache/spark/sql/internal/SQLConfSuite.scala | 5 +-

.../spark/sql/sources/PartitionedWriteSuite.scala | 6 +-

.../sql/streaming/EventTimeWatermarkSuite.scala| 3 +-

22 files changed, 346 insertions(+), 340 deletions(-)

diff --git a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/csv/CSVInferSchemaSuite.scala b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/csv/CSVInferSchemaSuite.scala

index ee73da3..b014eb9 100644

--- a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/csv/CSVInferSchemaSuite.scala

+++ b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/csv/CSVInferSchemaSuite.scala

@@ -28,7 +28,7 @@ import org.apache.spark.sql.types._

class CSVInferSchemaSuite extends SparkFunSuite with SQLHelper {

test("String fields types are inferred correctly from null types") {

-val options = new CSVOptions(Map("timestampFormat" -> "yyyy-MM-dd HH:mm:ss"), false, "GMT")

+val options = new CSVOptions(Map("timestampFormat" -> "yyyy-MM-dd HH:mm:ss"), false, "UTC")

val inferSchema = new CSVInferSchema(options)

assert(inferSchema.inferField(NullType, "") == NullType)

@@ -48,7 +48,7 @@ class CSVInferSchemaSuite extends SparkFunSuite with SQLHelper {

}

test("String fields types are inferred correctly from other types") {

-val options = new CSVOptions(Map("timestampFormat" -> "yyyy-MM-dd HH:mm:ss"), false, "GMT")

+val options = new CSVOptions(Map("timestampFormat" -> "yyyy-MM-dd HH:mm:ss"), false, "UTC")

val inferSchema = new CSVInferSchema(options)

assert(inferSchema.inferField(LongType, "1.0") == DoubleType)

@@ -69,18 +69,18 @@ class CSVInferSchemaSuite extends SparkFunSuite with SQLHelper {

}

test("Timestamp field types are inferred correctly via custom data format") {

-var options = new CSVOptions(Map("timestampFormat" -> "yyyy-mm"), false, "GMT")

+var options = new CSVOptions(Map("timestampFormat" -> "yyyy-mm"), false, "UTC")

var inferSchema = new CSVInferSchema(options)

[spark] branch branch-3.0 updated: [SPARK-30934][ML][FOLLOW-UP] Update ml-guide to include MulticlassClassificationEvaluator weight support in highlights

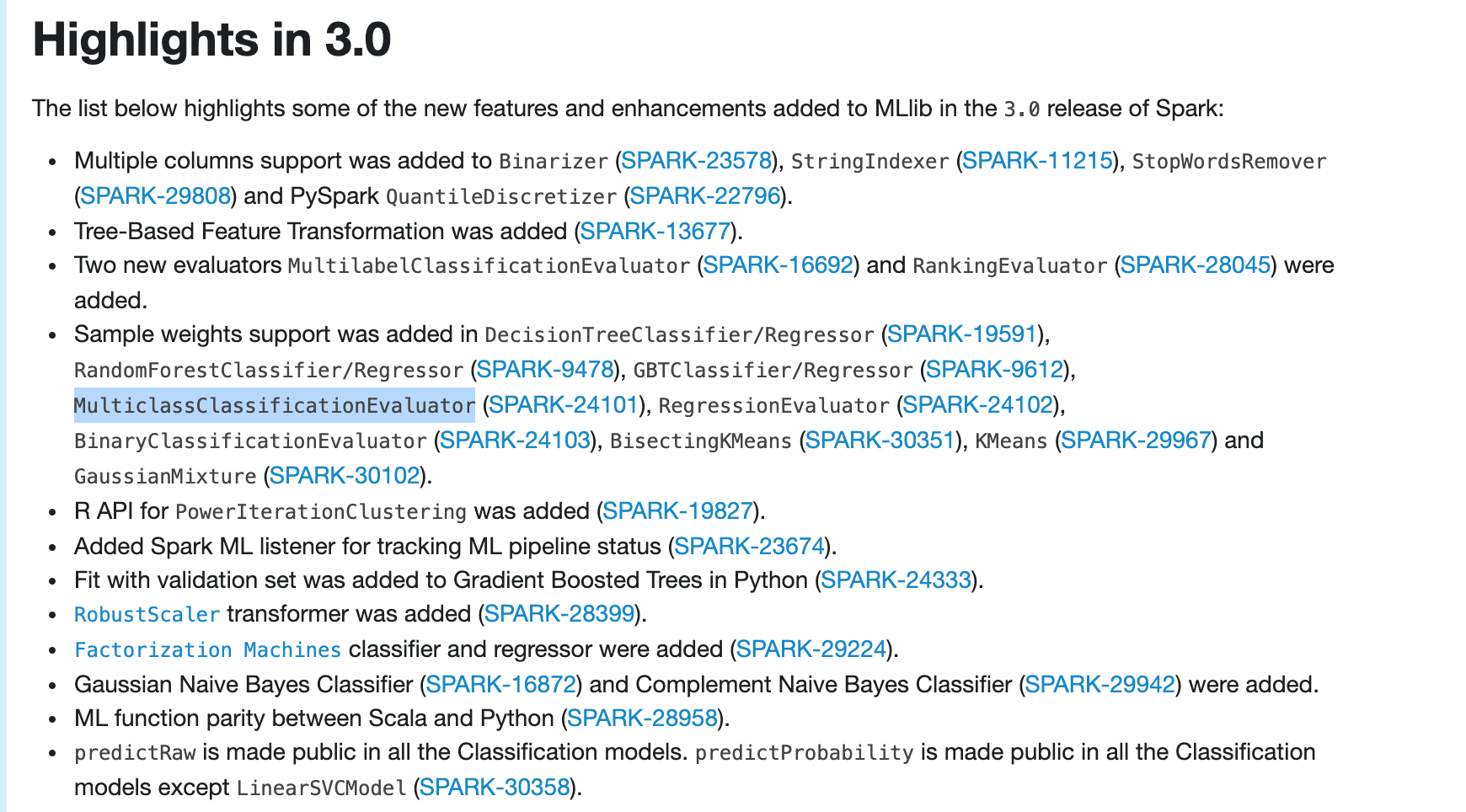

This is an automated email from the ASF dual-hosted git repository.

ruifengz pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new a36e3c4 [SPARK-30934][ML][FOLLOW-UP] Update ml-guide to include MulticlassClassificationEvaluator weight support in highlights

a36e3c4 is described below

commit a36e3c4c898b513b90e58859e3ca8e550d5cb0cd

Author: Huaxin Gao

AuthorDate: Thu Mar 26 14:24:53 2020 +0800

[SPARK-30934][ML][FOLLOW-UP] Update ml-guide to include MulticlassClassificationEvaluator weight support in highlights

### What changes were proposed in this pull request?

Update ml-guide to include `MulticlassClassificationEvaluator` weight support in highlights.

### Why are the changes needed?

`MulticlassClassificationEvaluator` weight support is very important, so it should be included in the highlights.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually built and checked.

Closes #28031 from huaxingao/highlights-followup.

Authored-by: Huaxin Gao

Signed-off-by: zhengruifeng

(cherry picked from commit ee6f8991a792e24a5b1c020d958877187af2f41b)

Signed-off-by: zhengruifeng

---

docs/ml-guide.md | 8

1 file changed, 4 insertions(+), 4 deletions(-)

diff --git a/docs/ml-guide.md b/docs/ml-guide.md

index 5ce6b4f..ddce98b 100644

--- a/docs/ml-guide.md

+++ b/docs/ml-guide.md

@@ -91,10 +91,10 @@ The list below highlights some of the new features and enhancements added to MLl

release of Spark:

* Multiple columns support was added to `Binarizer` ([SPARK-23578](https://issues.apache.org/jira/browse/SPARK-23578)), `StringIndexer` ([SPARK-11215](https://issues.apache.org/jira/browse/SPARK-11215)), `StopWordsRemover` ([SPARK-29808](https://issues.apache.org/jira/browse/SPARK-29808)) and PySpark `QuantileDiscretizer` ([SPARK-22796](https://issues.apache.org/jira/browse/SPARK-22796)).
-* Support Tree-Based Feature Transformation was added

+* Tree-Based Feature Transformation was added

([SPARK-13677](https://issues.apache.org/jira/browse/SPARK-13677)).

* Two new evaluators `MultilabelClassificationEvaluator` ([SPARK-16692](https://issues.apache.org/jira/browse/SPARK-16692)) and `RankingEvaluator` ([SPARK-28045](https://issues.apache.org/jira/browse/SPARK-28045)) were added.

-* Sample weights support was added in `DecisionTreeClassifier/Regressor` ([SPARK-19591](https://issues.apache.org/jira/browse/SPARK-19591)), `RandomForestClassifier/Regressor` ([SPARK-9478](https://issues.apache.org/jira/browse/SPARK-9478)), `GBTClassifier/Regressor` ([SPARK-9612](https://issues.apache.org/jira/browse/SPARK-9612)), `RegressionEvaluator` ([SPARK-24102](https://issues.apache.org/jira/browse/SPARK-24102)), `BinaryClassificationEvaluator` ([SPARK-24103](https://issues.apach [...]

+* Sample weights support was added in `DecisionTreeClassifier/Regressor` ([SPARK-19591](https://issues.apache.org/jira/browse/SPARK-19591)), `RandomForestClassifier/Regressor` ([SPARK-9478](https://issues.apache.org/jira/browse/SPARK-9478)), `GBTClassifier/Regressor` ([SPARK-9612](https://issues.apache.org/jira/browse/SPARK-9612)), `MulticlassClassificationEvaluator` ([SPARK-24101](https://issues.apache.org/jira/browse/SPARK-24101)), `RegressionEvaluator` ([SPARK-24102](https://issues.a [...]

* R API for `PowerIterationClustering` was added ([SPARK-19827](https://issues.apache.org/jira/browse/SPARK-19827)).

* Added Spark ML listener for tracking ML pipeline status

@@ -105,10 +105,10 @@ release of Spark:

([SPARK-28399](https://issues.apache.org/jira/browse/SPARK-28399)).

* [`Factorization Machines`](ml-classification-regression.html#factorization-machines) classifier and regressor were added ([SPARK-29224](https://issues.apache.org/jira/browse/SPARK-29224)).
-* Gaussian Naive Bayes ([SPARK-16872](https://issues.apache.org/jira/browse/SPARK-16872)) and Complement Naive Bayes ([SPARK-29942](https://issues.apache.org/jira/browse/SPARK-29942)) were added.

+* Gaussian Naive Bayes Classifier ([SPARK-16872](https://issues.apache.org/jira/browse/SPARK-16872)) and Complement Naive Bayes Classifier ([SPARK-29942](https://issues.apache.org/jira/browse/SPARK-29942)) were added.

* ML function parity between Scala and Python ([SPARK-28958](https://issues.apache.org/jira/browse/SPARK-28958)).

-* `predictRaw` is made public in all the Classification models. `predictProbability` is made public in all the Classification models except `LinearSVCModel`.

+* `predictRaw` is made public in all the Classification models. `predictProbability` is made public in all the Classification models except `LinearSVCModel`

[spark] branch master updated: [SPARK-31275][WEBUI] Improve the metrics format in ExecutionPage for StageId

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new bc37fdc [SPARK-31275][WEBUI] Improve the metrics format in ExecutionPage for StageId

bc37fdc is described below

commit bc37fdc77130ce4f60806db0bb2b1b8914452040

Author: Kousuke Saruta

AuthorDate: Fri Mar 27 13:35:28 2020 +0800

[SPARK-31275][WEBUI] Improve the metrics format in ExecutionPage for StageId

### What changes were proposed in this pull request?

In ExecutionPage, the metrics for stageId, attemptId and taskId are currently displayed in the format `(stageId (attemptId): taskId)`.

I changed this format to `(stageId.attemptId taskId)`.
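
The effect of relaxing `stageAndTaskMetricsPattern` in `spark-sql-viz.js` (the diff below drops the `attempt` token) can be checked with the same regular expression in Python's `re` module, which supports the constructs used here. The two metric labels are hypothetical samples in the old and new formats, not strings copied from the Spark UI, and `split_stage_and_task` is a hypothetical helper.

```python
import re

# The JavaScript pattern strings from the diff, written as raw Python regexes.
OLD_PATTERN = re.compile(r"^(.*)(\(stage.*attempt.*task[^)]*\))(.*)$")
NEW_PATTERN = re.compile(r"^(.*)(\(stage.*task[^)]*\))(.*)$")


def split_stage_and_task(label, pattern):
    """Return (prefix, stage/task part, suffix), or None when there is no match."""
    match = pattern.match(label)
    return match.groups() if match else None


# Hypothetical labels in the old and new formats.
old_label = "10.0 s (1.0 s, 2.0 s, 3.0 s (stage 3 (attempt 0): task 7))"
new_label = "10.0 s (1.0 s, 2.0 s, 3.0 s (stage 3.0: task 7))"
```

The old pattern requires the word `attempt`, so it no longer matches labels in the new format; the relaxed pattern matches both.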

### Why are the changes needed?

As cloud-fan suggested [here](https://github.com/apache/spark/pull/27927#discussion_r398591519), `stageId.attemptId` is the more standard notation in Spark.

### Does this PR introduce any user-facing change?

Yes. Before this change, the UI looked as follows.

After this change, it looks as follows.

### How was this patch tested?

Modified `SQLMetricsSuite` and manual test.

Closes #28039 from sarutak/improve-metrics-format.

Authored-by: Kousuke Saruta

Signed-off-by: Wenchen Fan

---

.../spark/sql/execution/ui/static/spark-sql-viz.js | 2 +-

.../spark/sql/execution/metric/SQLMetrics.scala | 12 ++--

.../spark/sql/execution/ui/ExecutionPage.scala | 2 +-

.../spark/sql/execution/metric/SQLMetricsSuite.scala | 20 ++--

.../sql/execution/metric/SQLMetricsTestUtils.scala | 18 +-

5 files changed, 27 insertions(+), 27 deletions(-)

diff --git a/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js b/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js

index 0fb7dab..bb393d9 100644

--- a/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js

+++ b/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js

@@ -73,7 +73,7 @@ function setupTooltipForSparkPlanNode(nodeId) {

// labelSeparator should be a non-graphical character in order not to affect the width of boxes.

var labelSeparator = "\x01";

-var stageAndTaskMetricsPattern = "^(.*)(\\(stage.*attempt.*task[^)]*\\))(.*)$";

+var stageAndTaskMetricsPattern = "^(.*)(\\(stage.*task[^)]*\\))(.*)$";

/*

* Helper function to pre-process the graph layout.

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala

index 65aabe0..1394e0f 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala

@@ -116,7 +116,7 @@ object SQLMetrics {

// data size total (min, med, max):

// 100GB (100MB, 1GB, 10GB)

val acc = new SQLMetric(SIZE_METRIC, -1)

-acc.register(sc, name = Some(s"$name total (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name total (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -126,7 +126,7 @@ object SQLMetrics {

// duration(min, med, max):

// 5s (800ms, 1s, 2s)

val acc = new SQLMetric(TIMING_METRIC, -1)

-acc.register(sc, name = Some(s"$name total (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name total (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -134,7 +134,7 @@ object SQLMetrics {

def createNanoTimingMetric(sc: SparkContext, name: String): SQLMetric = {

// Same with createTimingMetric, just normalize the unit of time to millisecond.

val acc = new SQLMetric(NS_TIMING_METRIC, -1)

-acc.register(sc, name = Some(s"$name total (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name total (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -150,7 +150,7 @@ object SQLMetrics {

// probe avg (min, med, max):

// (1.2, 2.2, 6.3)

val acc = new SQLMetric(AVERAGE_METRIC)

-acc.register(sc, name = Some(s"$name (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -169,11 +169,11 @@ object SQLMetrics {

* and represent it

[spark] branch branch-3.0 updated: [SPARK-31186][PYSPARK][SQL] toPandas should not fail on duplicate column names

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 6fea291 [SPARK-31186][PYSPARK][SQL] toPandas should not fail on duplicate column names

6fea291 is described below

commit 6fea291762af3e802cb4c237bdad51ebf5d7152c

Author: Liang-Chi Hsieh

AuthorDate: Fri Mar 27 12:10:30 2020 +0900

[SPARK-31186][PYSPARK][SQL] toPandas should not fail on duplicate column names

### What changes were proposed in this pull request?

When the `toPandas` API is called on a DataFrame with duplicate column names, such as those produced by operators like join, it fails with an error like:

```

ValueError: The truth value of a Series is ambiguous. Use a.empty, a.bool(), a.item(), a.any() or a.all().

```

This patch fixes the error in the `toPandas` API.
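
The core of the fix is bookkeeping by column position instead of by name. The pure-Python sketch below (no pandas) mirrors the dtype rules visible in the diff: use the corrected type unless an integral column is nullable and actually holds nulls, fall back to `float64` for integral columns with nulls, and to `object` for boolean columns with nulls. The schema shape (tuples of name, type name, nullable) and the `CORRECTED` table are simplified assumptions standing in for PySpark's schema and `_to_corrected_pandas_type`, not copies of them.

```python
from collections import Counter

INTEGRAL = {"byte", "short", "int", "long"}
# Assumed, simplified stand-in for the corrected pandas dtypes; a type that is
# missing here (e.g. "long") keeps whatever dtype pandas inferred.
CORRECTED = {"byte": "int8", "short": "int16", "int": "int32",
             "float": "float32", "boolean": "bool"}


def corrected_dtypes(schema, rows):
    """Return one dtype decision per column position (None = keep inferred)."""
    dtypes = [None] * len(schema)
    for idx, (name, type_name, nullable) in enumerate(schema):
        # Positional access stays unambiguous even if `name` is duplicated,
        # like `pdf.iloc[:, idx]` in the patch.
        has_null = any(row[idx] is None for row in rows)
        corrected = CORRECTED.get(type_name)
        if corrected is not None and not (type_name in INTEGRAL and nullable and has_null):
            dtypes[idx] = corrected
        if type_name in INTEGRAL and has_null:
            dtypes[idx] = "float64"  # integers with nulls fall back to float
        if type_name == "boolean" and has_null:
            dtypes[idx] = "object"
    return dtypes


schema = [("id", "int", False), ("v", "int", True), ("id", "int", True)]
rows = [(1, 10, None), (2, None, 5)]

counter = Counter(name for name, _, _ in schema)
duplicates = [name for name, count in counter.items() if count > 1]

# Keying by name, as the old `dtype = {}` did, collapses the two "id" columns.
by_name = {name: d for (name, _, _), d in zip(schema, corrected_dtypes(schema, rows))}
```

With positional bookkeeping the first `id` column keeps `int32` while the second falls back to `float64`; a name-keyed dict can only hold one of those decisions.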

### Why are the changes needed?

To make `toPandas` work on DataFrames with duplicate column names.

### Does this PR introduce any user-facing change?

Yes. Previously, calling the `toPandas` API on a DataFrame with duplicate column names failed. After this patch, it produces the correct result.

### How was this patch tested?

Unit test.

Closes #28025 from viirya/SPARK-31186.

Authored-by: Liang-Chi Hsieh

Signed-off-by: HyukjinKwon

(cherry picked from commit 559d3e4051500d5c49e9a7f3ac33aac3de19c9c6)

Signed-off-by: HyukjinKwon

---

python/pyspark/sql/pandas/conversion.py| 48 +++---

python/pyspark/sql/tests/test_dataframe.py | 18 +++

2 files changed, 56 insertions(+), 10 deletions(-)

diff --git a/python/pyspark/sql/pandas/conversion.py b/python/pyspark/sql/pandas/conversion.py

index 8548cd2..47cf8bb 100644

--- a/python/pyspark/sql/pandas/conversion.py

+++ b/python/pyspark/sql/pandas/conversion.py

@@ -21,6 +21,7 @@ if sys.version >= '3':

xrange = range

else:

from itertools import izip as zip

+from collections import Counter

from pyspark import since

from pyspark.rdd import _load_from_socket

@@ -131,9 +132,16 @@ class PandasConversionMixin(object):

# Below is toPandas without Arrow optimization.

pdf = pd.DataFrame.from_records(self.collect(), columns=self.columns)

+column_counter = Counter(self.columns)

+

+dtype = [None] * len(self.schema)

+for fieldIdx, field in enumerate(self.schema):

+# For duplicate column name, we use `iloc` to access it.

+if column_counter[field.name] > 1:

+pandas_col = pdf.iloc[:, fieldIdx]

+else:

+pandas_col = pdf[field.name]

-dtype = {}

-for field in self.schema:

pandas_type =

PandasConversionMixin._to_corrected_pandas_type(field.dataType)

# SPARK-21766: if an integer field is nullable and has null values, it can be

# inferred by pandas as float column. Once we convert the column with NaN back

@@ -141,16 +149,36 @@ class PandasConversionMixin(object):

# float type, not the corrected type from the schema in this case.

if pandas_type is not None and \

not(isinstance(field.dataType, IntegralType) and

field.nullable and

-pdf[field.name].isnull().any()):

-dtype[field.name] = pandas_type

+pandas_col.isnull().any()):

+dtype[fieldIdx] = pandas_type

# Ensure we fall back to nullable numpy types, even when whole column is null:

-if isinstance(field.dataType, IntegralType) and pdf[field.name].isnull().any():

-dtype[field.name] = np.float64

-if isinstance(field.dataType, BooleanType) and pdf[field.name].isnull().any():

-dtype[field.name] = np.object

+if isinstance(field.dataType, IntegralType) and pandas_col.isnull().any():

+dtype[fieldIdx] = np.float64

+if isinstance(field.dataType, BooleanType) and pandas_col.isnull().any():

+dtype[fieldIdx] = np.object

+

+df = pd.DataFrame()

+for index, t in enumerate(dtype):

+column_name = self.schema[index].name

+

+# For duplicate column name, we use `iloc` to access it.

+if column_counter[column_name] > 1:

+series = pdf.iloc[:, index]

+else:

+series = pdf[column_name]

+

+if t is not None:

+series = series.astype(t, copy=False)

+

+# `insert` API makes copy of data, we only do it for Series of duplicate column names.

+# `pdf.iloc[:, index] = pdf.iloc[:, index]...` doesn't always work because `iloc` could

+# return a view or a copy depending by context.

+if column_counter[column_name] > 1:

+

[spark] branch master updated (9e0fee9 -> 559d3e4)

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git.

from 9e0fee9 [SPARK-31262][SQL][TESTS] Fix bug tests imported bracketed comments

add 559d3e4 [SPARK-31186][PYSPARK][SQL] toPandas should not fail on duplicate column names

No new revisions were added by this update.

Summary of changes:

python/pyspark/sql/pandas/conversion.py | 48 +++---

python/pyspark/sql/tests/test_dataframe.py | 18 +++

2 files changed, 56 insertions(+), 10 deletions(-)

[spark] branch branch-3.0 updated: [SPARK-31275][WEBUI] Improve the metrics format in ExecutionPage for StageId

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new a7c58b1 [SPARK-31275][WEBUI] Improve the metrics format in ExecutionPage for StageId

a7c58b1 is described below

commit a7c58b1ae5a05f509a034ca410a50c41ce94cf5f

Author: Kousuke Saruta

AuthorDate: Fri Mar 27 13:35:28 2020 +0800

[SPARK-31275][WEBUI] Improve the metrics format in ExecutionPage for StageId

### What changes were proposed in this pull request?

In ExecutionPage, the metrics for stageId, attemptId and taskId are currently displayed in the format `(stageId (attemptId): taskId)`.

I changed this format to `(stageId.attemptId taskId)`.

### Why are the changes needed?

As cloud-fan suggested [here](https://github.com/apache/spark/pull/27927#discussion_r398591519), `stageId.attemptId` is the more standard notation in Spark.

### Does this PR introduce any user-facing change?

Yes. Before this change, the UI looked as follows.

After this change, it looks as follows.

### How was this patch tested?

Modified `SQLMetricsSuite` and manual test.

Closes #28039 from sarutak/improve-metrics-format.

Authored-by: Kousuke Saruta

Signed-off-by: Wenchen Fan

(cherry picked from commit bc37fdc77130ce4f60806db0bb2b1b8914452040)

Signed-off-by: Wenchen Fan

---

.../spark/sql/execution/ui/static/spark-sql-viz.js | 2 +-

.../spark/sql/execution/metric/SQLMetrics.scala | 12 ++--

.../spark/sql/execution/ui/ExecutionPage.scala | 2 +-

.../spark/sql/execution/metric/SQLMetricsSuite.scala | 20 ++--

.../sql/execution/metric/SQLMetricsTestUtils.scala | 18 +-

5 files changed, 27 insertions(+), 27 deletions(-)

diff --git a/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js b/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js

index b23ae9a..bded921 100644

--- a/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js

+++ b/sql/core/src/main/resources/org/apache/spark/sql/execution/ui/static/spark-sql-viz.js

@@ -78,7 +78,7 @@ function setupTooltipForSparkPlanNode(nodeId) {

// labelSeparator should be a non-graphical character in order not to affect the width of boxes.

var labelSeparator = "\x01";

-var stageAndTaskMetricsPattern = "^(.*)(\\(stage.*attempt.*task[^)]*\\))(.*)$";

+var stageAndTaskMetricsPattern = "^(.*)(\\(stage.*task[^)]*\\))(.*)$";

/*

* Helper function to pre-process the graph layout.

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala

index 65aabe0..1394e0f 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/metric/SQLMetrics.scala

@@ -116,7 +116,7 @@ object SQLMetrics {

// data size total (min, med, max):

// 100GB (100MB, 1GB, 10GB)

val acc = new SQLMetric(SIZE_METRIC, -1)

-acc.register(sc, name = Some(s"$name total (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name total (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -126,7 +126,7 @@ object SQLMetrics {

// duration(min, med, max):

// 5s (800ms, 1s, 2s)

val acc = new SQLMetric(TIMING_METRIC, -1)

-acc.register(sc, name = Some(s"$name total (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name total (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -134,7 +134,7 @@ object SQLMetrics {

def createNanoTimingMetric(sc: SparkContext, name: String): SQLMetric = {

// Same with createTimingMetric, just normalize the unit of time to millisecond.

val acc = new SQLMetric(NS_TIMING_METRIC, -1)

-acc.register(sc, name = Some(s"$name total (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name total (min, med, max (stageId: taskId))"),

countFailedValues = false)

acc

}

@@ -150,7 +150,7 @@ object SQLMetrics {

// probe avg (min, med, max):

// (1.2, 2.2, 6.3)

val acc = new SQLMetric(AVERAGE_METRIC)

-acc.register(sc, name = Some(s"$name (min, med, max (stageId (attemptId): taskId))"),

+acc.register(sc, name = Some(s"$name (min, med, max (stageId: taskId))"),

[spark] branch master updated: [SPARK-31204][SQL] HiveResult compatibility for DatasourceV2 command

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new a97d3b9 [SPARK-31204][SQL] HiveResult compatibility for DatasourceV2 command

a97d3b9 is described below

commit a97d3b9f4f4ddd215ecaa7f96c64aeba6e825f74

Author: Terry Kim

AuthorDate: Fri Mar 27 12:48:14 2020 +0800

[SPARK-31204][SQL] HiveResult compatibility for DatasourceV2 command

### What changes were proposed in this pull request?

`HiveResult` performs some conversions for commands to be compatible with

Hive output, e.g.:

```

// If it is a describe command for a Hive table, we want to have the output format be similar with Hive.

case ExecutedCommandExec(_: DescribeCommandBase) =>

...

// SHOW TABLES in Hive only output table names, while ours output database, table name, isTemp.

case command @ ExecutedCommandExec(s: ShowTablesCommand) if !s.isExtended =>

```

This conversion is needed for DatasourceV2 commands as well, and this PR proposes adding it for the v2 commands `SHOW TABLES` and `DESCRIBE TABLE`.

### Why are the changes needed?

This is a bug where conversion is not applied to v2 commands.

### Does this PR introduce any user-facing change?

Yes, the outputs of the v2 commands `SHOW TABLES` and `DESCRIBE TABLE` are now compatible with Hive output.

For example, with a table created as:

```

CREATE TABLE testcat.ns.tbl (id bigint COMMENT 'col1') USING foo

```

The output of `SHOW TABLES` has changed from

```

nstable

```

to

```

table

```

And the output of `DESCRIBE TABLE` has changed from

```

idbigintcol1

# Partitioning

Not partitioned

```

to

```

id bigint col1

# Partitioning

Not partitioned

```
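
The Hive-compatible formatting can be sketched in Python as follows. The sketch assumes the new `formatDescribeTableOutput` helper keeps the formatting of the removed v1 code shown in the diff below (each of name, data type and comment padded with `%-20s`, a null comment rendered as an empty string, fields joined with a tab) and that `SHOW TABLES` keeps only the second column, as `_.getString(1)` does. Rows are simplified to tuples and both function names are hypothetical.

```python
def format_describe_rows(rows):
    """Left-justify name, data type and comment to 20 characters (like Java's
    String.format("%-20s", s)) and join the three fields with tab characters."""
    out = []
    for name, data_type, comment in rows:
        fields = [name, data_type, comment if comment is not None else ""]
        out.append("\t".join(f"{s:<20}" for s in fields))
    return out


def show_tables_hive(rows):
    """Keep only the table name (column 1), dropping the namespace column."""
    return [row[1] for row in rows]
```

Like `%-20s`, the `<20` format spec pads shorter values but never truncates longer ones.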

### How was this patch tested?

Added unit tests.

Closes #28004 from imback82/hive_result.

Authored-by: Terry Kim

Signed-off-by: Wenchen Fan

---

.../apache/spark/sql/execution/HiveResult.scala| 29 +---

.../spark/sql/execution/HiveResultSuite.scala | 32 ++

2 files changed, 51 insertions(+), 10 deletions(-)

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala

index ff820bf..21874bd 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala

@@ -24,6 +24,7 @@ import java.time.{Instant, LocalDate}

import org.apache.spark.sql.Row

import org.apache.spark.sql.catalyst.util.{DateFormatter, DateTimeUtils, TimestampFormatter}

import org.apache.spark.sql.execution.command.{DescribeCommandBase, ExecutedCommandExec, ShowTablesCommand}

+import org.apache.spark.sql.execution.datasources.v2.{DescribeTableExec, ShowTablesExec}

import org.apache.spark.sql.internal.SQLConf

import org.apache.spark.sql.types._

import org.apache.spark.unsafe.types.CalendarInterval

@@ -38,18 +39,17 @@ object HiveResult {

*/

def hiveResultString(executedPlan: SparkPlan): Seq[String] = executedPlan match {

case ExecutedCommandExec(_: DescribeCommandBase) =>

- // If it is a describe command for a Hive table, we want to have the output format

- // be similar with Hive.

- executedPlan.executeCollectPublic().map {

-case Row(name: String, dataType: String, comment) =>

- Seq(name, dataType,

-Option(comment.asInstanceOf[String]).getOrElse(""))

-.map(s => String.format(s"%-20s", s))

-.mkString("\t")

- }

-// SHOW TABLES in Hive only output table names, while ours output database, table name, isTemp.

+ formatDescribeTableOutput(executedPlan.executeCollectPublic())

+case _: DescribeTableExec =>

+ formatDescribeTableOutput(executedPlan.executeCollectPublic())

+// SHOW TABLES in Hive only output table names while our v1 command outputs

+// database, table name, isTemp.

case command @ ExecutedCommandExec(s: ShowTablesCommand) if !s.isExtended =>

command.executeCollect().map(_.getString(1))

+// SHOW TABLES in Hive only output table names while our v2 command outputs

+// namespace and table name.

+case command : ShowTablesExec =>

+ command.executeCollect().map(_.getString(1))

case other =>

val result: Seq[Seq[Any]] =

other.executeCollectPublic().map(_.toSeq).toSeq

// We need the types so we can output struct field names

@@ -59,6 +59,15 @@ object HiveResult {

.map(_.mkString("\t"))

}

+ private def formatDescribeTableOutput(rows: Array[Row]): Seq[String] = {

+rows.map {

+

[spark] branch branch-3.0 updated: [SPARK-31204][SQL] HiveResult compatibility for DatasourceV2 command

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new dde7e45 [SPARK-31204][SQL] HiveResult compatibility for DatasourceV2 command

dde7e45 is described below

commit dde7e457e8aed561dfdc5309952bbfc99ddfc1a6

Author: Terry Kim

AuthorDate: Fri Mar 27 12:48:14 2020 +0800

[SPARK-31204][SQL] HiveResult compatibility for DatasourceV2 command

### What changes were proposed in this pull request?

`HiveResult` performs some conversions for commands to be compatible with Hive output, e.g.:

```

// If it is a describe command for a Hive table, we want to have the output format be similar with Hive.
case ExecutedCommandExec(_: DescribeCommandBase) =>
...
// SHOW TABLES in Hive only output table names, while ours output database, table name, isTemp.
case command @ ExecutedCommandExec(s: ShowTablesCommand) if !s.isExtended =>

```

This conversion is needed for DatasourceV2 commands as well, and this PR proposes to add it for the v2 commands `SHOW TABLES` and `DESCRIBE TABLE`.

### Why are the changes needed?

This is a bug: the Hive-compatible conversion is not applied to v2 commands.

### Does this PR introduce any user-facing change?

Yes. The outputs of the v2 commands `SHOW TABLES` and `DESCRIBE TABLE` are now compatible with Hive output.

For example, with a table created as:

```

CREATE TABLE testcat.ns.tbl (id bigint COMMENT 'col1') USING foo

```

The output of `SHOW TABLES` has changed from

```

ns	table

```

to

```

table

```

And the output of `DESCRIBE TABLE` has changed from

```

id	bigint	col1

# Partitioning

Not partitioned

```

to

```

id bigint col1

# Partitioning

Not partitioned

```

### How was this patch tested?

Added unit tests.

Closes #28004 from imback82/hive_result.

Authored-by: Terry Kim

Signed-off-by: Wenchen Fan

(cherry picked from commit a97d3b9f4f4ddd215ecaa7f96c64aeba6e825f74)

Signed-off-by: Wenchen Fan

---

.../apache/spark/sql/execution/HiveResult.scala| 29 +---

.../spark/sql/execution/HiveResultSuite.scala | 32 ++

2 files changed, 51 insertions(+), 10 deletions(-)

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala b/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala

index ff820bf..21874bd 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/HiveResult.scala

@@ -24,6 +24,7 @@ import java.time.{Instant, LocalDate}

import org.apache.spark.sql.Row

import org.apache.spark.sql.catalyst.util.{DateFormatter, DateTimeUtils, TimestampFormatter}

import org.apache.spark.sql.execution.command.{DescribeCommandBase, ExecutedCommandExec, ShowTablesCommand}

+import org.apache.spark.sql.execution.datasources.v2.{DescribeTableExec, ShowTablesExec}

import org.apache.spark.sql.internal.SQLConf

import org.apache.spark.sql.types._

import org.apache.spark.unsafe.types.CalendarInterval

@@ -38,18 +39,17 @@ object HiveResult {

*/

def hiveResultString(executedPlan: SparkPlan): Seq[String] = executedPlan match {

case ExecutedCommandExec(_: DescribeCommandBase) =>

- // If it is a describe command for a Hive table, we want to have the output format

- // be similar with Hive.

- executedPlan.executeCollectPublic().map {

-case Row(name: String, dataType: String, comment) =>

- Seq(name, dataType,

-Option(comment.asInstanceOf[String]).getOrElse(""))

-.map(s => String.format(s"%-20s", s))

-.mkString("\t")

- }

-// SHOW TABLES in Hive only output table names, while ours output database, table name, isTemp.

+ formatDescribeTableOutput(executedPlan.executeCollectPublic())

+case _: DescribeTableExec =>

+ formatDescribeTableOutput(executedPlan.executeCollectPublic())

+// SHOW TABLES in Hive only output table names while our v1 command outputs

+// database, table name, isTemp.

case command @ ExecutedCommandExec(s: ShowTablesCommand) if !s.isExtended =>

command.executeCollect().map(_.getString(1))

+// SHOW TABLES in Hive only output table names while our v2 command outputs

+// namespace and table name.

+case command : ShowTablesExec =>

+ command.executeCollect().map(_.getString(1))

case other =>

val result: Seq[Seq[Any]] =

other.executeCollectPublic().map(_.toSeq).toSeq

// We need the types so we can output struct field names

@@ -59,6 +59,15 @@ object HiveResult {

[spark] branch branch-3.0 updated: [SPARK-31170][SQL] Spark SQL Cli should respect hive-site.xml and spark.sql.warehouse.dir

This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 5f5ee4d [SPARK-31170][SQL] Spark SQL Cli should respect hive-site.xml and spark.sql.warehouse.dir

5f5ee4d is described below

commit 5f5ee4d84acc933112c52b1818a865139c2af05a

Author: Kent Yao

AuthorDate: Fri Mar 27 12:05:45 2020 +0800

[SPARK-31170][SQL] Spark SQL Cli should respect hive-site.xml and spark.sql.warehouse.dir

### What changes were proposed in this pull request?

In the Spark SQL CLI, we create a Hive `CliSessionState`, and it does not load `hive-site.xml`. As a result, the configurations in `hive-site.xml` do not take effect the way they do in other Spark-Hive integration apps.

Also, the warehouse directory is not picked correctly. If the `default` database does not exist, the `CliSessionState` creates one the first time it talks to the metastore. The `Location` of the default database is then neither the value of `spark.sql.warehouse.dir` nor the user-specified value of `hive.metastore.warehouse.dir`, but the default value of `hive.metastore.warehouse.dir`, which is always `/user/hive/warehouse`.
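To make the intended behavior concrete, here is a hypothetical sketch of how the default database location could be resolved. The precedence shown is an assumption modeled on how Spark's `SharedState` reconciles the two settings, and it is not the CLI code from this commit: an explicitly set `spark.sql.warehouse.dir` comes first, then `hive.metastore.warehouse.dir` from `hive-site.xml`, then Spark's own default. The point is that Hive's built-in `/user/hive/warehouse` is never picked implicitly. `WarehouseDirResolution` and its parameters are illustrative names.

```scala
// Hypothetical sketch of warehouse-directory precedence (assumed, not Spark's API).
object WarehouseDirResolution {
  // sparkWarehouseDir:    explicitly set spark.sql.warehouse.dir, if any
  // hiveSiteWarehouseDir: hive.metastore.warehouse.dir from hive-site.xml, if any
  // sparkDefault:         Spark's default warehouse path, used when neither is set
  def resolve(
      sparkWarehouseDir: Option[String],
      hiveSiteWarehouseDir: Option[String],
      sparkDefault: String): String =
    sparkWarehouseDir.orElse(hiveSiteWarehouseDir).getOrElse(sparkDefault)
}
```

With neither setting present, the sketch falls back to `sparkDefault` rather than to Hive's default.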

This PR fixes the `CliSuite` failure with the hive-1.2 profile in https://github.com/apache/spark/pull/27933.