(spark) branch master updated: [SPARK-46663][PYTHON] Disable memory profiler for pandas UDFs with iterators

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 48152b1779a5 [SPARK-46663][PYTHON] Disable memory profiler for pandas

UDFs with iterators

48152b1779a5 is described below

commit 48152b1779a5b8191dd0e09424fdb552cac55d49

Author: Xinrong Meng

AuthorDate: Tue Jan 16 11:20:40 2024 -0800

[SPARK-46663][PYTHON] Disable memory profiler for pandas UDFs with iterators

### What changes were proposed in this pull request?

When using pandas UDFs with iterators, if users enable the profiling spark

conf, a warning indicating non-support should be raised, and profiling should

be disabled.

However, currently, after raising the not-supported warning, the memory

profiler is still being enabled.

The PR proposed to fix that.

### Why are the changes needed?

A bug fix to eliminate misleading behavior.

### Does this PR introduce _any_ user-facing change?

The noticeable changes will affect only those using the PySpark shell. This

is because, in the PySpark shell, the memory profiler will raise an error,

which in turn blocks the execution of the UDF.

### How was this patch tested?

Manual test.

### Was this patch authored or co-authored using generative AI tooling?

Setup:

```py

$ ./bin/pyspark --conf spark.python.profile=true

>>> from typing import Iterator

>>> from pyspark.sql.functions import *

>>> import pandas as pd

>>> pandas_udf("long")

... def plus_one(iterator: Iterator[pd.Series]) -> Iterator[pd.Series]:

... for s in iterator:

... yield s + 1

...

>>> df = spark.createDataFrame(pd.DataFrame([1, 2, 3], columns=["v"]))

```

Before:

```

>>> df.select(plus_one(df.v)).show()

UserWarning: Profiling UDFs with iterators input/output is not supported.

Traceback (most recent call last):

...

OSError: could not get source code

```

After:

```

>>> df.select(plus_one(df.v)).show()

/Users/xinrong.meng/spark/python/pyspark/sql/udf.py:417: UserWarning:

Profiling UDFs with iterators input/output is not supported.

+---+

|plus_one(v)|

+---+

| 2|

| 3|

| 4|

+---+

```

Closes #44668 from xinrong-meng/fix_mp.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/tests/test_udf_profiler.py | 45 ++-

python/pyspark/sql/udf.py | 33 ++--

2 files changed, 60 insertions(+), 18 deletions(-)

diff --git a/python/pyspark/sql/tests/test_udf_profiler.py

b/python/pyspark/sql/tests/test_udf_profiler.py

index 136f423d0a35..776d5da88bb2 100644

--- a/python/pyspark/sql/tests/test_udf_profiler.py

+++ b/python/pyspark/sql/tests/test_udf_profiler.py

@@ -19,11 +19,13 @@ import tempfile

import unittest

import os

import sys

+import warnings

from io import StringIO

+from typing import Iterator

from pyspark import SparkConf

from pyspark.sql import SparkSession

-from pyspark.sql.functions import udf

+from pyspark.sql.functions import udf, pandas_udf

from pyspark.profiler import UDFBasicProfiler

@@ -101,6 +103,47 @@ class UDFProfilerTests(unittest.TestCase):

df = self.spark.range(10)

df.select(add1("id"), add2("id"), add1("id")).collect()

+# Unsupported

+def exec_pandas_udf_iter_to_iter(self):

+import pandas as pd

+

+@pandas_udf("int")

+def iter_to_iter(batch_ser: Iterator[pd.Series]) ->

Iterator[pd.Series]:

+for ser in batch_ser:

+yield ser + 1

+

+self.spark.range(10).select(iter_to_iter("id")).collect()

+

+# Unsupported

+def exec_map(self):

+import pandas as pd

+

+def map(pdfs: Iterator[pd.DataFrame]) -> Iterator[pd.DataFrame]:

+for pdf in pdfs:

+yield pdf[pdf.id == 1]

+

+df = self.spark.createDataFrame([(1, 1.0), (1, 2.0), (2, 3.0), (2,

5.0)], ("id", "v"))

+df.mapInPandas(map, schema=df.schema).collect()

+

+def test_unsupported(self):

+with warnings.catch_warnings(record=True) as warns:

+warnings.simplefilter("always")

+self.exec_pandas_udf_iter_to_iter()

+user_warns = [warn.message for warn in warns if

isinstance(warn.message, UserWarning)]

+self.assertTrue(len(user_warns) > 0)

+self.assertTrue(

+"Profiling UDFs with iterators input/output is not supported"

in str(user_warns[0])

+)

+

+with warnings.catch_warnin

(spark) branch master updated: [SPARK-46867][PYTHON][CONNECT][TESTS] Remove unnecessary dependency from test_mixed_udf_and_sql.py

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 79918028b142 [SPARK-46867][PYTHON][CONNECT][TESTS] Remove unnecessary

dependency from test_mixed_udf_and_sql.py

79918028b142 is described below

commit 79918028b142685fe1c3871a3593e91100ab6bbf

Author: Xinrong Meng

AuthorDate: Thu Jan 25 14:16:12 2024 -0800

[SPARK-46867][PYTHON][CONNECT][TESTS] Remove unnecessary dependency from

test_mixed_udf_and_sql.py

### What changes were proposed in this pull request?

Remove unnecessary dependency from test_mixed_udf_and_sql.py.

### Why are the changes needed?

Otherwise, test_mixed_udf_and_sql.py depends on Spark Connect's dependency

"grpc", possibly leading to conflicts or compatibility issues.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Test change only.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes #44886 from xinrong-meng/fix_dep.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/tests/connect/test_parity_pandas_udf_scalar.py | 4

python/pyspark/sql/tests/pandas/test_pandas_udf_scalar.py | 5 +++--

2 files changed, 7 insertions(+), 2 deletions(-)

diff --git a/python/pyspark/sql/tests/connect/test_parity_pandas_udf_scalar.py

b/python/pyspark/sql/tests/connect/test_parity_pandas_udf_scalar.py

index c950ca2e17c3..6a3d03246549 100644

--- a/python/pyspark/sql/tests/connect/test_parity_pandas_udf_scalar.py

+++ b/python/pyspark/sql/tests/connect/test_parity_pandas_udf_scalar.py

@@ -15,6 +15,7 @@

# limitations under the License.

#

import unittest

+from pyspark.sql.connect.column import Column

from pyspark.sql.tests.pandas.test_pandas_udf_scalar import

ScalarPandasUDFTestsMixin

from pyspark.testing.connectutils import ReusedConnectTestCase

@@ -51,6 +52,9 @@ class PandasUDFScalarParityTests(ScalarPandasUDFTestsMixin,

ReusedConnectTestCas

def test_vectorized_udf_invalid_length(self):

self.check_vectorized_udf_invalid_length()

+def test_mixed_udf_and_sql(self):

+self._test_mixed_udf_and_sql(Column)

+

if __name__ == "__main__":

from pyspark.sql.tests.connect.test_parity_pandas_udf_scalar import * #

noqa: F401

diff --git a/python/pyspark/sql/tests/pandas/test_pandas_udf_scalar.py

b/python/pyspark/sql/tests/pandas/test_pandas_udf_scalar.py

index dfbab5c8b3cd..9f6bdb83caf7 100644

--- a/python/pyspark/sql/tests/pandas/test_pandas_udf_scalar.py

+++ b/python/pyspark/sql/tests/pandas/test_pandas_udf_scalar.py

@@ -1321,8 +1321,9 @@ class ScalarPandasUDFTestsMixin:

self.assertEqual(expected_multi, df_multi_2.collect())

def test_mixed_udf_and_sql(self):

-from pyspark.sql.connect.column import Column as ConnectColumn

+self._test_mixed_udf_and_sql(Column)

+def _test_mixed_udf_and_sql(self, col_type):

df = self.spark.range(0, 1).toDF("v")

# Test mixture of UDFs, Pandas UDFs and SQL expression.

@@ -1333,7 +1334,7 @@ class ScalarPandasUDFTestsMixin:

return x + 1

def f2(x):

-assert type(x) in (Column, ConnectColumn)

+assert type(x) == col_type

return x + 10

@pandas_udf("int")

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

(spark) branch master updated: [SPARK-46689][SPARK-46690][PYTHON][CONNECT] Support v2 profiling in group/cogroup applyInPandas/applyInArrow

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 1a66c8c78a46 [SPARK-46689][SPARK-46690][PYTHON][CONNECT] Support v2

profiling in group/cogroup applyInPandas/applyInArrow

1a66c8c78a46 is described below

commit 1a66c8c78a468a5bdc6c033e8c7a26693e4bf62e

Author: Xinrong Meng

AuthorDate: Thu Feb 8 10:56:28 2024 -0800

[SPARK-46689][SPARK-46690][PYTHON][CONNECT] Support v2 profiling in

group/cogroup applyInPandas/applyInArrow

### What changes were proposed in this pull request?

Support v2 (perf, memory) profiling in group/cogroup

applyInPandas/applyInArrow, which rely on physical plan nodes

FlatMapGroupsInBatchExec and FlatMapCoGroupsInBatchExec.

### Why are the changes needed?

Complete v2 profiling support.

### Does this PR introduce _any_ user-facing change?

Yes. V2 profiling in group/cogroup applyInPandas/applyInArrow is supported.

### How was this patch tested?

Unit tests.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes #45050 from xinrong-meng/other_p2.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/tests/test_udf_profiler.py | 123 +

python/pyspark/tests/test_memory_profiler.py | 123 +

.../python/FlatMapCoGroupsInBatchExec.scala| 2 +-

.../python/FlatMapGroupsInBatchExec.scala | 2 +-

4 files changed, 248 insertions(+), 2 deletions(-)

diff --git a/python/pyspark/sql/tests/test_udf_profiler.py

b/python/pyspark/sql/tests/test_udf_profiler.py

index 99719b5475c1..4f767d274414 100644

--- a/python/pyspark/sql/tests/test_udf_profiler.py

+++ b/python/pyspark/sql/tests/test_udf_profiler.py

@@ -394,6 +394,129 @@ class UDFProfiler2TestsMixin:

io.getvalue(),

f"2.*{os.path.basename(inspect.getfile(_do_computation))}"

)

+@unittest.skipIf(

+not have_pandas or not have_pyarrow,

+cast(str, pandas_requirement_message or pyarrow_requirement_message),

+)

+def test_perf_profiler_group_apply_in_pandas(self):

+# FlatMapGroupsInBatchExec

+df = self.spark.createDataFrame(

+[(1, 1.0), (1, 2.0), (2, 3.0), (2, 5.0), (2, 10.0)], ("id", "v")

+)

+

+def normalize(pdf):

+v = pdf.v

+return pdf.assign(v=(v - v.mean()) / v.std())

+

+with self.sql_conf({"spark.sql.pyspark.udf.profiler": "perf"}):

+df.groupby("id").applyInPandas(normalize, schema="id long, v

double").show()

+

+self.assertEqual(1, len(self.profile_results),

str(self.profile_results.keys()))

+

+for id in self.profile_results:

+with self.trap_stdout() as io:

+self.spark.showPerfProfiles(id)

+

+self.assertIn(f"Profile of UDF", io.getvalue())

+self.assertRegex(

+io.getvalue(),

f"2.*{os.path.basename(inspect.getfile(_do_computation))}"

+)

+

+@unittest.skipIf(

+not have_pandas or not have_pyarrow,

+cast(str, pandas_requirement_message or pyarrow_requirement_message),

+)

+def test_perf_profiler_cogroup_apply_in_pandas(self):

+# FlatMapCoGroupsInBatchExec

+import pandas as pd

+

+df1 = self.spark.createDataFrame(

+[(2101, 1, 1.0), (2101, 2, 2.0), (2102, 1, 3.0),

(2102, 2, 4.0)],

+("time", "id", "v1"),

+)

+df2 = self.spark.createDataFrame(

+[(2101, 1, "x"), (2101, 2, "y")], ("time", "id", "v2")

+)

+

+def asof_join(left, right):

+return pd.merge_asof(left, right, on="time", by="id")

+

+with self.sql_conf({"spark.sql.pyspark.udf.profiler": "perf"}):

+df1.groupby("id").cogroup(df2.groupby("id")).applyInPandas(

+asof_join, schema="time int, id int, v1 double, v2 string"

+).show()

+

+self.assertEqual(1, len(self.profile_results),

str(self.profile_results.keys()))

+

+for id in self.profile_results:

+with self.trap_stdout() as io:

+self.spark.showPerfProfiles(id)

+

+self.assertIn(f"Profile of UDF", io.getvalue())

+self.assertRegex(

+io.getvalue(),

f"2.*{os.path.basename(inspect.getfile(_do_computation))}"

+)

+

+@unittest.skipIf(

+not have_pandas or not have_pyarrow,

+cast(str, pandas_requirement_message or pyarrow_requirement_messag

(spark) branch master updated: [SPARK-47014][PYTHON][CONNECT] Implement methods dumpPerfProfiles and dumpMemoryProfiles of SparkSession

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 4b9e9d7a9b7c [SPARK-47014][PYTHON][CONNECT] Implement methods

dumpPerfProfiles and dumpMemoryProfiles of SparkSession

4b9e9d7a9b7c is described below

commit 4b9e9d7a9b7c1b21c7d04cdf0095cc069a35b757

Author: Xinrong Meng

AuthorDate: Wed Feb 14 10:37:33 2024 -0800

[SPARK-47014][PYTHON][CONNECT] Implement methods dumpPerfProfiles and

dumpMemoryProfiles of SparkSession

### What changes were proposed in this pull request?

Implement methods dumpPerfProfiles and dumpMemoryProfiles of SparkSession

### Why are the changes needed?

Complete support of (v2) SparkSession-based profiling.

### Does this PR introduce _any_ user-facing change?

Yes. dumpPerfProfiles and dumpMemoryProfiles of SparkSession are supported.

An example of dumpPerfProfiles is shown below.

```py

>>> udf("long")

... def add(x):

... return x + 1

...

>>> spark.conf.set("spark.sql.pyspark.udf.profiler", "perf")

>>> spark.range(10).select(add("id")).collect()

...

>>> spark.dumpPerfProfiles("dummy_dir")

>>> os.listdir("dummy_dir")

['udf_2.pstats']

```

### How was this patch tested?

Unit tests.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes #45073 from xinrong-meng/dump_profile.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/connect/session.py | 10 +

python/pyspark/sql/profiler.py| 65 +++

python/pyspark/sql/session.py | 10 +

python/pyspark/sql/tests/test_udf_profiler.py | 20 +

python/pyspark/tests/test_memory_profiler.py | 22 +

5 files changed, 110 insertions(+), 17 deletions(-)

diff --git a/python/pyspark/sql/connect/session.py

b/python/pyspark/sql/connect/session.py

index 9a678c28a6cc..764f71ccc415 100644

--- a/python/pyspark/sql/connect/session.py

+++ b/python/pyspark/sql/connect/session.py

@@ -958,6 +958,16 @@ class SparkSession:

showMemoryProfiles.__doc__ = PySparkSession.showMemoryProfiles.__doc__

+def dumpPerfProfiles(self, path: str, id: Optional[int] = None) -> None:

+self._profiler_collector.dump_perf_profiles(path, id)

+

+dumpPerfProfiles.__doc__ = PySparkSession.dumpPerfProfiles.__doc__

+

+def dumpMemoryProfiles(self, path: str, id: Optional[int] = None) -> None:

+self._profiler_collector.dump_memory_profiles(path, id)

+

+dumpMemoryProfiles.__doc__ = PySparkSession.dumpMemoryProfiles.__doc__

+

SparkSession.__doc__ = PySparkSession.__doc__

diff --git a/python/pyspark/sql/profiler.py b/python/pyspark/sql/profiler.py

index 565752197238..0db9d9b8b9b4 100644

--- a/python/pyspark/sql/profiler.py

+++ b/python/pyspark/sql/profiler.py

@@ -15,6 +15,7 @@

# limitations under the License.

#

from abc import ABC, abstractmethod

+import os

import pstats

from threading import RLock

from typing import Dict, Optional, TYPE_CHECKING

@@ -158,6 +159,70 @@ class ProfilerCollector(ABC):

"""

...

+def dump_perf_profiles(self, path: str, id: Optional[int] = None) -> None:

+"""

+Dump the perf profile results into directory `path`.

+

+.. versionadded:: 4.0.0

+

+Parameters

+--

+path: str

+A directory in which to dump the perf profile.

+id : int, optional

+A UDF ID to be shown. If not specified, all the results will be

shown.

+"""

+with self._lock:

+stats = self._perf_profile_results

+

+def dump(id: int) -> None:

+s = stats.get(id)

+

+if s is not None:

+if not os.path.exists(path):

+os.makedirs(path)

+p = os.path.join(path, f"udf_{id}_perf.pstats")

+s.dump_stats(p)

+

+if id is not None:

+dump(id)

+else:

+for id in sorted(stats.keys()):

+dump(id)

+

+def dump_memory_profiles(self, path: str, id: Optional[int] = None) ->

None:

+"""

+Dump the memory profile results into directory `path`.

+

+.. versionadded:: 4.0.0

+

+Parameters

+--

+path: str

+A directory in which to dump the memory profile.

+id : int, optional

+A UDF ID to be shown. If not specified, all the results will be

shown.

+"""

+with self._lock:

+code_map = self._memory_profile_res

(spark) branch master updated (6de527e9ee94 -> 6185e5cad7be)

This is an automated email from the ASF dual-hosted git repository. xinrong pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 6de527e9ee94 [SPARK-43259][SQL] Assign a name to the error class _LEGACY_ERROR_TEMP_2024 add 6185e5cad7be [SPARK-47132][DOCS][PYTHON] Correct docstring for pyspark's dataframe.head No new revisions were added by this update. Summary of changes: python/pyspark/sql/dataframe.py | 7 +-- 1 file changed, 5 insertions(+), 2 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

(spark) branch master updated (06c741a0061b -> d20650bc8cf2)

This is an automated email from the ASF dual-hosted git repository. xinrong pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 06c741a0061b [SPARK-47129][CONNECT][SQL] Make `ResolveRelations` cache connect plan properly add d20650bc8cf2 [SPARK-46975][PS] Support dedicated fallback methods No new revisions were added by this update. Summary of changes: python/pyspark/pandas/frame.py | 49 +++--- 1 file changed, 36 insertions(+), 13 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

(spark) branch master updated: [SPARK-47276][PYTHON][CONNECT] Introduce `spark.profile.clear` for SparkSession-based profiling

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 501999a834ea [SPARK-47276][PYTHON][CONNECT] Introduce

`spark.profile.clear` for SparkSession-based profiling

501999a834ea is described below

commit 501999a834ea7761a792b823c543e40fba84231d

Author: Xinrong Meng

AuthorDate: Thu Mar 7 13:20:39 2024 -0800

[SPARK-47276][PYTHON][CONNECT] Introduce `spark.profile.clear` for

SparkSession-based profiling

### What changes were proposed in this pull request?

Introduce `spark.profile.clear` for SparkSession-based profiling.

### Why are the changes needed?

A straightforward and unified interface for managing and resetting

profiling results for SparkSession-based profilers.

### Does this PR introduce _any_ user-facing change?

Yes. `spark.profile.clear` is supported as shown below.

Preparation:

```py

>>> from pyspark.sql.functions import pandas_udf

>>> df = spark.range(3)

>>> pandas_udf("long")

... def add1(x):

... return x + 1

...

>>> added = df.select(add1("id"))

>>> spark.conf.set("spark.sql.pyspark.udf.profiler", "perf")

>>> added.show()

++

|add1(id)|

++

...

++

>>> spark.profile.show()

Profile of UDF

1410 function calls (1374 primitive calls) in 0.004 seconds

...

```

Example usage:

```py

>>> spark.profile.profiler_collector._profile_results

{2: (, None)}

>>> spark.profile.clear(1) # id mismatch

>>> spark.profile.profiler_collector._profile_results

{2: (, None)}

>>> spark.profile.clear(type="memory") # type mismatch

>>> spark.profile.profiler_collector._profile_results

{2: (, None)}

>>> spark.profile.clear() # clear all

>>> spark.profile.profiler_collector._profile_results

{}

>>> spark.profile.show()

>>>

```

### How was this patch tested?

Unit tests.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes #45378 from xinrong-meng/profile_clear.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/profiler.py| 79 +++

python/pyspark/sql/tests/test_session.py | 27 +

python/pyspark/sql/tests/test_udf_profiler.py | 26 +

python/pyspark/tests/test_memory_profiler.py | 59

4 files changed, 191 insertions(+)

diff --git a/python/pyspark/sql/profiler.py b/python/pyspark/sql/profiler.py

index 5ab27bce2582..711e39de4723 100644

--- a/python/pyspark/sql/profiler.py

+++ b/python/pyspark/sql/profiler.py

@@ -224,6 +224,56 @@ class ProfilerCollector(ABC):

for id in sorted(code_map.keys()):

dump(id)

+def clear_perf_profiles(self, id: Optional[int] = None) -> None:

+"""

+Clear the perf profile results.

+

+.. versionadded:: 4.0.0

+

+Parameters

+--

+id : int, optional

+The UDF ID whose profiling results should be cleared.

+If not specified, all the results will be cleared.

+"""

+with self._lock:

+if id is not None:

+if id in self._profile_results:

+perf, mem, *_ = self._profile_results[id]

+self._profile_results[id] = (None, mem, *_)

+if mem is None:

+self._profile_results.pop(id, None)

+else:

+for id, (perf, mem, *_) in list(self._profile_results.items()):

+self._profile_results[id] = (None, mem, *_)

+if mem is None:

+self._profile_results.pop(id, None)

+

+def clear_memory_profiles(self, id: Optional[int] = None) -> None:

+"""

+Clear the memory profile results.

+

+.. versionadded:: 4.0.0

+

+Parameters

+--

+id : int, optional

+The UDF ID whose profiling results should be cleared.

+If not specified, all the results will be cleared.

+"""

+with self._lock:

+if id is not None:

+if id in self._profile_results:

+perf, mem, *_ = self._profile_results[id]

+self._profile_results[id] = (perf, None, *_)

+if perf is N

(spark) branch master updated (f9ebe1b3d24b -> 6c827c10dc15)

This is an automated email from the ASF dual-hosted git repository. xinrong pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from f9ebe1b3d24b [SPARK-46375][DOCS] Add user guide for Python data source API add 6c827c10dc15 [SPARK-47876][PYTHON][DOCS] Improve docstring of mapInArrow No new revisions were added by this update. Summary of changes: python/pyspark/sql/pandas/map_ops.py | 19 +-- 1 file changed, 9 insertions(+), 10 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

(spark) branch master updated: [SPARK-47864][FOLLOWUP][PYTHON][DOCS] Fix minor typo: "MLLib" -> "MLlib"

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new e50737be366a [SPARK-47864][FOLLOWUP][PYTHON][DOCS] Fix minor typo:

"MLLib" -> "MLlib"

e50737be366a is described below

commit e50737be366ac0e8d5466b714f7d41991d0b05a8

Author: Haejoon Lee

AuthorDate: Tue Apr 23 10:10:20 2024 -0700

[SPARK-47864][FOLLOWUP][PYTHON][DOCS] Fix minor typo: "MLLib" -> "MLlib"

### What changes were proposed in this pull request?

This PR followups for https://github.com/apache/spark/pull/46096 to fix

minor typo.

### Why are the changes needed?

To use official naming from documentation for `MLlib` instead of `MLLib`.

See https://spark.apache.org/mllib/.

### Does this PR introduce _any_ user-facing change?

No API change, but the user-facing documentation will be updated.

### How was this patch tested?

Manually built the doc from local test envs.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes #46174 from itholic/minor_typo_installation.

Authored-by: Haejoon Lee

Signed-off-by: Xinrong Meng

---

python/docs/source/getting_started/install.rst | 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

diff --git a/python/docs/source/getting_started/install.rst

b/python/docs/source/getting_started/install.rst

index 33a0560764df..ee894981387a 100644

--- a/python/docs/source/getting_started/install.rst

+++ b/python/docs/source/getting_started/install.rst

@@ -244,7 +244,7 @@ Additional libraries that enhance functionality but are not

included in the inst

- **matplotlib**: Provide plotting for visualization. The default is

**plotly**.

-MLLib DataFrame-based API

+MLlib DataFrame-based API

^

Installable with ``pip install "pyspark[ml]"``.

@@ -252,7 +252,7 @@ Installable with ``pip install "pyspark[ml]"``.

=== = ==

Package Supported version Note

=== = ==

-`numpy` >=1.21Required for MLLib DataFrame-based API

+`numpy` >=1.21Required for MLlib DataFrame-based API

=== = ==

Additional libraries that enhance functionality but are not included in the

installation packages:

@@ -272,5 +272,5 @@ Installable with ``pip install "pyspark[mllib]"``.

=== = ==

Package Supported version Note

=== = ==

-`numpy` >=1.21Required for MLLib

+`numpy` >=1.21Required for MLlib

=== = ==

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

(spark) branch master updated: [SPARK-46277][PYTHON] Validate startup urls with the config being set

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 027aeb1764a8 [SPARK-46277][PYTHON] Validate startup urls with the

config being set

027aeb1764a8 is described below

commit 027aeb1764a816858b7ea071cd2b620f02a6a525

Author: Xinrong Meng

AuthorDate: Thu Dec 7 13:45:31 2023 -0800

[SPARK-46277][PYTHON] Validate startup urls with the config being set

### What changes were proposed in this pull request?

Validate startup urls with the config being set, see example in the "Does

this PR introduce _any_ user-facing change".

### Why are the changes needed?

Clear and user-friendly error messages.

### Does this PR introduce _any_ user-facing change?

Yes.

FROM

```py

>>> SparkSession.builder.config(map={"spark.master": "x", "spark.remote":

"y"})

>> SparkSession.builder.config(map={"spark.master": "x", "spark.remote":

"y"}).config("x", "z") # Only raises the error when adding new configs

Traceback (most recent call last):

...

RuntimeError: Spark master cannot be configured with Spark Connect server;

however, found URL for Spark Connect [y]

```

TO

```py

>>> SparkSession.builder.config(map={"spark.master": "x", "spark.remote":

"y"})

Traceback (most recent call last):

...

RuntimeError: Spark master cannot be configured with Spark Connect server;

however, found URL for Spark Connect [y]

```

### How was this patch tested?

Unit tests.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes #44194 from xinrong-meng/fix_session.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/errors/error_classes.py | 6 +++---

python/pyspark/sql/session.py| 28 +++-

python/pyspark/sql/tests/test_session.py | 30 --

3 files changed, 42 insertions(+), 22 deletions(-)

diff --git a/python/pyspark/errors/error_classes.py

b/python/pyspark/errors/error_classes.py

index 965fd04a9135..cc8400270967 100644

--- a/python/pyspark/errors/error_classes.py

+++ b/python/pyspark/errors/error_classes.py

@@ -86,12 +86,12 @@ ERROR_CLASSES_JSON = """

},

"CANNOT_CONFIGURE_SPARK_CONNECT": {

"message": [

- "Spark Connect server cannot be configured with Spark master; however,

found URL for Spark master []."

+ "Spark Connect server cannot be configured: Existing [],

New []."

]

},

- "CANNOT_CONFIGURE_SPARK_MASTER": {

+ "CANNOT_CONFIGURE_SPARK_CONNECT_MASTER": {

"message": [

- "Spark master cannot be configured with Spark Connect server; however,

found URL for Spark Connect []."

+ "Spark Connect server and Spark master cannot be configured together:

Spark master [], Spark Connect []."

]

},

"CANNOT_CONVERT_COLUMN_INTO_BOOL": {

diff --git a/python/pyspark/sql/session.py b/python/pyspark/sql/session.py

index 7f4589557cd2..86aacfa54c6e 100644

--- a/python/pyspark/sql/session.py

+++ b/python/pyspark/sql/session.py

@@ -286,17 +286,17 @@ class SparkSession(SparkConversionMixin):

with self._lock:

if conf is not None:

for k, v in conf.getAll():

-self._validate_startup_urls()

self._options[k] = v

+self._validate_startup_urls()

elif map is not None:

for k, v in map.items(): # type: ignore[assignment]

v = to_str(v) # type: ignore[assignment]

-self._validate_startup_urls()

self._options[k] = v

+self._validate_startup_urls()

else:

value = to_str(value)

-self._validate_startup_urls()

self._options[cast(str, key)] = value

+self._validate_startup_urls()

return self

def _validate_startup_urls(

@@ -306,22 +306,16 @@ class SparkSession(SparkConversionMixin):

Helper function that validates the combination of startup URLs and

raises an exception

if incompatible options are selected.

"""

-if "spark.master" in self._options and (

+if ("spark.master" in self._options or "MASTER" in os.environ) and

(

"spark.remote" in self._options or "SP

[spark] branch master updated: [SPARK-41372][CONNECT][PYTHON] Implement DataFrame TempView

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new d2d1b50bfac [SPARK-41372][CONNECT][PYTHON] Implement DataFrame TempView

d2d1b50bfac is described below

commit d2d1b50bfacf1c5bdcf56f150ae44d1b7e5cb5a6

Author: Rui Wang

AuthorDate: Mon Dec 5 09:20:10 2022 -0800

[SPARK-41372][CONNECT][PYTHON] Implement DataFrame TempView

### What changes were proposed in this pull request?

Implement DataFrame TempView (which is createTemView and

createOrReplaceTempView). This is a session local temp view which is different

from the global temp view.

### Why are the changes needed?

API coverage.

### Does this PR introduce _any_ user-facing change?

NO

### How was this patch tested?

UT

Closes #38891 from amaliujia/createTempView.

Authored-by: Rui Wang

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/connect/dataframe.py| 38 ++

.../sql/tests/connect/test_connect_basic.py| 14 +++-

2 files changed, 51 insertions(+), 1 deletion(-)

diff --git a/python/pyspark/sql/connect/dataframe.py

b/python/pyspark/sql/connect/dataframe.py

index 8e8a5f4d318..026b7e6099f 100644

--- a/python/pyspark/sql/connect/dataframe.py

+++ b/python/pyspark/sql/connect/dataframe.py

@@ -1554,6 +1554,44 @@ class DataFrame(object):

"""

print(self._explain_string(extended=extended, mode=mode))

+def createTempView(self, name: str) -> None:

+"""Creates a local temporary view with this :class:`DataFrame`.

+

+The lifetime of this temporary table is tied to the

:class:`SparkSession`

+that was used to create this :class:`DataFrame`.

+throws :class:`TempTableAlreadyExistsException`, if the view name

already exists in the

+catalog.

+

+.. versionadded:: 3.4.0

+

+Parameters

+--

+name : str

+Name of the view.

+"""

+command = plan.CreateView(

+child=self._plan, name=name, is_global=False, replace=False

+).command(session=self._session.client)

+self._session.client.execute_command(command)

+

+def createOrReplaceTempView(self, name: str) -> None:

+"""Creates or replaces a local temporary view with this

:class:`DataFrame`.

+

+The lifetime of this temporary table is tied to the

:class:`SparkSession`

+that was used to create this :class:`DataFrame`.

+

+.. versionadded:: 3.4.0

+

+Parameters

+--

+name : str

+Name of the view.

+"""

+command = plan.CreateView(

+child=self._plan, name=name, is_global=False, replace=True

+).command(session=self._session.client)

+self._session.client.execute_command(command)

+

def createGlobalTempView(self, name: str) -> None:

"""Creates a global temporary view with this :class:`DataFrame`.

diff --git a/python/pyspark/sql/tests/connect/test_connect_basic.py

b/python/pyspark/sql/tests/connect/test_connect_basic.py

index abab47b36bf..22ee98558de 100644

--- a/python/pyspark/sql/tests/connect/test_connect_basic.py

+++ b/python/pyspark/sql/tests/connect/test_connect_basic.py

@@ -530,11 +530,23 @@ class SparkConnectTests(SparkConnectSQLTestCase):

self.connect.sql("SELECT 2 AS X LIMIT

1").createOrReplaceGlobalTempView("view_1")

self.assertTrue(self.spark.catalog.tableExists("global_temp.view_1"))

-# Test when creating a view which is alreayd exists but

+# Test when creating a view which is already exists but

self.assertTrue(self.spark.catalog.tableExists("global_temp.view_1"))

with self.assertRaises(grpc.RpcError):

self.connect.sql("SELECT 1 AS X LIMIT

0").createGlobalTempView("view_1")

+def test_create_session_local_temp_view(self):

+# SPARK-41372: test session local temp view creation.

+with self.tempView("view_local_temp"):

+self.connect.sql("SELECT 1 AS X").createTempView("view_local_temp")

+self.assertEqual(self.connect.sql("SELECT * FROM

view_local_temp").count(), 1)

+self.connect.sql("SELECT 1 AS X LIMIT

0").createOrReplaceTempView("view_local_temp")

+self.assertEqual(self.connect.sql("SELECT * FROM

view_local_temp").count(), 0)

+

+# Test when creating a view which is already exists but

+with self.assertRaises(grpc.RpcError):

+self.connect.sql("SELECT 1 AS X LIMIT

0").

[spark] branch master updated: [SPARK-41225][CONNECT][PYTHON][FOLLOW-UP] Disable unsupported functions

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 29a70117b27 [SPARK-41225][CONNECT][PYTHON][FOLLOW-UP] Disable

unsupported functions

29a70117b27 is described below

commit 29a70117b272582d11e7b7b8951dff1be91d3de7

Author: Martin Grund

AuthorDate: Fri Dec 9 14:55:50 2022 -0800

[SPARK-41225][CONNECT][PYTHON][FOLLOW-UP] Disable unsupported functions

### What changes were proposed in this pull request?

This patch adds method stubs for unsupported functions in the Python client

for Spark Connect in the `Column` class that will throw a

`NoteImplementedError` when called. This is to give a clear indication to the

users that these methods will be implemented in the future.

### Why are the changes needed?

UX

### Does this PR introduce _any_ user-facing change?

NO

### How was this patch tested?

UT

Closes #39009 from grundprinzip/SPARK-41225-v2.

Authored-by: Martin Grund

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/connect/column.py | 36 ++

.../sql/tests/connect/test_connect_column.py | 25 +++

2 files changed, 61 insertions(+)

diff --git a/python/pyspark/sql/connect/column.py

b/python/pyspark/sql/connect/column.py

index 63e95c851db..f1a909b89fc 100644

--- a/python/pyspark/sql/connect/column.py

+++ b/python/pyspark/sql/connect/column.py

@@ -786,3 +786,39 @@ class Column:

def __repr__(self) -> str:

return "Column<'%s'>" % self._expr.__repr__()

+

+def otherwise(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("otherwise() is not yet implemented.")

+

+def over(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("over() is not yet implemented.")

+

+def isin(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("isin() is not yet implemented.")

+

+def when(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("when() is not yet implemented.")

+

+def getItem(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("getItem() is not yet implemented.")

+

+def astype(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("astype() is not yet implemented.")

+

+def between(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("between() is not yet implemented.")

+

+def getField(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("getField() is not yet implemented.")

+

+def withField(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("withField() is not yet implemented.")

+

+def dropFields(self, *args: Any, **kwargs: Any) -> None:

+raise NotImplementedError("dropFields() is not yet implemented.")

+

+def __getitem__(self, k: Any) -> None:

+raise NotImplementedError("apply() - __getitem__ is not yet

implemented.")

+

+def __iter__(self) -> None:

+raise TypeError("Column is not iterable")

diff --git a/python/pyspark/sql/tests/connect/test_connect_column.py

b/python/pyspark/sql/tests/connect/test_connect_column.py

index c73f1b5b0c7..734b0bbf226 100644

--- a/python/pyspark/sql/tests/connect/test_connect_column.py

+++ b/python/pyspark/sql/tests/connect/test_connect_column.py

@@ -119,6 +119,31 @@ class SparkConnectTests(SparkConnectSQLTestCase):

df.select(df.id.cast(x)).toPandas(),

df2.select(df2.id.cast(x)).toPandas()

)

+def test_unsupported_functions(self):

+# SPARK-41225: Disable unsupported functions.

+c = self.connect.range(1).id

+for f in (

+"otherwise",

+"over",

+"isin",

+"when",

+"getItem",

+"astype",

+"between",

+"getField",

+"withField",

+"dropFields",

+):

+with self.assertRaises(NotImplementedError):

+getattr(c, f)()

+

+with self.assertRaises(NotImplementedError):

+c["a"]

+

+with self.assertRaises(TypeError):

+for x in c:

+pass

+

if __name__ == "__main__":

import unittest

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-40307][PYTHON] Introduce Arrow-optimized Python UDFs

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 1d3ec69dfdf [SPARK-40307][PYTHON] Introduce Arrow-optimized Python UDFs

1d3ec69dfdf is described below

commit 1d3ec69dfdf3edb0d688fb5294f8a17cc8f5e7e9

Author: Xinrong Meng

AuthorDate: Thu Jan 12 20:23:20 2023 +0800

[SPARK-40307][PYTHON] Introduce Arrow-optimized Python UDFs

### What changes were proposed in this pull request?

Introduce Arrow-optimized Python UDFs. Please refer to

[design](https://docs.google.com/document/d/e/2PACX-1vQxFyrMqFM3zhDhKlczrl9ONixk56cVXUwDXK0MMx4Vv2kH3oo-tWYoujhrGbCXTF78CSD2kZtnhnrQ/pub)

for design details and micro benchmarks.

There are two ways to enable/disable the Arrow optimization for Python UDFs:

- the Spark configuration `spark.sql.execution.pythonUDF.arrow.enabled`,

disabled by default.

- the `useArrow` parameter of the `udf` function, None by default.

The Spark configuration takes effect only when `useArrow` is None.

Otherwise, `useArrow` decides whether a specific user-defined function is

optimized by Arrow or not.

The reason why we introduce these two ways is to provide both a convenient,

per-Spark-session control and a finer-grained, per-UDF control of the Arrow

optimization for Python UDFs.

### Why are the changes needed?

Python user-defined function (UDF) enables users to run arbitrary code

against PySpark columns. It uses Pickle for (de)serialization and executes row

by row.

One major performance bottleneck of Python UDFs is (de)serialization, that

is, the data interchanging between the worker JVM and the spawned Python

subprocess which actually executes the UDF.

The PR proposes a better alternative to handle the (de)serialization:

Arrow, which is used in the (de)serialization of Pandas UDF already.

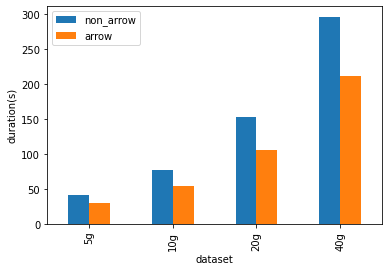

Benchmark

The micro benchmarks are conducted in a cluster with 1 driver (i3.2xlarge),

2 workers (i3.2xlarge). An i3.2xlarge machine has 61 GB Memory, 8 Cores. The

datasets used in the benchmarks are generated and sized 5 GB, 10 GB, 20 GB and

40 GB.

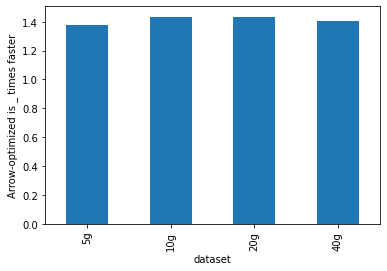

As shown below, Arrow-optimized Python UDFs are **~1.4x** faster than

non-Arrow-optimized Python UDFs.

Please refer to

[design](https://docs.google.com/document/d/e/2PACX-1vQxFyrMqFM3zhDhKlczrl9ONixk56cVXUwDXK0MMx4Vv2kH3oo-tWYoujhrGbCXTF78CSD2kZtnhnrQ/pub)

for details.

### Does this PR introduce _any_ user-facing change?

No, since the Arrow optimization for Python UDFs is disabled by default.

### How was this patch tested?

Unit tests.

Below is the script to generate the result table when the Arrow's type

coercion is needed, as in the

[docstring](https://github.com/apache/spark/pull/39384/files#diff-2df611ab00519d2d67e5fc20960bd5a6bd76ecd6f7d56cd50d8befd6ce30081bR96-R111)

of `_create_py_udf` .

```

import sys

import array

import datetime

from decimal import Decimal

from pyspark.sql import Row

from pyspark.sql.types import *

from pyspark.sql.functions import udf

data = [

None,

True,

1,

"a",

datetime.date(1970, 1, 1),

datetime.datetime(1970, 1, 1, 0, 0),

1.0,

array.array("i", [1]),

[1],

(1,),

bytearray([65, 66, 67]),

Decimal(1),

{"a": 1},

]

types = [

BooleanType(),

ByteType(),

ShortType(),

IntegerType(),

LongType(),

StringType(),

DateType(),

TimestampType(),

FloatType(),

DoubleType(),

BinaryType(),

DecimalType(10, 0),

]

df = spark.range(1)

results = []

count = 0

total = len(types) * len(data)

spark.sparkContext.setLogLevel("FATAL")

for t in types:

result = []

for v in data:

try:

row = df.select(udf(lambda _: v, t)("id")).first()

ret_str = repr(row[0])

except Exception:

ret_str = "X"

result.append(ret_str)

progress = "SQL Type: [%s]\n Python Value: [%s(%s)]\n Result

Python Value: [%s]" % (

t.simpleString(), str(v), type(v).__name__, ret_str)

count += 1

print("%s/%s:\n %s" % (count, total, progress))

results.append([t.simpleString()] + list(map(str, result)))

schema = ["SQL Type \\ Python Val

[spark] branch branch-3.4 created (now c43be4eeeea)

This is an automated email from the ASF dual-hosted git repository. xinrong pushed a change to branch branch-3.4 in repository https://gitbox.apache.org/repos/asf/spark.git at c43be4a [SPARK-42119][SQL] Add built-in table-valued functions inline and inline_outer No new revisions were added by this update. - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-42126][PYTHON][CONNECT] Accept return type in DDL strings for Python Scalar UDFs in Spark Connect

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new dbd667e7bc5 [SPARK-42126][PYTHON][CONNECT] Accept return type in DDL

strings for Python Scalar UDFs in Spark Connect

dbd667e7bc5 is described below

commit dbd667e7bc5fee443b8a39ca56d4cf3dd1bb2bae

Author: Xinrong Meng

AuthorDate: Thu Jan 26 19:15:13 2023 +0800

[SPARK-42126][PYTHON][CONNECT] Accept return type in DDL strings for Python

Scalar UDFs in Spark Connect

### What changes were proposed in this pull request?

Accept return type in DDL strings for Python Scalar UDFs in Spark Connect.

The approach proposed in this PR is a workaround to parse DataType from DDL

strings. We should think of a more elegant alternative to replace that later.

### Why are the changes needed?

To reach parity with vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. Return type in DDL strings are accepted now.

### How was this patch tested?

Unit tests.

Closes #39739 from xinrong-meng/datatype_ddl.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/connect/udf.py| 20 +++-

.../sql/tests/connect/test_connect_function.py | 8

2 files changed, 27 insertions(+), 1 deletion(-)

diff --git a/python/pyspark/sql/connect/udf.py

b/python/pyspark/sql/connect/udf.py

index 4a465084838..d0eb2fdfe6c 100644

--- a/python/pyspark/sql/connect/udf.py

+++ b/python/pyspark/sql/connect/udf.py

@@ -28,6 +28,7 @@ from pyspark.sql.connect.expressions import (

)

from pyspark.sql.connect.column import Column

from pyspark.sql.types import DataType, StringType

+from pyspark.sql.utils import is_remote

if TYPE_CHECKING:

@@ -90,7 +91,24 @@ class UserDefinedFunction:

)

self.func = func

-self._returnType = returnType

+

+if isinstance(returnType, str):

+# Currently we don't have a way to have a current Spark session in

Spark Connect, and

+# pyspark.sql.SparkSession has a centralized logic to control the

session creation.

+# So uses pyspark.sql.SparkSession for now. Should replace this to

using the current

+# Spark session for Spark Connect in the future.

+from pyspark.sql import SparkSession as PySparkSession

+

+assert is_remote()

+return_type_schema = ( # a workaround to parse the DataType from

DDL strings

+PySparkSession.builder.getOrCreate()

+.createDataFrame(data=[], schema=returnType)

+.schema

+)

+assert len(return_type_schema.fields) == 1, "returnType should be

singular"

+self._returnType = return_type_schema.fields[0].dataType

+else:

+self._returnType = returnType

self._name = name or (

func.__name__ if hasattr(func, "__name__") else

func.__class__.__name__

)

diff --git a/python/pyspark/sql/tests/connect/test_connect_function.py

b/python/pyspark/sql/tests/connect/test_connect_function.py

index 7042a7e8e6f..50fadb49ed4 100644

--- a/python/pyspark/sql/tests/connect/test_connect_function.py

+++ b/python/pyspark/sql/tests/connect/test_connect_function.py

@@ -2299,6 +2299,14 @@ class SparkConnectFunctionTests(ReusedConnectTestCase,

PandasOnSparkTestUtils, S

cdf.withColumn("A", CF.udf(lambda x: x + 1)(cdf.a)).toPandas(),

sdf.withColumn("A", SF.udf(lambda x: x + 1)(sdf.a)).toPandas(),

)

+self.assert_eq( # returnType as DDL strings

+cdf.withColumn("C", CF.udf(lambda x: len(x),

"int")(cdf.c)).toPandas(),

+sdf.withColumn("C", SF.udf(lambda x: len(x),

"int")(sdf.c)).toPandas(),

+)

+self.assert_eq( # returnType as DataType

+cdf.withColumn("C", CF.udf(lambda x: len(x),

IntegerType())(cdf.c)).toPandas(),

+sdf.withColumn("C", SF.udf(lambda x: len(x),

IntegerType())(sdf.c)).toPandas(),

+)

# as a decorator

@CF.udf(StringType())

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.4 updated: [SPARK-42126][PYTHON][CONNECT] Accept return type in DDL strings for Python Scalar UDFs in Spark Connect

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch branch-3.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.4 by this push:

new 79e8df84309 [SPARK-42126][PYTHON][CONNECT] Accept return type in DDL

strings for Python Scalar UDFs in Spark Connect

79e8df84309 is described below

commit 79e8df84309ed54d0c3fc7face414e6c440daa81

Author: Xinrong Meng

AuthorDate: Thu Jan 26 19:15:13 2023 +0800

[SPARK-42126][PYTHON][CONNECT] Accept return type in DDL strings for Python

Scalar UDFs in Spark Connect

### What changes were proposed in this pull request?

Accept return type in DDL strings for Python Scalar UDFs in Spark Connect.

The approach proposed in this PR is a workaround to parse DataType from DDL

strings. We should think of a more elegant alternative to replace that later.

### Why are the changes needed?

To reach parity with vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. Return type in DDL strings are accepted now.

### How was this patch tested?

Unit tests.

Closes #39739 from xinrong-meng/datatype_ddl.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

(cherry picked from commit dbd667e7bc5fee443b8a39ca56d4cf3dd1bb2bae)

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/connect/udf.py| 20 +++-

.../sql/tests/connect/test_connect_function.py | 8

2 files changed, 27 insertions(+), 1 deletion(-)

diff --git a/python/pyspark/sql/connect/udf.py

b/python/pyspark/sql/connect/udf.py

index 4a465084838..d0eb2fdfe6c 100644

--- a/python/pyspark/sql/connect/udf.py

+++ b/python/pyspark/sql/connect/udf.py

@@ -28,6 +28,7 @@ from pyspark.sql.connect.expressions import (

)

from pyspark.sql.connect.column import Column

from pyspark.sql.types import DataType, StringType

+from pyspark.sql.utils import is_remote

if TYPE_CHECKING:

@@ -90,7 +91,24 @@ class UserDefinedFunction:

)

self.func = func

-self._returnType = returnType

+

+if isinstance(returnType, str):

+# Currently we don't have a way to have a current Spark session in

Spark Connect, and

+# pyspark.sql.SparkSession has a centralized logic to control the

session creation.

+# So uses pyspark.sql.SparkSession for now. Should replace this to

using the current

+# Spark session for Spark Connect in the future.

+from pyspark.sql import SparkSession as PySparkSession

+

+assert is_remote()

+return_type_schema = ( # a workaround to parse the DataType from

DDL strings

+PySparkSession.builder.getOrCreate()

+.createDataFrame(data=[], schema=returnType)

+.schema

+)

+assert len(return_type_schema.fields) == 1, "returnType should be

singular"

+self._returnType = return_type_schema.fields[0].dataType

+else:

+self._returnType = returnType

self._name = name or (

func.__name__ if hasattr(func, "__name__") else

func.__class__.__name__

)

diff --git a/python/pyspark/sql/tests/connect/test_connect_function.py

b/python/pyspark/sql/tests/connect/test_connect_function.py

index 7042a7e8e6f..50fadb49ed4 100644

--- a/python/pyspark/sql/tests/connect/test_connect_function.py

+++ b/python/pyspark/sql/tests/connect/test_connect_function.py

@@ -2299,6 +2299,14 @@ class SparkConnectFunctionTests(ReusedConnectTestCase,

PandasOnSparkTestUtils, S

cdf.withColumn("A", CF.udf(lambda x: x + 1)(cdf.a)).toPandas(),

sdf.withColumn("A", SF.udf(lambda x: x + 1)(sdf.a)).toPandas(),

)

+self.assert_eq( # returnType as DDL strings

+cdf.withColumn("C", CF.udf(lambda x: len(x),

"int")(cdf.c)).toPandas(),

+sdf.withColumn("C", SF.udf(lambda x: len(x),

"int")(sdf.c)).toPandas(),

+)

+self.assert_eq( # returnType as DataType

+cdf.withColumn("C", CF.udf(lambda x: len(x),

IntegerType())(cdf.c)).toPandas(),

+sdf.withColumn("C", SF.udf(lambda x: len(x),

IntegerType())(sdf.c)).toPandas(),

+)

# as a decorator

@CF.udf(StringType())

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-42125][CONNECT][PYTHON] Pandas UDF in Spark Connect

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 0db63df2b28 [SPARK-42125][CONNECT][PYTHON] Pandas UDF in Spark Connect

0db63df2b28 is described below

commit 0db63df2b2829f1358fb711cd657a22b7838ece2

Author: Xinrong Meng

AuthorDate: Tue Jan 31 09:12:20 2023 +0800

[SPARK-42125][CONNECT][PYTHON] Pandas UDF in Spark Connect

### What changes were proposed in this pull request?

Support Pandas UDF in Spark Connect.

Since Pandas UDF and scalar inline Python UDF share the same proto message,

`ScalarInlineUserDefinedFunction` is renamed to `CommonUserDefinedFunction`.

### Why are the changes needed?

To reach parity with the vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. Pandas UDF is supported in Spark Connect, as shown below.

```py

>>> from pyspark.sql.functions import pandas_udf

>>> import pandas as pd

>>> pandas_udf("double")

... def mean_udf(v: pd.Series) -> float:

... return v.mean()

...

>>> df = spark.createDataFrame([(1, 1.0), (1, 2.0), (2, 3.0), (2, 5.0), (2,

10.0)], ("id", "v"))

>>> type(df)

>>> df.groupby("id").agg(mean_udf("v")).show()

+---+---+

| id|mean_udf(v)|

+---+---+

| 1|1.5|

| 2|6.0|

+---+---+

>>>

```

### How was this patch tested?

Existing tests.

Closes #39753 from xinrong-meng/connect_pd_udf.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

.../main/protobuf/spark/connect/expressions.proto| 4 ++--

.../sql/connect/planner/SparkConnectPlanner.scala| 12 ++--

.../connect/messages/ConnectProtoMessagesSuite.scala | 10 +-

python/pyspark/sql/connect/expressions.py| 13 +++--

python/pyspark/sql/connect/proto/expressions_pb2.py | 20 ++--

python/pyspark/sql/connect/proto/expressions_pb2.pyi | 20 ++--

python/pyspark/sql/connect/udf.py| 4 ++--

python/pyspark/sql/pandas/functions.py | 11 ++-

8 files changed, 52 insertions(+), 42 deletions(-)

diff --git

a/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

b/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

index 7ae0a6c5008..5b27d4593db 100644

--- a/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

+++ b/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

@@ -44,7 +44,7 @@ message Expression {

UnresolvedExtractValue unresolved_extract_value = 12;

UpdateFields update_fields = 13;

UnresolvedNamedLambdaVariable unresolved_named_lambda_variable = 14;

-ScalarInlineUserDefinedFunction scalar_inline_user_defined_function = 15;

+CommonInlineUserDefinedFunction common_inline_user_defined_function = 15;

// This field is used to mark extensions to the protocol. When plugins

generate arbitrary

// relations they can add them here. During the planning the correct

resolution is done.

@@ -297,7 +297,7 @@ message Expression {

}

}

-message ScalarInlineUserDefinedFunction {

+message CommonInlineUserDefinedFunction {

// (Required) Name of the user-defined function.

string function_name = 1;

// (Required) Indicate if the user-defined function is deterministic.

diff --git

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

index dc921cee282..9b5c4b93f62 100644

---

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

+++

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

@@ -742,8 +742,8 @@ class SparkConnectPlanner(val session: SparkSession) {

transformWindowExpression(exp.getWindow)

case proto.Expression.ExprTypeCase.EXTENSION =>

transformExpressionPlugin(exp.getExtension)

- case proto.Expression.ExprTypeCase.SCALAR_INLINE_USER_DEFINED_FUNCTION =>

-

transformScalarInlineUserDefinedFunction(exp.getScalarInlineUserDefinedFunction)

+ case proto.Expression.ExprTypeCase.COMMON_INLINE_USER_DEFINED_FUNCTION =>

+

transformCommonInlineUserDefinedFunction(exp.getCommonInlineUserDefinedFunction)

case _ =>

throw InvalidPlanInput(

s"Expression with ID: ${exp.getExprTypeCase.getNumber} is not

supported")

@@ -826,10 +826,10 @@ class SparkConnectPlanner(val session: SparkSession) {

* @re

[spark] branch branch-3.4 updated: [SPARK-42125][CONNECT][PYTHON] Pandas UDF in Spark Connect

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch branch-3.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.4 by this push:

new f599c9daeb0 [SPARK-42125][CONNECT][PYTHON] Pandas UDF in Spark Connect

f599c9daeb0 is described below

commit f599c9daeb06c81c9986d73a94abdf2592ac6f75

Author: Xinrong Meng

AuthorDate: Tue Jan 31 09:12:20 2023 +0800

[SPARK-42125][CONNECT][PYTHON] Pandas UDF in Spark Connect

### What changes were proposed in this pull request?

Support Pandas UDF in Spark Connect.

Since Pandas UDF and scalar inline Python UDF share the same proto message,

`ScalarInlineUserDefinedFunction` is renamed to `CommonUserDefinedFunction`.

### Why are the changes needed?

To reach parity with the vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. Pandas UDF is supported in Spark Connect, as shown below.

```py

>>> from pyspark.sql.functions import pandas_udf

>>> import pandas as pd

>>> pandas_udf("double")

... def mean_udf(v: pd.Series) -> float:

... return v.mean()

...

>>> df = spark.createDataFrame([(1, 1.0), (1, 2.0), (2, 3.0), (2, 5.0), (2,

10.0)], ("id", "v"))

>>> type(df)

>>> df.groupby("id").agg(mean_udf("v")).show()

+---+---+

| id|mean_udf(v)|

+---+---+

| 1|1.5|

| 2|6.0|

+---+---+

>>>

```

### How was this patch tested?

Existing tests.

Closes #39753 from xinrong-meng/connect_pd_udf.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

(cherry picked from commit 0db63df2b2829f1358fb711cd657a22b7838ece2)

Signed-off-by: Xinrong Meng

---

.../main/protobuf/spark/connect/expressions.proto| 4 ++--

.../sql/connect/planner/SparkConnectPlanner.scala| 12 ++--

.../connect/messages/ConnectProtoMessagesSuite.scala | 10 +-

python/pyspark/sql/connect/expressions.py| 13 +++--

python/pyspark/sql/connect/proto/expressions_pb2.py | 20 ++--

python/pyspark/sql/connect/proto/expressions_pb2.pyi | 20 ++--

python/pyspark/sql/connect/udf.py| 4 ++--

python/pyspark/sql/pandas/functions.py | 11 ++-

8 files changed, 52 insertions(+), 42 deletions(-)

diff --git

a/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

b/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

index 7ae0a6c5008..5b27d4593db 100644

--- a/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

+++ b/connector/connect/common/src/main/protobuf/spark/connect/expressions.proto

@@ -44,7 +44,7 @@ message Expression {

UnresolvedExtractValue unresolved_extract_value = 12;

UpdateFields update_fields = 13;

UnresolvedNamedLambdaVariable unresolved_named_lambda_variable = 14;

-ScalarInlineUserDefinedFunction scalar_inline_user_defined_function = 15;

+CommonInlineUserDefinedFunction common_inline_user_defined_function = 15;

// This field is used to mark extensions to the protocol. When plugins

generate arbitrary

// relations they can add them here. During the planning the correct

resolution is done.

@@ -297,7 +297,7 @@ message Expression {

}

}

-message ScalarInlineUserDefinedFunction {

+message CommonInlineUserDefinedFunction {

// (Required) Name of the user-defined function.

string function_name = 1;

// (Required) Indicate if the user-defined function is deterministic.

diff --git

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

index dc921cee282..9b5c4b93f62 100644

---

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

+++

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

@@ -742,8 +742,8 @@ class SparkConnectPlanner(val session: SparkSession) {

transformWindowExpression(exp.getWindow)

case proto.Expression.ExprTypeCase.EXTENSION =>

transformExpressionPlugin(exp.getExtension)

- case proto.Expression.ExprTypeCase.SCALAR_INLINE_USER_DEFINED_FUNCTION =>

-

transformScalarInlineUserDefinedFunction(exp.getScalarInlineUserDefinedFunction)

+ case proto.Expression.ExprTypeCase.COMMON_INLINE_USER_DEFINED_FUNCTION =>

+

transformCommonInlineUserDefinedFunction(exp.getCommonInlineUserDefinedFunction)

case _ =>

throw InvalidPlanInput(

s"Expression with ID: ${exp.getExprTypeCase.getNumb

[spark] branch master updated: [SPARK-42210][CONNECT][PYTHON] Standardize registered pickled Python UDFs

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new e7eb836376b [SPARK-42210][CONNECT][PYTHON] Standardize registered

pickled Python UDFs

e7eb836376b is described below

commit e7eb836376b72ae58b741e87d40f2d42c9914537

Author: Xinrong Meng

AuthorDate: Thu Feb 9 18:18:08 2023 +0800

[SPARK-42210][CONNECT][PYTHON] Standardize registered pickled Python UDFs

### What changes were proposed in this pull request?

Standardize registered pickled Python UDFs, specifically, implement

`spark.udf.register()`.

### Why are the changes needed?

To reach parity with vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. `spark.udf.register()` is added as shown below:

```py

>>> spark.udf

>>> f = spark.udf.register("f", lambda x: x+1, "int")

>>> f

at 0x7fbc905e5e50>

>>> spark.sql("SELECT f(id) FROM range(2)").collect()

[Row(f(id)=1), Row(f(id)=2)]

```

### How was this patch tested?

Unit tests.

Closes #39860 from xinrong-meng/connect_registered_udf.

Lead-authored-by: Xinrong Meng

Co-authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

.../src/main/protobuf/spark/connect/commands.proto | 1 +

.../sql/connect/planner/SparkConnectPlanner.scala | 33

python/pyspark/sql/connect/client.py | 59 ++

python/pyspark/sql/connect/expressions.py | 7 +++

python/pyspark/sql/connect/proto/commands_pb2.py | 40 +++

python/pyspark/sql/connect/proto/commands_pb2.pyi | 17 ++-

python/pyspark/sql/connect/session.py | 9 +++-

python/pyspark/sql/connect/udf.py | 58 -

python/pyspark/sql/session.py | 6 +--

.../sql/tests/connect/test_connect_basic.py| 1 -

.../pyspark/sql/tests/connect/test_parity_udf.py | 17 ---

python/pyspark/sql/udf.py | 3 ++

12 files changed, 216 insertions(+), 35 deletions(-)

diff --git

a/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

b/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

index 05c91d2c992..73218697577 100644

--- a/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

+++ b/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

@@ -31,6 +31,7 @@ option java_package = "org.apache.spark.connect.proto";

// produce a relational result.

message Command {

oneof command_type {

+CommonInlineUserDefinedFunction register_function = 1;

WriteOperation write_operation = 2;

CreateDataFrameViewCommand create_dataframe_view = 3;

WriteOperationV2 write_operation_v2 = 4;

diff --git

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

index c8a0860b871..3bf5d2b1d30 100644

---

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

+++

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

@@ -44,6 +44,7 @@ import org.apache.spark.sql.errors.QueryCompilationErrors

import org.apache.spark.sql.execution.QueryExecution

import org.apache.spark.sql.execution.arrow.ArrowConverters

import org.apache.spark.sql.execution.command.CreateViewCommand

+import org.apache.spark.sql.execution.python.UserDefinedPythonFunction

import org.apache.spark.sql.functions.{col, expr}

import org.apache.spark.sql.internal.CatalogImpl

import org.apache.spark.sql.types._

@@ -1399,6 +1400,8 @@ class SparkConnectPlanner(val session: SparkSession) {

def process(command: proto.Command): Unit = {

command.getCommandTypeCase match {

+ case proto.Command.CommandTypeCase.REGISTER_FUNCTION =>

+handleRegisterUserDefinedFunction(command.getRegisterFunction)

case proto.Command.CommandTypeCase.WRITE_OPERATION =>

handleWriteOperation(command.getWriteOperation)

case proto.Command.CommandTypeCase.CREATE_DATAFRAME_VIEW =>

@@ -1411,6 +1414,36 @@ class SparkConnectPlanner(val session: SparkSession) {

}

}

+ private def handleRegisterUserDefinedFunction(

+ fun: proto.CommonInlineUserDefinedFunction): Unit = {

+fun.getFunctionCase match {

+ case proto.CommonInlineUserDefinedFunction.FunctionCase.PYTHON_UDF =>

+handleRegisterPythonUDF(fun)

+ case _ =>

+throw InvalidPlanInput(

+ s"Function with ID: ${fun.getFunctionCase.getNumber} is not

supported")

+}

+ }

+

+

[spark] branch branch-3.4 updated: [SPARK-42210][CONNECT][PYTHON] Standardize registered pickled Python UDFs

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch branch-3.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.4 by this push:

new 9e2fc6448e7 [SPARK-42210][CONNECT][PYTHON] Standardize registered

pickled Python UDFs

9e2fc6448e7 is described below

commit 9e2fc6448e71c00b831d34e289278e6418d6d59f

Author: Xinrong Meng

AuthorDate: Thu Feb 9 18:18:08 2023 +0800

[SPARK-42210][CONNECT][PYTHON] Standardize registered pickled Python UDFs

### What changes were proposed in this pull request?

Standardize registered pickled Python UDFs, specifically, implement

`spark.udf.register()`.

### Why are the changes needed?

To reach parity with vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. `spark.udf.register()` is added as shown below:

```py

>>> spark.udf

>>> f = spark.udf.register("f", lambda x: x+1, "int")

>>> f

at 0x7fbc905e5e50>

>>> spark.sql("SELECT f(id) FROM range(2)").collect()

[Row(f(id)=1), Row(f(id)=2)]

```

### How was this patch tested?

Unit tests.

Closes #39860 from xinrong-meng/connect_registered_udf.

Lead-authored-by: Xinrong Meng

Co-authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

(cherry picked from commit e7eb836376b72ae58b741e87d40f2d42c9914537)

Signed-off-by: Xinrong Meng

---

.../src/main/protobuf/spark/connect/commands.proto | 1 +

.../sql/connect/planner/SparkConnectPlanner.scala | 33

python/pyspark/sql/connect/client.py | 59 ++

python/pyspark/sql/connect/expressions.py | 7 +++

python/pyspark/sql/connect/proto/commands_pb2.py | 40 +++

python/pyspark/sql/connect/proto/commands_pb2.pyi | 17 ++-

python/pyspark/sql/connect/session.py | 9 +++-

python/pyspark/sql/connect/udf.py | 58 -

python/pyspark/sql/session.py | 6 +--

.../sql/tests/connect/test_connect_basic.py| 1 -

.../pyspark/sql/tests/connect/test_parity_udf.py | 17 ---

python/pyspark/sql/udf.py | 3 ++

12 files changed, 216 insertions(+), 35 deletions(-)

diff --git

a/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

b/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

index 05c91d2c992..73218697577 100644

--- a/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

+++ b/connector/connect/common/src/main/protobuf/spark/connect/commands.proto

@@ -31,6 +31,7 @@ option java_package = "org.apache.spark.connect.proto";

// produce a relational result.

message Command {

oneof command_type {

+CommonInlineUserDefinedFunction register_function = 1;

WriteOperation write_operation = 2;

CreateDataFrameViewCommand create_dataframe_view = 3;

WriteOperationV2 write_operation_v2 = 4;

diff --git

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

index c8a0860b871..3bf5d2b1d30 100644

---

a/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

+++

b/connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala

@@ -44,6 +44,7 @@ import org.apache.spark.sql.errors.QueryCompilationErrors

import org.apache.spark.sql.execution.QueryExecution

import org.apache.spark.sql.execution.arrow.ArrowConverters

import org.apache.spark.sql.execution.command.CreateViewCommand

+import org.apache.spark.sql.execution.python.UserDefinedPythonFunction

import org.apache.spark.sql.functions.{col, expr}

import org.apache.spark.sql.internal.CatalogImpl

import org.apache.spark.sql.types._

@@ -1399,6 +1400,8 @@ class SparkConnectPlanner(val session: SparkSession) {

def process(command: proto.Command): Unit = {

command.getCommandTypeCase match {

+ case proto.Command.CommandTypeCase.REGISTER_FUNCTION =>

+handleRegisterUserDefinedFunction(command.getRegisterFunction)

case proto.Command.CommandTypeCase.WRITE_OPERATION =>

handleWriteOperation(command.getWriteOperation)

case proto.Command.CommandTypeCase.CREATE_DATAFRAME_VIEW =>

@@ -1411,6 +1414,36 @@ class SparkConnectPlanner(val session: SparkSession) {

}

}

+ private def handleRegisterUserDefinedFunction(

+ fun: proto.CommonInlineUserDefinedFunction): Unit = {

+fun.getFunctionCase match {

+ case proto.CommonInlineUserDefinedFunction.FunctionCase.PYTHON_UDF =>

+handleRegisterPythonUDF(fun)

+ case _ =>

+throw Inval

[spark] branch master updated: [SPARK-42263][CONNECT][PYTHON] Implement `spark.catalog.registerFunction`

This is an automated email from the ASF dual-hosted git repository.

xinrong pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new c619a402451 [SPARK-42263][CONNECT][PYTHON] Implement

`spark.catalog.registerFunction`

c619a402451 is described below

commit c619a402451df9ae5b305e5a48eb244c9ffd2eb6

Author: Xinrong Meng

AuthorDate: Tue Feb 14 13:56:12 2023 +0800

[SPARK-42263][CONNECT][PYTHON] Implement `spark.catalog.registerFunction`

### What changes were proposed in this pull request?

Implement `spark.catalog.registerFunction`.

### Why are the changes needed?

To reach parity with vanilla PySpark.

### Does this PR introduce _any_ user-facing change?

Yes. `spark.catalog.registerFunction` is supported, as shown below.

```py

>>> udf

... def f():

... return 'hi'

...

>>> spark.catalog.registerFunction('HI', f)

>>> spark.sql("SELECT HI()").collect()

[Row(HI()='hi')]

```

### How was this patch tested?

Unit tests.

Closes #39984 from xinrong-meng/catalog_register.

Authored-by: Xinrong Meng

Signed-off-by: Xinrong Meng

---

python/pyspark/sql/catalog.py | 3 ++

python/pyspark/sql/connect/catalog.py | 13 --

python/pyspark/sql/connect/udf.py | 2 +-

.../sql/tests/connect/test_connect_basic.py| 7

.../pyspark/sql/tests/connect/test_parity_udf.py | 49 +++---

python/pyspark/sql/tests/test_udf.py | 4 +-

6 files changed, 21 insertions(+), 57 deletions(-)

diff --git a/python/pyspark/sql/catalog.py b/python/pyspark/sql/catalog.py

index a7f3e761f3f..c83d02d4cb3 100644

--- a/python/pyspark/sql/catalog.py

+++ b/python/pyspark/sql/catalog.py

@@ -924,6 +924,9 @@ class Catalog:

.. deprecated:: 2.3.0

Use :func:`spark.udf.register` instead.

+