[jira] [Work logged] (HADOOP-17288) Use shaded guava from thirdparty

[ https://issues.apache.org/jira/browse/HADOOP-17288?focusedWorklogId=521557=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521557 ]

ASF GitHub Bot logged work on HADOOP-17288:

---

Author: ASF GitHub Bot

Created on: 08/Dec/20 06:21

Start Date: 08/Dec/20 06:21

Worklog Time Spent: 10m

Work Description: ayushtkn commented on pull request #2505:

URL: https://github.com/apache/hadoop/pull/2505#issuecomment-740406872

@saintstack Thanks for the review. To be precise, I didn't change anything in the pom.xml files. I just removed the Java code from trunk and applied the patch to branch-3.3, and luckily it had no conflicts. Then I compiled, and the Java code was regenerated for branch-3.3. There is a javadoc build failure for aws, but it also occurs in branch-3.3 without my patch. The test failures don't look related.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 521557)

Time Spent: 4h 40m (was: 4.5h)

> Use shaded guava from thirdparty

>

>

> Key: HADOOP-17288

> URL: https://issues.apache.org/jira/browse/HADOOP-17288

> Project: Hadoop Common

> Issue Type: Sub-task

>Reporter: Ayush Saxena

>Assignee: Ayush Saxena

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Time Spent: 4h 40m

> Remaining Estimate: 0h

>

> Use the shaded version of guava in hadoop-thirdparty

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ayushtkn commented on pull request #2505: HADOOP-17288. Use shaded guava from thirdparty. (branch-3.3)

ayushtkn commented on pull request #2505:

URL: https://github.com/apache/hadoop/pull/2505#issuecomment-740406872

@saintstack Thanks for the review. To be precise, I didn't change anything in the pom.xml files. I just removed the Java code from trunk and applied the patch to branch-3.3, and luckily it had no conflicts. Then I compiled, and the Java code was regenerated for branch-3.3. There is a javadoc build failure for aws, but it also occurs in branch-3.3 without my patch. The test failures don't look related.

[GitHub] [hadoop] iwasakims commented on pull request #2528: HDFS-15716. WaitforReplication in TestUpgradeDomainBlockPlacementPolicy

iwasakims commented on pull request #2528:

URL: https://github.com/apache/hadoop/pull/2528#issuecomment-740400919

> On branch-2.10, this was fixed by waiting for the replication to be complete.

Was this done outside TestUpgradeDomainBlockPlacementPolicy? Your patch looks applicable to branch-2.10 too. Since branch-2.10 still supports Java 7, cherry-picking would be easier if we avoid the lambda here. I don't think the lambda improves readability here anyway. @amahussein
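To illustrate the Java 7 point, here is a minimal sketch of a "wait for replication" poll written with an anonymous inner class instead of a lambda, so the same source also compiles on a Java 7 branch. In the real Hadoop tests a helper such as `GenericTestUtils.waitFor` plays this role; `Check`, `WaitSketch`, and `waitForReplication` below are hypothetical names, and the replica count is simulated rather than read from a cluster.

```java
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical polling condition (a stand-in for a Guava-style Supplier<Boolean>). */
interface Check {
  boolean get();
}

/** Hypothetical helper class; not part of Hadoop. */
final class WaitSketch {
  private WaitSketch() {}

  /** Re-evaluates check every checkEveryMillis until it holds or waitForMillis elapses. */
  static void waitFor(Check check, long checkEveryMillis, long waitForMillis)
      throws TimeoutException, InterruptedException {
    final long deadline = System.currentTimeMillis() + waitForMillis;
    while (!check.get()) {
      if (System.currentTimeMillis() >= deadline) {
        throw new TimeoutException("Condition not met within " + waitForMillis + " ms");
      }
      Thread.sleep(checkEveryMillis);
    }
  }

  /** Waits until a simulated replica count reaches expectedReplicas and returns the count. */
  static int waitForReplication(final int expectedReplicas)
      throws TimeoutException, InterruptedException {
    // Simulated state: each poll "observes" one more replica; a real test would query the cluster.
    final AtomicInteger liveReplicas = new AtomicInteger(0);
    // Anonymous inner class rather than a lambda, so this also compiles with -source 7.
    waitFor(new Check() {
      @Override
      public boolean get() {
        return liveReplicas.incrementAndGet() >= expectedReplicas;
      }
    }, 10, 5000);
    return liveReplicas.get();
  }
}
```

The anonymous class is more verbose than `() -> ...`, but it is the form that cherry-picks cleanly onto a branch that still builds with Java 7.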

[jira] [Work logged] (HADOOP-17288) Use shaded guava from thirdparty

[ https://issues.apache.org/jira/browse/HADOOP-17288?focusedWorklogId=521551=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521551 ]

ASF GitHub Bot logged work on HADOOP-17288:

---

Author: ASF GitHub Bot

Created on: 08/Dec/20 06:03

Start Date: 08/Dec/20 06:03

Worklog Time Spent: 10m

Work Description: ayushtkn commented on a change in pull request #2505:

URL: https://github.com/apache/hadoop/pull/2505#discussion_r538058287

##

File path: Jenkinsfile

##

@@ -23,7 +23,7 @@ pipeline {

options {

buildDiscarder(logRotator(numToKeepStr: '5'))

-timeout (time: 20, unit: 'HOURS')

+timeout (time: 35, unit: 'HOURS')

Review comment:

I increased it, and luckily got the result this time.

Issue Time Tracking

---

Worklog Id: (was: 521551)

Time Spent: 4.5h (was: 4h 20m)

> Use shaded guava from thirdparty

>

>

> Key: HADOOP-17288

> URL: https://issues.apache.org/jira/browse/HADOOP-17288

> Project: Hadoop Common

> Issue Type: Sub-task

>Reporter: Ayush Saxena

>Assignee: Ayush Saxena

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Time Spent: 4.5h

> Remaining Estimate: 0h

>

> Use the shaded version of guava in hadoop-thirdparty

[GitHub] [hadoop] ayushtkn commented on a change in pull request #2505: HADOOP-17288. Use shaded guava from thirdparty. (branch-3.3)

ayushtkn commented on a change in pull request #2505:

URL: https://github.com/apache/hadoop/pull/2505#discussion_r538058287

##

File path: Jenkinsfile

##

@@ -23,7 +23,7 @@ pipeline {

options {

buildDiscarder(logRotator(numToKeepStr: '5'))

-timeout (time: 20, unit: 'HOURS')

+timeout (time: 35, unit: 'HOURS')

Review comment:

I increased it, and luckily got the result this time.

[jira] [Work logged] (HADOOP-17288) Use shaded guava from thirdparty

[ https://issues.apache.org/jira/browse/HADOOP-17288?focusedWorklogId=521547=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521547 ]

ASF GitHub Bot logged work on HADOOP-17288:

---

Author: ASF GitHub Bot

Created on: 08/Dec/20 05:55

Start Date: 08/Dec/20 05:55

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2505:

URL: https://github.com/apache/hadoop/pull/2505#issuecomment-740396279

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| +0 :ok: | reexec | 0m 49s | Docker mode activated. |
||| _ Prechecks _ |
| +1 :green_heart: | dupname | 1m 13s | No case conflicting files found. |
| +1 :green_heart: | @author | 0m 1s | The patch does not contain any @author tags. |
| -1 :x: | pathlen | 0m 0s | The patch appears to contain 5 files with names longer than 240 |
| +1 :green_heart: | test4tests | 0m 1s | The patch appears to include 413 new or modified test files. |
||| _ branch-3.3 Compile Tests _ |
| +0 :ok: | mvndep | 4m 1s | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 28m 14s | branch-3.3 passed |
| +1 :green_heart: | compile | 15m 40s | branch-3.3 passed |
| +1 :green_heart: | checkstyle | 27m 21s | branch-3.3 passed |
| +1 :green_heart: | mvnsite | 22m 7s | branch-3.3 passed |
| +1 :green_heart: | shadedclient | 15m 0s | branch has no errors when building and testing our client artifacts. |
| -1 :x: | javadoc | 5m 27s | root in branch-3.3 failed. |
| +0 :ok: | spotbugs | 0m 46s | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +0 :ok: | findbugs | 0m 26s | branch/hadoop-project no findbugs output file (findbugsXml.xml) |
| -1 :x: | findbugs | 29m 46s | root in branch-3.3 has 3 extant findbugs warnings. |
| +0 :ok: | findbugs | 0m 32s | branch/hadoop-client-modules/hadoop-client-minicluster no findbugs output file (findbugsXml.xml) |
| -1 :x: | findbugs | 0m 44s | hadoop-cloud-storage-project/hadoop-cos in branch-3.3 has 1 extant findbugs warnings. |
| +0 :ok: | findbugs | 0m 36s | branch/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-tests no findbugs output file (findbugsXml.xml) |
| -1 :x: | findbugs | 0m 49s | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-timelineservice-hbase-tests in branch-3.3 has 2 extant findbugs warnings. |
||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 38s | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 59m 41s | the patch passed |
| +1 :green_heart: | compile | 15m 29s | the patch passed |
| +1 :green_heart: | javac | 15m 29s | the patch passed |
| -0 :warning: | checkstyle | 27m 20s | root: The patch generated 191 new + 25239 unchanged - 13 fixed = 25430 total (was 25252) |
| +1 :green_heart: | mvnsite | 20m 32s | the patch passed |
| +1 :green_heart: | shellcheck | 0m 0s | There were no new shellcheck issues. |
| +1 :green_heart: | shelldocs | 0m 22s | There were no new shelldocs issues. |
| +1 :green_heart: | whitespace | 0m 0s | The patch has no whitespace issues. |
| +1 :green_heart: | xml | 0m 49s | The patch has no ill-formed XML file. |
| +1 :green_heart: | shadedclient | 15m 51s | patch has no errors when building and testing our client artifacts. |
| -1 :x: | javadoc | 5m 28s | root in the patch failed. |
| +0 :ok: | findbugs | 0m 25s | hadoop-project has no data from findbugs |
| +0 :ok: | findbugs | 0m 31s | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-tests has no data from findbugs |
| +0 :ok: | findbugs | 0m 29s | hadoop-client-modules/hadoop-client-minicluster has no data from findbugs |
||| _ Other Tests _ |
| -1 :x: | unit | 555m 38s | root in the patch passed. |
| +1 :green_heart: | asflicense | 1m 45s | The patch does not generate ASF License warnings. |
| | | 1029m 57s | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.yarn.applications.distributedshell.TestDistributedShell |
| | hadoop.yarn.server.resourcemanager.scheduler.fair.TestFairSchedulerPreemption |
| | hadoop.yarn.server.resourcemanager.TestRMHATimelineCollectors |
| | hadoop.tools.dynamometer.TestDynamometerInfra |
| | hadoop.hdfs.server.namenode.ha.TestHAAppend |
| | hadoop.hdfs.server.datanode.TestBPOfferService |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base:

[GitHub] [hadoop] hadoop-yetus commented on pull request #2505: HADOOP-17288. Use shaded guava from thirdparty. (branch-3.3)

hadoop-yetus commented on pull request #2505:

URL: https://github.com/apache/hadoop/pull/2505#issuecomment-740396279

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Comment |
|:----:|----------:|--------:|:--------|
| +0 :ok: | reexec | 0m 49s | Docker mode activated. |
||| _ Prechecks _ |
| +1 :green_heart: | dupname | 1m 13s | No case conflicting files found. |
| +1 :green_heart: | @author | 0m 1s | The patch does not contain any @author tags. |
| -1 :x: | pathlen | 0m 0s | The patch appears to contain 5 files with names longer than 240 |
| +1 :green_heart: | test4tests | 0m 1s | The patch appears to include 413 new or modified test files. |
||| _ branch-3.3 Compile Tests _ |
| +0 :ok: | mvndep | 4m 1s | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 28m 14s | branch-3.3 passed |
| +1 :green_heart: | compile | 15m 40s | branch-3.3 passed |
| +1 :green_heart: | checkstyle | 27m 21s | branch-3.3 passed |
| +1 :green_heart: | mvnsite | 22m 7s | branch-3.3 passed |
| +1 :green_heart: | shadedclient | 15m 0s | branch has no errors when building and testing our client artifacts. |
| -1 :x: | javadoc | 5m 27s | root in branch-3.3 failed. |
| +0 :ok: | spotbugs | 0m 46s | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +0 :ok: | findbugs | 0m 26s | branch/hadoop-project no findbugs output file (findbugsXml.xml) |
| -1 :x: | findbugs | 29m 46s | root in branch-3.3 has 3 extant findbugs warnings. |
| +0 :ok: | findbugs | 0m 32s | branch/hadoop-client-modules/hadoop-client-minicluster no findbugs output file (findbugsXml.xml) |
| -1 :x: | findbugs | 0m 44s | hadoop-cloud-storage-project/hadoop-cos in branch-3.3 has 1 extant findbugs warnings. |
| +0 :ok: | findbugs | 0m 36s | branch/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-tests no findbugs output file (findbugsXml.xml) |
| -1 :x: | findbugs | 0m 49s | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-timelineservice-hbase-tests in branch-3.3 has 2 extant findbugs warnings. |
||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 38s | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 59m 41s | the patch passed |
| +1 :green_heart: | compile | 15m 29s | the patch passed |
| +1 :green_heart: | javac | 15m 29s | the patch passed |
| -0 :warning: | checkstyle | 27m 20s | root: The patch generated 191 new + 25239 unchanged - 13 fixed = 25430 total (was 25252) |
| +1 :green_heart: | mvnsite | 20m 32s | the patch passed |
| +1 :green_heart: | shellcheck | 0m 0s | There were no new shellcheck issues. |
| +1 :green_heart: | shelldocs | 0m 22s | There were no new shelldocs issues. |
| +1 :green_heart: | whitespace | 0m 0s | The patch has no whitespace issues. |
| +1 :green_heart: | xml | 0m 49s | The patch has no ill-formed XML file. |
| +1 :green_heart: | shadedclient | 15m 51s | patch has no errors when building and testing our client artifacts. |
| -1 :x: | javadoc | 5m 28s | root in the patch failed. |
| +0 :ok: | findbugs | 0m 25s | hadoop-project has no data from findbugs |
| +0 :ok: | findbugs | 0m 31s | hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-tests has no data from findbugs |
| +0 :ok: | findbugs | 0m 29s | hadoop-client-modules/hadoop-client-minicluster has no data from findbugs |
||| _ Other Tests _ |
| -1 :x: | unit | 555m 38s | root in the patch passed. |
| +1 :green_heart: | asflicense | 1m 45s | The patch does not generate ASF License warnings. |
| | | 1029m 57s | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.yarn.applications.distributedshell.TestDistributedShell |
| | hadoop.yarn.server.resourcemanager.scheduler.fair.TestFairSchedulerPreemption |
| | hadoop.yarn.server.resourcemanager.TestRMHATimelineCollectors |
| | hadoop.tools.dynamometer.TestDynamometerInfra |
| | hadoop.hdfs.server.namenode.ha.TestHAAppend |
| | hadoop.hdfs.server.datanode.TestBPOfferService |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2505/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/2505 |
| Optional Tests | dupname asflicense shellcheck shelldocs compile javac javadoc mvninstall mvnsite unit shadedclient xml findbugs checkstyle |
| uname | Linux 948f20ecfece 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |

[jira] [Updated] (HADOOP-17389) KMS should log full UGI principal

[ https://issues.apache.org/jira/browse/HADOOP-17389?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Xiaoqiao He updated HADOOP-17389:

-

Fix Version/s: 3.2.2

Cherry-picked to branch-3.2.2.

> KMS should log full UGI principal

> -

>

> Key: HADOOP-17389

> URL: https://issues.apache.org/jira/browse/HADOOP-17389

> Project: Hadoop Common

> Issue Type: Improvement

>Reporter: Ahmed Hussein

>Assignee: Ahmed Hussein

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.2.2, 3.3.1, 3.4.0, 3.1.5, 3.2.3

>

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

> [~daryn] reported that the kms-audit log only logs the short username:

> {{OK[op=GENERATE_EEK, key=key1, user=hdfs, accessCount=4206, interval=10427ms]}}

> In this example, it's impossible to tell which NN(s) requested EDEKs when they are all lumped together.
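A minimal sketch of why the short name loses information, assuming two NameNodes that authenticate as the hypothetical principals `hdfs/nn1.example.com@EXAMPLE.COM` and `hdfs/nn2.example.com@EXAMPLE.COM`. `PrincipalSketch`, `shortName`, and `auditEntry` are illustrative helpers, not KMS or Hadoop code: `shortName` is a simplified rule (Hadoop actually maps principals through `hadoop.security.auth_to_local` rules), and `auditEntry` only reproduces the shape of the log entry quoted above.

```java
/** Hypothetical helpers for illustration; not Hadoop or KMS code. */
final class PrincipalSketch {
  private PrincipalSketch() {}

  /**
   * Simplified short-name rule: keep everything before the first '/' or '@'.
   * Hadoop's real mapping applies hadoop.security.auth_to_local rules instead.
   */
  static String shortName(String principal) {
    int end = principal.length();
    int slash = principal.indexOf('/');
    int at = principal.indexOf('@');
    if (slash >= 0) {
      end = Math.min(end, slash);
    }
    if (at >= 0) {
      end = Math.min(end, at);
    }
    return principal.substring(0, end);
  }

  /** Builds an audit line in the same shape as the entry quoted in the issue. */
  static String auditEntry(String user, String key, int accessCount, long intervalMs) {
    return "OK[op=GENERATE_EEK, key=" + key + ", user=" + user
        + ", accessCount=" + accessCount + ", interval=" + intervalMs + "ms]";
  }
}
```

Both principals reduce to `hdfs`, so entries built from the short name are indistinguishable; passing the full principal to `auditEntry` keeps the host component (`nn1.example.com` vs `nn2.example.com`) in the log line.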

[GitHub] [hadoop] tangzhankun commented on pull request #2494: YARN-10380: Import logic of multi-node allocation in CapacityScheduler

tangzhankun commented on pull request #2494:

URL: https://github.com/apache/hadoop/pull/2494#issuecomment-740361831

@qizhu-lucas Thanks a lot! I'll merge it if there are no more comments. @jiwq

[jira] [Commented] (HADOOP-13571) ServerSocketUtil.getPort() should use loopback address, not 0.0.0.0

[ https://issues.apache.org/jira/browse/HADOOP-13571?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17245654#comment-17245654 ]

Hadoop QA commented on HADOOP-13571:

| (/) *{color:green}+1 overall{color}* |

\\

\\

|| Vote || Subsystem || Runtime || Logfile || Comment ||
| {color:blue}0{color} | {color:blue} reexec {color} | {color:blue} 1m 33s{color} | {color:blue}{color} | {color:blue} Docker mode activated. {color} |
|| || || || {color:brown} Prechecks {color} || ||
| {color:green}+1{color} | {color:green} dupname {color} | {color:green} 0m 0s{color} | {color:green}{color} | {color:green} No case conflicting files found. {color} |
| {color:green}+1{color} | {color:green} @author {color} | {color:green} 0m 0s{color} | {color:green}{color} | {color:green} The patch does not contain any @author tags. {color} |
| {color:green}+1{color} | {color:green} {color} | {color:green} 0m 0s{color} | {color:green}test4tests{color} | {color:green} The patch appears to include 1 new or modified test files. {color} |
|| || || || {color:brown} trunk Compile Tests {color} || ||
| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 35m 54s{color} | {color:green}{color} | {color:green} trunk passed {color} |
| {color:green}+1{color} | {color:green} compile {color} | {color:green} 22m 11s{color} | {color:green}{color} | {color:green} trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 {color} |
| {color:green}+1{color} | {color:green} compile {color} | {color:green} 18m 9s{color} | {color:green}{color} | {color:green} trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 {color} |
| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m 46s{color} | {color:green}{color} | {color:green} trunk passed {color} |
| {color:green}+1{color} | {color:green} mvnsite {color} | {color:green} 1m 26s{color} | {color:green}{color} | {color:green} trunk passed {color} |
| {color:green}+1{color} | {color:green} shadedclient {color} | {color:green} 19m 14s{color} | {color:green}{color} | {color:green} branch has no errors when building and testing our client artifacts. {color} |
| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 0m 57s{color} | {color:green}{color} | {color:green} trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 {color} |
| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m 25s{color} | {color:green}{color} | {color:green} trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 {color} |
| {color:blue}0{color} | {color:blue} spotbugs {color} | {color:blue} 2m 19s{color} | {color:blue}{color} | {color:blue} Used deprecated FindBugs config; considering switching to SpotBugs. {color} |
| {color:green}+1{color} | {color:green} findbugs {color} | {color:green} 2m 17s{color} | {color:green}{color} | {color:green} trunk passed {color} |
|| || || || {color:brown} Patch Compile Tests {color} || ||
| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 0m 53s{color} | {color:green}{color} | {color:green} the patch passed {color} |
| {color:green}+1{color} | {color:green} compile {color} | {color:green} 21m 2s{color} | {color:green}{color} | {color:green} the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 {color} |
| {color:green}+1{color} | {color:green} javac {color} | {color:green} 21m 2s{color} | {color:green}{color} | {color:green} the patch passed {color} |
| {color:green}+1{color} | {color:green} compile {color} | {color:green} 18m 17s{color} | {color:green}{color} | {color:green} the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 {color} |
| {color:green}+1{color} | {color:green} javac {color} | {color:green} 18m 17s{color} | {color:green}{color} | {color:green} the patch passed {color} |
| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m 44s{color} | {color:green}{color} | {color:green} the patch passed {color} |
| {color:green}+1{color} | {color:green} mvnsite {color} | {color:green} 1m 25s{color} | {color:green}{color} | {color:green} the patch passed {color} |
| {color:green}+1{color} | {color:green} whitespace {color} | {color:green} 0m 0s{color} | {color:green}{color} | {color:green} The patch has no whitespace issues. {color} |
| {color:green}+1{color} | {color:green} shadedclient {color} | {color:green} 17m 3s{color} | {color:green}{color} | {color:green} patch has no errors when building and testing our client artifacts. {color} |
| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 0m 56s{color} | {color:green}{color} | {color:green} the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 {color} |
| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m 29s{color} | {color:green}{color} |

[GitHub] [hadoop] hadoop-yetus commented on pull request #2529: HDFS-15717. Improve fsck logging.

hadoop-yetus commented on pull request #2529:

URL: https://github.com/apache/hadoop/pull/2529#issuecomment-740349080

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 30s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | | 0m 0s | [test4tests](test4tests) | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 34s | | trunk passed |
| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | compile | 1m 12s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | checkstyle | 0m 54s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 20s | | trunk passed |
| +1 :green_heart: | shadedclient | 17m 27s | | branch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 56s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 29s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +0 :ok: | spotbugs | 3m 5s | | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 :green_heart: | findbugs | 3m 1s | | trunk passed |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 15s | | the patch passed |
| +1 :green_heart: | compile | 1m 10s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javac | 1m 10s | | the patch passed |
| +1 :green_heart: | compile | 1m 6s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | javac | 1m 6s | | the patch passed |
| +1 :green_heart: | checkstyle | 0m 44s | | hadoop-hdfs-project/hadoop-hdfs: The patch generated 0 new + 270 unchanged - 1 fixed = 270 total (was 271) |
| +1 :green_heart: | mvnsite | 1m 12s | | the patch passed |
| +1 :green_heart: | whitespace | 0m 0s | | The patch has no whitespace issues. |
| +1 :green_heart: | shadedclient | 15m 0s | | patch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 49s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 22s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | findbugs | 3m 2s | | the patch passed |
|||| _ Other Tests _ |
| -1 :x: | unit | 97m 49s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2529/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 43s | | The patch does not generate ASF License warnings. |
| | | 187m 2s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestDFSStripedOutputStreamWithRandomECPolicy |
| | hadoop.hdfs.TestRollingUpgrade |
| | hadoop.hdfs.server.datanode.TestBlockScanner |
| | hadoop.hdfs.TestDecommissionWithStriped |
| | hadoop.hdfs.TestFileChecksumCompositeCrc |
| | hadoop.hdfs.TestDFSInotifyEventInputStreamKerberized |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2529/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/2529 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux 0027ea038624 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 40f7543a6d5 |
| Default Java | Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2529/2/testReport/ |
| Max. process+thread count | 4326 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U:

[GitHub] [hadoop] qizhu-lucas commented on pull request #2494: YARN-10380: Import logic of multi-node allocation in CapacityScheduler

qizhu-lucas commented on pull request #2494:

URL: https://github.com/apache/hadoop/pull/2494#issuecomment-740337104

@tangzhankun

[GitHub] [hadoop] qizhu-lucas commented on pull request #2494: YARN-10380: Import logic of multi-node allocation in CapacityScheduler

qizhu-lucas commented on pull request #2494:

URL: https://github.com/apache/hadoop/pull/2494#issuecomment-740334523

@tangzhankun Thanks for your review. It passed in my local test, and the failure is unrelated to this change.

[GitHub] [hadoop] hadoop-yetus commented on pull request #2528: HDFS-15716. WaitforReplication in TestUpgradeDomainBlockPlacementPolicy

hadoop-yetus commented on pull request #2528:

URL: https://github.com/apache/hadoop/pull/2528#issuecomment-740323672

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 33s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | | 0m 0s | [test4tests](test4tests) | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 26s | | trunk passed |
| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | compile | 1m 11s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | checkstyle | 0m 49s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 20s | | trunk passed |
| +1 :green_heart: | shadedclient | 17m 24s | | branch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 54s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +0 :ok: | spotbugs | 3m 8s | | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 :green_heart: | findbugs | 3m 6s | | trunk passed |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 10s | | the patch passed |
| +1 :green_heart: | compile | 1m 10s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javac | 1m 10s | | the patch passed |
| +1 :green_heart: | compile | 1m 5s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | javac | 1m 5s | | the patch passed |
| +1 :green_heart: | checkstyle | 0m 39s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 10s | | the patch passed |
| +1 :green_heart: | whitespace | 0m 0s | | The patch has no whitespace issues. |
| +1 :green_heart: | shadedclient | 14m 58s | | patch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 49s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 22s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | findbugs | 3m 4s | | the patch passed |
|||| _ Other Tests _ |
| -1 :x: | unit | 64m 48s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 41s | | The patch does not generate ASF License warnings. |
| | | 153m 41s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.namenode.snapshot.TestFSImageWithOrderedSnapshotDeletion |
| | hadoop.hdfs.server.namenode.TestFSImageWithAcl |
| | hadoop.hdfs.server.blockmanagement.TestUnderReplicatedBlocks |
| | hadoop.hdfs.server.namenode.snapshot.TestRandomOpsWithSnapshots |
| | hadoop.hdfs.server.namenode.snapshot.TestGetContentSummaryWithSnapshot |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/2528 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux b8affc6392c7 4.15.0-60-generic #67-Ubuntu SMP Thu Aug 22 16:55:30 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 40f7543a6d5 |
| Default Java | Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/2/testReport/ |
| Max. process+thread count | 4478 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output |

[GitHub] [hadoop] tangzhankun commented on pull request #2494: YARN-10380: Import logic of multi-node allocation in CapacityScheduler

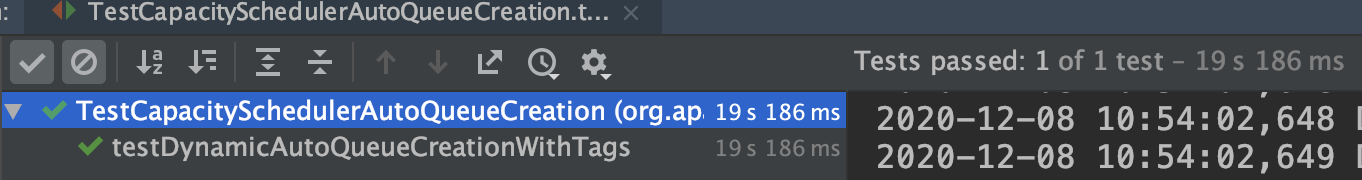

tangzhankun commented on pull request #2494: URL: https://github.com/apache/hadoop/pull/2494#issuecomment-740320479 @jiwq Thanks for the review. @qizhu-lucas Thanks for the hard work! The Yetus result shows one unit test failure, which seems unrelated to these changes. I'm +1 on the latest patch.
```
[ERROR] Tests run: 15, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 23.822 s <<< FAILURE! - in org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.TestCapacitySchedulerAutoQueueCreation
[ERROR] testDynamicAutoQueueCreationWithTags(org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.TestCapacitySchedulerAutoQueueCreation) Time elapsed: 0.858 s <<< ERROR!
org.apache.hadoop.service.ServiceStateException: org.apache.hadoop.yarn.exceptions.YarnException: Failed to initialize queues
	at org.apache.hadoop.service.ServiceStateException.convert(ServiceStateException.java:105)
	at org.apache.hadoop.service.AbstractService.init(AbstractService.java:174)
	at org.apache.hadoop.service.CompositeService.serviceInit(CompositeService.java:110)
	at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager$RMActiveServices.serviceInit(ResourceManager.java:884)
	at org.apache.hadoop.service.AbstractService.init(AbstractService.java:165)
	at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.createAndInitActiveServices(ResourceManager.java:1296)
	at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.serviceInit(ResourceManager.java:339)
	at org.apache.hadoop.yarn.server.resourcemanager.MockRM.serviceInit(MockRM.java:1018)
	at org.apache.hadoop.service.AbstractService.init(AbstractService.java:165)
	at org.apache.hadoop.yarn.server.resourcemanager.MockRM.<init>(MockRM.java:158)
	at org.apache.hadoop.yarn.server.resourcemanager.MockRM.<init>(MockRM.java:134)
	at org.apache.hadoop.yarn.server.resourcemanager.MockRM.<init>(MockRM.java:130)
	at org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.TestCapacitySchedulerAutoQueueCreation$5.<init>(TestCapacitySchedulerAutoQueueCreation.java:873)
	at org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.TestCapacitySchedulerAutoQueueCreation.testDynamicAutoQueueCreationWithTags(TestCapacitySchedulerAutoQueueCreation.java:873)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)
	at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
	at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)
	at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
	at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
	at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
	at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)
	at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)
	at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)
	at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)
	at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)
	at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)
	at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)
	at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)
	at org.junit.runners.ParentRunner.run(ParentRunner.java:363)
	at org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)
	at org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)
	at org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)
	at org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)
	at org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)
	at org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)
	at org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)
	at org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)
Caused by: org.apache.hadoop.yarn.exceptions.YarnException: Failed to initialize queues
	at org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler.initializeQueues(CapacityScheduler.java:798)
	at
```

[GitHub] [hadoop] PHILO-HE edited a comment on pull request #1726: [WIP] Syncservice rebased onto HDFS-12090

PHILO-HE edited a comment on pull request #1726: URL: https://github.com/apache/hadoop/pull/1726#issuecomment-740304229 Building on this patch from @ehiggs, we developed support for writing to provided storage. Please review our patch in https://issues.apache.org/jira/browse/HDFS-15714. Many thanks, @ehiggs!

[GitHub] [hadoop] PHILO-HE commented on pull request #1726: [WIP] Syncservice rebased onto HDFS-12090

PHILO-HE commented on pull request #1726: URL: https://github.com/apache/hadoop/pull/1726#issuecomment-740304229 Building on this patch from @ehiggs, we developed support for writing to provided storage. Please review our patch in https://issues.apache.org/jira/browse/HDFS-15714.

[jira] [Commented] (HADOOP-17412) When `fs.s3a.connection.ssl.enabled=true`, Error when visit S3A with AKSK

[ https://issues.apache.org/jira/browse/HADOOP-17412?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17245602#comment-17245602 ]

angerszhu commented on HADOOP-17412:

Sorry, AKSK means access key and secret key.

Yes, it is the same exception as https://issues.apache.org/jira/browse/HADOOP-17017. Our old test bucket was named `xxx-xxx-xxx`, but in the new Hadoop cluster we use `xxx.xxx.xxx` as the bucket name, and that is when we hit this exception. I also checked the httpclient version, since some issues report a similar problem with a particular httpclient version (4.5.9). However, the httpclient inside the aws-java-bundle jar is 4.5.6 in both versions.

Thanks a lot for your reply; it helped a lot.
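There is a likely explanation for why only the dotted bucket name fails once SSL is enabled. This is stated as an assumption, not a confirmed diagnosis. With virtual-hosted-style requests, the bucket name becomes part of the host name; S3A uses this style unless `fs.s3a.path.style.access` is enabled. The endpoint's TLS certificate is a wildcard such as `*.s3.amazonaws.com`, and a wildcard covers only a single DNS label. The hypothetical `WildcardHostMatch` class below sketches that matching rule in Java. The endpoint and certificate name are assumed for illustration, and this is not the hostname verifier that httpclient or the AWS SDK actually uses.

```java
import java.util.Locale;

/**
 * Hypothetical, simplified wildcard matcher for illustration only. It follows the
 * common rule that a leading "*." in a certificate name stands for exactly one
 * DNS label. It is not the hostname verifier used by httpclient or the AWS SDK.
 */
public class WildcardHostMatch {

  static boolean matches(String certName, String host) {
    String pattern = certName.toLowerCase(Locale.ROOT);
    String h = host.toLowerCase(Locale.ROOT);
    if (!pattern.startsWith("*.")) {
      // No wildcard: require an exact match.
      return pattern.equals(h);
    }
    String suffix = pattern.substring(1); // e.g. ".s3.amazonaws.com"
    if (!h.endsWith(suffix)) {
      return false;
    }
    // Whatever precedes the suffix must be one non-empty label, i.e. contain no dots.
    String prefix = h.substring(0, h.length() - suffix.length());
    return !prefix.isEmpty() && prefix.indexOf('.') < 0;
  }

  public static void main(String[] args) {
    String certName = "*.s3.amazonaws.com"; // assumed certificate name
    // Virtual-hosted-style host names: the bucket name becomes the leading label(s).
    System.out.println(matches(certName, "xxx-xxx-xxx.s3.amazonaws.com")); // true
    System.out.println(matches(certName, "xxx.xxx.xxx.s3.amazonaws.com")); // false
  }
}
```

Under this model, a dashed name such as `xxx-xxx-xxx` passes and `xxx.xxx.xxx` fails. Either avoiding dots in bucket names or switching to path-style access, which moves the bucket name into the URL path, would sidestep the mismatch.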

> When `fs.s3a.connection.ssl.enabled=true`, Error when visit S3A with AKSK
> ---
>
> Key: HADOOP-17412
> URL: https://issues.apache.org/jira/browse/HADOOP-17412
> Project: Hadoop Common
> Issue Type: Bug
> Components: fs/s3
> Environment: jdk 1.8
> hadoop-3.3.0
>Reporter: angerszhu
>Priority: Major
> Attachments: image-2020-12-07-10-25-51-908.png
>
>
> When we update hadoop version from hadoop-3.2.1 to hadoop-3.3.0, Use AKSK
> access s3a with ssl enabled, then this error happen
> {code:java}
> <property>
>   <name>ipc.client.connection.maxidletime</name>
>   <value>2</value>
> </property>
> <property>
>   <name>fs.s3a.secret.key</name>
>   <value></value>
> </property>
> <property>
>   <name>fs.s3a.access.key</name>
>   <value></value>
> </property>
> <property>
>   <name>fs.s3a.aws.credentials.provider</name>
>   <value>org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider</value>
> </property>
> {code}

> !image-2020-12-07-10-25-51-908.png!


[jira] [Commented] (HADOOP-13571) ServerSocketUtil.getPort() should use loopback address, not 0.0.0.0

[ https://issues.apache.org/jira/browse/HADOOP-13571?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17245599#comment-17245599 ] Eric Badger commented on HADOOP-13571: -- Hey, [~ahussein], I uploaded a new patch to fix the checkstyle issues. > ServerSocketUtil.getPort() should use loopback address, not 0.0.0.0 > --- > > Key: HADOOP-13571 > URL: https://issues.apache.org/jira/browse/HADOOP-13571 > Project: Hadoop Common > Issue Type: Bug >Reporter: Eric Badger >Assignee: Eric Badger >Priority: Major > Attachments: HADOOP-13571.001.patch, HADOOP-13571.002.patch > > > Using 0.0.0.0 to check for a free port will succeed even if there's something > bound to that same port on the loopback interface. Since this function is > used primarily in testing, it should be checking the loopback interface for > free ports.
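The reasoning in the issue can be illustrated with a small Java sketch that probes a port on the loopback interface rather than on 0.0.0.0. `LoopbackPortCheck` and `isLoopbackPortFree` are hypothetical names. This is not the actual `ServerSocketUtil.getPort()` implementation or either attached patch. Whether a wildcard bind collides with an existing loopback bind also varies with the operating system and socket options, so the sketch only shows the loopback-side check.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;

/** Hypothetical helper; not the actual ServerSocketUtil code or the HADOOP-13571 patch. */
public class LoopbackPortCheck {

  /** Returns true if a server socket can be bound to {@code port} on the loopback interface. */
  static boolean isLoopbackPortFree(int port) {
    try (ServerSocket probe = new ServerSocket()) {
      // Disable SO_REUSEADDR so that an existing binding is reported as a conflict.
      probe.setReuseAddress(false);
      probe.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), port));
      return true;
    } catch (IOException e) {
      // Typically a BindException: something already holds this loopback port.
      return false;
    }
  }

  public static void main(String[] args) throws IOException {
    // Occupy an ephemeral port on loopback, then probe that same port.
    try (ServerSocket busy = new ServerSocket(0, 50, InetAddress.getLoopbackAddress())) {
      int port = busy.getLocalPort();
      System.out.println("port " + port + " free on loopback: " + isLoopbackPortFree(port));
    }
  }
}
```

Like any probe of this kind, it is racy: another process can take the port between the check and its real use. That is one reason it fits test code better than production code.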

[jira] [Updated] (HADOOP-13571) ServerSocketUtil.getPort() should use loopback address, not 0.0.0.0

[ https://issues.apache.org/jira/browse/HADOOP-13571?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Eric Badger updated HADOOP-13571: - Attachment: HADOOP-13571.002.patch > ServerSocketUtil.getPort() should use loopback address, not 0.0.0.0 > --- > > Key: HADOOP-13571 > URL: https://issues.apache.org/jira/browse/HADOOP-13571 > Project: Hadoop Common > Issue Type: Bug >Reporter: Eric Badger >Assignee: Eric Badger >Priority: Major > Attachments: HADOOP-13571.001.patch, HADOOP-13571.002.patch > > > Using 0.0.0.0 to check for a free port will succeed even if there's something > bound to that same port on the loopback interface. Since this function is > used primarily in testing, it should be checking the loopback interface for > free ports.

[GitHub] [hadoop] jojochuang merged pull request #2518: HDFS-15709. Socket file descriptor leak in StripedBlockChecksumRecons…

jojochuang merged pull request #2518: URL: https://github.com/apache/hadoop/pull/2518

[jira] [Work logged] (HADOOP-17414) Magic committer files don't have the count of bytes written collected by spark

[ https://issues.apache.org/jira/browse/HADOOP-17414?focusedWorklogId=521458=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521458 ]

ASF GitHub Bot logged work on HADOOP-17414:

---

Author: ASF GitHub Bot

Created on: 07/Dec/20 23:43

Start Date: 07/Dec/20 23:43

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2530:

URL: https://github.com/apache/hadoop/pull/2530#issuecomment-740249672

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 14s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | markdownlint | 0m 0s | | markdownlint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | | 0m 0s | [test4tests](test4tests) | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 13m 57s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 23m 39s | | trunk passed |
| +1 :green_heart: | compile | 21m 57s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | compile | 18m 57s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | checkstyle | 2m 53s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 16s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 30s | | branch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 1m 23s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 2m 6s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +0 :ok: | spotbugs | 1m 10s | | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 :green_heart: | findbugs | 3m 26s | | trunk passed |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 26s | | the patch passed |
| +1 :green_heart: | compile | 20m 43s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javac | 20m 43s | | the patch passed |
| +1 :green_heart: | compile | 18m 17s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | javac | 18m 17s | | the patch passed |
| -0 :warning: | checkstyle | 2m 47s | [/diff-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2530/1/artifact/out/diff-checkstyle-root.txt) | root: The patch generated 2 new + 15 unchanged - 0 fixed = 17 total (was 15) |
| +1 :green_heart: | mvnsite | 2m 12s | | the patch passed |
| -1 :x: | whitespace | 0m 0s | [/whitespace-eol.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2530/1/artifact/out/whitespace-eol.txt) | The patch has 5 line(s) that end in whitespace. Use git apply --whitespace=fix <>. Refer https://git-scm.com/docs/git-apply |
| +1 :green_heart: | xml | 0m 1s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | shadedclient | 17m 18s | | patch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 1m 23s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 2m 3s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | findbugs | 3m 44s | | the patch passed |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 10m 1s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 1m 25s | | hadoop-aws in the patch passed. |
| +1 :green_heart: | asflicense | 0m 49s | | The patch does not generate ASF License warnings. |
| | | 196m 32s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2530/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/2530 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient xml findbugs checkstyle markdownlint |
| uname | Linux c05b3b4b492a 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |

[GitHub] [hadoop] hadoop-yetus commented on pull request #2530: HADOOP-17414. Magic committer files don't have the count of bytes written collected by spark

hadoop-yetus commented on pull request #2530:

URL: https://github.com/apache/hadoop/pull/2530#issuecomment-740249672

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 14s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | markdownlint | 0m 0s | | markdownlint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | | 0m 0s | [test4tests](test4tests) | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 13m 57s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 23m 39s | | trunk passed |
| +1 :green_heart: | compile | 21m 57s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | compile | 18m 57s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | checkstyle | 2m 53s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 16s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 30s | | branch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 1m 23s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 2m 6s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +0 :ok: | spotbugs | 1m 10s | | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 :green_heart: | findbugs | 3m 26s | | trunk passed |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 26s | | the patch passed |
| +1 :green_heart: | compile | 20m 43s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javac | 20m 43s | | the patch passed |
| +1 :green_heart: | compile | 18m 17s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | javac | 18m 17s | | the patch passed |
| -0 :warning: | checkstyle | 2m 47s | [/diff-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2530/1/artifact/out/diff-checkstyle-root.txt) | root: The patch generated 2 new + 15 unchanged - 0 fixed = 17 total (was 15) |
| +1 :green_heart: | mvnsite | 2m 12s | | the patch passed |
| -1 :x: | whitespace | 0m 0s | [/whitespace-eol.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2530/1/artifact/out/whitespace-eol.txt) | The patch has 5 line(s) that end in whitespace. Use git apply --whitespace=fix <>. Refer https://git-scm.com/docs/git-apply |
| +1 :green_heart: | xml | 0m 1s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | shadedclient | 17m 18s | | patch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 1m 23s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 2m 3s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | findbugs | 3m 44s | | the patch passed |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 10m 1s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 1m 25s | | hadoop-aws in the patch passed. |
| +1 :green_heart: | asflicense | 0m 49s | | The patch does not generate ASF License warnings. |
| | | 196m 32s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2530/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/2530 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient xml findbugs checkstyle markdownlint |
| uname | Linux c05b3b4b492a 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 32099e36dda |
| Default Java | Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Test Results |

[jira] [Work logged] (HADOOP-17414) Magic committer files don't have the count of bytes written collected by spark

[ https://issues.apache.org/jira/browse/HADOOP-17414?focusedWorklogId=521456=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521456 ] ASF GitHub Bot logged work on HADOOP-17414: --- Author: ASF GitHub Bot Created on: 07/Dec/20 23:36 Start Date: 07/Dec/20 23:36 Worklog Time Spent: 10m Work Description: dongjoon-hyun commented on pull request #2530: URL: https://github.com/apache/hadoop/pull/2530#issuecomment-740247128 Thank you for pinging me. Issue Time Tracking --- Worklog Id: (was: 521456) Time Spent: 0.5h (was: 20m) > Magic committer files don't have the count of bytes written collected by spark > -- > > Key: HADOOP-17414 > URL: https://issues.apache.org/jira/browse/HADOOP-17414 > Project: Hadoop Common > Issue Type: Sub-task > Components: fs/s3 >Affects Versions: 3.2.0 >Reporter: Steve Loughran >Assignee: Steve Loughran >Priority: Major > Labels: pull-request-available > Time Spent: 0.5h > Remaining Estimate: 0h > > The spark statistics tracking doesn't correctly assess the size of the > uploaded files as it only calls getFileStatus on the zero byte objects -not > the yet-to-manifest files. > Everything works with the staging committer purely because it's measuring the > length of the files staged to the local FS, not the unmaterialized output.

[GitHub] [hadoop] dongjoon-hyun commented on pull request #2530: HADOOP-17414. Magic committer files don't have the count of bytes written collected by spark

dongjoon-hyun commented on pull request #2530: URL: https://github.com/apache/hadoop/pull/2530#issuecomment-740247128 Thank you for pinging me.

[GitHub] [hadoop] hadoop-yetus commented on pull request #2529: HDFS-15717. Improve fsck logging.

hadoop-yetus commented on pull request #2529:

URL: https://github.com/apache/hadoop/pull/2529#issuecomment-740236102

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 30s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | | 0m 0s | [test4tests](test4tests) | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 1s | | trunk passed |
| +1 :green_heart: | compile | 1m 19s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | compile | 1m 16s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | checkstyle | 0m 55s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 19s | | trunk passed |
| +1 :green_heart: | shadedclient | 17m 54s | | branch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 55s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 25s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +0 :ok: | spotbugs | 3m 6s | | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 :green_heart: | findbugs | 3m 3s | | trunk passed |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 13s | | the patch passed |
| +1 :green_heart: | compile | 1m 23s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javac | 1m 23s | | the patch passed |
| +1 :green_heart: | compile | 1m 5s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | javac | 1m 5s | | the patch passed |
| -0 :warning: | checkstyle | 0m 43s | [/diff-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2529/1/artifact/out/diff-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 1 new + 270 unchanged - 1 fixed = 271 total (was 271) |
| +1 :green_heart: | mvnsite | 1m 9s | | the patch passed |
| +1 :green_heart: | whitespace | 0m 0s | | The patch has no whitespace issues. |
| +1 :green_heart: | shadedclient | 15m 17s | | patch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 54s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 24s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | findbugs | 3m 50s | | the patch passed |
|||| _ Other Tests _ |
| -1 :x: | unit | 126m 39s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2529/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 40s | | The patch does not generate ASF License warnings. |
| | | 217m 57s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestBlockTokenWrappingQOP |
| | hadoop.hdfs.TestStateAlignmentContextWithHA |
| | hadoop.hdfs.TestBlockStoragePolicy |
| | hadoop.hdfs.TestHDFSFileSystemContract |
| | hadoop.hdfs.server.balancer.TestBalancer |
| | hadoop.hdfs.TestDFSStartupVersions |
| | hadoop.hdfs.TestSetrepIncreasing |
| | hadoop.hdfs.TestDFSInotifyEventInputStream |
| | hadoop.hdfs.TestDatanodeReport |
| | hadoop.hdfs.TestDistributedFileSystemWithECFileWithRandomECPolicy |
| | hadoop.hdfs.TestReadStripedFileWithDNFailure |
| | hadoop.hdfs.server.namenode.TestFsck |
| | hadoop.hdfs.TestWriteReadStripedFile |
| | hadoop.hdfs.TestClientProtocolForPipelineRecovery |
| | hadoop.hdfs.TestLeaseRecoveryStriped |
| | hadoop.hdfs.TestDFSStripedInputStreamWithRandomECPolicy |
| | hadoop.hdfs.TestDecommission |
| | hadoop.hdfs.web.TestWebHdfsWithRestCsrfPreventionFilter |
| | hadoop.hdfs.TestRollingUpgradeRollback |
| | hadoop.hdfs.TestErasureCodingPolicies |
| | hadoop.hdfs.web.TestWebHDFS |
| | hadoop.hdfs.TestReconstructStripedFileWithRandomECPolicy |
| | hadoop.hdfs.TestDFSStripedOutputStreamWithFailure |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker

[GitHub] [hadoop] hadoop-yetus commented on pull request #2528: HDFS-15716. WaitforReplication in TestUpgradeDomainBlockPlacementPolicy

hadoop-yetus commented on pull request #2528:

URL: https://github.com/apache/hadoop/pull/2528#issuecomment-740184212

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 30m 48s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | | 0m 1s | [test4tests](test4tests) | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 43s | | trunk passed |
| +1 :green_heart: | compile | 1m 17s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | compile | 1m 11s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | checkstyle | 0m 49s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 19s | | trunk passed |
| +1 :green_heart: | shadedclient | 17m 33s | | branch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 54s | | trunk passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +0 :ok: | spotbugs | 3m 5s | | Used deprecated FindBugs config; considering switching to SpotBugs. |
| +1 :green_heart: | findbugs | 3m 3s | | trunk passed |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 8s | | the patch passed |
| +1 :green_heart: | compile | 1m 10s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javac | 1m 10s | | the patch passed |
| +1 :green_heart: | compile | 1m 5s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | javac | 1m 5s | | the patch passed |
| -0 :warning: | checkstyle | 0m 39s | [/diff-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/1/artifact/out/diff-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 2 new + 6 unchanged - 0 fixed = 8 total (was 6) |
| +1 :green_heart: | mvnsite | 1m 12s | | the patch passed |
| +1 :green_heart: | whitespace | 0m 0s | | The patch has no whitespace issues. |
| +1 :green_heart: | shadedclient | 14m 49s | | patch has no errors when building and testing our client artifacts. |
| +1 :green_heart: | javadoc | 0m 48s | | the patch passed with JDK Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 |
| +1 :green_heart: | javadoc | 1m 21s | | the patch passed with JDK Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| +1 :green_heart: | findbugs | 3m 4s | | the patch passed |
|||| _ Other Tests _ |
| -1 :x: | unit | 119m 51s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 43s | | The patch does not generate ASF License warnings. |
| | | 239m 1s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.namenode.ha.TestEditLogTailer |
| | hadoop.hdfs.TestDFSInotifyEventInputStreamKerberized |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.40 ServerAPI=1.40 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/2528 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient findbugs checkstyle |
| uname | Linux 3abc7ecdf74d 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / da1ea2530fa |
| Default Java | Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.9.1+1-Ubuntu-0ubuntu1.18.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_275-8u275-b01-0ubuntu1~18.04-b01 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2528/1/testReport/ |
| Max. process+thread count | 3784 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console

[jira] [Commented] (HADOOP-17412) When `fs.s3a.connection.ssl.enabled=true`, Error when visit S3A with AKSK

[ https://issues.apache.org/jira/browse/HADOOP-17412?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17245525#comment-17245525 ]

Steve Loughran commented on HADOOP-17412:

-

Are you using WildFly + OpenSSL?

If so:

# which OpenSSL version?

# change your settings to use the JDK only

# if JDK, are you using Oracle, OpenJDK or Amazon Corretto?
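To answer the JDK questions above, a reporter could run a small standalone program such as the following. It is a minimal sketch using only standard Java APIs: it prints the JVM vendor/version system properties and the name of the provider behind the default `SSLContext` (the JDK's own TLS stack, e.g. a JSSE provider; exact names vary by vendor). The class name `JdkTlsInfo` is invented for illustration, and the program does not touch S3A. Forcing S3A onto the JDK-only TLS path is assumed here to be done with the `fs.s3a.ssl.channel.mode` option (e.g. `default_jsse`); check the key and its accepted values against the S3A documentation for your Hadoop release.

```java
import javax.net.ssl.SSLContext;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper: prints the JVM and default TLS provider details asked about above. */
public class JdkTlsInfo {

    /** Builds a short report of the running JVM and its default (JDK) TLS provider. */
    static String report() {
        StringBuilder sb = new StringBuilder();
        // The vendor distinguishes Oracle, OpenJDK builds, Amazon Corretto, etc.
        sb.append("java.vendor=").append(System.getProperty("java.vendor")).append('\n');
        sb.append("java.version=").append(System.getProperty("java.version")).append('\n');
        sb.append("java.vm.name=").append(System.getProperty("java.vm.name")).append('\n');
        try {
            // The provider behind the default SSLContext is the JDK's TLS implementation,
            // i.e. what is used when OpenSSL (via wildfly-openssl) is not in play.
            SSLContext ctx = SSLContext.getDefault();
            sb.append("ssl.provider=").append(ctx.getProvider().getName()).append('\n');
            sb.append("ssl.protocol=").append(ctx.getProtocol());
        } catch (NoSuchAlgorithmException e) {
            sb.append("ssl.provider=unavailable (").append(e.getMessage()).append(')');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(report());
    }
}
```

Pasting this output into the issue answers the vendor question directly, without the reporter having to know which distribution their platform ships.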

> When `fs.s3a.connection.ssl.enabled=true`, Error when visit S3A with AKSK

> ---

>

> Key: HADOOP-17412

> URL: https://issues.apache.org/jira/browse/HADOOP-17412

> Project: Hadoop Common

> Issue Type: Bug

> Components: fs/s3

> Environment: jdk 1.8

> hadoop-3.3.0

> Reporter: angerszhu

> Priority: Major

> Attachments: image-2020-12-07-10-25-51-908.png

>

>

> When we upgraded from hadoop-3.2.1 to hadoop-3.3.0 and used AKSK (access key/secret key) to access S3A

> with SSL enabled, this error happened:

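> {code:java}
> <property>
>   <name>ipc.client.connection.maxidletime</name>
>   <value>2</value>
> </property>
> <property>
>   <name>fs.s3a.secret.key</name>
>   <value></value>
> </property>
> <property>
>   <name>fs.s3a.access.key</name>
>   <value></value>
> </property>
> <property>
>   <name>fs.s3a.aws.credentials.provider</name>
>   <value>org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider</value>
> </property>
> {code}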

> !image-2020-12-07-10-25-51-908.png!

--

This message was sent by Atlassian Jira

(v8.3.4#803005)


[jira] [Work logged] (HADOOP-17414) Magic committer files don't have the count of bytes written collected by spark

[ https://issues.apache.org/jira/browse/HADOOP-17414?focusedWorklogId=521369=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521369 ]

ASF GitHub Bot logged work on HADOOP-17414:
---

Author: ASF GitHub Bot
Created on: 07/Dec/20 20:29
Start Date: 07/Dec/20 20:29
Worklog Time Spent: 10m

Work Description: steveloughran commented on pull request #2530:
URL: https://github.com/apache/hadoop/pull/2530#issuecomment-740161845

+ @sunchao @dongjoon-hyun

This is not for merging, just for a run through Yetus and discussion.

Tested S3A London (consistent!) with/without S3Guard:

```
mvit -Dparallel-tests -DtestsThreadCount=4 -Dmarkers=keep -Dfs.s3a.directory.marker.audit=true
mvit -Dparallel-tests -DtestsThreadCount=4 -Dmarkers=delete -Ds3guard -Ddynamo -Dfs.s3a.directory.marker.audit=true
```

Issue Time Tracking
---
Worklog Id: (was: 521369)
Time Spent: 20m (was: 10m)

> Magic committer files don't have the count of bytes written collected by spark
> --
>
> Key: HADOOP-17414
> URL: https://issues.apache.org/jira/browse/HADOOP-17414
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/s3
> Affects Versions: 3.2.0
> Reporter: Steve Loughran
> Assignee: Steve Loughran
> Priority: Major
> Labels: pull-request-available
> Time Spent: 20m
> Remaining Estimate: 0h
>
> The Spark statistics tracking doesn't correctly assess the size of the
> uploaded files, as it only calls getFileStatus on the zero-byte objects, not
> the yet-to-manifest files.
> Everything works with the staging committer purely because it's measuring the
> length of the files staged to the local FS, not the unmaterialized output.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)
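The problem described in the issue can be illustrated with a toy, in-memory model. This is a hypothetical sketch, not S3A code: `MagicLengthDemo`, `ToyStore` and the header name `x-hypothetical-data-length` are all invented. It assumes the magic committer leaves only a zero-byte marker object at the path the task wrote to (the real data sits in a multipart upload that is not completed until job commit), so a tracker that asks only for the visible object length sees 0 bytes, whereas a staging-style write has its full data and reports the correct length. Recording the real length as an object header, as the PR discussion proposes, lets a header-aware lookup recover it.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Toy model (hypothetical, not S3A) of why magic-committer files report 0 bytes. */
public class MagicLengthDemo {

    /** Hypothetical header carrying the length of the not-yet-materialized data. */
    static final String LENGTH_HEADER = "x-hypothetical-data-length";

    /** A stored object: its visible bytes plus user metadata headers. */
    static final class StoredObject {
        final byte[] data;
        final Map<String, String> headers;

        StoredObject(byte[] data, Map<String, String> headers) {
            this.data = data;
            this.headers = headers;
        }
    }

    /** In-memory stand-in for an object store. */
    static final class ToyStore {
        private final Map<String, StoredObject> objects = new HashMap<>();

        /** Staging-style write: the full data is visible at the path. */
        void putComplete(String path, byte[] data) {
            objects.put(path, new StoredObject(data, new HashMap<>()));
        }

        /** Magic-style write: only a zero-byte marker is visible; the real length is optionally recorded. */
        void putMagicMarker(String path, long pendingLength, boolean recordHeader) {
            Map<String, String> headers = new HashMap<>();
            if (recordHeader) {
                headers.put(LENGTH_HEADER, Long.toString(pendingLength));
            }
            objects.put(path, new StoredObject(new byte[0], headers));
        }

        /** What a getFileStatus-style probe reports: the visible length only. */
        long visibleLength(String path) {
            return objects.get(path).data.length;
        }

        /** Header-aware probe, in the spirit of the proposed getObjectHeaders() idea. */
        Optional<Long> declaredLength(String path) {
            String v = objects.get(path).headers.get(LENGTH_HEADER);
            return v == null ? Optional.empty() : Optional.of(Long.parseLong(v));
        }
    }

    /** A stats tracker that prefers the header when present, else the visible length. */
    static long bytesWritten(ToyStore store, String path) {
        return store.declaredLength(path).orElse(store.visibleLength(path));
    }

    public static void main(String[] args) {
        ToyStore store = new ToyStore();
        byte[] payload = "hello, committer".getBytes(StandardCharsets.UTF_8);
        store.putComplete("staging/part-0000", payload);
        store.putMagicMarker("magic/part-0000", payload.length, false);
        store.putMagicMarker("magic/part-0001", payload.length, true);

        System.out.println("staging visible length: " + store.visibleLength("staging/part-0000"));
        System.out.println("magic visible length:   " + store.visibleLength("magic/part-0000"));
        System.out.println("magic with header:      " + bytesWritten(store, "magic/part-0001"));
    }
}
```

Running it prints 16, 0 and 16 for the three lines: the staging write and the header-aware lookup see the 16-byte payload, while the plain length probe on the magic marker sees nothing.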

[GitHub] [hadoop] steveloughran commented on pull request #2530: HADOOP-17414. Magic committer files don't have the count of bytes written collected by spark

steveloughran commented on pull request #2530:
URL: https://github.com/apache/hadoop/pull/2530#issuecomment-740161845

+ @sunchao @dongjoon-hyun

This is not for merging, just for a run through Yetus and discussion.

Tested S3A London (consistent!) with/without S3Guard:

```
mvit -Dparallel-tests -DtestsThreadCount=4 -Dmarkers=keep -Dfs.s3a.directory.marker.audit=true
mvit -Dparallel-tests -DtestsThreadCount=4 -Dmarkers=delete -Ds3guard -Ddynamo -Dfs.s3a.directory.marker.audit=true
```

[jira] [Updated] (HADOOP-17414) Magic committer files don't have the count of bytes written collected by spark

[ https://issues.apache.org/jira/browse/HADOOP-17414?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated HADOOP-17414:
Labels: pull-request-available (was: )

> Magic committer files don't have the count of bytes written collected by spark
> --
>
> Key: HADOOP-17414
> URL: https://issues.apache.org/jira/browse/HADOOP-17414
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/s3
> Affects Versions: 3.2.0
> Reporter: Steve Loughran
> Assignee: Steve Loughran
> Priority: Major
> Labels: pull-request-available
> Time Spent: 10m
> Remaining Estimate: 0h
>
> The Spark statistics tracking doesn't correctly assess the size of the
> uploaded files, as it only calls getFileStatus on the zero-byte objects, not
> the yet-to-manifest files.
> Everything works with the staging committer purely because it's measuring the
> length of the files staged to the local FS, not the unmaterialized output.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[jira] [Work logged] (HADOOP-17414) Magic committer files don't have the count of bytes written collected by spark

[ https://issues.apache.org/jira/browse/HADOOP-17414?focusedWorklogId=521367=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-521367 ]

ASF GitHub Bot logged work on HADOOP-17414:
---

Author: ASF GitHub Bot
Created on: 07/Dec/20 20:26
Start Date: 07/Dec/20 20:26
Worklog Time Spent: 10m

Work Description: steveloughran opened a new pull request #2530:
URL: https://github.com/apache/hadoop/pull/2530

…tten collected by spark

This is a PoC which, having implemented it, I don't think is viable.

Yes, we can fix up getFileStatus so it reads the header. It even knows to always bypass S3Guard (no inconsistencies to worry about any more).

But: the blast radius of the change is too big. I'm worried about distcp or any other code which goes

    len = getFileStatus(path).getLen()
    open(path).readFully(0, len, dest)

You'll get an EOF here.

Find the file through a listing and you'll be OK, provided S3Guard isn't updated with that getFileStatus result, which I have seen. The ordering of probes in ITestMagicCommitProtocol.validateTaskAttemptPathAfterWrite needs to be list before getFileStatus, so that the S3Guard table is updated from the list.

Overall: danger. Even without S3Guard there's risk.

Anyway, I've shown it can be done. And I think there's merit in a leaner patch which attaches the marker but doesn't do any fixup. This would let us add an API call "getObjectHeaders(path) -> Future<…>" and then use that to do the lookup. We can implement the probe for ABFS and S3, add a hasPathCapability probe for it, and add an interface the FS can implement (which passthrough filesystems would need to do).

Change-Id: If56213c0c5d8ab696d2d89b48ad52874960b0920

## NOTICE

Please create an issue in ASF JIRA before opening a pull request, and you need to set the title of the pull request which starts with the corresponding JIRA issue number. (e.g. HADOOP-X. Fix a typo in YYY.)
For more details, please see https://cwiki.apache.org/confluence/display/HADOOP/How+To+Contribute

Issue Time Tracking
---
Worklog Id: (was: 521367)
Remaining Estimate: 0h
Time Spent: 10m

> Magic committer files don't have the count of bytes written collected by spark
> --
>
> Key: HADOOP-17414
> URL: https://issues.apache.org/jira/browse/HADOOP-17414
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/s3
> Affects Versions: 3.2.0
> Reporter: Steve Loughran
> Assignee: Steve Loughran
> Priority: Major
> Time Spent: 10m
> Remaining Estimate: 0h
>
> The Spark statistics tracking doesn't correctly assess the size of the
> uploaded files, as it only calls getFileStatus on the zero-byte objects, not
> the yet-to-manifest files.
> Everything works with the staging committer purely because it's measuring the
> length of the files staged to the local FS, not the unmaterialized output.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)
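The EOF risk described in the PR can be reproduced in miniature with plain Java I/O. In this hypothetical sketch, `reportedLength` stands in for a `getFileStatus(path).getLen()` that has been "fixed up" to return the header length, while the bytes actually readable from the object are still zero; `DataInputStream.readFully` then throws `EOFException`, which is roughly what a distcp-style caller trusting the reported length would hit. The class and method names are invented; only the JDK calls are real.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;

/** Hypothetical illustration: a length that overstates the readable bytes breaks readFully. */
public class ReportedLengthEof {

    /**
     * Mimics "len = getFileStatus(path).getLen(); open(path).readFully(0, len, dest)":
     * trust the reported length and read exactly that many bytes.
     */
    static byte[] readReportedLength(byte[] actualContents, long reportedLength) throws IOException {
        byte[] dest = new byte[Math.toIntExact(reportedLength)];
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(actualContents))) {
            in.readFully(dest, 0, dest.length); // throws EOFException if fewer bytes exist
        }
        return dest;
    }

    /** Returns true if reading with the reported length fails with EOF. */
    static boolean hitsEof(byte[] actualContents, long reportedLength) {
        try {
            readReportedLength(actualContents, reportedLength);
            return false;
        } catch (EOFException e) {
            return true;
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        byte[] zeroByteMarker = new byte[0]; // all that is visible before job commit
        long fixedUpLength = 1024;           // hypothetical header-derived getLen() value
        System.out.println("fixed-up length hits EOF: " + hitsEof(zeroByteMarker, fixedUpLength));
        System.out.println("true length hits EOF:     " + hitsEof(zeroByteMarker, zeroByteMarker.length));
    }
}
```

It prints `true` then `false`: the read only fails when the reported length disagrees with the readable data, which is why the leaner "attach the marker but don't fix up getFileStatus" approach avoids the problem.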

[GitHub] [hadoop] steveloughran opened a new pull request #2530: HADOOP-17414. Magic committer files don't have the count of bytes wri…