[jira] [Resolved] (DRILL-6277) Query fails with DATA_READ ERROR when correlated subquery has "always false" filter

[

https://issues.apache.org/jira/browse/DRILL-6277?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Volodymyr Vysotskyi resolved DRILL-6277.

Resolution: Fixed

Fixed in the scope of DRILL-6294

> Query fails with DATA_READ ERROR when correlated subquery has "always false"

> filter

> ---

>

> Key: DRILL-6277

> URL: https://issues.apache.org/jira/browse/DRILL-6277

> Project: Apache Drill

> Issue Type: Bug

>Affects Versions: 1.13.0

>Reporter: Volodymyr Vysotskyi

>Assignee: Volodymyr Vysotskyi

>Priority: Major

> Fix For: 1.14.0

>

>

> A query with a correlated subquery that contains an "always false" filter fails:

> {noformat}

> select * from cp.`employee.json` t where exists(select employee_id from

> cp.`employee.json` where t.employee_id = 3 and 1 = 5);

> Error: DATA_READ ERROR: The top level of your document must either be a

> single array of maps or a set of white space delimited maps.

> Line -1

> Column 0

> Field

> Fragment 0:0

> [Error Id: 66b38c7e-7d12-4f38-93e4-f97f08f55e93 on user515050-pc:31013]

> (state=,code=0)

> {noformat}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Resolved] (DRILL-4807) ORDER BY aggregate function in window definition results in AssertionError: Internal error: invariant violated: conversion result not null

[

https://issues.apache.org/jira/browse/DRILL-4807?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Volodymyr Vysotskyi resolved DRILL-4807.

Resolution: Fixed

Fixed in the scope of DRILL-6294

> ORDER BY aggregate function in window definition results in AssertionError:

> Internal error: invariant violated: conversion result not null

> --

>

> Key: DRILL-4807

> URL: https://issues.apache.org/jira/browse/DRILL-4807

> Project: Apache Drill

> Issue Type: Bug

> Components: Execution - Flow

>Affects Versions: 1.8.0, 1.10.0

>Reporter: Khurram Faraaz

>Assignee: Volodymyr Tkach

>Priority: Major

> Labels: window_function

>

> This seems to be a problem with regular window function queries when an

> aggregate function is used in the ORDER BY clause inside the window definition.

> MapR Drill 1.8.0 commit ID : 34ca63ba

> {noformat}

> 0: jdbc:drill:schema=dfs.tmp> SELECT col0, SUM(col0) OVER ( PARTITION BY col7

> ORDER BY MIN(col8)) avg_col0, col7 FROM `allTypsUniq.parquet` GROUP BY

> col0,col8,col7;

> Error: SYSTEM ERROR: AssertionError: Internal error: invariant violated:

> conversion result not null

> [Error Id: 19a3eced--4e83-ae0f-6b8ea21b2afd on centos-01.qa.lab:31010]

> (state=,code=0)

> {noformat}

> {noformat}

> 0: jdbc:drill:schema=dfs.tmp> SELECT col0, AVG(col0) OVER ( PARTITION BY col7

> ORDER BY MIN(col8)) avg_col0, col7 FROM `allTypsUniq.parquet` GROUP BY

> col0,col8,col7;

> Error: SYSTEM ERROR: AssertionError: Internal error: invariant violated:

> conversion result not null

> [Error Id: c9b7ebf2-6097-41d8-bb73-d57da4ace8ad on centos-01.qa.lab:31010]

> (state=,code=0)

> {noformat}

> Stack trace from drillbit.log

> {noformat}

> 2016-07-26 09:26:16,717 [2868d347-3124-0c58-89ff-19e4ee891031:foreman] INFO

> o.a.drill.exec.work.foreman.Foreman - Query text for query id

> 2868d347-3124-0c58-89ff-19e4ee891031: SELECT col0, AVG(col0) OVER ( PARTITION

> BY col7 ORDER BY MIN(col8)) avg_col0, col7 FROM `allTypsUniq.parquet` GROUP

> BY col0,col8,col7

> 2016-07-26 09:26:16,751 [2868d347-3124-0c58-89ff-19e4ee891031:foreman] ERROR

> o.a.drill.exec.work.foreman.Foreman - SYSTEM ERROR: AssertionError: Internal

> error: invariant violated: conversion result not null

> [Error Id: c9b7ebf2-6097-41d8-bb73-d57da4ace8ad on centos-01.qa.lab:31010]

> org.apache.drill.common.exceptions.UserException: SYSTEM ERROR:

> AssertionError: Internal error: invariant violated: conversion result not null

> [Error Id: c9b7ebf2-6097-41d8-bb73-d57da4ace8ad on centos-01.qa.lab:31010]

> at

> org.apache.drill.common.exceptions.UserException$Builder.build(UserException.java:543)

> ~[drill-common-1.8.0-SNAPSHOT.jar:1.8.0-SNAPSHOT]

> at

> org.apache.drill.exec.work.foreman.Foreman$ForemanResult.close(Foreman.java:791)

> [drill-java-exec-1.8.0-SNAPSHOT.jar:1.8.0-SNAPSHOT]

> at

> org.apache.drill.exec.work.foreman.Foreman.moveToState(Foreman.java:901)

> [drill-java-exec-1.8.0-SNAPSHOT.jar:1.8.0-SNAPSHOT]

> at org.apache.drill.exec.work.foreman.Foreman.run(Foreman.java:271)

> [drill-java-exec-1.8.0-SNAPSHOT.jar:1.8.0-SNAPSHOT]

> at

> java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

> [na:1.7.0_101]

> at

> java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

> [na:1.7.0_101]

> at java.lang.Thread.run(Thread.java:745) [na:1.7.0_101]

> Caused by: org.apache.drill.exec.work.foreman.ForemanException: Unexpected

> exception during fragment initialization: Internal error: invariant violated:

> conversion result not null

> ... 4 common frames omitted

> Caused by: java.lang.AssertionError: Internal error: invariant violated:

> conversion result not null

> at org.apache.calcite.util.Util.newInternal(Util.java:777)

> ~[calcite-core-1.4.0-drill-r14.jar:1.4.0-drill-r14]

> at org.apache.calcite.util.Util.permAssert(Util.java:885)

> ~[calcite-core-1.4.0-drill-r14.jar:1.4.0-drill-r14]

> at

> org.apache.calcite.sql2rel.SqlToRelConverter$Blackboard.convertExpression(SqlToRelConverter.java:4063)

> ~[calcite-core-1.4.0-drill-r14.jar:1.4.0-drill-r14]

> at

> org.apache.calcite.sql2rel.SqlToRelConverter$Blackboard.convertSortExpression(SqlToRelConverter.java:4080)

> ~[calcite-core-1.4.0-drill-r14.jar:1.4.0-drill-r14]

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.convertOver(SqlToRelConverter.java:1783)

> ~[calcite-core-1.4.0-drill-r14.jar:1.4.0-drill-r14]

> at

> org.apache.calcite.sql2rel.SqlToRelConverter.access$1100(SqlToRelConverter.java:185)

> ~[calcite-core-1.4.0-drill-r14.jar:1.4.0-drill-r14]

> at

> org.apache.calcite.sql2rel.SqlToRel

[GitHub] drill pull request #1210: DRILL-6270: Add debug startup option flag for dril...

GitHub user agozhiy opened a pull request:

https://github.com/apache/drill/pull/1210

DRILL-6270: Add debug startup option flag for drill in embedded and server mode

Works with the drillbit.sh, sqlline and sqlline.bat:

Usage: --debug:[parameter1=value,parameter2=value]

Optional parameters:

port=[port_number] - debug port number

suspend=[y/n] - pause until the IDE connects

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/agozhiy/drill DRILL-6270

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/drill/pull/1210.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #1210

commit 5206268568a43864f6b0d144b56d3033c17d8031

Author: Anton Gozhiy

Date: 2018-03-27T10:29:14Z

DRILL-6270: Add debug startup option flag for drill in embedded and server mode

Works with the drillbit.sh, sqlline and sqlline.bat:

Usage: --debug:[parameter1=value,parameter2=value]

Optional parameters:

port=[port_number] - debug port number

suspend=[y/n] - pause until the IDE connects

---

[jira] [Created] (DRILL-6331) Parquet filter pushdown does not support the native hive reader

Arina Ielchiieva created DRILL-6331:

---

Summary: Parquet filter pushdown does not support the native hive reader

Key: DRILL-6331

URL: https://issues.apache.org/jira/browse/DRILL-6331

Project: Apache Drill

Issue Type: Improvement

Components: Storage - Hive

Affects Versions: 1.13.0

Reporter: Arina Ielchiieva

Assignee: Arina Ielchiieva

Fix For: 1.14.0

Initially, HiveDrillNativeParquetGroupScan was based mainly on HiveScan; the core difference between them was that HiveDrillNativeParquetScanBatchCreator created a ParquetRecordReader instead of a HiveReader. This made it possible to read Hive Parquet files with Drill's native Parquet reader, but it did not expose Hive data to Drill optimizations such as filter push down, limit push down, and count-to-direct-scan.

The Hive code had to be refactored to use the same interfaces as ParquetGroupScan in order to be exposed to such optimizations.

[GitHub] drill pull request #1210: DRILL-6270: Add debug startup option flag for dril...

Github user arina-ielchiieva commented on a diff in the pull request:

https://github.com/apache/drill/pull/1210#discussion_r181732701

--- Diff: distribution/src/resources/runbit ---

@@ -65,6 +65,47 @@ drill_rotate_log ()

fi

}

+args=( $@ )

+RBARGS=()

--- End diff --

Does `RBARGS` have some meaning? Maybe it is better to give it a clearer name? Also, please add a comment describing that you remove the debug string from the original args but leave all other args.

---

[jira] [Created] (DRILL-6332) DrillbitStartupException: Failure while initializing values in Drillbit

Hari Sekhon created DRILL-6332:

--

Summary: DrillbitStartupException: Failure while initializing

values in Drillbit

Key: DRILL-6332

URL: https://issues.apache.org/jira/browse/DRILL-6332

Project: Apache Drill

Issue Type: Improvement

Components: Server

Affects Versions: 1.10.0

Reporter: Hari Sekhon

Improvement request to make this error more specific so we can tell what is

causing it:

{code:java}

==> /opt/mapr/drill/drill-1.10.0/logs/drillbit.out <==

Exception in thread "main"

org.apache.drill.exec.exception.DrillbitStartupException: Failure while

initializing values in Drillbit.

at org.apache.drill.exec.server.Drillbit.start(Drillbit.java:287)

at org.apache.drill.exec.server.Drillbit.start(Drillbit.java:271)

at org.apache.drill.exec.server.Drillbit.main(Drillbit.java:267)

Caused by: java.lang.IllegalStateException

at com.google.common.base.Preconditions.checkState(Preconditions.java:158)

at

org.apache.drill.common.KerberosUtil.splitPrincipalIntoParts(KerberosUtil.java:59)

at

org.apache.drill.exec.server.BootStrapContext.login(BootStrapContext.java:130)

at

org.apache.drill.exec.server.BootStrapContext.<init>(BootStrapContext.java:77)

at org.apache.drill.exec.server.Drillbit.<init>(Drillbit.java:94)

at org.apache.drill.exec.server.Drillbit.start(Drillbit.java:285)

... 2 more

{code}
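
The trace above shows an `IllegalStateException` with no message, raised through `Preconditions.checkState` inside `KerberosUtil.splitPrincipalIntoParts`. As a rough illustration of the kind of message being requested, here is a minimal sketch. It is not Drill's code: `PrincipalCheckSketch` and `splitPrincipal` are hypothetical names, and the `primary/instance@REALM` layout is an assumption about what the check expects.

```java
// Hypothetical stand-in for illustration only; not Drill's KerberosUtil.
final class PrincipalCheckSketch {

  // Assumes a principal of the form primary/instance@REALM and fails with a
  // message that names the offending value instead of a bare exception.
  static String[] splitPrincipal(String principal) {
    if (principal == null) {
      throw new IllegalStateException("Kerberos principal is not set");
    }
    String[] parts = principal.split("[/@]");
    if (parts.length != 3) {
      throw new IllegalStateException(String.format(
          "Invalid Kerberos principal '%s': expected primary/instance@REALM but found %d component(s)",
          principal, parts.length));
    }
    return parts;
  }

  public static void main(String[] args) {
    System.out.println(String.join(" | ", splitPrincipal("drill/host1.example.com@EXAMPLE.COM")));
    try {
      splitPrincipal("drill@EXAMPLE.COM");
    } catch (IllegalStateException e) {
      // The message now says which principal was rejected and why.
      System.out.println(e.getMessage());
    }
  }
}
```

With a message like this in the `Caused by:` line, the startup log would point at the configured principal rather than only at the line number of the check.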


Re: [DISCUSS] Regarding mutator interface

Hi Aman,

TL;DR: Maps in Drill are nested tuples. The easiest solution may be for the operator to generate code to process each map member one-by-one.

The original question and code appear to be based on an assumption: that a map is a value (like an INT or VARCHAR), but a complex one (a value with inner structure). We discussed that, for scalar types, we'd use a Freemarker template to generate, at compile time, an implementation for each combination of (scalar type, data mode). That is, we'd have an INT implementation and a NULLABLE INT implementation, and so on. What do we do for Map?

Perhaps this is due, in part, to the type name. The term "Map" conjures up an image of a Java Map or a Python hash: a collection of name/value pairs in which the members of one instance are independent of those in another instance (or row, in Drill's case).

Ideally, the operator can obtain the Map value and pass it to the UDAF by value. The UDAF can then do its calculation, returning the final value for the group, which the operator copies into the output vector. For a Drill Map, perhaps the value can be in the form of a Java Map. Indeed, if we look carefully, we see that the getObject() method for the Map mutator can return the value as a Java map.

But, soon, we start seeing issues. There is no function in the Mutator to set the value of a map column from a Java map. UDAFs use "Holders" to pass values in and out, and there are no Map Holders that hold Java Maps. There is clearly a cost to the conversions. UDAFs maintain internal state (which Drill maps to vectors), but there is no good internal state for a Java map.

So, we look for an alternative: maybe we pass in a Source for Map values and a Sink to write the output values. Now, we have to decide what to do inside the implementation. Here we must recognize that a Drill Map is actually what Impala and Hive call a "struct": it is a fixed set of columns that are the same for all records.
So, we have to iterate over the members of the map and do something with each column. What do we do? Well, we could use the UDAFs we generated for each type. Recall that Maps can nest to any level, so our interpreted code that loops over map members must also handle nested maps. How? By recursively iterating over the map members.

Next, we need a place to store intermediate values. For simple types, Drill allocates a (hidden) vector to hold these values based on the Holder type of a @Workspace field. (The temporary vector is needed by the Hash Agg though perhaps not for streaming.) How do we create these intermediate values for our Map UDAF?

Right about now we get the sense that perhaps we are heading down the wrong path. But, where did we go wrong?

Perhaps we should shift our thinking. Maps are not just another value that happens to be complex. Instead, Maps are special: they are nested tuples. Perhaps it should be the responsibility of the operator to "expand" the Map tuple the same way that it does for the top-level tuple (row). In this model, the Streaming Agg would say, "hey, I have a Map. So, what I'll do is recursively expand the columns and process each nested column same as for the top column."

What is the result? We can use the generated scalar UDAFs described above. We use the same technique to generate the internal hidden vectors for intermediate values. We do not need a Map implementation that iterates over nested columns; instead that iteration is done at code gen time. This does require new code in code gen. But, we were going to have to write new code somewhere anyway.

But, what if the UDAF really wants to do something with a map? (Perhaps pull out a single column, perhaps compute a distance given a map with x and y values.) Code gen can start by looking for a UDAF that takes a map reader as input. If found, use it. Else, recursively expand the map columns, using those columns' types to find a UDAF.
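
To make the expansion idea concrete, here is a minimal sketch under a toy schema model. `MapExpansionSketch`, `Column`, `Type` and `expand` are hypothetical names invented for this illustration and are not Drill classes. The sketch treats the row and every nested Map as a tuple and emits one dotted path per scalar leaf, which is the point where code gen could bind the scalar UDAF for that leaf's type. The step of first looking for a UDAF that takes a map reader is not modeled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Toy model only: these types are illustrative stand-ins, not Drill classes.
final class MapExpansionSketch {

  enum Type { INT, VARCHAR, MAP }

  static final class Column {
    final String name;
    final Type type;
    final List<Column> children; // non-empty only for MAP columns

    Column(String name, Type type, Column... children) {
      this.name = name;
      this.type = type;
      this.children = Collections.unmodifiableList(Arrays.asList(children));
    }
  }

  // Treats the row and every nested Map as a tuple: scalar members become
  // "path:TYPE" leaves, Map members are expanded recursively.
  static List<String> expand(String prefix, List<Column> tuple) {
    List<String> leaves = new ArrayList<>();
    for (Column column : tuple) {
      String path = prefix.isEmpty() ? column.name : prefix + "." + column.name;
      if (column.type == Type.MAP) {
        leaves.addAll(expand(path, column.children));
      } else {
        leaves.add(path + ":" + column.type);
      }
    }
    return leaves;
  }

  public static void main(String[] args) {
    List<Column> row = Arrays.asList(
        new Column("a", Type.INT),
        new Column("m", Type.MAP,
            new Column("x", Type.INT),
            new Column("n", Type.MAP, new Column("s", Type.VARCHAR))));
    // Prints [a:INT, m.x:INT, m.n.s:VARCHAR]
    System.out.println(expand("", row));
  }
}
```

The same `expand` call handles the top-level row and the nested maps, which mirrors the point that one recursive routine can serve both.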
Note that this same thinking helps with REPEATED types. Rather than generating a REPEATED INT, say, UDAF, the generated code can instead apply the REQUIRED INT UDAF to each int array element. (Assuming that the aggregate is one where this makes sense.)

In short, Maps (and arrays, lists, repeated lists and unions) are not just more complex forms of simple values. They are a different beast and require a different approach to computation, aggregation and UDAFs. The above is one approach; perhaps there are better solutions once we recognize that complex types require a different approach than do simple types.

As it turned out, treating Map columns as nested tuples worked surprisingly well in the Row Set mechanisms. What would otherwise have been overly complex turned instead into a simple recursive algorithm in which the row and a Map are both tuples and the same code applies to both. Perhaps that same trick can help here.

Thanks,

- Paul

On Sunday, April 15, 2018, 10:32:31 AM PDT, Aman Sinha wro

[GitHub] drill pull request #1210: DRILL-6270: Add debug startup option flag for dril...

Github user paul-rogers commented on a diff in the pull request:

https://github.com/apache/drill/pull/1210#discussion_r181799954

--- Diff: distribution/src/resources/runbit ---

@@ -65,6 +65,47 @@ drill_rotate_log ()

fi

}

+args=( $@ )

+RBARGS=()

+for (( i=0; i < ${#args[@]}; i++ )); do

+ case "${args[i]}" in

+ --debug*)

+ DEBUG=true

+ DEBUG_STRING=`expr "${args[i]}" : '.*:\(.*\)'`

+ ;;

+ *) RBARGS+=("${args[i]}");;

+ esac

+done

+

+# Enables remote debug if requested

+# Usage: --debug:[parameter1=value,parameter2=value]

+# Optional parameters:

+# port=[port_number] - debug port number

+# suspend=[y/n] - pause until the IDE connects

+

+if [ $DEBUG ]; then

+ debug_params=( $(echo $DEBUG_STRING | grep -o '[^,"]*') )

+ for param in ${debug_params[@]}; do

+case $param in

+port*)

+ DEBUG_PORT=`expr "$param" : '.*=\(.*\)'`

+ ;;

+suspend*)

+ DEBUG_SUSPEND=`expr "$param" : '.*=\(.*\)'`

+ ;;

+esac

+ done

+

+ if [ -z $DEBUG_PORT ]; then

+DEBUG_PORT=5

+ fi

+ if [ -z $DEBUG_SUSPEND ]; then

+DEBUG_SUSPEND='n'

+ fi

+

+ JAVA_DEBUG="-Xdebug -Xnoagent

-Xrunjdwp:transport=dt_socket,address=$DEBUG_PORT,server=y,suspend=$DEBUG_SUSPEND"

+fi

--- End diff --

This is overly complex and puts Drill in the business of tracking the

arguments that the JVM wants to enable debugging. Those flags changed in the

past and may change again.

Would recommend instead:

```

--jvm -anyArgumentYouWant

```

Multiple `--jvm` arguments can appear. Simply append this to the

`DRILL_JAVA_OPTS` variable. Very simple.

---

[GitHub] drill pull request #1203: DRILL-6289: Cluster view should show more relevant...

Github user sohami commented on a diff in the pull request:

https://github.com/apache/drill/pull/1203#discussion_r181830465

--- Diff:

common/src/main/java/org/apache/drill/exec/metrics/CpuGaugeSet.java ---

@@ -0,0 +1,62 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.metrics;

+

+import java.lang.management.OperatingSystemMXBean;

+import java.util.HashMap;

+import java.util.Map;

+

+import com.codahale.metrics.Gauge;

+import com.codahale.metrics.Metric;

+import com.codahale.metrics.MetricSet;

+

+/**

+ * Creates a Cpu GaugeSet

+ */

+class CpuGaugeSet implements MetricSet {

--- End diff --

I am not sure how useful `getProcessCpuLoad` alone will be, but together

with the total system load it can give an idea of how Drill and other services

on the node are using the CPU. If you plan to include another metric, then the

`CpuGaugeSet` class is fine. Thanks for the clarification.
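
A minimal sketch of reading both values side by side, assuming a HotSpot-style JVM where the platform bean can be cast to `com.sun.management.OperatingSystemMXBean`. `CpuLoadProbe` and the gauge names are hypothetical, and a plain `DoubleSupplier` stands in for the Codahale `Gauge` used in the pull request so that the example has no external dependencies.

```java
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.DoubleSupplier;

// Illustrative only: DoubleSupplier stands in for a metrics-library gauge.
final class CpuLoadProbe {

  // Returns named suppliers for process and system CPU load. Each value is a
  // fraction of total CPU capacity, or negative when the JVM cannot report it.
  // The map is empty when the extended MXBean is not available.
  static Map<String, DoubleSupplier> cpuGauges() {
    Map<String, DoubleSupplier> gauges = new LinkedHashMap<>();
    java.lang.management.OperatingSystemMXBean base =
        ManagementFactory.getOperatingSystemMXBean();
    if (base instanceof com.sun.management.OperatingSystemMXBean) {
      com.sun.management.OperatingSystemMXBean os =
          (com.sun.management.OperatingSystemMXBean) base;
      gauges.put("cpu.process", os::getProcessCpuLoad);
      // Deprecated in newer JDKs in favour of getCpuLoad(), but still present.
      gauges.put("cpu.system", os::getSystemCpuLoad);
    }
    return gauges;
  }

  public static void main(String[] args) {
    cpuGauges().forEach((name, gauge) ->
        System.out.println(name + " = " + gauge.getAsDouble()));
  }
}
```

Comparing the two numbers gives a rough view of how much of the node's CPU use is Drill's own and how much comes from other services.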

---

[GitHub] drill pull request #1203: DRILL-6289: Cluster view should show more relevant...

Github user kkhatua commented on a diff in the pull request:

https://github.com/apache/drill/pull/1203#discussion_r181834064

--- Diff: exec/java-exec/src/main/resources/rest/index.ftl ---

@@ -283,20 +308,114 @@

alert(errorThrown);

},

success: function(data) {

- alert(data["response"]);

+ alert(data["response"]);

button.prop('disabled',true).css('opacity',0.5);

}

});

}

}

-

+

+ function remoteShutdown(button,host) {

+ var url = location.protocol + "//" + host +

"/gracefulShutdown";

+ var result = $.ajax({

+type: 'POST',

+url: url,

+contentType : 'text/plain',

+complete: function(data) {

+alert(data.responseJSON["response"]);

+button.prop('disabled',true).css('opacity',0.5);

+}

+ });

+ }

+

+

+ function popOutRemoteDbitUI(dbitHost, dbitPort) {

--- End diff --

We can't put this and the remaining functions within the IF block since the

localhost operations are still valid, i.e. an authenticated client on a

specific Drillbit can ping that Drillbit for a refresh of the metrics. Only the

shutdown function needs to be managed with the IF block.

---

[GitHub] drill issue #1207: DRILL-6320: Fixed License Headers

Github user ilooner commented on the issue:

https://github.com/apache/drill/pull/1207

@arina-ielchiieva Please let me know if you have any comments or if things look good.

---

[GitHub] drill issue #1207: DRILL-6320: Fixed License Headers

Github user arina-ielchiieva commented on the issue:

https://github.com/apache/drill/pull/1207

+1, thanks for making the changes!

---

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181851002

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerDecorator.java

---

@@ -262,68 +280,124 @@ public FlushBatchesHandlingClass(boolean

isLastBatch, boolean schemaChanged) {

}

@Override

-public void execute(Partitioner part) throws IOException {

+public void execute(Partitioner part) throws IOException,

InterruptedException {

part.flushOutgoingBatches(isLastBatch, schemaChanged);

}

}

/**

- * Helper class to wrap Runnable with customized naming

- * Exception handling

+ * Helper class to wrap Runnable with cancellation and waiting for

completion support

*

*/

- private static class CustomRunnable implements Runnable {

+ private static class PartitionerTask implements Runnable {

+

+private enum STATE {

+ NEW,

+ COMPLETING,

+ NORMAL,

+ EXCEPTIONAL,

+ CANCELLED,

+ INTERRUPTING,

+ INTERRUPTED

+}

+

+private final AtomicReference<STATE> state;

+private final AtomicReference<Thread> runner;

+private final PartitionerDecorator partitionerDecorator;

+private final AtomicInteger count;

-private final String parentThreadName;

-private final CountDownLatch latch;

private final GeneralExecuteIface iface;

-private final Partitioner part;

+private final Partitioner partitioner;

private CountDownLatchInjection testCountDownLatch;

-private volatile IOException exp;

+private volatile ExecutionException exception;

-public CustomRunnable(final String parentThreadName, final

CountDownLatch latch, final GeneralExecuteIface iface,

-final Partitioner part, CountDownLatchInjection

testCountDownLatch) {

- this.parentThreadName = parentThreadName;

- this.latch = latch;

+public PartitionerTask(PartitionerDecorator partitionerDecorator,

GeneralExecuteIface iface, Partitioner partitioner, AtomicInteger count,

CountDownLatchInjection testCountDownLatch) {

+ state = new AtomicReference<>(STATE.NEW);

+ runner = new AtomicReference<>();

+ this.partitionerDecorator = partitionerDecorator;

this.iface = iface;

- this.part = part;

+ this.partitioner = partitioner;

+ this.count = count;

this.testCountDownLatch = testCountDownLatch;

}

@Override

public void run() {

- // Test only - Pause until interrupted by fragment thread

+ final Thread thread = Thread.currentThread();

+ Preconditions.checkState(runner.compareAndSet(null, thread),

+ "PartitionerTask can be executed only once.");

+ if (state.get() == STATE.NEW) {

+final String name = thread.getName();

+thread.setName(String.format("Partitioner-%s-%d",

partitionerDecorator.thread.getName(), thread.getId()));

+final OperatorStats localStats = partitioner.getStats();

+localStats.clear();

+localStats.startProcessing();

+ExecutionException executionException = null;

+try {

+ // Test only - Pause until interrupted by fragment thread

+ testCountDownLatch.await();

+ iface.execute(partitioner);

+} catch (InterruptedException e) {

+ if (state.compareAndSet(STATE.NEW, STATE.INTERRUPTED)) {

+logger.warn("Partitioner Task interrupted during the run", e);

+ }

+} catch (Throwable t) {

+ executionException = new ExecutionException(t);

+}

+if (state.compareAndSet(STATE.NEW, STATE.COMPLETING)) {

+ if (executionException == null) {

+localStats.stopProcessing();

+state.lazySet(STATE.NORMAL);

+ } else {

+exception = executionException;

+state.lazySet(STATE.EXCEPTIONAL);

+ }

+}

+if (count.decrementAndGet() == 0) {

+ LockSupport.unpark(partitionerDecorator.thread);

+}

+thread.setName(name);

+ }

+ runner.set(null);

+ while (state.get() == STATE.INTERRUPTING) {

+Thread.yield();

+ }

+ // Clear interrupt flag

try {

-testCountDownLatch.await();

- } catch (final InterruptedException e) {

-logger.debug("Test only: partitioner thread is interrupted in test

countdown latch await()", e);

+Thread.sleep(0);

--- End diff --

Could we use Thread.interrupted() instead? Javadoc suggests it's a good

alternative to use for cleari

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181858695

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerDecorator.java

---

@@ -118,105 +127,114 @@ public PartitionOutgoingBatch

getOutgoingBatches(int index) {

return null;

}

- @VisibleForTesting

- protected List<Partitioner> getPartitioners() {

+ List<Partitioner> getPartitioners() {

return partitioners;

}

/**

* Helper to execute the different methods wrapped into same logic

* @param iface

- * @throws IOException

+ * @throws ExecutionException

*/

- protected void executeMethodLogic(final GeneralExecuteIface iface)

throws IOException {

-if (partitioners.size() == 1 ) {

- // no need for threads

- final OperatorStats localStatsSingle =

partitioners.get(0).getStats();

- localStatsSingle.clear();

- localStatsSingle.startProcessing();

+ @VisibleForTesting

+ void executeMethodLogic(final GeneralExecuteIface iface) throws

ExecutionException {

+// To simulate interruption of main fragment thread and interrupting

the partition threads, create a

+// CountDownInject latch. Partitioner threads await on the latch and

main fragment thread counts down or

+// interrupts waiting threads. This makes sure that we are actually

interrupting the blocked partitioner threads.

+try (CountDownLatchInjection testCountDownLatch =

injector.getLatch(context.getExecutionControls(), "partitioner-sender-latch")) {

--- End diff --

I'm not sure that we should be using the injector to create a count down

latch here. My understanding is that we have to define a

`partitioner-sender-latch` injection site on the

`"drill.exec.testing.controls"` property and it is intended only for testing.

See ControlsInjectionUtil.createLatch(). The default value for

`drill.exec.testing.controls` is empty so the getLatch method would return a

Noop latch since `partitioner-sender-latch` is undefined. Since we always want

to create a count down latch here (not just for testing) shouldn't we directly

create one?

---

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user vrozov commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181865979

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerDecorator.java

---

@@ -118,105 +127,114 @@ public PartitionOutgoingBatch

getOutgoingBatches(int index) {

return null;

}

- @VisibleForTesting

- protected List<Partitioner> getPartitioners() {

+ List<Partitioner> getPartitioners() {

return partitioners;

}

/**

* Helper to execute the different methods wrapped into same logic

* @param iface

- * @throws IOException

+ * @throws ExecutionException

*/

- protected void executeMethodLogic(final GeneralExecuteIface iface)

throws IOException {

-if (partitioners.size() == 1 ) {

- // no need for threads

- final OperatorStats localStatsSingle =

partitioners.get(0).getStats();

- localStatsSingle.clear();

- localStatsSingle.startProcessing();

+ @VisibleForTesting

+ void executeMethodLogic(final GeneralExecuteIface iface) throws

ExecutionException {

+// To simulate interruption of main fragment thread and interrupting

the partition threads, create a

+// CountDownInject latch. Partitioner threads await on the latch and

main fragment thread counts down or

+// interrupts waiting threads. This makes sure that we are actually

interrupting the blocked partitioner threads.

+try (CountDownLatchInjection testCountDownLatch =

injector.getLatch(context.getExecutionControls(), "partitioner-sender-latch")) {

--- End diff --

The `testCountDownLatch` is used only for testing and initialized to 1. The

wait is on `count`.
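
For readers following the thread, here is a minimal sketch of waiting on a shared counter instead of a latch. In the quoted diff each task calls `count.decrementAndGet()` and the last one to finish calls `LockSupport.unpark(partitionerDecorator.thread)`. The waiting side is not shown in the quoted hunk, so the park loop below is an assumption, and `CountAndParkSketch` and `runAndWait` are hypothetical names.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.LockSupport;

// Illustrative only: a simplified version of the count-and-unpark handshake.
final class CountAndParkSketch {

  // Submits taskCount trivial tasks and blocks the caller until all are done.
  static int runAndWait(int taskCount) {
    final Thread waiter = Thread.currentThread();
    final AtomicInteger count = new AtomicInteger(taskCount);
    final AtomicInteger completed = new AtomicInteger();
    final ExecutorService pool = Executors.newFixedThreadPool(4);
    try {
      for (int i = 0; i < taskCount; i++) {
        pool.execute(() -> {
          completed.incrementAndGet(); // stand-in for the real work
          if (count.decrementAndGet() == 0) {
            LockSupport.unpark(waiter); // the last task wakes the waiter
          }
        });
      }
      // park() may return spuriously, so the counter is always re-checked.
      while (count.get() > 0) {
        LockSupport.park(count);
        if (Thread.currentThread().isInterrupted()) {
          // A real implementation would cancel the outstanding tasks here.
          throw new IllegalStateException("interrupted while waiting for tasks");
        }
      }
    } finally {
      pool.shutdown();
    }
    return completed.get();
  }

  public static void main(String[] args) {
    System.out.println(runAndWait(8)); // prints 8
  }
}
```

Because `unpark` hands out a permit even when it runs before `park`, the waiter cannot miss the wake-up from the last task.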

---

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181871311

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerDecorator.java

---

@@ -118,105 +127,114 @@ public PartitionOutgoingBatch

getOutgoingBatches(int index) {

return null;

}

- @VisibleForTesting

- protected List<Partitioner> getPartitioners() {

+ List<Partitioner> getPartitioners() {

return partitioners;

}

/**

* Helper to execute the different methods wrapped into same logic

* @param iface

- * @throws IOException

+ * @throws ExecutionException

*/

- protected void executeMethodLogic(final GeneralExecuteIface iface)

throws IOException {

-if (partitioners.size() == 1 ) {

- // no need for threads

- final OperatorStats localStatsSingle =

partitioners.get(0).getStats();

- localStatsSingle.clear();

- localStatsSingle.startProcessing();

+ @VisibleForTesting

+ void executeMethodLogic(final GeneralExecuteIface iface) throws

ExecutionException {

+// To simulate interruption of main fragment thread and interrupting

the partition threads, create a

+// CountDownInject latch. Partitioner threads await on the latch and

main fragment thread counts down or

+// interrupts waiting threads. This makes sure that we are actually

interrupting the blocked partitioner threads.

+try (CountDownLatchInjection testCountDownLatch =

injector.getLatch(context.getExecutionControls(), "partitioner-sender-latch")) {

--- End diff --

I see thx.

---

[GitHub] drill issue #1203: DRILL-6289: Cluster view should show more relevant inform...

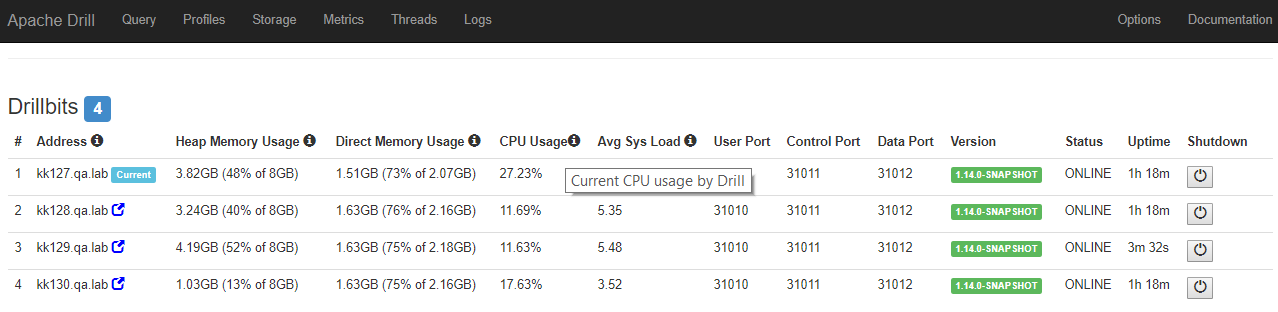

Github user kkhatua commented on the issue: https://github.com/apache/drill/pull/1203 Updated with an additional set of changes. * Added CPU metrics (obtained from [OperatingSystemMXBean.getProcessCpuLoad()](https://docs.oracle.com/javase/7/docs/jre/api/management/extension/com/sun/management/OperatingSystemMXBean.html#getProcessCpuLoad()) ) * Added uptime information so that you know if a Drillbit ever has a different start time. * Grey-out a button (disabled, i.e.) if the node is offline or remote & requires HTTPS * Additional changes based on comments Screenshot  ---

[jira] [Created] (DRILL-6333) Generate and host Javadocs on Apache Drill website

Kunal Khatua created DRILL-6333:

---

Summary: Generate and host Javadocs on Apache Drill website

Key: DRILL-6333

URL: https://issues.apache.org/jira/browse/DRILL-6333

Project: Apache Drill

Issue Type: Improvement

Reporter: Kunal Khatua

Currently, there is no hosted location of Javadocs for Apache Drill. At a minimum, there should be a cleanly generated Javadoc published for each release, along with any Javadoc additions/enhancements.

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181894189

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerTemplate.java

---

@@ -161,8 +161,11 @@ public OperatorStats getStats() {

* @param schemaChanged true if the schema has changed

*/

@Override

- public void flushOutgoingBatches(boolean isLastBatch, boolean

schemaChanged) throws IOException {

+ public void flushOutgoingBatches(boolean isLastBatch, boolean

schemaChanged) throws IOException, InterruptedException {

for (OutgoingRecordBatch batch : outgoingBatches) {

+ if (Thread.interrupted()) {

+throw new InterruptedException();

--- End diff --

Since we are already checking for interrupts here, could we remove

`Thread.currentThread().isInterrupted()` in the flush(boolean schemaChanged)

method?
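One detail worth keeping in mind for this question: `Thread.interrupted()` clears the interrupt flag, while `Thread.currentThread().isInterrupted()` only reads it. The sketch below (the class name `InterruptFlagSketch` is hypothetical) shows the difference on the current thread. Whether the later check in `flush(boolean schemaChanged)` is redundant depends on code not shown in this diff.

```java
// Hypothetical demo class; it only exercises standard java.lang.Thread calls.
final class InterruptFlagSketch {

  /**
   * Sets the interrupt flag on the current thread and records what each
   * check observes: {isInterrupted(), first interrupted(), second interrupted()}.
   */
  static boolean[] observe() {
    Thread.currentThread().interrupt();                     // set the flag
    boolean peek = Thread.currentThread().isInterrupted();  // reads, flag stays set
    boolean first = Thread.interrupted();                   // reads and clears the flag
    boolean second = Thread.interrupted();                  // flag is already cleared
    return new boolean[] {peek, first, second};
  }

  public static void main(String[] args) {
    System.out.println(java.util.Arrays.toString(observe()));
  }
}
```

So once the loop has consumed the flag via `Thread.interrupted()`, a later `isInterrupted()` call on the same thread sees `false` unless a new interrupt arrives in between.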

---

[GitHub] drill pull request #1211: DRILL-6322: Lateral Join: Common changes - Add new...

GitHub user sohami opened a pull request:

https://github.com/apache/drill/pull/1211

DRILL-6322: Lateral Join: Common changes - Add new iterOutcome, Operator types, MockRecordBatch for testing

@parthchandra - Please review.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/sohami/drill DRILL-6322

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/drill/pull/1211.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #1211

commit 2dbc6768857a0b8cd3e6e8c19fc133af2abf20d3

Author: Sorabh Hamirwasia

Date: 2018-02-05T21:12:15Z

DRILL-6322: Lateral Join: Common changes - Add new iterOutcome, Operatortypes, MockRecordBatch for testing

Note: Added new Iterator State EMIT, added operators LATERAL_JOIN & UNNEST in CoreOperatorType and added LateralContract interface

commit 15655ab3543521ff56f4d426ebf7ed4884eb3006

Author: Sorabh Hamirwasia

Date: 2018-02-07T21:29:28Z

DRILL-6322: Lateral Join: Common changes - Add new iterOutcome, Operatortypes, MockRecordBatch for testing

Note: Implementation of MockRecordBatch to test operator behavior for different IterOutcomes.

a) Creates a new output container for schema change cases.

b) Doesn't create a new container for each next() call without a schema change, since the operator under test expects the ValueVector object in its incoming batch to be the same unless an OK_NEW_SCHEMA case is hit; the setup() method of the operator under test stores the reference to the value vector received in the first batch.

---
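The container-reuse rule described in the second commit note can be illustrated with a small standalone sketch. This is not Drill's MockRecordBatch: the class `MockBatchSketch`, its nested `Container`, and the string-based "schema" are hypothetical stand-ins, chosen only to show that the same container object is handed out until the schema changes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in; it does not use or mirror Drill's real classes.
final class MockBatchSketch {

  enum Outcome { OK_NEW_SCHEMA, OK, NONE }

  /** Simplified output container: a schema label plus some values. */
  static final class Container {
    final String schema;
    final List<Integer> values = new ArrayList<>();

    Container(String schema) {
      this.schema = schema;
    }
  }

  private final List<String> schemas;      // schema of each scripted batch
  private final List<List<Integer>> data;  // payload of each scripted batch
  private int position = 0;
  private Container container;

  MockBatchSketch(List<String> schemas, List<List<Integer>> data) {
    this.schemas = new ArrayList<>(schemas);
    this.data = new ArrayList<>(data);
  }

  Outcome next() {
    if (position >= schemas.size()) {
      return Outcome.NONE;
    }
    String schema = schemas.get(position);
    boolean schemaChanged = container == null || !container.schema.equals(schema);
    if (schemaChanged) {
      container = new Container(schema);  // new object only on schema change
    } else {
      container.values.clear();           // same object, refreshed contents
    }
    container.values.addAll(data.get(position));
    position++;
    return schemaChanged ? Outcome.OK_NEW_SCHEMA : Outcome.OK;
  }

  Container container() {
    return container;
  }
}
```

A downstream consumer that cached the container reference after the first batch keeps seeing fresh data on every `OK` outcome, and only has to re-read the reference when `OK_NEW_SCHEMA` is returned.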

[GitHub] drill pull request #1212: DRILL-6323: Lateral Join - Initial implementation

GitHub user sohami opened a pull request:

https://github.com/apache/drill/pull/1212

DRILL-6323: Lateral Join - Initial implementation

@parthchandra - Please review.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/sohami/drill DRILL-6323

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/drill/pull/1212.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #1212

commit 38b82ce8ebca3b91ba8847229a568daf88f654a3

Author: Sorabh Hamirwasia

Date: 2018-03-06T05:09:16Z

DRILL-6323: Lateral Join - Initial implementation

Refactor Join PopConfigs

commit 0931ac974f7be41cd5ca4516524d04c4e5c6245d

Author: Sorabh Hamirwasia

Date: 2018-02-05T22:46:19Z

DRILL-6323: Lateral Join - Initial implementation

commit 08b7d38e6ffe4fed492a2ea67c3907f0f514717e

Author: Sorabh Hamirwasia

Date: 2018-02-20T22:47:48Z

DRILL-6323: Lateral Join - Initial implementation

Note: Refactor, fix various issues with LATERAL:

a) Override prefetch call in BuildSchema phase for LATERAL,

b) EMIT handling in buildSchema,

c) Issue when in multilevel Lateral case schema change is observed only on right side of UNNEST,

d) Handle SelectionVector in incoming batch,

e) Handling Schema change,

f) Updating joinIndexes correctly when producing multiple output batches for current left & right inputs.

Added tests for:

a) EMIT handling in buildSchema,

b) Multiple UNNEST at same level,

c) Multilevel Lateral,

d) Multilevel Lateral with Schema change on left/right or both branches,

e) Left LATERAL join,

f) Schema change for UNNEST and Non-UNNEST columns,

g) Error outcomes from left & right,

h) Producing multiple output batches for given incoming,

i) Consuming multiple incoming into single output batch

commit f686877198857a23227031329b2f48c163f85021

Author: Sorabh Hamirwasia

Date: 2018-03-09T23:56:22Z

DRILL-6323: Lateral Join - Initial implementation

Note: Remove codegen and operator template class. Logic to copy data is moved to LateralJoinBatch itself

commit cb47407a8f47e7fc10ac04c438f7e903de92a80c

Author: Sorabh Hamirwasia

Date: 2018-03-13T23:41:03Z

DRILL-6323: Lateral Join - Initial implementation

Note: Add some debug logs for LateralJoinBatch

commit 2ea968e3302ad8bb6d62a8032bd6b6329b900d3a

Author: Sorabh Hamirwasia

Date: 2018-03-14T23:59:29Z

DRILL-6323: Lateral Join - Initial implementation

Note: Refactor BatchMemorySize to put outputBatchSize in abstract class. Created a new JoinBatchMemoryManager to be shared across join record batches. Changed merge join to use AbstractBinaryRecordBatch instead of AbstractRecordBatch, and use JoinBatchMemoryManager

commit fb521c65f91b69543ae9b5dbb9a727c79f852573

Author: Sorabh Hamirwasia

Date: 2018-03-19T19:00:22Z

DRILL-6323: Lateral Join - Initial implementation

Note: Lateral Join Batch Memory manager support using the record batch sizer

---

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181915654

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerDecorator.java

---

@@ -262,68 +280,122 @@ public FlushBatchesHandlingClass(boolean isLastBatch, boolean schemaChanged) {

}

@Override

-public void execute(Partitioner part) throws IOException {

+public void execute(Partitioner part) throws IOException, InterruptedException {

part.flushOutgoingBatches(isLastBatch, schemaChanged);

}

}

/**

- * Helper class to wrap Runnable with customized naming

- * Exception handling

+ * Helper class to wrap Runnable with cancellation and waiting for completion support

*

*/

- private static class CustomRunnable implements Runnable {

+ private static class PartitionerTask implements Runnable {

+

+private enum STATE {

+ NEW,

+ COMPLETING,

+ NORMAL,

+ EXCEPTIONAL,

+ CANCELLED,

+ INTERRUPTING,

+ INTERRUPTED

+}

+

+private final AtomicReference<STATE> state;

+private final AtomicReference<Thread> runner;

+private final PartitionerDecorator partitionerDecorator;

+private final AtomicInteger count;

-private final String parentThreadName;

-private final CountDownLatch latch;

private final GeneralExecuteIface iface;

-private final Partitioner part;

+private final Partitioner partitioner;

private CountDownLatchInjection testCountDownLatch;

-private volatile IOException exp;

+private volatile ExecutionException exception;

-public CustomRunnable(final String parentThreadName, final CountDownLatch latch, final GeneralExecuteIface iface,

-final Partitioner part, CountDownLatchInjection testCountDownLatch) {

- this.parentThreadName = parentThreadName;

- this.latch = latch;

+public PartitionerTask(PartitionerDecorator partitionerDecorator, GeneralExecuteIface iface, Partitioner partitioner, AtomicInteger count, CountDownLatchInjection testCountDownLatch) {

+ state = new AtomicReference<>(STATE.NEW);

+ runner = new AtomicReference<>();

+ this.partitionerDecorator = partitionerDecorator;

this.iface = iface;

- this.part = part;

+ this.partitioner = partitioner;

+ this.count = count;

this.testCountDownLatch = testCountDownLatch;

}

@Override

public void run() {

- // Test only - Pause until interrupted by fragment thread

- try {

-testCountDownLatch.await();

- } catch (final InterruptedException e) {

-logger.debug("Test only: partitioner thread is interrupted in test countdown latch await()", e);

- }

-

- final Thread currThread = Thread.currentThread();

- final String currThreadName = currThread.getName();

- final OperatorStats localStats = part.getStats();

- try {

-final String newThreadName = parentThreadName + currThread.getId();

-currThread.setName(newThreadName);

+ final Thread thread = Thread.currentThread();

+ if (runner.compareAndSet(null, thread)) {

+final String name = thread.getName();

+thread.setName(String.format("Partitioner-%s-%d", partitionerDecorator.thread.getName(), thread.getId()));

+final OperatorStats localStats = partitioner.getStats();

localStats.clear();

localStats.startProcessing();

-iface.execute(part);

- } catch (IOException e) {

-exp = e;

- } finally {

-localStats.stopProcessing();

-currThread.setName(currThreadName);

-latch.countDown();

+ExecutionException executionException = null;

+try {

+ // Test only - Pause until interrupted by fragment thread

+ testCountDownLatch.await();

+ if (state.get() == STATE.NEW) {

+iface.execute(partitioner);

+ }

+} catch (InterruptedException e) {

+ if (state.compareAndSet(STATE.NEW, STATE.INTERRUPTED)) {

+logger.warn("Partitioner Task interrupted during the run", e);

+ }

+} catch (Throwable t) {

+ executionException = new ExecutionException(t);

+} finally {

+ if (state.compareAndSet(STATE.NEW, STATE.COMPLETING)) {

+if (executionException == null) {

+ localStats.stopProcessing();

+ state.lazySet(STATE.NORMAL);

+} else {

+ exception = executionException;

+ state.laz
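The quoted diff is cut off at this point in the archive. The state names it introduces (NEW, COMPLETING, NORMAL, EXCEPTIONAL, CANCELLED, INTERRUPTING, INTERRUPTED) match those used internally by `java.util.concurrent.FutureTask`. As a rough illustration of the compare-and-set pattern visible in the part that survives, here is a reduced sketch; the class `StatefulTaskSketch` is hypothetical, omits the interrupt states and thread bookkeeping, and should not be read as the code in the pull request.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical, reduced illustration of a CAS-guarded task state machine.
final class StatefulTaskSketch implements Runnable {

  enum State { NEW, COMPLETING, NORMAL, EXCEPTIONAL, CANCELLED }

  private final AtomicReference<State> state = new AtomicReference<>(State.NEW);
  private final Runnable work;
  private volatile Throwable failure;

  StatefulTaskSketch(Runnable work) {
    this.work = work;
  }

  @Override
  public void run() {
    Throwable caught = null;
    try {
      if (state.get() == State.NEW) {  // skip the work if already cancelled
        work.run();
      }
    } catch (Throwable t) {
      caught = t;
    } finally {
      // Only the thread that wins NEW -> COMPLETING publishes the outcome,
      // so a concurrent cancel() and a finishing run() cannot both succeed.
      if (state.compareAndSet(State.NEW, State.COMPLETING)) {
        if (caught == null) {
          state.set(State.NORMAL);
        } else {
          failure = caught;
          state.set(State.EXCEPTIONAL);
        }
      }
    }
  }

  /** Returns true only if the task had not yet completed or been cancelled. */
  boolean cancel() {
    return state.compareAndSet(State.NEW, State.CANCELLED);
  }

  State state() {
    return state.get();
  }

  Throwable failure() {
    return failure;
  }
}
```

The sketch uses `set` where the diff uses `lazySet`; `lazySet` relaxes the ordering guarantees of the final write, which is a tuning choice this illustration does not try to reproduce.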

[GitHub] drill issue #1211: DRILL-6322: Lateral Join: Common changes - Add new iterOu...

Github user parthchandra commented on the issue:

https://github.com/apache/drill/pull/1211

+1

---

Bi-weekly Hangout on April 17th, 10:00am PST

We will have our routine hangout tomorrow. Please raise any topic you want to discuss before the meeting or at the beginning of the meeting.

https://hangouts.google.com/hangouts/_/event/ci4rdiju8bv04a64efj5fedd0lc

Best,

Chunhui

[GitHub] drill pull request #1211: DRILL-6322: Lateral Join: Common changes - Add new...

Github user asfgit closed the pull request at:

https://github.com/apache/drill/pull/1211

---

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user vrozov commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181926928

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerTemplate.java

---

@@ -161,8 +161,11 @@ public OperatorStats getStats() {

* @param schemaChanged true if the schema has changed

*/

@Override

- public void flushOutgoingBatches(boolean isLastBatch, boolean schemaChanged) throws IOException {

+ public void flushOutgoingBatches(boolean isLastBatch, boolean schemaChanged) throws IOException, InterruptedException {

for (OutgoingRecordBatch batch : outgoingBatches) {

+ if (Thread.interrupted()) {

+throw new InterruptedException();

--- End diff --

I'll revert throwing `InterruptedException` to avoid mishandling

the last batch.

---

[GitHub] drill pull request #1208: DRILL-6295: PartitionerDecorator may close partiti...

Github user vrozov commented on a diff in the pull request:

https://github.com/apache/drill/pull/1208#discussion_r181927070

--- Diff:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/partitionsender/PartitionerDecorator.java

---

@@ -262,68 +280,122 @@ public FlushBatchesHandlingClass(boolean isLastBatch, boolean schemaChanged) {

}

@Override

-public void execute(Partitioner part) throws IOException {

+public void execute(Partitioner part) throws IOException, InterruptedException {

part.flushOutgoingBatches(isLastBatch, schemaChanged);

}

}

/**

- * Helper class to wrap Runnable with customized naming

- * Exception handling

+ * Helper class to wrap Runnable with cancellation and waiting for completion support

*

*/

- private static class CustomRunnable implements Runnable {

+ private static class PartitionerTask implements Runnable {

+

+private enum STATE {

+ NEW,

+ COMPLETING,

+ NORMAL,

+ EXCEPTIONAL,

+ CANCELLED,

+ INTERRUPTING,

+ INTERRUPTED

+}

+

+private final AtomicReference<STATE> state;

+private final AtomicReference<Thread> runner;

+private final PartitionerDecorator partitionerDecorator;

+private final AtomicInteger count;

-private final String parentThreadName;

-private final CountDownLatch latch;

private final GeneralExecuteIface iface;

-private final Partitioner part;

+private final Partitioner partitioner;

private CountDownLatchInjection testCountDownLatch;

-private volatile IOException exp;

+private volatile ExecutionException exception;

-public CustomRunnable(final String parentThreadName, final CountDownLatch latch, final GeneralExecuteIface iface,

-final Partitioner part, CountDownLatchInjection testCountDownLatch) {

- this.parentThreadName = parentThreadName;

- this.latch = latch;

+public PartitionerTask(PartitionerDecorator partitionerDecorator, GeneralExecuteIface iface, Partitioner partitioner, AtomicInteger count, CountDownLatchInjection testCountDownLatch) {

+ state = new AtomicReference<>(STATE.NEW);

+ runner = new AtomicReference<>();

+ this.partitionerDecorator = partitionerDecorator;

this.iface = iface;

- this.part = part;

+ this.partitioner = partitioner;

+ this.count = count;

this.testCountDownLatch = testCountDownLatch;

}

@Override

public void run() {

- // Test only - Pause until interrupted by fragment thread

- try {

-testCountDownLatch.await();

- } catch (final InterruptedException e) {

-logger.debug("Test only: partitioner thread is interrupted in test countdown latch await()", e);

- }

-

- final Thread currThread = Thread.currentThread();

- final String currThreadName = currThread.getName();

- final OperatorStats localStats = part.getStats();

- try {

-final String newThreadName = parentThreadName + currThread.getId();

-currThread.setName(newThreadName);

+ final Thread thread = Thread.currentThread();

+ if (runner.compareAndSet(null, thread)) {

+final String name = thread.getName();

+thread.setName(String.format("Partitioner-%s-%d", partitionerDecorator.thread.getName(), thread.getId()));

+final OperatorStats localStats = partitioner.getStats();

localStats.clear();

localStats.startProcessing();

-iface.execute(part);

- } catch (IOException e) {

-exp = e;

- } finally {

-localStats.stopProcessing();

-currThread.setName(currThreadName);

-latch.countDown();

+ExecutionException executionException = null;

+try {

+ // Test only - Pause until interrupted by fragment thread

+ testCountDownLatch.await();

+ if (state.get() == STATE.NEW) {

+iface.execute(partitioner);

+ }

+} catch (InterruptedException e) {

+ if (state.compareAndSet(STATE.NEW, STATE.INTERRUPTED)) {

+logger.warn("Partitioner Task interrupted during the run", e);

+ }

+} catch (Throwable t) {

+ executionException = new ExecutionException(t);

+} finally {

+ if (state.compareAndSet(STATE.NEW, STATE.COMPLETING)) {

+if (executionException == null) {

+ localStats.stopProcessing();

+ state.lazySet(STATE.NORMAL);

+} else {

+ exception = executionException;

+ state.lazy

[jira] [Created] (DRILL-6334) Code cleanup

Paul Rogers created DRILL-6334:

--

Summary: Code cleanup

Key: DRILL-6334

URL: https://issues.apache.org/jira/browse/DRILL-6334

Project: Apache Drill

Issue Type: Improvement

Reporter: Paul Rogers

Assignee: Paul Rogers

Fix For: 1.14.0

Minor cleanup of a few files. Done as a separate PR to allow easier review.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] drill pull request #1213: Minor code cleanup

GitHub user paul-rogers opened a pull request:

https://github.com/apache/drill/pull/1213

Minor code cleanup

Pulled the remaining code cleanup items out of the Result Set work into this simple PR.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/paul-rogers/drill DRILL-6334

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/drill/pull/1213.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #1213

commit 3439dfe8e20a8883c32a524ca0823c4bdfc10b53

Author: Paul Rogers

Date: 2018-04-17T01:24:07Z

Minor code cleanup

---

[GitHub] drill pull request #1213: Minor code cleanup

Github user vrozov commented on a diff in the pull request:

https://github.com/apache/drill/pull/1213#discussion_r181932078

--- Diff:

exec/vector/src/main/java/org/apache/drill/exec/exception/OversizedAllocationException.java

---

@@ -1,4 +1,4 @@

-/**

+/*

--- End diff --

Should already be handled by Tim's PR #1207.

---

[jira] [Created] (DRILL-6335) Refactor row set abstractions to prepare for unions

Paul Rogers created DRILL-6335:

--

Summary: Refactor row set abstractions to prepare for unions

Key: DRILL-6335

URL: https://issues.apache.org/jira/browse/DRILL-6335

Project: Apache Drill

Issue Type: Improvement

Reporter: Paul Rogers

Assignee: Paul Rogers

Fix For: 1.14.0

The row set abstractions will eventually support unions and lists. The changes to support these types are extensive. This PR introduces refactoring that puts the pieces in the correct location to allow for inserting those additional types.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] drill issue #1207: DRILL-6320: Fixed License Headers

Github user priteshm commented on the issue:

https://github.com/apache/drill/pull/1207

@arina-ielchiieva or @Ben-Zvi, would it make sense to merge this in earlier, before the batch commits later in the week? The number of changed files is large, so we don't want @ilooner to have to rebase each time.

---

[GitHub] drill issue #1206: DRILL_6314: Add complex types to result set loader

Github user paul-rogers commented on the issue:

https://github.com/apache/drill/pull/1206

Going to close this one and try to split this into smaller chunks.

---